DSmT Decision-Making Algorithms for Finding Grasping Configurations of Robot Dexterous Hands

Abstract

1. Introduction

2. Object Grasping and Its Classification

- Power grasping: contact with the object is made over large surfaces of the hand, including the phalanges and the palm. This kind of grasp allows high forces to be exerted on the object.

  - Spherical grasping: used to grasp spherical objects.

  - Cylindrical grasping: used to grasp long objects that cannot be completely surrounded by the hand.

  - Lateral grasping: the thumb exerts a force towards the lateral side of the index finger.

- Precision grasping: contact is made only with the fingertips.

  - Prismatic grasping (pinch): used to grasp long objects with a small diameter, or very small objects; it can be achieved with two to five fingers.

  - Circular grasping (tripod): used to grasp circular or round objects; it can be achieved with three, four, or five fingers.

- No grasping:

  - Hook: the hand forms a hook on the object, and the hand force is exerted against an external force, usually gravity.

  - Button pressing or pointing.

  - Pushing with an open hand.

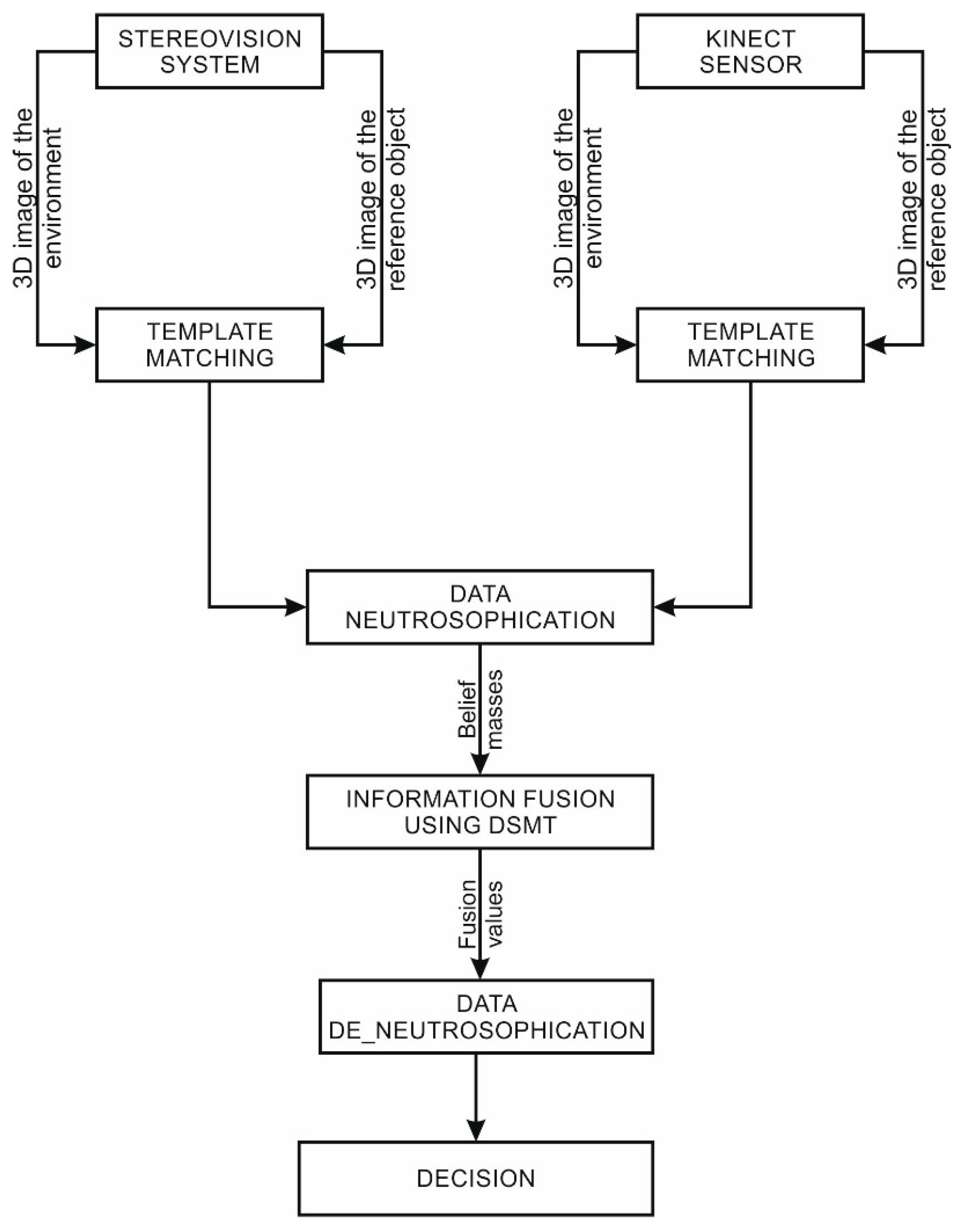

3. Object Detection Using Stereo-Vision and Kinect Sensor

4. Neutrosophic Logic and DSm Theory

4.1. Neutrosophic Logic

4.1.1. Neutrosophic Logic Definition

4.1.2. Neutrosophic Components Definition

4.2. Dezert–Smarandache Theory (DSmT)

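- the probability theory works (assuming exclusivity and completeness) with basic probability assignments (bpa) $m: \Theta \to [0, 1]$ such that $\sum_{A \in \Theta} m(A) = 1$;

- the Dempster–Shafer theory works (assuming exclusivity and completeness) with basic belief assignments (bba) $m: 2^{\Theta} \to [0, 1]$ such that $m(\emptyset) = 0$ and $\sum_{A \in 2^{\Theta}} m(A) = 1$;

- the DSm theory works (assuming completeness but dropping the exclusivity assumption) with basic belief assignments (bba) $m: D^{\Theta} \to [0, 1]$ such that $m(\emptyset) = 0$ and $\sum_{A \in D^{\Theta}} m(A) = 1$.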

4.2.1. The Hyperpower Set Notion

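1. $\emptyset, \theta_1, \ldots, \theta_n \in D^{\Theta}$.

2. If $A, B \in D^{\Theta}$, then $A \cap B \in D^{\Theta}$ and $A \cup B \in D^{\Theta}$.

3. No other element is included in $D^{\Theta}$ except those obtained by rules 1 and 2.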
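The closure defined by these rules can be computed mechanically. The sketch below is an illustration, not the authors' implementation: it assumes the free DSm model (no intersection is constrained to be empty) and encodes each element of $D^{\Theta}$ as the set of Venn-diagram regions it covers, so that $\cap$ and $\cup$ become Python set operations. The function name `hyper_power_set` is hypothetical.

```python
from itertools import combinations


def hyper_power_set(n):
    """Return D^Theta for Theta = {theta_1, ..., theta_n} under the free DSm model."""
    # Each non-empty subset of {0, ..., n-1} labels one Venn region,
    # e.g. {0, 2} = "inside theta_1 and theta_3 only" (illustrative encoding)
    regions = [frozenset(c) for k in range(1, n + 1) for c in combinations(range(n), k)]
    # theta_i covers every region whose label contains i
    thetas = [frozenset(r for r in regions if i in r) for i in range(n)]
    elements = {frozenset()} | set(thetas)  # rule 1: the empty set and every theta_i
    while True:
        # rule 2: close the family under intersection and union
        new = {c for a in elements for b in elements for c in (a & b, a | b)} - elements
        if not new:
            return elements  # rule 3: nothing else belongs to D^Theta
        elements |= new


print(len(hyper_power_set(3)))  # 19
```

For the three shapes considered here (n = 3) the closure yields 19 elements, including $\emptyset$; for n = 4 it already yields 167.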

4.2.2. Generalized Belief Functions

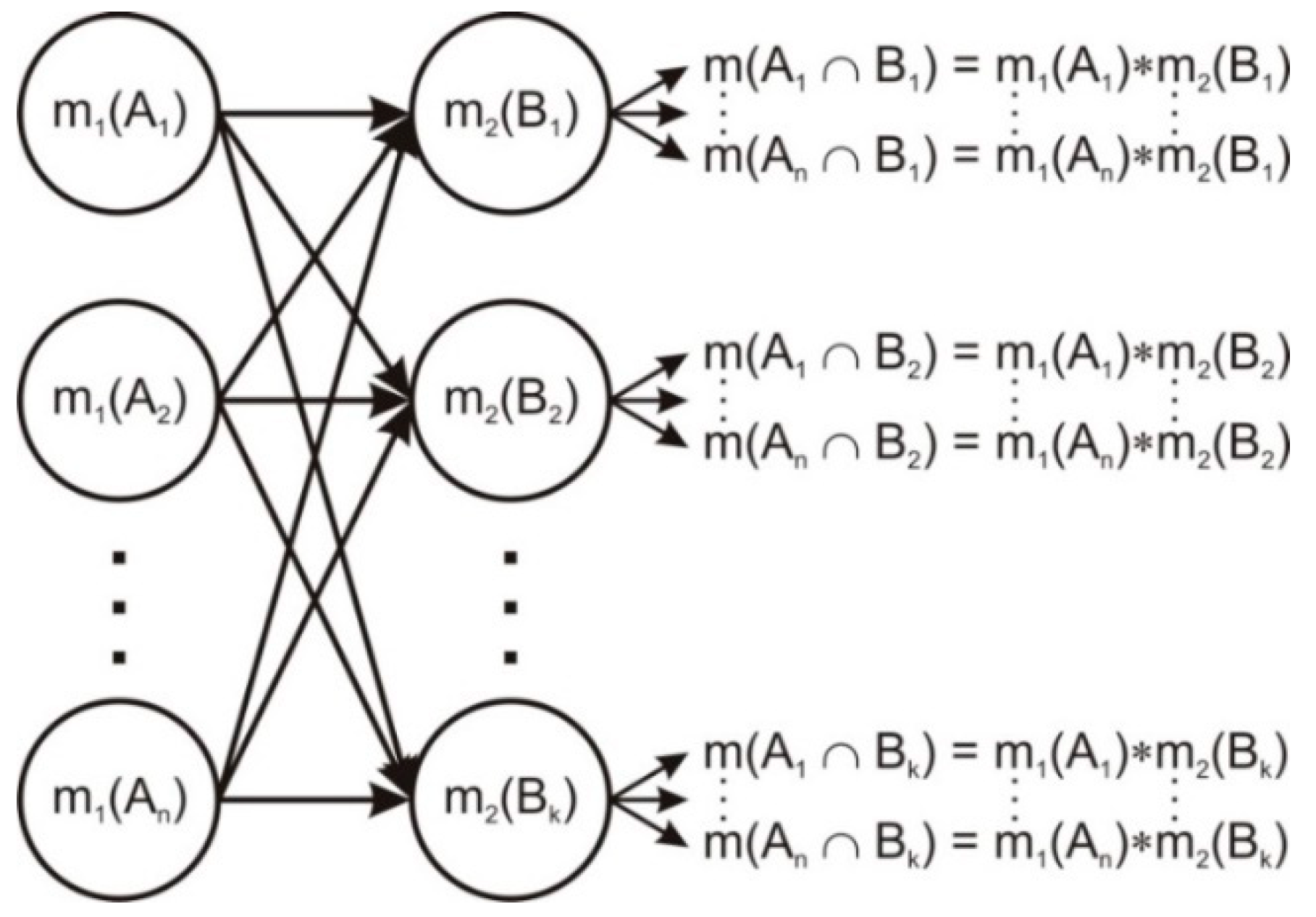
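As a minimal sketch, assuming the standard DSmT definitions $\mathrm{Bel}(A) = \sum_{B \subseteq A,\, B \in D^{\Theta}} m(B)$ and $\mathrm{Pl}(A) = \sum_{B \cap A \neq \emptyset,\, B \in D^{\Theta}} m(B)$, the generalized belief and plausibility functions can be computed as below. Each element of $D^{\Theta}$ is encoded as the set of Venn-diagram regions it covers under the free model (an illustrative choice); the names `bel` and `pl` and the mass values are hypothetical.

```python
from itertools import combinations

N = 3  # hypothetical index order: 0 = sphere, 1 = parallelepiped, 2 = cylinder
# Free DSm model: every non-empty Venn region of the N hypotheses may be non-empty
REGIONS = [frozenset(c) for k in range(1, N + 1) for c in combinations(range(N), k)]


def theta(i):
    """Element theta_i of D^Theta, encoded as the set of Venn regions it covers."""
    return frozenset(r for r in REGIONS if i in r)


def bel(m, a):
    """Generalized belief: total mass of the non-empty elements B with B ⊆ A."""
    return sum(v for b, v in m.items() if b and b <= a)


def pl(m, a):
    """Generalized plausibility: total mass of the elements B with B ∩ A ≠ ∅."""
    return sum(v for b, v in m.items() if b & a)


sph, par, cyl = theta(0), theta(1), theta(2)
# Hypothetical generalized basic belief assignment (sums to 1, m(∅) = 0)
m = {sph: 0.2, sph & cyl: 0.1, par | cyl: 0.3, sph | par | cyl: 0.4}
print(round(bel(m, sph), 4))        # 0.3
print(round(bel(m, par | cyl), 4))  # 0.4
print(round(pl(m, par), 4))         # 1.0
```

Under the free model every non-empty element of $D^{\Theta}$ contains the region $\theta_1 \cap \theta_2 \cap \theta_3$, so any two non-empty elements intersect and $\mathrm{Pl}(A) = 1$ whenever $m(\emptyset) = 0$; in that setting the belief values carry the discriminating information.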

4.2.3. DSm Classic Rule of Combination
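The classic DSm rule of combination for two sources under the free model is $m(C) = \sum_{A, B \in D^{\Theta},\, A \cap B = C} m_1(A)\, m_2(B)$ for every $C \in D^{\Theta}$; because no intersection is empty in the free model, no conflicting mass has to be redistributed. The sketch below implements this formula, encoding each element of $D^{\Theta}$ as the set of Venn-diagram regions it covers (an illustrative choice). As a usage example it fuses the two observations at 3.14 s from the generalized belief assignment table, assuming (the recovered table does not state this) that its seven rows follow the order of the hypotheses table: sphere, parallelepiped, cylinder, sphere or parallelepiped, sphere or cylinder, cylinder or parallelepiped, and all three. The name `dsm_classic` is hypothetical.

```python
from collections import defaultdict
from itertools import combinations, product

N = 3  # assumed index order: 0 = sphere, 1 = parallelepiped, 2 = cylinder
# Free DSm model: each non-empty Venn region of the N hypotheses is a possible atom
REGIONS = [frozenset(c) for k in range(1, N + 1) for c in combinations(range(N), k)]


def theta(i):
    """Element theta_i of D^Theta, encoded as the set of Venn regions it covers."""
    return frozenset(r for r in REGIONS if i in r)


def dsm_classic(m1, m2):
    """Classic DSm rule: m(C) = sum of m1(A) * m2(B) over all pairs with A ∩ B = C."""
    fused = defaultdict(float)
    for (a, ma), (b, mb) in product(m1.items(), m2.items()):
        fused[a & b] += ma * mb  # non-empty A and B always intersect under the free model
    return dict(fused)


sph, par, cyl = theta(0), theta(1), theta(2)
# 3.14 s columns of the generalized belief assignment table (row order assumed)
obs1 = {sph: 0.0001, par: 0.0001, cyl: 0.0,
        sph | par: 0.0106, sph | cyl: 0.0106, cyl | par: 0.0106, sph | par | cyl: 0.9680}
obs2 = {sph: 0.0001, par: 0.0, cyl: 0.0008,
        sph | par: 0.0234, sph | cyl: 0.0231, cyl | par: 0.0231, sph | par | cyl: 0.9296}
fused = dsm_classic(obs1, obs2)
print(round(fused[sph | par | cyl], 4))  # 0.8999
print(round(fused[sph | par], 4))        # 0.0328
```

Under the stated row-order assumption, the two printed values agree with the 0.8999 and 0.0328 fusion entries reported for 3.14 s.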

5. Decision-Making Algorithm

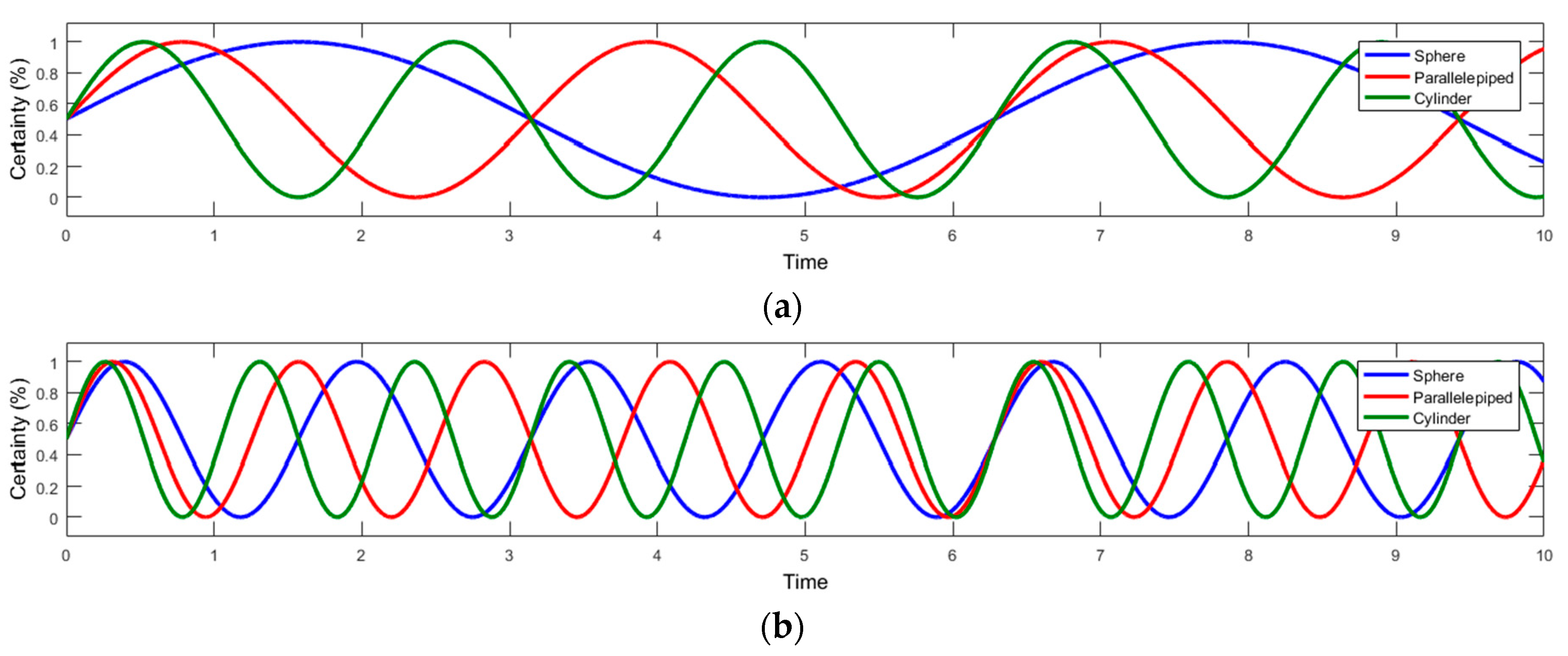

5.1. Data Neutrosophication

5.2. Information Fusion

5.3. Data Deneutrosophication and Decision-Making

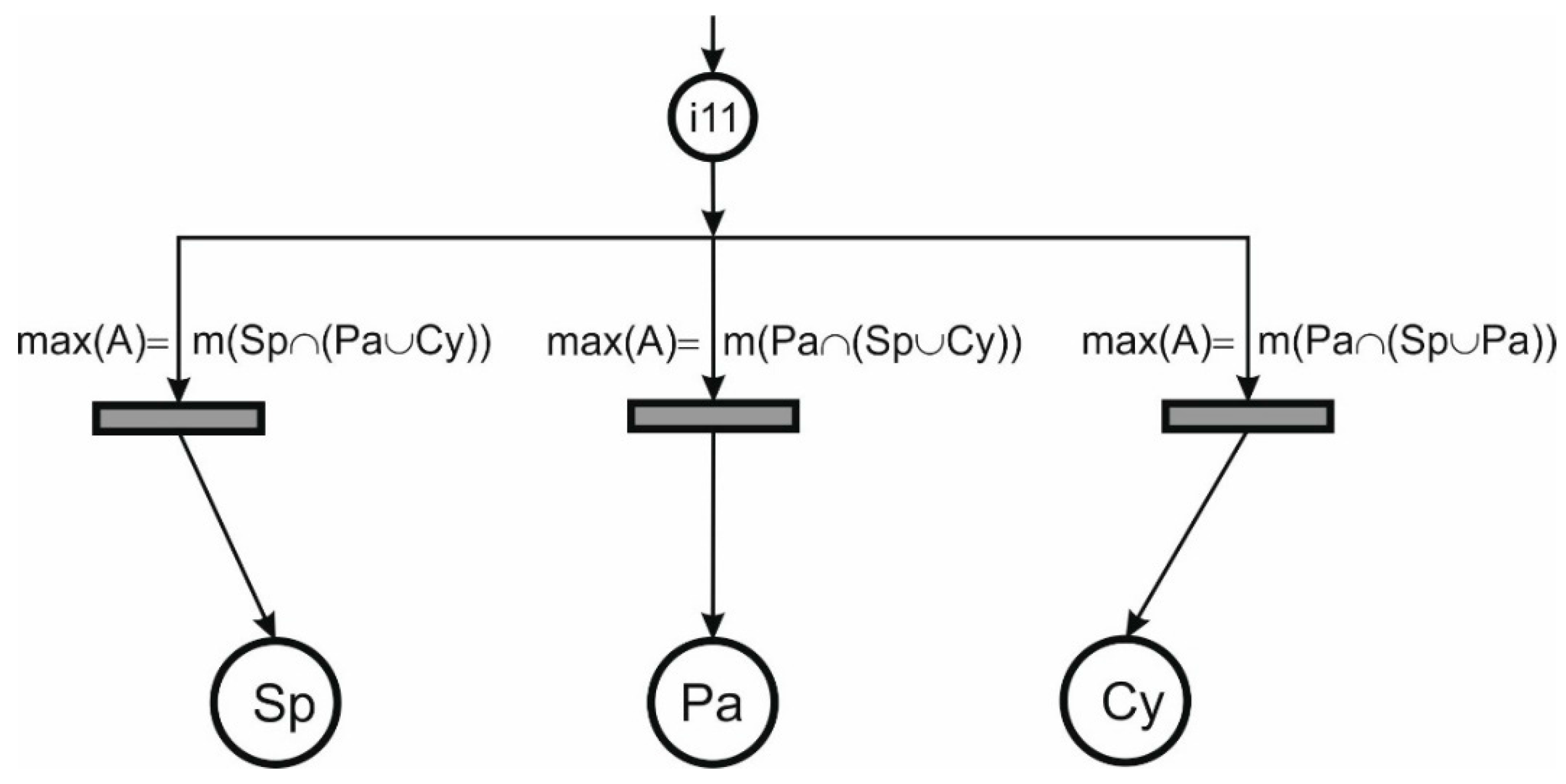

- sub_p1 (Figure 5): this sub-diagram deals with the contradiction between:

  - the certainty that the target object is a ‘sphere’ and the uncertainty that it is either a ‘parallelepiped’ or a ‘cylinder’;

  - the certainty that the target object is a ‘parallelepiped’ and the uncertainty that it is either a ‘sphere’ or a ‘cylinder’;

  - the certainty that the target object is a ‘cylinder’ and the uncertainty that it is either a ‘parallelepiped’ or a ‘sphere’.

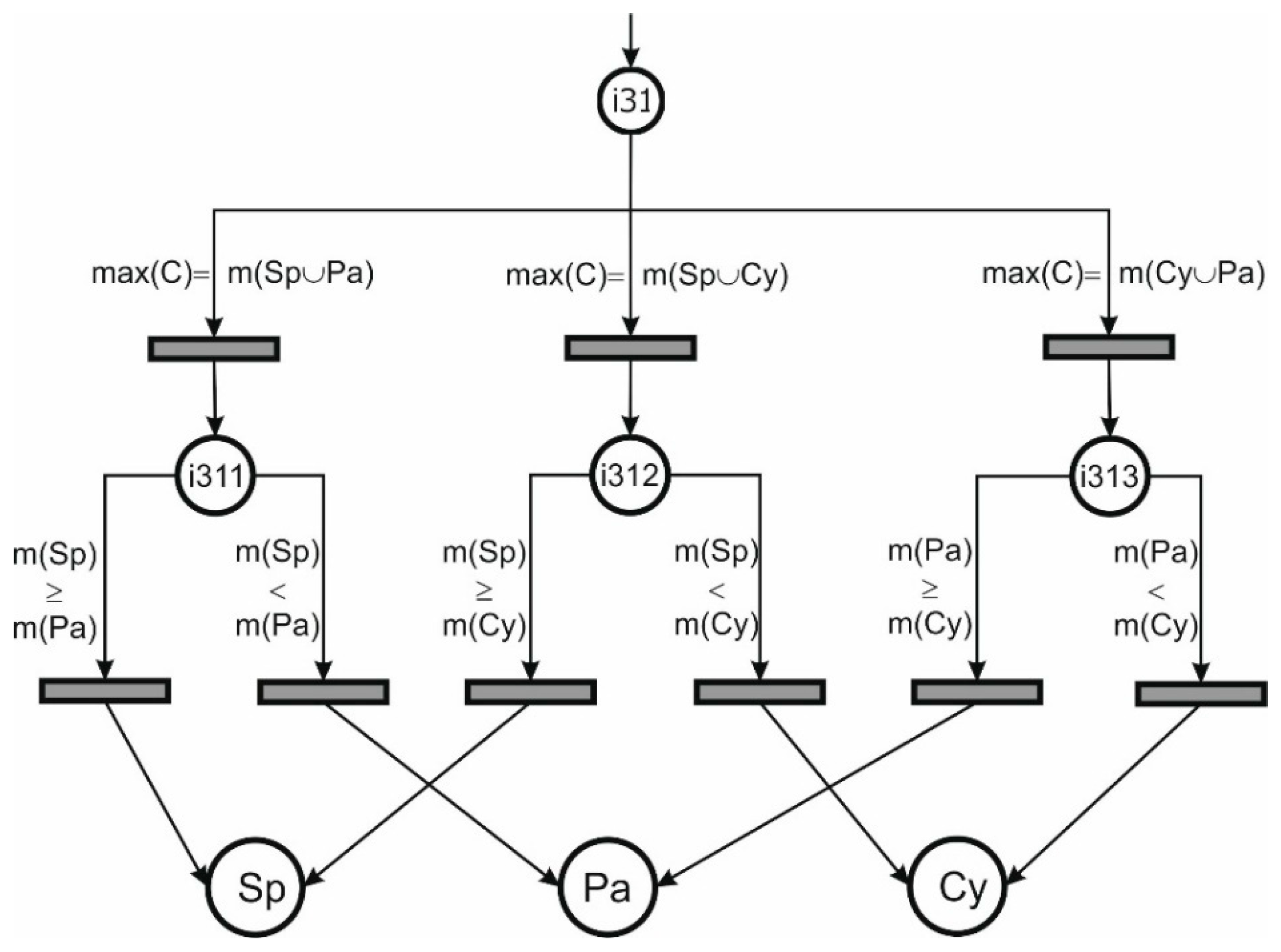

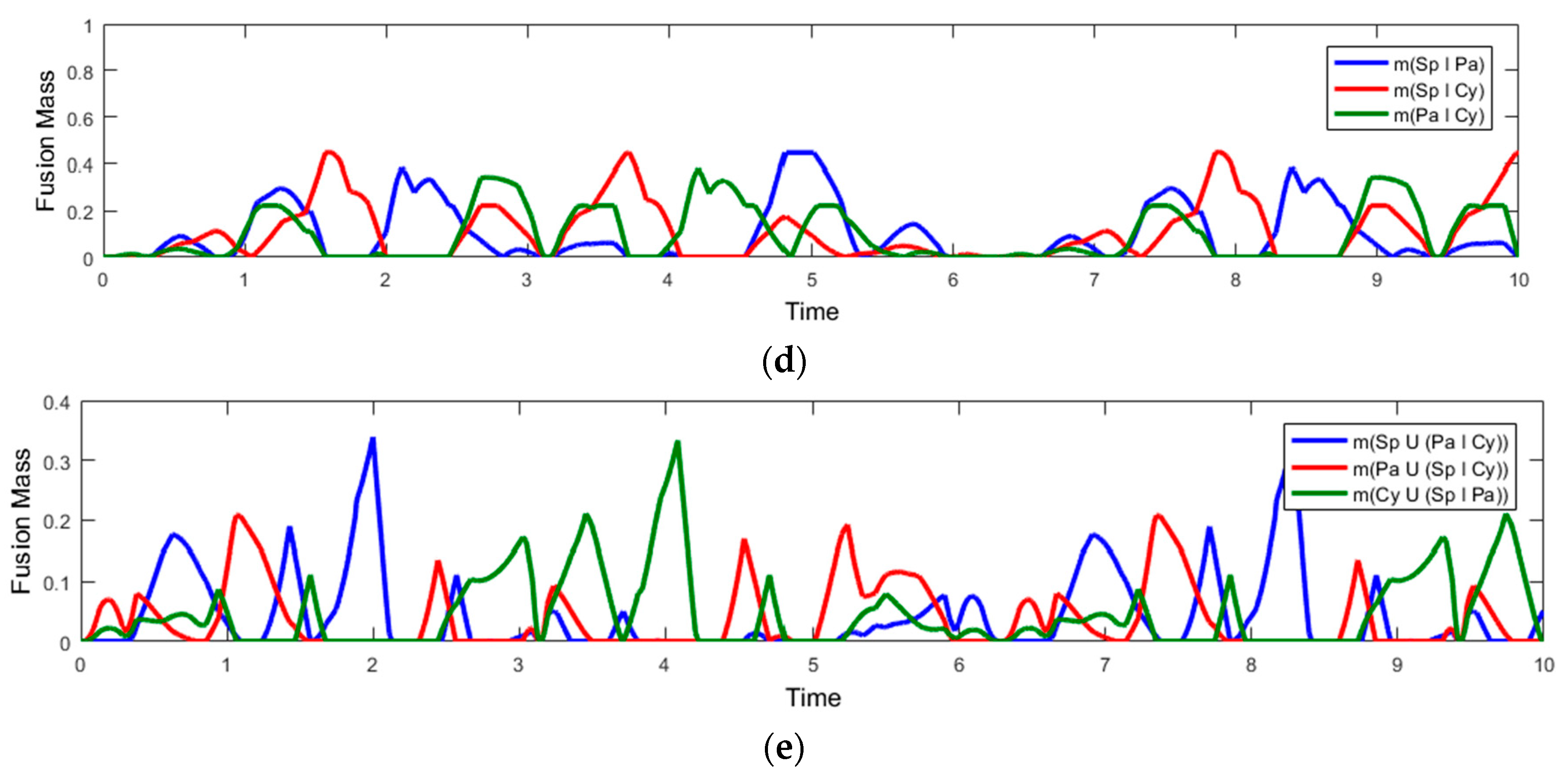

- sub_p2 (Figure 6): this sub-diagram deals with the contradiction between:

  - the certainty that the target object is a ‘sphere’ and the certainty that it is a ‘parallelepiped’;

  - the certainty that the target object is a ‘sphere’ and the certainty that it is a ‘cylinder’;

  - the certainty that the target object is a ‘cylinder’ and the certainty that it is a ‘parallelepiped’.

- sub_p3 (Figure 7): this sub-diagram deals with the uncertainty that the target object is:

  - a ‘sphere’ or a ‘parallelepiped’;

  - a ‘sphere’ or a ‘cylinder’;

  - a ‘cylinder’ or a ‘parallelepiped’.

- Determine .

- If , the contradiction between the certainty value that the target object is a ‘sphere’ and the uncertainty value that it is a ‘cylinder’ or a ‘parallelepiped’ is compared with a threshold determined through an experimental trial-and-error process. If this contradiction is greater than or equal to the chosen threshold, the target object is a ‘sphere’.

- If , the contradiction between the certainty value that the target object is a ‘parallelepiped’ and the uncertainty value that it is a ‘sphere’ or a ‘cylinder’ is compared with the same threshold. If it is greater than or equal to the threshold, the target object is a ‘parallelepiped’.

- If , the contradiction between the certainty value that the target object is a ‘cylinder’ and the uncertainty value that it is a ‘parallelepiped’ or a ‘sphere’ is compared with the same threshold. If it is greater than or equal to the threshold, the target object is a ‘cylinder’.

If none of the three conditions is met, we proceed to the next step:

- Determine

- If , the contradiction between the certainty values that the target object is a ‘sphere’ and a ‘parallelepiped’ is compared with a threshold determined through an experimental trial-and-error process. If this contradiction is greater than or equal to the chosen threshold, we check whether . If this condition is fulfilled, the target object is a ‘sphere’; otherwise, it is a ‘parallelepiped’.

- If , the contradiction between the certainty values that the target object is a ‘sphere’ and a ‘cylinder’ is compared with the same threshold. If it is greater than or equal to the threshold, we check whether . If this condition is fulfilled, the target object is a ‘sphere’; otherwise, it is a ‘cylinder’.

- If , the contradiction between the certainty values that the target object is a ‘cylinder’ and a ‘parallelepiped’ is compared with the same threshold. If it is greater than or equal to the threshold, we check whether . If this condition is fulfilled, the target object is a ‘cylinder’; otherwise, it is a ‘parallelepiped’.

If in none of these situations the contradiction reaches the chosen threshold, we go to the next step:

- Determine

- If , and the uncertainty probability that the target object is a ‘sphere’ or a ‘parallelepiped’ is larger than a threshold determined through an experimental trial-and-error process, we check whether . If the condition is fulfilled, the target object is a ‘sphere’; otherwise, it is a ‘parallelepiped’.

- If , and the uncertainty probability that the target object is a ‘sphere’ or a ‘cylinder’ is larger than the same threshold, we check whether . If the condition is fulfilled, the target object is a ‘sphere’; otherwise, it is a ‘cylinder’.

- If , and the uncertainty probability that the target object is a ‘cylinder’ or a ‘parallelepiped’ is larger than the same threshold, we check whether . If the condition is fulfilled, the target object is a ‘cylinder’; otherwise, it is a ‘parallelepiped’.

If none of the conditions above is fulfilled, we go to the final step:

- Determine

- If , the target object is a ‘sphere’.

- If , the target object is a ‘parallelepiped’.

- If , the target object is a ‘cylinder’.
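The four-step cascade above can be sketched in code. Because the recovered text does not preserve the formulas, this sketch rests on stated assumptions: each "Determine" step is read as selecting the largest of the three quantities examined in that step, the unrecovered pairwise check is read as comparing the two certainty (singleton) masses, and the final step is read as choosing the largest certainty mass. The function name `decide`, its argument layout, and the thresholds `th1`, `th2`, `th3` are hypothetical; the paper tunes its thresholds by trial and error, and the values used below are placeholders.

```python
from itertools import combinations

SHAPES = ("sphere", "parallelepiped", "cylinder")
PAIRS = list(combinations(range(3), 2))  # (0, 1), (0, 2), (1, 2)


def decide(single, vs_rest, pair_contra, pair_union, th1, th2, th3):
    """Hypothetical reading of the four-step decision procedure.

    single[i]          -- certainty that the object is SHAPES[i]
    vs_rest[i]         -- contradiction between certainty i and the uncertainty of the other two
    pair_contra[(i,j)] -- contradiction between the certainties i and j
    pair_union[(i,j)]  -- uncertainty that the object is i or j
    th1, th2, th3      -- thresholds of steps 1 to 3 (placeholders, tuned experimentally in the source)
    """
    def stronger(i, j):
        # Assumed pairwise check: keep the shape with the larger certainty mass
        return SHAPES[i] if single[i] >= single[j] else SHAPES[j]

    # Step 1 (cf. sub_p1): largest certainty-versus-uncertainty contradiction
    i = max(range(3), key=lambda k: vs_rest[k])
    if vs_rest[i] >= th1:
        return SHAPES[i]
    # Step 2 (cf. sub_p2): largest contradiction between two certainties
    i, j = max(PAIRS, key=lambda p: pair_contra[p])
    if pair_contra[(i, j)] >= th2:
        return stronger(i, j)
    # Step 3 (cf. sub_p3): largest two-shape uncertainty; the text uses a strict comparison here
    i, j = max(PAIRS, key=lambda p: pair_union[p])
    if pair_union[(i, j)] > th3:
        return stronger(i, j)
    # Step 4: fall back to the largest certainty
    return SHAPES[max(range(3), key=lambda k: single[k])]


zeros = {p: 0.0 for p in PAIRS}
certainty = [0.1, 0.2, 0.05]  # hypothetical fused certainty masses
print(decide(certainty, [0.0, 0.0, 0.4], zeros, zeros, 0.3, 0.3, 0.3))  # cylinder
unions = {(0, 1): 0.5, (0, 2): 0.1, (1, 2): 0.1}
print(decide(certainty, [0.0, 0.0, 0.0], zeros, unions, 0.3, 0.3, 0.3))  # parallelepiped
```

Reading each step as an argmax means only the strongest candidate of a step is compared with its threshold; if that candidate fails, every other candidate in the step fails too, which matches the text's fall-through to the next step.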

6. Discussion

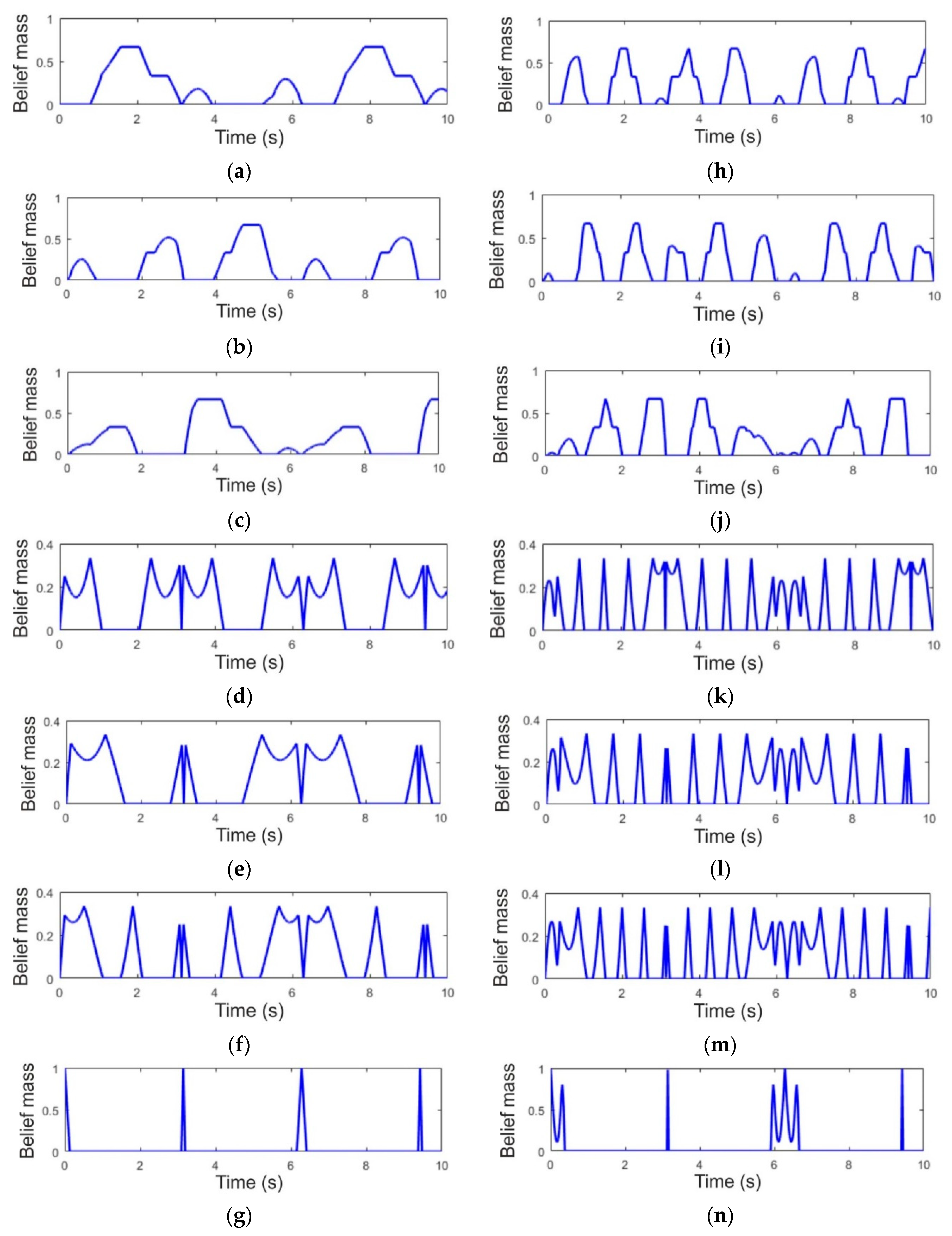

- The certainty probability that the object is a ‘sphere’ (Figure 9a,h)

- The certainty probability that the object is a ‘parallelepiped’ (Figure 9b,i)

- The certainty probability that the object is a ‘cylinder’ (Figure 9c,j)

- The uncertainty probability that the object is a ‘sphere’ or a ‘parallelepiped’ (Figure 9d,k)

- The uncertainty probability that the object is a ‘sphere’ or a ‘cylinder’ (Figure 9e,l)

- The uncertainty probability that the object is a ‘cylinder’ or a ‘parallelepiped’ (Figure 9f,m)

- The uncertainty probability that the object is a ‘sphere’, a ‘cylinder’, or a ‘parallelepiped’ (Figure 9g,n).

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Object | Activity | Grasping Position |
|---|---|---|
| Bottles, cups, and mugs | Transport, pouring/filling | Power: Cylindrical grasping (from the side or the top) |
| Cups (using handles) | Pouring/filling | Power: Lateral grasping; Precision: Prismatic grasping |
| Plates/trays | Transport, receiving from humans | Power: Lateral grasping; Precision: Prismatic grasping; No grasp: Pushing (open hand) |
| Pens, cutlery | Transport | Precision: Prismatic grasping |
| Door handle | Open/close | Power: Cylindrical grasping; No grasp: Hook |
| Small objects | Transport | Power: Spherical grasping; Precision: Circular grasping (tripod) |
| Switches, buttons | Pushing | No grasp: Button pressing |
| Round switches, bottle caps | Rotation | Power: Lateral grasping; Precision: Circular grasping (tripod) |

| Mathematical Representation | Description |
|---|---|
|  | Certainty that the target object is a ‘sphere’ |
|  | Certainty that the target object is a ‘parallelepiped’ |
|  | Certainty that the target object is a ‘cylinder’ |
|  | Uncertainty that the target object is a ‘sphere’ or ‘parallelepiped’ |
|  | Uncertainty that the target object is a ‘sphere’ or ‘cylinder’ |
|  | Uncertainty that the target object is a ‘cylinder’ or ‘parallelepiped’ |
|  | Uncertainty that the target object is a ‘sphere’, ‘cylinder’, or ‘parallelepiped’ |

| Mathematical Representation | Description |
|---|---|
|  | Contradiction between the certainties that the target object is a ‘sphere’ and a ‘parallelepiped’ |
|  | Contradiction between the certainties that the target object is a ‘sphere’ and a ‘cylinder’ |
|  | Contradiction between the certainties that the target object is a ‘cylinder’ and a ‘parallelepiped’ |
|  | Contradiction between the certainty that the target object is a ‘sphere’ and the uncertainty that it is a ‘cylinder’ or ‘parallelepiped’ |
|  | Contradiction between the certainty that the target object is a ‘parallelepiped’ and the uncertainty that it is a ‘sphere’ or ‘cylinder’ |
|  | Contradiction between the certainty that the target object is a ‘cylinder’ and the uncertainty that it is a ‘parallelepiped’ or ‘sphere’ |
|  | Contradiction between the certainties that the target object is a ‘sphere’, a ‘cylinder’, and a ‘parallelepiped’ |

Generalized belief assignment values:

| Hypothesis | 3.14 s, Obs. 1 | 3.14 s, Obs. 2 | 6.28 s, Obs. 1 | 6.28 s, Obs. 2 | 9.42 s, Obs. 1 | 9.42 s, Obs. 2 |
|---|---|---|---|---|---|---|
|  | 0.0001 | 0.0001 | 0.0001 | 0.0001 | 0.0005 | 0.0007 |
|  | 0.0001 | 0 | 0 | 0 | 0.0011 | 0.0067 |
|  | 0 | 0.0008 | 0 | 0 | 0 | 0 |
|  | 0.0106 | 0.0234 | 0.0085 | 0.0085 | 0.0317 | 0.0692 |
|  | 0.0106 | 0.0231 | 0.0085 | 0.0085 | 0.0315 | 0.0669 |
|  | 0.0106 | 0.0231 | 0.0085 | 0.0085 | 0.0312 | 0.0662 |
|  | 0.9680 | 0.9296 | 0.9744 | 0.9744 | 0.9040 | 0.7904 |

Fusion values:

| Hypothesis | 3.14 s | 6.28 s | 9.42 s |
|---|---|---|---|
|  | 0.0006 | 0.0001 | 0.0054 |
|  | 0.0006 | 0.0003 | 0.0053 |
|  | 0.0012 | 0.0002 | 0.0106 |
|  | 0.0328 | 0.0166 | 0.0898 |
|  | 0.0325 | 0.0166 | 0.0875 |
|  | 0.0324 | 0.0166 | 0.0866 |
|  | 0.8999 | 0.9495 | 0.7145 |
|  | 0 | 0 | 0 |
|  | 0 | 0 | 0 |
|  | 0 | 0 | 0 |
|  | 0 | 0 | 0.0001 |
|  | 0 | 0 | 0.0001 |
|  | 0 | 0 | 0.0002 |
| Decision | Cylinder | Sphere | Cylinder |

| Method | Execution Time (s) |
|---|---|
| Data neutrosophication for Obs. 1 | 0.0026 |
| Data neutrosophication for Obs. 2 | 0.0026 |
| Data fusion using DSmT | 0.0002 |
| Data deneutrosophication/decision-making | 0.0092 |
| Total time | 0.0146 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Gal, I.-A.; Bucur, D.; Vladareanu, L. DSmT Decision-Making Algorithms for Finding Grasping Configurations of Robot Dexterous Hands. Symmetry 2018, 10, 198. https://doi.org/10.3390/sym10060198

Gal I-A, Bucur D, Vladareanu L. DSmT Decision-Making Algorithms for Finding Grasping Configurations of Robot Dexterous Hands. Symmetry. 2018; 10(6):198. https://doi.org/10.3390/sym10060198

Gal, Ionel-Alexandru, Danut Bucur, and Luige Vladareanu. 2018. "DSmT Decision-Making Algorithms for Finding Grasping Configurations of Robot Dexterous Hands" Symmetry 10, no. 6: 198. https://doi.org/10.3390/sym10060198