1. Introduction

Cloud and shadow detection in satellite images is a crucial step before analysis, because clouds and shadows disturb the modelling of land cover parameters from the reflectance values. With new, frequently imaging satellites such as Suomi NPP (National Polar-orbiting Partnership) and their potential for near real-time (NRT) operational applications, the detection method has to be robust and automated so that analysis can proceed without extra delays.

The Suomi NPP satellite was launched in October 2011 by NASA (National Aeronautics and Space Administration) to monitor and predict climate change and weather conditions over land, sea and atmosphere. Its optical VIIRS (Visible Infrared Imaging Radiometer Suite) instrument provides, for instance, critical data for environmental assessments, forecasts and warnings [1]. In this study, the main motivation was to provide cloud-free Suomi NPP VIIRS imagery for daily snow mapping of the Fennoscandia area.

The VIIRS cloud mask (VCM) algorithm was developed and tested in the Calibration-Validation (CalVal) program of the Joint Polar Satellite Systems [1,2]. The VCM algorithm uses both the moderate resolution 'M' bands (750 m at nadir) and the imagery resolution 'I' bands (375 m at nadir) in pixel-level decisions on the presence of clouds [3]. The cloud tests used for each pixel are a function of the surface type (water, land, desert, coast, snow or ice), so the surface type has to be known beforehand in order to apply the VCM algorithm. The cloud tests also differ between daytime and night-time (solar zenith angle ≥85°) images. The cloud tests provide an overall probability of cloud, on the basis of which the pixel is finally classified as confidently cloudy, probably cloudy, probably clear or confidently clear. The method is clear-sky conservative: even when the probability of cloud is low, the pixel is not assigned to clear sky. The higher resolution 'I' bands are used only after these initial assignments, and only on ocean-coast pixels, to check whether a confidently clear pixel should be changed to one of the cloudier classes.

Hutchinson et al. [3] compared the VCM mask to a manually generated cloud mask over Golden Granules and to CALIOP-VIIRS match-ups. The Golden Granules are binary cloud masks over challenging VIIRS instances, assessed by several experts. CALIOP is the Cloud-Aerosol Lidar with Orthogonal Polarisation on board the CALIPSO satellite (Cloud-Aerosol Lidar and Infrared Pathfinder Satellite Observation). VIIRS and CALIOP are in alignment for about 1–2 orbits every 3–4 days. Even then, the two sensors are only within 20 min of each other, so the cloud situation has time to change. The important result was that the performance was consistent with both sources of reference data. Against both the manual and the CALIOP-based cloud references, the VCM showed a similarly high probability of correct cloud classification, at least 93.9% in each case on ocean, land, and desert backgrounds [3]. The percentages of missed clouds and false alarms also met the performance goals of 1–8% for the Validation Phase-2 maturity level.

Piper and Bahr [4] presented a rapid cloud-masking algorithm that used the 'I' bands of the VIIRS images. It identified cloud pixels using reflectance values at 0.64 μm, 0.865 μm and 1.61 μm, and temperature values at 11.45 μm. Cloud pixels over snow-covered areas were identified by first classifying the snow areas with the NDSI (Normalized Difference Snow Index [5,6]) and then identifying cloud pixels by their reflectance at 0.865 μm. The results from this VIBCM (VIIRS I-Band Cloud Mask) algorithm were compared to the results from the VCM algorithm. The advantage of the VIBCM algorithm is that it can quickly compute the cloud mask at 375 m resolution. However, it was not as accurate as VCM. The hit rate of cloud pixels (cloud in both the VCM and VIBCM cloud masks) was 72.0% in the Hawaii area, 77.9% in the Eastern United States area, and 94.5% in the Northern Europe area. The corresponding false alarm rates (cloud in the VIBCM cloud mask but not in the VCM cloud mask) were 3.7%, 8.4% and 42.1%, respectively. Piper and Bahr pointed out three main issues with the method. The first was a likely temperature dependency: as temperatures decreased from Hawaii to Northern Europe, the number of missed cloud pixels decreased but the number of false alarms increased. The second was that the accuracy assessment was done by comparison to the results of another cloud masking method, here VCM, which has its own accuracy issues. The third was that, although one scene in the study contained snow, the VIBCM failed to identify it. Piper and Bahr [4] concluded that additional work is needed to differentiate between snow and cold clouds, using a range of VIIRS images that contain snow, cold surface temperatures and cold clouds. Shadow detection was not assessed in [4].
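The hit rate and false alarm rate quoted above can be illustrated with a minimal Python sketch that compares a candidate cloud mask against a reference mask. The function name `compare_masks` and the choice of denominators (reference cloud pixels for the hit rate, reference clear pixels for the false alarm rate) are assumptions made for illustration; the text does not state the exact normalisation used in [4].

```python
def compare_masks(reference, candidate):
    """Return (hit_rate, false_alarm_rate) in percent for two binary masks.

    Both masks are lists of rows with 1 = cloud and 0 = clear.
    Assumption: hit rate is normalised by reference cloud pixels and
    false alarm rate by reference clear pixels.
    """
    hits = misses = false_alarms = clear_agree = 0
    for ref_row, cand_row in zip(reference, candidate):
        for ref, cand in zip(ref_row, cand_row):
            if ref and cand:
                hits += 1            # cloud in both masks
            elif ref:
                misses += 1          # cloud only in the reference
            elif cand:
                false_alarms += 1    # cloud only in the candidate
            else:
                clear_agree += 1     # clear in both masks

    ref_cloud = hits + misses
    ref_clear = false_alarms + clear_agree
    hit_rate = 100.0 * hits / ref_cloud if ref_cloud else float("nan")
    false_alarm_rate = (
        100.0 * false_alarms / ref_clear if ref_clear else float("nan")
    )
    return hit_rate, false_alarm_rate
```

With these denominators the two rates do not have to sum to 100%, which is consistent with the figures reported for the three test areas.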

Cloud masking methods have also been developed for images from MODIS (Moderate Resolution Imaging Spectroradiometer), the sensor closest to Suomi NPP VIIRS in spatial resolution and spectral bands. Luo et al. [7] presented a method to classify cloud, shadow, snow and ice, water bodies, vegetated lands and non-vegetated lands from MODIS 250-m imagery. The algorithm uses seven MODIS bands between 0.459 μm and 2.155 μm at 250 m resolution, with a number of thresholds for reflectance values, differences and ratios. Shadows are mapped by projecting the clouds to the ground, using the sun angle and an estimated cloud elevation range from 0.5 km to 12 km. Shadow areas are confirmed with the ratios between the 1.64 μm and 0.47 μm bands, and between the 0.86 μm and 0.47 μm bands. The accuracy assessment was based on visual comparison to the MODIS standard cloud and shadow masks, and showed that the new cloud and shadow masks were more detailed and more comprehensive. Luo et al. [7] concluded that more quantitative analyses are required to understand the performance of the method over a range of input scenes in various seasons.
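The geometry behind projecting a cloud to the ground can be sketched as follows. This is a simplified illustration, not the implementation of [7]: it assumes a nadir view, flat terrain, and a solar azimuth measured clockwise from north, and the function names are hypothetical. The 0.5 km and 12 km defaults are the elevation range quoted above.

```python
import math


def shadow_offset_km(cloud_height_km, solar_zenith_deg, solar_azimuth_deg):
    """Ground offset (east, north) in km from a cloud to its shadow.

    Assumptions: nadir view, flat terrain, azimuth clockwise from north.
    The shadow falls on the side of the cloud facing away from the sun.
    """
    distance = cloud_height_km * math.tan(math.radians(solar_zenith_deg))
    azimuth = math.radians(solar_azimuth_deg)
    east = -distance * math.sin(azimuth)
    north = -distance * math.cos(azimuth)
    return east, north


def shadow_search_range(solar_zenith_deg, solar_azimuth_deg,
                        min_height_km=0.5, max_height_km=12.0):
    """Offsets for the lowest and highest assumed cloud heights."""
    near = shadow_offset_km(min_height_km, solar_zenith_deg, solar_azimuth_deg)
    far = shadow_offset_km(max_height_km, solar_zenith_deg, solar_azimuth_deg)
    return near, far
```

Because the cloud height is only known as a range, the shadow of a given cloud pixel can lie anywhere on the line segment between the two returned offsets, which is why the projection is then confirmed with spectral tests.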

The SWIR (Short-Wave Infrared) band at 1.38 μm (cirrus band), common to both MODIS and VIIRS, is located in a very strong water vapour absorption region and shows only the upper layers of the atmosphere. This band has been used successfully to detect upper atmosphere clouds from MODIS imagery [8,9] and works well with thin cirrus clouds that are otherwise difficult to detect [10]. Zhu et al. [11] concluded that the cirrus band is more helpful than the thermal band in cloud detection.

Snow and clouds have similar reflectance in the visible and near infrared (NIR) bands, but the reflectance of snow at 1.6 μm is lower than that of clouds [12]. Both Piper and Bahr [4] and Dozier [12] used this band in the NDSI (Normalized Difference Snow Index) for snow detection, with the difference that Dozier proposed the green band and Piper and Bahr the red band as the visible band of the normalized difference.
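The NDSI is a normalized difference between a visible band and the 1.6 μm band, NDSI = (VIS − SWIR) / (VIS + SWIR). A minimal Python sketch of both variants follows; the function name and the reflectance values in the usage example are illustrative assumptions, not values from the study.

```python
def ndsi(visible, swir):
    """Normalized Difference Snow Index for one pixel.

    visible: reflectance in the visible band (green in Dozier's variant,
             red in Piper and Bahr's variant)
    swir:    reflectance in the 1.6 um band
    """
    total = visible + swir
    if total == 0:
        return 0.0  # avoid division by zero on empty pixels
    return (visible - swir) / total


# Hypothetical reflectances: snow is bright in the visible bands and
# dark at 1.6 um, so both variants give a high index.
ndsi_green = ndsi(visible=0.80, swir=0.10)  # Dozier-style, green band
ndsi_red = ndsi(visible=0.75, swir=0.10)    # Piper-and-Bahr-style, red band
```

Clouds stay bright at 1.6 μm, so their index is lower than that of snow, which is what makes the index usable for separating the two.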

Metsämäki et al. [13] described a cloud screening method designed for low-resolution satellite imagery, such as Terra/MODIS, ERS-2/ATSR-2 (European Remote Sensing, Along Track Scanning Radiometer), Envisat/AATSR (Advanced Along Track Scanning Radiometer), Suomi NPP VIIRS, and future Sentinel-3 SLSTR (Sea and Land Surface Temperature Radiometer) imagery. The method uses the wavelength bands at 0.55 μm, 1.6 μm, 3.7 μm, 11 μm and 12 μm, which are common to these sensors. It is based on several empirically determined decision rules derived from selected training areas representing clouds, snow-covered terrain, partially snow-covered terrain and snow-free terrain. The method is used in the generation of GlobSnow data [13].

FMask is the state-of-the-art method for cloud, shadow and snow detection from Landsat 8 images. It uses the temperature bands to detect clouds at different heights [11,14]. The height of the cloud is then also used in shadow detection, where the cloud is first projected to the ground before further analysis and detection of shadow pixels. The spectral bands of the new Sentinel-2 optical imagery are also available on the VIIRS instrument. Zhu et al. [11] note that it is challenging to design a good cloud detection algorithm for Sentinel-2, because it does not have thermal bands and most cloud detection algorithms depend heavily on the thermal band, as cloud pixels are much colder than clear-sky pixels.

Multi-temporal methods have also been developed for cloud and shadow detection (Hagolle et al. [15] and Zhu et al. [16]). Multi-temporal cloud detection is usually more robust than single-date approaches. Multi-temporal approaches are well suited to time series analysis applications; however, they significantly increase data volumes and computational costs for applications such as single-date classifications over wide areas. Land cover changes also bring new challenges to multi-temporal approaches. Single-date approaches are easier to implement for NRT applications.

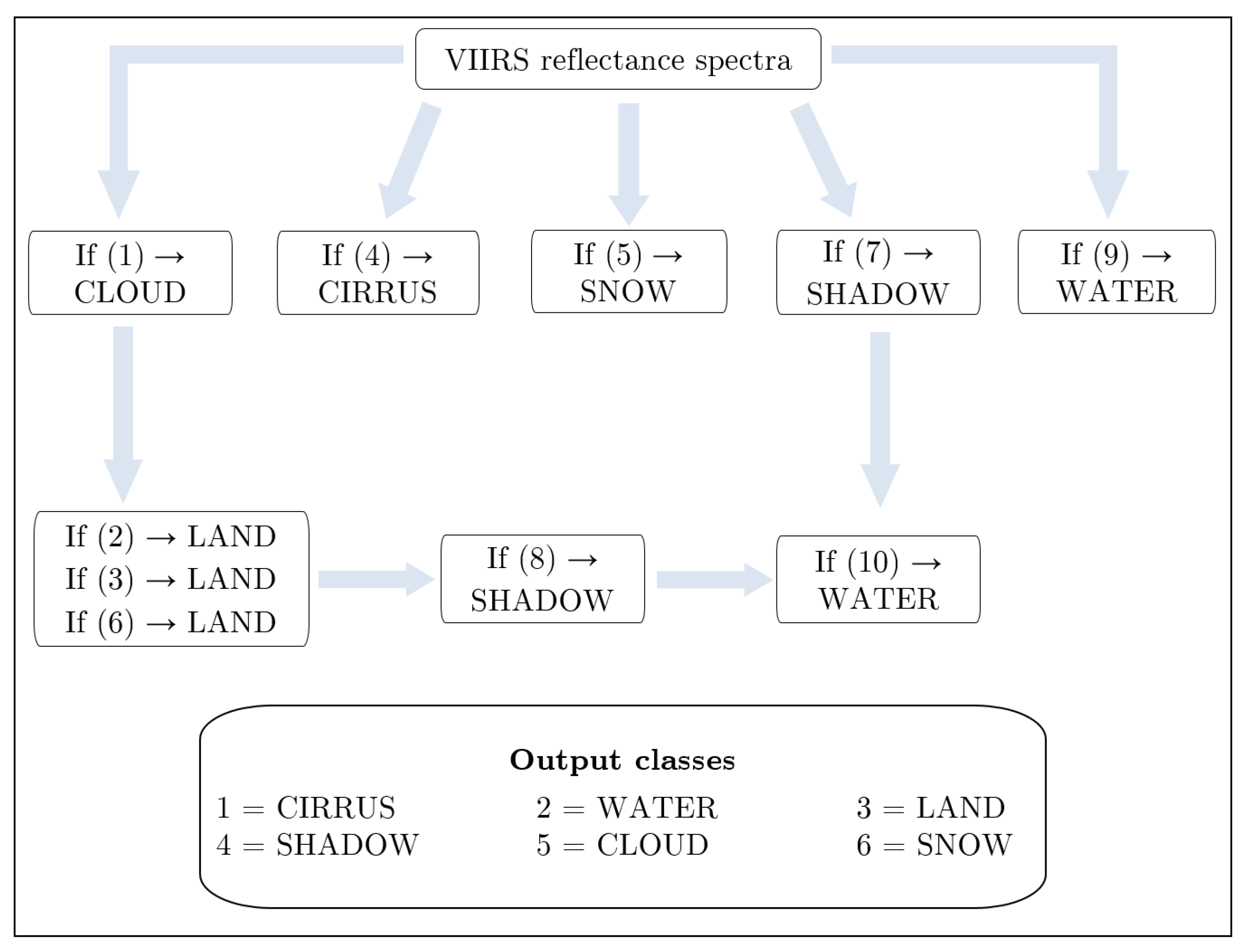

In this paper, we propose an automatic cloud and shadow detection method for optical satellite images (e.g., Suomi NPP VIIRS or Sentinel-2). The method uses neither thermal bands nor multi-temporal analysis. Thermal bands are highly sensitive to atmospheric temperature variance during different seasons and consequently need time- and space-specific thresholds [11]. One major advantage of not including the thermal bands in the algorithm is that the method is then also applicable to Sentinel-2 images, which do not have these bands. For shadow detection, we present a novel method based on the ratio between blue and green reflectances, which does not rely on cloud projection. The method is tested with VIIRS data in the boreal region, with an abundance of dense forest intermingled with water areas, and with snow and ice areas during several winter months. The test imagery covers Finland, large parts of Sweden and Norway with snow-covered mountains, and small parts of other neighbouring countries such as Russia and the Baltic countries.

2. Suomi NPP VIIRS Imagery

Suomi NPP VIIRS images are acquired daily, with 5 high resolution bands (I-bands) at 375 m and 16 moderate resolution bands (M-bands) at 750 m. Because the M-bands include more spectral information for cloud detection and contain the spectral bands of Sentinel-2, M-bands were selected as the basis for cloud detection.

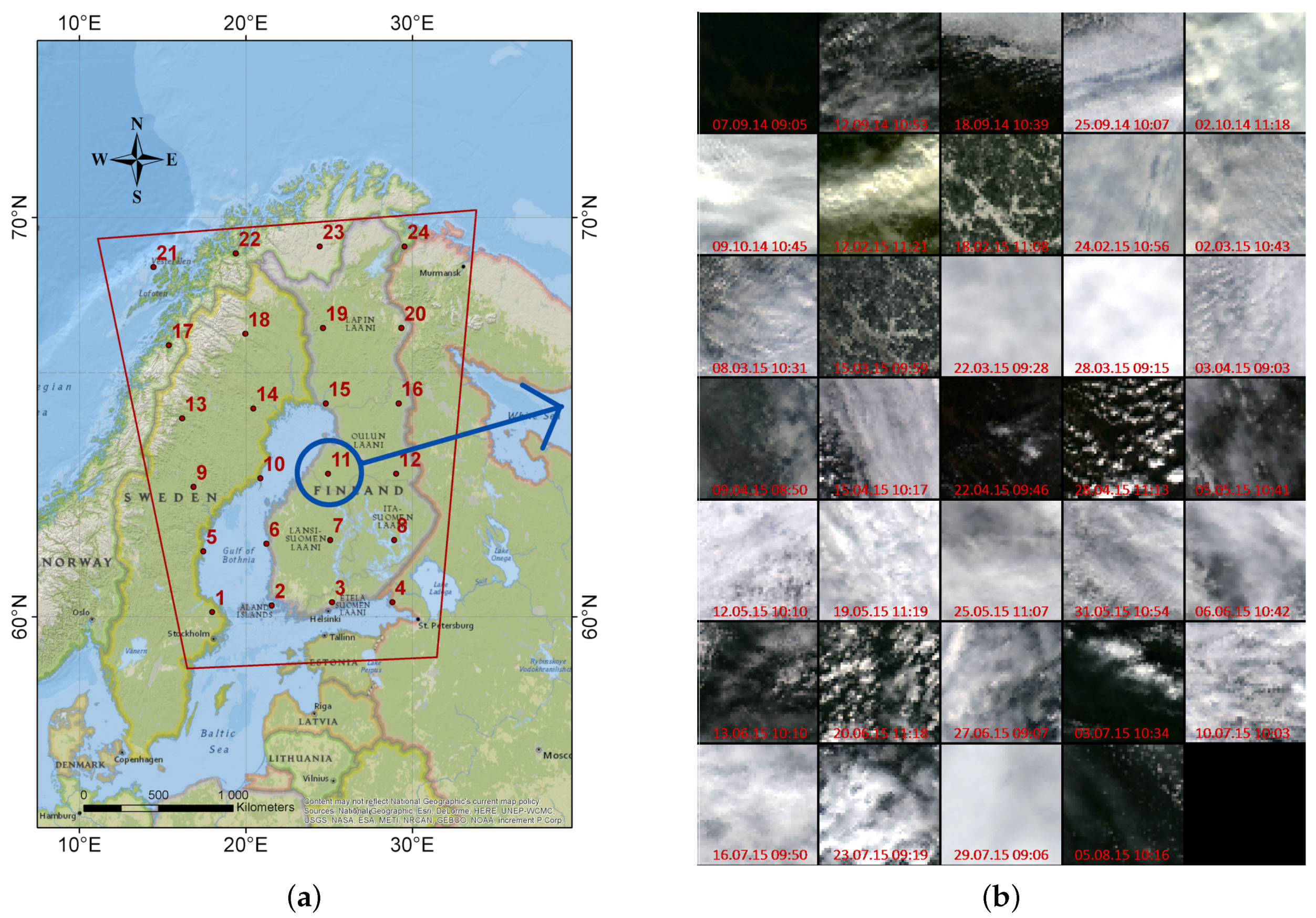

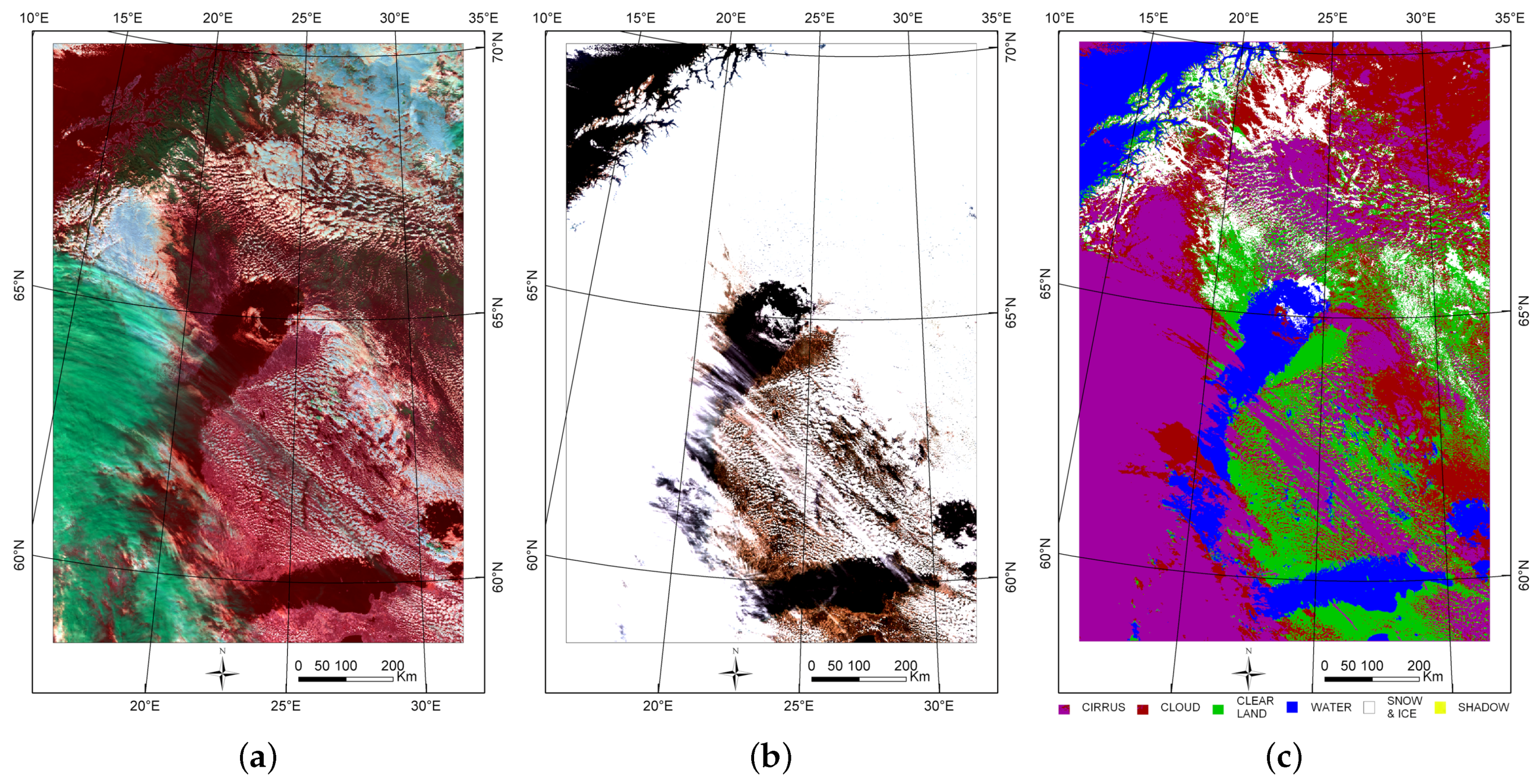

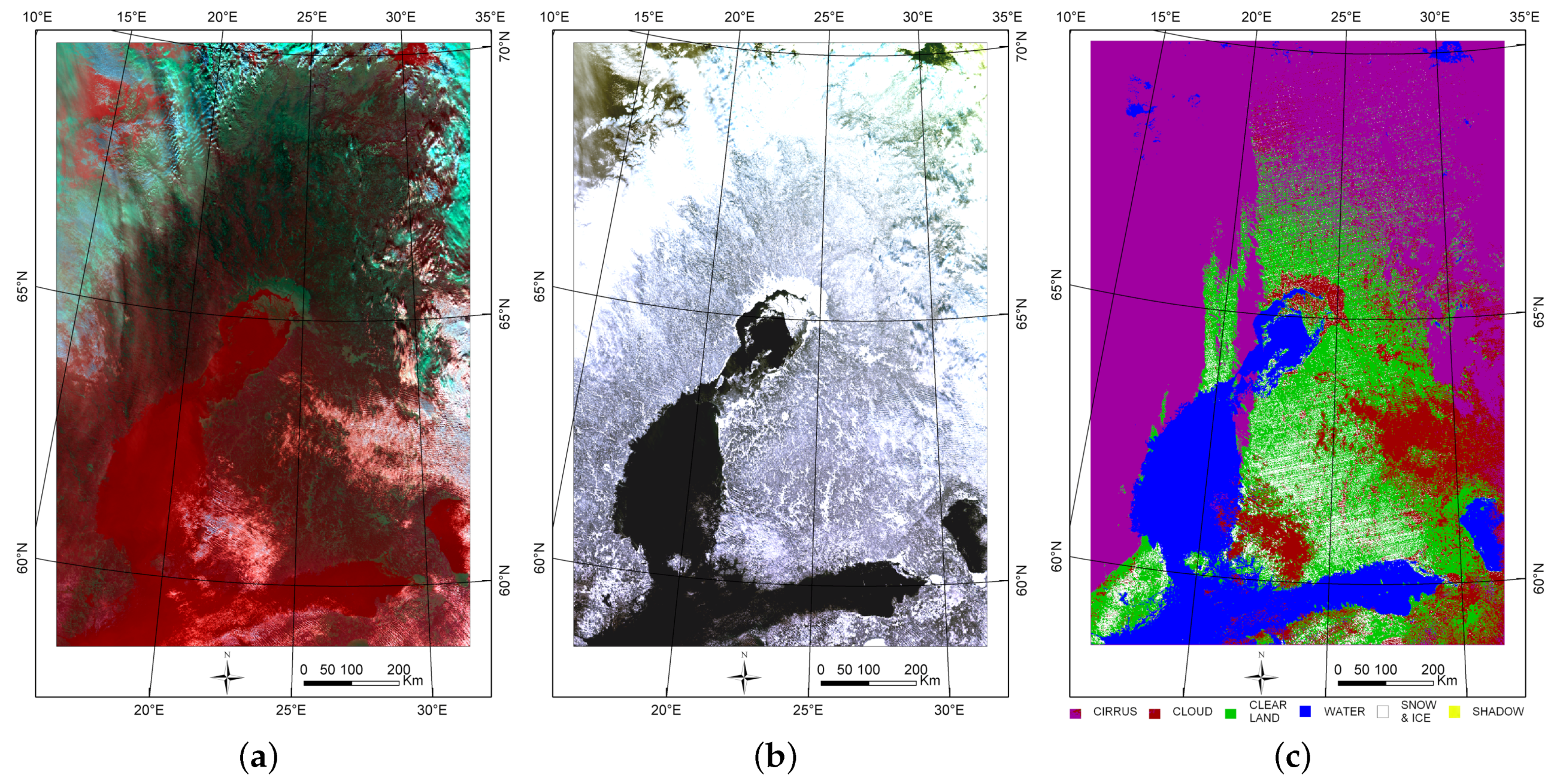

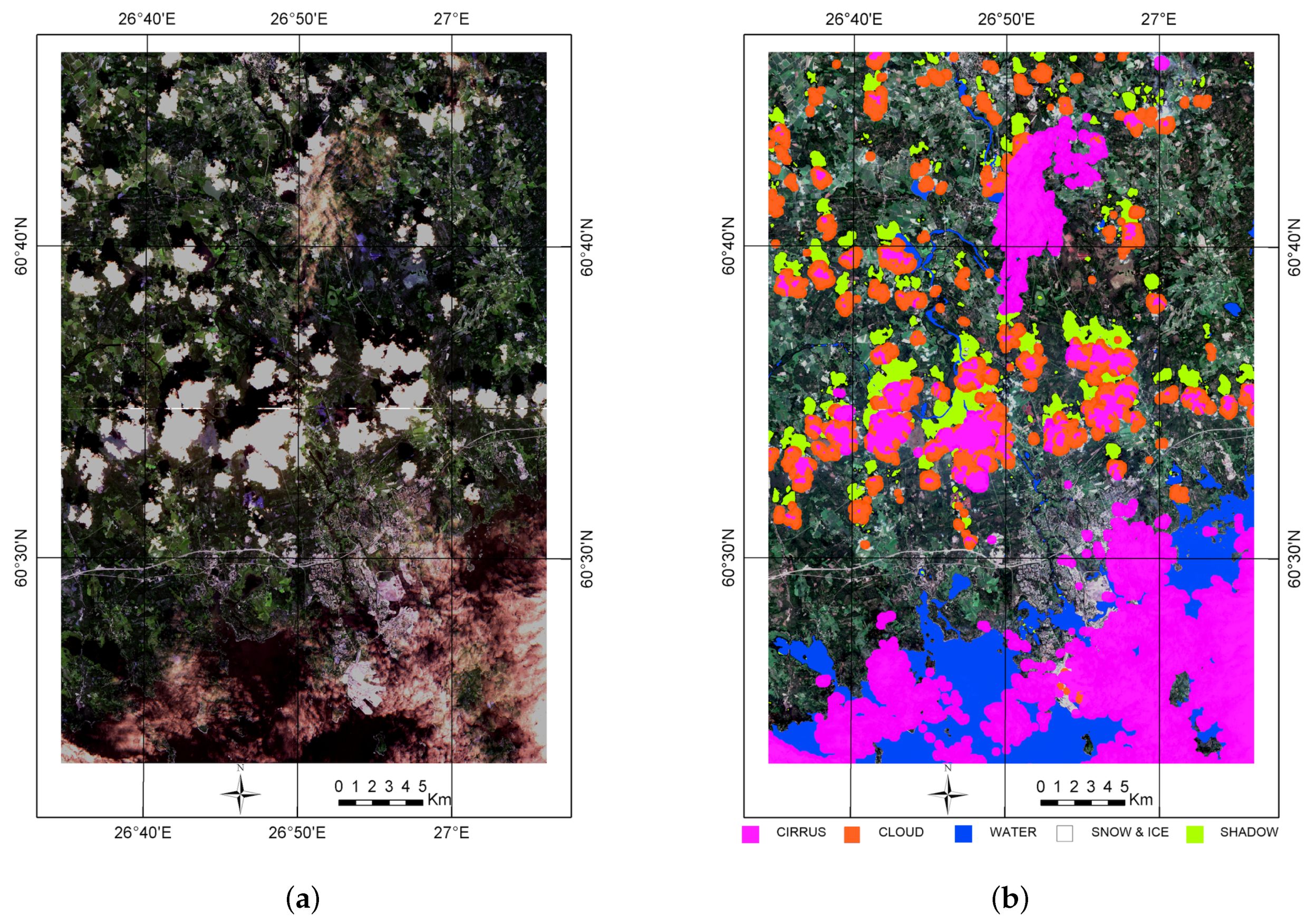

A total of 747 VIIRS images were acquired for the method development. The images are from the boreal zone and cover the whole of Finland, as well as parts of Scandinavia and Russia (Figure 1a).

Seven hundred and forty-four VIIRS images (from 2 September 2014 to 25 April 2016) were downloaded from the receiving station of the Finnish Meteorological Institute in Sodankylä, Finland. To obtain some less cloudy summer images, three more images (4–6 August 2014) were downloaded from the CLASS (Comprehensive Large Array Stewardship System) archive of NOAA (National Oceanic and Atmospheric Administration). The VIIRS images were georeferenced in Sodankylä, and only the relatively small rectified VIIRS images were downloaded to local computers. The images were resampled to 1 × 1 km pixel size in UTM zone 35/WGS84, Northern hemisphere, with nearest neighbour interpolation.

The atmospheric correction (conversion to surface reflectance, or BOA reflectance: Bottom Of Atmosphere) of Suomi NPP/VIIRS data was implemented in the VTT in-house smac_viirs tool, which uses the SMAC algorithm (Simplified Model for Atmospheric Correction) by Rahman and Dedieu [17]. The following atmospheric values were used in the correction: Aerosol Optical Depth (AOD), water vapour = 3.0 g/cm², ozone = 0.3 atm/cm and pressure P = 1013 hPa. A low value of AOD was used in order to preserve the effect of clouds and haze in the reflectance values. At the time of processing (3 June 2015) there were no SMAC coefficient files for the bands of the VIIRS instrument. Coefficient files of the closest bands of other satellite sensors were used for the VIIRS bands (see Table 1).

5. Discussion

The paper presented an automated method to classify clouds and shadows from Suomi NPP VIIRS and Sentinel-2 images.

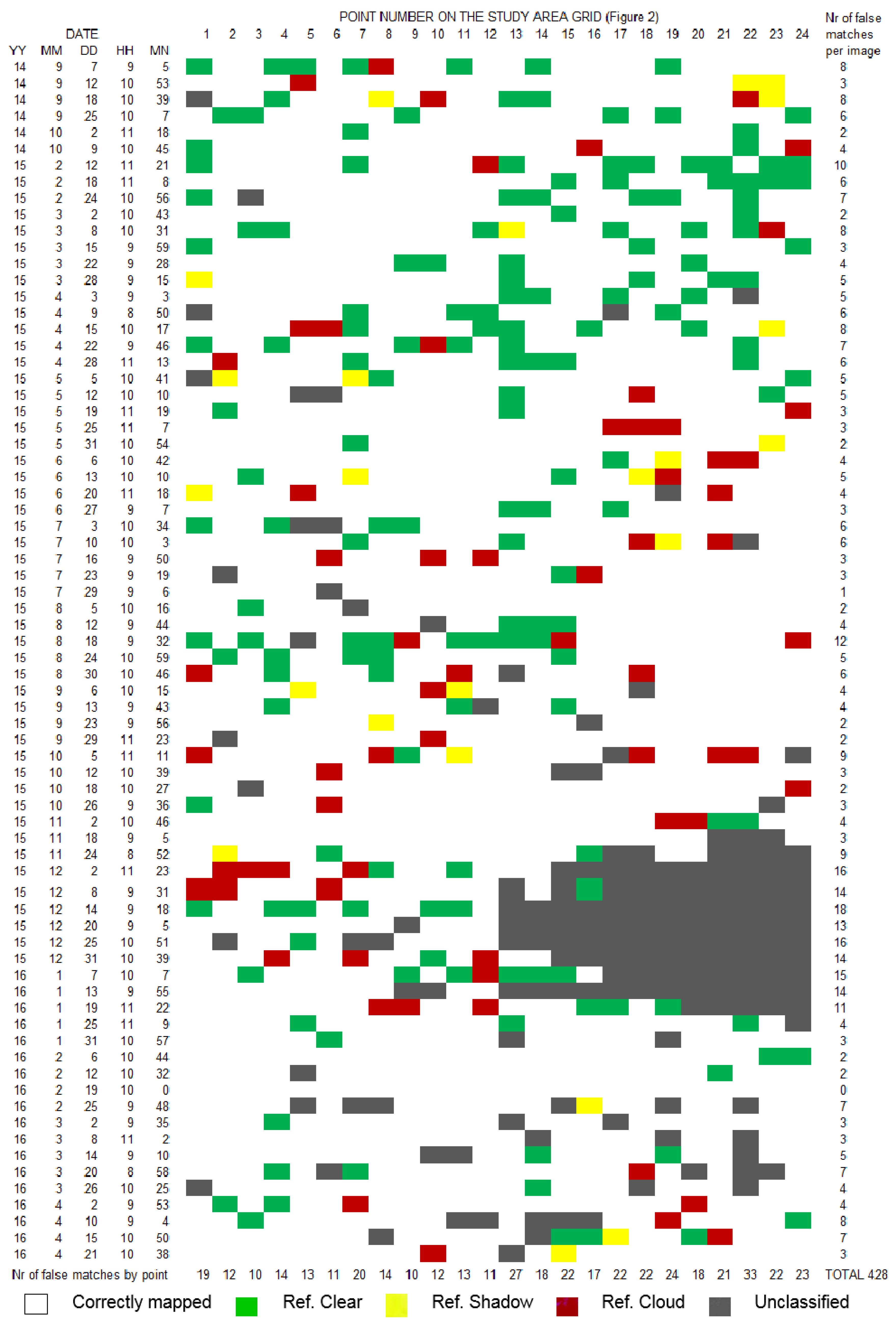

Thin clouds remained problematic to detect. When the cloud threshold of the visible bands was lowered to detect thin clouds, the number of built-up areas falsely detected as cloud increased. Because the reference points were placed on a grid, they fell arbitrarily in the center or on the edges of the clouds and shadows. Reference points in the center of the clouds or shadows would have been easier to detect correctly.

The ratio between blue and green detected shadows, but thin shadows consisting of mixed pixels (one to two pixels wide) remained undetected. Some of the shadows were confused with shallow water and dense coniferous forest. Cloud shadows falling on other clouds were also problematic to detect. Sometimes the shadow originates from something other than a cloud, for instance in mountainous areas. However, this cannot be seen as a defect of the method, because these areas also need special attention during analysis. For applications that are very sensitive to omitted shadows and clouds (e.g., model-based forest variable estimation), it is more desirable to reduce omissions as much as possible, even at the cost of more false alarms. In optical satellite images, shadows are expected to appear in the vicinity of clouds along the sun angle direction [14]. One way to reduce the omission of shadows adjacent to detected clouds would be to dilate the cloud masks by several pixels along the sun angle direction. However, omitted shadows that are not contiguous with any cloud would require such a wide buffer around the cloud masks that many clear pixels would be unnecessarily masked out, further increasing the false alarm rate.
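The directional dilation discussed above could look like the following Python sketch. It is an illustration of the idea only and was not part of the tested method; the function name, the `(d_row, d_col)` step convention and the number of pixels are hypothetical parameters that would have to be derived from the sun angle and pixel size of each image.

```python
def dilate_along_direction(mask, step, n_pixels):
    """Extend a binary mask by n_pixels along one grid direction.

    mask:     list of rows with 1 = cloud, 0 = other
    step:     (d_row, d_col) unit step pointing away from the sun,
              e.g. (-1, 0) if shadows fall towards the top of the image
    n_pixels: how many pixels to extend each cloud pixel
    """
    rows, cols = len(mask), len(mask[0])
    d_row, d_col = step
    out = [list(row) for row in mask]  # copy, keep original cloud pixels
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c]:
                continue
            for k in range(1, n_pixels + 1):
                rr, cc = r + k * d_row, c + k * d_col
                if 0 <= rr < rows and 0 <= cc < cols:
                    out[rr][cc] = 1
    return out
```

Unlike an isotropic buffer, this extends the mask only on the side where shadows are expected, which limits the number of clear pixels that are masked out unnecessarily.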

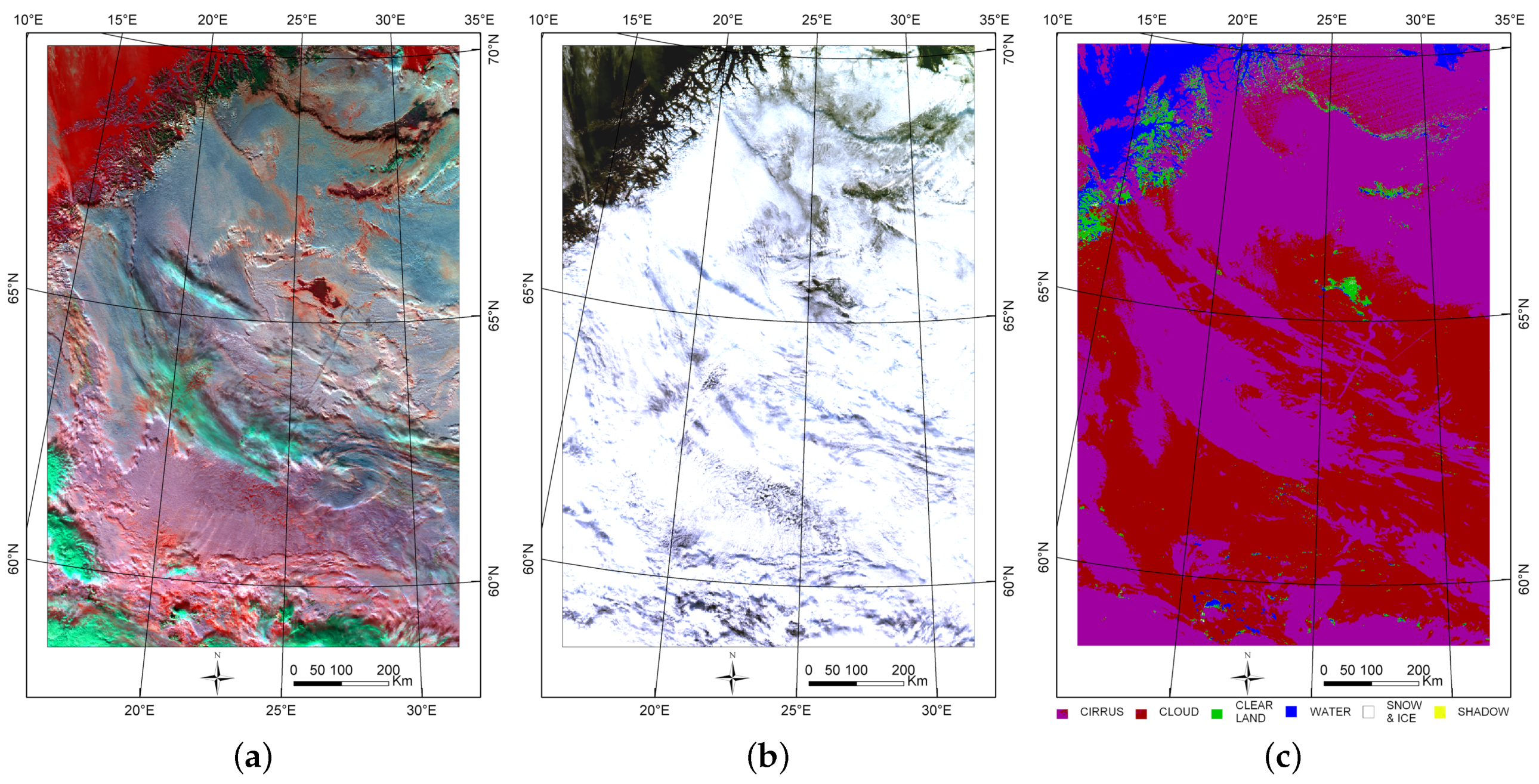

Because of the low sun angle, separating snow and ice from clouds was challenging in winter images. The snow index worked quite well, but part of the snow was confused with clouds. In winter images, the order of cirrus and snow detection affected the result. If snow and ice detection was performed after cirrus cloud detection, the snow detection found ice-covered lakes under the cirrus clouds. More generally, the threshold for cirrus detection is subjective, and although the same threshold gave good results for images from all seasons, some applications may need imagery with less cirrus cover removed. Cirrus clouds are not visible in the visible bands, so applications using only visible bands may use a higher threshold for cirrus detection. The subjective threshold can then be selected interactively from the cirrus band image. During the winter season, from the end of November to the end of January, the visual interpretation could not be done in the northern part of Fennoscandia (with latitudes north of

) due to the low sun angle. In February 2015, a slightly larger proportion of false alarms for clouds was observed at latitudes north of about

(Figure 8). This did not show in the February 2016 results. The reason may be that in 2015 the thermal spring came one month earlier than in 2016 (according to the Finnish Meteorological Institute, http://www.fmi.fi), so there were more clear, melted or nearly melted land pixels in the north. These pixels were wrongly assigned to cirrus or cumulus clouds and decreased the separation between clear land and cloud, which was the biggest mismatch in Table 3, with 120 occurrences.

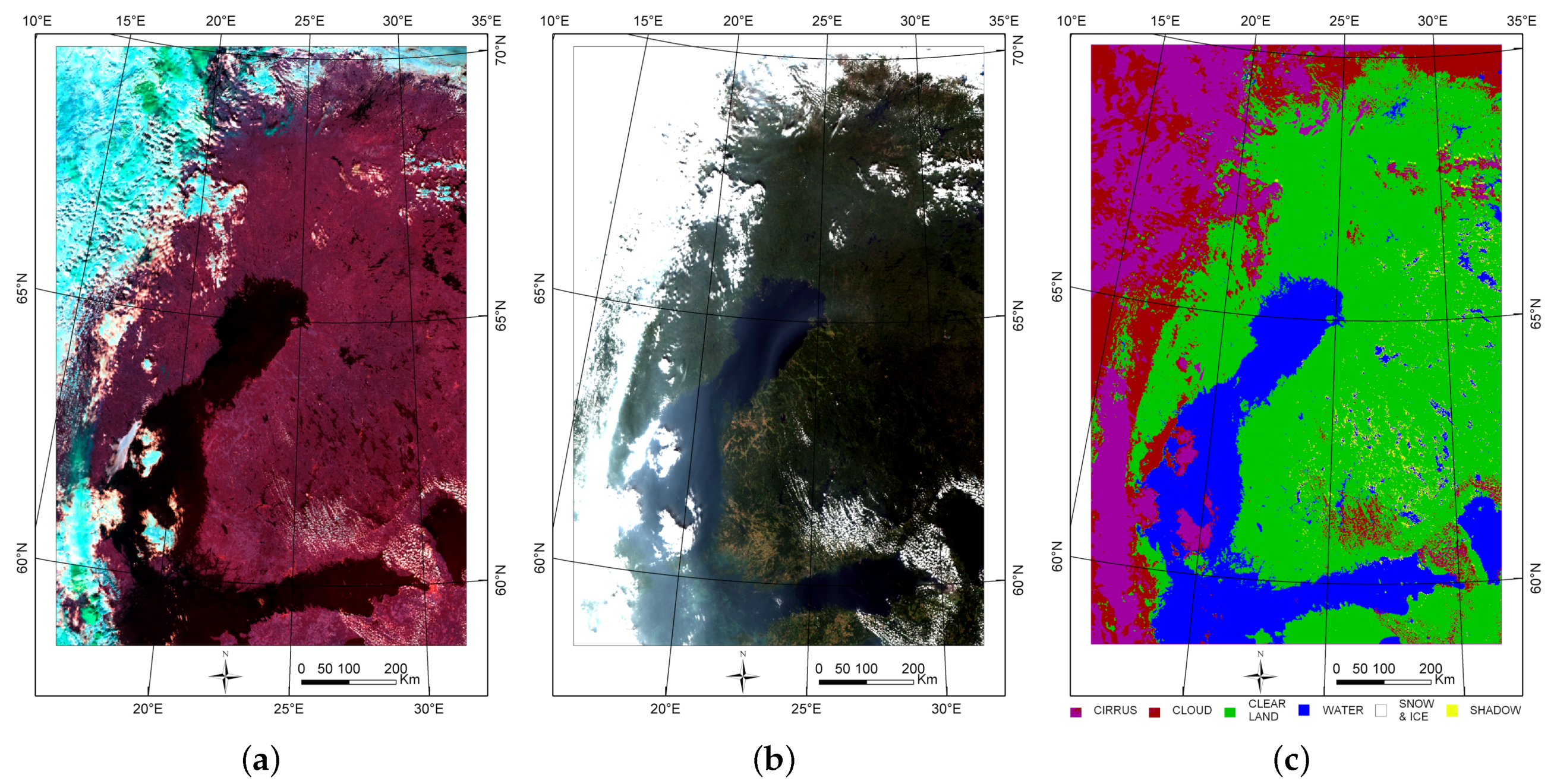

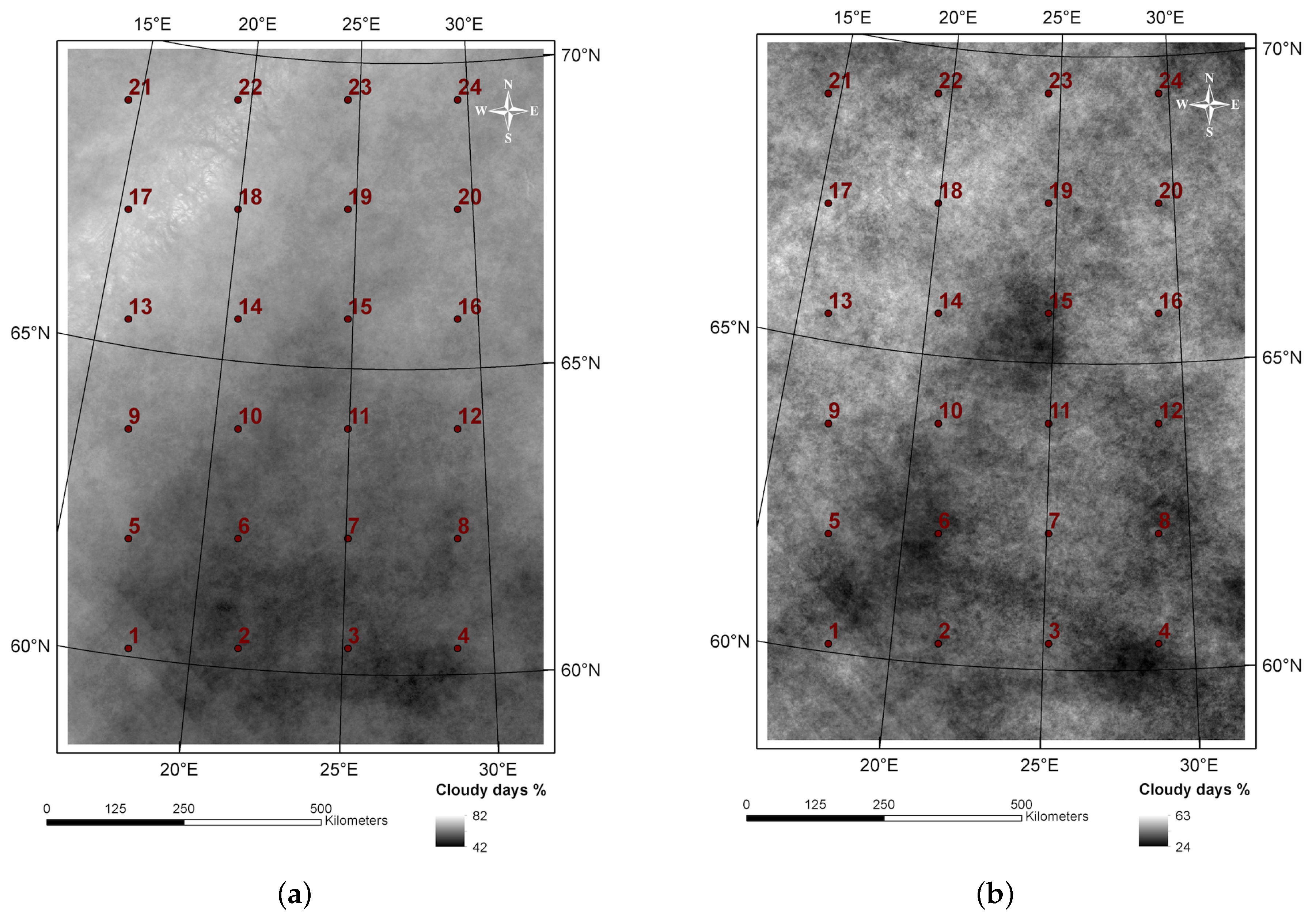

Pixel-wise percentages of cloudy days (Figure 8) were calculated from the 747 images based on the cloud and shadow masks. The percentage of cloudy days from the whole dataset in Figure 8a, which also includes winter images, showed snow and ice areas in northern Norway (north-west part of the image) to be more frequently covered by clouds. This can be an error in cloud interpretation, or an indication of high cloud cover over high mountains that also have snow or ice cover during all seasons. In both images, the sea and large lake areas were less cloudy than other areas. This was because some thin clouds and their shadows are difficult to separate from water, but also because cumulus clouds typically do not occur over sea areas. The statistics images also highlighted noise striping in the south-west corner of the area, which was most probably due to the scanning instrument or the pre-processing. Otherwise, the statistics images did not show any systematic features that could be attributed to biases in the cloud detection algorithm.
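The pixel-wise statistic behind Figure 8 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: each image contributes one binary mask (1 where the pixel was flagged as cloud or shadow), all masks share the same grid, and the function name is hypothetical.

```python
def cloudy_percentage(masks):
    """Per-pixel percentage of masks in which the pixel is flagged.

    masks: list of 2-D binary masks (lists of rows), one per image,
           all with identical dimensions; 1 = cloud or shadow.
    """
    n_images = len(masks)
    rows, cols = len(masks[0]), len(masks[0][0])
    return [
        [100.0 * sum(m[r][c] for m in masks) / n_images for c in range(cols)]
        for r in range(rows)
    ]
```

Systematic spatial patterns in the resulting image, such as the striping noted above, point to sensor or processing effects rather than to real cloud climatology.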

The results of the proposed method can be compared to published results from other methods applied to VIIRS images in Northern Europe. For the proposed method, the correct detection rate of clouds was 94.2% and the false alarm rate 11.1%. For the VIBCM method in the Northern Europe area [4], a slightly higher correct detection rate was reported for clouds (94.5%), at the cost of a much larger false alarm rate (42.1%). The proposed method does not use thermal bands, yet reaches accuracies similar to those of the VCM and VIBCM methods, which makes it an interesting operational method for several sensors, including Sentinel-2. As an example, Figure 9 presents the result of cloud and shadow masking for a Sentinel-2 image acquired in June 2016 in Southern Finland, using the same method with the corresponding bands from Sentinel-2 imagery.

The VIIRS dataset was quite challenging, with high latitude (sun angle effects), frequent snow/ice and cloud cover. Because of the coarse resolution, most shadows in VIIRS images were very small (2–3 pixels wide), and there were many mixed pixels.

The method used the ratio between the blue and green bands for shadow detection. This ratio increased in cloud shadows because shadow pixels are illuminated by the predominantly blue, diffuse sky radiation. In [7], the SWIR, NIR or red band was used instead of the green band to yield optimal results. The reason might be the different land cover types dominating the area. In the boreal study area used in this paper, forested and water areas dominated, with high reflectance in the green band. By contrast, in bright built-up and desert areas, the red band reflectance is higher than in vegetated areas and might give better results.
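The shadow ratio test can be illustrated with a minimal Python sketch. The function name, the `ratio_threshold` parameter and the reflectance values in the example are hypothetical; this section does not give the threshold used in the method, so the sketch only shows the form of the test.

```python
def shadow_candidates(blue, green, ratio_threshold):
    """Flag pixels whose blue/green reflectance ratio exceeds a threshold.

    blue, green:     2-D lists of surface reflectance on the same grid
    ratio_threshold: hypothetical, application-specific threshold
    """
    flagged = []
    for blue_row, green_row in zip(blue, green):
        row = []
        for b, g in zip(blue_row, green_row):
            ratio = b / g if g > 0 else 0.0  # guard against empty pixels
            row.append(ratio > ratio_threshold)
        flagged.append(row)
    return flagged


# Hypothetical reflectances: a sunlit forest pixel, then a shadowed pixel
# lit mainly by blue diffuse sky radiation.
blue = [[0.03, 0.04]]
green = [[0.06, 0.03]]
print(shadow_candidates(blue, green, ratio_threshold=1.0))
```

Replacing the green band with the red band in the denominator would be the natural variant to try over bright built-up or desert surfaces, following the reasoning in the paragraph above.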