1. Introduction

Very high spatial resolution (VHSR) remote sensing imagery, such as aerial images and unmanned aerial vehicle (UAV) images, reveals ground details, including texture, geometry, and topology, and thus provides outstanding visual performance [1]. Therefore, the classification of VHSR images for various applications has received much research interest [2,3,4]. However, compared with the classification of high spatial resolution hyperspectral remote sensing images, the classification of remote sensing images with a high spatial resolution but a relatively low spectral resolution (such as images acquired by airborne or UAV platforms) has become challenging. As spatial resolution improves, several zones may appear too small and heterogeneous when a VHSR image is processed by multi-scale segmentation, and these zones may be meaningless relative to the classes of interest. Furthermore, the increase in spatial resolution strengthens the correlation among pixels of the same class. Consequently, the spectral signatures inside a target become highly heterogeneous, and different targets present increasingly similar spectra. High intra-class variability and low inter-class variability reduce the separability of different land cover classes in the spectral domain [1,5,6].

Numerous strategies have been adopted to overcome these challenges in VHSR image classification. Spatial–spectral feature extraction is the most popular approach; it aims to compensate for the insufficiency of spectral information by exploiting the spatial features of ground objects [7,8]. These features, such as the pixel shape index (PSI) [9], the pixel spatial feature set [10], and structural features, are extracted through mathematical morphology and its related models [11,12,13,14,15]. Object-based image analysis is another, newer paradigm for VHSR image classification [16]. The object-based approach usually begins with segmentation to generate image objects, each of which is a group of spectrally similar and spatially contiguous pixels. The application of the object-based approach in practical situations has been studied extensively [13,17,18,19,20], and it offers several advantages over pixel-based VHSR image classification in terms of classification accuracy [21,22].

Image filters, especially edge-preserving filters, have recently been proposed to smooth noise in images with a high spatial resolution and to improve land cover classification accuracy. Edge-preserving filters have been adopted in many applications [23,24,25]. For example, Kang et al. proposed a spectral–spatial classification framework based on an edge-preserving filter and obtained significantly improved classification accuracy [26]. They also presented a recursive filter combined with image fusion to enhance image classification [27]. Xia et al. proposed a method that combines subspace independent component analysis and a rolling guidance filter for the classification of hyperspectral images with high spatial resolution [28]. Their experimental results showed that the method achieves better accuracy than the traditional approach without image filtering. From an application viewpoint, these simple yet effective approaches suggest many potential applications of VHSR images.

In this study, we adopted the idea of image filters and extended it to the context of the object-based approach. We refer to this approach as the “object filter based on topology and features” (OFTF). It exploits the unique capabilities of the object-based image technique, which allow the noise in a VHSR image to be addressed in a multi-scale, object-wise manner. To this end, first, a popular multi-scale segmentation algorithm, the Fractal Net Evolution Algorithm (FNEA) embedded in the eCognition software, was adopted to generate image objects [29,30]. FNEA, a widely used multi-scale segmentation algorithm, was first introduced by Baatz and Schäpe and quickly became one of the most important segmentation algorithms in the object-based analysis domain [30]. The algorithm is a bottom-up region merging technique: it starts with each image pixel as a separate object, and pairs of image objects are then merged into larger objects. The process terminates when no pair of objects satisfies the merging criterion. Second, the segmented objects were exported as vectors together with their spectral features, such as the mean or the standard deviation of the pixel values of each band within an object. Because a target usually exists as a group of objects that are similar in features and spatially contiguous, the differences among the objects of one target can then be smoothed. To demonstrate the effectiveness of the proposed OFTF approach, we compared it through image classification with the original object-based approach (OO) and OCI [19]. In addition, two relatively new approaches that have been applied successfully to high-resolution image classification, the recursive filter (RF) [27] and the rolling guidance filter (RGF) [28], were compared with the proposed OFTF approach.
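To make the bottom-up merging idea concrete, the following Python sketch merges regions of a single-band image greedily. It is a simplified illustration under stated assumptions, not FNEA as implemented in eCognition: it uses only a spectral heterogeneity term (size-weighted standard deviation, no shape criterion), searches for the globally cheapest adjacent pair instead of using FNEA's own merging heuristics, and stops at a hypothetical `threshold` that stands in for the scale parameter. All function names are illustrative.

```python
import numpy as np


def _std(n, s, ss):
    # Population standard deviation from pixel count, sum, and sum of squares.
    return np.sqrt(max(ss / n - (s / n) ** 2, 0.0))


def _merge_cost(a, b):
    # Increase in size-weighted spectral heterogeneity if regions a and b merge.
    n, s, ss = a[0] + b[0], a[1] + b[1], a[2] + b[2]
    return n * _std(n, s, ss) - (a[0] * _std(*a) + b[0] * _std(*b))


def bottom_up_merge(img, threshold):
    """Greedy bottom-up region merging on a 2-D single-band image; returns a label map."""
    h, w = img.shape
    labels = np.arange(h * w).reshape(h, w)
    # Each pixel starts as its own object, stored as (count, sum, sum of squares).
    stats = {i: (1, float(v), float(v) ** 2) for i, v in enumerate(img.ravel())}
    adj = {i: set() for i in stats}
    for y in range(h):
        for x in range(w):
            i = int(labels[y, x])
            for yy, xx in ((y, x + 1), (y + 1, x)):  # right and lower neighbors
                if yy < h and xx < w:
                    j = int(labels[yy, xx])
                    adj[i].add(j)
                    adj[j].add(i)
    while True:
        # Adjacent pair whose merge increases heterogeneity the least.
        best = min(
            ((_merge_cost(stats[a], stats[b]), a, b)
             for a in adj for b in adj[a] if a < b),
            default=None,
        )
        if best is None or best[0] > threshold:
            break  # no pair satisfies the merging criterion any more
        _, a, b = best
        stats[a] = tuple(p + q for p, q in zip(stats[a], stats[b]))
        del stats[b]
        for nb in adj.pop(b):  # region b disappears; its neighbors now touch a
            adj[nb].discard(b)
            if nb != a:
                adj[nb].add(a)
                adj[a].add(nb)
        labels[labels == b] = a
    return labels
```

For example, on a 2 × 4 image whose left half is 1 and right half is 9, a small threshold (e.g., 1.0) yields two objects, one per half, whereas a very large threshold merges everything into a single object.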

The remainder of this paper is organized as follows. Section 2 details the proposed OFTF approach. Section 3 presents the experimental setup and the results. Section 4 discusses the experimental results. Section 5 provides the conclusion.

2. Proposed Object Filter Based on Topology and Feature Constraints

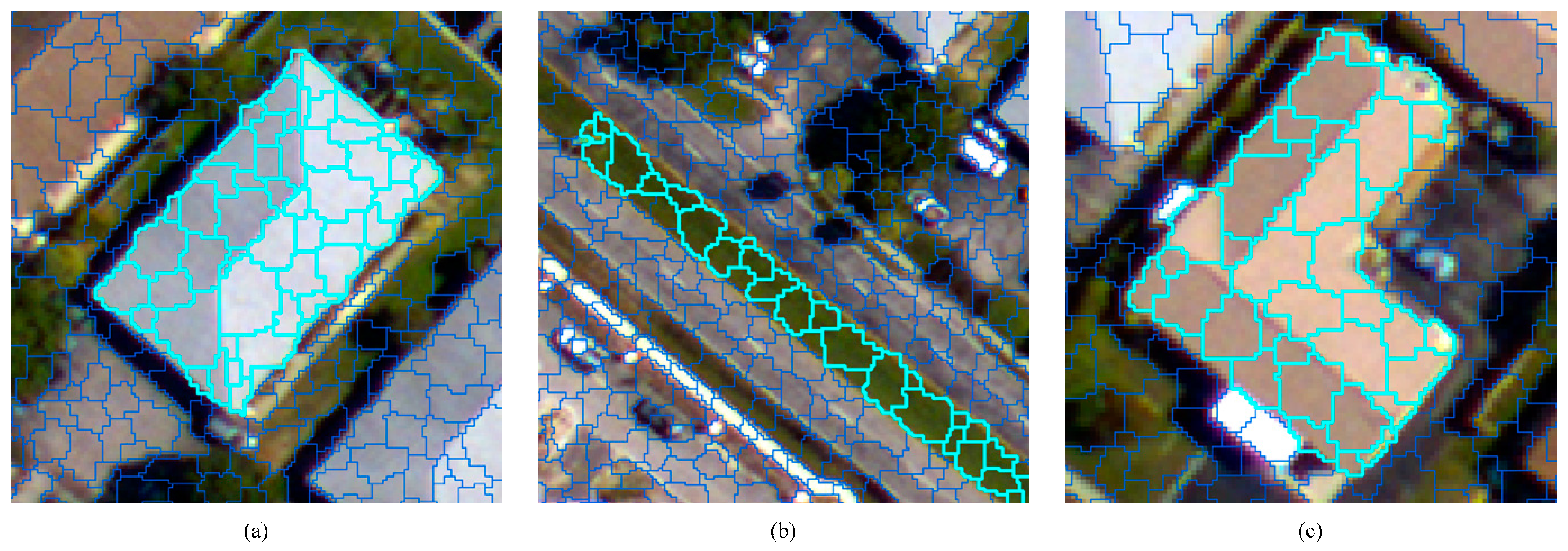

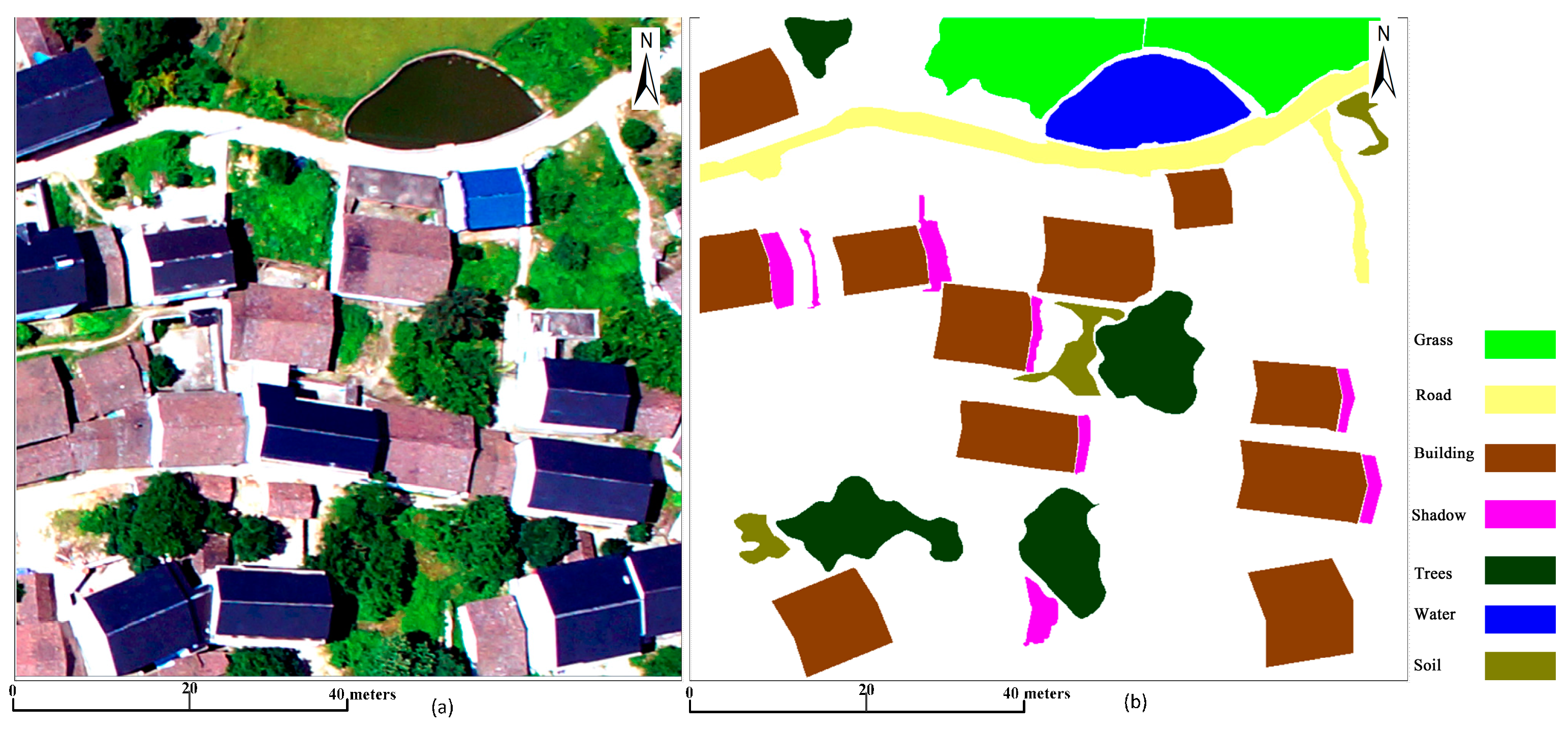

The aim of the proposed OFTF is to improve the classification of VHSR images by smoothing the noise of ground targets in an object-wise manner. Owing to the complexity and uncertainty of the spatial arrangement of segmented objects, the proposed OFTF is based on a simple assumption: the objects comprising a target are usually strongly correlated with one another and spatially contiguous. As shown in Figure 1, regardless of the shape of the target (e.g., rectangle, line, or “L”), its component objects follow this assumption.

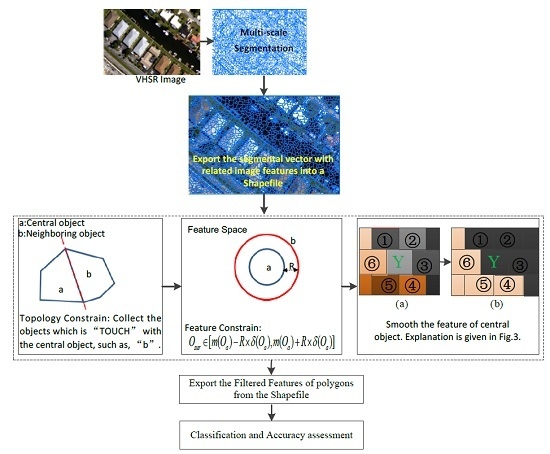

Accordingly, several pre-processing steps, such as multi-scale segmentation and exporting the objects' vectors, are necessary before the proposed OFTF approach is applied. As shown in Figure 2, the proposed approach consists of the following three consecutive main steps (outlined by dotted lines).

Topology constraint: Based on the objects' vectors, the neighboring objects that topologically touch the central object are obtained.

Feature constraint: In the feature space, touching neighbors that are dissimilar to the central object are excluded. Details of how “dissimilarity” is judged are presented in Section 2.2.

Feature smoothing: The feature of the central object is smoothed using the corresponding features of the remaining neighboring objects.

Each step is discussed in detail below.

2.1. Topology Constraint

Compared with spectral features, topology may include information on geographic location, spatial arrangement, and geometry. This information is usually studied in the spatial analysis of geographic information systems (GIS), and topology can also be described in VHSR remote sensing imagery. When an image is segmented into multi-scale image objects, a target consists of a group of spatially contiguous objects. In this study, topology was introduced to reveal the spatial relationship between the central object and its neighboring objects. A topological relation called “TOUCH” was used as the spatial constraint for the proposed object filter. “TOUCH” represents the condition in which the central object and an adjacent object share a common boundary (or part of a common boundary) with no gaps or overlaps. Because the objects that make up a given target are usually spatially contiguous, this topology constraint can be regarded as spatial knowledge for image analysis.

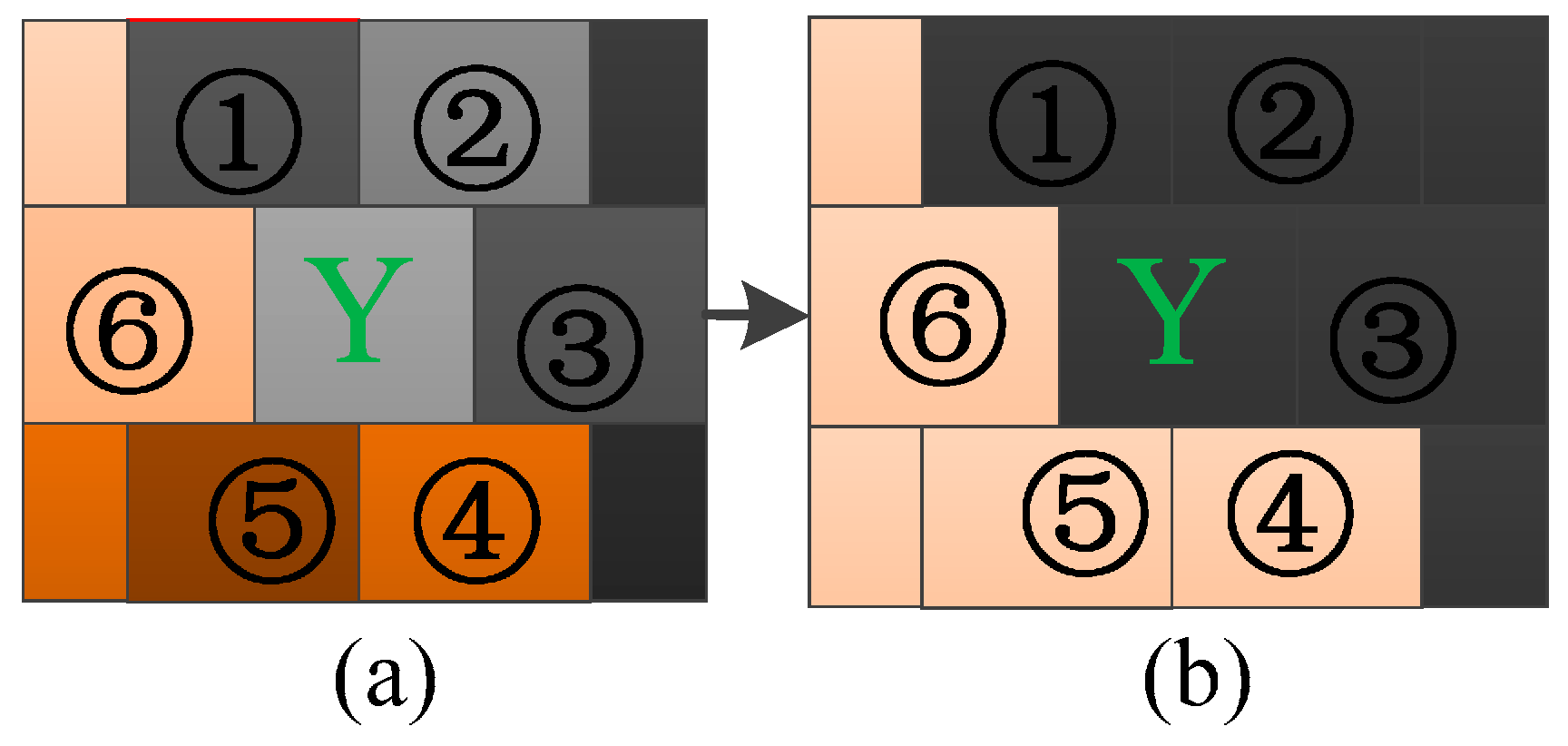

As shown in Figure 3, each block symbolizes an image object, and similar colors indicate variations of the same material within a target. “Y” is the central object, and objects 1–6 touch “Y” according to the proposed topology constraint.
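In the original workflow, “TOUCH” is evaluated on exported vector polygons. As a lightweight illustration only, the sketch below assumes instead that the segmentation is available as an integer label raster and treats two objects as touching when at least one pair of their pixels shares an edge (4-connectivity), so objects that meet only at a corner are not counted. The function name `touching_neighbors` is hypothetical.

```python
import numpy as np


def touching_neighbors(labels, central):
    """Ids of objects that share at least one pixel edge with object `central`."""
    # Pair every pixel with its right-hand and lower neighbor (4-connectivity).
    pairs = np.concatenate([
        np.stack([labels[:, :-1].ravel(), labels[:, 1:].ravel()], axis=1),
        np.stack([labels[:-1, :].ravel(), labels[1:, :].ravel()], axis=1),
    ])
    neighbors = set()
    for a, b in pairs:
        if a == central and b != central:
            neighbors.add(int(b))
        elif b == central and a != central:
            neighbors.add(int(a))
    return neighbors
```

In a 3 × 3 label map where object 0 occupies the lower-right 2 × 2 block, objects 1 and 2 border it along edges while object 9 (top-left corner pixel) meets it only diagonally, so the function returns `{1, 2}` for object 0.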

2.2. Feature Constraint

When the central object “Y” is located in the interior of a target, the adjacent surrounding objects are of the same material as the target. However, when “Y” is located at the boundary between targets of different materials, smoothing the feature of “Y” using all topologically touching objects is unreasonable. Therefore, a feature constraint is introduced to exclude objects whose material differs from that of the central object.

To achieve this, the variation among objects of the same material as the central object is characterized by the mean and standard deviation of the pixels in the central object. Thus, in addition to the topology constraint, a feature constraint is introduced in the spectral domain, as given in Equation (1):

$$S_c = \left\{ o_i \;\middle|\; o_i \text{ touches } c,\ \mu_c^b - R\,\sigma_c^b \le \mu_i^b \le \mu_c^b + R\,\sigma_c^b \right\} \quad (1)$$

where $S_c$ is the set of objects surrounding the central object $c$ that satisfy the topology and feature constraints, $b$ denotes the $b$-th spectral band, $\mu_i^b$ is the mean of band $b$ of a neighboring object $o_i$, and $\mu_c^b$ and $\sigma_c^b$ are the mean and the standard deviation of band $b$ of the central object, respectively.

$R$ is the relaxation parameter that gives the constraint general adaptability; it controls the degree of constraint in the feature domain. When $R = 0$, the feature constraint becomes $\mu_i^b = \mu_c^b$, and a larger $R$ implies a wider relaxation range. A suitable $R$ is the key to obtaining a reasonable filtered result. If $R$ is excessively small, the constraint is too strict to cover the variability among different objects that belong to the same target. If $R$ is excessively large, more noise is smoothed, but objects whose material differs from that of the central object are also used to smooth the central object's feature.

In the proposed OFTF approach, the feature constraints of the individual bands are combined with a logical “AND”. In other words, a neighboring object is used to smooth the feature of the central object only if it satisfies Equation (1) in every band.
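A minimal sketch of the per-band feature test combined with a logical AND follows. It assumes the constraint takes the interval form $\mu_c^b - R\,\sigma_c^b \le \mu_i^b \le \mu_c^b + R\,\sigma_c^b$, i.e., the neighbor's band mean must lie within $R$ standard deviations of the central object's band mean; the function name is hypothetical.

```python
import numpy as np


def passes_feature_constraint(mu_i, mu_c, sigma_c, R):
    """True if the neighbor mean lies within mu_c +/- R * sigma_c in EVERY band (logical AND)."""
    mu_i, mu_c, sigma_c = (np.asarray(v, dtype=float) for v in (mu_i, mu_c, sigma_c))
    # Per-band test, then AND across bands.
    return bool(np.all(np.abs(mu_i - mu_c) <= R * sigma_c))
```

With $\mu_c = (100, 50)$, $\sigma_c = (10, 5)$, and $R = 1$, a neighbor with means (105, 52) passes, whereas (105, 60) fails because its second band deviates by 10 > 5; with $R = 2$ the latter passes.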

2.3. Smoothing the Feature of the Central Object

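After the topology and feature constraints are applied, the object set $S_c$ is obtained; its elements are the objects surrounding the central object $c$ that meet both the spatial and the feature constraints. The proposed OFTF approach smooths the central object by averaging the features of the objects in $S_c$:

$$\hat{\mu}_c^b = \frac{1}{N_c} \sum_{o_i \in S_c} \mu_i^b \quad (2)$$

where $\mu_i^b$ is the mean of band $b$ of object $o_i$, $\hat{\mu}_c^b$ is the filtered feature of band $b$ for the central object, and $N_c$ is the total number of objects that satisfy the topology and feature constraints surrounding the central object. The filtered feature of each band for the central object is thus calculated with Equation (2).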
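Putting the three steps together, the sketch below filters an object table. It is an illustrative Python implementation, not the authors' ArcEngine application, and it rests on assumptions the text does not fix: objects are indexed 0..n−1 with per-band means and standard deviations in arrays, TOUCH relations are supplied as a precomputed adjacency dictionary, the smoothed value is the plain average of the qualifying neighbors' band means (the central object itself is not included), a central object with no qualifying neighbor keeps its value, and each iteration updates all objects from the previous iteration's values while the standard deviations stay fixed. The `iterations` argument stands in for the iteration parameter mentioned in the Discussion; the name `oftf_smooth` is hypothetical.

```python
import numpy as np


def oftf_smooth(means, stds, neighbors, R, iterations=1):
    """Object-wise smoothing under topology and feature constraints (illustrative sketch).

    means, stds : arrays of shape (n_objects, n_bands), per-object band mean / std
    neighbors   : dict mapping each object id to the ids that TOUCH it
    """
    stds = np.asarray(stds, dtype=float)
    current = np.asarray(means, dtype=float).copy()
    for _ in range(iterations):
        updated = current.copy()
        for c, touching in neighbors.items():
            # Feature constraint: neighbor mean within R std of the central mean, in all bands.
            selected = [i for i in touching
                        if np.all(np.abs(current[i] - current[c]) <= R * stds[c])]
            if selected:  # assumption: keep the value when no neighbor qualifies
                updated[c] = current[selected].mean(axis=0)
        current = updated
    return current
```

For a central object 0 with band mean 10 touching objects with means 11, 13, and 60 (all standard deviations 2, $R = 1.5$), objects 1 and 2 pass the constraint and object 0 is smoothed to 12, while object 3 has no qualifying neighbor and keeps 60.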

Note that the topology information of an image object is difficult to use directly; therefore, from a technical point of view, a series of transformations is adopted. The workflow of OFTF is as follows. First, the spectral features and the topology information are transformed into a shapefile with the aid of the eCognition software (the shapefile format is a popular geospatial vector data format for geographic information systems). Then, a customized application was developed based on ArcEngine 10.0 to implement the proposed OFTF. Finally, the filtered value of each image object is exported in a format that can be customized for classification. To ensure the repeatability of the proposed approach, the source code of the customized application can be obtained from the first author.

4. Discussion

In the first experiment, the sensitivity of the overall accuracy to the relaxation parameter R was investigated. As shown in Figure 7, as R increased from 0.5 to 1.5, the accuracy of the proposed approach increased; however, when R exceeded 1.5, the accuracy decreased. In practical applications, R can be adjusted and determined according to the image at hand.
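Because R must be tuned per image, the trial-and-error search can be written as a loop over candidate values. In this sketch, `evaluate` is a hypothetical callback that would run OFTF with the given R, classify the filtered objects, and return the overall accuracy on validation samples; keeping all scores makes it possible to plot a sensitivity curve such as the one in Figure 7.

```python
def select_relaxation(candidates, evaluate):
    """Trial-and-error search for the relaxation parameter R.

    evaluate: hypothetical callback, R -> overall accuracy on validation samples.
    Returns the best R and the score of every candidate.
    """
    scores = {R: evaluate(R) for R in candidates}
    best_R = max(scores, key=scores.get)  # the first candidate wins ties
    return best_R, scores
```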

In addition, the first experiment investigated the adaptability of the proposed OFTF to different supervised classifiers. The quantitative results for each classifier are shown in Table 4. The proposed OFTF-based classification achieves the highest accuracy with the SVM classifier; therefore, SVM was employed as the classifier in the second and third experiments.

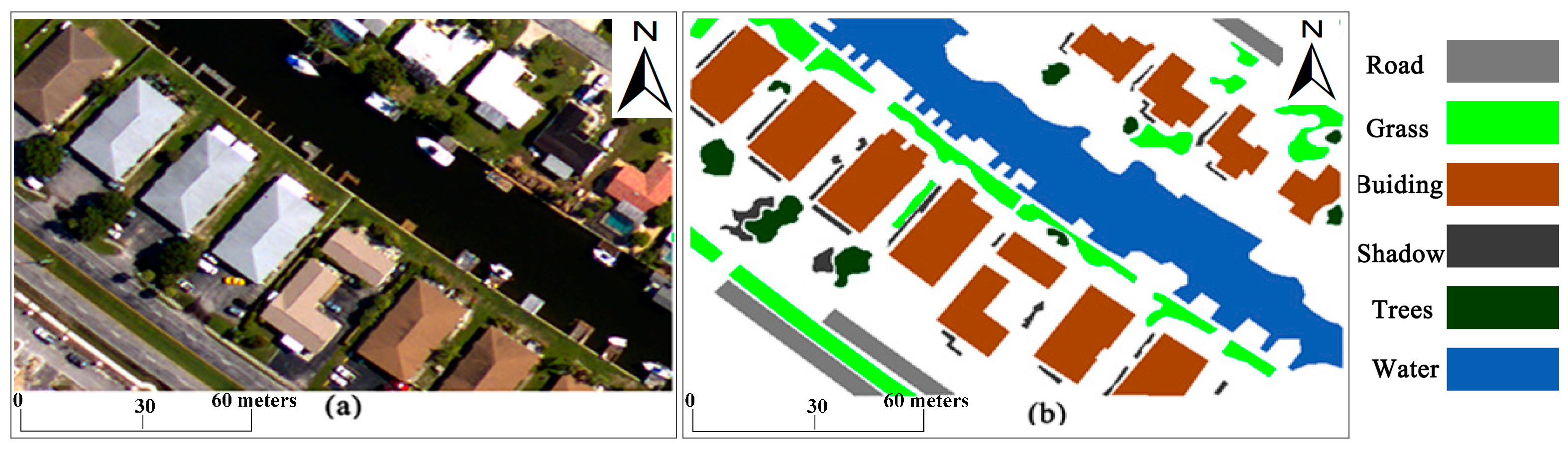

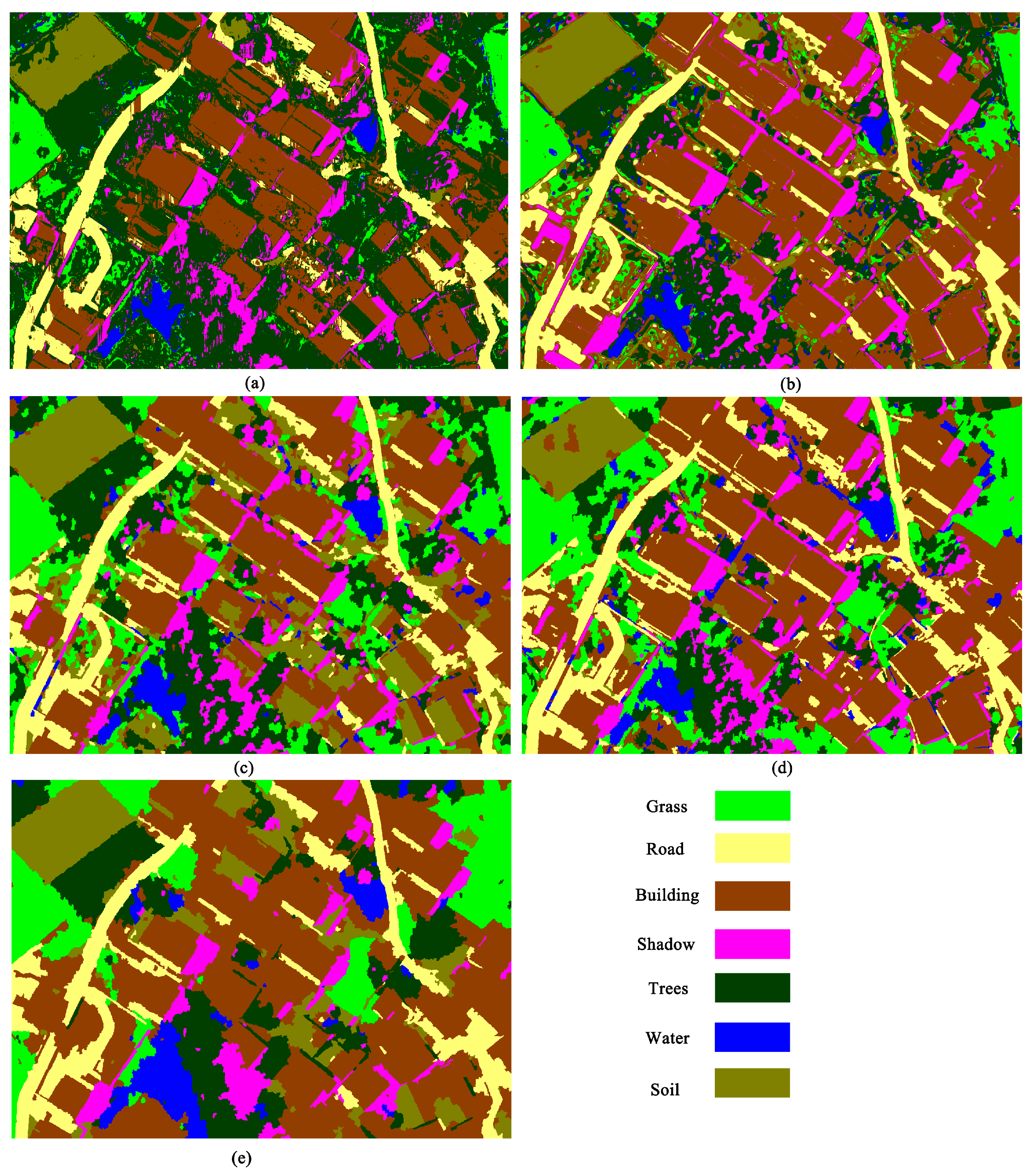

In the second experiment, the class-specific accuracies for this parameter setup are shown in Table 7, and the classification map is shown in Figure 9. The table and the corresponding classification map show that the proposed OFTF-based approach achieves higher classification accuracy than the original object-based approach without any filtering process. Furthermore, the proposed OFTF-based approach also obtains higher classification accuracy than RF [27], RGF [28], and OCI [19] in terms of OA and Ka. Visually, the proposed OFTF-based approach smooths the noise in the classification map better than the other approaches. Therefore, the proposed OFTF method can be considered suitable for improving the classification performance of VHSR images.
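The comparisons above rely on OA and Ka; Ka presumably denotes the kappa coefficient, the usual companion to overall accuracy. As a reference sketch, both can be computed from a confusion matrix as follows; the convention that rows are reference classes and columns are predicted classes is an assumption (kappa gives the same value for the transposed matrix), and the function name is illustrative.

```python
def overall_accuracy_and_kappa(confusion):
    """confusion[i][j] = number of samples of reference class i predicted as class j."""
    k = len(confusion)
    total = sum(sum(row) for row in confusion)
    observed = sum(confusion[i][i] for i in range(k)) / total  # overall accuracy (OA)
    row_sums = [sum(row) for row in confusion]
    col_sums = [sum(confusion[i][j] for i in range(k)) for j in range(k)]
    # Agreement expected by chance from the marginal totals.
    expected = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa
```

For example, the two-class matrix `[[50, 10], [5, 35]]` gives OA = 0.85 and kappa ≈ 0.694.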

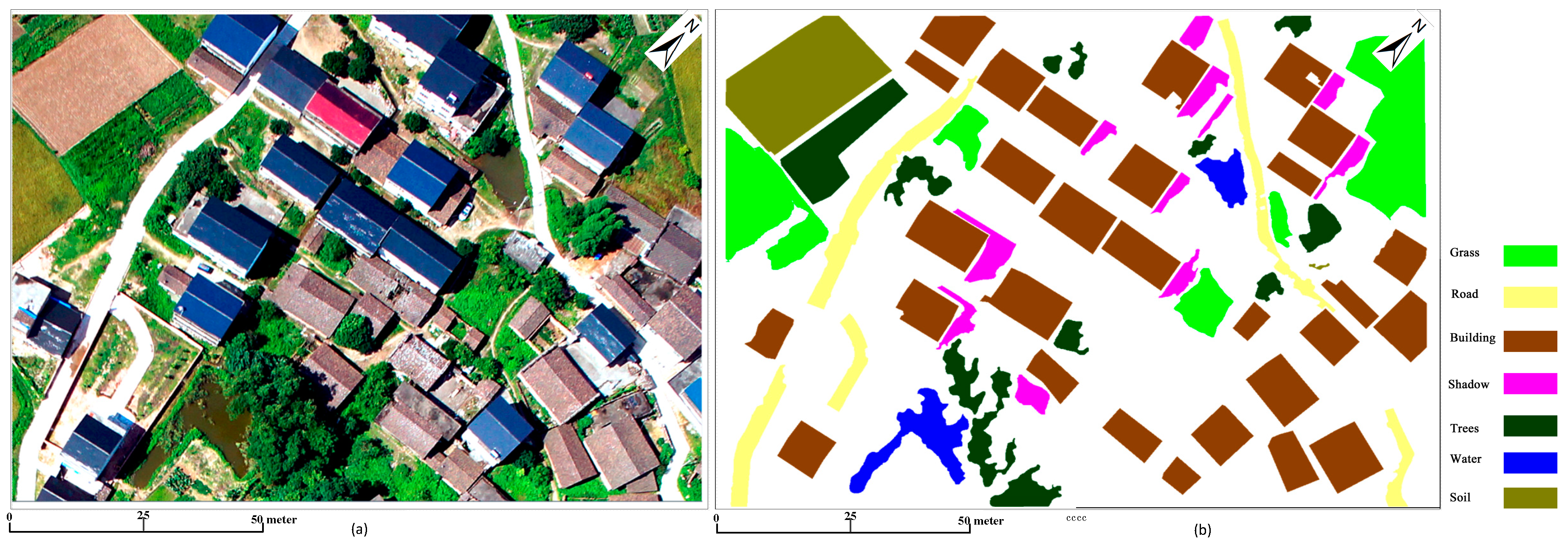

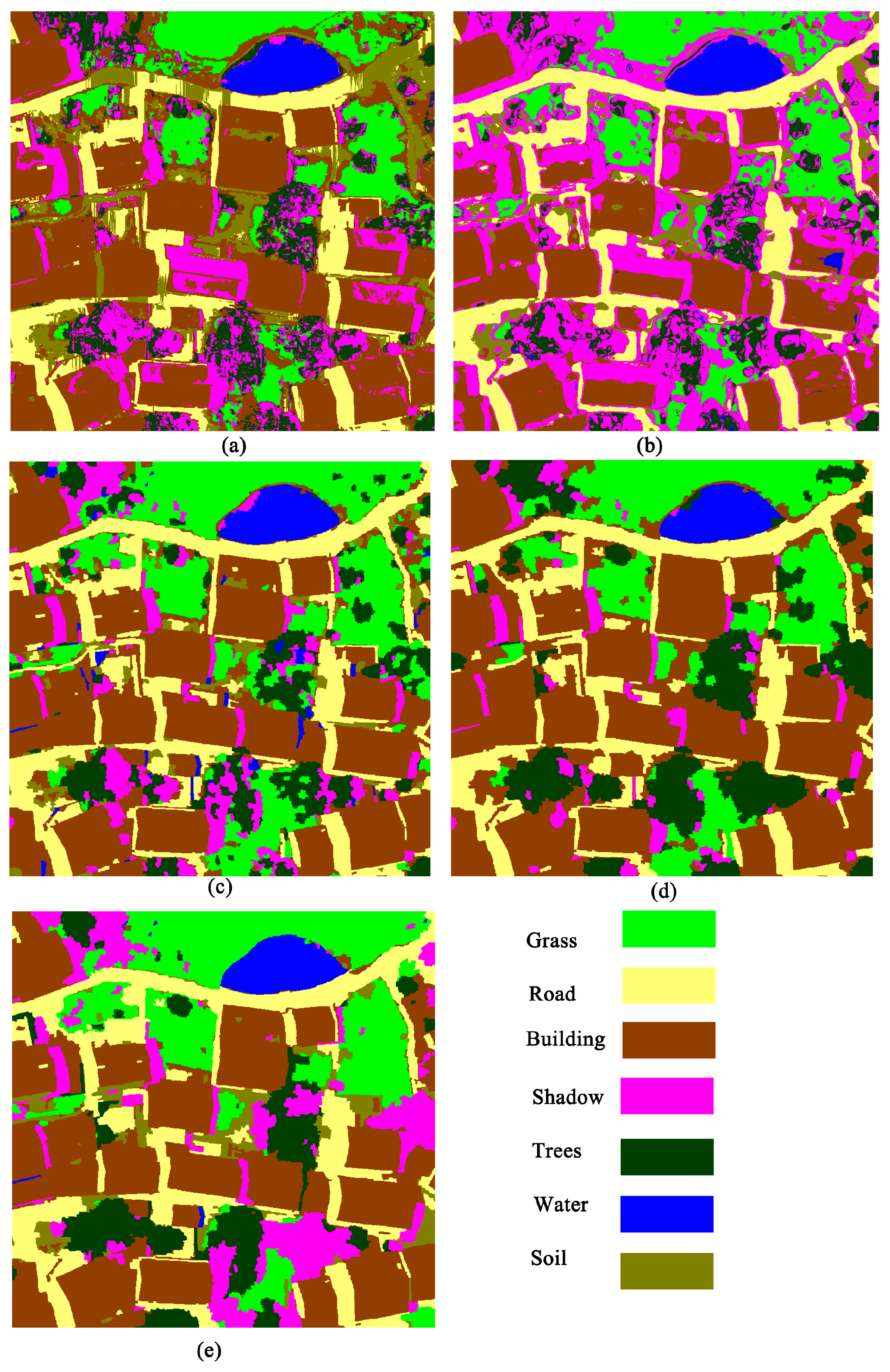

In the third experiment, the classification results of the different approaches under this parameter setup are shown in Table 8 and Figure 10. The results are similar to those obtained for the second image: the proposed OFTF-based classification exhibits the highest accuracy in terms of OA and Ka and provides better visual performance than the other approaches.

Currently, aerial images (including UAV images) are involved in a wide range of remote sensing applications, and object-based techniques have been widely applied to VHSR image classification. However, to the best of our knowledge, although object-based classification methods have been studied extensively, this type of object-based filtering has not been proposed before. In this study, a novel object-based image filter is proposed to improve classification accuracy when applied to aerial images for land cover survey tasks. In addition, the proposed OFTF is easy to apply, because it has only two parameters: R and the number of iterations. The optimal relaxation parameter R can be determined by trial-and-error experiments for a given classification task. The number of iterations can be fixed as a constant, because the proposed OFTF is based on twofold constraints (topology and features), and a larger number of iterations does not over-smooth the results. With the rapid development of high resolution remote sensing imagery (such as aerial and UAV images), this novel object-based filter may enable further applications.

The results and discussion reveal that the proposed OFTF is feasible and effective in reducing intra-class differences, which helps improve the performance of VHSR land cover classification.

5. Conclusions

In this paper, a new approach called OFTF was proposed to improve the performance of VHSR image classification. Experiments on three real VHSR images demonstrated the effectiveness of the proposed approach. OFTF achieved higher classification accuracies than widely used object-based approaches as well as two relatively new methods that consider contextual spatial information [27,28,31]. The contributions of the proposed OFTF approach are threefold. First, although object-based image analysis approaches have been studied extensively, to the best of our knowledge, the concept of object-based filtering has not been proposed before. Second, a traditional pixel-wise image filter usually smooths an image through a regular window and cannot be used directly to smooth the differences among segmented image objects. In contrast, the proposed OFTF provides a novel way to smooth the differences among a target's objects through topology and feature constraints. This procedure is more intuitive and reasonable for various ground targets, because a ground target usually appears as spatially contiguous objects with similar features. Third, compared with traditional spatial feature extraction algorithms, the proposed OFTF approach is simple and may enable more applications in the analysis of VHSR remote sensing images.

Beyond topology, the spatial knowledge implicit in VHSR images is difficult to describe both quantitatively and precisely. Therefore, future research will extract more comprehensive topological relationships and spatial knowledge, such as azimuth or location, from VHSR imagery. In principle, a more reasonable feature model of the targets in a remote sensing image scene should lead to higher accuracy.