1. Introduction

Urban landscapes are a unique combination of natural and built environments. Natural systems and the intertwined ecological services they provide are key components of a city’s infrastructure. For example, a city’s urban tree cover could be considered part of the local storm water management system, aiding in pollutant filtration, and reduction of surface runoff and thermal loading on streams. However, our ability to quantify and monitor these services over time is heavily dependent on accurate and timely tree cover assessments, which can also be used to optimize ecosystem health and resiliency [

1]. These assessments are typically achieved through field methods limited by access to private lands. Moreover, few cities have adequate staff or budget resources that are required to undertake urban forestry assessments to achieve planning and management goals. Remote sensing approaches can provide a complimentary, spatially explicit dataset that can be used to devise an on ground sampling regime. Moreover, remote sensing canopy assessments are reasonably simple and can be conducted quickly, inexpensively, and without access or disturbance issues encountered in ground-based data collections [

2,

3]; thus, they can provide valuable supplemental information in areas that cannot be accessed on the ground. These spatially explicit assessments provide a means to measure and monitor over time complex urban environments, and their dynamic ecologies [

4,

5], for example, through the use of spatial metrics [

6]. For instance, tree cover surveys and forest pattern metrics are useful to help a city quantify current tree cover status [

7], determine the locations and drivers of cover loss or gain [

8], and monitor these trends over time [

9]. These data can then be used to establish tree protection requirements for new developments, assist with urban tree health management, and determine target areas for planting projects. Tree cover information is but one variable available from this remote sensing approach, which can also provide information on impervious surfaces and many other ground covers.

Land use/land cover (LULC) classifications are often created to visually assess the composition of urban landscapes and quantify different aspects of the environment. “Land cover” describes natural and built objects covering the land surface, while “land use” documents human uses of the landscape [

10]. Remote sensing imagery effectively captures characteristics of the Earth’s surface, but it takes an interpreter’s knowledge about shape, texture, patterns, and site context to derive information about land use activities from information about land cover [

11]. Classifications typically utilize some modification of the Anderson hierarchical system (

Table 1), with generalized LULC classes described at Levels I and II and more detailed classifications for Levels III and beyond [

10]. Land use/land cover needs to be classified at a very fine scale to be effective for city planning and urban land management [

11,

12,

13].

Table 1.

Anderson hierarchical classification system showing examples of Levels I–III for Urban and Forest Lands [

10]; only the classes in red and green have been expanded upon in the hierarchies.

Table 1.

Anderson hierarchical classification system showing examples of Levels I–III for Urban and Forest Lands [10]; only the classes in red and green have been expanded upon in the hierarchies.

| Level I | Level II | Level III |

| | 111. Single-family Units |

| | 12. Commercial and Services | 112. Multiple-family Units |

| | 13. Industrial | 113. Group Quarters |

| | 14. Transportation, Communications and Utilities | 114. Residential Hotels |

| | 15. Industrial and Commercial Complexes | 115. Mobile Home Parks |

| | 16. Mixed Urban or Built-up Land | 116. Transient Lodging |

| | 17. Other Urban or Built-up Land | 117. Other |

| …. | | |

| 41. Deciduous Forest Land | 421. Natural/Unmanaged Trees |

| | | 422. Natural/Managed Park Trees |

| | 43. Mixed Forest Land | 423. Managed Residential/Street Trees |

| | | 423. Plantation Trees |

In 2006, City of Seattle launched the Environmental Action Agenda, which called for an increase in urban tree cover from the existing 18% to 30% in 30 years [

14]; this assessment was performed by non-spatially explicit, semi-manual image interpretation [

15]. This ambitious policy goal would attain the difference in current estimated and planned urban forest cover and certainly increase the urban forest. To establish a spatially explicit baseline, an independent remotely sensed-based urban tree cover assessment was contracted in 2007 by the City of Seattle. Native Communities Development Corporation (NCDC) used high spatial resolution or hyperspatial [

16] QuickBird satellite imagery in this assessment and tree cover was reported at 22.9% [

15]. If an overall goal for tree cover is 30%, as is common for many Pacific Northwest cities, a 5% discrepancy in cover could affect planning, management, and policy decisions aimed at increasing overall urban tree cover. As such, a good monitoring program is required not only in Seattle, but most urban areas interested in preserving or increasing their tree cover. An effective monitoring program will help us quantify how much tree cover there was in the past, is currently, and will be in the future in order to meet tree cover goals.

Remote sensing technologies can provide a means to classify tree cover and a variety of other continuous environmental variables over large spatial extents and moderate temporal extents [

9]. Interestingly, remote sensing of urban forests originally evolved from aerial photography assessment to coarse pixel remote sensing and now is returning to hyperspatial image analysis [

17]. Traditional pixel-based classification methods use Landsat satellite imagery to produce LULC maps (e.g., 2001 National Land Cover Database) by assigning individual pixels to a specific class based on a unique spectral signature [

11,

18]. These coarse per-pixel resolution data, including Landsat, AVHRR and MODIS, generally have greater spectral extents (Landsat) and temporal extents (AVHRR and MODIS), compared to hyperspatial satellite (e.g., QuickBird and IKONOS) and aerial photography (e.g., US National Agricultural Imagery Program—NAIP). Thus, moderate resolution remote sensing has played a critical role in urban tree cover assessment at regional and global scales [

19,

20]. However, in general, the spatial resolution of Landsat imagery (30 m) limits the ability to identify and map features within a property parcel, yet, decision making typically takes place at the parcel level, and 900 m

2 is larger than most urban property parcels [

13]. Furthermore, in heterogeneous canopies found in urban environments, the scale between what is considered a forest patch and what can be resolved by a 30 m or coarser pixel presents a special challenge [

17]. Thus, it is now a well-accepted principle that this moderate resolution imagery is not appropriate for LULC mapping in heterogeneous urban areas [

12,

13,

18].

A relatively new classification method, object-based image analysis (OBIA), sometimes referred to as feature extraction, feature analysis or object-based remote sensing, appears to work best on hyperspatial satellite and aerial imagery as well as LiDAR [

11,

13]. This form of feature extraction allows for use of additional variables such as shape, texture, and contextual relationships to classify image features. This can both improve accuracy results and allows us to map very small urban features, such as mature individual trees or small clusters of shrubs [

21,

22]. Furthermore, others have shown that per-pixel classification approaches, although appropriate on Landsat imagery, are outperformed by OBIA approaches on hyperspatial imagery in urban, suburban, and agricultural landscapes [

21] and in the urban-wildland interface, especially in instances where the tree cover is complex and heterogeneous [

18]. The significant strength of OBIA is that it can also be used on free, publicly available, hyperspatial, NAIP imagery, which is limited in the number of spectral bands available. NAIP imagery has a national extent, offers repeatability, allows for spatial and often spectral comparability, and can be classified to achieve detailed LULC maps for urban planning, management, and scientific research [

13,

21]. OBIA approach on NAIP imagery has been used in other applications, specifically wetland detection [

23], but its utility in urban tree cover mapping has not been explored.

The purpose of this study was to examine the ability of OBIA methods using freely-available, public domain imagery and ancillary datasets for classifying land use and land cover in a heterogeneous urban landscape with suitable detail to allow for monitoring and planning at the parcel level. Therefore, specific attention is paid to developing a method that not only determines the amount or extent of tree cover, but also provides the explicit spatial location of that tree cover. It was our goal to generate a repeatable algorithm that can be tailored for use with imagery that might have other spatial/spectral resolutions and that could allow for the integration of other data sources.

Specific objectives included:

- (1)

create a flexible algorithm,

- (2)

test algorithm performance on imagery of varying spatial and spectral resolutions, and

- (3)

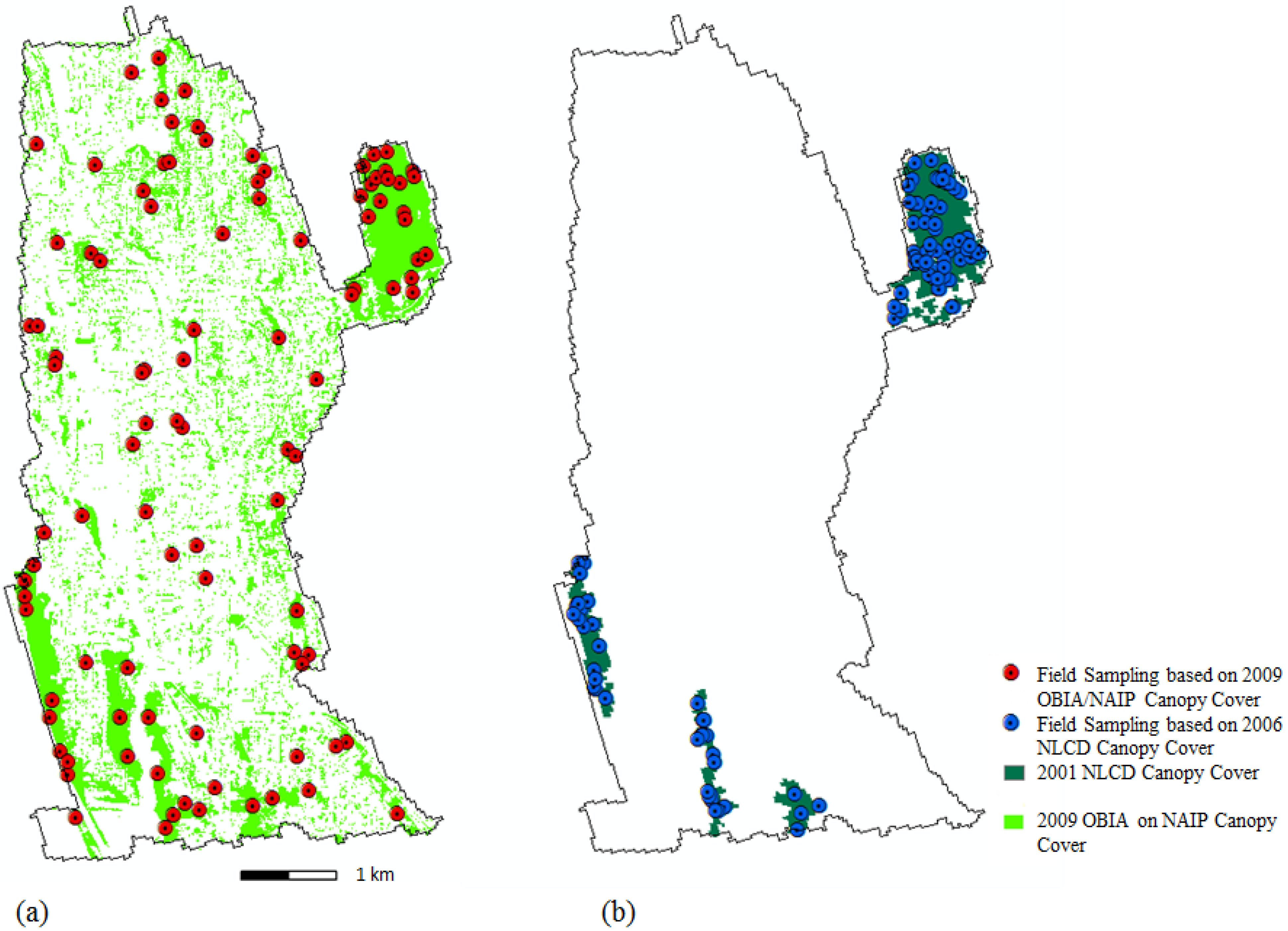

assess the accuracy and implications for tree cover assessment of the resulting classifications, specifically, the implication on ground sampling design.

We aimed to develop an accurate method that is repeatable on future dates of imagery and at other locations.

4. Conclusions

Similar to previous research applying OBIA to urban landscape characterization [

13,

35,

36], we demonstrated that the OBIA approach can generate good and repeatable LULC classifications suitable for tree cover assessment in urban areas. This has been shown in arid urban environments [

35], and human-dominated eastern US forest types [

36], and is now confirmed in a more temperate urban setting such as Seattle. More importantly, we demonstrate that these objectives can be met using freely available hyperspatial imagery such as NAIP which has not been done in previous studies. Whereas others have shown the applicability of non-publically available true color imagery only [

35], or, near-infrared imagery only [

36], we compare publically available true color imagery to near-infrared imagery as well as satellite derived imagery. Moreover, NAIP near-infrared imagery has characteristics similar to hyperspatial satellite imagery such as IKONOS and QuickBird, making these techniques potentially interchangeable with those data when the data are available. The OBIA-based classification examined in our three case studies demonstrates higher accuracies in heterogeneous forests compared to classifications such as NLCD. This discrepancy is likely due to the classification methods used (e.g., per-pixel

vs. OBIA) and the resolution (e.g., spatial and spectral) of the data. This confirms work done by others on urban, suburban, and agricultural landscapes [

21] and the urban-wildland interface [

12].

More importantly, our work suggests that there could be potential trade-offs in spatial

vs. spectral resolutions, where spectral content appears to be of more use than spatial detail in tree cover assessments. This trend should be investigated further in experiments where spectral and spatial resolutions can be manipulated. This trend is opposite of the trade-offs found when using medium spatial resolution imagery with increased spectral resolution (e.g., Landsat), where higher spatial resolutions are often preferred to additional increases in spectral resolution [

37]. While undertaking an OBIA approach it is critical to define the ‘object’, captured by image segments, of analysis. For example, in the instance of mapping urban tree cover, we suspect that imagery with a spatial resolution greater than a pixel size of ~5 m (average Seattle urban tree crown width, based on 2010 iTree Eco assessment data) would show lowered accuracies using an OBIA method because the object or image segment no longer represents an individual tree crown. Thus, at that point, additional spectral or 3D spatial (e.g., LiDAR) information might be of benefit. Further research into data resolution trade-offs that also take into consideration temporal resolution is needed.

Land cover classifications derived from hyperspatial remotely sensed data yields information on the urban forest that is more accurate and spatially consistent with other high resolution GIS datasets, such as parcel data [

13]. Such spatial consistency is critical if the data are to be used as a sustainable management and a decision support tool at the local level. As such, OBIA-based LULC are detailed enough to facilitate parcel-based analysis. A range of software solutions capable of the OBIA approach is available; further study looking into open-source

vs. proprietary solutions would be valuable. Although OBIA analysis is resource intensive, requiring large data storage and processing capabilities, these issues could be resolved through a consortia approach where partners off-set some of these costs because a significant cost of production comes from fixed costs (e.g., algorithm development). A consortia approach would take advantage of economies of scale and lower production costs through replication of the algorithm over greater extents. Partnership in field data acquisition [

15,

38], using sampling design derived from hyperspatial data, can aid in the validation of these heterogeneous datasets; this role could potentially rely on citizen science/public involvement [

39].