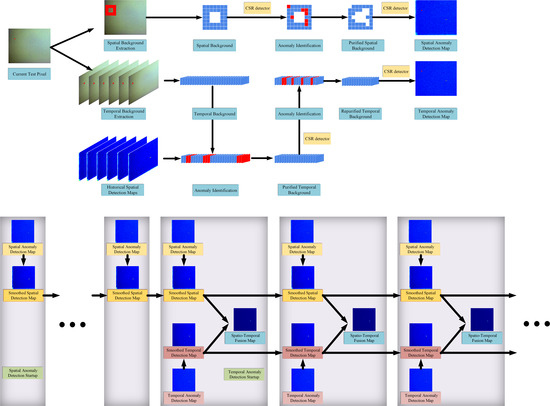

In this section, a novel CSR-based spatio-temporal anomaly detection algorithm is proposed to accurately detect dim moving targets in HSI sequences. The algorithm consists of four steps: spatial anomaly detection, iterative smoothing filtering, temporal anomaly detection, and spatio-temporal fusion. Spatial anomaly detection finds abnormal targets by using the spectral information of the current frame. The iterative smoothing filter reduces noise and false alarms in both the temporal and spatial domains. Unlike AD, CD, and the temporal detection [12] using the information between two adjacent frames, the proposed temporal anomaly detection constructs background dictionaries from the historical spectral curves of the test pixels. It explores anomaly characteristics in the time dimension and provides anomaly information different from that of the spatial detection. Fusing the spatial and temporal anomaly detection results captures the target information more comprehensively. The framework of the proposed CSR-ST algorithm is displayed in Figure 1.

3.2. Iterative Smoothing Filter

The spectra change with time due to measurement noise, resulting in temporal fluctuation of the anomaly scores. This fluctuation also generates spatial background clutter in the detection maps. In [17], a simple smoothing filter was used as a post-processing step to reduce false alarms and noise in detection maps. Inspired by [17], an iterative smoothing filter is adopted to reduce noise in the spatial and temporal domains simultaneously.

To avoid the overall drift of anomaly scores on the spatial detection map

caused by sudden changes in imaging conditions, Z-score normalization should be first performed:

In typical image preprocessing,

and

are the mean value and standard deviation of pixels in the whole image, respectively. However, because anomaly scores of anomalous pixels are much higher than those of background pixels on

, it is more accurate to describe the distribution of

by a truncated normal distribution or a half-normal distribution [

39] rather than a normal distribution. Therefore, it is more reasonable to set

and

to the mean value and standard deviation of the collection of

and its symmetric set about zero.
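The normalization described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function name `half_normal_zscore`, the nested-list representation of the detection map, and the use of the population (not sample) standard deviation are all assumptions. Because the scores are pooled with their mirror image about zero, the pooled mean is zero by symmetry and the pooled standard deviation reduces to the root mean square of the scores.

```python
import math


def half_normal_zscore(scores):
    """Z-score normalize a 2-D map of anomaly scores.

    The mean and standard deviation are taken over the scores together
    with their mirror image about zero (hypothetical helper; population
    standard deviation is an assumption).
    """
    flat = [v for row in scores for v in row]
    mirrored = flat + [-v for v in flat]            # scores and their symmetric set
    mu = sum(mirrored) / len(mirrored)              # zero by symmetry
    var = sum((v - mu) ** 2 for v in mirrored) / len(mirrored)
    sigma = math.sqrt(var)
    if sigma == 0.0:                                # all-zero map: nothing to scale
        return [[0.0 for _ in row] for row in scores]
    return [[(v - mu) / sigma for v in row] for row in scores]
```

With this choice, a map that drifts upward as a whole is rescaled by its own root-mean-square score, so large background scores are not mistaken for a shift of the mean.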

Then, an iterative smoothing operation is performed on

to reduce spatial and temporal clutter:

where

is the normalized spatial anomaly scores of

,

and

are the smoothed spatial anomaly scores of

and

, respectively,

denotes the spatial neighborhood used for smoothing, and

and

denote filter weights. When the first spatial detection map is smoothed, let

. The latter part of Equation (15) is in effect a spatial smoothing filter, such as the mean filter or the Gaussian filter. Furthermore, one-dimensional denoising algorithms can also replace the temporal iterative smoothing part of Equation (15) to reduce temporal clutter. Compared to the original spatial detection map

, background clutter and noise on

are suppressed, and detection performance can be improved.
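One possible reading of the iterative smoothing step is sketched below. The exact form of Equation (15) is not reproduced here, so the sketch rests on assumptions: the temporal part is taken to be a first-order recursive blend with a hypothetical weight `alpha`, the spatial part is a mean filter over a square neighborhood of hypothetical half-width `radius`, and for the first frame the previous smoothed map is taken to be the normalized map itself.

```python
def mean_filter(score_map, radius=1):
    """Average each pixel over a (2*radius+1)^2 window, clipped at the borders."""
    rows, cols = len(score_map), len(score_map[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            total, count = 0.0, 0
            for rr in range(max(0, r - radius), min(rows, r + radius + 1)):
                for cc in range(max(0, c - radius), min(cols, c + radius + 1)):
                    total += score_map[rr][cc]
                    count += 1
            out[r][c] = total / count
    return out


def iterative_smooth(normalized, prev_smoothed=None, alpha=0.5, radius=1):
    """Blend the previous smoothed map with the spatially filtered current map.

    alpha weights the temporal (recursive) part and 1 - alpha the spatial
    part; both the weights and the first-frame rule are assumptions.
    """
    if prev_smoothed is None:          # first frame: no history available
        prev_smoothed = normalized
    spatial = mean_filter(normalized, radius)
    return [
        [alpha * p + (1.0 - alpha) * s for p, s in zip(prev_row, spat_row)]
        for prev_row, spat_row in zip(prev_smoothed, spatial)
    ]
```

Calling `iterative_smooth` frame by frame and feeding each output back as `prev_smoothed` gives the recursive behavior described in the text; swapping `mean_filter` for a Gaussian kernel changes only the spatial part.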

3.3. Temporal Anomaly Detection

Using the dual-window strategy to select a background dictionary has several disadvantages. Firstly, an inappropriate dual-window size can cause the local background to be contaminated by target pixels in spatial anomaly detection. If the inner window is too small, the local background chosen for a target test pixel can contain some target pixels. The contamination problem can also occur when multiple targets are densely distributed. Secondly, the spatial distributions of moving targets are usually unknown and change over time in the real world. Therefore, it is difficult to determine in advance the optimal dual-window size for detecting moving targets. Thirdly, the performance of these algorithms still varies with the dual-window size, and the best performance of the dual-window-based AD algorithms is only a local optimum. For instance, detection results can be further improved by combining them with a weight matrix obtained by segmentation or clustering [40,41], where background pixels are assigned lower weight values. Interestingly, the best local background for some subpixel target detection algorithms is the eight-neighborhood [42], and large dual-windows are harmful to these algorithms. Fourthly, dual-window-based spatial detection cannot eliminate motionless objects whose spectra also differ from the background spectra.

To accurately detect moving targets in HSI sequences, we propose a new approach for constructing the background dictionaries of test pixels. Compared to hyperspectral CD, the interval between two contiguous frames in moving target detection is short; thus, the camera angle, illumination, weather, and other imaging conditions are almost unchanged. In this case, the spectrum of the same object in a short HSI sequence is affected only by the measurement noise. Moreover, due to camera shake and frame registration errors, the imaging area corresponding to the same pixel in the HSI moves back and forth within a local background region. Therefore, it can be assumed that the spectra of the same pixel in adjacent frames,

, are a linear combination of the same set of endmembers. According to the LMM, the current pixel

can be expressed as a linear combination of its former spectra

:

where

is defined as the former spectra matrix,

is defined as the abundance vector,

P is defined as the number of former spectra, and

is defined as the noise term.
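As an illustration of how the former spectra matrix might be assembled, the sketch below gathers the spectra recorded at one pixel position in the frames preceding the current one. The function name `former_spectra`, the layout `frames[k][row][col]` (a list of band values per pixel), and the choice to return fewer spectra near the start of the sequence are assumptions made for this example only.

```python
def former_spectra(frames, t, row, col, count):
    """Return up to `count` spectra observed at (row, col) before frame t.

    frames[k][row][col] is assumed to hold the band values of one pixel
    in registered frame k. Spectra are returned oldest first.
    """
    start = max(0, t - count)          # fewer than `count` frames early in the sequence
    return [frames[k][row][col] for k in range(start, t)]
```

Each returned spectrum plays the role of one column of the former spectra matrix, so the current pixel can then be represented as a linear combination of these columns.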

Equation (16) means that the test pixel

and its former spectra

can also be applied to the CSR detector.

and

can be considered to consist of the same set of background endmembers. In the spatial anomaly detection, the background dictionary

constructed by the dual-window strategy contains some endmembers independent of

. Compared to

,

is more suitable as a background dictionary for the CSR and KCSR detectors. In this subsection, temporal anomaly detection is defined as a method to calculate the anomaly scores of the test pixel

in the current frame by using its former spectra

. Because the positions of non-homogeneous background pixels or motionless objects are almost unchanged in the HSI after inter-frame registration, the temporal anomaly detection can avoid false alarms caused by these pixels.

However,

is not always a pure background dictionary. When a target moves slowly, it takes more than one frame to pass through a pixel. In this case, if

is a target pixel, it is possible that its former spectra are also target spectra. Besides, if the trajectories of moving targets intersect, the former spectra of pixels at the intersection can also be contaminated by targets. Therefore, we delete the abnormal atoms in

based on the spatial anomaly detection results.

and

are defined as the number of atoms in the background dictionary and its candidate set, respectively. Specifically, for the test pixel

in the current frame, smoothed spatial anomaly scores

of its former spectra are first sorted. In ascending order, the sorted result is

, where the subscripts

are the sequence numbers. The smaller the spatial anomaly score is, the higher the probability that the corresponding former spectrum belongs to the background. Therefore,

former spectra

are selected to construct a pure background dictionary

for the test pixel

. Then, the minimization problem in the CSR algorithm can be rewritten as:

where

. The background dictionary

can be further purified by removing the atoms with

. The temporal anomaly detection result

of

is transformed as:

where

is the approximately calculated sparse vector without anomalous atoms in the background dictionary

and

is the

-norm of the approximate error. Similarly, the KCSR algorithm can also be applied to the temporal anomaly detection. After all pixels on

are detected in sequence, a two-dimensional temporal detection map

is obtained.
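The dictionary purification step described above, in which the former spectra with the smallest smoothed spatial anomaly scores are kept, can be sketched as follows. The function name `select_background_atoms` and its argument names are hypothetical, and restoring chronological order after selection is a design choice of this example rather than something stated in the text. The subsequent CSR or KCSR solve is not shown.

```python
def select_background_atoms(candidate_spectra, candidate_scores, num_atoms):
    """Keep the `num_atoms` candidates with the lowest smoothed spatial scores.

    candidate_spectra[i] is a former spectrum of the test pixel and
    candidate_scores[i] is its smoothed spatial anomaly score.
    """
    if len(candidate_spectra) != len(candidate_scores):
        raise ValueError("one score is required per candidate spectrum")
    if num_atoms > len(candidate_spectra):
        raise ValueError("dictionary size exceeds candidate set size")
    # Sort candidate indices by score, smallest (most background-like) first.
    order = sorted(range(len(candidate_scores)), key=lambda i: candidate_scores[i])
    keep = sorted(order[:num_atoms])   # restore chronological order (assumption)
    return [candidate_spectra[i] for i in keep]
```

For example, with four candidates whose scores are 0.1, 5.0, 0.3, and 0.2 and a dictionary size of three, the second candidate is discarded as the most likely target spectrum.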

The lower limit of the constraint parameter

C is connected with the number of anomalous atoms in the background dictionary. To obtain a convenient setting of

C in the spatial and temporal anomaly detection,

C can be represented as:

where

. If

and

, then the inequality constraint

is invalid. To further explore the meaning of

, two definitions are given as follows:

where

is defined as the number of anomalous atoms and

is defined as the abundance relevant to the anomaly endmember in the LMM of the

l-th anomalous atom. In the hyperspectral AD,

. We proved a proposition on the parameter ν in [23]:

Proposition 1. To delete all anomalous atoms from the background dictionary, ν must satisfy: where is defined as the abundance relevant to the anomaly endmember in the LMM of the test pixel.

The proposition gives an intuitive interpretation of . When is larger than , all anomalous atoms can be deleted. Regardless of spatial detection or temporal detection, of the same test pixel is constant. Therefore, it is practicable to set to the same value in both detections. and in temporal detection can be set to values smaller than those in spatial detection by reducing the proportion of anomalous atoms in . One method is to enlarge , the size of . Another method is to decrease , the number of anomalous atoms, by enlarging the size of the candidate set or by sampling the former spectra at intervals before constructing . Through the above operations, the lower limit of in temporal detection is less than that in spatial detection. When is set to an excessively large value, many background atoms are deleted unnecessarily, resulting in a slight degradation in the ability of the CSR and KCSR algorithms to represent background test pixels. Therefore, should be a trade-off value between the inadequate deletion of anomalous atoms and unnecessary deletion in spatial detection. The same can cause the excessive deletion of atoms in temporal detection, but a large can avoid this situation.

3.4. Spatio-Temporal Fusion

Compared to spatial anomaly detection, temporal anomaly detection can suppress spatially non-homogeneous background pixels and stationary objects. Furthermore, compared to temporal profile filtering algorithms, the proposed temporal anomaly detection can identify moving targets with different speeds simultaneously and is robust to the situation where multiple targets pass along the same trajectory one after another. However, temporal detection is inferior to spatial detection in some situations. If there are moving background pixels in the scene, such as clouds, temporal anomaly detection may mistake them for targets. In addition, if the frame registration error is too large, the temporal background dictionary cannot describe the background accurately. To improve the stability and robustness of the detection algorithm, it is necessary to combine the spatial and temporal detection results.

Before fusion, the filtering operation in Section 3.2 can also be performed on the temporal detection map

. First, perform Z-score normalization on

:

where

and

are set to the mean value and standard deviation of the collection of

and its symmetric set about zero. Then, the same iterative smoothing operation as in Equation (15) is performed on

to reduce temporal clutter:

where

is the normalized temporal anomaly scores of

and

and

are the smoothed temporal anomaly scores of

and

, respectively. The smoothed detection maps can be combined by the multiplication fusion strategy:

where

and

are the maximum values in

and

,

and

are the minimum values in

and

, the symbol ∘ denotes the Hadamard product, and

is the fused spatio-temporal detection map. The overall description of the proposed spatio-temporal anomaly detection is presented in Algorithm 1.
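A minimal sketch of the multiplication fusion is given below. It assumes that each smoothed map is first rescaled to the range [0, 1] using its own minimum and maximum, which is how the maximum and minimum values mentioned above are interpreted here, and that the two rescaled maps are then multiplied element by element (the Hadamard product). The function names `min_max` and `fuse_maps` are hypothetical.

```python
def min_max(score_map):
    """Rescale a 2-D map to [0, 1] using its own minimum and maximum."""
    flat = [v for row in score_map for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:                       # constant map: avoid division by zero
        return [[0.0 for _ in row] for row in score_map]
    return [[(v - lo) / (hi - lo) for v in row] for row in score_map]


def fuse_maps(spatial_map, temporal_map):
    """Element-wise (Hadamard) product of the two rescaled detection maps."""
    s = min_max(spatial_map)
    t = min_max(temporal_map)
    return [[a * b for a, b in zip(s_row, t_row)] for s_row, t_row in zip(s, t)]
```

Under this reading, a pixel receives a high fused score only when both the spatial and the temporal detectors respond strongly, which is why the product suppresses stationary objects (low temporal score) and moving background such as clouds (low spatial score).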

| Algorithm 1 CSR-based spatio-temporal anomaly detection for moving targets |

| Input: Hyperspectral sequences, dual-window size , temporal background dictionary size , candidate set size , parameter , and kernel parameter for KCSR. |

| for each frame in the hyperspectral sequences do |

| Output: Spatio-temporal anomaly detection map when . |