Toward Super-Resolution Image Construction Based on Joint Tensor Decomposition

Abstract

1. Introduction

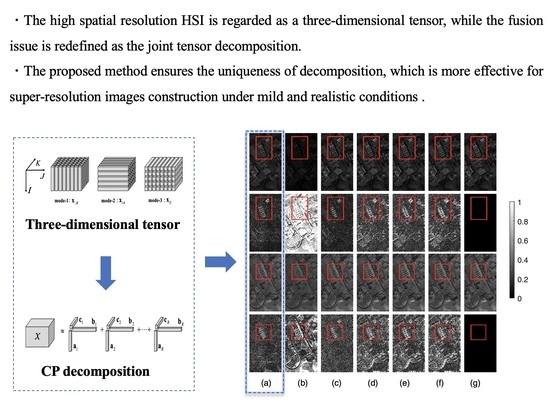

- In the proposed method, the high-spatial-resolution hyperspectral image (HSI) is treated as a three-dimensional tensor, and the fusion problem is recast as the joint estimation of the coupling factor matrix, that is, as a joint tensor decomposition problem involving the hyperspectral image tensor, the multispectral image tensor, and a noise regularization term.

- To assess the reconstruction quality, the proposed algorithm is compared with five other algorithms. Experiments show that the JTF method provides clearer spatial details than the real SRI, while its running time remains acceptable given its performance.

- In addition, we conduct experiments under incorrect Gaussian kernels (, , ), the correct Gaussian kernel (), and different noise levels, and we also show the fusion results of the six tested methods on the Pavia University data captured by the ROSIS sensor. The results show that the JTF method achieves the highest reconstruction accuracy among the compared methods, regardless of whether the Gaussian kernel is correct and regardless of the level of added noise. This indicates that the JTF algorithm is better suited to cases in which the degradation operators are unknown or contaminated by noise.
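The coupling described in the first contribution can be illustrated with a small NumPy sketch. It assumes the usual coupled CP model from the coupled tensor factorization literature [44]: the super-resolution image is a rank-R CP tensor, the hyperspectral image is its spatially degraded version, and the multispectral image is its spectrally degraded version. The names `P1`, `P2`, `Pm`, `block_average`, and all sizes are hypothetical, and simple block averaging stands in for the Gaussian-kernel blurring used in the experiments.

```python
import numpy as np

def cp_tensor(A, B, C):
    """Rank-R CP model: X[i, j, k] = sum_r A[i, r] * B[j, r] * C[k, r]."""
    return np.einsum('ir,jr,kr->ijk', A, B, C)

def block_average(n_out, factor):
    """(n_out x n_out*factor) matrix that averages `factor` adjacent entries.
    Assumption: a stand-in for the real blur-and-downsample operator."""
    return np.kron(np.eye(n_out), np.full((1, factor), 1.0 / factor))

rng = np.random.default_rng(0)
I, J, K, R = 8, 8, 6, 3                      # toy sizes, chosen for illustration
A, B, C = (rng.standard_normal((n, R)) for n in (I, J, K))

P1 = block_average(4, 2)                     # spatial degradation, rows
P2 = block_average(4, 2)                     # spatial degradation, columns
Pm = block_average(3, 2)                     # spectral degradation (band averaging)

sri = cp_tensor(A, B, C)                                  # 8 x 8 x 6
hsi = np.einsum('ai,bj,ijk->abk', P1, P2, sri)            # 4 x 4 x 6
msi = np.einsum('ck,ijk->ijc', Pm, sri)                   # 8 x 8 x 3

# The degraded images share the factors of the SRI: this is the coupling.
print(np.allclose(hsi, cp_tensor(P1 @ A, P2 @ B, C)))
print(np.allclose(msi, cp_tensor(A, B, Pm @ C)))
```

Because both observations are generated from the same factors `A`, `B`, and `C`, estimating those factors jointly from the two images is what turns fusion into a joint decomposition problem.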

2. Preliminaries on Tensors

2.1. Definition and Notations

2.2. Tensor Decomposition

3. Problem Formulation

3.1. Image Fusion Based on Matrix Decomposition

3.2. Image Fusion Based on Tensor Decomposition

4. The Joint Tensor Decomposition Method

| Algorithm 1: Algorithm for coupled images. |
|---|
| Initialization: |
| Apply the blind STEREO algorithm [44] with random initializations to obtain [symbols missing] |
| While not converged, do |
| [five update steps; equations missing] |
| end while |
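The update equations of Algorithm 1 are not available in the text above, so the following is only a generic illustration of its "while not converged, update each block in turn" structure. It is not the JTF update rule: it is a plain alternating least-squares loop for a non-blind coupled CP model in the spirit of [44], assuming the degradation matrices `P1`, `P2`, and `Pm` are known and using a random start instead of the blind STEREO initialization. All function and variable names are hypothetical.

```python
import numpy as np

def cp_tensor(A, B, C):
    """Rank-R CP model: X[i, j, k] = sum_r A[i, r] * B[j, r] * C[k, r]."""
    return np.einsum('ir,jr,kr->ijk', A, B, C)

def solve_two_sided(L1, W1, L2, W2, rhs):
    """Solve L1 @ X @ W1 + L2 @ X @ W2 = rhs for X (row-major vectorization)."""
    n, r = rhs.shape
    K = np.kron(L1, W1.T) + np.kron(L2, W2.T)
    return np.linalg.solve(K, rhs.ravel()).reshape(n, r)

def objective(H, M, A, B, C, P1, P2, Pm, lam):
    """Squared fit to the HSI plus lam times squared fit to the MSI."""
    return (np.sum((H - cp_tensor(P1 @ A, P2 @ B, C)) ** 2)
            + lam * np.sum((M - cp_tensor(A, B, Pm @ C)) ** 2))

def coupled_als(H, M, P1, P2, Pm, rank, lam=1.0, n_iter=30, tol=1e-10, seed=0):
    rng = np.random.default_rng(seed)
    I, J, K = P1.shape[1], P2.shape[1], Pm.shape[1]
    A, B, C = (rng.standard_normal((n, rank)) for n in (I, J, K))
    history = [objective(H, M, A, B, C, P1, P2, Pm, lam)]
    for _ in range(n_iter):
        # Update A with B and C fixed (normal equations of a quadratic in A).
        Bh, Cm = P2 @ B, Pm @ C
        A = solve_two_sided(
            P1.T @ P1, (Bh.T @ Bh) * (C.T @ C),
            lam * np.eye(I), (B.T @ B) * (Cm.T @ Cm),
            P1.T @ np.einsum('ijk,jr,kr->ir', H, Bh, C)
            + lam * np.einsum('ijk,jr,kr->ir', M, B, Cm))
        # Update B with A and C fixed.
        Ah = P1 @ A
        B = solve_two_sided(
            P2.T @ P2, (Ah.T @ Ah) * (C.T @ C),
            lam * np.eye(J), (A.T @ A) * (Cm.T @ Cm),
            P2.T @ np.einsum('ijk,ir,kr->jr', H, Ah, C)
            + lam * np.einsum('ijk,ir,kr->jr', M, A, Cm))
        # Update C with A and B fixed.
        Bh = P2 @ B
        C = solve_two_sided(
            np.eye(K), (Ah.T @ Ah) * (Bh.T @ Bh),
            lam * (Pm.T @ Pm), (A.T @ A) * (B.T @ B),
            np.einsum('ijk,ir,jr->kr', H, Ah, Bh)
            + lam * (Pm.T @ np.einsum('ijk,ir,jr->kr', M, A, B)))
        history.append(objective(H, M, A, B, C, P1, P2, Pm, lam))
        # Stop once the objective no longer decreases noticeably.
        if history[-2] - history[-1] <= tol * max(history[-2], 1.0):
            break
    return A, B, C, history
```

Each block update exactly minimizes the objective over one factor, so the recorded objective values should not increase from one iteration to the next. The dense Kronecker solve is only practical for toy sizes; it is used here for clarity, not efficiency.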

5. Experiments and Results

5.1. Experimental Data

5.2. Evaluation Criterion

5.3. Selection of Parameters

5.4. Experimental Results

5.5. Experimental Results of the Noisy Case

5.6. Analysis of Computational Costs

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Zhu, Z.Y.; Dong, S.J.; Yu, C.L.; He, J. A Text Hybrid Clustering Algorithm Based on HowNet Semantics. Key Eng. Mater. 2011, 474, 2071–2078.

- Sui, J.; Adali, T.; Yu, Q.; Chen, J.; Calhoun, V.D. A review of multivariate methods for multimodal fusion of brain imaging data. J. Neurosci. Methods 2012, 204, 68–81.

- Acar, E.; Lawaetz, A.J.; Rasmussen, M.A.; Bro, R. Structure-revealing data fusion model with applications in metabolomics. IEEE Eng. Med. Biol. Soc. 2013, 14, 6023–6026.

- Pohl, C.; Van Genderen, J.L. Review article Multisensor image fusion in remote sensing: Concepts, methods and applications. Int. J. Remote Sens. 1998, 19, 823–854.

- Chabrillat, S.; Pinet, P.C.; Ceuleneer, G.; Johnson, P.E.; Mustard, J.F. Ronda peridotite massif: Methodology for its geological mapping and lithological discrimination from airborne hyperspectral data. Int. J. Remote Sens. 2000, 21, 2363–2388.

- Haboudane, D.; Miller, J.R.; Pattey, E.; Zarco-Tejada, P.J.; Strachan, I.B. Hyperspectral vegetation indices and novel algorithms for predicting green LAI of crop canopies: Modeling and validation in the context of precision agriculture. Remote Sens. Environ. 2004, 90, 337–352.

- Adam, E.; Mutanga, O.; Rugege, D. Multispectral and hyperspectral remote sensing for identification and mapping of wetland vegetation: A review. Wetl. Ecol. Manag. 2010, 18, 281–296.

- Ellis, R.J.; Scott, P. Evaluation of hyperspectral remote sensing as a means of environmental monitoring in the St. Austell China clay (kaolin) region, Cornwall, UK. Remote Sens. Environ. 2004, 93, 118–130.

- Xu, Y.; Wu, Z.; Li, J.; Plaza, A.; Wei, Z. Anomaly detection in hyperspectral images based on low-rank and sparse representation. IEEE Trans. Geosci. Remote Sens. 2016, 54, 1990–2000.

- Zhang, M.; Li, W.; Du, Q. Diverse region based CNN for hyperspectral image classification. IEEE Trans. Image Process. 2018, 27, 2623–2634.

- Xue, B.; Yu, C.; Wang, Y.; Song, M.; Li, S.; Wang, L.; Chen, H.M.; Chang, C.I. A subpixel target detection approach to hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5093–5114.

- Kang, X.; Duan, P.; Xiang, X.; Li, S.; Benediktsson, J.A. Detection and correction of mislabeled training samples for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2018, 56, 5673–5686.

- Lv, Z.; Shi, W.; Zhou, X.; Benediktsson, J.A. Semi-automatic system for land cover change detection using bi-temporal remote sensing images. Remote Sens. 2017, 9, 1112.

- Licciardi, G.; Vivone, G.; Dalla Mura, M.; Restaino, R.; Chanussot, J. Multi-resolution analysis techniques and nonlinear PCA for hybrid pansharpening applications. Multidimens. Syst. Signal Process. 2015, 27, 807–830.

- Shah, V.P.; Younan, N.H.; King, R.L. An efficient pan-sharpening method via a combined adaptive PCA approach and contourlets. IEEE Trans. Geosci. Remote Sens. 2008, 46, 1323–1335.

- Nason, G.P.; Silverman, B.W. The Stationary Wavelet Transform and Some Statistical Applications. In Wavelets and Statistics; Antoniadis, A., Oppenheim, G., Eds.; Lecture Notes in Statistics; Springer: New York, NY, USA, 1995; Volume 103.

- Yokoya, N.; Grohnfeldt, C.; Chanussot, J. Hyperspectral and multispectral data fusion: A comparative review of the recent literature. IEEE Geosci. Remote Sens. Mag. 2017, 5, 29–56.

- Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A. Context-driven fusion of high spatial and spectral resolution images based on oversampled multiresolution analysis. IEEE Trans. Geosci. Remote Sens. 2002, 40, 2300–2312.

- Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A.; Selva, M. MTF-tailored multiscale fusion of high-resolution MS and pan imagery. Photogramm. Eng. Remote Sens. 2006, 72, 591–596.

- Vivone, G.; Restaino, R.; Dalla Mura, M.; Licciardi, G.; Chanussot, J. Contrast and error based fusion schemes for multispectral image pansharpening. IEEE Geosci. Remote Sens. Lett. 2013, 11, 930–934.

- Vivone, G.; Alparone, L.; Chanussot, J.; Dalla Mura, M.; Garzelli, A.; Licciardi, G.A.; Restaino, R.; Wald, L. A critical comparison among pansharpening algorithms. IEEE Trans. Geosci. Remote Sens. 2015, 53, 2565–2586.

- Selva, M.; Aiazzi, B.; Butera, F.; Chiarantini, L.; Baronti, S. Hyper-sharpening: A first approach on SIM-GA data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 3008–3024.

- Wang, Z.; Ziou, D.; Armenakis, C.; Li, D.; Li, Q. A comparative analysis of image fusion methods. IEEE Trans. Geosci. Remote Sens. 2005, 43, 1391–1402.

- Eismann, M.T. Resolution enhancement of hyperspectral imagery using maximum a posteriori estimation with a stochastic mixing model. Diss. Abstr. Int. 2004, 65, 1385.

- Elad, M.; Aharon, M. Image denoising via learned dictionaries and sparse representation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 17–22 June 2006; pp. 895–900.

- Aharon, M.; Elad, M.; Bruckstein, A. K-SVD: An algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans. Signal Process. 2006, 54, 4311–4322.

- Yang, J.; Wright, J.; Huang, T.S.; Ma, Y. Image super-resolution via sparse representation. IEEE Trans. Image Process. 2010, 19, 2861–2873.

- Bioucas-Dias, J.M.; Plaza, A.; Dobigeon, N.; Parente, M.; Du, Q.; Gader, P.; Chanussot, J. Hyperspectral unmixing overview: Geometrical, statistical, and sparse regression based approaches. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 354–379.

- Yokoya, N.; Yairi, T.; Iwasaki, A. Coupled nonnegative matrix factorization unmixing for hyperspectral and multispectral data fusion. IEEE Trans. Geosci. Remote Sens. 2012, 50, 528–537.

- Wycoff, E.; Chan, T.H.; Jia, K.; Ma, W.K.; Ma, Y. A non-negative sparse promoting algorithm for high resolution hyperspectral imaging. In Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 1409–1413.

- Lanaras, C.; Baltsavias, E.; Schindler, K. Hyperspectral super-resolution by coupled spectral unmixing. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3586–3594.

- Bolte, J.; Sabach, S.; Teboulle, M. Proximal alternating linearized minimization for nonconvex and nonsmooth problems. Math. Program. 2014, 146, 459–494.

- Bioucas-Dias, J.M. A variable splitting augmented Lagrangian approach to linear spectral unmixing. In Proceedings of the First Workshop on Hyperspectral Image and Signal Processing, Grenoble, France, 26–28 August 2009; pp. 1–4.

- Li, W.; Du, Q. Laplacian Regularized Collaborative Graph for Discriminant Analysis of Hyperspectral Imagery. IEEE Trans. Geosci. Remote Sens. 2016, 54, 7066–7076.

- Guo, X.; Huang, X.; Zhang, L.; Zhang, L.; Plaza, A.; Benediktsson, J.A. Support Tensor Machines for Classification of Hyperspectral Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2016, 54, 3248–3264.

- An, J.; Zhang, X.; Zhou, H.; Jiao, L. Tensor-Based Low-Rank Graph with Multimanifold Regularization for Dimensionality Reduction of Hyperspectral Images. IEEE Trans. Geosci. Remote Sens. 2018, 56, 4731–4746.

- Renard, N.; Bourennane, S.; Blanc-Talon, J. Denoising and Dimensionality Reduction Using Multilinear Tools for Hyperspectral Images. IEEE Geosci. Remote Sens. Lett. 2008, 5, 138–142.

- Zhong, Z.; Fan, B.; Duan, J.; Wang, L.; Ding, K.; Xiang, S.; Pan, C. Discriminant Tensor Spectral Spatial Feature Extraction for Hyperspectral Image Classification. IEEE Geosci. Remote Sens. Lett. 2017, 12, 1028–1032.

- Makantasis, K.; Doulamis, A.D.; Doulamis, N.D.; Nikitakis, A. Tensor-Based Classification Models for Hyperspectral Data Analysis. IEEE Trans. Geosci. Remote Sens. 2018, 56, 6884–6898.

- Xu, Y.; Wu, Z.; Chanussot, J.; Comon, P.; Wei, Z. Nonlocal Coupled Tensor CP Decomposition for Hyperspectral and Multispectral Image Fusion. IEEE Trans. Geosci. Remote Sens. 2020, 58, 348–362.

- Cohen, J.; Farias, R.C.; Comon, P. Fast decomposition of large nonnegative tensors. IEEE Signal Process. Lett. 2015, 22, 862–866.

- Shashua, A.; Levin, A. Linear image coding for regression and classification using the tensor-rank principle. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Kauai, HI, USA, 8–14 December 2001; pp. 42–49.

- Bauckhage, C. Robust tensor classifiers for color object recognition. Int. Conf. Image Anal. Recognit. 2007, 4633, 352–363.

- Kanatsoulis, C.I.; Fu, X.; Sidiropoulos, N.D.; Ma, W.K. Hyperspectral super-resolution: A coupled tensor factorization approach. IEEE Trans. Signal Process. 2018, 66, 6503–6517.

- Li, S.; Dian, R.; Fang, L.; Bioucas-Dias, J.M. Fusing hyperspectral and multispectral images via coupled sparse tensor factorization. IEEE Trans. Image Process. 2018, 27, 4118–4130.

- Kolda, T.G.; Bader, B.W. Tensor Decompositions and Applications. SIAM Rev. 2009, 51, 455–500.

- Chiantini, L.; Ottaviani, G. On generic identifiability of 3-tensors of small rank. SIAM J. Matrix Anal. Appl. 2012, 33, 1018–1037.

- Iordache, M.D.; Bioucas-Dias, J.M.; Plaza, A. Sparse unmixing of hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2011, 49, 2014–2039.

- Quickbird Satellite Sensor. Available online: http://www.satimagingcorp.com/satellite-sensors/quickbird (accessed on 4 April 2018).

- Vervliet, N.; Debals, O.; Sorber, L.; Barel, M.V.; Lathauwer, L.D. Tensorlab v3.0, March 2016. Available online: http://www.tensorlab.net (accessed on 4 March 2018).

- Liu, J.G. Smoothing filter based intensity modulation: A spectral preserve image fusion technique for improving spatial details. Int. J. Remote Sens. 2000, 21, 3461–3472.

| Gaussian Kernel | Method | R-SNR | NMSE | RMSE | ERGAS | SAM | UIQI |
|---|---|---|---|---|---|---|---|
| | JTF | 17.8267 | 0.1284 | 0.0266 | 4.3495 | 5.95 | 0.9031 |
| | Blind STEREO | 11.0252 | 0.281 | 0.0581 | 9.9924 | 15.841 | 0.7777 |
| | CNMF | 15.8798 | 0.1607 | 0.0332 | 5.684 | 7.8655 | 0.8922 |
| | SFIM | 10.7811 | 0.289 | 0.0598 | 10.3849 | 11.2828 | 0.7374 |
| | MTF-GLP | 12.8459 | 0.2279 | 0.0471 | 7.608 | 10.4577 | 0.7845 |
| | MAPSMM | 11.8642 | 0.2551 | 0.0528 | 8.3346 | 10.4203 | 0.7449 |
| | JTF | 17.6357 | 0.1313 | 0.0272 | 4.4351 | 5.9266 | 0.8983 |
| | Blind STEREO | 11.163 | 0.2766 | 0.0572 | 9.6563 | 15.6988 | 0.7938 |
| | CNMF | 15.5546 | 0.1668 | 0.0345 | 5.7987 | 7.9809 | 0.8623 |
| | SFIM | 12.6416 | 0.2333 | 0.0483 | 7.9097 | 10.2705 | 0.7725 |
| | MTF-GLP | 13.6742 | 0.2072 | 0.0428 | 6.9561 | 9.7086 | 0.8038 |
| | MAPSMM | 12.8407 | 0.228 | 0.0472 | 7.4954 | 9.5283 | 0.7733 |
| | JTF | 17.7156 | 0.1301 | 0.0269 | 4.4008 | 5.8517 | 0.9001 |
| | Blind STEREO | 13.4084 | 0.2136 | 0.0442 | 7.421 | 11.9428 | 0.8584 |
| | CNMF | 16.0028 | 0.1584 | 0.0328 | 5.5489 | 7.2096 | 0.8747 |
| | SFIM | 13.1113 | 0.221 | 0.0457 | 7.493 | 9.9524 | 0.7804 |
| | MTF-GLP | 13.9393 | 0.2009 | 0.0416 | 6.7583 | 9.461 | 0.8057 |
| | MAPSMM | 13.1616 | 0.2197 | 0.0454 | 7.2442 | 9.3344 | 0.7777 |
| | JTF | 17.603 | 0.1318 | 0.0273 | 4.4091 | 5.7811 | 0.8991 |
| | Blind STEREO | 13.7402 | 0.2056 | 0.0425 | 7.0851 | 11.3721 | 0.8621 |
| | CNMF | 16.3576 | 0.1521 | 0.0315 | 5.3307 | 7.1092 | 0.8792 |
| | SFIM | 13.3654 | 0.2147 | 0.0444 | 7.2704 | 9.7603 | 0.7875 |
| | MTF-GLP | 14.1333 | 0.1965 | 0.0406 | 6.6077 | 9.3225 | 0.8109 |
| | MAPSMM | 13.2983 | 0.2163 | 0.0447 | 7.1304 | 9.1704 | 0.7813 |
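The R-SNR, NMSE, RMSE, and SAM columns above can be related through a short sketch. The definitions below are the common ones from the fusion literature and are an assumption here, since the evaluation formulas are not given in this text. The tabulated values are at least consistent with R-SNR = -20 log10(NMSE): for the first JTF row, -20 log10(0.1284) is approximately 17.83. Reporting SAM in degrees is also an assumption, and ERGAS and UIQI are omitted because they need settings (resolution ratio, window size) that are not stated.

```python
import numpy as np

def nmse(x, x_hat):
    """Normalized error: ||x_hat - x||_F / ||x||_F."""
    return np.linalg.norm(x_hat - x) / np.linalg.norm(x)

def r_snr(x, x_hat):
    """Reconstruction SNR in dB: 10*log10(||x||^2 / ||x_hat - x||^2)."""
    return -20.0 * np.log10(nmse(x, x_hat))

def rmse(x, x_hat):
    """Root mean squared error over all tensor entries."""
    return np.sqrt(np.mean((x_hat - x) ** 2))

def sam_degrees(x, x_hat, eps=1e-12):
    """Mean spectral angle (degrees) between per-pixel spectra.
    The last axis of both arrays is taken to be the spectral axis."""
    s = x.reshape(-1, x.shape[-1])
    t = x_hat.reshape(-1, x_hat.shape[-1])
    cos = np.sum(s * t, axis=1) / (
        np.linalg.norm(s, axis=1) * np.linalg.norm(t, axis=1) + eps)
    return np.degrees(np.mean(np.arccos(np.clip(cos, -1.0, 1.0))))

# Toy check: a uniform 10% overestimate changes magnitude but not spectral shape.
reference = np.ones((4, 4, 3))
estimate = 1.1 * reference
print(nmse(reference, estimate), r_snr(reference, estimate),
      rmse(reference, estimate), sam_degrees(reference, estimate))
```

In the toy check the normalized error is 0.1, which corresponds to 20 dB, while the spectral angle stays at essentially zero because scaling a spectrum does not rotate it. This is why SAM is usually read alongside the magnitude-based metrics.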

| Method | Gaussian Kernel (), SNR (10 dB) | Gaussian Kernel (), SNR (10 dB) | Gaussian Kernel (), SNR (20 dB) | Gaussian Kernel (), SNR (20 dB) |
|---|---|---|---|---|
| JTF | 17.266912 | 15.883985 | 15.303149 | 15.035694 |
| Blind STEREO | 15.179482 | 13.761605 | 13.057704 | 12.79495 |
| CNMF | 91.838425 | 89.438305 | 82.124058 | 82.466387 |
| SFIM | 1.421629 | 0.821533 | 0.836197 | 0.829191 |
| MTF-GLP | 24.859017 | 25.753572 | 24.809271 | 31.731484 |
| MAPSMM | 301.682105 | 283.545812 | 266.800409 | 299.233003 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ren, X.; Lu, L.; Chanussot, J. Toward Super-Resolution Image Construction Based on Joint Tensor Decomposition. Remote Sens. 2020, 12, 2535. https://doi.org/10.3390/rs12162535