Morphological Band Registration of Multispectral Cameras for Water Quality Analysis with Unmanned Aerial Vehicle

Abstract

1. Introduction

2. Materials

2.1. Micasense Rededge-M and Data

2.2. Acquisition Time Difference in Rededge-M Band Images

3. Methodology and Analysis

3.1. Radiometric and Geometric Calibration

3.2. Water Color Analysis

3.3. The Impact of Pixel Misregistration on Water Quality Analysis

3.4. Proposed Approach for the Morphological Registration

4. Algorithm Application and Results

4.1. Algorithm Development for the Entire Image

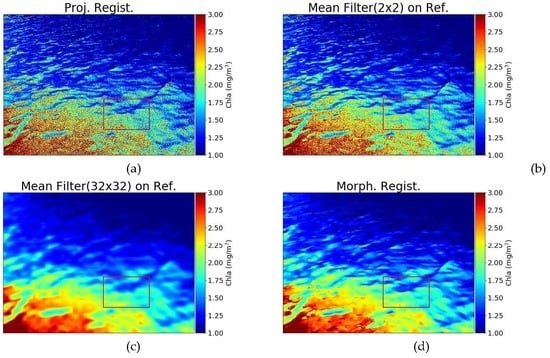

4.2. Results

5. Discussion and Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Scene ID | Date and Time | Location | Altitude (m) | Scene Description |
|---|---|---|---|---|
| A | 26–07–2019 15:23 | Sumoon | 85.4 | Coastal Area |
| B | 31–08–2019 12:54 | Yeosu | 8.1 | From Ship |
| C | 31–08–2019 13:29 | Yeosu | 196 | Coastal Area |
| D | 31–08–2019 13:17 | Yeosu | 390 | Red Tide |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kim, W.; Jung, S.; Moon, Y.; Mangum, S.C. Morphological Band Registration of Multispectral Cameras for Water Quality Analysis with Unmanned Aerial Vehicle. Remote Sens. 2020, 12, 2024. https://doi.org/10.3390/rs12122024