Fusion of Various Band Selection Methods for Hyperspectral Imagery

Abstract

1. Introduction

2. Band Selection Fusion

- BSF improves upon individual band selection methods;

- A key advantage of BSF is that it requires no band decorrelation, a major issue for many BP-based BS methods because they use BP as the criterion for selecting bands;

- BSF can adapt to different data structures characterized by statistics and is applicable to various applications, because the bands it selects can come from different band subsets obtained by various BP criteria or application-based BS methods;

- Although BSF does not implement any BP criterion, it can still prioritize bands according to how frequently they appear across different band subsets;

- BSF is flexible and can be implemented in various forms, in particular progressive fusion, which can be carried out with different numbers of BS methods.
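The frequency-based prioritization described in the list above can be illustrated with a short Python sketch. It counts how many BS band subsets contain each band and ranks the bands by that count. The function name `fuse_by_frequency` and the tie-breaking rules (best rank reached in any subset, then sum of ranks) are our own assumptions, not the paper's definition of BSF.

```python
from collections import defaultdict


def fuse_by_frequency(band_subsets, p):
    """Prioritize bands by how many subsets contain them; keep the top p.

    band_subsets: list of band lists, each ordered best-first by its own BS method.
    Tie-breaking by best rank, then by rank sum, is a hypothetical choice.
    """
    freq = defaultdict(int)       # number of subsets that contain each band
    best_rank = {}                # smallest 1-based rank the band reaches
    rank_sum = defaultdict(int)   # sum of the band's ranks over the subsets
    for subset in band_subsets:
        for rank, band in enumerate(subset, start=1):
            freq[band] += 1
            rank_sum[band] += rank
            best_rank[band] = min(best_rank.get(band, rank), rank)
    # Most frequent first; sorted() is stable, so any remaining ties keep the
    # order in which bands were first seen (earlier subsets first).
    ranked = sorted(freq, key=lambda b: (-freq[b], best_rank[b], rank_sum[b]))
    return ranked[:p]


# V and S band subsets copied from the first selected-band table (p = 18).
V = [60, 61, 67, 66, 65, 59, 57, 68, 62, 64, 56, 78, 77, 76, 79, 63, 53, 80]
S = [78, 80, 93, 91, 92, 95, 89, 94, 90, 88, 102, 96, 79, 82, 105, 62, 107, 108]
print(fuse_by_frequency([V, S], 4))  # [78, 80, 62, 79]
```

With p = 18 on the V and S rows, this sketch returns the same 18 bands, in the same order, as the {V,S} row of the fused-band table. That agreement is only a sanity check: with three subsets such as {V,S,CBS}, the placement of a few singly selected bands differs from the table, so the paper's exact rule is not identical to this one.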

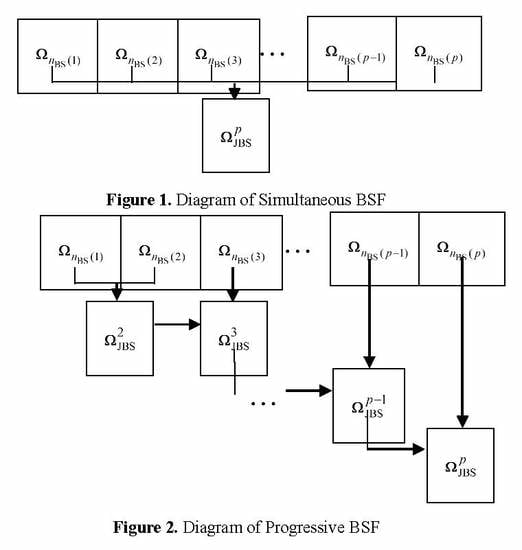

2.1. Simultaneous Band Selection Fusion

| Simultaneous Band Selection Fusion (SBSF) |

2.2. Progressive Band Selection Fusion

| Progressive Band Selection Fusion (PBSF) |
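Progressive fusion adds one BS method at a time and reuses the previous stage's fused subset, which is how the method names in the tables (V-S, then V-S-CBS) read. The Python sketch below folds a pairwise rule over the subsets in order. Both `fuse_pair` and `progressive_fusion` are hypothetical illustrations, not the PBSF algorithm itself.

```python
from itertools import zip_longest


def fuse_pair(first, second, p):
    """Hypothetical two-subset fusion step (an assumption, not the paper's rule).

    Bands in both ordered lists come first, ordered by their best rank (then
    rank sum, then rank in `first`); the remaining slots alternate between
    the two lists in rank order.
    """
    rank_a = {band: r for r, band in enumerate(first)}
    rank_b = {band: r for r, band in enumerate(second)}
    shared = sorted(set(rank_a) & set(rank_b),
                    key=lambda b: (min(rank_a[b], rank_b[b]),
                                   rank_a[b] + rank_b[b], rank_a[b]))
    fused, seen = list(shared), set(shared)
    for pair in zip_longest(first, second):
        for band in pair:
            if band is not None and band not in seen:
                fused.append(band)
                seen.add(band)
    return fused[:p]


def progressive_fusion(band_subsets, p):
    """Fuse subsets one at a time, reusing the previous stage's p fused bands.

    Returns every intermediate stage, e.g. [V-S, V-S-CBS] for [V, S, CBS].
    """
    fused = list(band_subsets[0][:p])
    stages = []
    for subset in band_subsets[1:]:
        fused = fuse_pair(fused, subset, p)
        stages.append(fused)
    return stages
```

The design choice is that each stage sees only the p bands kept by the previous stage, so the result depends on the order in which BS methods are added. With the V and S subsets from the band tables, the first stage of this sketch matches the V-S row of the PBSF table; later stages are not expected to reproduce the published rows.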

3. Real Hyperspectral Image Experiments

3.1. Linear Spectral Unmixing

3.2. Hyperspectral Image Classification

4. Discussions

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Acronyms

| BS | Band Selection |

| BSF | Band Selection Fusion |

| BP | Band Prioritization |

| PBSF | Progressive BSF |

| SBSF | Simultaneous BSF |

| JBS | Joint Band Subset |

| VD | Virtual Dimensionality |

| E | Entropy |

| V | Variance |

| SNR (S) | Signal-to-Noise Ratio |

| ID | Information Divergence |

| CBS | Constrained Band Selection |

| UBS | Uniform Band Selection |

| HFC | Harsanyi–Farrand–Chang |

| NWHFC | Noise-Whitened HFC |

| ATGP | Automated Target Generation Process |

| OA | Overall Accuracy |

| AA | Average Accuracy |

| PR | Precision Rate |

Appendix A. Descriptions of Four Hyperspectral Data Sets

Appendix A.1. HYDICE Data

Appendix A.2. AVIRIS Purdue Data

Appendix A.3. AVIRIS Salinas Data

Appendix A.4. ROSIS Data

Appendix B. Classification Results of Salinas and University of Pavia Data Sets

| BS and BSF Methods | EPF-B-c POA | EPF-B-c PAA | EPF-B-c PPR | EPF-G-c POA | EPF-G-c PAA | EPF-G-c PPR | EPF-B-g POA | EPF-B-g PAA | EPF-B-g PPR | EPF-G-g POA | EPF-G-g PAA | EPF-G-g PPR |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Full bands | 0.9584 | 0.9829 | 0.9773 | 0.9679 | 0.9875 | 0.9826 | 0.9603 | 0.9840 | 0.9784 | 0.9616 | 0.9844 | 0.9789 |

| V | 0.9239 | 0.9665 | 0.9588 | 0.9257 | 0.9660 | 0.9613 | 0.9252 | 0.9667 | 0.9591 | 0.9180 | 0.9627 | 0.9557 |

| S | 0.9328 | 0.9687 | 0.9516 | 0.9351 | 0.9692 | 0.9556 | 0.9342 | 0.9696 | 0.9531 | 0.9280 | 0.9648 | 0.9475 |

| E | 0.9322 | 0.9707 | 0.9570 | 0.9358 | 0.9711 | 0.9605 | 0.9336 | 0.9710 | 0.9570 | 0.9250 | 0.9667 | 0.9530 |

| ID | 0.8236 | 0.8817 | 0.8682 | 0.8406 | 0.8979 | 0.8892 | 0.8245 | 0.8838 | 0.8697 | 0.8304 | 0.8884 | 0.8767 |

| CBS | 0.8593 | 0.9402 | 0.9337 | 0.8660 | 0.9483 | 0.9419 | 0.8604 | 0.9416 | 0.9353 | 0.8632 | 0.9442 | 0.9372 |

| UBS | 0.9558 | 0.9804 | 0.9754 | 0.9660 | 0.9857 | 0.9812 | 0.9583 | 0.9816 | 0.9765 | 0.9601 | 0.9826 | 0.9776 |

| V-S-CBS (PBSF) | 0.9039 | 0.9372 | 0.9403 | 0.9208 | 0.9469 | 0.9506 | 0.9071 | 0.9388 | 0.9420 | 0.9102 | 0.9407 | 0.9437 |

| E-ID-CBS (PBSF) | 0.9332 | 0.9693 | 0.9610 | 0.9490 | 0.9775 | 0.9733 | 0.9366 | 0.9709 | 0.9634 | 0.9407 | 0.9733 | 0.9668 |

| V-S-E-ID (PBSF) | 0.9342 | 0.9610 | 0.9513 | 0.9466 | 0.9766 | 0.9640 | 0.9360 | 0.9706 | 0.9530 | 0.9389 | 0.9721 | 0.9552 |

| V-S-E-ID-CBS (PBSF) | 0.9146 | 0.9448 | 0.9457 | 0.9301 | 0.9555 | 0.9582 | 0.9186 | 0.9473 | 0.9484 | 0.9218 | 0.9496 | 0.9511 |

| {V,S,CBS} (SBSF) | 0.9477 | 0.9775 | 0.9731 | 0.9590 | 0.9836 | 0.9806 | 0.9496 | 0.9784 | 0.9744 | 0.9523 | 0.9799 | 0.9760 |

| {E,ID,CBS} (SBSF) | 0.9220 | 0.9609 | 0.9585 | 0.9380 | 0.9717 | 0.9692 | 0.9261 | 0.9636 | 0.9610 | 0.9291 | 0.9656 | 0.9631 |

| {V,S,E,ID} (SBSF) | 0.9337 | 0.9675 | 0.9516 | 0.9448 | 0.9741 | 0.9641 | 0.9357 | 0.9681 | 0.9531 | 0.9359 | 0.9680 | 0.9537 |

| {V,S,E,ID,CBS} (SBSF) | 0.9227 | 0.9647 | 0.9476 | 0.9436 | 0.9751 | 0.9621 | 0.9259 | 0.9666 | 0.9501 | 0.9309 | 0.9689 | 0.9532 |
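The classification tables report POA, PAA and PPR, which we read as the overall accuracy, average accuracy and precision rate from the acronym list. The following minimal Python sketch shows how such scores are commonly computed from a confusion matrix. The row/column convention and the definition of PR as per-class precision averaged over classes are assumptions, because the defining equations are not part of this section.

```python
def classification_scores(confusion):
    """Return (OA, AA, PR) from a square confusion matrix.

    Assumed convention (hypothetical): confusion[i][j] counts test pixels of
    true class i that were labelled as class j, and every class has test pixels.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n))
    # Per-class recall (accuracy within each true class).
    recalls = [confusion[i][i] / sum(confusion[i]) for i in range(n)]
    # Per-class precision; classes never predicted are skipped.
    col_sums = [sum(confusion[i][j] for i in range(n)) for j in range(n)]
    precisions = [confusion[j][j] / col_sums[j] for j in range(n) if col_sums[j] > 0]
    overall = correct / total
    average = sum(recalls) / n
    precision_rate = sum(precisions) / len(precisions)
    return overall, average, precision_rate


print(classification_scores([[80, 20], [5, 45]]))
```

OA weights every test pixel equally, whereas AA and PR weight every class equally. This explains why the two kinds of score can rank the same band subsets differently when class sizes are unbalanced.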

| BS and BSF Methods | EPF-B-c POA | EPF-B-c PAA | EPF-B-c PPR | EPF-G-c POA | EPF-G-c PAA | EPF-G-c PPR | EPF-B-g POA | EPF-B-g PAA | EPF-B-g PPR | EPF-G-g POA | EPF-G-g PAA | EPF-G-g PPR |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Full bands | 0.9862 | 0.9848 | 0.9818 | 0.9894 | 0.9901 | 0.9863 | 0.9866 | 0.9852 | 0.9829 | 0.9853 | 0.9837 | 0.9820 |

| V | 0.9055 | 0.9332 | 0.8676 | 0.9139 | 0.9408 | 0.8776 | 0.9055 | 0.9344 | 0.8672 | 0.9067 | 0.9365 | 0.8697 |

| S | 0.8852 | 0.9205 | 0.8487 | 0.8910 | 0.9222 | 0.8556 | 0.8852 | 0.9190 | 0.8487 | 0.8850 | 0.9197 | 0.8489 |

| E | 0.9055 | 0.9332 | 0.8676 | 0.9139 | 0.9408 | 0.8776 | 0.9055 | 0.9344 | 0.8672 | 0.9067 | 0.9365 | 0.8697 |

| ID | 0.6543 | 0.7688 | 0.6659 | 0.6657 | 0.7814 | 0.6707 | 0.6543 | 0.7695 | 0.6662 | 0.6523 | 0.7676 | 0.6593 |

| CBS | 0.7227 | 0.8507 | 0.7320 | 0.7402 | 0.8567 | 0.7398 | 0.7218 | 0.8488 | 0.7292 | 0.7177 | 0.8439 | 0.7226 |

| UBS | 0.9811 | 0.9829 | 0.9731 | 0.9859 | 0.9865 | 0.9808 | 0.9820 | 0.9833 | 0.9750 | 0.9806 | 0.9810 | 0.9735 |

| V-S-CBS (PBSF) | 0.9088 | 0.9482 | 0.8867 | 0.9245 | 0.9566 | 0.9017 | 0.9126 | 0.9497 | 0.8900 | 0.9109 | 0.9474 | 0.8888 |

| E-ID-CBS (PBSF) | 0.8831 | 0.9268 | 0.8537 | 0.8990 | 0.9375 | 0.8653 | 0.8838 | 0.9290 | 0.8531 | 0.8822 | 0.9277 | 0.8520 |

| V-S-E-ID (PBSF) | 0.8662 | 0.9301 | 0.8441 | 0.8757 | 0.9365 | 0.8499 | 0.8695 | 0.9318 | 0.8456 | 0.8628 | 0.9298 | 0.8412 |

| V-S-E-ID-CBS (PBSF) | 0.9178 | 0.9497 | 0.8947 | 0.9260 | 0.9567 | 0.9030 | 0.9186 | 0.9498 | 0.8954 | 0.9175 | 0.9472 | 0.8941 |

| {V,S,CBS} (SBSF) | 0.8848 | 0.9135 | 0.8481 | 0.9014 | 0.9256 | 0.8623 | 0.8880 | 0.9152 | 0.8505 | 0.8882 | 0.9138 | 0.8497 |

| {E,ID,CBS} (SBSF) | 0.8504 | 0.9101 | 0.8368 | 0.8615 | 0.9210 | 0.8488 | 0.8508 | 0.9092 | 0.8370 | 0.8517 | 0.9088 | 0.8391 |

| {V,S,E,ID} (SBSF) | 0.9055 | 0.9332 | 0.8676 | 0.9139 | 0.9408 | 0.8776 | 0.9055 | 0.9344 | 0.8672 | 0.9067 | 0.9365 | 0.8697 |

| {V,S,E,ID,CBS} (SBSF) | 0.9055 | 0.9332 | 0.8676 | 0.9139 | 0.9408 | 0.8776 | 0.9055 | 0.9344 | 0.8672 | 0.9067 | 0.9365 | 0.8697 |

| BS and BSF Methods | POA | PAA | PPR | Iteration Times |

|---|---|---|---|---|

| Full bands | 0.9697 | 0.9662 | 0.9446 | 13 |

| V | 0.9621 | 0.9587 | 0.9467 | 19 |

| S | 0.9622 | 0.9573 | 0.9392 | 19 |

| E | 0.9622 | 0.9584 | 0.9445 | 18 |

| ID | 0.9588 | 0.9569 | 0.9432 | 20 |

| CBS | 0.9608 | 0.9581 | 0.9382 | 17 |

| UBS | 0.9609 | 0.9609 | 0.9418 | 15 |

| V-S-CBS (PBSF) | 0.9595 | 0.9530 | 0.9331 | 17 |

| E-ID-CBS (PBSF) | 0.9640 | 0.9597 | 0.9417 | 19 |

| V-S-E-ID (PBSF) | 0.9595 | 0.9520 | 0.9330 | 17 |

| V-S-E-ID-CBS (PBSF) | 0.9601 | 0.9548 | 0.9385 | 17 |

| {V,S,CBS} (SBSF) | 0.9577 | 0.9525 | 0.9423 | 16 |

| {E,ID,CBS} (SBSF) | 0.9615 | 0.9601 | 0.9439 | 19 |

| {V,S,E,ID} (SBSF) | 0.9645 | 0.9570 | 0.9423 | 19 |

| {V,S,E,ID,CBS} (SBSF) | 0.9659 | 0.9603 | 0.9457 | 19 |

| BS and BSF Methods | POA | PAA | PPR | Iteration Times |

|---|---|---|---|---|

| Full bands | 0.8853 | 0.8868 | 0.6878 | 75 |

| V | 0.8764 | 0.8731 | 0.6898 | 77 |

| S | 0.8722 | 0.8736 | 0.6868 | 92 |

| E | 0.8763 | 0.8730 | 0.6898 | 77 |

| ID | 0.8690 | 0.8553 | 0.6870 | 100 |

| CBS | 0.8842 | 0.8844 | 0.6906 | 100 |

| UBS | 0.8836 | 0.8857 | 0.6876 | 82 |

| V-S-CBS (PBSF) | 0.8886 | 0.8817 | 0.6962 | 92 |

| E-ID-CBS (PBSF) | 0.8965 | 0.8881 | 0.7078 | 99 |

| V-S-E-ID (PBSF) | 0.8904 | 0.8783 | 0.6974 | 87 |

| V-S-E-ID-CBS (PBSF) | 0.8993 | 0.8893 | 0.6998 | 100 |

| {V,S,CBS} (SBSF) | 0.8900 | 0.8878 | 0.6966 | 99 |

| {E,ID,CBS} (SBSF) | 0.8917 | 0.8906 | 0.6816 | 84 |

| {V,S,E,ID} (SBSF) | 0.8764 | 0.8731 | 0.6898 | 77 |

| {V,S,E,ID,CBS} (SBSF) | 0.8764 | 0.8731 | 0.6898 | 77 |


| BS Methods | Selected Bands (p = 18) | |||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| V | 60 | 61 | 67 | 66 | 65 | 59 | 57 | 68 | 62 | 64 | 56 | 78 | 77 | 76 | 79 | 63 | 53 | 80 |

| S | 78 | 80 | 93 | 91 | 92 | 95 | 89 | 94 | 90 | 88 | 102 | 96 | 79 | 82 | 105 | 62 | 107 | 108 |

| E | 65 | 60 | 67 | 53 | 66 | 61 | 52 | 68 | 59 | 64 | 62 | 78 | 77 | 57 | 79 | 49 | 76 | 56 |

| ID | 154 | 157 | 156 | 153 | 150 | 158 | 145 | 164 | 163 | 160 | 142 | 144 | 148 | 143 | 141 | 152 | 155 | 135 |

| CBS | 62 | 77 | 63 | 61 | 13 | 91 | 30 | 69 | 76 | 56 | 38 | 45 | 16 | 20 | 39 | 34 | 24 | 47 |

| UBS | 1 | 10 | 19 | 28 | 37 | 46 | 55 | 64 | 73 | 82 | 91 | 100 | 109 | 118 | 127 | 136 | 145 | 154 |

| SBSF Methods | Fused Bands (p = 18) | |||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| {V,S} | 78 | 80 | 62 | 79 | 60 | 61 | 67 | 93 | 66 | 91 | 65 | 92 | 59 | 95 | 57 | 89 | 68 | 94 |

| {E,ID} | 65 | 154 | 60 | 157 | 67 | 156 | 53 | 153 | 66 | 150 | 61 | 158 | 52 | 145 | 68 | 164 | 59 | 163 |

| {V,S,CBS} | 62 | 78 | 61 | 77 | 80 | 63 | 91 | 76 | 56 | 79 | 60 | 67 | 93 | 66 | 13 | 65 | 92 | 59 |

| {E,ID,CBS} | 62 | 77 | 61 | 76 | 56 | 65 | 154 | 60 | 157 | 63 | 67 | 156 | 53 | 153 | 13 | 66 | 150 | 91 |

| {V,S,E,ID} | 78 | 62 | 79 | 60 | 65 | 61 | 80 | 67 | 53 | 66 | 59 | 57 | 68 | 64 | 56 | 77 | 76 | 154 |

| {V,S,E,ID,CBS} | 62 | 78 | 61 | 77 | 76 | 56 | 79 | 60 | 65 | 80 | 63 | 67 | 53 | 66 | 91 | 59 | 57 | 68 |

| PBSF Methods | Fused Bands (p = 18) | |||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| V-S | 78 | 80 | 62 | 79 | 60 | 61 | 67 | 93 | 66 | 91 | 65 | 92 | 59 | 95 | 57 | 89 | 68 | 94 |

| E-ID | 65 | 154 | 60 | 157 | 67 | 156 | 53 | 153 | 66 | 150 | 61 | 158 | 52 | 145 | 68 | 164 | 59 | 163 |

| V-S-CBS | 62 | 78 | 61 | 77 | 80 | 63 | 91 | 76 | 56 | 79 | 60 | 67 | 93 | 66 | 13 | 65 | 92 | 59 |

| E-ID-CBS | 62 | 77 | 61 | 76 | 56 | 65 | 154 | 60 | 157 | 63 | 67 | 156 | 53 | 153 | 13 | 66 | 150 | 91 |

| V-S-E-ID | 62 | 65 | 60 | 67 | 78 | 66 | 61 | 53 | 80 | 154 | 52 | 93 | 68 | 91 | 59 | 157 | 64 | 92 |

| V-S-E-ID-CBS | 62 | 154 | 78 | 157 | 65 | 156 | 80 | 153 | 60 | 150 | 93 | 158 | 61 | 145 | 91 | 164 | 67 | 163 |

| Various BS and BSF Methods | p = 18 | p = 9 | Last Pixel Found as the Fifth R Panel Pixel Using p = 18 |

|---|---|---|---|

| Full bands | p11, p312, p411, p521 | p11, p312, p521 | no |

| V | p11, p312, p521 | p312, p521 | no |

| S | p11, p22, p311, p412, p521 | p11, p311, p521 | 16th pixel, p412 |

| E | p11, p311, p521 | p311, p521 | no |

| ID | p11, p211, p412, p521 | p11, p211, p412, p521 | no |

| CBS | p11, p312, p411, p521 | p312, p521 | no |

| UBS | p11, p211, p311, p412, p521 | p11, p311, p521 | 13th pixel, p412 |

| V-S-CBS (PBSF) | p11, p312, p42, p521 | p412, p521 | no |

| E-ID-CBS (PBSF) | p11, p22, p312, p412, p521 | p11, p412, p521 | 16th pixel, p412 |

| V-S-E-ID (PBSF) | p11, p312, p412, p521 | p521 | no |

| V-S-E-ID-CBS (PBSF) | p11, p312, p412, p521 | p11, p521, p53 | no |

| {V,S,CBS} (SBSF) | p11, p312, p521 | p412 | no |

| {E,ID,CBS} (SBSF) | p11, p311, p412, p521 | p22, p521 | no |

| {V,S,E,ID} (SBSF) | p11, p312, p412, p521 | p412, p521 | no |

| {V,S,E,ID,CBS} (SBSF) | p11, p211, p412, p521 | p11, p312, p521 | no |
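The panel-pixel table lists panel pixels (p11, p312, ...) in the order in which they were found, for example "16th pixel, p412". ATGP appears in the acronym list and generates target pixels one at a time by orthogonal subspace projection. Assuming ATGP is the target finder used here, the following is a minimal NumPy sketch of the process. The function name `atgp` and the plain pixel-by-band array layout are our assumptions.

```python
import numpy as np


def atgp(pixels, num_targets):
    """Minimal ATGP sketch (Automated Target Generation Process).

    pixels: (N, L) array, one spectrum per row.
    Returns the row indices of the generated targets, in the order found.
    """
    x = np.asarray(pixels, dtype=float)
    num_bands = x.shape[1]
    # First target: the pixel with the largest squared norm.
    indices = [int(np.argmax(np.sum(x ** 2, axis=1)))]
    for _ in range(1, num_targets):
        u = x[indices].T                                 # (L, k) matrix of targets found so far
        # Projector onto the orthogonal complement of span(u).
        p_perp = np.eye(num_bands) - u @ np.linalg.pinv(u)
        residual = x @ p_perp                            # projected pixels, (N, L)
        # Next target: the pixel with the largest leftover energy.
        indices.append(int(np.argmax(np.sum(residual ** 2, axis=1))))
    return indices


demo = np.array([[1.0, 0, 0], [0, 2, 0], [0, 0, 3], [1, 1, 0]])
print(atgp(demo, 2))  # [2, 1]
```

Because each new target maximizes energy orthogonal to all previous ones, the position at which a panel pixel appears depends on the bands kept. This is one reason why different BS and BSF subsets find different panel pixels in the table.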

| BS Methods | Unmixed Error |

|---|---|

| Full bands | 222.09 |

| UBS | 245.58 |

| V | 268.71 |

| S | 211.27 |

| E | 296.72 |

| ID | 22.104 |

| CBS | 207.32 |

| V-S-CBS (PBSF) | 209.22 |

| E-ID-CBS (PBSF) | 195.27 |

| V-S-E-ID (PBSF) | 201.51 |

| V-S-E-ID-CBS (PBSF) | 96.203 |

| {V,S,CBS} (SBSF) | 181.70 |

| {E,ID,CBS} (SBSF) | 228.63 |

| {V,S,E,ID} (SBSF) | 263.62 |

| {V,S,E,ID,CBS} (SBSF) | 249.34 |
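This section does not define the error measure used in the unmixing-error table. As an illustration only, the NumPy sketch below computes the total residual norm of unconstrained least-squares unmixing over all pixels. The paper's experiments may instead use a constrained method, such as fully constrained least squares, together with a different error definition; the function name `unmixing_error` is hypothetical.

```python
import numpy as np


def unmixing_error(pixels, endmembers):
    """Total reconstruction error of unconstrained least-squares unmixing.

    pixels:     (N, L) array of pixel spectra.
    endmembers: (p, L) array of endmember signatures (e.g., found targets).
    Returns the sum over pixels of the Euclidean norm of the residual r - M a.
    """
    r = np.asarray(pixels, dtype=float)
    m = np.asarray(endmembers, dtype=float).T            # (L, p) signature matrix M
    # Least-squares abundances for every pixel at once: a = M^+ r.
    abundances = np.linalg.pinv(m) @ r.T                 # (p, N)
    residual = r.T - m @ abundances                      # (L, N)
    return float(np.sum(np.linalg.norm(residual, axis=0)))
```

Swapping in a constrained solver changes only the abundance step; the residual and its summation stay the same.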

| Data Set | PF = 10⁻¹ | PF = 10⁻² | PF = 10⁻³ | PF = 10⁻⁴ | PF = 10⁻⁵ |

|---|---|---|---|---|---|

| Purdue | 73/21 | 49/19 | 35/18 | 27/18 | 25/17 |

| Salinas | 32/33 | 28/24 | 25/21 | 21/21 | 20/20 |

| University of Pavia | 25/34 | 21/27 | 16/17 | 14/14 | 13/12 |
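Each cell of the VD table holds two estimates, for example 73/21 for Purdue at PF = 10⁻¹. HFC and NWHFC are the VD estimators named in the acronym list, but this section does not state which estimate comes first. The following NumPy sketch implements the HFC test as generally described: eigenvalues of the sample correlation and covariance matrices are compared with a Neyman-Pearson threshold set by PF. The function name `hfc_vd` and the asymptotic variance 2(λ̂² + λ²)/N are our assumptions, and the noise whitening of NWHFC is not included.

```python
import numpy as np
from statistics import NormalDist


def hfc_vd(pixels, false_alarm):
    """Sketch of the HFC estimate of virtual dimensionality (VD).

    pixels:      (N, L) array of pixel spectra.
    false_alarm: Neyman-Pearson false-alarm probability PF.
    Counts components whose correlation eigenvalue exceeds the matching
    covariance eigenvalue by more than a Gaussian threshold.
    """
    x = np.asarray(pixels, dtype=float)
    n = x.shape[0]
    corr = x.T @ x / n                                   # sample correlation matrix R
    centered = x - x.mean(axis=0)
    cov = centered.T @ centered / n                      # sample covariance matrix K
    # Eigenvalues of R and K, both sorted in descending order.
    lam_r = np.sort(np.linalg.eigvalsh(corr))[::-1]
    lam_k = np.sort(np.linalg.eigvalsh(cov))[::-1]
    # Assumed asymptotic standard deviation of the eigenvalue difference.
    sigma = np.sqrt(2.0 * (lam_r ** 2 + lam_k ** 2) / n)
    threshold = NormalDist().inv_cdf(1.0 - false_alarm) * sigma
    return int(np.sum(lam_r - lam_k > threshold))
```

A smaller PF raises the threshold, so the estimate can only stay the same or drop. This is consistent with the way every row of the VD table shrinks from PF = 10⁻¹ to PF = 10⁻⁵.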

| BS methods | Purdue Indian Pines (18 bands) | Salinas (21 bands) | University of Pavia (14 bands) |

|---|---|---|---|

| UBS | 1/13/25/37/49/61/73/85/97/109/121/133/145/157/169/181/193/205 | 1/11/21/31/41/51/61/71/81/91/101/111/121/131/141/151/161/171/181/191/201 | 1/8/15/22/29/36/43/50/57/64/71/78/85/92 |

| V | 29/28/27/26/25/30/42/32/41/24/33/23/31/43/22/44/39/21 | 45/46/42/47/44/48/52/51/53/41/54/55/50/56/49/57/43/58/40/32/34 | 91/88/90/89/87/92/93/95/94/96/82/86/83/97 |

| S | 28/27/26/29/30/123/121/122/25/120/124/119/24/129/131/127/130/125 | 46/45/74/52/55/71/72/56/53/73/54/76/75/57/48/70/50/77/44/51/47 | 63/62/64/61/65/60/59/66/67/58/68/69/48/57 |

| E | 41/42/43/44/39/29/28/48/49/25/51/50/52/27/45/31/24/38 | 42/47/46/45/44/51/41/55/53/52/48/54/56/49/50/57/35/40/58/36/37 | 91/90/88/92/89/87/95/93/94/96/82/83/86/97 |

| ID | 156/157/158/220/155/159/161/160/162/95/4/219/154/2/190/32/153/1 | 107/108/109/110/111/112/113/114/115/116/152/153/154/155/156/157/158/159/160/161/162 | 8/10/9/11/7/12/13/14/15/6/16/17/18/19 |

| CBS (LCMV-BCC) | 9/114/153/198/191/159/152/163/161/130/167/150/219/108/160/180/215/213 | 153/154/113/152/167/114/223/222/224/166/115/107/112/168/116/165/221/109/174/151/218 | 37/38/39/40/36/32/41/33/42/31/34/30/43/35 |

| V-S-CBS (PBSF) | 9/28/29/114/27/153/26/198/25/191/30/159/24/152/123/163/42/161 | 45/153/46/154/47/113/52/152/44/167/55/114/48/223/51/222/56/224/53/166/54 | 37/63/38/91/39/62/40/88/36/64/32/90/41/61 |

| E-ID-CBS (PBSF) | 153/159/161/9/156/41/157/42/158/114/220/43/155/44/160/198/39/162 | 42/107/47/153/46/154/45/109/44/113/51/152/41/112/55/114/53/115/52/116/48 | 8/91/37/90/10/88/38/92/9/89/39/87/11/95 |

| V-S-E-ID (PBSF) | 28/29/27/41/26/25/30/42/43/44/39/156/32/157/24/33/123/23 | 45/46/42/52/47/55/44/53/48/54/56/51/41/107/108/50/74/49/109/57/43 | 91/88/90/89/87/92/95/8/63/10/62/93/9/94 |

| V-S-E-ID-CBS (PBSF) | 28/156/29/157/27/158/26/220/30/155/25/159/24/161/41/160/42/162 | 113/107/112/109/45/153/47/154/46/44/152/51/167/52/114/55/223/53/222/48/224 | 37/91/38/90/39/88/8/40/36/63/10/32/41/92 |

| {V,S,CBS} (SBSF) | 28/29/27/26/25/30/24/130/9/114/153/198/191/123/159/42/121/152 | 45/46/47/52/44/55/48/51/56/53/54/50/57/153/154/42/74/113/152/167/71 | 37/63/91/38/62/88/39/64/90/40/61/89/36/65 |

| {E,ID,CBS} (SBSF) | 153/159/161/160/219/9/41/156/42/114/157/43/158/44/198/220/39/155 | 107/153/154/109/113/152/112/114/115/116/42/47/108/46/45/110/44/111/167/51/41 | 8/37/91/10/38/90/9/39/88/11/40/92/7/36 |

| {V,S,E,ID} (SBSF) | 28/29/27/25/24/41/42/26/43/44/30/39/32/31/156/157/158/220 | 45/46/47/52/44/55/48/51/56/53/54/50/57/42/41/49/40/58/107/108/74 | 91/88/90/89/92/87/93/95/94/96/82/83/86/97 |

| {V,S,E,ID,CBS} (SBSF) | 28/29/27/25/24/41/42/26/43/153/44/30/39/159/161/32/160/130 | 45/46/47/52/44/55/48/51/56/53/54/50/57/42/107/153/154/109/113/152/112 | 91/88/90/89/92/87/93/95/94/96/82/83/86/97 |
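The UBS rows are evenly spaced band indices starting at band 1, with strides of 12, 10 and 7 for the three data sets in this table (and 9 in the earlier selected-band table). A simple rule that reproduces them is a stride of floor(L/p), where L is the total number of bands. This rule and the band counts used in the checks (220, 224 and 103 for Purdue, Salinas and University of Pavia) are our assumptions, chosen because they agree with the listed rows; the function name `uniform_band_selection` is hypothetical.

```python
def uniform_band_selection(num_bands, p):
    """Pick p evenly spaced 1-based band indices, starting at band 1.

    Assumption: the stride is floor(num_bands / p), inferred from the UBS rows
    of the tables rather than taken from the paper's text.
    """
    if not 0 < p <= num_bands:
        raise ValueError("p must be between 1 and num_bands")
    step = num_bands // p
    return [1 + k * step for k in range(p)]


print(uniform_band_selection(103, 14))
```

With p = 18, any band count from 162 to 179 gives a stride of 9 and therefore the row 1, 10, 19, ..., 154 listed in the first selected-band table. UBS ignores the data entirely, which makes it a natural data-independent baseline for the BP-based and fused subsets.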

| BS and BSF Methods | EPF-B-c POA | EPF-B-c PAA | EPF-B-c PPR | EPF-G-c POA | EPF-G-c PAA | EPF-G-c PPR | EPF-B-g POA | EPF-B-g PAA | EPF-B-g PPR | EPF-G-g POA | EPF-G-g PAA | EPF-G-g PPR |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Full bands | 0.8973 | 0.9282 | 0.9177 | 0.8896 | 0.9313 | 0.9186 | 0.8938 | 0.9269 | 0.9146 | 0.8932 | 0.9389 | 0.9121 |

| V | 0.8264 | 0.9011 | 0.8816 | 0.8297 | 0.9029 | 0.8805 | 0.8276 | 0.8938 | 0.8814 | 0.8255 | 0.9040 | 0.8782 |

| S | 0.8054 | 0.8161 | 0.7873 | 0.8096 | 0.8409 | 0.8573 | 0.8051 | 0.8126 | 0.7834 | 0.8000 | 0.8406 | 0.8223 |

| E | 0.8361 | 0.9062 | 0.8901 | 0.8296 | 0.9107 | 0.8826 | 0.8371 | 0.9027 | 0.8878 | 0.8352 | 0.9129 | 0.8859 |

| ID | 0.6525 | 0.5372 | 0.5232 | 0.6441 | 0.5289 | 0.5123 | 0.6532 | 0.5360 | 0.5282 | 0.6499 | 0.5360 | 0.5357 |

| CBS | 0.8119 | 0.8630 | 0.8630 | 0.8000 | 0.8010 | 0.8519 | 0.8116 | 0.8231 | 0.8628 | 0.8024 | 0.8407 | 0.8572 |

| V-S-CBS (PBSF) | 0.8336 | 0.8587 | 0.8687 | 0.8278 | 0.8255 | 0.8009 | 0.8363 | 0.8577 | 0.8698 | 0.8315 | 0.8526 | 0.8693 |

| E-ID-CBS (PBSF) | 0.7698 | 0.8091 | 0.7945 | 0.7473 | 0.7879 | 0.7728 | 0.7658 | 0.8076 | 0.7926 | 0.7650 | 0.8064 | 0.7949 |

| V-S-E-ID (PBSF) | 0.8315 | 0.9153 | 0.8836 | 0.8249 | 0.9078 | 0.8725 | 0.8321 | 0.9130 | 0.8830 | 0.8239 | 0.9045 | 0.8734 |

| V-S-E-ID-CBS (PBSF) | 0.6825 | 0.7197 | 0.7449 | 0.6623 | 0.6884 | 0.7266 | 0.6794 | 0.6865 | 0.7408 | 0.6791 | 0.7237 | 0.7403 |

| {V,S,CBS} (SBSF) | 0.8540 | 0.9036 | 0.8964 | 0.8529 | 0.8969 | 0.8942 | 0.8506 | 0.8881 | 0.8923 | 0.8531 | 0.9139 | 0.8935 |

| {E,ID,CBS} (SBSF) | 0.7793 | 0.7980 | 0.8099 | 0.7541 | 0.7733 | 0.7330 | 0.7710 | 0.7916 | 0.7991 | 0.7760 | 0.8059 | 0.8102 |

| {V,S,E,ID} (SBSF) | 0.7771 | 0.8484 | 0.8495 | 0.7704 | 0.8409 | 0.8247 | 0.7740 | 0.8491 | 0.8510 | 0.7761 | 0.8461 | 0.8441 |

| {V,S,E,ID,CBS} (SBSF) | 0.8300 | 0.8884 | 0.8677 | 0.8134 | 0.8680 | 0.8451 | 0.8279 | 0.8827 | 0.8483 | 0.8205 | 0.8814 | 0.8654 |

| BS and BSF Methods | POA | PAA | PPR | Iteration Times |

|---|---|---|---|---|

| Full bands | 0.9650 | 0.9673 | 0.9018 | 24 |

| V | 0.9715 | 0.9767 | 0.8909 | 30 |

| S | 0.9717 | 0.9750 | 0.8826 | 29 |

| E | 0.9700 | 0.9736 | 0.8940 | 30 |

| ID | 0.9728 | 0.9762 | 0.8940 | 31 |

| CBS | 0.9729 | 0.9791 | 0.8871 | 30 |

| UBS | 0.9699 | 0.9753 | 0.8852 | 29 |

| V-S-CBS (PBSF) | 0.9738 | 0.9745 | 0.8822 | 30 |

| E-ID-CBS (PBSF) | 0.9720 | 0.9760 | 0.8873 | 30 |

| V-S-E-ID (PBSF) | 0.9742 | 0.9773 | 0.8833 | 30 |

| V-S-E-ID-CBS (PBSF) | 0.9751 | 0.9760 | 0.8925 | 33 |

| {V,S,CBS} (SBSF) | 0.9701 | 0.9729 | 0.8781 | 27 |

| {E,ID,CBS} (SBSF) | 0.9737 | 0.9762 | 0.8912 | 31 |

| {V,S,E,ID} (SBSF) | 0.9723 | 0.9750 | 0.8953 | 31 |

| {V,S,E,ID,CBS} (SBSF) | 0.9701 | 0.9767 | 0.8867 | 31 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, Y.; Wang, L.; Xie, H.; Chang, C.-I. Fusion of Various Band Selection Methods for Hyperspectral Imagery. Remote Sens. 2019, 11, 2125. https://doi.org/10.3390/rs11182125
