Combing Triple-Part Features of Convolutional Neural Networks for Scene Classification in Remote Sensing

Abstract

1. Introduction

2. Related Works

2.1. Convolutional Neural Network (CNN)

2.2. Bag-of-Visual-Words (BoVW)

2.3. Local Binary Pattern (LBP) Descriptor

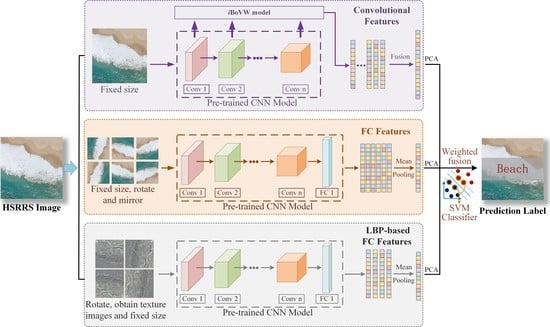

3. Proposed Framework

3.1. Convolutional Features

3.2. Features from the Fully Connected Layer

3.3. CNN-Based LBP Features

3.4. Feature Fusion and Classification

4. Experiments and Discussion

4.1. Dataset Description

4.2. Experimental Setup

4.3. Parameter Evaluation

4.4. Comparison and Analysis of Proposed Methods

4.5. Comparisons with the Most Recent Methods

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Cheng, G.; Han, J.W.; Lu, X.Q. Remote sensing image scene classification: Benchmark and state of the art. Proc. IEEE 2017, 105, 1865–1883.

- Zhou, W.X.; Newsam, S.; Li, C.M.; Shao, Z.F. PatternNet: A benchmark dataset for performance evaluation of remote sensing image retrieval. ISPRS J. Photogramm. Remote Sens. 2018, 145, 197–209.

- Zhang, F.; Du, B.; Zhang, L.P. Scene Classification via a Gradient Boosting Random Convolutional Network Framework. IEEE Trans. Geosci. Remote Sens. 2016, 54, 1793–1802.

- Wang, Q.; Liu, S.T.; Chanussot, J.; Li, X.L. Scene Classification with Recurrent Attention of VHR Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 1155–1167.

- Pham, M.T.; Mercier, G.; Regniers, O.; Michel, J. Texture Retrieval from VHR Optical Remote Sensed Images Using the Local Extrema Descriptor with Application to Vineyard Parcel Detection. Remote Sens. 2016, 8, 368.

- Napoletano, P. Visual descriptors for content-based retrieval of remote-sensing images. Int. J. Remote Sens. 2018, 39, 1343–1376.

- Yang, Y.; Newsam, S. Geographic image retrieval using local invariant features. IEEE Trans. Geosci. Remote Sens. 2013, 51, 818–832.

- Sun, W.W.; Wang, R.S. Fully Convolutional Networks for Semantic Segmentation of Very High Resolution Remotely Sensed Images Combined With DSM. IEEE Geosci. Remote Sens. Lett. 2018, 15, 474–478.

- Hu, T.Y.; Yang, J.; Li, X.C.; Gong, P. Mapping Urban Land Use by Using Landsat Images and Open Social Data. Remote Sens. 2016, 8, 151.

- Huang, B.; Zhao, B.; Song, Y.M. Urban land-use mapping using a deep convolutional neural network with high spatial resolution multispectral remote sensing imagery. Remote Sens. Environ. 2018, 214, 73–86.

- Zhong, Y.F.; Han, X.B.; Zhang, L.P. Multi-class geospatial object detection based on a position-sensitive balancing framework for high spatial resolution remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2018, 138, 281–294.

- Deng, Z.P.; Sun, H.; Zhou, S.L.; Zhao, J.P.; Lei, L.; Zou, H.X. Multi-scale object detection in remote sensing imagery with convolutional neural networks. ISPRS J. Photogramm. Remote Sens. 2018, 145, 3–22.

- Cheng, G.; Han, J.W.; Zhou, P.C.; Guo, L. Multi-class geospatial object detection and geographic image classification based on collection of part detectors. ISPRS J. Photogramm. Remote Sens. 2014, 98, 119–132.

- Manfreda, S.; McCabe, M.F.; Miller, P.E.; Lucas, R.; Pajuelo Madrigal, V.; Mallinis, G.; Ben Dor, E.; Helman, D.; Estes, L.; Ciraolo, G.; et al. On the use of unmanned aerial systems for environmental monitoring. Remote Sens. 2018, 10, 641.

- Khan, N.; Chaudhuri, U.; Banerjee, B.; Chaudhuri, S. Graph convolutional network for multi-label VHR remote sensing scene recognition. Neurocomputing 2019, 357, 36–46.

- He, N.J.; Fang, L.Y.; Li, S.T.; Plaza, A.; Plaza, J. Remote Sensing Scene Classification Using Multilayer Stacked Covariance Pooling. IEEE Trans. Geosci. Remote Sens. 2018, 56, 6899–6910.

- Liu, N.; Wan, L.H.; Zhang, Y.; Zhou, T.; Huo, H.; Fang, T. Exploiting Convolutional Neural Networks with Deeply Local Description for Remote Sensing Image Classification. IEEE Access 2018, 6, 11215–11228.

- Jin, P.; Xia, G.S.; Hu, F.; Lu, Q.K.; Zhang, L.P. AID++: An Updated Version of AID on Scene Classification. In Proceedings of the 2018 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Valencia, Spain, 22–27 July 2018; pp. 4721–4724.

- Hu, F.; Xia, G.S.; Yang, W.; Zhang, L.P. Recent advances and opportunities in scene classification of aerial images with deep models. In Proceedings of the 2018 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Valencia, Spain, 22–27 July 2018; pp. 4371–4374.

- Romero, A.; Gatta, C.; Camps-Valls, G. Unsupervised Deep Feature Extraction for Remote Sensing Image Classification. IEEE Trans. Geosci. Remote Sens. 2016, 54, 1349–1362.

- Yu, Y.L.; Liu, F.X. Dense connectivity based two-stream deep feature fusion framework for aerial scene classification. Remote Sens. 2018, 10, 1158.

- Xia, G.S.; Hu, J.; Hu, F.; Shi, B.; Bai, X.; Zhong, Y.; Zhang, L.; Lu, X. AID: A benchmark data set for performance evaluation of aerial scene classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3965–3981.

- Luo, B.; Jiang, S.J.; Zhang, L.P. Indexing of remote sensing images with different resolutions by multiple features. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 1899–1912.

- Yang, Y.; Newsam, S. Comparing SIFT descriptors and Gabor texture features for classification of remote sensed imagery. In Proceedings of the 15th IEEE International Conference on Image Processing (ICIP 2008), San Diego, CA, USA, 12–15 October 2008; pp. 1852–1855.

- Yang, Y.; Newsam, S. Bag-of-visual-words and spatial extensions for land-use classification. In Proceedings of the 18th SIGSPATIAL International Conference on Advances in Geographic Information Systems, San Jose, CA, USA, 2–5 November 2010; pp. 270–279.

- Zhu, Q.Q.; Zhong, Y.F.; Zhao, B.; Xia, G.S.; Zhang, L.P. Bag-of-visual-words scene classifier with local and global features for high spatial resolution remote sensing imagery. IEEE Geosci. Remote Sens. Lett. 2016, 13, 747–751.

- Zhao, L.J.; Tang, P.; Huo, L.Z. Land-use scene classification using a concentric circle-structured multiscale bag-of-visual-words model. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 4620–4631.

- Wu, H.; Liu, B.Z.; Su, W.H.; Zhang, W.; Sun, J.G. Hierarchical coding vectors for scene level land-use classification. Remote Sens. 2016, 8, 436.

- Qi, K.L.; Wu, H.Y.; Shen, C.; Gong, J.Y. Land-Use Scene Classification in High-Resolution Remote Sensing Images Using Improved Correlatons. Remote Sens. Lett. 2015, 12, 2403–2407.

- Perronnin, F.; Sánchez, J.; Mensink, T. Improving the Fisher Kernel for Large-Scale Image Classification. In Proceedings of the European Conference on Computer Vision, Crete, Greece, 5–11 September 2010; pp. 143–156.

- Wang, G.L.; Fan, B.; Xiang, S.M.; Pan, C.H. Aggregating rich hierarchical features for scene classification in remote sensing imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 4104–4115.

- Lu, X.Q.; Zheng, X.T.; Yuan, Y. Remote Sensing Scene Classification by Unsupervised Representation Learning. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5148–5157.

- Zou, J.; Li, W.; Chen, C.; Du, Q. Scene classification using local and global features with collaborative representation fusion. Inf. Sci. 2018, 348, 209–226.

- Fernandez-Beltran, R.; Haut, J.M.; Paoletti, M.E.; Plaza, J.; Plaza, A.; Pla, F. Remote Sensing Image Fusion Using Hierarchical Multimodal Probabilistic Latent Semantic Analysis. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 4982–4993.

- Zhong, Y.F.; Zhu, Q.Q.; Zhang, L.P. Scene Classification Based on the Multifeature Fusion Probabilistic Topic Model for High Spatial Resolution Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2015, 53, 6207–6222.

- Ball, J.E.; Anderson, D.T.; Chan, C.S. Comprehensive survey of deep learning in remote sensing: Theories, tools, and challenges for the community. J. Appl. Remote Sens. 2017, 11, 042609.

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep Learning in Remote Sensing: A Comprehensive Review and List of Resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

- Yang, H.P.; Yu, B.; Luo, J.C.; Chen, F. Semantic segmentation of high spatial resolution images with deep neural networks. GISci. Remote Sens. 2019, 56, 749–768.

- Wang, Q.; Yuan, Z.H.; Du, Q.; Li, X.L. GETNET: A General End-to-End 2-D CNN Framework for Hyperspectral Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2019, 57, 3–13.

- Yuan, Q.Q.; Zhang, Q.; Li, J.; Shen, H.F.; Zhang, L.P. Hyperspectral Image Denoising Employing a Spatial-Spectral Deep Residual Convolutional Neural Network. IEEE Trans. Geosci. Remote Sens. 2019, 57, 1205–1218.

- Jian, L.; Gao, F.H.; Ren, P.; Song, Y.Q.; Luo, S.H. A Noise-Resilient Online Learning Algorithm for Scene Classification. Remote Sens. 2018, 10, 1836.

- Scott, G.J.; Hagan, K.C.; Marcum, R.A.; Hurt, J.A.; Anderson, D.T.; Davis, C.H. Enhanced Fusion of Deep Neural Networks for Classification of Benchmark High-Resolution Image Data Sets. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1451–1455.

- Zhang, W.; Tang, P.; Zhao, L.J. Remote Sensing Image Scene Classification Using CNN-CapsNet. Remote Sens. 2019, 11, 494.

- Chen, J.B.; Wang, C.Y.; Ma, Z.; Chen, J.S.; He, D.X.; Ackland, S. Remote Sensing Scene Classification Based on Convolutional Neural Networks Pre-Trained Using Attention-Guided Sparse Filters. Remote Sens. 2018, 10, 290.

- Liu, Y.S.; Suen, C.Y.; Liu, Y.B.; Ding, L.W. Scene Classification Using Hierarchical Wasserstein CNN. IEEE Trans. Geosci. Remote Sens. 2019, 57, 2494–2509.

- Othman, E.; Bazi, Y.; Melgani, F.; Alhichri, H.; Alajlan, N.; Zuair, M. Domain Adaptation Network for Cross-Scene Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 4441–4456.

- Liu, Y.S.; Huang, C. Scene Classification via Triplet Networks. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 220–237.

- Zou, Q.; Ni, L.H.; Zhang, T.; Wang, Q. Deep Learning Based Feature Selection for Remote Sensing Scene Classification. IEEE Geosci. Remote Sens. Lett. 2015, 12, 2321–2325.

- Penatti, O.A.; Nogueira, K.; dos Santos, J.A. Do deep features generalize from everyday objects to remote sensing and aerial scenes domains? In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Boston, MA, USA, 7 June 2015; pp. 44–51.

- Hu, F.; Xia, G.S.; Hu, J.W.; Zhang, L.P. Transferring Deep Convolutional Neural Networks for the Scene Classification of High-Resolution Remote Sensing Imagery. Remote Sens. 2015, 7, 14680–14707.

- Liu, B.D.; Jie, M.; Xie, W.Y.; Shao, S.; Li, Y.; Wang, Y.J. Weighted Spatial Pyramid Matching Collaborative Representation for Remote-Sensing-Image Scene Classification. Remote Sens. 2019, 11, 518.

- Liu, B.D.; Xie, W.Y.; Meng, J.; Li, Y.; Wang, Y.J. Hybrid collaborative representation for remote-sensing image scene classification. Remote Sens. 2018, 10, 1934.

- Flores, E.; Zortea, M.; Scharcanski, J. Dictionaries of deep features for land-use scene classification of very high spatial resolution images. Pattern Recognit. 2019, 89, 32–44.

- Li, E.Z.; Xia, J.S.; Du, P.J.; Lin, C.; Samat, A. Integrating Multilayer Features of Convolutional Neural Networks for Remote Sensing Scene Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5653–5665.

- Chaib, S.; Liu, H.; Gu, Y.F.; Yao, H.X. Deep Feature Fusion for VHR remote sensing scene classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 4775–4784.

- Anwer, R.M.; Khan, F.S.; van de Weijer, J.; Molinier, M.; Laaksonen, J. Binary patterns encoded convolutional neural networks for texture recognition and remote sensing scene classification. ISPRS J. Photogramm. Remote Sens. 2018, 138, 74–85.

- Gu, J.X.; Wang, Z.H.; Kuen, J.; Ma, L.Y.; Shahroudy, A.; Shuai, B.; Liu, T.; Wang, X.X.; Wang, G.; Cai, J.F.; et al. Recent Advances in Convolutional Neural Networks. Pattern Recognit. 2018, 77, 354–377.

- Yang, X.; He, H.B.; Wei, Q.L.; Luo, B. Reinforcement learning for robust adaptive control of partially unknown nonlinear systems subject to unmatched uncertainties. Inf. Sci. 2018, 463, 307–322.

- Jia, Y.; Shelhamer, E.; Donahue, J.; Karayev, S.; Long, J.; Girshick, R.; Guadarrama, S.; Darrell, T. Caffe: Convolutional architecture for fast feature embedding. In Proceedings of the 22nd ACM International Conference on Multimedia, Orlando, FL, USA, 3–7 November 2014; pp. 675–678.

- Lima, E.; Sun, X.; Dong, J.Y.; Wang, H.; Yang, Y.T.; Liu, L.P. Learning and Transferring Convolutional Neural Network Knowledge to Ocean Front Recognition. IEEE Geosci. Remote Sens. Lett. 2017, 14, 354–358.

- Zhao, F.A.; Mu, X.D.; Yang, Z.; Yi, Z.X. Hierarchical feature coding model for high-resolution satellite scene classification. J. Appl. Remote Sens. 2019, 13, 016520.

- Ahonen, T.; Hadid, A.; Pietikainen, M. Face description with local binary patterns: Application to face recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 2037–2041.

- Li, W.; Chen, C.; Su, H.J.; Du, Q. Local Binary Patterns and Extreme Learning Machine for Hyperspectral Imagery Classification. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3681–3693.

- Huang, H.; Li, Z.Y.; Pan, Y.S. Multi-Feature Manifold Discriminant Analysis for Hyperspectral Image Classification. Remote Sens. 2019, 11, 651.

- Yang, F.; Xu, Q.Z.; Li, B. Ship Detection From Optical Satellite Images Based on Saliency Segmentation and Structure-LBP Feature. IEEE Geosci. Remote Sens. Lett. 2017, 14, 602–606.

- Fan, R.E.; Chang, K.W.; Hsieh, C.J.; Wang, X.R.; Lin, C.J. LIBLINEAR: A library for large linear classification. J. Mach. Learn. Res. 2008, 9, 1871–1874.

- Vedaldi, A.; Lenc, K. MatConvNet: Convolutional neural networks for MATLAB. In Proceedings of the 23rd ACM International Conference on Multimedia, Brisbane, Australia, 26–30 October 2015; ACM: New York, NY, USA, 2015; pp. 689–692.

- Levi, G.; Hassner, T. Emotion recognition in the wild via convolutional neural networks and mapped binary patterns. In Proceedings of the 2015 ACM on International Conference on Multimodal Interaction, Seattle, WA, USA, 9–13 November 2015; pp. 503–510.

- Negrel, R.; Picard, D.; Gosselin, P.H. Evaluation of second-order visual features for land-use classification. In Proceedings of the 2014 12th International Workshop on Content-Based Multimedia Indexing (CBMI 2014), Klagenfurt, Austria, 18–20 June 2014; pp. 1–5.

- Huang, L.H.; Chen, C.; Li, W.; Du, Q. Remote sensing image scene classification using multi-scale completed local binary patterns and fisher vectors. Remote Sens. 2016, 8, 483.

- Nogueira, K.; Penatti, O.A.B.; dos Santos, J.A. Towards better exploiting convolutional neural networks for remote sensing scene classification. Pattern Recognit. 2017, 61, 539–556.

- Ji, W.J.; Li, X.L.; Lu, X.Q. Bidirectional Adaptive Feature Fusion for Remote Sensing Scene Classification. In Proceedings of the Second CCF Chinese Conference (CCCV 2017), Tianjin, China, 11–14 October 2017; pp. 486–497.

- Weng, Q.; Mao, Z.Y.; Lin, J.W.; Guo, W.Z. Land-use classification via extreme learning classifier based on deep convolutional features. IEEE Geosci. Remote Sens. Lett. 2017, 14, 704–708.

- Bian, X.Y.; Chen, C.; Tian, L.; Du, Q. Fusing local and global features for high-resolution scene classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 2889–2901.

- Qi, K.L.; Yang, C.; Guan, Q.F.; Wu, H.Y.; Gong, J.Y. A Multiscale Deeply Described Correlatons-Based Model for Land-Use Scene Classification. Remote Sens. 2017, 9, 917.

- Yuan, B.H.; Li, S.J.; Li, N. Multiscale deep features learning for land-use scene recognition. J. Appl. Remote Sens. 2018, 12, 015010.

- Liu, Q.S.; Hang, R.L.; Song, H.H.; Li, Z. Learning Multiscale Deep Features for High-Resolution Satellite Image Scene Classification. IEEE Trans. Geosci. Remote Sens. 2018, 56, 117–126.

- Liu, Y.S.; Liu, Y.B.; Ding, L.W. Scene Classification Based on Two-Stage Deep Feature Fusion. IEEE Geosci. Remote Sens. Lett. 2018, 15, 183–186.

- Yu, Y.; Liu, F. Aerial Scene Classification via Multilevel Fusion Based on Deep Convolutional Neural Networks. IEEE Geosci. Remote Sens. Lett. 2018, 15, 287–291.

| Name | Number of Images | Name | Number of Images | Name | Number of Images |
|---|---|---|---|---|---|
| airport | 360 | farmland | 370 | port | 380 |
| bare land | 310 | forest | 250 | railway station | 260 |
| baseball field | 220 | industrial | 390 | resort | 290 |
| beach | 400 | meadow | 280 | river | 410 |
| bridge | 360 | medium residential | 290 | school | 300 |
| center | 260 | mountain | 340 | sparse residential | 300 |
| church | 240 | park | 350 | square | 330 |
| commercial | 350 | parking | 390 | stadium | 290 |
| dense residential | 410 | playground | 370 | storage tanks | 360 |
| desert | 300 | pond | 420 | viaduct | 420 |

| Method | Year | Classification Accuracy (%) |
|---|---|---|
| VLAD [69] | 2014 | 92.50 |
| VLAT [69] | 2014 | 94.30 |
| MS-CLBP + FV [70] | 2016 | 93.00 ± 1.20 |
| OverFeat [71] | 2017 | 90.91 ± 1.19 |
| GoogLeNet [22] | 2017 | 94.31 ± 0.89 |
| CaffeNet [22] | 2017 | 95.02 ± 0.81 |
| VGG-VD-16 [22] | 2017 | 95.21 ± 1.20 |
| Bidirectional adaptive feature fusion [72] | 2017 | 95.48 |
| CNN-ELM [73] | 2017 | 95.62 ± 0.32 |
| salMLBP-CLM [74] | 2017 | 95.75 ± 0.80 |
| TEX-Net-LF [56] | 2017 | 96.62 ± 0.49 |
| MDDC [75] | 2017 | 96.92 ± 0.57 |
| CaffeNet (conv1–5 + fc1) [54] | 2017 | 97.76 ± 0.46 |
| DCA by concatenation [55] | 2017 | 96.90 ± 0.56 |
| DCA by addition [55] | 2017 | 96.90 ± 0.09 |
| DAN (with adaptation) [46] | 2017 | 96.51 ± 0.36 |
| SSF-AlexNet [44] | 2018 | 92.43 |
| Aggregate strategy 1 [17] | 2018 | 97.28 |
| Aggregate strategy 2 [17] | 2018 | 97.40 |
| LASC-CNN (multiscale) [76] | 2018 | 97.14 |
| SPP-net + MKL [77] | 2018 | 96.38 ± 0.92 |
| VGG19 + Hybrid-KCRC (RBF) [52] | 2018 | 96.26 |
| pre-trained ResNet-50 + SRC [53] | 2019 | 96.67 |
| VGG19 + SPM-CRC [51] | 2019 | 96.02 |
| VGG19 + WSPM-CRC [51] | 2019 | 96.14 |
| CTFCNN | Ours | 98.44 ± 0.58 |

| Method | Year | Classification Accuracy (%) |
|---|---|---|
| MS-CLBP + FV [70] | 2017 | 86.48 ± 0.27 |
| GoogLeNet [22] | 2017 | 86.39 ± 0.55 |
| VGG-VD-16 [22] | 2017 | 89.64 ± 0.36 |
| CaffeNet [22] | 2017 | 89.53 ± 0.31 |
| DCA with concatenation [55] | 2017 | 89.71 ± 0.33 |
| Fusion by concatenation [55] | 2017 | 91.86 ± 0.28 |
| Fusion by addition [55] | 2017 | 91.87 ± 0.36 |
| Bidirectional adaptive feature fusion [72] | 2017 | 93.56 |
| salMLBP-CLM [74] | 2017 | 89.76 ± 0.45 |
| TEX-Net-LF [56] | 2017 | 92.96 ± 0.18 |
| Converted CaffeNet [78] | 2018 | 92.17 ± 0.31 |
| Two-stage deep feature fusion [78] | 2018 | 94.65 ± 0.33 |
| Multilevel fusion [79] | 2018 | 94.17 ± 0.32 |
| ARCNet-VGG16 [4] | 2019 | 93.10 ± 0.55 |
| VGG19 + Hybrid-KCRC (RBF) [52] | 2018 | 91.82 |
| VGG-16-CapsNet [43] | 2019 | 94.74 ± 0.17 |
| VGG19 + SPM-CRC [51] | 2019 | 92.55 |
| VGG19 + WSPM-CRC [51] | 2019 | 92.57 |
| CTFCNN | Ours | 94.91 ± 0.24 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Huang, H.; Xu, K. Combing Triple-Part Features of Convolutional Neural Networks for Scene Classification in Remote Sensing. Remote Sens. 2019, 11, 1687. https://doi.org/10.3390/rs11141687
