Machine Learning Approaches for Detecting Tropical Cyclone Formation Using Satellite Data

Abstract

1. Introduction

2. Data and Methods

2.1. Data and Preprocessing

2.2. Sampling

2.3. Model Construction

2.3.1. Linear Discriminant Analysis (LDA)

2.3.2. Decision Trees (DT)

2.3.3. Random Forests (RF)

2.3.4. Support Vector Machines (SVM)

2.4. Verification Methods

3. Results

3.1. Model Calibration

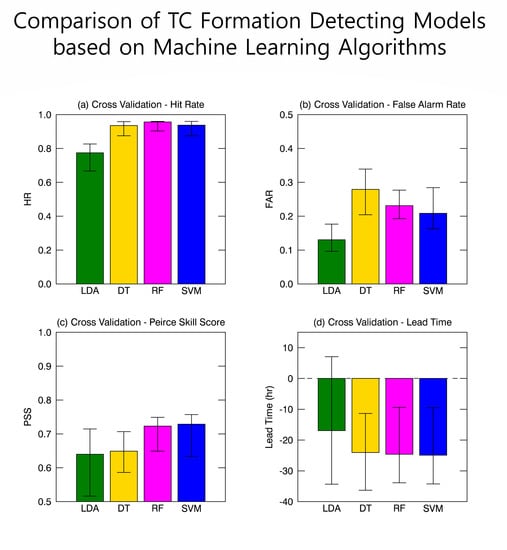

3.2. Verification

4. Discussions

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Predictor | Description |
|---|---|
| wind_ave | Average wind speed over the disturbance |
| wind_cv_fix | Circular variance (CV) of the wind, measuring the degree of symmetry of the circulation within a fixed window |
| wind_cv_mv | Maximum CV value found by moving a small () window over a larger area |
| wind_ci | Clumpiness Index (CI) of wind speeds over 15 m s⁻¹ in a large area |
| wind_pladj | Percentage of Like Adjacency (PLADJ) of wind speeds over 15 m s⁻¹ in a large area |
| rain_ave | Average rain rate near the center of the disturbance |
| rain_ci | Clumpiness Index (CI) of rain rates exceeding 5 mm h⁻¹ in a large area |
| rain_pladj | Percentage of Like Adjacency (PLADJ) of rain rates exceeding 5 mm h⁻¹ in a large area |
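
The circular variance behind the CV predictors can be illustrated with the standard directional-statistics definition, one minus the mean resultant length of the unit wind-direction vectors. The sketch below assumes gridded zonal and meridional wind components as input; the function name `circular_variance` and the idealized test fields are hypothetical, and this is not necessarily the exact formulation used by the authors.

```python
import numpy as np

def circular_variance(u, v):
    """Circular variance of wind direction: 1 - mean resultant length.

    u, v: arrays of zonal and meridional wind components (assumed inputs).
    Values near 1 mean directions are spread evenly around the circle
    (as in a symmetric closed circulation); values near 0 mean the flow
    is nearly unidirectional.
    """
    u = np.asarray(u, dtype=float).ravel()
    v = np.asarray(v, dtype=float).ravel()
    speed = np.hypot(u, v)
    # Skip missing values and calm cells, where direction is undefined.
    valid = np.isfinite(speed) & (speed > 0)
    if not valid.any():
        return np.nan
    # Unit direction vectors for each valid cell.
    cos_t = u[valid] / speed[valid]
    sin_t = v[valid] / speed[valid]
    resultant = np.hypot(cos_t.mean(), sin_t.mean())
    return 1.0 - resultant

# Idealized symmetric vortex on a small grid: u = -y, v = x.
y, x = np.mgrid[-2:3, -2:3].astype(float)
cv_vortex = circular_variance(-y, x)

# Uniform westerly flow over the same grid.
cv_uniform = circular_variance(np.ones_like(x), np.zeros_like(x))
```

In this idealized setting the symmetric vortex yields a CV close to 1 and the uniform flow a CV close to 0, which is why a high CV is read as a sign of an organized circulation.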

| constant | | | | |
|---|---|---|---|---|
| Average | 0.321 | 5.223 | 0.048 | 8.776 |
| Standard deviation | 4.517 | 0.806 | 0.124 | 1.215 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kim, M.; Park, M.-S.; Im, J.; Park, S.; Lee, M.-I. Machine Learning Approaches for Detecting Tropical Cyclone Formation Using Satellite Data. Remote Sens. 2019, 11, 1195. https://doi.org/10.3390/rs11101195
