A Comprehensive Evaluation of Approaches for Built-Up Area Extraction from Landsat OLI Images Using Massive Samples

Abstract

1. Introduction

1.1. Feature Engineering versus Feature Learning

1.2. Pixel-Based versus Patch-Based Classification

1.3. Built-Up Area Extraction from Medium-Resolution Images

2. Study Site and Data

2.1. Study Area and OLI Image

2.2. Massive Samples Automatically Selected from Built-Up Production

3. Research Methods

3.1. Feature Engineering

3.1.1. Pixel-Based Classification

3.1.2. Patch-Based Classification

3.1.3. Classification Algorithm

3.2. Feature Learning

3.2.1. CNN_1D Classification

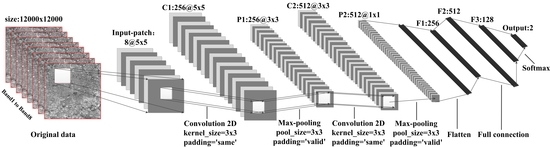

3.2.2. CNN_2D Classification

4. Experimental Results and Evaluation

4.1. Feature Engineering and Feature Learning

4.2. Single Pixel and Image Patch

4.3. Classification Strategy

4.4. Time Complexity

5. Discussion

5.1. Support Vectors of SVM

5.2. CNN_2D versus VGG16

6. Conclusions

- (1) The classification accuracy based on feature learning is generally better than that based on feature engineering. However, within feature engineering, when the neighborhood of the original eight bands is taken into account and the classifier is RF, the overall accuracy reaches 90%, which is comparable to the result of CNN_2D.

- (2) Classification based on image patches is more accurate than classification based on single pixels; however, the feature dimension of an image patch is large, and the features may be redundant. When the number of training samples is large, a more complex classification model is needed. CNNs based on image patches have a significant advantage over CNNs based on single pixels (overall accuracy of 0.901 for CNN_2D versus 0.823 for CNN_1D). In the results of CNN_2D, water and vegetation inside densely built areas can be discriminated from built-up areas in the Landsat 8 images. Meanwhile, in the suburbs, small built-up areas and narrow roads become distinguishable from the background.

- (3) Compared with traditional machine learning algorithms, such as SVM, RF, and AdaBoost, CNN has the advantages of autonomous learning, stability, and robustness. The classification accuracy of P5-RF and P7-RF (overall accuracy of 0.903 and 0.906) differs little from that of CNN_2D (0.901) and is far superior to the other classification results. The accuracy of CNN_2D is significantly better than that of VGG16 (overall accuracy of 0.806 for VGG16-PCA and 0.790 for VGG16-RGB). L2 regularization can eliminate the noise and scattering of the original eight-band data, effectively suppress SVM overfitting, and significantly reduce the number of support vectors.

Author Contributions

Funding

Conflicts of Interest


| Landsat 8 OLI Band Index | OLI Band Name | OLI Bandwidth (μm) | OLI Resolution (m) | Landsat 7 ETM+ Band Index | ETM+ Band Name | ETM+ Bandwidth (μm) | ETM+ Resolution (m) |
|---|---|---|---|---|---|---|---|
| Band 1 | COASTAL | 0.43–0.45 | 30 | | | | |
| Band 2 | BLUE | 0.45–0.51 | 30 | Band 1 | BLUE | 0.45–0.52 | 30 |
| Band 3 | GREEN | 0.53–0.59 | 30 | Band 2 | GREEN | 0.52–0.60 | 30 |
| Band 4 | RED | 0.64–0.67 | 30 | Band 3 | RED | 0.63–0.69 | 30 |
| Band 5 | NIR | 0.85–0.88 | 30 | Band 4 | NIR | 0.77–0.90 | 30 |
| Band 6 | SWIR1 | 1.57–1.65 | 30 | Band 5 | SWIR1 | 1.55–1.75 | 30 |
| Band 7 | SWIR2 | 2.11–2.29 | 30 | Band 7 | SWIR2 | 2.09–2.35 | 30 |
| Band 8 | PAN | 0.50–0.68 | 15 | Band 8 | PAN | 0.52–0.90 | 15 |
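The band table above lists the NIR (OLI Band 5) and SWIR1 (OLI Band 6) channels on which spectral built-up indices such as NDBI rely. As a minimal sketch, assuming the commonly used definition NDBI = (SWIR1 − NIR) / (SWIR1 + NIR) and reflectance arrays already loaded into NumPy, the index could be computed as follows. The function name `ndbi`, the `eps` guard, and the toy values are illustrative assumptions, not taken from the paper.

```python
import numpy as np

def ndbi(nir, swir1, eps=1e-12):
    """Normalized difference built-up index: (SWIR1 - NIR) / (SWIR1 + NIR)."""
    nir = np.asarray(nir, dtype=np.float64)
    swir1 = np.asarray(swir1, dtype=np.float64)
    denom = swir1 + nir
    empty = np.abs(denom) < eps
    # Replace zero denominators by 1 so the division is safe, then
    # set the index to 0 for those pixels (an assumed convention for fill pixels).
    safe = np.where(empty, 1.0, denom)
    return np.where(empty, 0.0, (swir1 - nir) / safe)

# Toy reflectances (illustrative only): OLI Band 5 = NIR, OLI Band 6 = SWIR1.
nir = np.array([[0.40, 0.20], [0.00, 0.10]])
swir1 = np.array([[0.20, 0.30], [0.00, 0.30]])
index = ndbi(nir, swir1)
```

Positive values indicate pixels whose SWIR1 reflectance exceeds their NIR reflectance, which is the behavior the index exploits for built-up surfaces; vegetated pixels tend toward negative values.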

| Unit | Feature Type | Feature Description | Feature Abbreviation | Classifier (Feature Engineering) | Network Architecture (Feature Learning) | Network Abbreviation |
|---|---|---|---|---|---|---|
| Pixel | Spectrum | Original eight bands | OS8 | SVM, RF, AdaBoost | CNN with one-dimensional convolution over the input bands of each pixel | CNN_1D |
| Pixel | Spectrum | Pan + NDBI + IBI | RSBI | SVM, RF, AdaBoost | | |
| Pixel | Morphology | Pan + EMBI | EMBI | SVM, RF, AdaBoost | | |
| Pixel | Texture | Pan + built-up presence index | PanTex | SVM, RF, AdaBoost | | |
| Pixel | Texture | Texture from GLCM | TEGL | SVM, RF, AdaBoost | | |
| Patch | Original eight bands | 3 × 3 neighborhood | P3 | SVM, RF, AdaBoost | CNN fed with an image patch of size 5 × 5 | CNN_2D |
| Patch | Original eight bands | 5 × 5 neighborhood | P5 | SVM, RF, AdaBoost | | |
| Patch | Original eight bands | 7 × 7 neighborhood | P7 | SVM, RF, AdaBoost | | |
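The patch features P3, P5, and P7 in the table above stack the eight original bands over a 3 × 3, 5 × 5, or 7 × 7 neighborhood of each pixel. A minimal sketch of one way to build such feature vectors is given below. The function name `patch_features`, the reflect padding at the image border, and the random toy image are assumptions made for illustration; the paper's own handling of borders is not stated here.

```python
import numpy as np

def patch_features(image, k):
    """Return an (H*W, k*k*B) array; each row is the flattened k x k neighborhood of one pixel."""
    if k % 2 != 1:
        raise ValueError("k must be odd")
    r = k // 2
    h, w, b = image.shape
    # Reflect-pad only the two spatial axes so border pixels get a full window (assumed choice).
    padded = np.pad(image, ((r, r), (r, r), (0, 0)), mode="reflect")
    rows = []
    for i in range(h):
        for j in range(w):
            # Window centered on pixel (i, j) in the original image coordinates.
            rows.append(padded[i:i + k, j:j + k, :].reshape(-1))
    return np.stack(rows)

# Toy 6 x 6 image with eight bands (illustrative values only).
image = np.arange(6 * 6 * 8, dtype=np.float32).reshape(6, 6, 8)
feats = {k: patch_features(image, k) for k in (3, 5, 7)}
```

With eight bands, the feature dimension grows from 8 for a single pixel to 72, 200, and 392 for P3, P5, and P7, which illustrates the large feature dimension of image patches noted in the conclusions.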

| Algorithm | Abbreviation | Parameter Type | Parameter Name (sklearn) | Parameter Set |
|---|---|---|---|---|
| Support Vector Machine | SVM | kernel type | kernel | rbf |
| Support Vector Machine | SVM | penalty coefficient | C | 10 |
| Support Vector Machine | SVM | gamma | gamma | 100 |
| Random Forests | RF | base classifier | base_estimator | decision tree |
| Random Forests | RF | number of trees | n_estimators | 60 |
| AdaBoost Classifier | AdaBoost | base classifier | base_estimator | decision tree |
| AdaBoost Classifier | AdaBoost | number of trees | n_estimators | 60 |
| AdaBoost Classifier | AdaBoost | learning rate | learning_rate | 10⁻³ |
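The parameter settings in the table above map directly onto scikit-learn estimators. The sketch below shows one way to instantiate them; it is not the authors' script. The dictionary name `classifiers` is hypothetical, and the base classifier is left at the library default (decision trees for both ensembles) rather than passed explicitly, because the name of that argument has changed across scikit-learn releases.

```python
from sklearn.ensemble import AdaBoostClassifier, RandomForestClassifier
from sklearn.svm import SVC

# Hyperparameters copied from the parameter table; everything else is the library default.
classifiers = {
    # RBF-kernel SVM with penalty coefficient C = 10 and gamma = 100.
    "SVM": SVC(kernel="rbf", C=10, gamma=100),
    # Random forest of 60 decision trees.
    "RF": RandomForestClassifier(n_estimators=60),
    # AdaBoost with 60 boosting rounds and learning rate 1e-3;
    # the default base learner is a depth-1 decision tree.
    "AdaBoost": AdaBoostClassifier(n_estimators=60, learning_rate=1e-3),
}
```

Each estimator can then be trained with `fit(X, y)` on any of the feature sets (for example OS8 or P5) and evaluated with `predict`.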

| Ground Truth | Predicted Non-Built-Up | Predicted Built-Up | Sum |
|---|---|---|---|
| Non-Built-Up | True Negative (TN) | False Positive (FP) | Actual Negative (TN + FP) |
| Built-Up | False Negative (FN) | True Positive (TP) | Actual Positive (FN + TP) |
| Sum | Predicted Negative (TN + FN) | Predicted Positive (FP + TP) | TN + TP + FN + FP |
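The overall accuracy (OA), recall, and precision reported in the result tables can be derived from the four cells of the confusion matrix above. The sketch below assumes the standard definitions OA = (TN + TP) / (TN + TP + FN + FP), Recall = TP / (TP + FN), and Precision = TP / (TP + FP). The function name `binary_metrics` and the counts are illustrative, not taken from the paper.

```python
def binary_metrics(tn, fp, fn, tp):
    """Compute OA, recall and precision for the built-up (positive) class."""
    total = tn + fp + fn + tp
    oa = (tn + tp) / total
    # Return 0.0 when a denominator is empty (an assumed convention).
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    return {"OA": oa, "Recall": recall, "Precision": precision}

# Toy counts (illustrative only).
metrics = binary_metrics(tn=50, fp=10, fn=5, tp=35)
```

Recall measures how much of the actual built-up area is found, while precision measures how much of the predicted built-up area is correct, so a classifier can score high on one and low on the other.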

| Feature | SVM OA | SVM Recall | SVM Precision | RF OA | RF Recall | RF Precision | AdaBoost OA | AdaBoost Recall | AdaBoost Precision |
|---|---|---|---|---|---|---|---|---|---|
| OS8 | 0.849 | 0.922 | 0.794 | 0.887 | 0.904 | 0.864 | 0.841 | 0.834 | 0.831 |
| RSBI | 0.782 | 0.762 | 0.774 | 0.805 | 0.781 | 0.802 | 0.780 | 0.745 | 0.781 |
| EMBI | 0.810 | 0.760 | 0.825 | 0.810 | 0.760 | 0.825 | 0.809 | 0.755 | 0.827 |
| PanTex | 0.824 | 0.825 | 0.808 | 0.821 | 0.820 | 0.805 | 0.817 | 0.811 | 0.804 |
| TEGL | 0.662 | 0.517 | 0.693 | 0.758 | 0.733 | 0.752 | 0.696 | 0.664 | 0.686 |
| P3 | 0.730 | 0.960 | 0.644 | 0.900 | 0.902 | 0.889 | 0.850 | 0.838 | 0.841 |
| P5 | 0.525 | 0.001 | 0.429 | 0.903 | 0.906 | 0.891 | 0.855 | 0.839 | 0.853 |
| P7 | 0.525 | 0.000 | 0.000 | 0.906 | 0.907 | 0.896 | 0.855 | 0.846 | 0.848 |
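The SVM rows for P5 and P7 above combine a recall of essentially zero with an overall accuracy of 0.525. A short worked reading of these numbers, under the assumption that the classifier then predicts almost every test sample as non-built-up (so that both TP and FP are close to zero), is sketched below. This is an interpretation of the table and is not stated in the source.

```latex
\mathrm{OA} = \frac{TN + TP}{TN + TP + FN + FP}
\quad\xrightarrow{\;TP \approx 0,\ FP \approx 0\;}\quad
\mathrm{OA} \approx \frac{TN}{TN + FN} = 0.525
```

Under that assumption, TN + FN is the whole test set and TN is the set of non-built-up samples, so 0.525 would simply be the share of non-built-up samples in the test set, and the P5-SVM and P7-SVM results would carry no discriminative information.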

| CNN Model | Training Accuracy | OA | Recall | Precision |
|---|---|---|---|---|
| CNN_1D | 0.872 | 0.823 | 0.675 | 0.935 |
| CNN_2D | 0.968 | 0.901 | 0.915 | 0.882 |

| Features | SVM | RF | AdaBoost |
|---|---|---|---|
| OS8 | 32.86 | 0.44 | 0.03 |
| RSBI | 24.39 | 0.35 | 1.07 |
| EMBI | 3.87 | 0.21 | 0.44 |
| PanTex | 3.16 | 0.2 | 0.44 |
| TEGL | 32.78 | 0.56 | 1.93 |
| P3 | 77.75 | 1.32 | 0.27 |
| P5 | 162.29 | 2.52 | 0.89 |
| P7 | 273.53 | 3.62 | 1.81 |

| Classifier | OS8 | P3 | P5 | P7 |
|---|---|---|---|---|
| SVM | 9,612 | 11,431 | 11,485 | 11,493 |
| SVM-L2 | 6,368 | 5,201 | 4,564 | 4,250 |

| Feature | Training Accuracy | OA | Recall | Precision |
|---|---|---|---|---|
| OS8 | 0.799 | 0.800 | 0.721 | 0.835 |
| P3 | 0.833 | 0.832 | 0.769 | 0.862 |
| P5 | 0.863 | 0.858 | 0.821 | 0.872 |
| P7 | 0.881 | 0.874 | 0.858 | 0.874 |

| CNN Strategy | Training Accuracy | OA | Recall | Precision | Training Time (s) |
|---|---|---|---|---|---|
| CNN_2D | 0.968 | 0.901 | 0.915 | 0.882 | 4,000 |
| VGG16-PCA | 0.886 | 0.806 | 0.782 | 0.812 | 36,000 |
| VGG16-RGB | 0.873 | 0.790 | 0.755 | 0.781 | 34,000 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, T.; Tang, H. A Comprehensive Evaluation of Approaches for Built-Up Area Extraction from Landsat OLI Images Using Massive Samples. Remote Sens. 2019, 11, 2. https://doi.org/10.3390/rs11010002
