3.2.3. Network Flow Fair Allocation (NFF)

In Network Flow Fairness, the entire network is the resource whose capacity should be shared equally among flows. This network capacity is determined by summing up the throughput of all flows inside the network, or of their subflows in the case of multipath transport. Unlike the link capacity, which can easily be specified owing to the physical nature of a link, the network capacity is difficult to calculate, as already mentioned in Definition 8. It depends on the topology of the network, the location of the source and sink nodes, and which flows or subflows get bottlenecked on which link. Furthermore, there is a trade-off between maximizing resource usage and fairness, as discussed in Section 2.3: on the one hand, multipath may be desired to maximize network utilization; on the other hand, it may be unwanted for fairness reasons, because a flow occupying multiple paths can take capacity that should be assigned to other flows. An example of a network where NFF is applied was already given in Figure 4.

When the entire network is the resource, fairness cannot be expressed by link-level constraints in the LP formulation; it can only be included in the objective function. It is proposed to reflect network fairness by a negative term whose absolute value increases as the difference between the capacity assignments of the flows grows.

The objective function for NFF is developed in several steps. After each step, an example network is shown in which the fairness methods specified up to that step fail to ensure a fair allocation of the flows and thus require an extension, which is introduced in the next step. The respective objective functions are denoted NFF-objective-I, -II, and so on.

For each flow s, consider the sum of the allocation differences between flow s and all other flows t. Summed over all flows, this quantity estimates the total allocation difference between all flows, regardless of whether the flows share a common congested link. In Equation (13), the sum is divided by two because the allocation difference between two flows is counted twice, once for s with respect to t and once vice versa.

The objective function NFF–objective-I (Equation (

14)) maximizes the differences of the aggregated allocation (

) and the total allocation difference among the flows (

). Though it seems that the objective function provides a network flow fair solution, it does not always fully utilize the available network capacity, as shown in the previously discussed scenario in

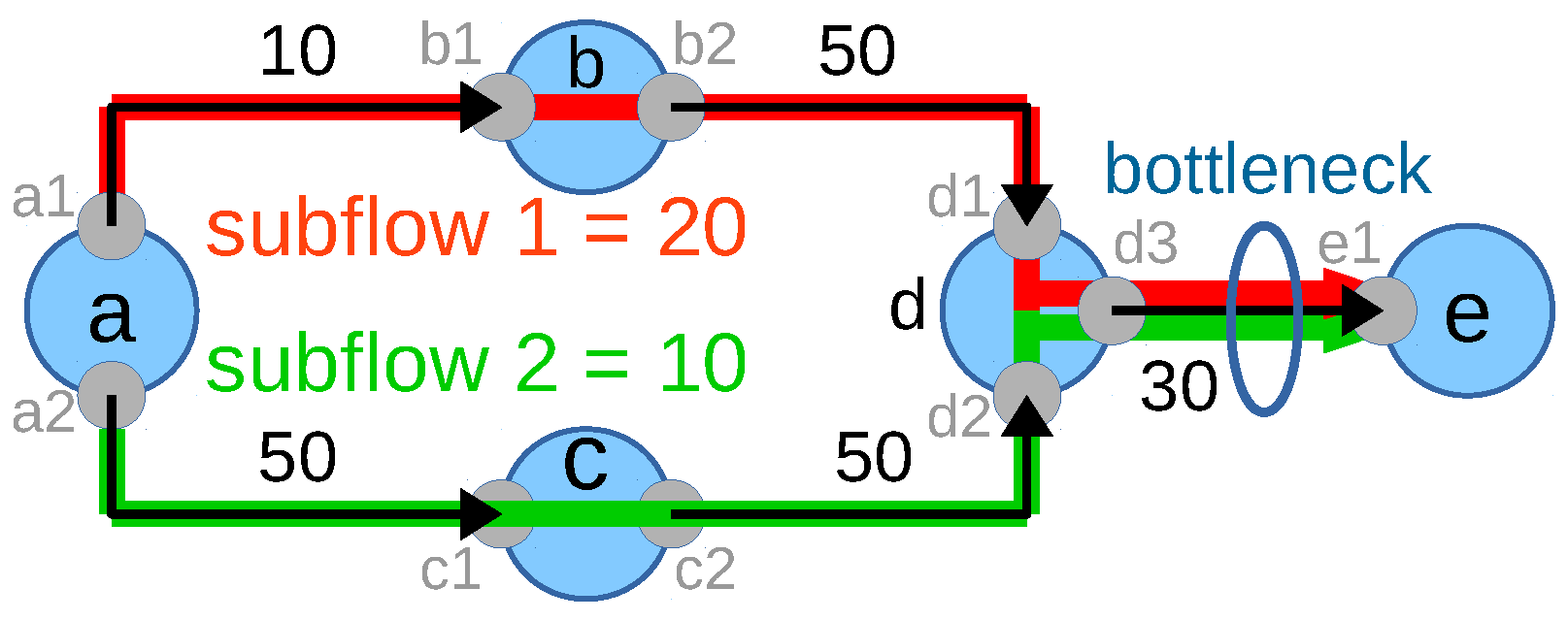

Figure 4. As explained earlier, according to the network flow fair allocation method, flow

should get 20 Mbps and flow

should get 10 Mbps from the network. This implies the objective function value of 20 (

) for this scenario. However, if each flow gets 10 Mbps, then the optimum value from the the objective function remains the same (

) as there is no difference between the flow allocations. Thus, both allocations are valid and optimum for this scenario; however, they differ in the fairness:
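The tie between the two Figure 4 allocations can be checked numerically. The sketch below is a minimal illustration, assuming the per-flow allocation difference is the sum of absolute differences |x_s - x_t| and that Equation (13) halves the total over all ordered flow pairs; the function names and the list-of-allocations input are hypothetical and not part of the LP formulation.

```python
from itertools import permutations


def total_allocation_difference(alloc):
    """Sum of |x_s - x_t| over all ordered flow pairs, halved because each
    pair (s, t) is counted twice (assumed role of the denominator in Eq. (13))."""
    ordered = sum(abs(a - b) for a, b in permutations(alloc, 2))
    return ordered / 2


def nff_objective_1(alloc):
    """Hypothetical NFF-objective-I: aggregated allocation minus the total
    allocation difference among the flows."""
    return sum(alloc) - total_allocation_difference(alloc)


# Figure 4: network flow fair allocation (20, 10) versus (10, 10), in Mbps.
print(nff_objective_1([20, 10]))  # prints 20.0  (30 - 10)
print(nff_objective_1([10, 10]))  # prints 20.0  (20 - 0)
```

Both allocations score 20, so the objective alone gives the solver no reason to prefer the allocation that uses the full network capacity.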

Nevertheless, if the solver allocates 10 Mbps to each flow, the network capacity is not fully utilized, which is not desirable. A closer look reveals that one flow does not utilize multipath, although it is multipath capable and using multipath would not affect any other flow. Based on these insights, the objective function is extended to NFF-objective-II (Equation (15)) in order to push the system towards multipath if flows are multipath capable.

M is the aggregated allocation of all multipath flows as defined in Equation (

8). Though the objective function solves the above-mentioned problem for the scenario in

Figure 4, adding

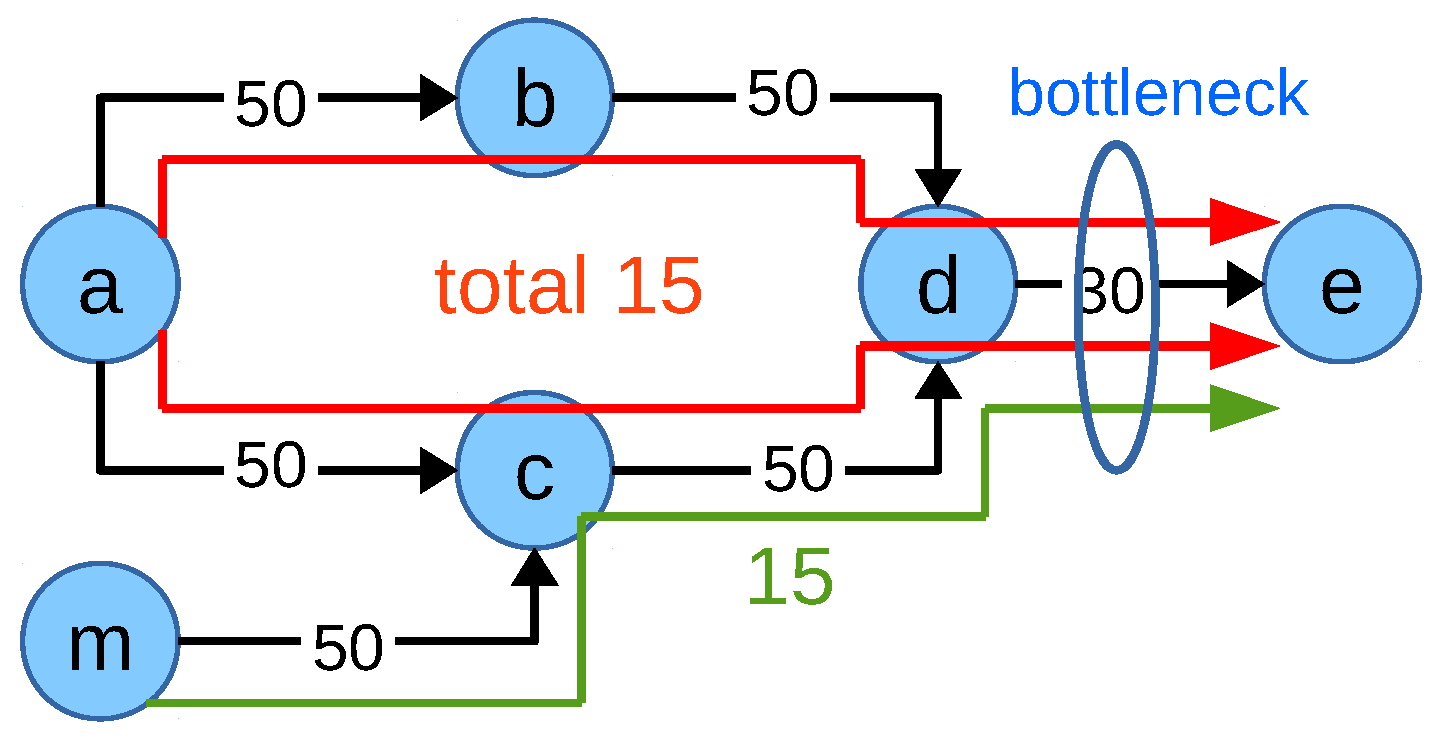

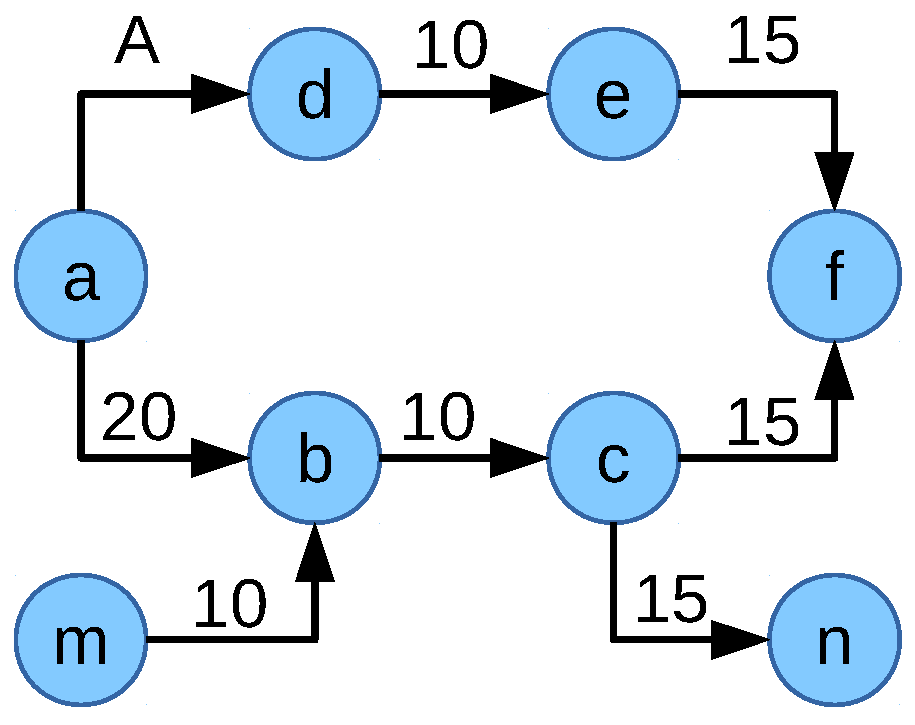

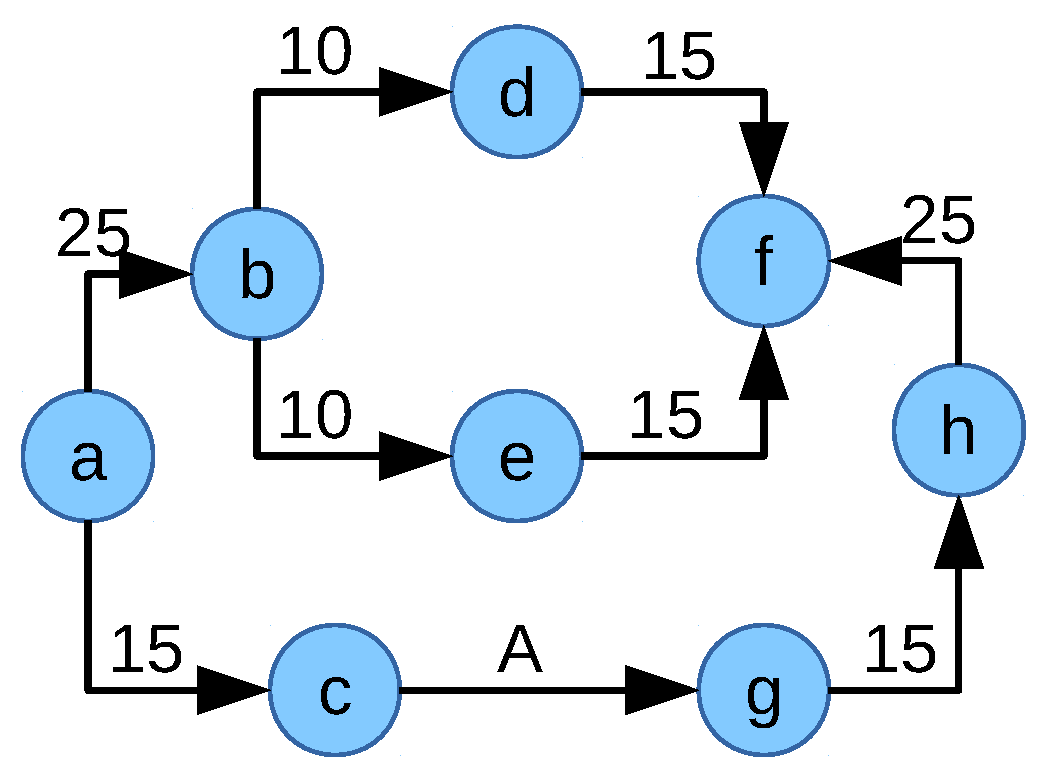

M to the objective function might not be enough for ideal network flow fair allocation. Consider the network scenario in

Figure 7. The network is composed of eight nodes

and

. All link capacities are in Mbps. There are five flows

,

and

inside the network. The ideal network flow fair solution would be flow

,

,

,

and

. Now, the value of the objective function NFF-objective-II can be calculated as follows:

NFF-objective-II

The allocations of the flows are , , , and where multipath capable flow does not utilize multipath then the value of the objective function NFF-objective-II is:

NFF-objective-II

This means the latter allocation where flow does not utilize multipath is the optimum solution of the objective function NFF-objective-II for this scenario. If flow was assigned to the 100 Mbps path via node c in addition to the 10 Mbps path via node b, there would be a high allocation difference between flow and each of the other flows. The penalization of this high difference by the negative element in the objective function outweighs the multipath capacity gain M.
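The trade-off described above can be reproduced with a small calculation. This sketch assumes NFF-objective-II equals NFF-objective-I plus M, with M counting only flows that actually use multipath, and it uses hypothetical allocations (four flows at 10 Mbps and one multipath capable flow at either 10 Mbps or 10 + 100 Mbps) chosen to mimic the situation in the text; they are not values read off Figure 7.

```python
from itertools import combinations


def total_allocation_difference(alloc):
    """Sum of |x_s - x_t| over unordered flow pairs; this equals the
    halved sum over ordered pairs used for NFF-objective-I."""
    return sum(abs(a - b) for a, b in combinations(alloc, 2))


def nff_objective_2(alloc, uses_multipath):
    """Hypothetical NFF-objective-II: NFF-objective-I plus M, where M is
    the aggregated allocation of the flows that use multipath."""
    m = sum(x for x, mp in zip(alloc, uses_multipath) if mp)
    return sum(alloc) - total_allocation_difference(alloc) + m


# Hypothetical five-flow allocations in Mbps; flow 0 is multipath capable.
single_path = [10, 10, 10, 10, 10]      # flow 0 keeps only its 10 Mbps path
with_multipath = [110, 10, 10, 10, 10]  # flow 0 also uses a 100 Mbps path

print(nff_objective_2(single_path, [False] * 5))                            # 50
print(nff_objective_2(with_multipath, [True, False, False, False, False]))  # -140
```

The four pairwise differences of 100 Mbps add a 400 Mbps penalty, which dwarfs the 110 Mbps contributed through M, so the solver keeps the multipath capable flow on a single path.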

In order to tackle this problem, a multiplication factor for the capacity gain is introduced, which equals the number of flows in the flow set K; the capacity gain itself is now measured as the overall allocation gain due to multipath. The modified objective function, NFF-objective-III, is given in Equation (16).

To formulate the equation sets for calculating the overall allocation gain, consider the maximum subflow allocation of each multipath flow. For a single path flow, this value coincides with the allocation of its only path. The maximum subflow allocation is the equivalent single path allocation of a multipath flow because, with single path allocation, a flow tries to take the path from which it can get the maximum capacity.

The subflow allocation variable specifies the capacity allocated to the subflow from interface o to interface h, where the subflow is part of the flow from s to t and uses the link between nodes i and j.

The overall allocation gain due to multipath is the difference between the aggregated allocation when flows inside the network utilize multipath and the aggregated allocation when each flow would use a single path only. The latter, the aggregated single path allocation, is the sum of the single path allocations of all flows, where the single path allocation of each flow is computed by the constraints given in Equations (A48) to (A50) in Appendix A.2.3.

The overall allocation gain due to multipath is then:

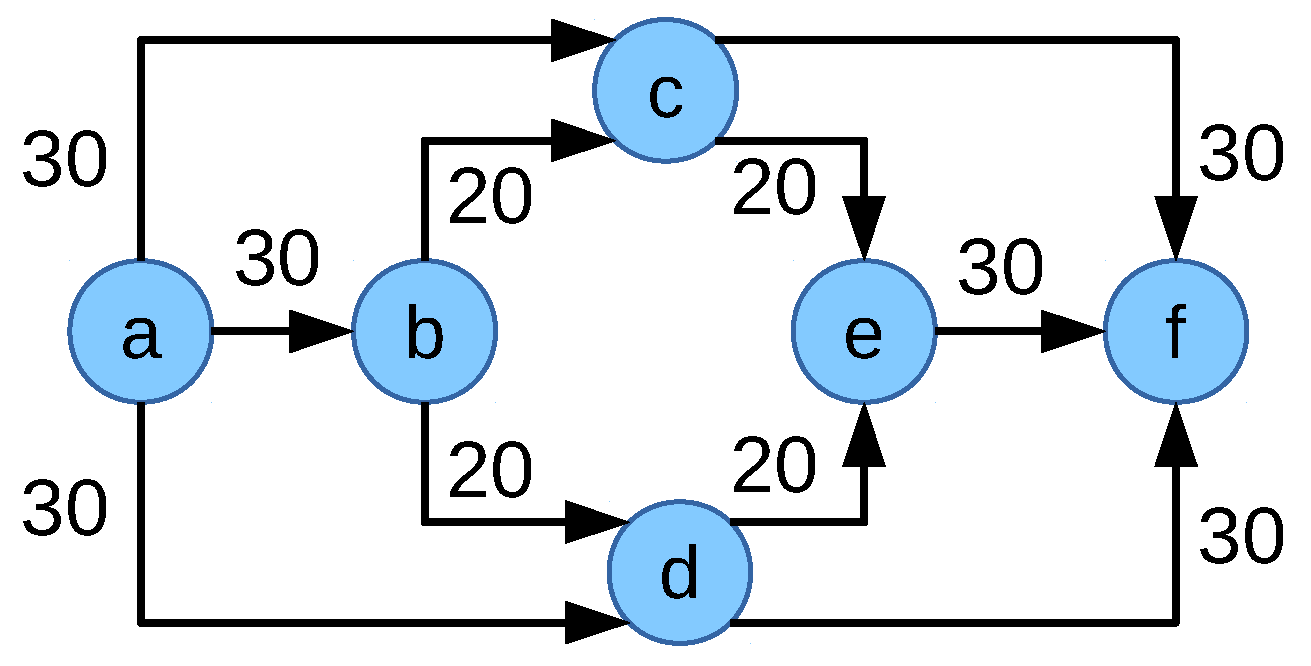
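The gain computation can be sketched with plain lists of subflow allocations. This is a minimal illustration under two assumptions: the single path allocation of each flow is approximated by its largest subflow (the equivalent single path allocation described above; the appendix constraints may compute it differently), and NFF-objective-III keeps the aggregated allocation and the total allocation difference while weighting the overall gain by the number of flows in K. The function names and example values are hypothetical.

```python
from itertools import combinations


def multipath_gain(subflows_per_flow):
    """Overall allocation gain due to multipath: aggregated allocation with
    multipath minus the aggregated single path allocation, where each flow's
    single path allocation is approximated by its largest subflow."""
    aggregated = sum(sum(sub) for sub in subflows_per_flow)
    single_path = sum(max(sub) for sub in subflows_per_flow)
    return aggregated - single_path


def nff_objective_3(subflows_per_flow):
    """Hypothetical NFF-objective-III: aggregated allocation minus total
    allocation difference plus |K| times the overall multipath gain."""
    alloc = [sum(sub) for sub in subflows_per_flow]
    difference = sum(abs(a - b) for a, b in combinations(alloc, 2))
    num_flows = len(alloc)  # |K|, the number of flows in flow set K
    return sum(alloc) - difference + num_flows * multipath_gain(subflows_per_flow)


# One multipath flow with two 10 Mbps subflows, two single path flows.
flows = [[10, 10], [10], [10]]
print(multipath_gain(flows))   # 10  (40 - 30)
print(nff_objective_3(flows))  # 50  (40 - 20 + 3 * 10)
```

Single path flows contribute nothing to the gain, since their only subflow is also their largest one.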

Though the objective function NFF-objective-III (Equation (

16)) overcomes the limitation of the objective function NFF-objective-II for the scenario in

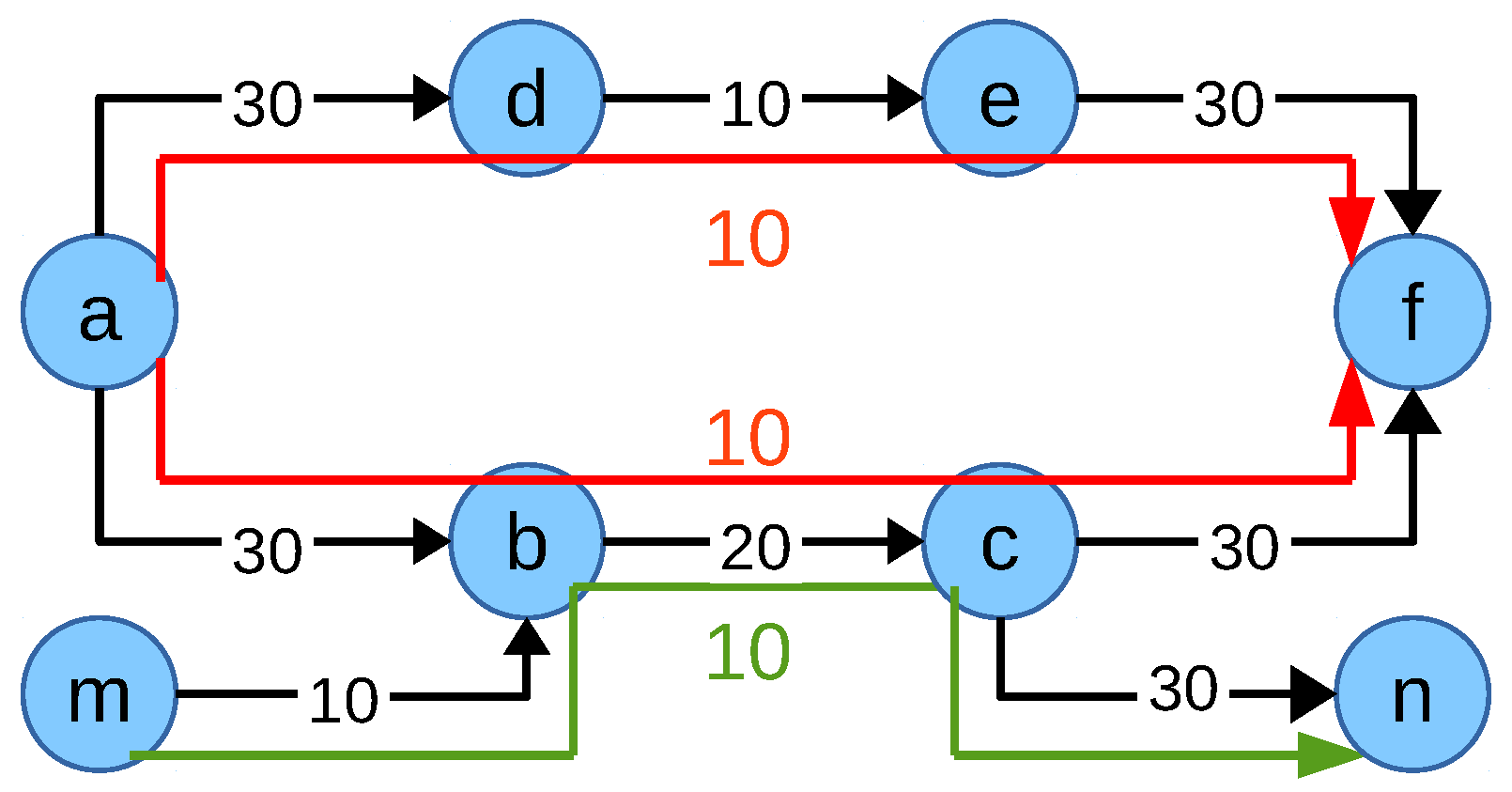

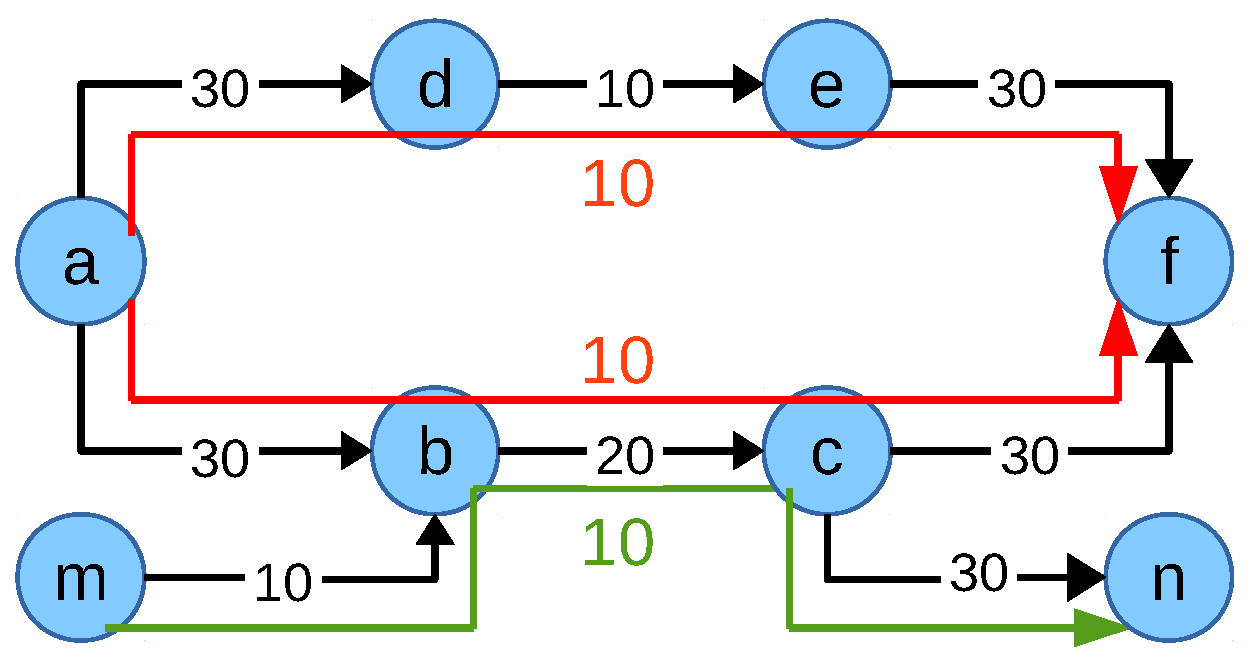

Figure 8, it may not always provide the optimum allocation. For example, consider the scenario in

Figure 9. The allocation of the flow

is limited by the links

and

. Flow

is limited by the link

and the flow

is limited by the link

. This leads to the ideal network flow fair allocation of 20 Mbps to each flow by utilizing the whole network capacity. The corresponding value of the objective function NFF-objective-III =

. However, if the allocation is considered as in

Figure 10 where the allocation of the flows

,

and

are 30, 30 and 10 Mbps, respectively, flows

and

limit the allocation of the flow

by utilizing multipath. In this case, the multipath gain of each individual multipath flow is 10 Mbps. This results in a value of the objective function NFF-objective-III =

which is higher than the previous allocation. Thus, the objective function NFF-objective-III would allocate 30 Mbps to the flows

and

whereas flow

would be allocated 10 Mbps, which is not an optimum network flow fair solution.
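The comparison between Figure 9 and Figure 10 can be sketched numerically under two assumptions not stated explicitly in the text: NFF-objective-III is the aggregated allocation minus the total allocation difference plus the number of flows times the overall multipath gain, and the Figure 9 allocation involves no multipath gain. The function name is hypothetical.

```python
from itertools import combinations


def nff_objective_3(alloc, total_gain):
    """Hypothetical NFF-objective-III from per-flow allocations (Mbps) and
    the overall allocation gain due to multipath (Mbps)."""
    difference = sum(abs(a - b) for a, b in combinations(alloc, 2))
    return sum(alloc) - difference + len(alloc) * total_gain


# Figure 9: 20 Mbps per flow, assumed to involve no multipath gain.
fair = nff_objective_3([20, 20, 20], total_gain=0)
# Figure 10: two multipath flows with a 10 Mbps gain each.
biased = nff_objective_3([30, 30, 10], total_gain=10 + 10)

print(fair, biased)  # 60 90
```

The 40 Mbps penalty for the unequal allocation is outweighed by the 60 Mbps contributed by the amplified gain term, which illustrates how the multiplication factor biases the solver towards multipath at the expense of fairness.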

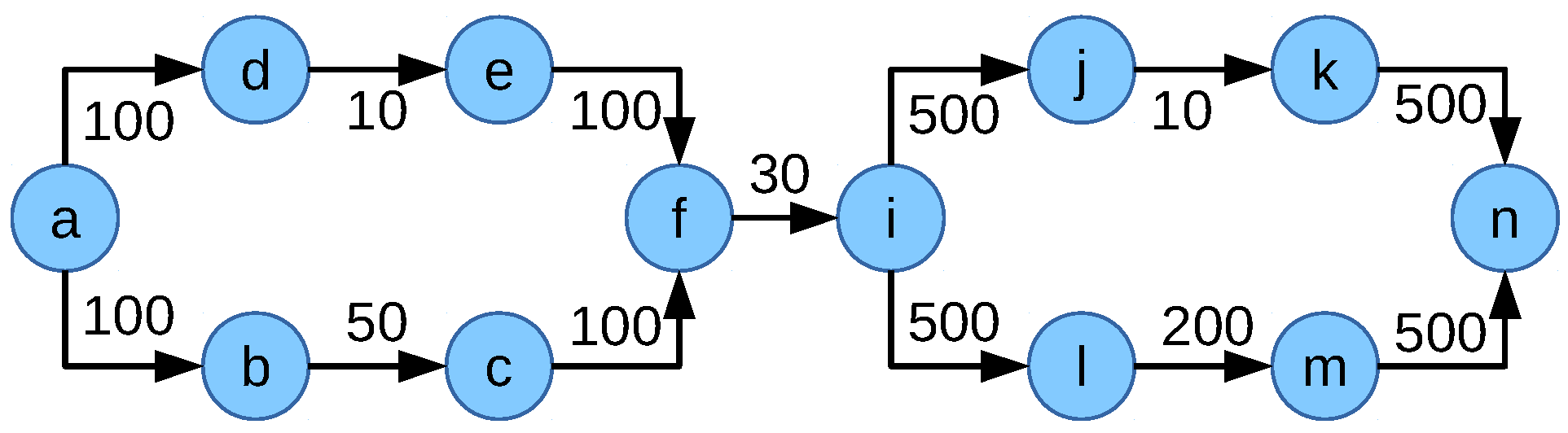

Going back to NFF-objective-II, the reason behind the problem in Figure 8 was that all flows are compared for the network flow fair solution, even if they do not share any congested link, i.e., even if they are disjoint. Comparing all flows might create large allocation differences that cannot be compensated by the M value. Introducing the overall multipath gain with the multiplication factor, in turn, biases the solution towards multipath allocation, which has a negative effect on fairness. This leads to the idea of comparing flows only when they share a congested link.

To compare flows according to the shared congested link, consider for each flow s the sum of the allocation differences between flow s and all other flows sharing a congested link with it. In addition, consider a binary variable that identifies whether flow s shares a given congested link; it is set to one when the flow shares that congested link with other flows. Equation (20) states that when flow s and other flows t share the same congested link, i.e., the binary variable is one for both of them, the per-flow term is the sum of the allocation differences between flow s and these flows t. After the allocation differences within each group of flows sharing the same congested link have been calculated, they are summed up to obtain the total allocation difference of all those flows.

Formulating the equation sets that identify the flows sharing the same congested link requires identifying all links on which flows are congested. A link is considered congested only when it is fully utilized. The constraints therefore define a number of variables that track whether a link is fully loaded, how many congested flows are on the link, and whether these flows share the link with flows that are congested on another link.
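The congested link logic can be illustrated outside the LP. The sketch below assumes the per-link load of each flow is known, treats a link as congested when its load reaches its capacity (within a small tolerance), and counts the allocation difference of a flow pair once if the two flows share at least one congested link. As a simplification, every flow carrying traffic on a congested link is treated as sharing it. The data layout, names and example topology are hypothetical; the formulation itself encodes this with binary variables and Equations (A51) to (A58).

```python
from itertools import combinations


def congested_links(capacity, load, tol=1e-9):
    """Return the set of links whose total load reaches their capacity."""
    return {
        link for link, cap in capacity.items()
        if sum(load.get(link, {}).values()) >= cap - tol
    }


def congested_pair_difference(alloc, capacity, load):
    """Sum of |x_s - x_t| over flow pairs that share a congested link;
    each pair is counted once even if it shares several congested links."""
    sharing = set()
    for link in congested_links(capacity, load):
        flows_on_link = sorted(f for f, x in load.get(link, {}).items() if x > 0)
        sharing.update(combinations(flows_on_link, 2))
    return sum(abs(alloc[s] - alloc[t]) for s, t in sharing)


# Hypothetical example: flow "a" uses links l1 and l2, flow "b" uses l1 only.
capacity = {"l1": 100, "l2": 10}
load = {"l1": {"a": 10, "b": 90}, "l2": {"a": 10}}
alloc = {"a": 20, "b": 90}
print(sorted(congested_links(capacity, load)))           # ['l1', 'l2']
print(congested_pair_difference(alloc, capacity, load))  # 70
```

If flow "a" dropped its traffic on l1, that link would no longer be fully utilized, the two flows would share no congested link, and their allocation difference would vanish from the sum.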

Equation (22) is the revised objective function for network flow fair allocation, NFF-objective-IV, which compares only the flows sharing the same congested link.

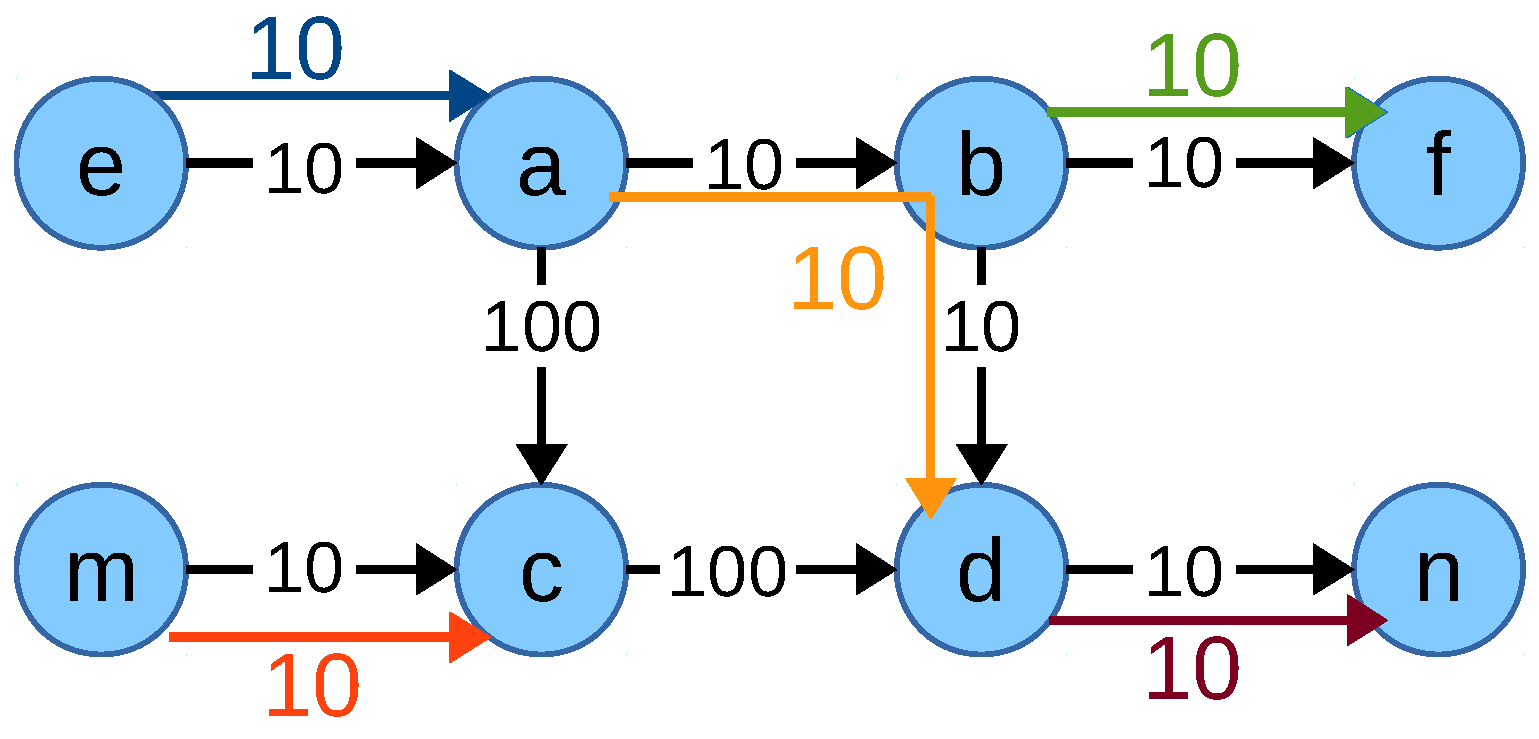

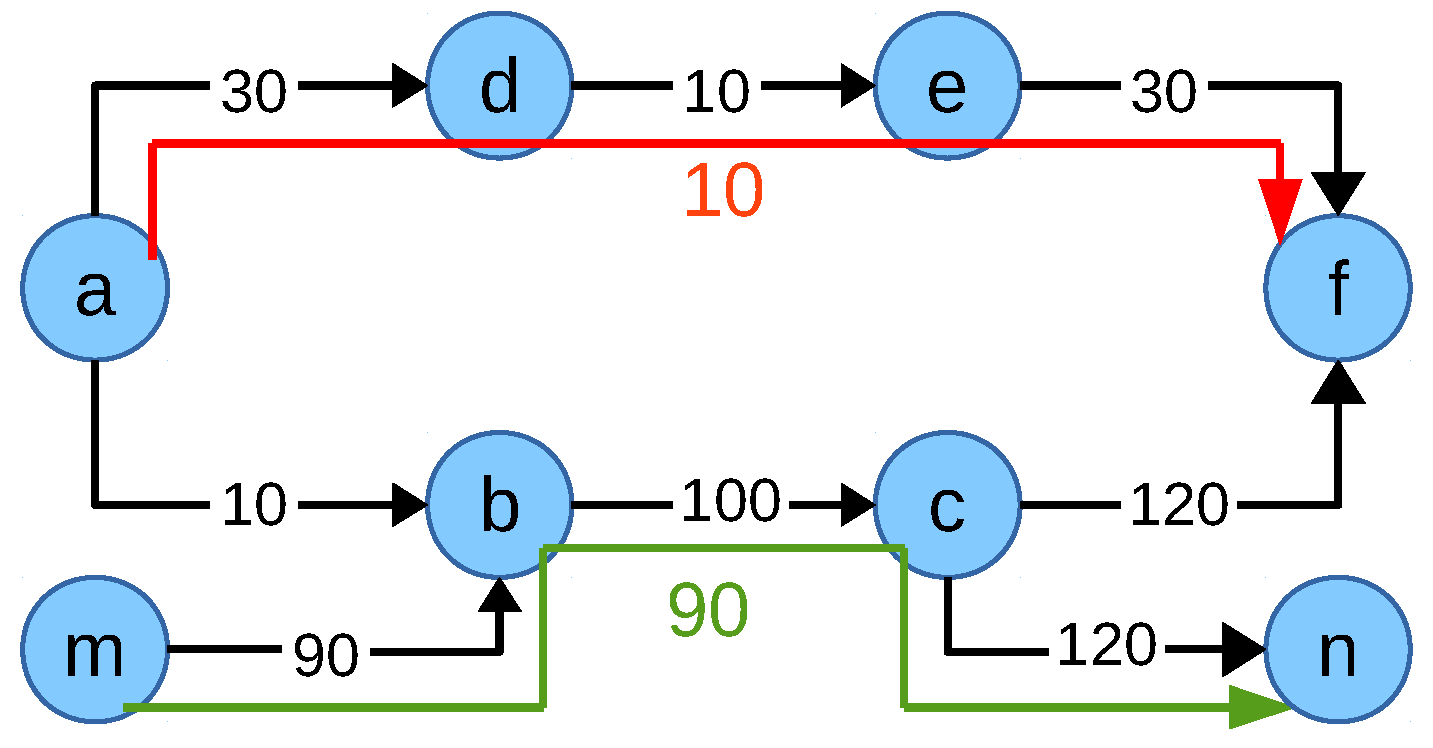

The total allocation difference in NFF-objective-IV depends on the allocation of flows sharing a congested link. This can favor allocations with fewer common congested links among the flows, which means that multipath capable flows might not use multipath in cases where using multipath would create a common congested link with other flows. Consider the scenario in Figure 11 with two flows. The allocation of the multipath capable flow is limited on two links, and the single path flow is limited on one link. The resulting maximum allocation, which is also the network flow fair solution, is 20 Mbps for the multipath capable flow and 90 Mbps for the single path flow, and the two flows share a congested link. Therefore, their allocation difference is considered in the total allocation difference term of NFF-objective-IV.

Here, M is the total allocation to multipath flows, as defined in Equation (8); together, these terms determine the value of the objective function for this allocation.

The value of the total allocation difference among flows sharing a congested link is determined by a number of equations that perform the following tasks:

- Compute the allocation on a link and whether the link is congested (Equations (A51) and (A52)).
- Compute how many flows on a link are congested (Equations (A53) to (A55)).
- Check whether a given flow shares a link with other flows (Equations (A56) to (A58)).

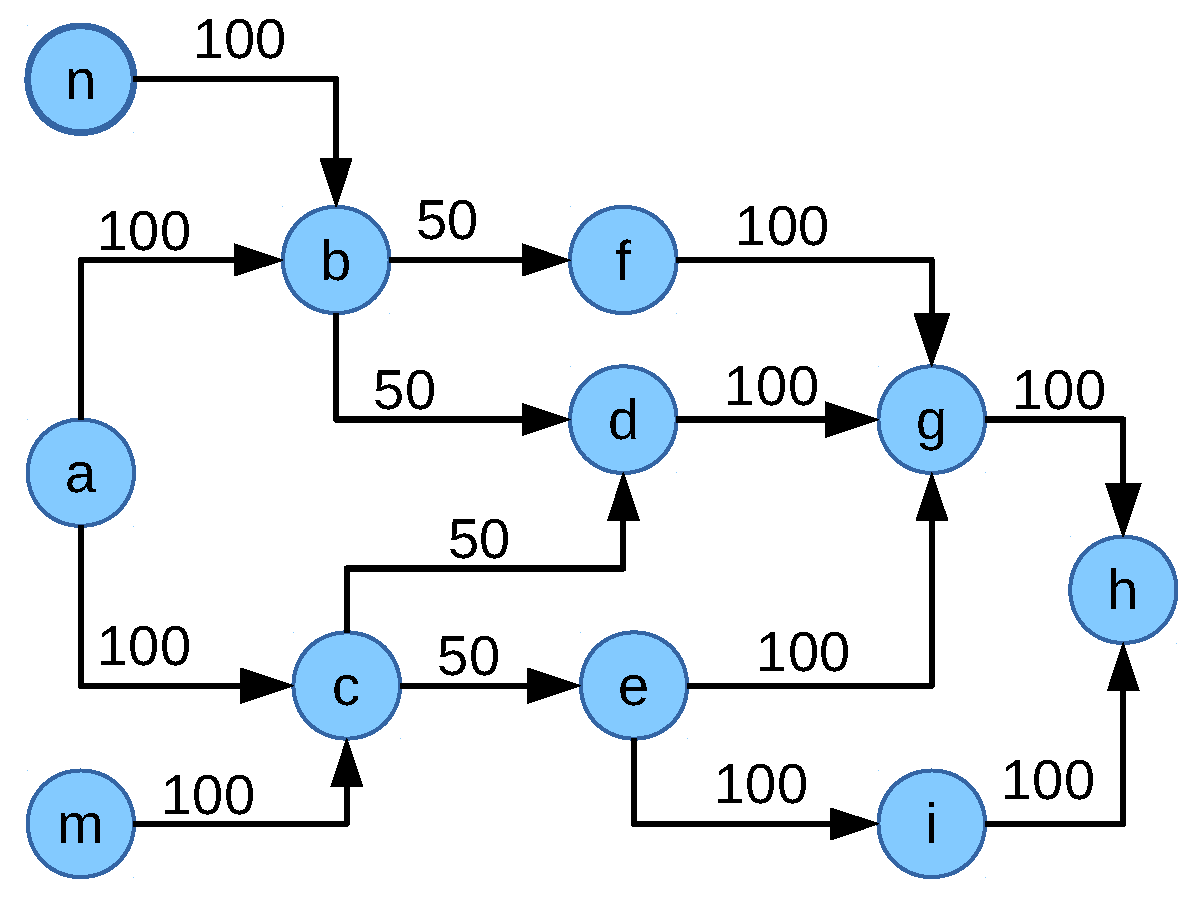

However, if the multipath capable flow uses only one path, as in Figure 12, and does not use its second path, the two flows no longer share a congested link, which nullifies both their allocation difference and M. In that case, the multipath capable flow receives 10 Mbps and the single path flow 90 Mbps, which results in a higher value of NFF-objective-IV than for the previous allocation. The optimum allocation under NFF-objective-IV is therefore 10 Mbps to the multipath capable flow and 90 Mbps to the single path flow, which is not an ideal network flow fair solution for this scenario.
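The Figure 11 and Figure 12 comparison can be checked with a short calculation. This sketch assumes NFF-objective-IV is the aggregated allocation minus the allocation difference of flows sharing a congested link plus M, where M counts only flows that actually use multipath (so the single path allocation sets M to zero, as stated above). The function name is hypothetical.

```python
def nff_objective_4(alloc, uses_multipath, share_congested_link):
    """Hypothetical NFF-objective-IV for a two-flow scenario.

    The allocation difference only enters when the flows share a congested
    link; M is the allocation of flows that actually use multipath.
    """
    difference = abs(alloc[0] - alloc[1]) if share_congested_link else 0
    m = sum(x for x, mp in zip(alloc, uses_multipath) if mp)
    return sum(alloc) - difference + m


# Flow 0 is multipath capable, flow 1 is a single path flow (Mbps).
fig11 = nff_objective_4([20, 90], [True, False], share_congested_link=True)
fig12 = nff_objective_4([10, 90], [False, False], share_congested_link=False)
print(fig11, fig12)  # 60 100
```

Dropping the second path costs 10 Mbps of utilization and the 20 Mbps of M, but removes a 70 Mbps allocation difference, so the less fair allocation wins.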

All versions of the objective function discussed so far may still use multipath inappropriately in some scenarios: multipath may be deployed although it degrades fairness, or it may not be deployed although efficient network usage requires it. Therefore, the goal is to identify an objective function that maintains multipath without affecting fairness, so that it achieves optimum results in any scenario. To specify such an objective function, a counter for the total number of subflows assigned to all flows is introduced.

The objective function for the optimum network flow fair solution, NFF-objective-V, is formulated in Equation (24), which introduces a constant multiplication factor to ensure multipath. The value of this factor must be larger than the total network capacity to eliminate the effect of network utilization and fairness on the use of multipath. Moreover, the subflow counter term has a negligible adverse effect on fairness, because the counter only specifies the number of subflows and does not affect the amount of subflow allocation; fairness can therefore be maintained by giving a very small allocation (as small as 0.01 unit) to unnecessary subflows. On the other hand, since multipath flows use all possible paths, the congested link identifier constraints described earlier in this section ensure that the total network capacity is utilized.
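To see why a large constant factor on the subflow counter pushes the solver to enable subflows, consider the sketch below. It assumes NFF-objective-V is the aggregated allocation minus the congested-link allocation difference plus the factor times the number of subflows with a non-negligible allocation; the factor value, the 0.01 Mbps threshold, the 110 Mbps total capacity and the allocations are all hypothetical.

```python
def nff_objective_5(subflows, congested_difference, beta, min_rate=0.01):
    """Hypothetical NFF-objective-V.

    subflows: per-flow lists of subflow allocations (Mbps).
    congested_difference: allocation difference among flows sharing a
        congested link, computed elsewhere.
    beta: constant factor, chosen larger than the total network capacity.
    """
    utilization = sum(sum(flow) for flow in subflows)
    used_subflows = sum(1 for flow in subflows for rate in flow if rate >= min_rate)
    return utilization - congested_difference + beta * used_subflows


BETA = 1000  # hypothetical; exceeds the 110 Mbps total capacity assumed here

# Flow a on a single 10 Mbps path, flow b on its 90 Mbps path, no shared congested link.
single = nff_objective_5([[10], [90]], congested_difference=0, beta=BETA)
# Flow a adds a 0.01 Mbps subflow on b's path; they now share a congested link.
multi = nff_objective_5([[10, 0.01], [89.99]],
                        congested_difference=abs(10.01 - 89.99), beta=BETA)
print(single, multi > single)  # 2100 True
```

The extra subflow carries almost no traffic, so utilization barely changes, yet it adds BETA to the objective, which in this example outweighs the larger allocation difference.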

However, NFF-objective-V may still not yield an optimum result if a subflow is assigned a path containing a link not used by other flows, and that link provides less capacity to the subflow than a bottlenecked but faster link on a different path would. The solver would select the unused link to keep the allocation difference low, while the gain would suffer. This suggests that a network flow fair solution that works for every type of scenario is unlikely to be achievable with a single objective function.

To cope with the conflict of considering both network allocation and fairness in the objective function in any kind of scenario, the optimization needs to be performed in two steps. Both steps optimize network utilization as well as fairness; however, the first step prioritizes fair capacity assignment, whereas the second step prefers high network utilization.

Equations (25) and (26) show the final objective function pair NFF-objective-VI of the two-step process, which yields the optimum network flow fair solution. In the first step, a minimum allocation is set for each flow while still utilizing the network capacity as much as possible. The flow allocation of step 1 is used as the minimum allocation constraint for step 2 to ensure fairness in step 2.

Equation (27) uses the step-1 allocation of each flow as a lower bound and thereby makes sure that each flow gets at least its minimum fair share from the network in step 2. If any network capacity remains unallocated after step 1, which might affect the fair allocation, step 2 makes sure that this spare capacity is utilized by allocating it fairly among the competing flows. In Equation (26), a multiplication factor equal to the total number of flows inside the network helps to prioritize network utilization over fairness in step 2.

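A toy two-step run can make the mechanism concrete. The sketch below replaces the LP solver with an exhaustive search over integer rates on a hypothetical network with two single path flows (one on a 20 Mbps link, one on a 5 Mbps link). The step-2 objective follows the description above: utilization weighted by the number of flows, minus the allocation difference, with the step-1 allocations as minimums. The step-1 objective, which instead weights the allocation difference by the number of flows, is an assumed form chosen only for illustration.

```python
from itertools import combinations, product


def feasible(rates, paths, capacity):
    """True if no link carries more traffic than its capacity."""
    load = {link: 0 for link in capacity}
    for rate, path in zip(rates, paths):
        for link in path:
            load[link] += rate
    return all(load[link] <= cap for link, cap in capacity.items())


def difference(rates):
    """Total pairwise allocation difference among the flows."""
    return sum(abs(a - b) for a, b in combinations(rates, 2))


def best(paths, capacity, objective, minimum):
    """Exhaustive search over integer rates (Mbps), standing in for the LP
    solver; returns the feasible rate vector maximizing the objective."""
    upper = max(capacity.values())
    candidates = (
        rates
        for rates in product(range(upper + 1), repeat=len(paths))
        if all(r >= m for r, m in zip(rates, minimum))
        and feasible(rates, paths, capacity)
    )
    return max(candidates, key=objective)


num_flows = 2                    # total number of flows in the network
paths = [["l1"], ["l2"]]         # hypothetical: one single path flow per link
capacity = {"l1": 20, "l2": 5}   # hypothetical link capacities in Mbps

# Step 1 (assumed form): fairness weighted by the number of flows.
step1 = best(paths, capacity,
             lambda r: sum(r) - num_flows * difference(r), minimum=[0, 0])
# Step 2: utilization weighted by the number of flows; step-1 rates are minimums.
step2 = best(paths, capacity,
             lambda r: num_flows * sum(r) - difference(r), minimum=step1)
print(step1, step2)  # (5, 5) (20, 5)
```

Step 1 stops at 5 Mbps per flow because raising the first flow further would cost more in fairness than it gains in utilization; step 2 then assigns the remaining 15 Mbps on the 20 Mbps link to the first flow without pushing the second flow below its step-1 share.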