Data Filtering Based Recursive and Iterative Least Squares Algorithms for Parameter Estimation of Multi-Input Output Systems

Abstract

1. Introduction

- By using the data filtering technique and the auxiliary model identification idea, a data filtering based recursive generalized least squares (F-RGLS) identification algorithm is derived for the multi-input OEAR system.

- A data filtering based iterative least squares (F-LSI) identification algorithm is developed for the multi-input OEAR system.

- The proposed F-LSI identification algorithm updates the parameter estimates by using all of the available data, and can produce more accurate parameter estimates than the F-RGLS identification algorithm.
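The data filtering idea named in the first two items can be illustrated with a minimal sketch. Since the model equations are not reproduced in this section, it assumes that the noise term of the OEAR system is an autoregressive process w(t) = v(t)/C(z) with C(z) = 1 + c1 z^-1, so that passing a signal through C(z) turns the colored noise back into white noise. The names `c1`, `v`, `w`, and `apply_c`, and the value of `c1`, are illustrative and not taken from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)
c1 = 0.2                       # assumed AR coefficient of C(z) = 1 + c1*z^-1
v = rng.standard_normal(500)   # white noise v(t)

# Colored noise: C(z) w(t) = v(t)  <=>  w(t) = -c1*w(t-1) + v(t)
w = np.zeros_like(v)
w[0] = v[0]
for t in range(1, len(v)):
    w[t] = -c1 * w[t - 1] + v[t]

def apply_c(signal, c1):
    """Filter a signal with C(z): s_f(t) = s(t) + c1*s(t-1), taking s(-1) = 0."""
    filtered = signal.copy()
    filtered[1:] += c1 * signal[:-1]
    return filtered

w_f = apply_c(w, c1)
print(np.allclose(w_f, v))  # checks that filtering with the true C(z) recovers v(t)
```

In an identification setting C(z) is unknown, so the filter would presumably be built from the current estimate of its coefficients and applied to both the inputs and the output.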

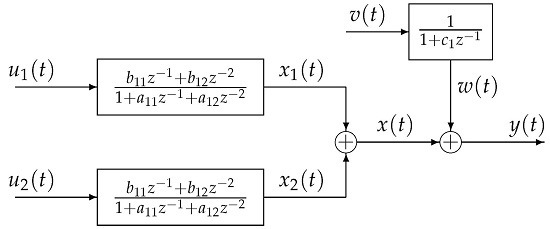

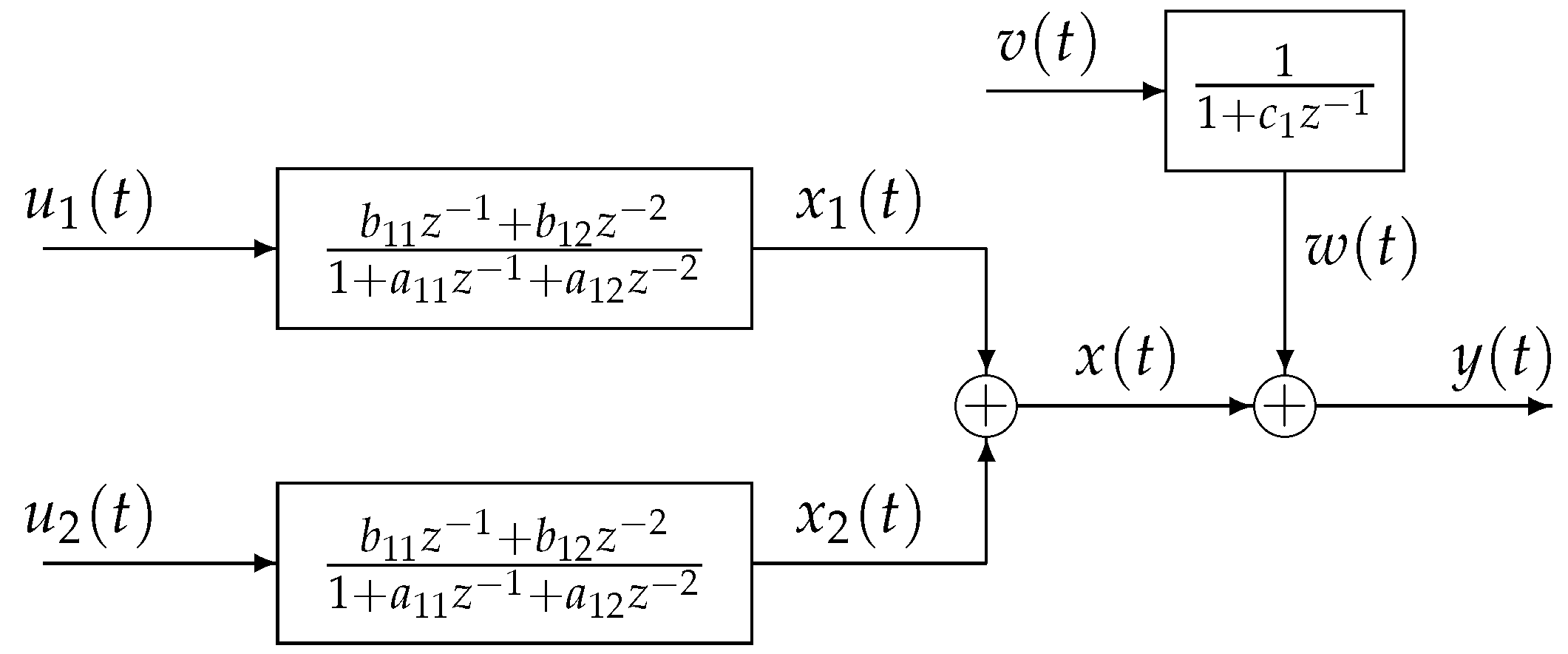

2. The System Description

3. The Data Filtering Based Recursive Least Squares Algorithm

4. The Data Filtering Based Iterative Least Squares Algorithm

- Step 1: To initialize, let , , , , , , .

- Step 2: Collect the input–output data : .

- Step 3: Form by Equation (64), by Equation (63), and by Equation (62).

- Step 4: Compute by Equation (66), and update the parameter estimate by Equation (61).

- Step 5: Read by Equation (69), and compute and by Equations (58) and (59).

- Step 6: Form and by Equations (56) and (57), and update the parameter estimate by Equation (55).

- Step 7: Read by Equation (68), and compute and by Equations (60) and (65).

- Step 8: Give a small positive ε, compare with , if , obtain the iterative time k and the parameter estimate , increase k by 1 and go to Step 2; otherwise, increase k by 1 and go to Step 3.
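The overall shape of such an iteration (re-estimate from all data, rebuild the unmeasurable signals from the new estimate, stop once successive estimates differ by at most ε) can be sketched as follows. This is a deliberately reduced illustration and not the F-LSI algorithm itself: it assumes a single-input, first-order output-error model x(t) = -a x(t-1) + b u(t-1), y(t) = x(t) + v(t) with white noise, omits the data filter, and assumes the stopping test compares successive parameter estimates. All function and variable names are hypothetical.

```python
import numpy as np

def simulate_oe(theta, u):
    """Auxiliary model: noise-free output x(t) = -a*x(t-1) + b*u(t-1), x(0) = 0."""
    a, b = theta
    x = np.zeros(len(u))
    for t in range(1, len(u)):
        x[t] = -a * x[t - 1] + b * u[t - 1]
    return x

def am_lsi(u, y, eps=1e-6, k_max=50):
    """Least squares iteration using all data at every iteration k."""
    x_hat = y.copy()            # iteration 0: measured y stands in for the unknown x
    theta_prev = np.zeros(2)
    for k in range(1, k_max + 1):
        # Information matrix built from the current auxiliary-model output
        Phi = np.column_stack([-x_hat[:-1], u[:-1]])
        theta = np.linalg.lstsq(Phi, y[1:], rcond=None)[0]
        # Assumed stopping test: successive estimates closer than eps
        if np.linalg.norm(theta - theta_prev) <= eps:
            return theta, k
        x_hat = simulate_oe(theta, u)   # refresh the unmeasurable x(t)
        theta_prev = theta
    return theta, k_max

rng = np.random.default_rng(1)
L = 2000                                 # data length
u = rng.standard_normal(L)               # input sequence
theta_true = np.array([0.3, 1.0])        # illustrative [a, b], not from the paper
y = simulate_oe(theta_true, u) + 0.1 * rng.standard_normal(L)

theta_hat, k_used = am_lsi(u, y)
print(theta_hat, k_used)
```

Starting the auxiliary output from the measured `y` is one convenient choice for this sketch; other initializations are possible.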

5. Examples

- Increasing the data length L improves the parameter estimation accuracy of both the F-RGLS algorithm and the F-LSI algorithm, and as the data length L increases, the parameter estimates become more stationary.

- Under the same data length, the estimation accuracy of the F-RGLS algorithm and the F-LSI algorithm increases as the noise variance decreases.

- Under the same data length and noise variance, the estimation errors of the F-LSI algorithm are smaller than those of the F-RGLS algorithm.

- The F-LSI algorithm converges quickly: the parameter estimates approach their true values within only a few iterations.
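The estimation errors compared above appear in the last column of the result tables. A minimal sketch of how such a figure can be computed, assuming the error measure is the relative 2-norm error in percent (the defining equation is not reproduced here, so this is an assumption), uses the k = 10 row and the true values from the final table. The function name `relative_error_percent` is hypothetical.

```python
import numpy as np

# True parameter values and the k = 10 estimates, copied from the final table
theta_true = np.array([0.35, 0.30, 0.50, 0.84, -0.25, 0.30, 0.40, 0.75, -0.25])
theta_k10 = np.array([0.36335, 0.29864, 0.50327, 0.84966, -0.24865,
                      0.31285, 0.39808, 0.73856, -0.25702])

def relative_error_percent(theta_hat, theta):
    """Assumed error measure: 100 * ||theta_hat - theta|| / ||theta||."""
    return 100.0 * np.linalg.norm(theta_hat - theta) / np.linalg.norm(theta)

delta = relative_error_percent(theta_k10, theta_true)
print(f"{delta:.3f}")
```

Under this assumption the result agrees with the tabulated 1.73638 to within the rounding of the listed estimates, which suggests the last column is a relative error of this form.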

6. Conclusions

Acknowledgments

Conflicts of Interest


| t | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| 100 | 0.14240 | 0.23494 | 0.96900 | −0.75613 | −0.11071 | 0.35200 | −0.51875 | −0.80734 | 0.00302 | 12.18486 |
| 200 | 0.14579 | 0.25005 | 0.96382 | −0.78341 | −0.10189 | 0.35013 | −0.49750 | −0.78942 | 0.11045 | 5.68913 |
| 500 | 0.15222 | 0.26364 | 0.97647 | −0.77308 | −0.09060 | 0.35005 | −0.48724 | −0.78857 | 0.13262 | 4.41957 |
| 1000 | 0.15892 | 0.25970 | 0.98546 | −0.76410 | −0.10005 | 0.34897 | −0.49297 | −0.78559 | 0.15257 | 3.28600 |
| 2000 | 0.15443 | 0.25820 | 0.98663 | −0.77005 | −0.10214 | 0.34954 | −0.49407 | −0.79532 | 0.15569 | 2.85038 |
| 3000 | 0.15318 | 0.25979 | 0.99026 | −0.77528 | −0.10025 | 0.35272 | −0.49559 | −0.79963 | 0.15830 | 2.63155 |
| 100 | 0.16661 | 0.22088 | 0.97687 | −0.76637 | −0.04796 | 0.28133 | −0.58256 | −0.92613 | −0.01077 | 16.67281 |
| 200 | 0.14230 | 0.24704 | 0.95213 | −0.83607 | −0.06133 | 0.30376 | −0.51120 | −0.82406 | 0.10977 | 7.91161 |
| 500 | 0.15181 | 0.28154 | 0.96971 | −0.78348 | −0.06034 | 0.33148 | −0.46893 | −0.78559 | 0.14472 | 5.25893 |
| 1000 | 0.17142 | 0.27161 | 0.98894 | −0.74692 | −0.09093 | 0.33498 | −0.48227 | −0.76910 | 0.15722 | 4.45410 |
| 2000 | 0.16154 | 0.27069 | 0.98704 | −0.75643 | −0.10049 | 0.34152 | −0.48438 | −0.79306 | 0.16109 | 3.31329 |
| 3000 | 0.15847 | 0.27633 | 0.99597 | −0.76992 | −0.09664 | 0.35335 | −0.48844 | −0.80372 | 0.16376 | 2.95257 |
| True values | 0.15000 | 0.25000 | 0.99000 | −0.78000 | −0.10000 | 0.35000 | −0.50000 | −0.80000 | 0.20000 | |

| k | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | −0.02217 | −0.02741 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | −0.04161 | 100.72791 |
| 2 | 0.00000 | 0.00000 | 0.97952 | −0.91091 | 0.00000 | 0.00000 | −0.49792 | −0.86478 | 0.15858 | 29.65842 |
| 5 | 0.15601 | 0.25797 | 0.98928 | −0.77078 | −0.09937 | 0.34653 | −0.49427 | −0.79873 | 0.19811 | 0.92811 |
| 10 | 0.15519 | 0.25727 | 0.98927 | −0.77191 | −0.09993 | 0.34719 | −0.49479 | −0.79810 | 0.20207 | 0.83049 |
| 1 | −0.02217 | −0.02741 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | −0.04161 | 100.72791 |
| 2 | 0.00000 | 0.00000 | 0.97808 | −0.90354 | 0.00000 | 0.00000 | −0.48514 | −0.86083 | 0.16440 | 29.49952 |
| 5 | 0.16653 | 0.27248 | 0.98778 | −0.75450 | −0.09914 | 0.34084 | −0.48385 | −0.79505 | 0.20121 | 2.56878 |
| 10 | 0.16596 | 0.27194 | 0.98782 | −0.75539 | −0.09986 | 0.34146 | −0.48438 | −0.79426 | 0.20209 | 2.49317 |
| True values | 0.15000 | 0.25000 | 0.99000 | −0.78000 | −0.10000 | 0.35000 | −0.50000 | −0.80000 | 0.20000 | |

| k | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | −0.00166 | −0.02686 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | −0.04216 | 100.60660 |
| 2 | 0.00000 | 0.00000 | 0.98621 | −0.91856 | 0.00000 | 0.00000 | −0.50246 | −0.86196 | 0.16056 | 29.74749 |
| 5 | 0.15307 | 0.25903 | 0.99062 | −0.77955 | −0.09866 | 0.34570 | −0.49802 | −0.80145 | 0.18988 | 0.89726 |
| 10 | 0.15239 | 0.25845 | 0.99072 | −0.78046 | −0.09964 | 0.34658 | −0.49821 | −0.80051 | 0.19238 | 0.74333 |
| 1 | −0.00166 | −0.02686 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | −0.04216 | 100.60660 |
| 2 | 0.00000 | 0.00000 | 0.98710 | −0.92316 | 0.00000 | 0.00000 | −0.49770 | −0.86426 | 0.16301 | 29.83299 |
| 5 | 0.15776 | 0.27565 | 0.99202 | −0.78054 | −0.09757 | 0.33874 | −0.49436 | −0.80278 | 0.19163 | 1.87793 |
| 10 | 0.15708 | 0.27501 | 0.99217 | −0.78149 | −0.09882 | 0.33965 | −0.49462 | −0.80160 | 0.19240 | 1.79377 |
| True values | 0.15000 | 0.25000 | 0.99000 | −0.78000 | −0.10000 | 0.35000 | −0.50000 | −0.80000 | 0.20000 | |

| L | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| 2000 | 0.15519 | 0.25727 | 0.98927 | −0.77191 | −0.09993 | 0.34719 | −0.49479 | −0.79810 | 0.20207 | 0.83049 |
| 4000 | 0.15239 | 0.25845 | 0.99072 | −0.78046 | −0.09964 | 0.34658 | −0.49821 | −0.80051 | 0.19238 | 0.74333 |
| 2000 | 0.16596 | 0.27194 | 0.98782 | −0.75539 | −0.09986 | 0.34146 | −0.48438 | −0.79426 | 0.20209 | 2.49317 |
| 4000 | 0.15708 | 0.27501 | 0.99217 | −0.78149 | −0.09882 | 0.33965 | −0.49462 | −0.80160 | 0.19240 | 1.79377 |
| True values | 0.15000 | 0.25000 | 0.99000 | −0.78000 | −0.10000 | 0.35000 | −0.50000 | −0.80000 | 0.20000 | |

| t | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| 100 | 0.31044 | 0.27323 | 0.47823 | 0.79593 | −0.16988 | 0.31233 | 0.34733 | 0.79082 | −0.35990 | 11.48781 |
| 200 | 0.35712 | 0.26107 | 0.47534 | 0.80554 | −0.20188 | 0.32807 | 0.38468 | 0.77922 | −0.30469 | 7.07660 |
| 500 | 0.37558 | 0.30333 | 0.48485 | 0.83653 | −0.22143 | 0.30058 | 0.41246 | 0.78396 | −0.28669 | 4.56000 |
| 1000 | 0.36022 | 0.29164 | 0.49690 | 0.84216 | −0.23072 | 0.29199 | 0.40519 | 0.76406 | −0.27138 | 2.49100 |
| 2000 | 0.36115 | 0.29540 | 0.49771 | 0.84667 | −0.24094 | 0.30037 | 0.39938 | 0.74612 | −0.27434 | 2.05076 |
| 3000 | 0.36636 | 0.30139 | 0.50263 | 0.84719 | −0.24389 | 0.30650 | 0.39658 | 0.74012 | −0.26554 | 1.89797 |
| True values | 0.35000 | 0.30000 | 0.50000 | 0.84000 | −0.25000 | 0.30000 | 0.40000 | 0.75000 | −0.25000 | |

| k | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | −0.00611 | 0.03601 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00000 | 0.00165 | 99.63926 |
| 2 | 0.00000 | 0.00000 | 0.49960 | 0.67716 | 0.00000 | 0.00000 | 0.41014 | 0.84617 | −0.26452 | 43.64422 |
| 3 | 0.48512 | 0.14379 | 0.50775 | 0.90619 | −0.22359 | 0.25926 | 0.39984 | 0.75210 | −0.26452 | 15.35917 |
| 4 | 0.35231 | 0.33645 | 0.50464 | 0.84218 | −0.25181 | 0.31725 | 0.39804 | 0.73802 | −0.10385 | 10.48969 |
| 5 | 0.35968 | 0.29443 | 0.50270 | 0.84889 | −0.24823 | 0.31243 | 0.39831 | 0.73841 | −0.04162 | 14.44370 |
| 6 | 0.36474 | 0.29771 | 0.50305 | 0.84987 | −0.24911 | 0.31358 | 0.39819 | 0.73823 | −0.24951 | 1.76580 |
| 7 | 0.36336 | 0.29924 | 0.50326 | 0.84956 | −0.24869 | 0.31289 | 0.39808 | 0.73850 | −0.25696 | 1.73424 |
| 8 | 0.36328 | 0.29861 | 0.50327 | 0.84966 | −0.24868 | 0.31286 | 0.39809 | 0.73855 | −0.25698 | 1.73382 |
| 9 | 0.36336 | 0.29862 | 0.50327 | 0.84967 | −0.24865 | 0.31286 | 0.39808 | 0.73856 | −0.25703 | 1.73724 |
| 10 | 0.36335 | 0.29864 | 0.50327 | 0.84966 | −0.24865 | 0.31285 | 0.39808 | 0.73856 | −0.25702 | 1.73638 |
| True values | 0.35000 | 0.30000 | 0.50000 | 0.84000 | −0.25000 | 0.30000 | 0.40000 | 0.75000 | −0.25000 | |

© 2016 by the author; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Ding, J. Data Filtering Based Recursive and Iterative Least Squares Algorithms for Parameter Estimation of Multi-Input Output Systems. Algorithms 2016, 9, 49. https://doi.org/10.3390/a9030049
