Semi-Supervised Classification Based on Low Rank Representation

Abstract

1. Introduction

2. Methodology

2.1. Low-Rank Representation for Graph Construction

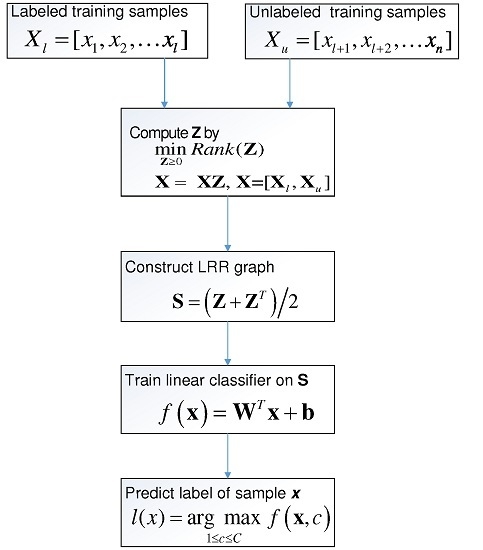

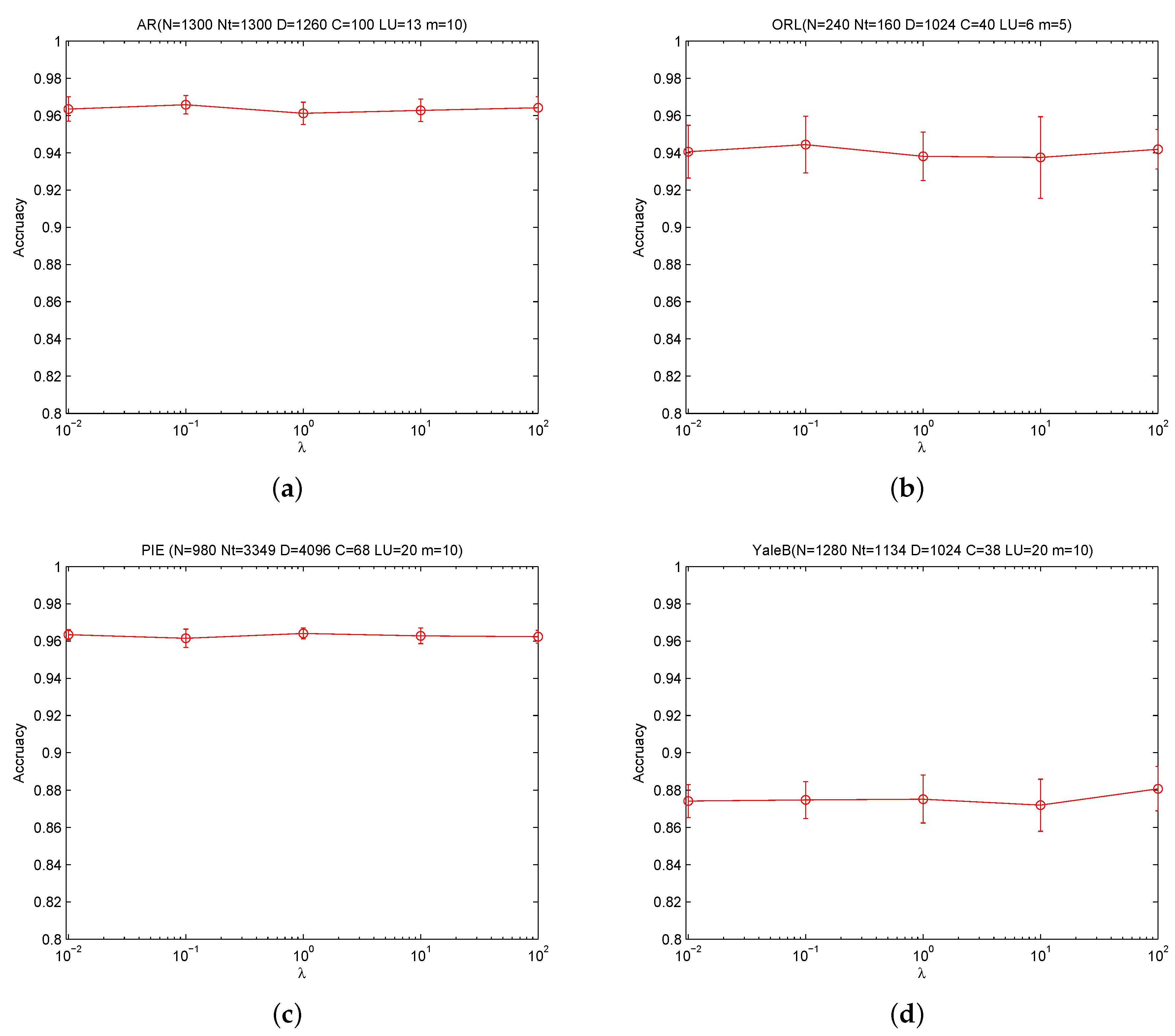

2.2. Semi-Supervised Classification Based on Low Rank Representation

3. Experiments

3.1. Experimental Setup

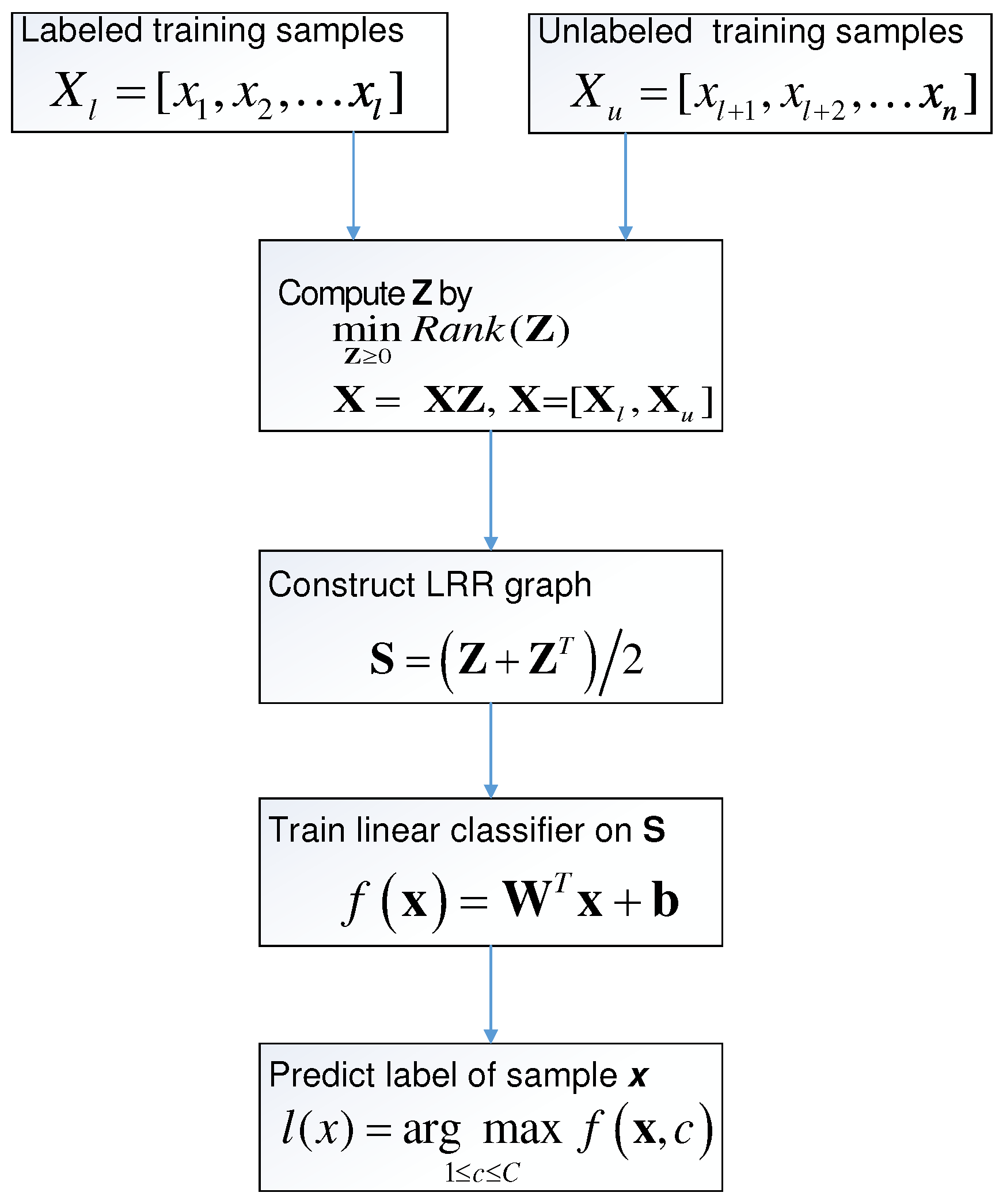

3.2. Accuracy with Respect to Different Numbers of Labeled Samples

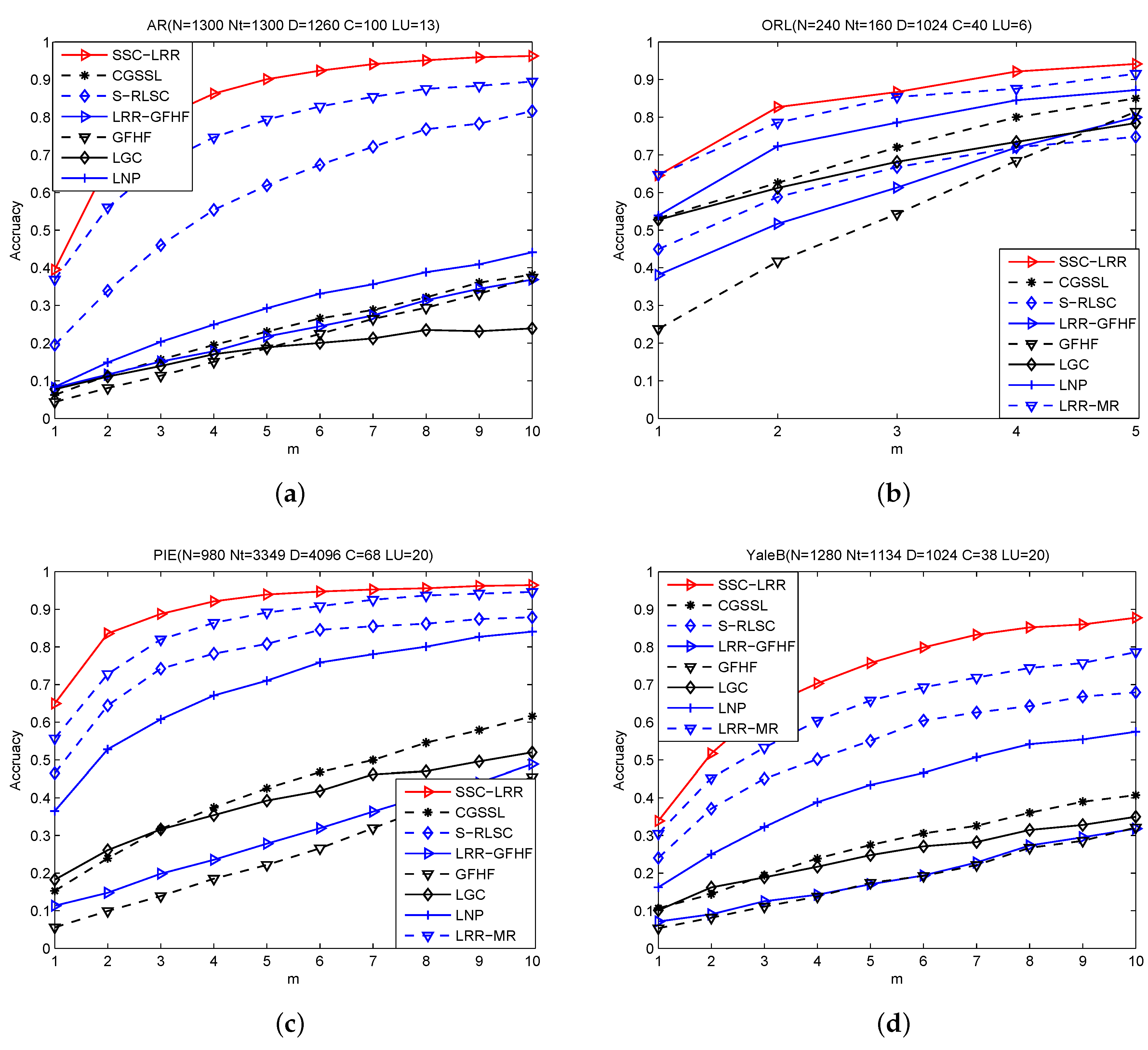

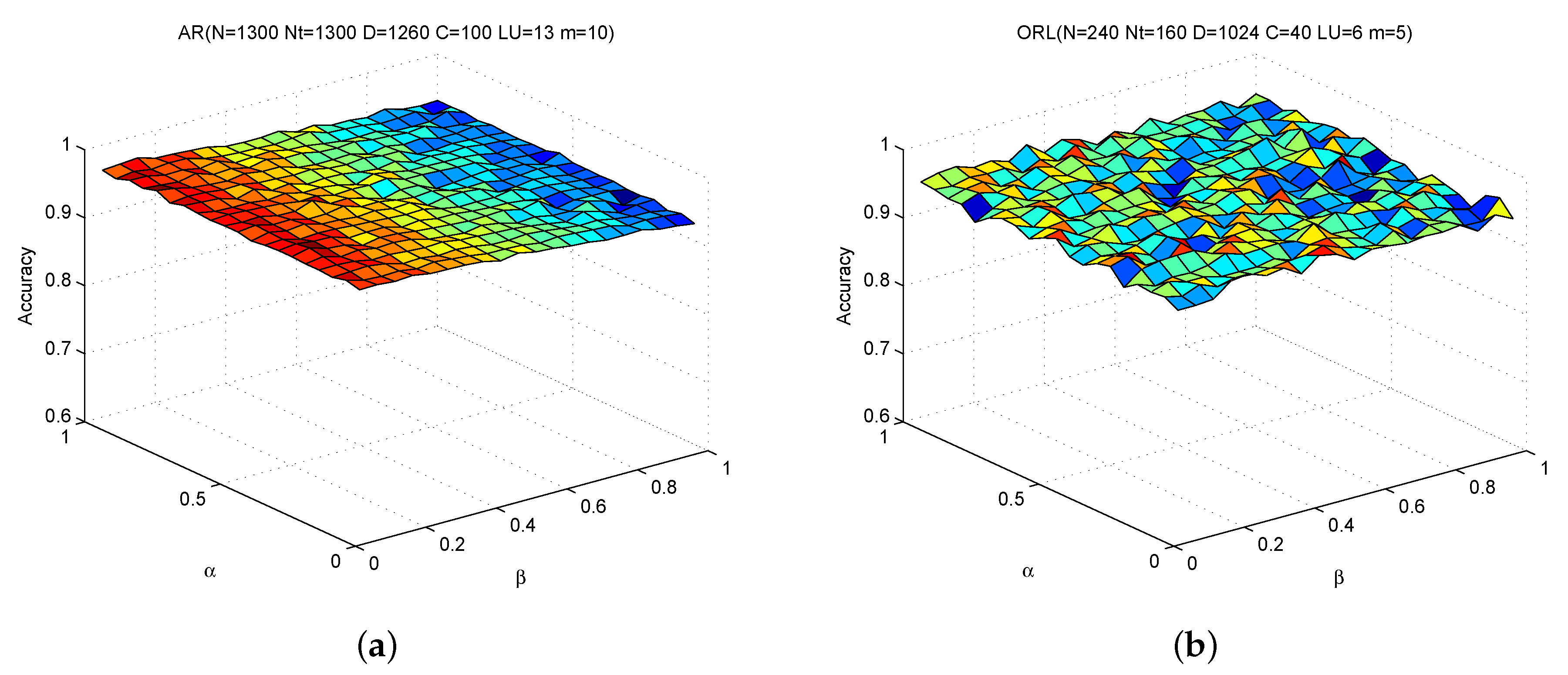

3.3. Sensitivity Analysis on Input Parameters

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Hou, X.; Yao, G.; Wang, J. Semi-Supervised Classification Based on Low Rank Representation. Algorithms 2016, 9, 48. https://doi.org/10.3390/a9030048
