Potential of ILRIS3D Intensity Data for Planar Surfaces Segmentation

Abstract

1. Introduction

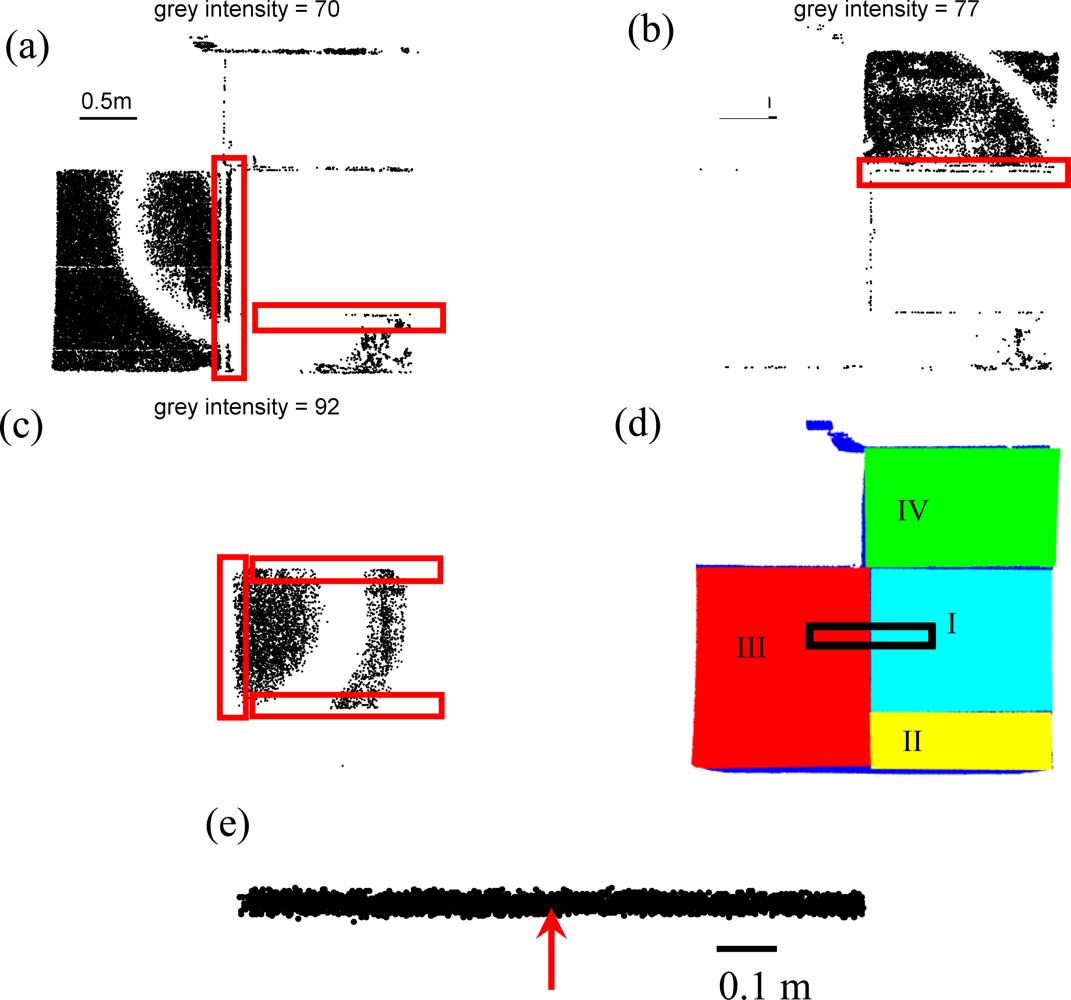

2. Methods and Material

2.1. ILRIS3D

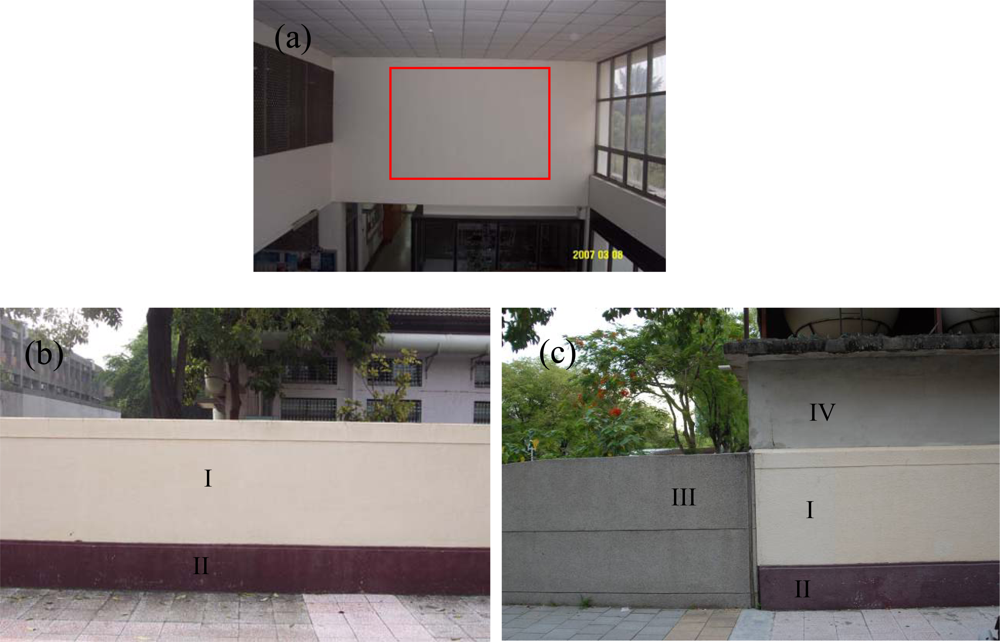

2.2. Experiments

3. Results and Discussion

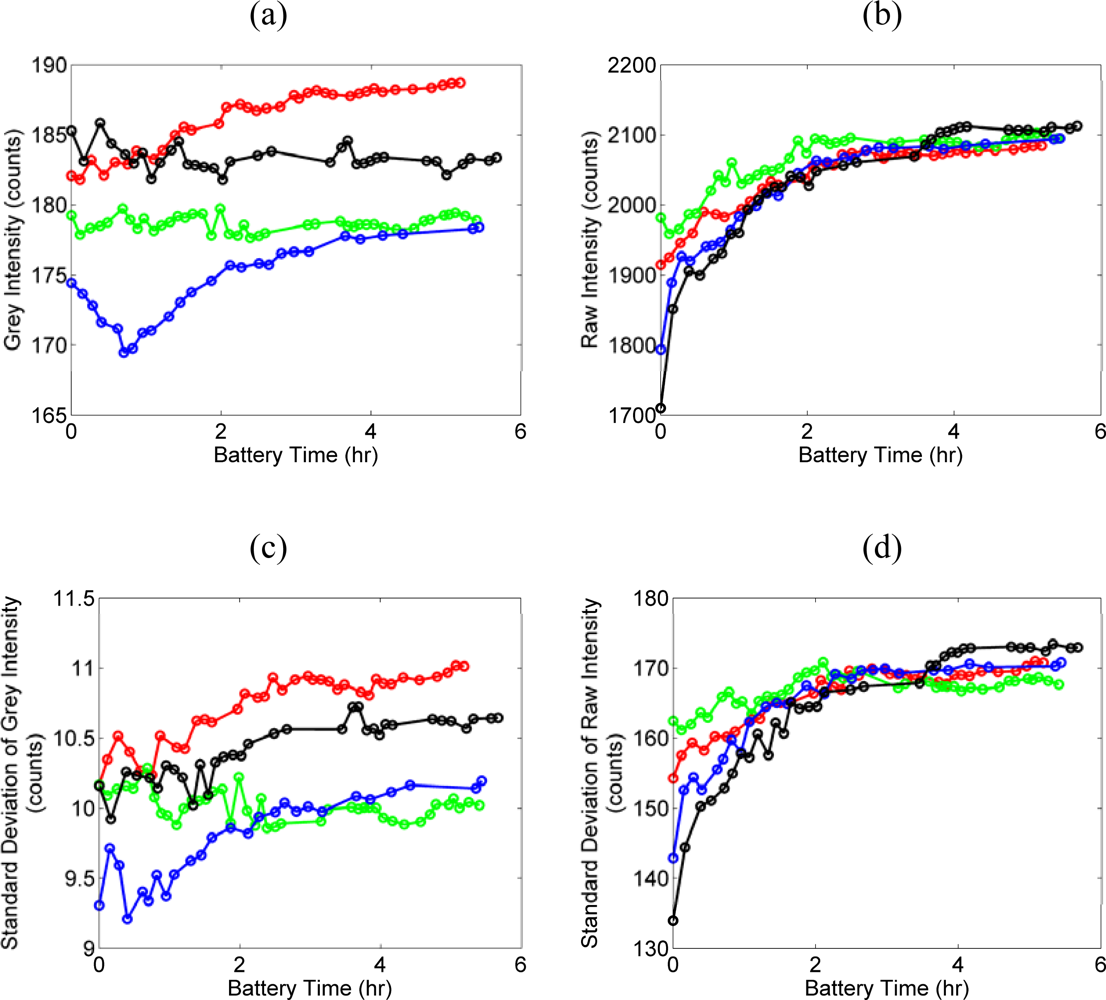

3.1. Temporal Intensity Variation

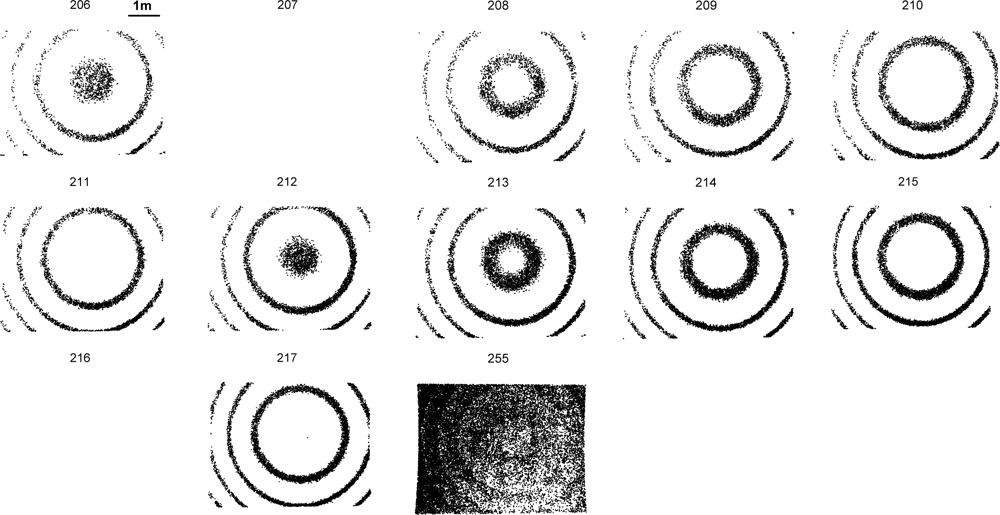

3.2. Intensity Image and Intensity Image Sequence

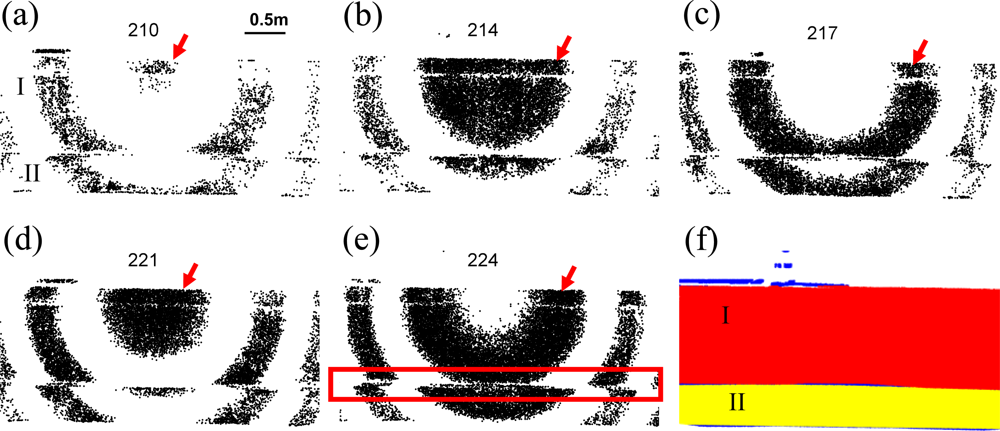

3.3. Planar Surface Scans

4. Conclusions

- Step 1. Construct gray intensity images. Gray intensity images that contain no points can be discarded if desired.

- Step 2. Construct an intensity image sequence by arranging the gray intensity images in sequence.

- Step 3. Identify the presence of surfaces by recognizing the visual cue of an evolving pattern of concentric circles with varying radii in the intensity image sequence.

- Step 4. Identify the presence of boundaries by recognizing the linear features that separate different groups of concentric circles with varying radii, or that delineate the boundary of such a group.

- Step 5. Manually group the point cloud into planar surfaces according to the concentric circular patterns identified in Step 3 and the linear features identified in Step 4.
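Steps 1 and 2 are mechanical enough to sketch in code. The Python below is a minimal sketch, not the authors' implementation, and it rests on several assumptions: each gray intensity image is taken to be a binary mask marking the points whose recorded intensity equals one count value; the points are assumed to be already indexed on a 2D (row, col) scan grid; the sequence is assumed to be ordered by increasing intensity count; and the 0 to 255 default range of counts is a guess. The `(row, col, intensity)` input format and both function names are hypothetical, not the ILRIS3D export format. Steps 3 to 5 rely on visual recognition and manual selection, so they are not coded here.

```python
from collections import defaultdict


def gray_intensity_images(points, n_rows, n_cols, levels=range(256), keep_empty=False):
    """Step 1 (sketch): build one binary image per intensity count.

    `points` is an iterable of hypothetical (row, col, intensity) tuples, where
    (row, col) is the point's cell on an assumed 2D scan grid. A pixel is 1 when
    a point in that cell has exactly that intensity count, else 0.
    `levels` lists the counts to consider (the 0-255 default is an assumption).
    """
    cells_by_level = defaultdict(list)
    for row, col, intensity in points:
        cells_by_level[intensity].append((row, col))

    images = {}
    for level in levels:
        cells = cells_by_level.get(level, [])
        if not cells and not keep_empty:
            continue  # Step 1: images containing no points may be discarded
        image = [[0] * n_cols for _ in range(n_rows)]
        for row, col in cells:
            image[row][col] = 1
        images[level] = image
    return images


def intensity_image_sequence(images):
    """Step 2 (sketch): order the images by increasing intensity count (assumed order)."""
    return [(level, images[level]) for level in sorted(images)]


if __name__ == "__main__":
    pts = [(0, 0, 82), (0, 1, 82), (1, 1, 60)]
    for level, image in intensity_image_sequence(gray_intensity_images(pts, 2, 2)):
        print(level, image)
    # 60 [[0, 0], [0, 1]]
    # 82 [[1, 1], [0, 0]]
```

Viewing the resulting list frame by frame plays the role of the intensity image sequence; whether empty frames are kept is controlled by `keep_empty`, mirroring the optional discard in Step 1.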
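One geometric reading of the concentric-circle cue in Step 3, offered as a sketch under stated assumptions rather than as the authors' model: suppose the recorded intensity of a return depends only on the range $r$ and the incidence angle $\theta$, with surface reflectance taken as uniform across the plane. For a planar surface at perpendicular distance $d$ from the scanner, let $F$ be the foot of the perpendicular and $\rho$ the distance from $F$ to a point on the plane. Then:

```latex
r = \sqrt{d^{2} + \rho^{2}}, \qquad
\cos\theta = \frac{d}{r} \;\Longrightarrow\; \rho = d\tan\theta

I = f(r, \theta)
  = f\!\left(\sqrt{d^{2} + \rho^{2}},\; \arctan\frac{\rho}{d}\right)
  = g(\rho)
```

Under these assumptions, all points sharing one intensity count lie on circles centred at $F$ within the plane, and stepping through the intensity image sequence changes the radius $\rho$. Because $d$ and $F$ differ between planes, each planar surface would produce its own family of circles, which fits the grouping used in Step 4. In an image rendered from the scanner's viewpoint rather than perpendicular to the plane, these circles may appear distorted.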

Acknowledgments


| Surface | I | II | III | IV |
|---|---|---|---|---|
| Gray intensity range (counts) | 82–99 | 59–80 | 66–75 | 73–85 |
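The ranges in the table overlap, so a single intensity count does not by itself identify a surface. The small Python check below uses only the four ranges listed and treats them as inclusive (an assumption); the function names are hypothetical. It makes the overlaps explicit, which is consistent with Step 5 relying on the spatial patterns from Steps 3 and 4 rather than on intensity thresholds alone.

```python
# Gray intensity ranges (counts) for surfaces I-IV, taken from the table.
# Treating the ranges as inclusive is an assumption.
SURFACE_RANGES = {"I": (82, 99), "II": (59, 80), "III": (66, 75), "IV": (73, 85)}


def candidate_surfaces(count, ranges=SURFACE_RANGES):
    """Return the surfaces whose listed range contains this intensity count."""
    return [name for name, (low, high) in ranges.items() if low <= count <= high]


def overlapping_ranges(ranges=SURFACE_RANGES):
    """Return every pair of surfaces whose ranges share counts, with the shared interval."""
    names = list(ranges)
    overlaps = []
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            low = max(ranges[a][0], ranges[b][0])
            high = min(ranges[a][1], ranges[b][1])
            if low <= high:
                overlaps.append((a, b, low, high))
    return overlaps


if __name__ == "__main__":
    print(candidate_surfaces(74))  # ['II', 'III', 'IV']
    print(overlapping_ranges())
    # [('I', 'IV', 82, 85), ('II', 'III', 66, 75), ('II', 'IV', 73, 80), ('III', 'IV', 73, 75)]
```

Only the pair I and II, and the pair I and III, have disjoint ranges; a count of 74, for example, is compatible with three of the four surfaces.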

© 2009 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).


Wang, C.-K.; Lu, Y.-Y. Potential of ILRIS3D Intensity Data for Planar Surfaces Segmentation. Sensors 2009, 9, 5770-5782. https://doi.org/10.3390/s90705770


Chicago/Turabian style: Wang, Chi-Kuei, and Yao-Yu Lu. 2009. "Potential of ILRIS3D Intensity Data for Planar Surfaces Segmentation." Sensors 9, no. 7: 5770-5782. https://doi.org/10.3390/s90705770
