ZYNQ-Based Visible Light Defogging System Design Realization

Abstract

1. Introduction

2. Defogging Algorithm Overview

2.1. Foggy Day Imaging Model and Dark Channel Defogging Algorithm

2.2. Limitations of Dark Channel Defogging

2.3. Causes of Color Distortion in the Dark Channel Algorithm

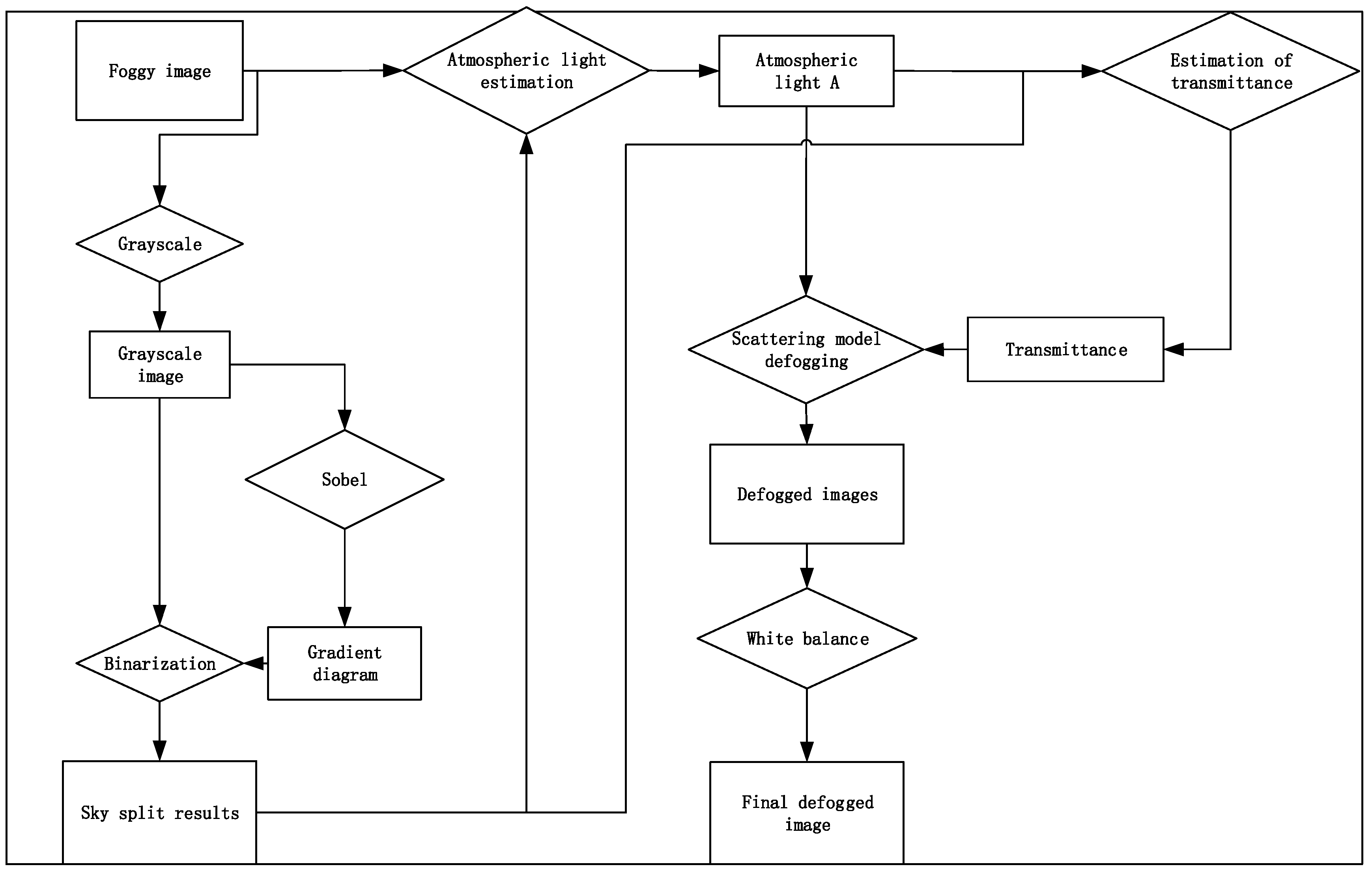

3. Algorithmic Principle

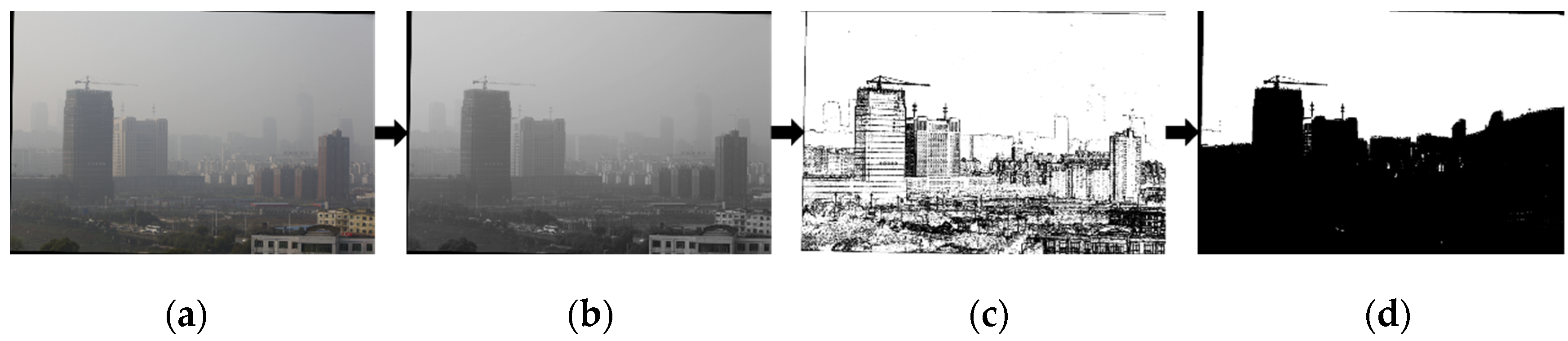

3.1. Sky Segmentation

- Convert the RGB image into a grayscale image.

- Compute the image gradient using the Sobel operator.

- Binarize the image on both the gradient and the gray value: a pixel is a sky candidate when its gradient is below the gradient threshold and its gray value is above the gray-level threshold.

- Mark the pixels that satisfy both thresholds as sky, then apply morphological filtering to the resulting mask to obtain the segmented sky region.
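The four steps above can be sketched in a few lines of pure Python. This is a minimal illustration under stated assumptions, not the paper's implementation: the threshold values `GRAD_T` and `GRAY_T`, the BT.601 grayscale weights, the `|Gx| + |Gy|` magnitude approximation, and the choice of a 3x3 opening as the morphological filter are all hypothetical, since the source does not specify them.

```python
# Illustrative thresholds only; the source does not give their values.
GRAD_T = 40    # hypothetical gradient threshold
GRAY_T = 150   # hypothetical gray-level threshold

def to_gray(rgb):
    """Step 1: RGB -> grayscale (BT.601 integer weights, an assumption)."""
    return [[(299 * r + 587 * g + 114 * b) // 1000 for r, g, b in row] for row in rgb]

def _at(img, y, x):
    """Read a pixel with replicated (clamped) borders."""
    h, w = len(img), len(img[0])
    return img[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

def sobel(gray):
    """Step 2: Sobel gradient magnitude, approximated as |Gx| + |Gy|."""
    h, w = len(gray), len(gray[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            gx = (_at(gray, y - 1, x + 1) + 2 * _at(gray, y, x + 1) + _at(gray, y + 1, x + 1)
                  - _at(gray, y - 1, x - 1) - 2 * _at(gray, y, x - 1) - _at(gray, y + 1, x - 1))
            gy = (_at(gray, y + 1, x - 1) + 2 * _at(gray, y + 1, x) + _at(gray, y + 1, x + 1)
                  - _at(gray, y - 1, x - 1) - 2 * _at(gray, y - 1, x) - _at(gray, y - 1, x + 1))
            out[y][x] = abs(gx) + abs(gy)
    return out

def binarize(gray, grad):
    """Step 3: sky candidate = smooth (low gradient) AND bright (high gray value)."""
    return [[1 if g < GRAD_T and v > GRAY_T else 0 for v, g in zip(vrow, grow)]
            for vrow, grow in zip(gray, grad)]

def _morph(mask, op):
    """Apply min (erosion) or max (dilation) over a 3x3 window."""
    h, w = len(mask), len(mask[0])
    return [[op(_at(mask, y + dy, x + dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1))
             for x in range(w)] for y in range(h)]

def open3x3(mask):
    """Step 4: morphological opening (erode, then dilate) removes isolated specks."""
    return _morph(_morph(mask, min), max)

# Toy scene: three bright sky rows over three dark ground rows.
SKY, GROUND = (200, 210, 230), (30, 40, 20)
image = [[SKY] * 6 for _ in range(3)] + [[GROUND] * 6 for _ in range(3)]
image[4][3] = SKY  # a lone bright pixel in the ground region

gray = to_gray(image)
raw = binarize(gray, sobel(gray))   # mask before morphological filtering
sky = open3x3(raw)                  # mask after morphological filtering
for row in sky:
    print(row)
```

In this toy scene the lone bright pixel passes both thresholds, because the Sobel response at the center of a symmetric spike is zero, and it is the opening step that discards it. The sky row touching the horizon is rejected by the gradient test, which is the intended effect of combining the two criteria.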

3.2. Parameter Corrections

3.2.1. Atmospheric Light Estimation

3.2.2. Estimation of Transmittance

3.2.3. Color Correction

4. ZYNQ-Based Hardware Implementation

4.1. System Design Architecture

4.2. FPGA Design of Image Defogging Module

4.2.1. Design of Sky Area Segmentation Module and Calculation of Atmospheric Light Values

4.2.2. Transmittance Module Design

4.2.3. Image Defogging Module

4.2.4. Color Recovery Module

5. Hardware Optimization

5.1. Image Window Filter Design

5.2. Pipeline Computing Architecture Design

6. Experimental Results and Analysis

6.1. Experimental Platforms

6.2. Experimental Results and Analysis

6.2.1. Evaluation Indicators

1. Information entropy

2. Peak Signal to Noise Ratio (PSNR) [16]

3. Average Gradient

4. Fog Aware Density Evaluator (FADE)

5. Structural Similarity Index Measure (SSIM)
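As a rough guide to what the first three indicators measure, the sketch below computes them for small grayscale images stored as nested lists. It uses the standard textbook definitions of information entropy and PSNR; the average gradient has several variants in the literature, and the forward-difference form used here is an assumption rather than the paper's stated formula. FADE and SSIM are omitted because they need considerably more machinery.

```python
import math
from collections import Counter

def entropy(gray):
    """Shannon entropy (bits) of the gray-level histogram: -sum(p * log2(p))."""
    pixels = [v for row in gray for v in row]
    n = len(pixels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(pixels).values())

def psnr(ref, test, peak=255):
    """Peak signal-to-noise ratio in dB: 10 * log10(peak^2 / MSE)."""
    sq = [(a - b) ** 2 for ra, rb in zip(ref, test) for a, b in zip(ra, rb)]
    mse = sum(sq) / len(sq)
    return math.inf if mse == 0 else 10 * math.log10(peak ** 2 / mse)

def average_gradient(gray):
    """Mean of sqrt((dx^2 + dy^2) / 2) over forward differences (assumed variant)."""
    h, w = len(gray), len(gray[0])
    total = 0.0
    for y in range(h - 1):
        for x in range(w - 1):
            dx = gray[y][x + 1] - gray[y][x]
            dy = gray[y + 1][x] - gray[y][x]
            total += math.sqrt((dx * dx + dy * dy) / 2)
    return total / ((h - 1) * (w - 1))

# Tiny usage example on 2x2 images.
two_level = [[0, 255], [0, 255]]
print(entropy(two_level))
print(psnr(two_level, two_level))
print(average_gradient([[0, 2], [2, 4]]))
```

Higher entropy and average gradient indicate more detail in the restored image, while PSNR measures pixel-level agreement with a reference image.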

6.2.2. Qualitative Experiment

6.2.3. Quantitative Experiment

6.3. Practical Application Experiment

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Zhong, H.; Ma, X.; Zhang, J.; Dong, Y.; Liu, L. Image defogging algorithm based on superpixel dark channel and automatic color scale optimization. J. Optoelectron. Laser 2023, 34, 1059–1067.

2. Wang, J.; Wang, S.; Hou, G. Design of Zynq-based embedded digital image processing system. J. Lab. Sci. 2020, 23, 70–73+79.

3. Nayar, S.K.; Narasimhan, S.G. Vision in bad weather. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; Volume 2, pp. 820–827.

4. Narasimhan, S.G.; Nayar, S.K. Chromatic framework for vision in bad weather. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2000 (Cat. No. PR00662), Hilton Head, SC, USA, 15 June 2000; Volume 1, pp. 598–605.

5. He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2341–2353.

6. Huang, H.; Hu, K.; Song, J.; Wang, H.; Ru, F.; Guo, L. A quadratic optimization method for solving transmittance of haze line. J. Xi’an Jiaotong Univ. 2021, 55, 130–138.

7. Kim, J.; Jang, W.; Sim, J.; Kim, C. Optimized contrast enhancement for real-time image and video dehazing. J. Vis. Commun. Image Represent. 2013, 24, 410–425.

8. Levin, A.; Lischinski, D.; Weiss, Y. A Closed-Form Solution to Natural Image Matting. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 228–242.

9. He, K.; Sun, J.; Tang, X. Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1397–1409.

10. Chen, L.; Guo, B.; Bi, J.; Zhu, J. Algorithm of single image fog removal based on joint bilateral filter. J. Beijing Univ. Posts Telecommun. 2012, 35, 19.

11. Lu, C.; Yao, X.; Xie, Q.; Zhang, R. Design and realization of real-time defogging system based on ZYNQ. Comput. Eng. Des. 2023, 44, 314–321.

12. Zhang, C.; Bi, S.; Jiang, T.; Wang, J.; Mao, W. Implementation of ZYNQ for image defogging. In Proceedings of the 2020 IEEE 9th Joint International Information Technology and Artificial Intelligence Conference (ITAIC), Chongqing, China, 11–13 December 2020; pp. 1971–1977.

13. Zhang, Z.; Zhou, W. Study on the improvement of image defogging algorithm based on dark primary color prior. Electron. Technol. 2018, 47, 52–56.

14. Chen, G.; Zhang, L. Research and implementation of white balance algorithm for night images. Microelectron. Comput. 2018, 35, 33–36+41.

15. Yin, Z.; Xu, G.; Zhang, F.; Xu, F.; Li, X. Design and implementation of convolutional neural network cells for Zynq platform. J. Chin. Comput. Syst. 2022, 43, 231–235.

16. Huang, W.; Chen, R.; Zhang, J. Improvement and realization of objective measurement method for digital video image quality. J. Beijing Univ. Posts Telecommun. 2005, 28, 87–90.

17. Choi, L.K.; You, J.; Bovik, A.C. Referenceless Prediction of Perceptual Fog Density and Perceptual Image Defogging. IEEE Trans. Image Process. 2015, 24, 3888–3901.

18. Zhao, S.; Zhang, L.; Huang, S.; Shen, Y.; Zhao, S. Dehazing Evaluation: Real-world Benchmark Datasets, Criteria and Baselines. IEEE Trans. Image Process. 2020, 29, 6947–6962.

19. Zhu, Q.; Mai, J.; Shao, L. A fast single image haze removal algorithm using color attenuation prior. IEEE Trans. Image Process. 2015, 24, 3522–3533.

| Submodule Name | Functional Description | Input and Output Description |
|---|---|---|
| Sky Split Module | Segment the sky region | Input: RGB image. Output: segmented binary data |
| Atmospheric Light Estimation Module | Calculate atmospheric light | Input: RGB image, segmented binary data. Output: atmospheric light estimate |
| Transmittance Estimation Module | Calculate transmittance | Input: RGB image, atmospheric light value, segmented binary data. Output: transmittance |
| Image Defogging Module | Defog the image | Input: RGB image, transmittance, atmospheric light value. Output: defogged image |
| Color Recovery Module | White balance and color recovery | Input: defogged image. Output: color-restored image |
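The data flow between the five submodules can be sketched as a chain of functions whose arguments and return values mirror the input and output columns of the table. Only the wiring comes from the table. Everything inside each function is an assumption made for illustration: the brightness-only `sky_split` placeholder, the mean-of-sky-pixels atmospheric light, the per-pixel dark channel transmittance with a hypothetical sky floor `t_sky`, the constants `OMEGA` and `T_MIN`, and gray-world white balance. The defogging step inverts the standard atmospheric scattering model I = J·t + A·(1 − t).

```python
OMEGA = 0.95   # haze retention factor (assumed value)
T_MIN = 0.1    # lower bound on transmittance to avoid division blow-up (assumed)

def sky_split(image, gray_t=180):
    """Placeholder segmentation: bright pixels count as sky (1), others as 0."""
    return [[1 if sum(p) / 3 > gray_t else 0 for p in row] for row in image]

def atmospheric_light(image, mask):
    """Assumed estimator: per-channel mean over sky pixels, or white if none."""
    sky = [p for row, mrow in zip(image, mask) for p, m in zip(row, mrow) if m]
    if not sky:
        return (255.0, 255.0, 255.0)
    return tuple(sum(p[c] for p in sky) / len(sky) for c in range(3))

def transmittance(image, A, mask, t_sky=0.6):
    """Per-pixel dark channel estimate t = 1 - OMEGA * min_c(I_c / A_c).
    Sky pixels are floored at t_sky (hypothetical) to limit over-correction."""
    out = []
    for row, mrow in zip(image, mask):
        trow = []
        for p, m in zip(row, mrow):
            t = 1.0 - OMEGA * min(p[c] / A[c] for c in range(3))
            trow.append(max(t, t_sky) if m else max(t, T_MIN))
        out.append(trow)
    return out

def defog(image, t, A):
    """Invert I = J*t + A*(1 - t) for J, clamped to [0, 255]."""
    return [[tuple(min(255.0, max(0.0, (p[c] - A[c]) / max(tv, T_MIN) + A[c]))
                   for c in range(3))
             for p, tv in zip(row, trow)] for row, trow in zip(image, t)]

def color_recovery(image):
    """Gray-world white balance: scale each channel toward the global mean."""
    n = sum(len(row) for row in image)
    means = [sum(p[c] for row in image for p in row) / n for c in range(3)]
    gray = sum(means) / 3
    gains = [gray / m if m > 0 else 1.0 for m in means]
    return [[tuple(min(255.0, p[c] * gains[c]) for c in range(3)) for p in row]
            for row in image]

def pipeline(image):
    """Chain the five submodules in the order given by the table."""
    mask = sky_split(image)
    A = atmospheric_light(image, mask)
    t = transmittance(image, A, mask)
    return color_recovery(defog(image, t, A))

# Toy 2x2 frame: a bright sky row over a hazy ground row.
foggy = [[(210, 215, 225), (205, 212, 222)],
         [(150, 125, 110), (140, 150, 130)]]
restored = pipeline(foggy)
```

Because each stage depends only on the outputs of earlier stages, the same ordering maps onto a streaming hardware design in which the mask and atmospheric light are available before the per-pixel defogging arithmetic runs.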

| Location | Algorithm | IE | AG | PSNR (dB) | FADE | SSIM |
|---|---|---|---|---|---|---|
| Lanzhou | original | 5.8723 | 1.579 | / | 5.3041 | / |
| | He | 6.8910 | 3.7979 | 14.2081 | 1.7291 | 0.6850 |
| | Zhu | 6.7022 | 2.8417 | 19.2824 | 0.6680 | 0.7031 |
| | Retinex | 7.0603 | 3.5633 | 20.9224 | 1.8821 | 0.4483 |
| | proposed | 7.3431 | 3.1376 | 16.5864 | 0.5786 | 0.6951 |
| Chengdu | original | 6.7493 | 2.0676 | / | 2.4586 | / |
| | He | 6.7301 | 2.5304 | 15.0803 | 1.1993 | 0.6962 |
| | Zhu | 6.9432 | 2.628 | 20.3080 | 1.3677 | 0.8990 |
| | Retinex | 7.7157 | 7.295 | 12.1497 | 0.8875 | 0.6555 |
| | proposed | 7.3467 | 3.5013 | 18.3036 | 0.7977 | 0.7682 |
| Nanchang | original | 7.2685 | 1.995 | / | 3.6027 | / |
| | He | 7.1825 | 2.4205 | 12.2666 | 1.4509 | 0.6741 |
| | Zhu | 7.5062 | 2.6928 | 14.4561 | 1.1892 | 0.7542 |
| | Retinex | 7.7210 | 4.0853 | 19.0270 | 1.6263 | 0.8277 |
| | proposed | 7.7710 | 3.1436 | 15.7339 | 1.2038 | 0.7521 |
| Nanjing | original | 7.3903 | 5.9233 | / | 1.2922 | / |
| | He | 7.3074 | 5.2014 | 13.6277 | 0.7078 | 0.6498 |
| | Zhu | 7.4411 | 5.9445 | 16.1896 | 0.6680 | 0.7823 |
| | Retinex | 7.7474 | 10.9883 | 17.9105 | 0.7023 | 0.7894 |
| | proposed | 7.6409 | 9.5108 | 18.9955 | 0.5786 | 0.7255 |
| Hangzhou | original | 7.1206 | 2.3443 | / | 2.2199 | / |
| | He | 6.7988 | 2.4548 | 14.3508 | 1.0598 | 0.6550 |
| | Zhu | 7.0579 | 2.6500 | 17.2248 | 0.9674 | 0.7702 |
| | Retinex | 7.8312 | 6.1488 | 15.3961 | 0.9038 | 0.7451 |
| | proposed | 7.5173 | 3.4645 | 17.9642 | 0.7954 | 0.7597 |
| Beijing | original | 7.1474 | 2.0687 | / | 2.9377 | / |
| | He | 6.9726 | 2.7313 | 13.8229 | 1.2686 | 0.6738 |
| | Zhu | 7.2241 | 2.5025 | 16.8757 | 0.9622 | 0.7371 |
| | Retinex | 7.6815 | 4.1496 | 18.4141 | 1.4905 | 0.8408 |
| | proposed | 7.5604 | 3.0022 | 17.4263 | 1.1061 | 0.7962 |
| Changsha | original | 6.7011 | 1.2792 | / | 4.9663 | / |
| | He | 7.0550 | 2.5069 | 13.2670 | 1.8195 | 0.7730 |
| | Zhu | 7.2705 | 2.0447 | 17.3320 | 2.4672 | 0.9073 |
| | Retinex | 7.3400 | 2.5568 | 20.0884 | 2.4456 | 0.8342 |
| | proposed | 7.5243 | 1.7719 | 15.4866 | 1.9376 | 0.7731 |
| Tianjin | original | 6.5346 | 1.5423 | / | 3.6766 | / |
| | He | 7.0202 | 3.0832 | 15.3363 | 1.2330 | 0.6743 |
| | Zhu | 6.9932 | 2.3151 | 19.8832 | 1.9124 | 0.8699 |
| | Retinex | 7.0140 | 2.8915 | 24.4179 | 1.9765 | 0.8564 |
| | proposed | 7.3762 | 2.3734 | 19.7190 | 1.3998 | 0.7178 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, B.; Wei, Q.; Ding, K. ZYNQ-Based Visible Light Defogging System Design Realization. Sensors 2024, 24, 2276. https://doi.org/10.3390/s24072276
