4.1. Metrics

To evaluate the quality of the demosaicing results, we used three quantitative metrics: the peak signal-to-noise ratio (PSNR), the structural similarity index measure (SSIM), and the spectral angle mapper (SAM).

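The PSNR, which expresses the ratio between the maximum possible signal power and the mean squared error between the reference image and the estimated image on a logarithmic (decibel) scale, was calculated as follows:

$$\mathrm{PSNR} = 10\log_{10}\left(\frac{\mathrm{MAX}^2}{\mathrm{MSE}}\right), \qquad \mathrm{MSE} = \frac{1}{WH}\sum_{x=1}^{W}\sum_{y=1}^{H}\left(I(x,y) - \hat{I}(x,y)\right)^2,$$

where $\mathrm{MAX}$ represents the maximum value of the image, $\mathrm{MSE}$ represents the mean squared error between the reference image $I$ and the estimated image $\hat{I}$, and $W$ and $H$ represent the width and height of the image, respectively.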
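As a minimal sketch of the PSNR computation, the following NumPy function assumes $\mathrm{MAX} = 1$, matching the [0, 1] pixel range of the synthetic data, and averages the squared error over every pixel and every band when a multispectral cube is passed. Whether the evaluation instead computes PSNR per band and then averages is not stated in this section, so that choice is an assumption.

```python
import numpy as np

def psnr(reference, estimate, max_value=1.0):
    """PSNR in dB between a reference image and an estimate.

    Assumption: max_value = 1.0 because pixel values are scaled to [0, 1];
    the MSE is averaged over all pixels (and all bands for a cube).
    """
    ref = np.asarray(reference, dtype=np.float64)
    est = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((ref - est) ** 2)  # (1 / WH) * sum of squared differences
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))
```

For example, a uniform error of 0.1 gives an MSE of 0.01 and therefore a PSNR of 20 dB.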

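The SSIM was used to evaluate the similarity between the reference image $I$ and the estimated image $\hat{I}$. It was calculated using the following equation:

$$\mathrm{SSIM}(I,\hat{I}) = \frac{\left(2\mu_I\mu_{\hat{I}} + C_1\right)\left(2\sigma_{I\hat{I}} + C_2\right)}{\left(\mu_I^2 + \mu_{\hat{I}}^2 + C_1\right)\left(\sigma_I^2 + \sigma_{\hat{I}}^2 + C_2\right)},$$

where $\mu_I$ and $\mu_{\hat{I}}$ represent the means of the image vectors $I$ and $\hat{I}$, respectively. The standard deviations of $I$ and $\hat{I}$ are represented by $\sigma_I$ and $\sigma_{\hat{I}}$, respectively. The covariance between $I$ and $\hat{I}$ is represented by $\sigma_{I\hat{I}}$, and $C_1$ and $C_2$ are constants used to prevent the denominator from approaching zero.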
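The SSIM equation can be sketched directly in NumPy by treating each image as a single vector, exactly as the formula is written. Two points are assumptions: the constants follow the common choice $C_1 = (0.01\,\mathrm{MAX})^2$ and $C_2 = (0.03\,\mathrm{MAX})^2$, and the statistics are computed globally. Widely used SSIM implementations instead evaluate the formula in local windows and average the resulting map, and this section does not state which variant was used.

```python
import numpy as np

def ssim_global(reference, estimate, max_value=1.0, k1=0.01, k2=0.03):
    """SSIM evaluated once over whole images (global statistics).

    Assumption: C1 = (k1 * max_value)**2 and C2 = (k2 * max_value)**2 with the
    common defaults k1 = 0.01, k2 = 0.03; windowed SSIM is not reproduced here.
    """
    ref = np.asarray(reference, dtype=np.float64).ravel()
    est = np.asarray(estimate, dtype=np.float64).ravel()
    c1 = (k1 * max_value) ** 2
    c2 = (k2 * max_value) ** 2
    mu_r, mu_e = ref.mean(), est.mean()              # means
    var_r, var_e = ref.var(), est.var()              # squared standard deviations
    cov = np.mean((ref - mu_r) * (est - mu_e))       # covariance
    num = (2 * mu_r * mu_e + c1) * (2 * cov + c2)
    den = (mu_r ** 2 + mu_e ** 2 + c1) * (var_r + var_e + c2)
    return float(num / den)
```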

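The SAM is commonly used to evaluate multispectral images. It is the average, over all pixels, of the angle between the reference and estimated spectral vectors, and is calculated using the following formula:

$$\mathrm{SAM} = \frac{1}{WH}\sum_{x=1}^{W}\sum_{y=1}^{H}\cos^{-1}\left(\frac{\left\langle \mathbf{s}(x,y), \hat{\mathbf{s}}(x,y)\right\rangle}{\left\lVert \mathbf{s}(x,y)\right\rVert_2\,\left\lVert \hat{\mathbf{s}}(x,y)\right\rVert_2}\right),$$

where $\mathbf{s}(x,y)$ and $\hat{\mathbf{s}}(x,y)$ denote the spectral vectors of the reference image $I$ and the estimated image $\hat{I}$ at pixel $(x,y)$, respectively.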
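The SAM formula can be sketched as follows, assuming cubes stored as H × W × B arrays with the band axis last. The result is in radians; whether the reported values are in radians or degrees is not stated here. The small `eps` and the clipping to [−1, 1] are numerical safeguards added for illustration, not part of the formula.

```python
import numpy as np

def sam(reference, estimate, eps=1e-12):
    """Mean spectral angle (radians) between two H x W x B cubes.

    Assumption: the last axis holds the spectral vector of each pixel.
    eps guards against zero-norm spectra; clipping keeps arccos in its domain.
    """
    ref = np.asarray(reference, dtype=np.float64)
    est = np.asarray(estimate, dtype=np.float64)
    dot = np.sum(ref * est, axis=-1)                                 # <s, s_hat>
    norms = np.linalg.norm(ref, axis=-1) * np.linalg.norm(est, axis=-1)
    cos = np.clip(dot / (norms + eps), -1.0, 1.0)
    return float(np.mean(np.arccos(cos)))                            # average over W*H pixels
```

Because the angle ignores vector length, scaling a spectrum by a constant leaves the SAM unchanged, which is why it is used alongside PSNR and SSIM.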

For the PSNR and SSIM, larger values indicate better performance, whereas for the SAM, smaller values indicate better performance.

4.2. Dataset and Implementation Details

In our experiments, we compared the proposed method to previously reported methods using the TokyoTech-31band (TT31) [

23] and TokyoTech-59band (TT59) [

24] datasets. The TT31 dataset included 35 multispectral images, each containing 31 spectral bands ranging from 420 to 720 nm. The TT59 dataset included 16 multispectral images with 59 spectral bands ranging from 420 to 1000 nm, with the bands spaced 10 nm apart. We excluded the popular CAVE [

25] dataset from our experiments because it was used to train conventional deep-learning methods. To generate the synthetic dataset, we used IMEC’s “snapshot mosaic” multispectral camera sensor, i.e., XIMEA’s xiSpec [

26]. We utilized the publicly available normalized transmittance of this camera [

15] and the camera had the central spectral band

to be an MSFA of

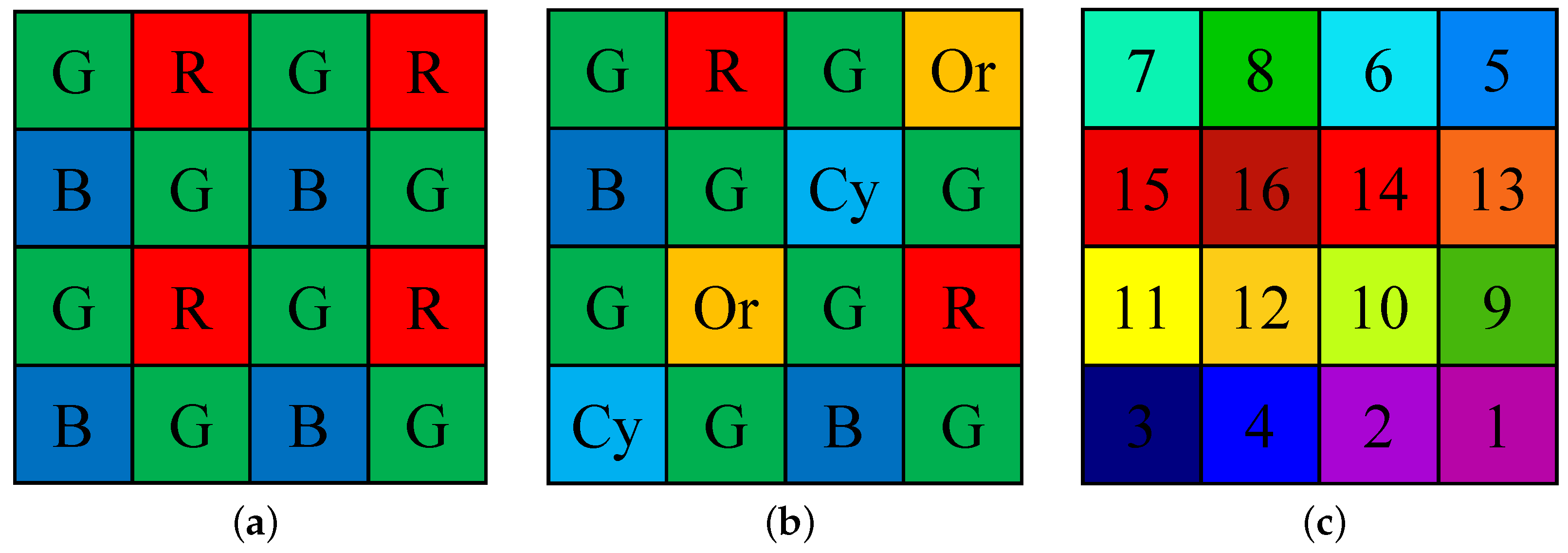

arrays, consisting of 469, 480, 489, 499, 513, 524, 537, 551, 552, 566, 580, 590, 602, 613, 621, and 633 nm. The arrays were arranged in ascending order in

Figure 1c. We used the normalized transmittance and D65 illuminant to obtain multispectral images for each band, in accordance with (

1). The obtained images were then sampled using (

2) to generate the raw MSFA images.
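Equations (1) and (2) are not reproduced in this section, so the following is only a sketch under stated assumptions: Eq. (1) is taken to be a transmittance- and illuminant-weighted sum of scene reflectance over wavelength, normalized so that a perfect white reflector maps to 1, and Eq. (2) is taken to keep one band per pixel according to the periodic 4 × 4 MSFA. The names `render_bands`, `msfa_sample`, and `PATTERN_4X4` are hypothetical, and `PATTERN_4X4` (ascending band order, row by row) is an illustrative stand-in for the layout in Figure 1c. Real transmittance and D65 samples would have to be loaded from the published data [15].

```python
import numpy as np

def render_bands(reflectance, transmittance, illuminant):
    """Assumed discretized form of Eq. (1).

    reflectance:   H x W x L cube sampled at L wavelengths
    transmittance: B x L normalized filter transmittances (B = 16 here)
    illuminant:    length-L spectral power distribution (e.g., D65)
    Returns an H x W x B cube, one image per MSFA band.
    """
    r = np.asarray(reflectance, dtype=np.float64)
    weights = np.asarray(transmittance, float) * np.asarray(illuminant, float)[None, :]
    bands = np.tensordot(r, weights, axes=([2], [1]))  # sum over wavelength
    # Assumption: normalize so a perfect white reflector maps to 1 in every band.
    return bands / weights.sum(axis=1)[None, None, :]

def msfa_sample(cube, pattern):
    """Assumed form of Eq. (2): keep one band per pixel, selected by a
    periodic pattern of 0-based band indices."""
    cube = np.asarray(cube, dtype=np.float64)
    pattern = np.asarray(pattern)
    h, w, _ = cube.shape
    ph, pw = pattern.shape
    rows, cols = np.mgrid[0:h, 0:w]
    band_idx = pattern[rows % ph, cols % pw]
    return np.take_along_axis(cube, band_idx[..., None], axis=2)[..., 0]

# Hypothetical stand-in for Figure 1c: bands 0..15 in ascending order, row by row.
PATTERN_4X4 = np.arange(16).reshape(4, 4)
```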

The pixel values of the synthetic datasets ranged from 0 to 1. The window size was set to and for horizontal guided filtering, and for vertical guided filtering, and and for the final guided upsampling. We also experimented with for , which determined the change in each pixel, and for , which determined the change in the entire image.
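The window sizes and regularization values referred to above are not recoverable from this text. To illustrate what horizontal and vertical guided filtering can mean, the sketch below implements the standard guided filter of He et al. with its local window restricted to a single row (`axis=1`) or column (`axis=0`). This is an assumption about the building block, not the authors' IGFPPI implementation; `r` (window size 2r + 1) and `eps` are hypothetical parameter names.

```python
import numpy as np

def box_mean_1d(x, r, axis):
    """Mean over a (2r+1)-sample window along one axis, with edge replication."""
    x = np.moveaxis(np.asarray(x, dtype=np.float64), axis, -1)
    pad = [(0, 0)] * (x.ndim - 1) + [(r, r)]
    c = np.cumsum(np.pad(x, pad, mode="edge"), axis=-1)
    c = np.concatenate([np.zeros(c.shape[:-1] + (1,)), c], axis=-1)
    out = (c[..., 2 * r + 1:] - c[..., : -(2 * r + 1)]) / (2 * r + 1)
    return np.moveaxis(out, -1, axis)

def guided_filter_1d(guide, src, r, eps, axis):
    """Guided filter whose window spans only one axis of a 2-D image.

    axis=1 gives horizontal filtering and axis=0 vertical filtering (assumption).
    eps regularizes the per-window linear coefficient a.
    """
    guide = np.asarray(guide, dtype=np.float64)
    src = np.asarray(src, dtype=np.float64)
    mean = lambda a: box_mean_1d(a, r, axis)
    m_i, m_p = mean(guide), mean(src)
    var_i = mean(guide * guide) - m_i * m_i
    cov_ip = mean(guide * src) - m_i * m_p
    a = cov_ip / (var_i + eps)   # local linear model: src ~ a * guide + b
    b = m_p - a * m_i
    return mean(a) * guide + mean(b)
```

With a very small `eps`, a source that is an exact linear function of the guide is reproduced almost unchanged; increasing `eps` pulls `a` toward zero and smooths the output along the chosen axis.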

4.3. Results for the Synthetic Dataset and Real-World Images

For a quantitative evaluation of the proposed method, we compared it with six other methods. The first conventional method (CM1) was the BTES method [13], which prioritizes the interpolation of the empty center pixels in the spatial domain of each channel. Interpolation is performed using a weighted sum, in which the weights are the reciprocals of the differences between neighboring pixels. The second conventional method (CM2) was the spectral-difference (SD) method, which employs weighted bilinear filtering in the spectral-difference domain [27]. The third conventional method (CM3) was the iterative-spectral-difference (ItSD) method, which also uses weighted bilinear filtering in the spectral-difference domain [28] but applies the CM2 method repeatedly for each channel. The fourth conventional method (CM4) was the MLDI method [14], which is similar to BTES except that the interpolation is performed in the spectral domain instead of the spatial domain. The fifth conventional method (CM5) was the PPID method [15], which estimates the PPI as a guide image and performs interpolation in the spectral-difference domain based on the PPI. The sixth conventional method (CM6) was the mosaic-convolution-attention-network (MCAN) method [16], an end-to-end demosaicing network that learns to erase the mosaic pattern. This deep-learning method was implemented using the code published online by the author.
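The reciprocal-difference weighting used by CM1 can be illustrated with a small sketch. How the differences are measured (which pixel pairs and directions) is defined in [13] and not reproduced here, so the function simply takes one difference value per neighbor; `eps` is an assumed guard against division by zero, and the function name is hypothetical.

```python
import numpy as np

def reciprocal_weighted_estimate(neighbor_values, neighbor_differences, eps=1e-6):
    """Estimate a missing pixel as a normalized weighted sum of its neighbors.

    Each weight is the reciprocal of that neighbor's local difference, so
    neighbors lying across an edge (large difference) contribute less.
    Assumption: eps avoids division by zero; [13] may regularize differently.
    """
    v = np.asarray(neighbor_values, dtype=np.float64)
    d = np.abs(np.asarray(neighbor_differences, dtype=np.float64))
    w = 1.0 / (d + eps)
    return float(np.sum(w * v) / np.sum(w))
```

When all differences are equal, the estimate reduces to the plain average of the neighbors; when one neighbor lies in a flat region and another lies across an edge, the flat-region neighbor dominates.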

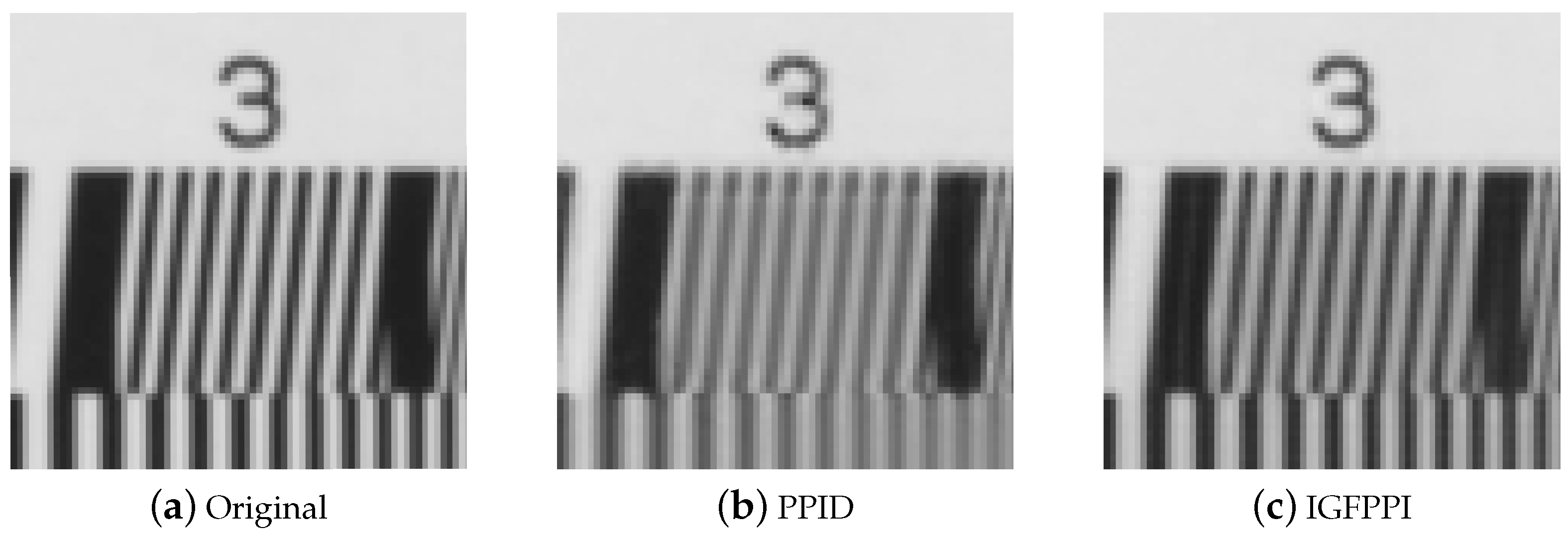

Figure 4 shows the PPIs estimated for use as guide images. Figure 4a shows the average of the original multispectral cube, Figure 4b shows the PPI estimated by PPID [15], and Figure 4c shows the PPI estimated by the proposed IGFPPI. The PPI estimated by PPID is blurred and has low contrast, whereas the proposed IGFPPI restores high-frequency components better than PPID and yields contrast closer to that of the original.
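The reference in Figure 4a is described as the average of the original multispectral cube. A minimal sketch of that reference PPI, assuming an H × W × B array with the band axis last and an unweighted mean over bands, is:

```python
import numpy as np

def reference_ppi(cube):
    """Reference PPI: per-pixel mean over the band axis of an H x W x B cube.

    Assumption: an unweighted average over all bands, as described for Figure 4a.
    """
    return np.asarray(cube, dtype=np.float64).mean(axis=-1)
```

An estimated PPI (from PPID or IGFPPI) can then be compared with this reference using the same metrics as the demosaiced cubes, for example PSNR on the two 2-D images.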

The PSNR, SSIM, and SAM results for TT31 are presented in Table 1, Table 2, and Table 3, respectively. In the tables, a dark-gray background indicates the best score and a light-gray background indicates the second-best score. Of the 35 images in the TT31 dataset, the proposed method achieved the best PSNR for 19 images and the second-best PSNR for 16 images, the best SSIM for 20 images and the second-best SSIM for 15 images, and the best SAM for 18 images and the second-best SAM for 17 images. The average PSNR, SSIM, and SAM values for the TT31 dataset indicate that the proposed method outperformed the other methods.
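The best and second-best tallies reported for the tables can be reproduced from a per-image score matrix. The sketch below is hypothetical: it assumes one row per image and one column per method, flips the sign for SAM because smaller is better, and breaks ties by column order, since tie handling is not described in the text.

```python
import numpy as np

def count_ranks(scores, method_idx, higher_is_better=True):
    """Count the images on which a method ranks first and second.

    scores: n_images x n_methods array of one metric (e.g., PSNR).
    Assumption: ties are broken by column order (stable sort).
    """
    s = np.asarray(scores, dtype=np.float64)
    if not higher_is_better:
        s = -s  # for SAM, a smaller angle is better
    order = np.argsort(-s, axis=1, kind="stable")  # best method first in each row
    best = int(np.sum(order[:, 0] == method_idx))
    second = int(np.sum(order[:, 1] == method_idx))
    return best, second
```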

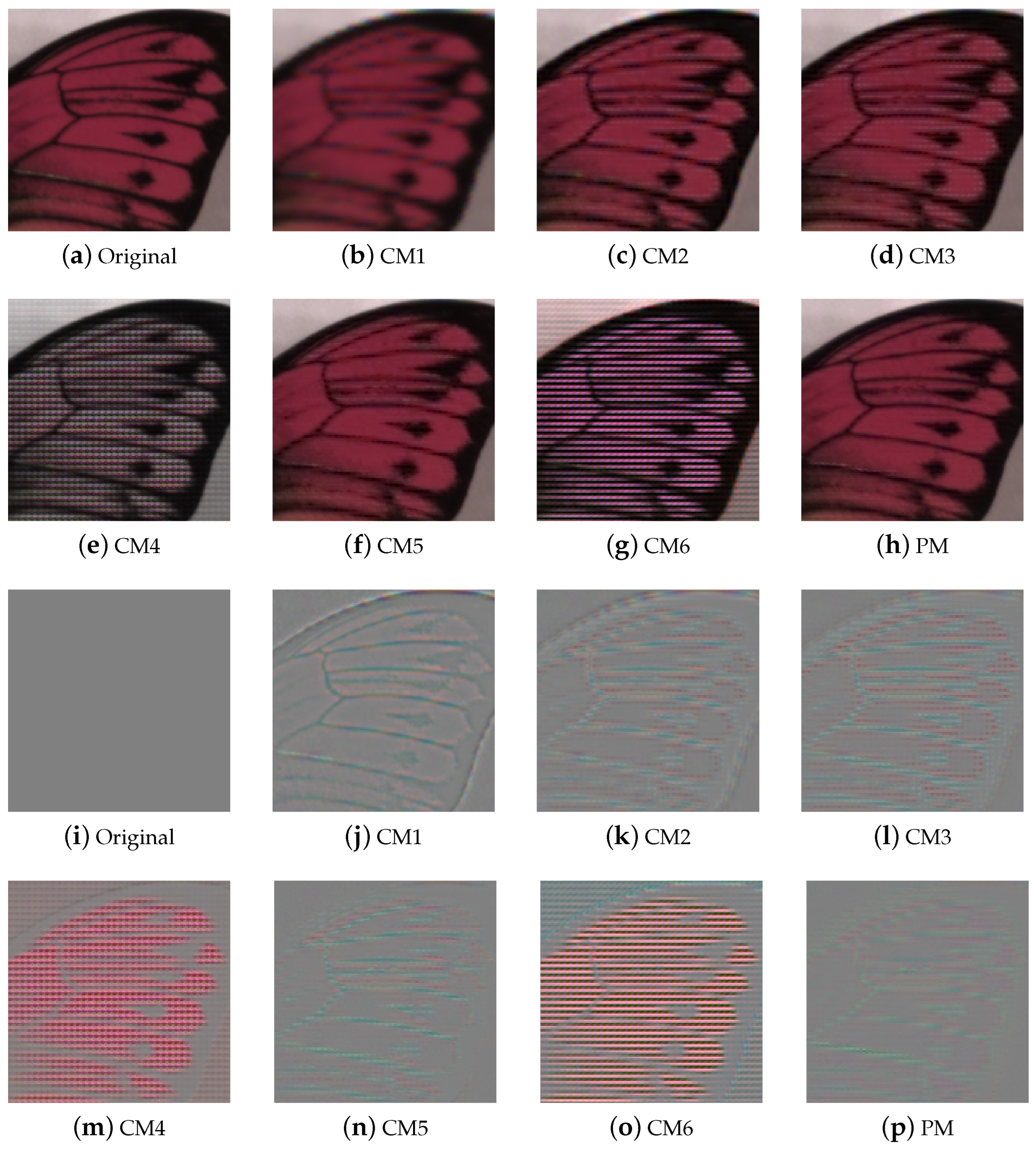

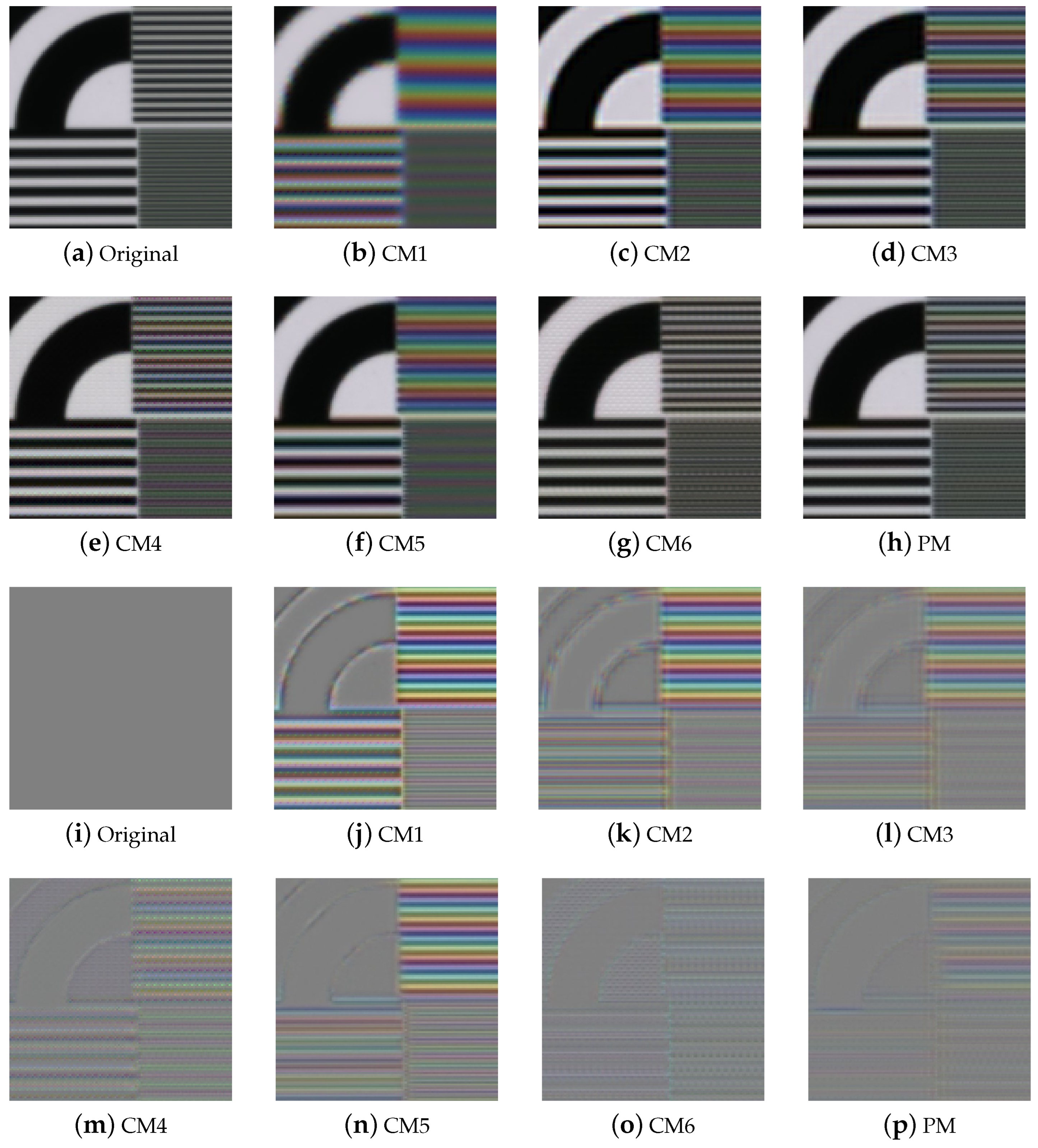

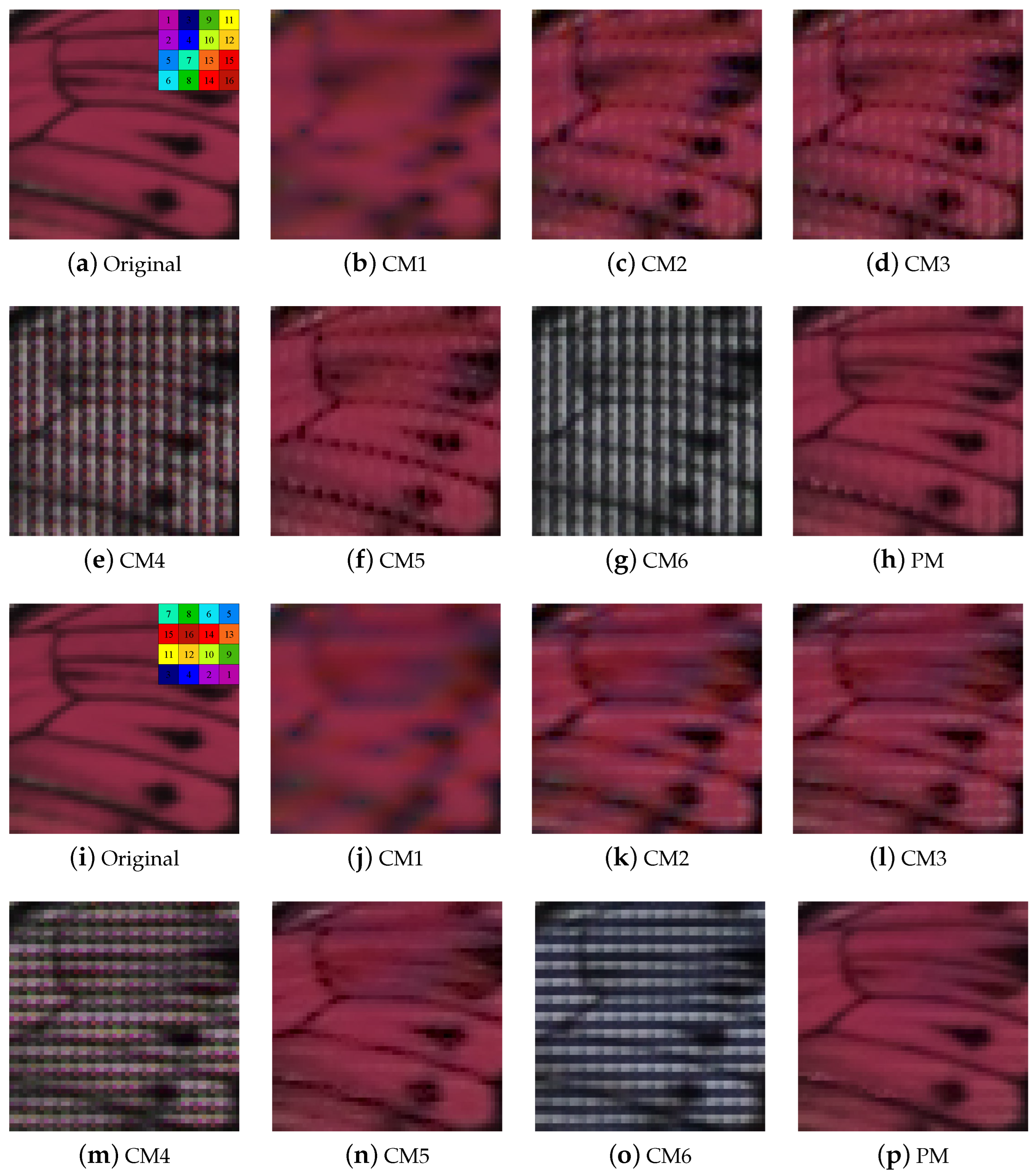

Figure 5 and Figure 6 present the qualitative evaluation results for TT31, including those for the Butterfly and ChartCZP images, cropped to highlight differences. For qualitative evaluation, we extracted red, green, and blue channels from the demosaiced multispectral image cube and displayed them as RGB images. Figure 5a–h and Figure 6a–h show RGB images in which channel 16 was used for red, channel 6 for green, and channel 1 for blue. Figure 5i–p and Figure 6i–p show the corresponding error maps of Figure 5a–h and Figure 6a–h. CM1 produces the blurriest image, and CM2 and CM3 recover high frequencies reasonably well but exhibit visible artifacts. CM4 and CM6 restore high frequencies in the resolution chart almost perfectly; however, the mosaic pattern is not entirely removed from general color images. CM6 performs demosaicing using a network that erases the mosaic pattern for each channel. Although it handles a 16-channel MSFA, the band arrangement used here differs from that in the original CM6 paper. In our experiments, CM6 achieved high evaluation scores only for monotone chart images. This is because the mosaic pattern is easily erased in images where all channels change uniformly, but not in images where a large change occurs in a specific color. In general, the outputs of CM5 and PM (the proposed method) appear similar. However, for images such as the resolution chart, PM exhibits superior high-frequency recovery and less color aliasing than CM5. Overall, the images produced by PM have fewer mosaic-pattern artifacts and less color aliasing than those produced by the conventional methods.
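The RGB composites and error maps described above can be sketched as follows. The channel numbers 16, 6, and 1 come from the text and are converted to 0-based indices; if channels are indexed in ascending wavelength order, they correspond to 633, 524, and 469 nm. The error-map definition (mean absolute error over bands) and the clipping to [0, 1] for display are assumptions, since the section does not define them.

```python
import numpy as np

# 1-based channel numbers from the text: R = 16, G = 6, B = 1.
RGB_CHANNELS = (16, 6, 1)

def to_rgb(cube, channels=RGB_CHANNELS):
    """Stack three bands of an H x W x B cube into an H x W x 3 RGB image.

    Assumption: values are clipped to [0, 1] for display.
    """
    idx = [c - 1 for c in channels]  # convert to 0-based band indices
    return np.clip(np.asarray(cube, dtype=np.float64)[..., idx], 0.0, 1.0)

def error_map(reference_cube, estimated_cube):
    """Per-pixel error map; assumed here to be the absolute error averaged over bands."""
    diff = np.abs(np.asarray(reference_cube, float) - np.asarray(estimated_cube, float))
    return diff.mean(axis=-1)
```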

For the quantitative evaluation of the TT59 dataset, we computed the PSNR, SSIM, and SAM values, which are presented in Table 4, Table 5, and Table 6, respectively. Of the 16 images in the TT59 dataset, the proposed method achieved the best PSNR for 10 images and the second-best PSNR for 4 images, the best SSIM for 8 images and the second-best SSIM for 7 images, and the best SAM for 12 images and the second-best SAM for 4 images. The average PSNR, SSIM, and SAM values for the TT59 dataset indicate that the proposed method achieved the best results.

The results for the TT59 dataset were similar to those for the TT31 dataset. In gray areas, CM4 and CM6 effectively recovered high frequencies; however, in colored areas, they introduced MSFA pattern artifacts that appear as a grid. By comparison, CM5 and PM performed better overall, with PM recovering high frequencies better than CM5, as shown in the resolution chart.

Figure 7 shows the demosaicing results for different MSFA arrangements. Figure 7a–h shows the results for MSFAs in which spectrally adjacent bands are grouped into 2 × 2 blocks, and Figure 7i–p shows the results for the MSFA of the original IMEC camera. The proposed method is more robust and produces fewer artifacts than the conventional methods. In particular, Figure 7c,d,f show grid artifacts where the black line of the butterfly is broken, whereas the proposed method shows fewer grid artifacts than the other methods.
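To make the two kinds of arrangement concrete, the sketch below builds a 4 × 4 pattern of 0-based band indices in ascending order and one in which spectrally adjacent bands share a 2 × 2 block. Both layouts are hypothetical illustrations; the exact arrangements used for Figure 7 and Figure 1c are not reproduced in this text.

```python
import numpy as np

def ascending_pattern(n=4):
    """Bands 0..n*n-1 placed row by row in ascending wavelength order."""
    return np.arange(n * n).reshape(n, n)

def grouped_2x2_pattern(n=4):
    """Spectrally adjacent bands grouped into 2 x 2 blocks (hypothetical layout):
    bands 0-3 fill the top-left block, bands 4-7 the next block, and so on."""
    pattern = np.empty((n, n), dtype=int)
    band = 0
    for bi in range(0, n, 2):
        for bj in range(0, n, 2):
            pattern[bi:bi + 2, bj:bj + 2] = np.arange(band, band + 4).reshape(2, 2)
            band += 4
    return pattern
```

In the grouped layout, each 2 × 2 block covers a narrow spectral range, so neighboring pixels sample similar wavelengths; in the ascending layout, each row spans a wider range of bands.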

Table 7 compares the execution times, measured on a desktop computer with an Intel i7-11700K processor, 32 GB of memory, and an Nvidia RTX 3090 GPU. CM6 was run in PyTorch, whereas the remaining methods were run in MATLAB R2021a. We measured the average execution time over all datasets. CM1 had the shortest execution time, followed by CM5, PM, CM4, CM2, CM6, and CM3.

In addition, as shown in Figure 8, the methods were tested on images captured using an IMEC camera. To qualitatively evaluate the real-world multispectral image cubes, we used the same procedure as for the synthetic dataset: channels 16, 6, and 1 of the multispectral image cube were extracted as the R, G, and B images, respectively, as shown in Figure 8. These results were similar to those obtained for the synthetic dataset. CM1, CM2, and CM3 produced blurred images with strong color aliasing, whereas CM4 exhibited MSFA pattern artifacts. Among the conventional methods, CM5 achieved the best results, and CM6, a deep-learning method, performed well on the resolution chart. However, the proposed method exhibited better high-frequency recovery and less color aliasing.