Imitation Learning-Based Performance-Power Trade-Off Uncore Frequency Scaling Policy for Multicore System

Abstract

1. Introduction

- We collect offline training datasets and train one UFS policy with 99% classification accuracy and three prediction models with 99% prediction accuracy. The three prediction models together form the expert model. During the online learning phase, they improve learning efficiency by replacing the cost of asking an expert to annotate new data with the cost of fine-tuning the prediction models;

- We implement uncore governors modeled on the dynamic power management governors already in use and compare them with the UFS_IL policy under the same load conditions. The processor achieves its best power efficiency under the UFS_IL policy.

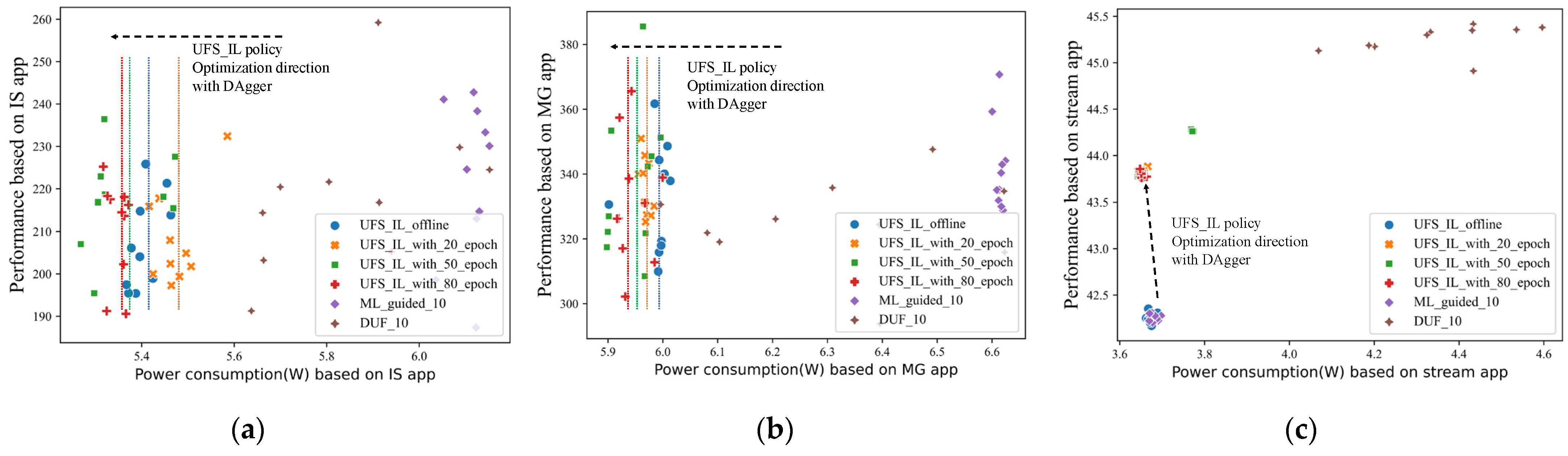

- Online imitation learning improves the generalization of the UFS policy to unseen loads. Experiments show that after about 50 aggregated data samples have been collected, the tuning choices of the UFS_IL policy keep the processor’s power efficiency under unseen loads at a near-optimal level.
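
The online phase described in the contributions above can be illustrated with a minimal data-aggregation loop in the spirit of DAgger [6]. This is a sketch under stated assumptions, not the authors' implementation: the frequency levels, the single `mem_intensity` feature, the toy `expert_label` function, and the nearest-neighbor policy are all hypothetical stand-ins for the paper's counters, expert model, and classifier.

```python
# Hypothetical uncore frequency levels in GHz (illustrative only).
FREQ_LEVELS = [1.2, 1.5, 1.8, 2.2]


def expert_label(mem_intensity):
    """Toy stand-in for the expert model: a more memory-bound phase
    (mem_intensity closer to 1.0) is assigned a higher uncore frequency."""
    index = min(int(mem_intensity * len(FREQ_LEVELS)), len(FREQ_LEVELS) - 1)
    return FREQ_LEVELS[index]


class NearestNeighborPolicy:
    """1-nearest-neighbor classifier standing in for the learned UFS policy."""

    def __init__(self):
        self.samples = []  # list of (feature, frequency) pairs

    def fit(self, samples):
        self.samples = list(samples)

    def predict(self, feature):
        nearest = min(self.samples, key=lambda s: abs(s[0] - feature))
        return nearest[1]


def run_aggregation(offline_data, visited_states):
    """Start from the offline policy, then label every visited state with
    the expert, aggregate it into the dataset, and refit the policy."""
    dataset = list(offline_data)
    policy = NearestNeighborPolicy()
    policy.fit(dataset)
    disagreements = 0
    for state in visited_states:
        chosen = policy.predict(state)   # what the current policy would pick
        best = expert_label(state)       # what the expert recommends
        if chosen != best:
            disagreements += 1
        dataset.append((state, best))    # aggregate the expert-labeled sample
        policy.fit(dataset)              # update the policy on all data so far
    return policy, dataset, disagreements


# Offline data covers only compute-bound phases; the unseen load is memory-bound.
offline = [(0.05, 1.2), (0.10, 1.2)]
policy, dataset, disagreements = run_aggregation(offline, [0.9, 0.6, 0.3])
```

In this toy setup the offline policy would choose 1.2 GHz for every state, so each unseen state initially disagrees with the expert. After aggregation the policy reproduces the expert's choice on the visited states.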

2. Related Work

3. Problem Setup

4. Imitation Learning-Based UFS Policy

4.1. Imitation Learning

4.2. Challenges of Applying Imitation Learning to UFS Policy

4.3. UFS_IL Policy Framework

4.3.1. Offline Construction

4.3.2. Runtime Evaluation and Data Aggregation

5. Experimental Evaluation

5.1. Offline Models Evaluation

5.2. Compared Power Efficiency with Different Advanced UFS Policies

- DUF

- ML_guided Policy

5.3. Compared Power Efficiency with Different Power Management Governors

5.4. Compared Generalization on Unseen Loads

5.5. Complexity Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Epoch | ML_guided_10 | DUF_10 | UFS_IL_offline | UFS_IL_with_20_epoch | UFS_IL_with_50_epoch | UFS_IL_with_80_epoch |
|---|---|---|---|---|---|---|
| 1 | 2.2 | 2.2 | 2.2 | 2.2 | 2.2 | 2.2 |
| 2 | 2.2 | 2.2 | 1.4 | 1.5 | 1.5 | 1.3 |
| 3 | 2.2 | 2.1 | 1.4 | 1.5 | 1.5 | 1.3 |
| 4 | 2.2 | 2.0 | 1.4 | 1.5 | 1.2 | 1.4 |
| 5 | 2.2 | 2.0 | 1.4 | 1.5 | 1.5 | 1.3 |
| 6 | 2.2 | 1.9 | 1.4 | 1.5 | 1.5 | 1.3 |
| 7 | 2.2 | 1.8 | 1.4 | 1.5 | 1.2 | 1.5 |
| 8 | 2.2 | 1.7 | 1.2 | 1.5 | 1.5 | 1.5 |
| 9 | 2.2 | 1.6 | 1.4 | 1.5 | 1.2 | 1.3 |
| 10 | 2.2 | 1.5 | 1.4 | 1.5 | 1.2 | 1.3 |

References

1. Cheng, H.Y.; Zhan, J.; Zhao, J.; Xie, Y.; Sampson, J.; Irwin, M.J. Core vs. uncore: The heart of darkness. In Proceedings of the 52nd ACM/EDAC/IEEE Design Automation Conference (DAC), San Francisco, CA, USA, 8–12 June 2015; pp. 1–6.

2. Gupta, V.; Brett, P.; Koufaty, D.A.; Reddy, D.; Hahn, S.; Schwan, K.; Srinivasa, G. The Forgotten ‘Uncore’: On the Energy-Efficiency of Heterogeneous Cores. In Proceedings of the USENIX Annual Technical Conference (USENIX ATC 12), Boston, MA, USA, 13–15 June 2012; pp. 367–372.

3. Hill, D.L.; Bachand, D.; Bilgin, S.; Greiner, R.; Hammarlund, P.; Huff, T.; Kulick, S.; Safranek, R. The Uncore: A Modular Approach to Feeding the High-Performance Cores. Intel Technol. J. 2010, 14, 30–49.

4. Subramaniam, B.; Feng, W. Towards energy-proportional computing for enterprise-class server workloads. In Proceedings of the 4th ACM/SPEC International Conference on Performance Engineering, Prague, Czech Republic, 21–24 April 2013; pp. 15–26.

5. Schaal, S. Is imitation learning the route to humanoid robots? Trends Cogn. Sci. 1999, 3, 233–242.

6. Ross, S.; Gordon, G.; Bagnell, D. A reduction of imitation learning and structured prediction to no-regret online learning. In Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics, Ft. Lauderdale, FL, USA, 11–13 April 2011; pp. 627–635.

7. Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 2018.

8. Won, J.Y. Dynamic Voltage and Frequency Scaling Techniques for Chip Multiprocessor Designs; Texas A&M University: College Station, TX, USA, 2015.

9. Tai, K.-Y.; Liu, B.-C.; Hsiao, C.-H.; Tsai, M.-C.; Lin, F.Y.-S. A Near-Optimal Energy Management Mechanism Considering QoS and Fairness Requirements in Tree Structure Wireless Sensor Networks. Sensors 2023, 23, 763.

10. Wang, N.-C.; Lee, C.-Y.; Chen, Y.-L.; Chen, C.-M.; Chen, Z.-Z. An Energy Efficient Load Balancing Tree-Based Data Aggregation Scheme for Grid-Based Wireless Sensor Networks. Sensors 2022, 22, 9303.

11. Sun, W.; Venkatraman, A.; Gordon, G.J.; Boots, B.; Bagnell, J.A. Deeply aggrevated: Differentiable imitation learning for sequential prediction. In Proceedings of the International Conference on Machine Learning, PMLR, Sydney, Australia, 6–11 August 2017; pp. 3309–3318.

12. Gholkar, N.; Mueller, F.; Rountree, B. Uncore power scavenger: A runtime for uncore power conservation on hpc systems. In Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis, Denver, CO, USA, 17–19 November 2019; pp. 1–23.

13. Bekele, S.A.; Balakrishnan, M.; Kumar, A. ML guided energy-performance trade-off estimation for uncore frequency scaling. In Proceedings of the Spring Simulation Conference (SpringSim), Tucson, AZ, USA, 29 April–2 May 2019; pp. 1–12.

14. Kumaraswamy, M.; Gerndt, M. Exploiting Dynamism in HPC Applications to Optimize Energy-Efficiency. In Proceedings of the 49th International Conference on Parallel Processing-ICPP: Workshops, Edmonton, AB, Canada, 17–20 August 2020.

15. André, É.; Dulong, R.; Guermouche, A.; Trahay, F. Duf: Dynamic uncore frequency scaling to reduce power consumption. Concurr. Comput. Pr. Exp. 2021, 34, e6580.

16. Corbalan, J.; Vidal, O.; Alonso, L.; Aneas, J. Explicit uncore frequency scaling for energy optimisation policies with EAR in Intel architectures. In Proceedings of the 2021 IEEE International Conference on Cluster Computing (CLUSTER), Portland, OR, USA, 7–10 September 2021; pp. 572–581.

17. Sundriyal, V.; Sosonkina, M.; Westheimer, B.M.; Gordon, M. Comparisons of Core and Uncore Frequency Scaling Modes in Quantum Chemistry Application GAMESS. In Proceedings of the High Performance Computing Symposium, Baltimore, MD, USA, 23–26 April 2017; p. 13.

18. Wang, Z.; Tian, Z.; Xu, J.; Maeda, R.K.V.; Li, H.; Yang, P.; Wang, Z.; Duong, L.H.K.; Wang, Z.; Chen, X. Modular reinforcement learning for self-adaptive energy efficiency optimization in multicore system. In Proceedings of the 2017 22nd Asia and South Pacific Design Automation Conference (ASP-DAC), Tokyo, Japan, 16–19 January 2017; pp. 684–689.

19. Liu, W.; Tan, Y.; Qiu, Q. Enhanced Q-learning algorithm for dynamic power management with performance constraint. In Proceedings of the 2010 Design, Automation & Test in Europe Conference & Exhibition (DATE 2010), Dresden, Germany, 8–12 March 2010; pp. 602–605.

20. Shen, H.; Tan, Y.; Lu, J.; Wu, Q.; Qiu, Q. Achieving autonomous power management using reinforcement learning. ACM Trans. Des. Autom. Electron. Syst. TODAES 2013, 18, 1–32.

21. Chen, Z.; Marculescu, D. Distributed Reinforcement Learning for Power Limited Many-Core System Performance Optimization. In Proceedings of the 2015 Design, Automation & Test in Europe Conference & Exhibition (DATE), Grenoble, France, 9–13 March 2015; pp. 1521–1526.

22. Qi, X.; Yang, J.; Zhang, Y.; Xiao, B. BIOS-Based Server Intelligent Optimization. Sensors 2022, 22, 6730.

23. Mandal, S.K.; Bhat, G.; Patil, C.A.; Doppa, J.R.; Pande, P.P.; Ogras, U.Y. Dynamic resource management of heterogeneous mobile platforms via imitation learning. IEEE Trans. Very Large Scale Integr. VLSI Syst. 2019, 27, 2842–2854.

24. Mandal, S.K.; Bhat, G.; Doppa, J.R.; Pande, P.P.; Ogras, U.Y. An energy-aware online learning framework for resource management in heterogeneous platforms. ACM Trans. Des. Autom. Electron. Syst. TODAES 2020, 25, 1–26.

25. Kim, R.G.; Choi, W.; Chen, Z.; Doppa, J.R.; Pande, P.P.; Marculescu, D.; Marculescu, R. Imitation learning for dynamic VFI control in large-scale manycore systems. IEEE Trans. Very Large Scale Integr. VLSI Syst. 2017, 25, 2458–2471.

26. Gupta, U.; Babu, M.; Ayoub, R.; Kishinevsky, M.; Paterna, F.; Ogras, U.Y. STAFF: Online Learning with Stabilized Adaptive Forgetting Factor and Feature Selection Algorithm. In Proceedings of the 55th Annual Design Automation Conference, San Francisco, CA, USA, 24–29 June 2018; pp. 1–6.

27. Gupta, U.; Babu, M.; Ayoub, R.; Kishinevsky, M.; Paterna, F.; Gumussoy, S.; Ogras, U.Y. An Online Learning Methodology for Performance Modeling of Graphics Processors. IEEE Trans. Comput. 2018, 67, 1677–1691.

28. Intel Xeon Gold 5118 Processor. Available online: https://www.intel.com/content/www/us/en/products/sku/120473/intel-xeon-gold-5118-processor-16-5m-cache-2-30-ghz/specifications.html (accessed on 10 January 2022).

29. Bucek, J.; Lange, K.D.; Kistowski, J.v. SPEC CPU2017: Next-generation compute benchmark. In Proceedings of the Companion of the 2018 ACM/SPEC International Conference on Performance Engineering, Berlin, Germany, 9–13 April 2018; pp. 41–42.

30. Perf Tool. Available online: https://perf.wiki.kernel.org/index.php/Main_Page (accessed on 10 January 2022).

31. Treibig, J.; Hager, G.; Wellein, G. LIKWID: A Lightweight Performance-Oriented Tool Suite for x86 Multicore Environments. In Proceedings of the 2010 39th International Conference on Parallel Processing Workshops, San Diego, CA, USA, 13–16 September 2010; pp. 207–216.

32. Pallipadi, V.; Starikovskiy, A. The ondemand governor. In Proceedings of the Linux Symposium, Ottawa, ON, Canada, 19–22 July 2006; Volume 2, pp. 215–230.

| | | |
|---|---|---|
| cycles | instructions | branch-misses |
| cpu-clock | llc_misses.mem_write | llc_misses.mem_read |
| unc_m_cas_count.all | l2_rqsts.miss | br_inst_retired.all_branches |
| mem_load_retired.l1_hit | mem_load_retired.l1_miss | mem_load_retired.l2_hit |
| mem_load_retired.l2_miss | mem_load_retired.l3_hit | mem_load_retired.l3_miss |

| Dataset | Application Name | Application Area |
|---|---|---|
| A_train | lbm | Fluid dynamics |
| A_train | mcf | Route planning |
| A_train | namd | Molecular dynamics |
| A_train | povray | Ray tracing |
| A_train | xalancbmk | XML to HTML conversion via XSLT |
| A_train | bwaves | Explosion modeling |
| A_train | cactuBSSN | Physics: relativity |
| A_test | parest | Biomedical imaging: optical tomography with finite elements |
| A_test | omnetpp | Discrete event simulation: computer network |

| Regressor | R² | MSE | RSS | Construction Time |
|---|---|---|---|---|
| Decision Tree Regressor | 0.9973 | 1.761 × 10⁻³ | 3.397 × 10⁻² | 350 ms |
| SVM Regressor | 0.9468 | 5.373 × 10⁻² | 1.128 | 446 ms |
| KNeighbors Regressor | 0.9125 | 9.237 × 10⁻³ | 1.939 | 544 ms |
| Random Forest Regressor | 0.9997 | 2.991 × 10⁻⁴ | 6.281 × 10⁻³ | 310 ms |
| Adaboost Regressor | 0.9762 | 1.101 × 10⁻² | 2.313 × 10⁻¹ | 440 ms |
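
For readers unfamiliar with the three quality metrics in the table above, the following sketch computes them using their standard definitions: RSS is the residual sum of squares, MSE is RSS divided by the number of samples, and R² is one minus the ratio of RSS to the total sum of squares. The function name and the sample values are illustrative assumptions and are not taken from the paper's data.

```python
def regression_metrics(y_true, y_pred):
    """Return R^2, MSE, and RSS for paired true and predicted values."""
    n = len(y_true)
    mean_true = sum(y_true) / n
    # Residual sum of squares: squared prediction errors added up.
    rss = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    # Total sum of squares: spread of the true values around their mean.
    tss = sum((t - mean_true) ** 2 for t in y_true)
    return {"r2": 1.0 - rss / tss, "mse": rss / n, "rss": rss}


# Illustrative values only: three exact predictions and one that is off by 1.0.
metrics = regression_metrics([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 5.0])
```

Because MSE is RSS averaged over the samples, the two columns carry the same information once the evaluation set size is fixed. R² additionally normalizes by the variance of the target.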

| Governors | Principles |
|---|---|
| Performance governor | Always maintains the maximum uncore frequency |
| Powersave governor | Maximizes power savings |
| Ondemand governor [32] | The CPU load is calculated periodically. When the CPU load exceeds 80%, the frequency is set to the maximum; otherwise, the frequency is set in proportion to the current load |
| Conservative governor | The CPU load is calculated periodically. When the CPU load exceeds 80%, the frequency is increased in 5% steps. When the CPU load is below 20%, the frequency is decreased in 5% steps |
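
The governor rules in the table above can be sketched as simple decision functions. This is a minimal illustration under several assumptions that the table does not specify: the frequency bounds `UNCORE_MIN_GHZ` and `UNCORE_MAX_GHZ` are hypothetical, "proportional" is interpreted as linear interpolation between the minimum and maximum frequency, the 5% step is taken relative to the maximum frequency, and the powersave governor is assumed to hold the minimum frequency.

```python
# Hypothetical uncore frequency bounds in GHz (illustrative assumptions).
UNCORE_MIN_GHZ = 1.2
UNCORE_MAX_GHZ = 2.4

UP_THRESHOLD = 0.80    # load above which frequency is raised
DOWN_THRESHOLD = 0.20  # load below which the conservative governor lowers it
STEP_FRACTION = 0.05   # conservative step, assumed relative to the maximum


def clamp(freq_ghz):
    """Keep a requested frequency inside the supported range."""
    return max(UNCORE_MIN_GHZ, min(UNCORE_MAX_GHZ, freq_ghz))


def performance_governor(load, current_ghz):
    return UNCORE_MAX_GHZ


def powersave_governor(load, current_ghz):
    # Assumption: maximum power savings means holding the minimum frequency.
    return UNCORE_MIN_GHZ


def ondemand_governor(load, current_ghz):
    if load > UP_THRESHOLD:
        return UNCORE_MAX_GHZ
    # Assumption: scale linearly between the minimum and maximum with load.
    return clamp(UNCORE_MIN_GHZ + load * (UNCORE_MAX_GHZ - UNCORE_MIN_GHZ))


def conservative_governor(load, current_ghz):
    step = STEP_FRACTION * UNCORE_MAX_GHZ
    if load > UP_THRESHOLD:
        return clamp(current_ghz + step)
    if load < DOWN_THRESHOLD:
        return clamp(current_ghz - step)
    return current_ghz  # between the thresholds the frequency is unchanged
```

Each function takes the CPU load as a fraction between 0 and 1 plus the current frequency, and returns the next frequency. The shared signature lets a periodic control loop swap governors without other changes.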

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xiao, B.; Yang, J.; Qi, X. Imitation Learning-Based Performance-Power Trade-Off Uncore Frequency Scaling Policy for Multicore System. Sensors 2023, 23, 1449. https://doi.org/10.3390/s23031449
