A Method of Setting the LiDAR Field of View in NDT Relocation Based on ROI

Abstract

1. Introduction

2. Algorithm Principle

2.1. LOAM

2.2. NDT Relocation Algorithm

2.3. Point Cloud Data Preprocessing

3. The KITTI Dataset Test

4. Urban Dataset Testing

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | Max (m) | Mean (m) | Min (m) | RMSE (m) | Num | Ratio (%) |
|---|---|---|---|---|---|---|
| 90_front | 1.803023 | 0.820853 | 0.048090 | 0.953158 | 224,995 | 34.7 |
| 180_front | 0.468620 | 0.213139 | 0.019969 | 0.224281 | 316,011 | 48.7 |
| 270_front | 0.323754 | 0.115183 | 0.010731 | 0.126000 | 423,800 | 65.3 |
| 90_back | 1.081605 | 0.646901 | 0.023327 | 0.690814 | 238,414 | 36.8 |
| 180_back | 0.930584 | 0.452172 | 0.024949 | 0.500723 | 332,584 | 51.3 |
| 270_back | 0.600951 | 0.250809 | 0.023253 | 0.276960 | 437,715 | 67.5 |
| 90_left | 0 | 0 | 0 | 0 | 117,606 | 18.1 |
| 180_left | 0.492303 | 0.244453 | 0.018364 | 0.265370 | 337,150 | 52.0 |
| 270_left | 0.310869 | 0.132304 | 0.014551 | 0.141764 | 566,296 | 87.3 |
| 90_right | 0 | 0 | 0 | 0 | 7658 | 0.12 |
| 180_right | 1.422529 | 0.739286 | 0.022606 | 0.804200 | 311,443 | 48.0 |
| 270_right | 1.029491 | 0.498957 | 0.009679 | 0.562762 | 545,708 | 84.1 |
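The row labels above appear to pair a horizontal field of view in degrees with the direction it is centred on (for example, 90_front), and the Ratio (%) column appears to give the share of points retained. A minimal sketch of such a sector crop is given below. It assumes a sensor frame with x pointing forward and y pointing left; the names `crop_fov`, `kept_ratio` and `DIRECTIONS`, and the centre angles assigned to each direction, are illustrative assumptions and are not taken from the paper.

```python
import numpy as np

# Assumed centre azimuth (degrees) for each direction label; x forward, y left.
DIRECTIONS = {"front": 0.0, "left": 90.0, "back": 180.0, "right": -90.0}


def crop_fov(points, fov_deg, center_deg=0.0):
    """Keep points whose azimuth lies inside a sector of width fov_deg.

    points: (N, 3) array of x, y, z coordinates in the sensor frame.
    fov_deg: total angular width of the sector, in degrees.
    center_deg: azimuth of the sector centre, in degrees.
    """
    azimuth = np.degrees(np.arctan2(points[:, 1], points[:, 0]))
    # Signed difference to the sector centre, wrapped into [-180, 180).
    diff = (azimuth - center_deg + 180.0) % 360.0 - 180.0
    mask = np.abs(diff) <= fov_deg / 2.0
    return points[mask]


def kept_ratio(points, cropped):
    """Percentage of the original points that survive the crop."""
    return 100.0 * len(cropped) / len(points)
```

Under these assumptions, a configuration such as 180_left would correspond to `crop_fov(points, 180.0, DIRECTIONS["left"])`, with `kept_ratio` giving a value comparable to the Ratio (%) column.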

| | Max (m) | Mean (m) | Min (m) | RMSE (m) | Num | Ratio (%) |
|---|---|---|---|---|---|---|
| 90_left | 0 | 0 | 0 | 0 | 117,606 | 18.1 |
| 120_left | 1.408121 | 0.533117 | 0.010324 | 0.576745 | 162,363 | 25.0 |
| 150_left | 1.042893 | 0.504791 | 0.013068 | 0.549890 | 223,012 | 34.5 |
| 180_left | 0.492303 | 0.244453 | 0.018364 | 0.265370 | 337,150 | 52.0 |
| 210_left | 0.425172 | 0.164817 | 0.012010 | 0.177659 | 482,429 | 74.4 |
| 240_left | 0.374192 | 0.143122 | 0.009739 | 0.154102 | 534,265 | 82.4 |
| 270_left | 0.310869 | 0.132304 | 0.014551 | 0.141764 | 566,296 | 87.3 |
| 90_right | 0 | 0 | 0 | 0 | 7658 | 12.2 |
| 120_right | 0 | 0 | 0 | 0 | 135,137 | 20.8 |
| 150_right | 2.502934 | 1.440530 | 0.036937 | 1.535981 | 191,471 | 29.5 |
| 180_right | 1.422529 | 0.739286 | 0.022606 | 0.804200 | 311,443 | 48.0 |
| 210_right | 1.326894 | 0.649623 | 0.014481 | 0.731533 | 451,772 | 69.7 |
| 240_right | 1.371113 | 0.661285 | 0.014154 | 0.760515 | 508,636 | 78.4 |
| 270_right | 1.029491 | 0.498957 | 0.009679 | 0.562762 | 545,708 | 84.1 |

| | Max (m) | Mean (m) | Min (m) | RMSE (m) | Num | Ratio (%) |
|---|---|---|---|---|---|---|
| 41_front | 3.008521 | 1.307617 | 0.075811 | 1.509459 | 161,495 | 24.9 |
| 33_back | 3.445162 | 1.199947 | 0.156978 | 1.278492 | 154,256 | 23.8 |
| 113_left | 5.058614 | 0.807393 | 0.025104 | 0.913465 | 151,678 | 23.4 |
| 122_right | 2.680347 | 1.295943 | 0.035288 | 1.380118 | 137,933 | 21.3 |

| Max (m) | Min (m) | Rmse (m) | Num (m) | Ratio (%) | |

|---|---|---|---|---|---|

| 20_front | 1.715141 | 0.021566 | 0.547751 | 367,065 | 14.7 |

| 22_back | 6.650557 | 0.018378 | 1.027503 | 378,291 | 15.2 |

| 97_left | 2.507830 | 0.031737 | 0.995748 | 460,454 | 18.5 |

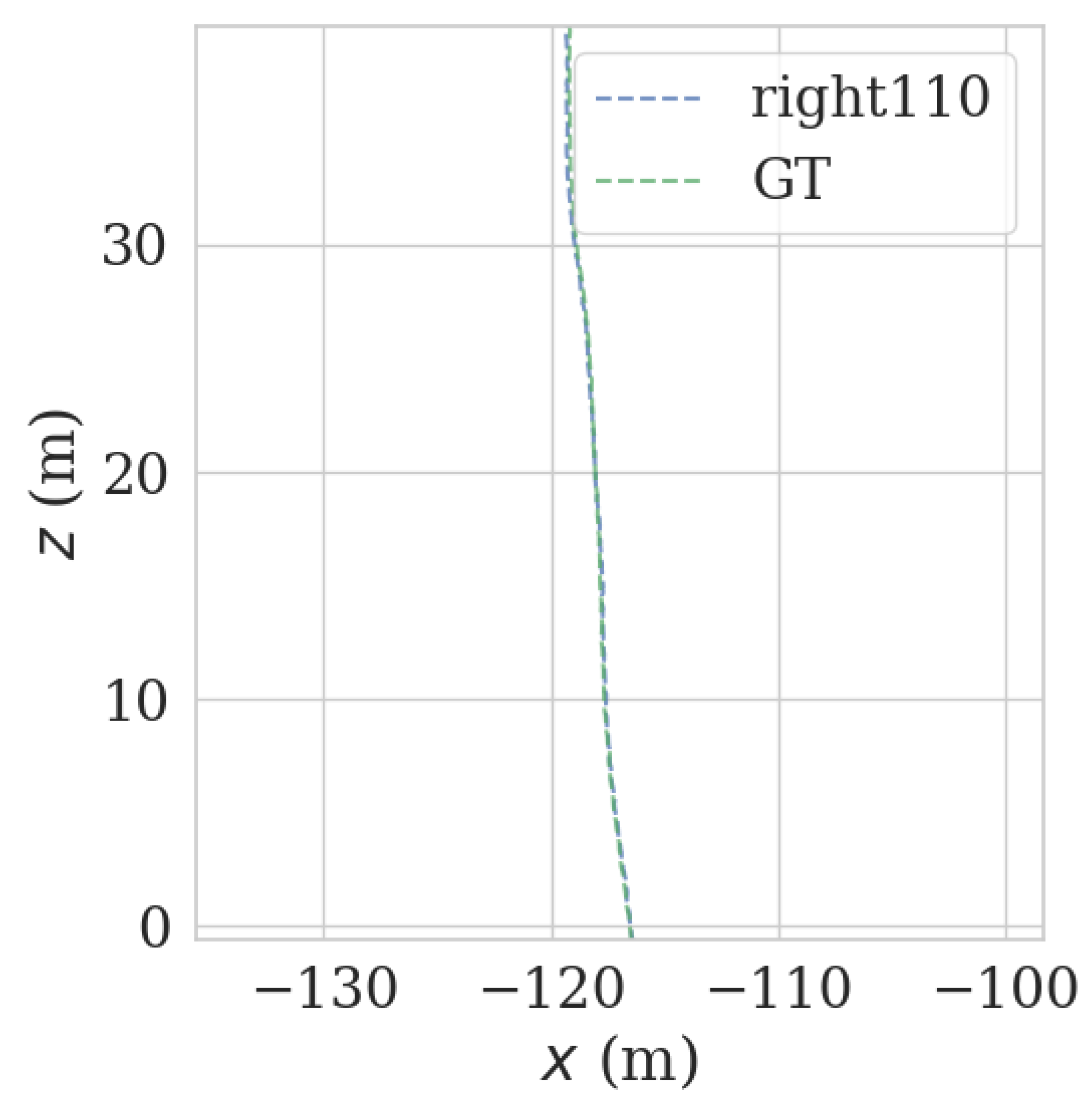

| 110_right | 1.038546 | 0.023148 | 0.703556 | 406,214 | 16.3 |
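The Max, Mean, Min and RMSE columns in the tables above can be read as summary statistics of a per-frame position error. The sketch below shows one way to compute them, assuming the error is the Euclidean distance between each relocalized position and a time-aligned reference position. The helper `error_stats` is hypothetical and is not the authors' evaluation code.

```python
import numpy as np


def error_stats(estimated, reference):
    """Summarise position error between two time-aligned trajectories.

    estimated, reference: (N, 3) arrays of x, y, z positions in metres.
    Returns the max, mean, min and RMSE of the per-frame Euclidean error.
    """
    err = np.linalg.norm(estimated - reference, axis=1)
    return {
        "max": float(err.max()),
        "mean": float(err.mean()),
        "min": float(err.min()),
        "rmse": float(np.sqrt(np.mean(err ** 2))),
    }
```

Because RMSE squares each error before averaging, it is never smaller than the mean error, which matches the ordering of the Mean and RMSE columns in the tables.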

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gu, J.; Lan, Y.; Kong, F.; Liu, L.; Sun, H.; Liu, J.; Yi, L. A Method of Setting the LiDAR Field of View in NDT Relocation Based on ROI. Sensors 2023, 23, 843. https://doi.org/10.3390/s23020843
