Automatic Individual Pig Detection and Tracking in Pig Farms

Abstract

1. Introduction

2. Related Work

3. Methods

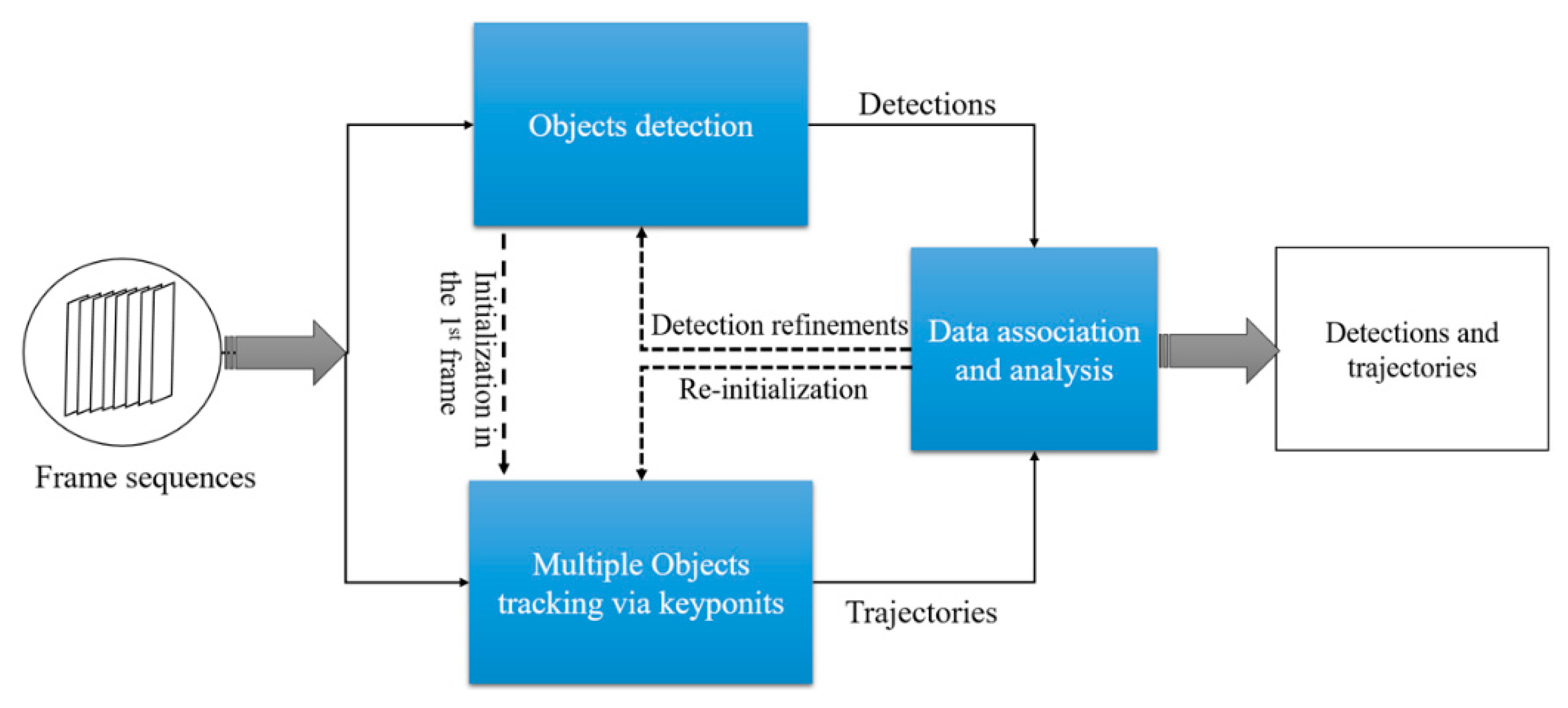

3.1. Method Overview

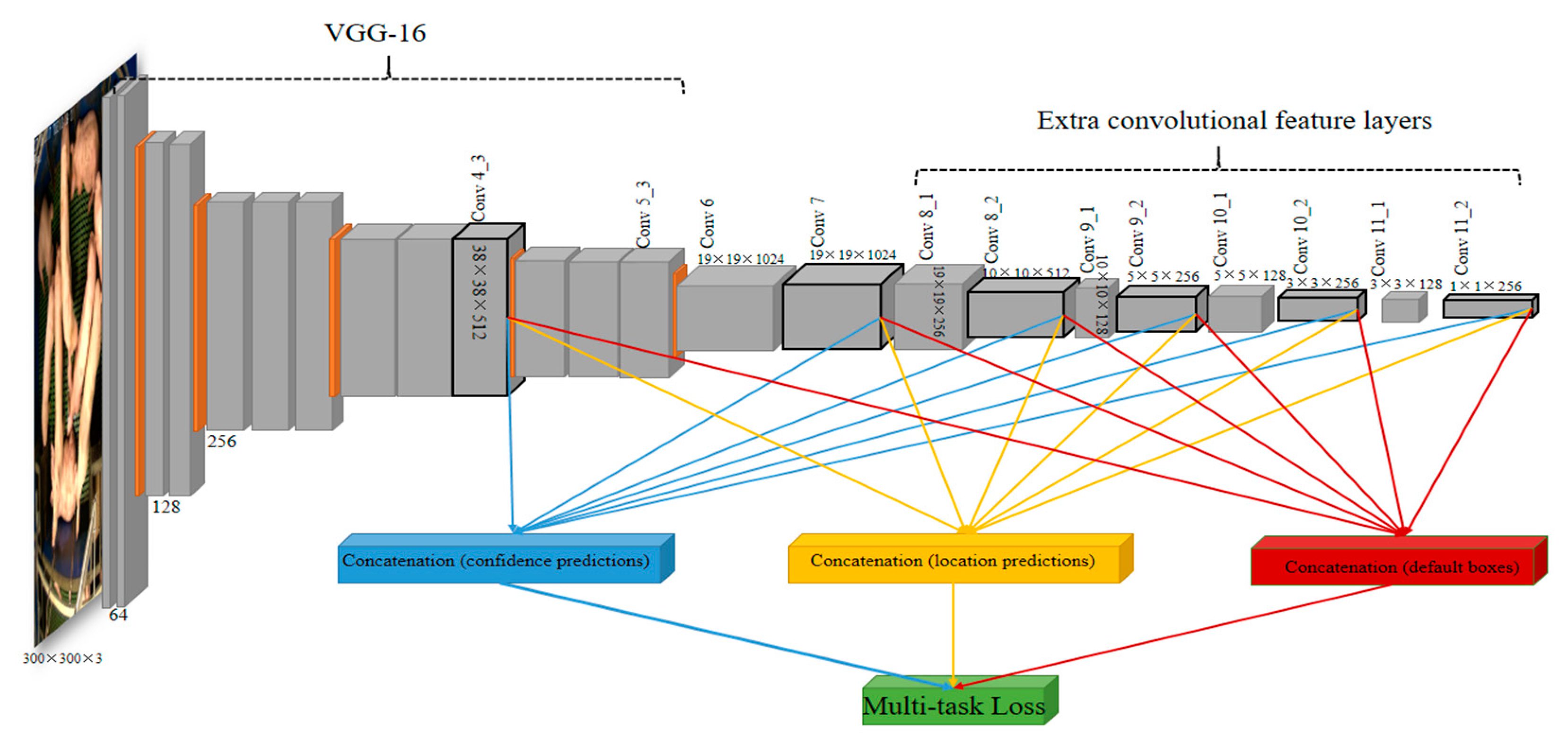

3.2. CNN Based Object Detection

3.3. Pig Detection Using SSD

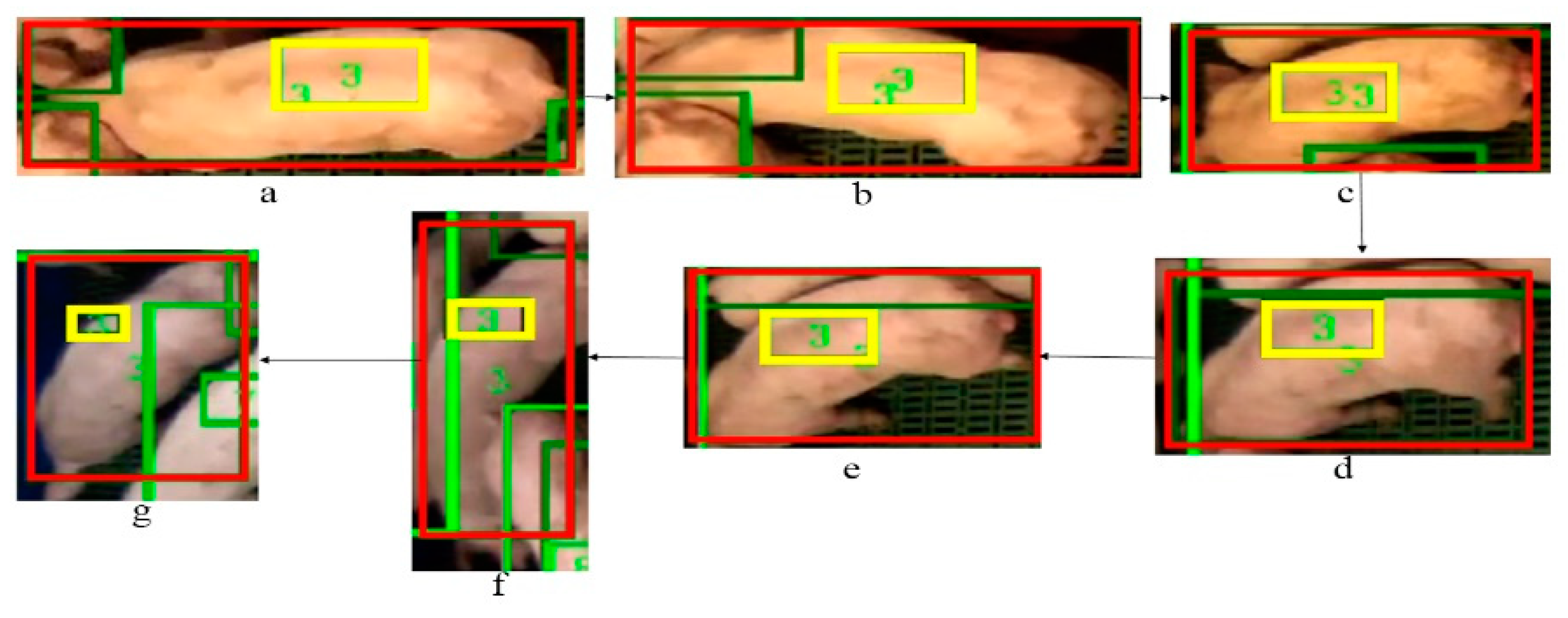

3.4. Individual Pig Tracking Using Correlation Filter

3.5. Data Association

- (1) An object is tracked if a detection bounding box contains only one tag-box, which means that a one-to-one association between the detection bounding boxes and the tag-boxes has been established. (State: tracked)

- (2) An object is not currently tracked, due to an occlusion, if its tag-box is not assigned to any existing track, namely the tag-box of the tracker lies outside the default detection bounding box. This triggers a tag-box initialization scheme. (State: tracking drift, i.e., the tracking target shifts away from the detection bounding box)

- (3) Unstable detection occurs if a tag-box, which is restrained by the default box, is not assigned to any detection bounding box. This triggers a detection refinement scheme based on the tracklet derived from the historical detections. (State: unstable detection)

- (4) If more than one tag-box is assigned to a detection bounding box, the tag-box with the lower assignment confidence is drifting to an already associated bounding box. This triggers a tag-box pending process followed by the initialization in condition (2). (State: tracking drift)
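The first-level split that feeds these four conditions can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes that a detection bounding box "contains" a tag-box when the tag-box centre lies inside it, and the names `center_inside` and `split_associations` are hypothetical.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def center_inside(tag: Box, det: Box) -> bool:
    """Return True if the centre of the tag-box lies inside the detection box.

    Assumption: centre containment stands in for the paper's assignment test.
    """
    cx = (tag[0] + tag[2]) / 2.0
    cy = (tag[1] + tag[3]) / 2.0
    return det[0] <= cx <= det[2] and det[1] <= cy <= det[3]


def split_associations(detections: List[Box], tags: List[Box]) -> Dict[str, List[int]]:
    """Group detection and tag-box indices by how many partners they have."""
    # For every detection box, collect the indices of the tag-boxes it contains.
    contained = [
        [j for j, tag in enumerate(tags) if center_inside(tag, det)]
        for det in detections
    ]
    assigned_tags = {j for js in contained for j in js}
    return {
        # Exactly one tag-box per detection box: condition (1), tracked.
        "one_to_one": [i for i, js in enumerate(contained) if len(js) == 1],
        # Tag-boxes outside every detection box: candidates for condition (2).
        "unassigned_tags": [j for j in range(len(tags)) if j not in assigned_tags],
        # Detection boxes without a tag-box: candidates for the refinement step.
        "unassigned_detections": [i for i, js in enumerate(contained) if not js],
        # Several tag-boxes in one detection box: condition (4).
        "multi_tag_detections": [i for i, js in enumerate(contained) if len(js) > 1],
    }
```

For example, three detection boxes and four tag-boxes, where two tag-boxes fall into the second detection box and one tag-box lies outside all of them, yield one index in each of the four groups.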

| Algorithm 1. Our hierarchical data association algorithm | |
|---|---|
| Input: | Current t-th frame and previous trajectories |
| | The detection bounding boxes computed by the detector described in Section 3.3 |
| | The tag-boxes computed by the tracker described in Section 3.4 |
| Output: | Bounding boxes and trajectories for the t-th frame |
| Procedure | |
| 1 | associate the detection bounding boxes to the tag-boxes |
| 2 | if each detection bounding box is assigned to its corresponding tag-box in a one-to-one manner |
| 3 | return the detection bounding boxes as tracked bounding boxes and update the tracklets |
| 4 | else |
| 5 | update the tracklets related to the assigned detection boxes and return the assigned detection boxes, unassigned detection boxes and unassigned tag-boxes |
| 6 | end if |
| 7 | for each tag-box in the unassigned tag-boxes |
| 8 | set a default box for the tag-box according to the updated tracklets |
| 9 | associate the unassigned detection boxes to the default box |
| 10 | if a best matched box is found among the unassigned detection boxes |
| 11 | set the best matched box as the tracked bounding box and update the tracklet |
| 12 | else |
| 13 | set the default box as the tracked bounding box and update the tracklet |
| 14 | end if |
| 15 | associate the unassigned tag-boxes to the default box |
| 16 | if no matched tag-box is found |
| 17 | increase the tag-box's entry in a counter array (age) by 1 |
| 18 | else |
| 19 | decrease the tag-box's entry in age by 1 |
| 20 | end if |
| 21 | if the tag-box's entry in age > threshold value (T) |
| 22 | initialize the tag-box and reset its entry in age to 0 |
| 23 | end if |
| 24 | associate the assigned detection boxes to the unassigned tag-box |
| 25 | if the assigned detection box has more than one tag-box |
| 26 | pend the tag-box |
| 27 | end if |
| 28 | end for |
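Two of the inner steps of Algorithm 1 can be sketched in a few lines: the choice between the best matched detection and the default box (steps 9 to 13), and the age counter that decides when a tag-box is re-initialized (steps 15 to 23). The sketch below rests on assumptions that the algorithm listing does not state: intersection over union (IoU) is used as the matching score, `min_iou` is a hypothetical acceptance threshold, and the helper names are illustrative only.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def refine_box(default_box: Box, unassigned: List[Box], min_iou: float = 0.5) -> Box:
    """Steps 9-13: use the best matched unassigned detection, else the default box.

    Assumption: "best matched" means highest IoU with the default box,
    accepted only if it reaches min_iou.
    """
    best = max(unassigned, key=lambda d: iou(default_box, d), default=None)
    if best is not None and iou(default_box, best) >= min_iou:
        return best
    return default_box


def update_age(age: int, tag_matched: bool, threshold: int) -> Tuple[int, bool]:
    """Steps 15-23: update one tag-box's age and report whether to re-initialize.

    The counter is decremented literally as in the listing; whether it should
    be clamped at zero is not stated there.
    """
    age = age - 1 if tag_matched else age + 1
    if age > threshold:
        return 0, True  # re-initialize the tag-box and reset its counter
    return age, False
```

With a threshold of 2, a tag-box whose counter is already 2 and that again finds no match is re-initialized, while a single miss after a match leaves it untouched.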

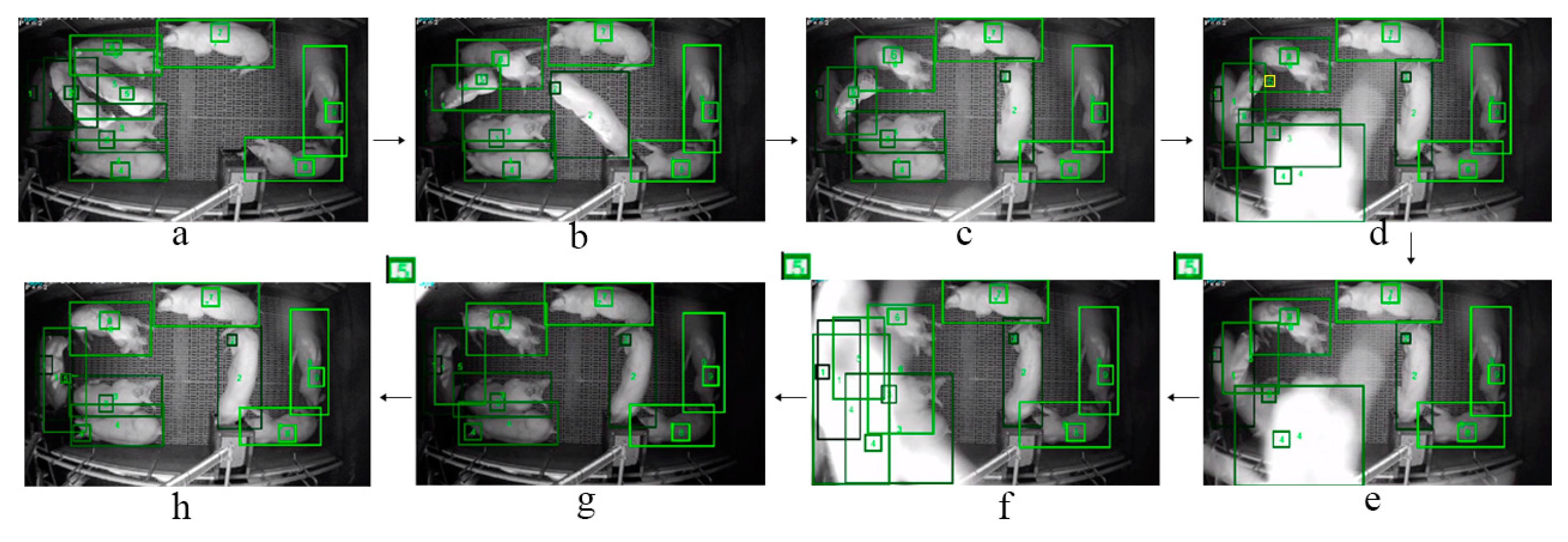

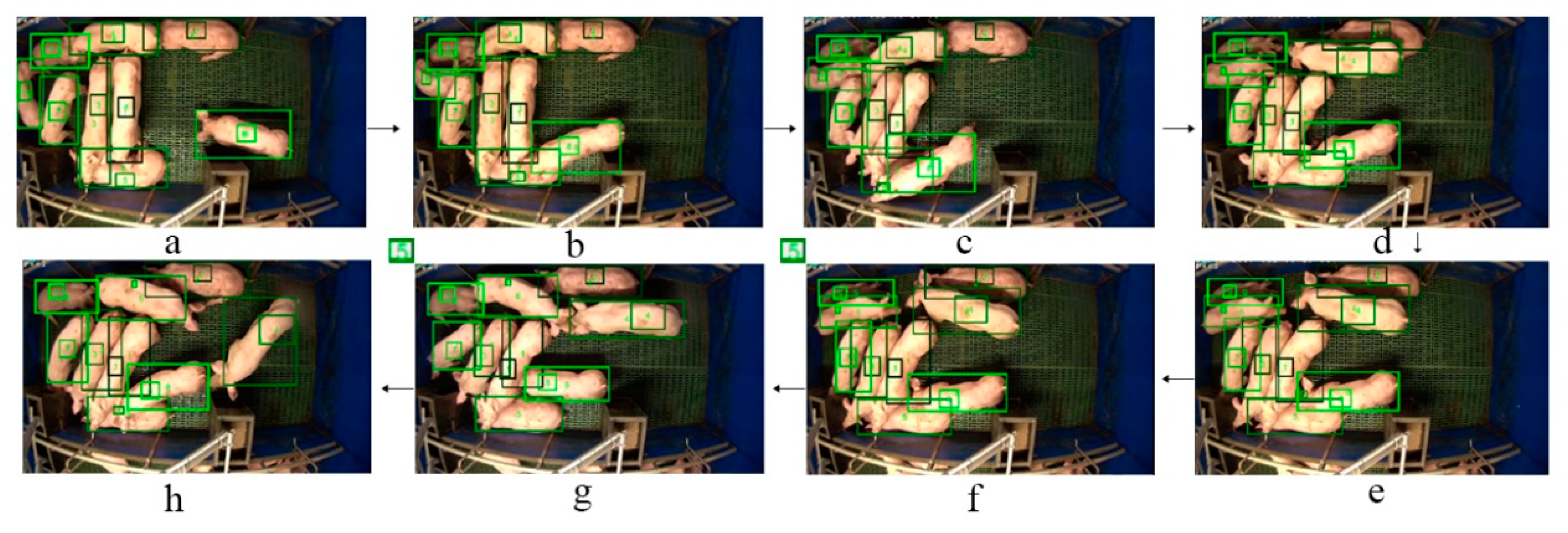

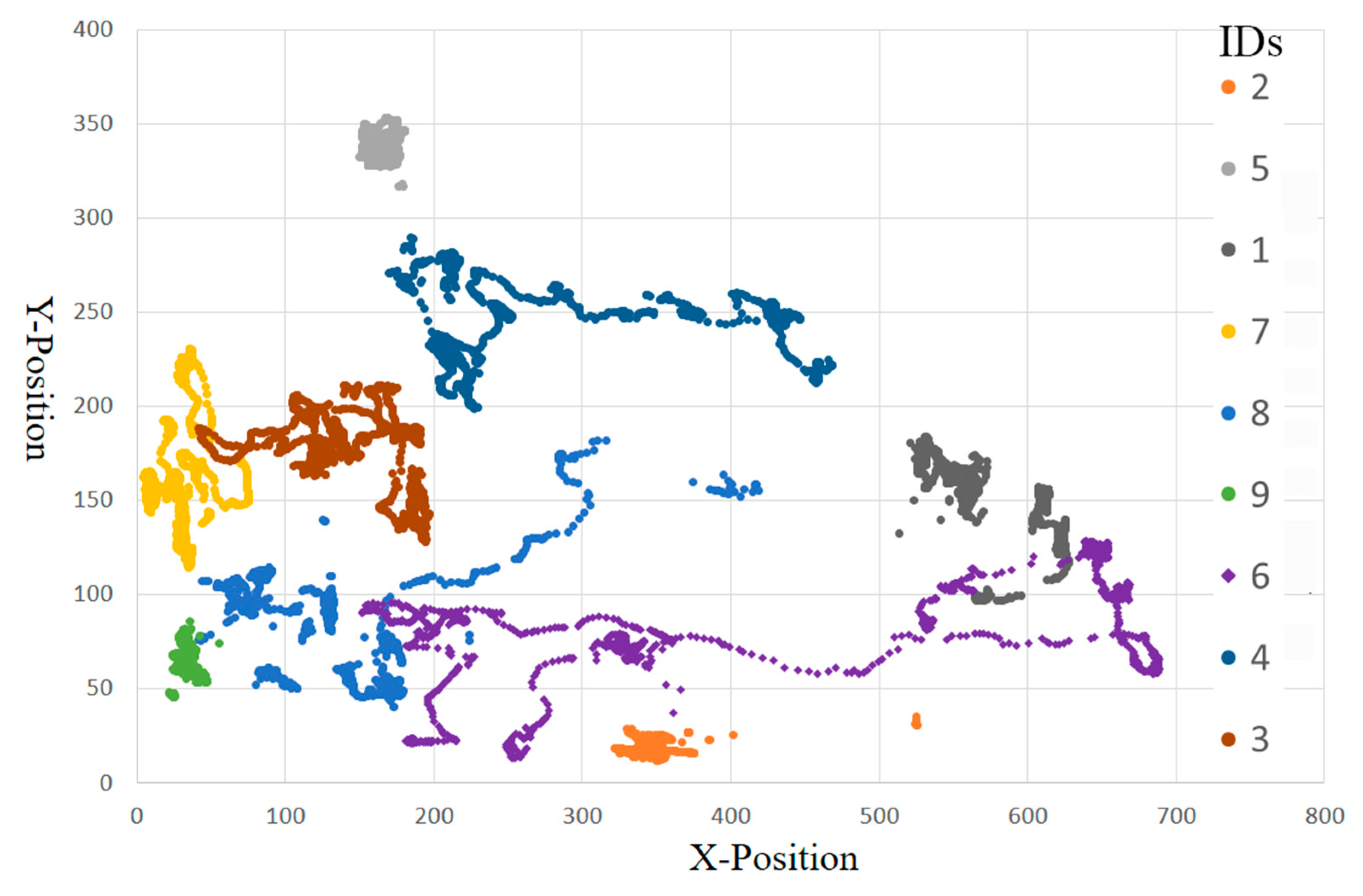

4. Experiments and Results

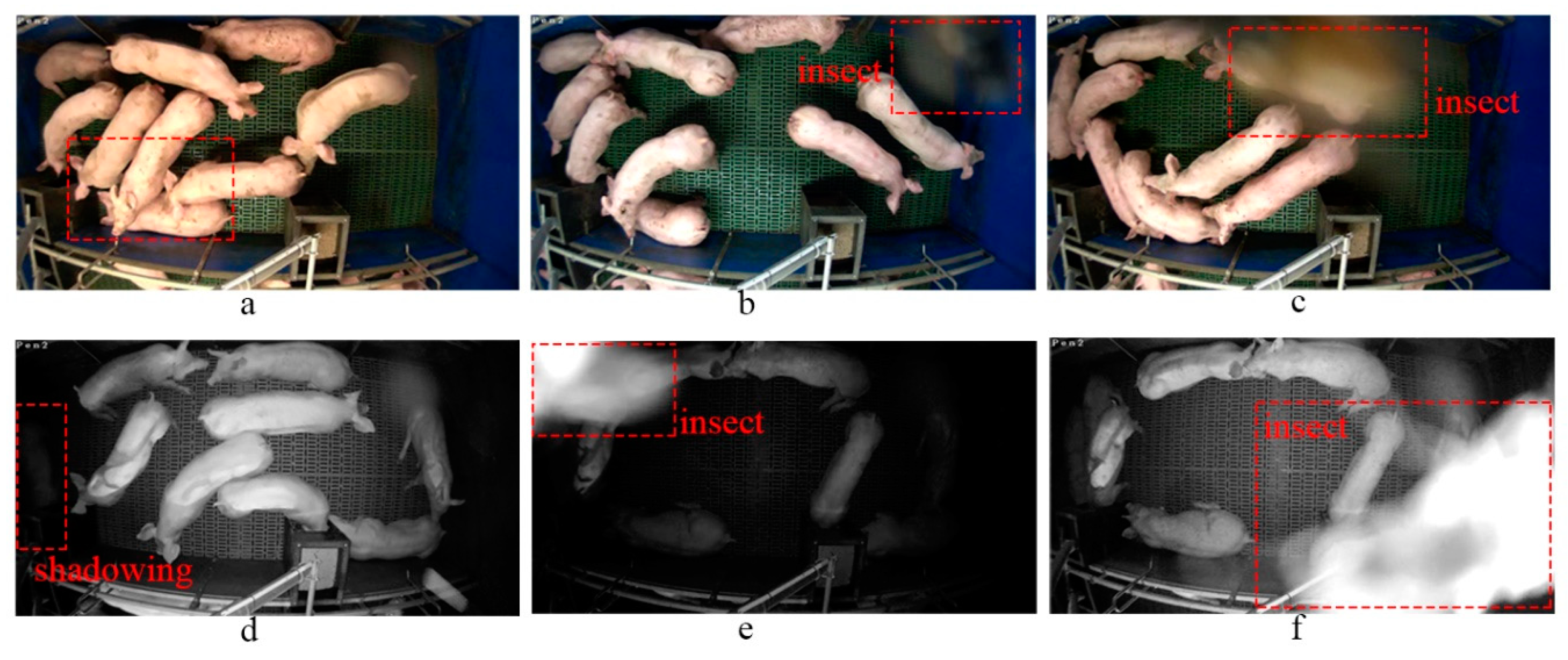

4.1. Materials and Evaluation Metrics

4.2. Implementation Details

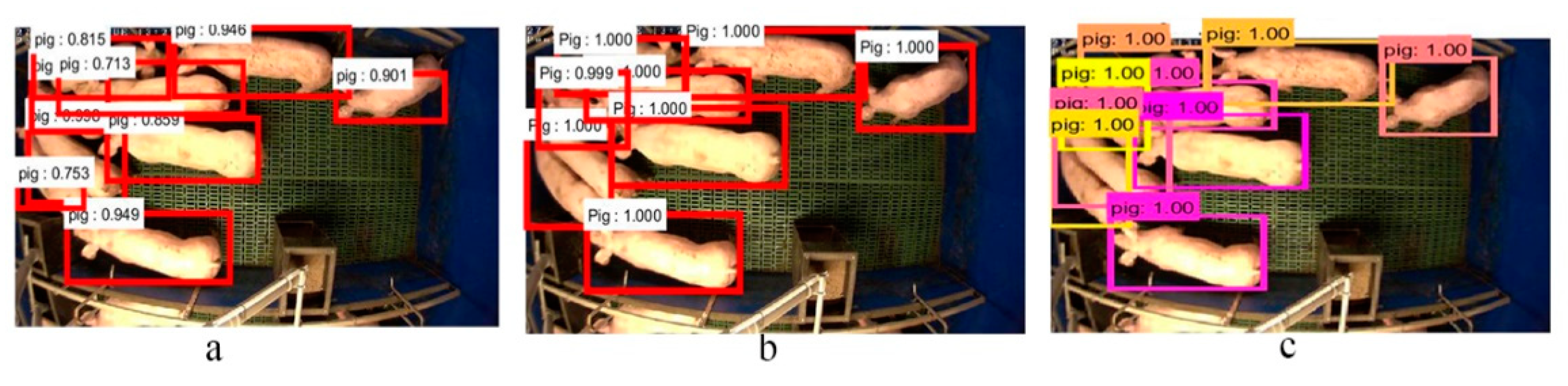

4.3. Experimental Results

5. Discussion and Further Work

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Sequence | Mode | Number of Frames | Conditions of the Sequence |
|---|---|---|---|
| S1 | Day | 800 | Deformations, light fluctuation, occlusions caused by pigs and by an insect resting on the camera for a long time |
| S2 | Day | 700 | Deformations, severe occlusions among pigs that made the object instances invisible during the occlusions |
| S3 | Day | 1500 | Deformations, light fluctuation, occlusions caused by an insect, occlusions among the pigs |
| S4 | Night | 600 | Deformations, occlusions caused by an insect, occlusions among the pigs |
| S5 | Night | 600 | Deformations, occlusions among the pigs |

| Metric | Description |
|---|---|
| Recall ↑ | Percentage of correctly matched detections to ground-truth detections |
| Precision ↑ | Percentage of correctly matched detections to total detections |
| FAF ↓ | Number of false alarms per frame |
| MT ↑, PT, ML ↓ | Number of mostly tracked, partially tracked and mostly lost trajectories |
| IDs ↓ | Number of identity switches |
| FRA ↓ | Number of fragmentations of trajectories |
| MOTA ↑ | Overall multiple object tracking accuracy |
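As a rough illustration of how the count-based metrics in the table relate to each other, the sketch below computes recall, precision, FAF and MOTA from raw counts. It assumes the standard CLEAR MOT definition, MOTA = 1 − (FN + FP + IDs) / GT, and the `Counts` container and its field names are hypothetical; the numbers in the usage note are made up and are not results from this paper.

```python
from dataclasses import dataclass


@dataclass
class Counts:
    """Hypothetical per-sequence tallies accumulated over all frames."""
    gt: int           # ground-truth detections
    tp: int           # correctly matched detections
    fp: int           # false alarms
    id_switches: int  # identity switches (IDs)
    frames: int       # number of frames in the sequence


def recall(c: Counts) -> float:
    """Correctly matched detections relative to ground-truth detections."""
    return c.tp / c.gt


def precision(c: Counts) -> float:
    """Correctly matched detections relative to all reported detections."""
    return c.tp / (c.tp + c.fp)


def faf(c: Counts) -> float:
    """False alarms per frame."""
    return c.fp / c.frames


def mota(c: Counts) -> float:
    """CLEAR MOT accuracy: 1 - (misses + false alarms + ID switches) / GT."""
    fn = c.gt - c.tp  # missed ground-truth detections
    return 1.0 - (fn + c.fp + c.id_switches) / c.gt
```

For instance, `Counts(gt=1000, tp=950, fp=40, id_switches=10, frames=100)` gives a recall of 0.95, an FAF of 0.4 and a MOTA of 0.9.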

| Sequence ID | Recall (%) ↑ | Precision (%) ↑ | FAF ↓ | MT ↑ | PT | ML ↓ | IDs ↓ | FRA ↓ | MOTA (%) ↑ |
|---|---|---|---|---|---|---|---|---|---|
| S1 | 91.51 | 91.95 | 0.72 | 8 | 1 | 0 | 28 | 106 | 83.9 |
| S2 | 94.12 | 93.98 | 0.54 | 9 | 0 | 0 | 30 | 123 | 88.1 |
| S3 | 97.64 | 97.57 | 0.22 | 9 | 0 | 0 | 10 | 52 | 95.2 |
| S4 | 92.90 | 92.79 | 0.65 | 9 | 0 | 0 | 14 | 26 | 85.7 |
| S5 | 97.52 | 97.30 | 0.21 | 9 | 0 | 0 | 8 | 24 | 95.0 |
| Average | 94.74 | 94.72 | 0.47 | 8.8 | 0.2 | 0 | 18 | 66.2 | 89.58 |
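The Average row can be reproduced from the five per-sequence rows as an unweighted mean. The short check below copies the values from the table; the variable names are illustrative, and the assumption is that every sequence carries equal weight regardless of its number of frames.

```python
from statistics import mean

columns = ("Recall", "Precision", "FAF", "MT", "PT", "ML", "IDs", "FRA", "MOTA")

# Per-sequence results copied from the table above.
results = {
    "S1": (91.51, 91.95, 0.72, 8, 1, 0, 28, 106, 83.9),
    "S2": (94.12, 93.98, 0.54, 9, 0, 0, 30, 123, 88.1),
    "S3": (97.64, 97.57, 0.22, 9, 0, 0, 10, 52, 95.2),
    "S4": (92.90, 92.79, 0.65, 9, 0, 0, 14, 26, 85.7),
    "S5": (97.52, 97.30, 0.21, 9, 0, 0, 8, 24, 95.0),
}

# Unweighted mean of each column, rounded to two decimals as in the table.
averages = {
    name: round(mean(row[k] for row in results.values()), 2)
    for k, name in enumerate(columns)
}

for name, value in averages.items():
    print(f"{name}: {value}")
```

Because the mean is unweighted, the 1500-frame sequence S3 counts no more than the 600-frame sequences S4 and S5.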

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhang, L.; Gray, H.; Ye, X.; Collins, L.; Allinson, N. Automatic Individual Pig Detection and Tracking in Pig Farms. Sensors 2019, 19, 1188. https://doi.org/10.3390/s19051188
