Integrate Weather Radar and Monitoring Devices for Urban Flooding Surveillance

Abstract

1. Introduction

1.1. The Urban Flood Disaster

1.2. Establishment of Automated Urban Flood Monitoring System

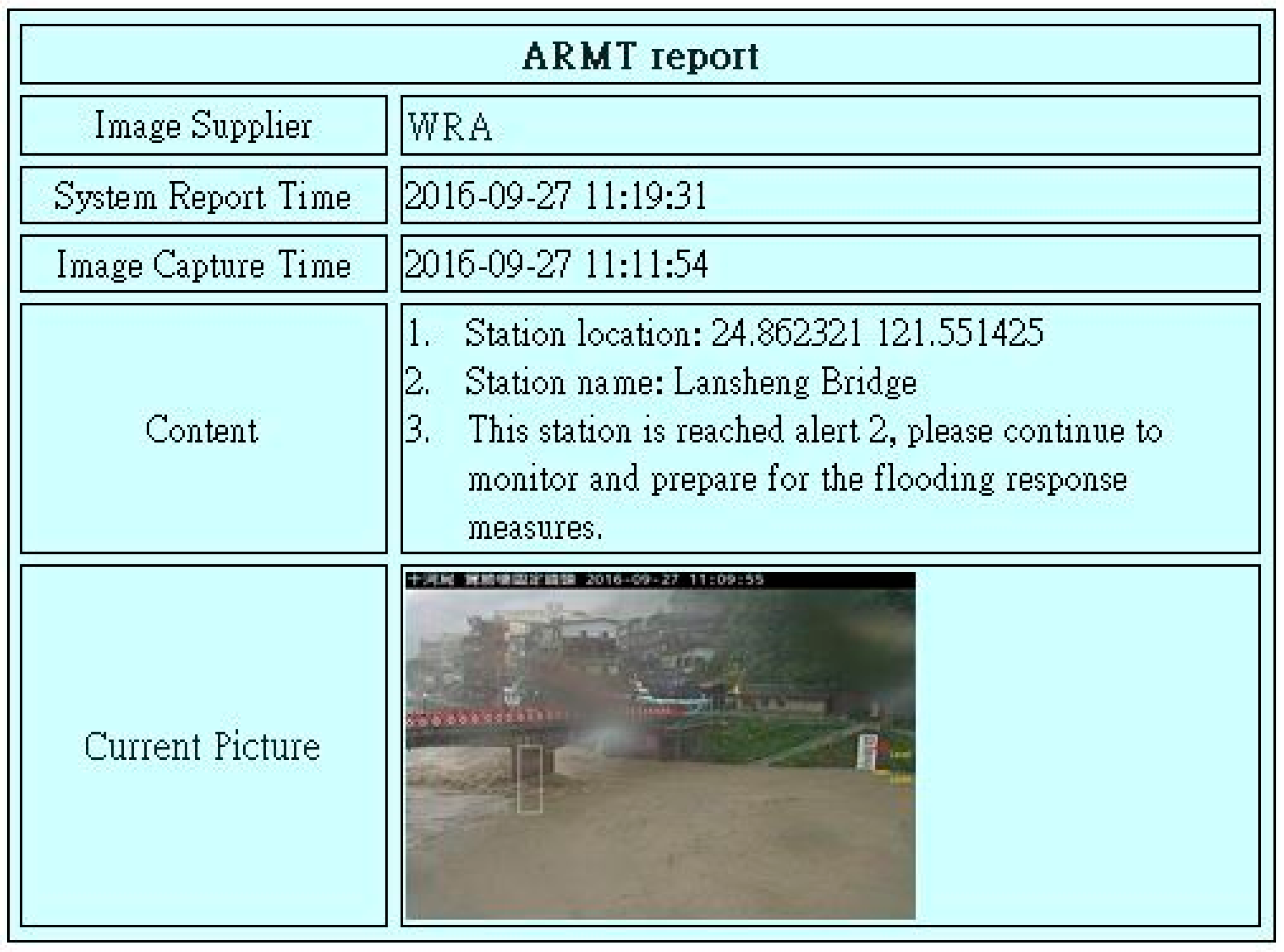

2. Intelligent Urban Flood Surveillance

2.1. Cloud Thickness Estimation with Respect to Weather Radar

- Step 1:

- Connect to the Central Weather Bureau website to acquire terrain-free weather radar echo maps.

- Step 2:

- Process the images: convert them to grayscale, scale the echo map matrix so that it overlays the Taiwan administrative region map, and convert pixel positions into latitude and longitude.

- Step 3:

- Estimate the cloud cover shown on the weather radar within latitudes 21 to 26 degrees north and longitudes 119 to 123 degrees east. This involves estimating cloud thickness, calculating the centroid, and comparing CCTV images within radii of 5, 10, and 50 km of the centroid; the images are obtained by looking up CCTV locations in the database.

- Step 4:

- Monitor continuously through CCTV cameras for 24 h. If waterlogging or flooding occurs, issue a notice.

- Step 5:

- Stop mechanism: monitoring stops when no cloud cover has been detected for 2 consecutive hours within latitudes 21 to 26 degrees north and longitudes 119 to 123 degrees east.
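Steps 2 and 3 above can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes the grayscale echo map covers exactly the 21 to 26 degrees north, 119 to 123 degrees east window with a linear pixel-to-coordinate mapping, it uses an intensity-weighted centroid, and it measures distances with the haversine formula. The function names and the camera tuples are hypothetical.

```python
import math

# Bounding box quoted in Steps 3 and 5 (degrees).
LAT_MIN, LAT_MAX = 21.0, 26.0
LON_MIN, LON_MAX = 119.0, 123.0


def pixel_to_latlon(row, col, height, width):
    """Linearly map a pixel centre to (lat, lon); row 0 is assumed to be the northern edge."""
    lat = LAT_MAX - (row + 0.5) / height * (LAT_MAX - LAT_MIN)
    lon = LON_MIN + (col + 0.5) / width * (LON_MAX - LON_MIN)
    return lat, lon


def echo_centroid(gray, threshold=0):
    """Intensity-weighted centroid (lat, lon) of pixels above threshold, or None if there are none."""
    total = row_sum = col_sum = 0.0
    for r, line in enumerate(gray):
        for c, value in enumerate(line):
            if value > threshold:
                total += value
                row_sum += r * value
                col_sum += c * value
    if total == 0:
        return None
    return pixel_to_latlon(row_sum / total, col_sum / total, len(gray), len(gray[0]))


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km on a sphere of radius 6371 km."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))


def cameras_within(centroid, cameras, radius_km):
    """Names of cameras (name, lat, lon) no farther than radius_km from the centroid."""
    lat_c, lon_c = centroid
    return [name for name, lat, lon in cameras
            if haversine_km(lat_c, lon_c, lat, lon) <= radius_km]
```

Calling `cameras_within` with radii of 5, 10, and 50 km in turn would yield the three nested camera sets that Step 3 compares.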

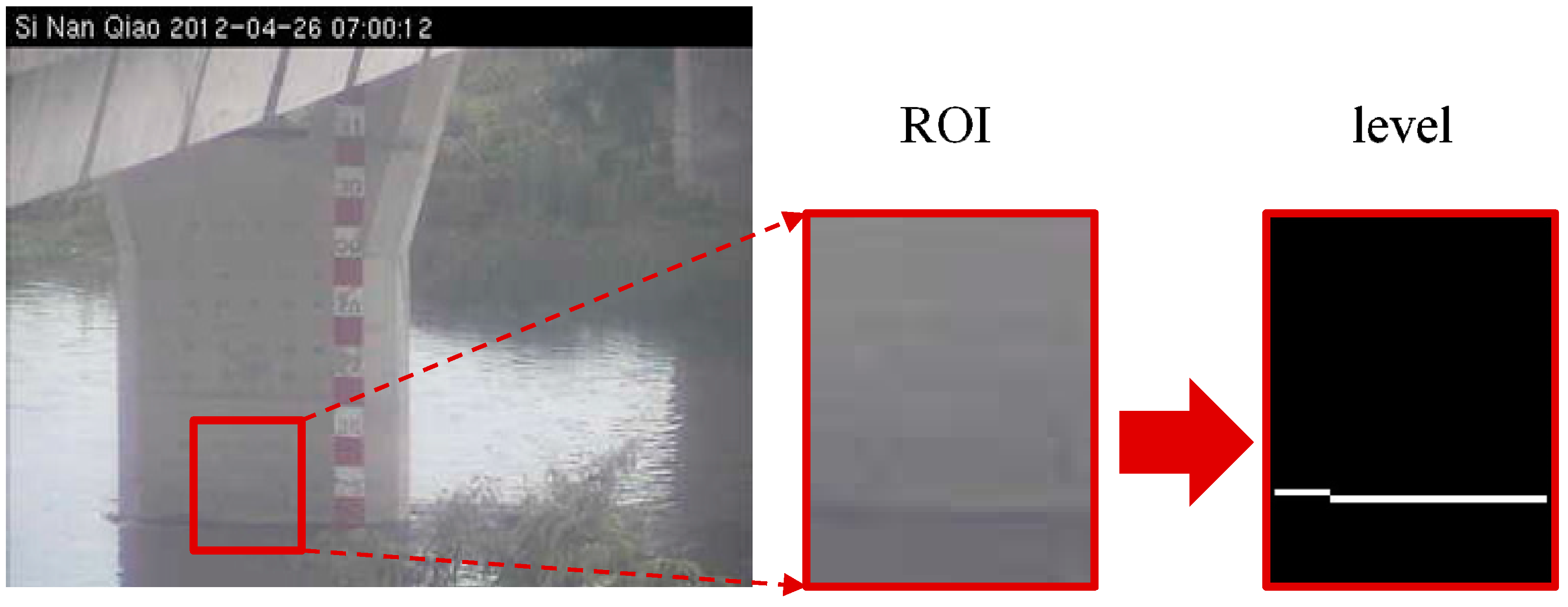

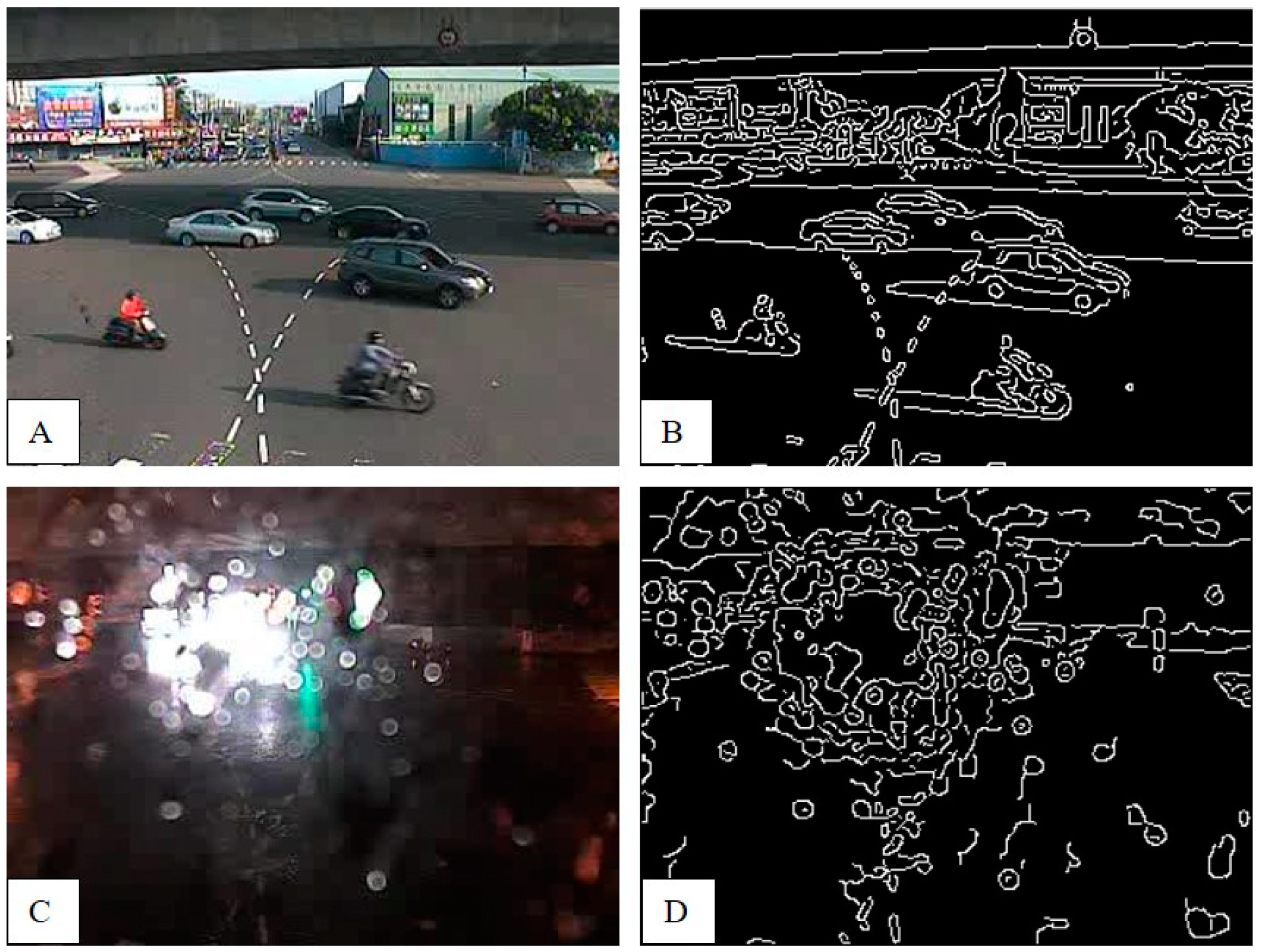

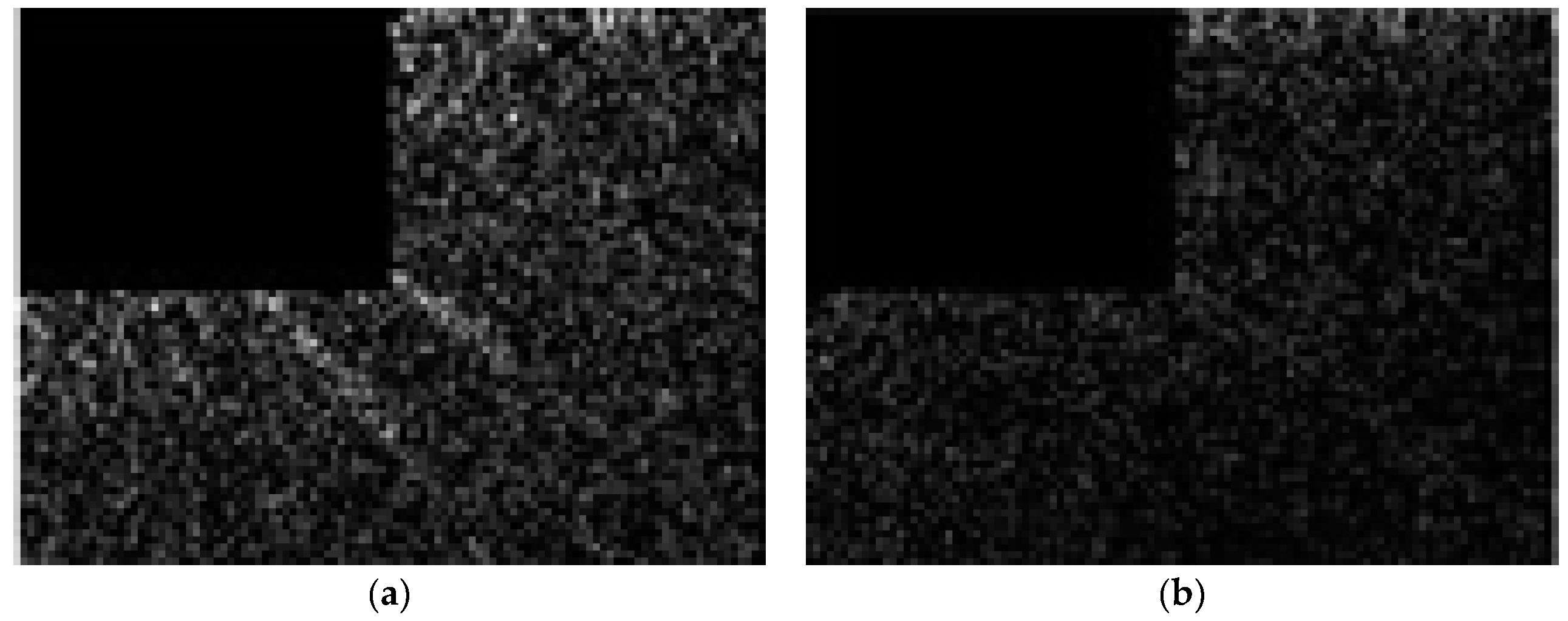

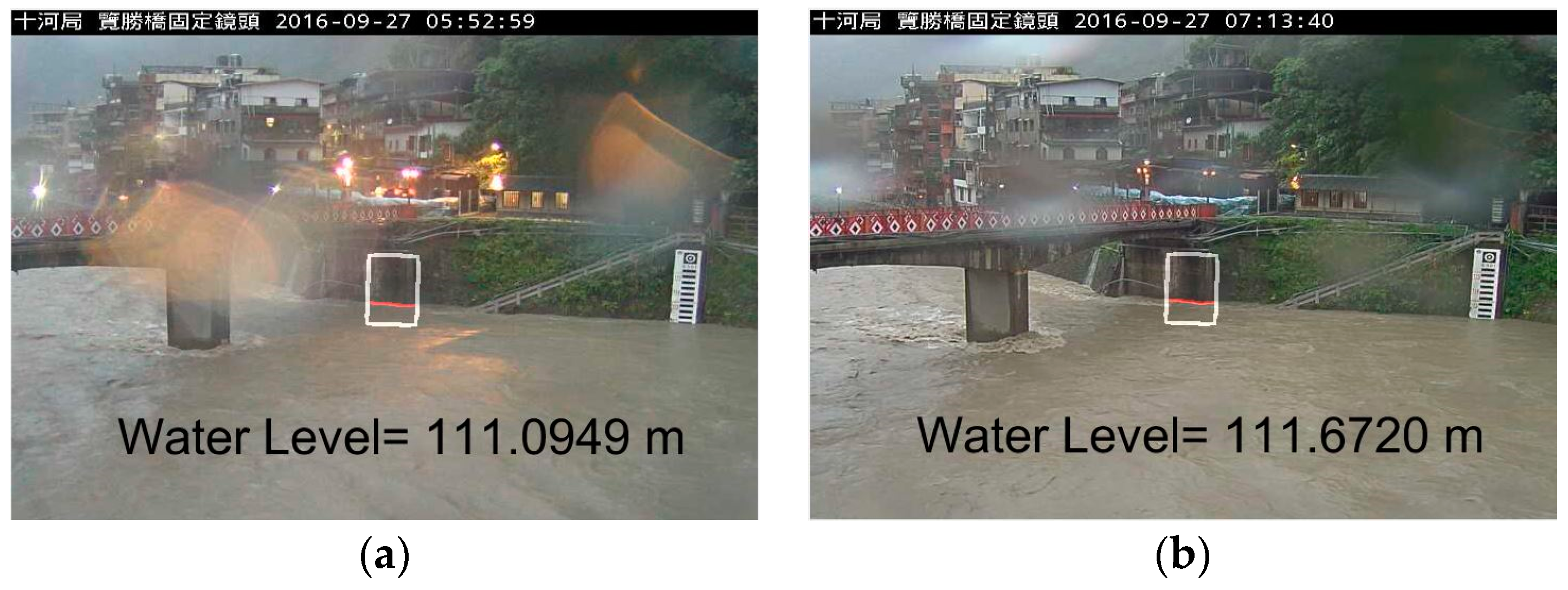

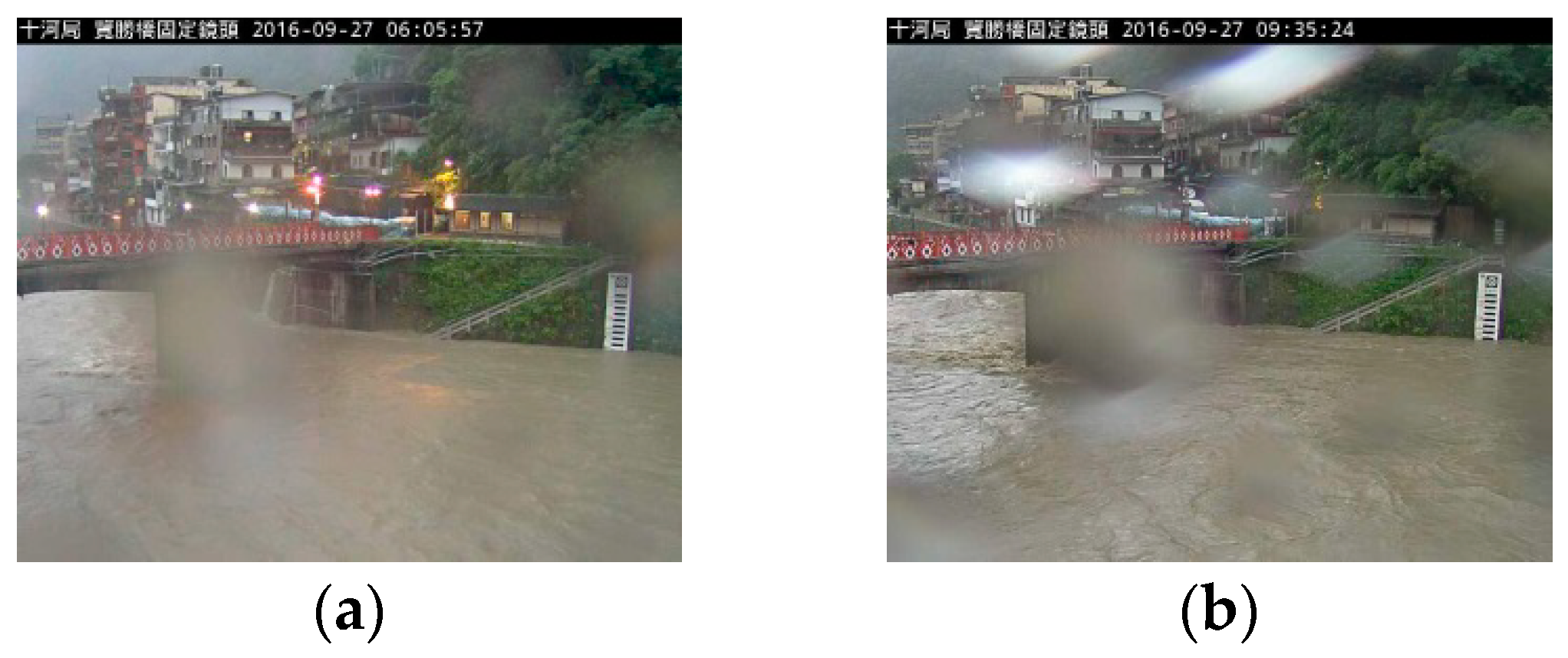
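The stop mechanism in Step 5 amounts to a timer that resets whenever cloud cover is detected. The sketch below is one possible reading, assuming each radar image is reduced to a timestamp and a boolean cloud flag; the class name `StopMonitor` and its interface are hypothetical.

```python
from datetime import datetime, timedelta

# Step 5: stop after 2 consecutive hours without detected cloud cover.
CLEAR_PERIOD = timedelta(hours=2)


class StopMonitor:
    def __init__(self):
        # Time of the first cloud-free observation in the current clear run.
        self.clear_since = None

    def update(self, timestamp, cloud_detected):
        """Record one observation; return True when monitoring should stop."""
        if cloud_detected:
            self.clear_since = None  # any cloud cover resets the clear run
            return False
        if self.clear_since is None:
            self.clear_since = timestamp
        return timestamp - self.clear_since >= CLEAR_PERIOD
```

Tracking only the start of the current clear run keeps the check independent of how often radar images arrive.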

2.2. Image Water Level Identification Technology

- Step 1:

- Select appropriate images according to the image features.

- Step 2:

- Select images with obvious target objects (e.g., a white wall, a column).

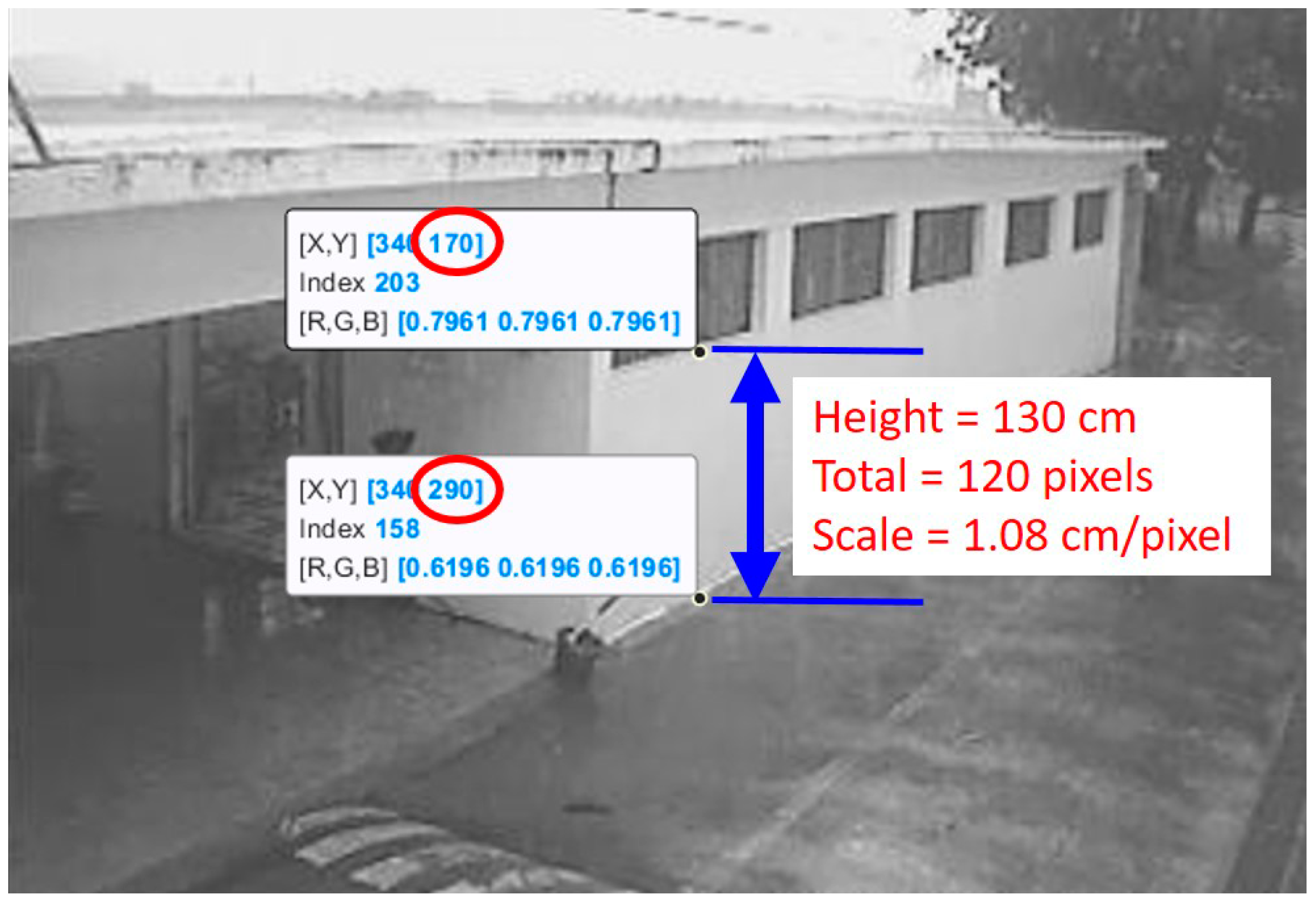

- Step 3:

- Design the virtual water gauge (also called a digital water gauge, image water depth identification region, or region of interest (ROI)) and obtain the image scale, which converts image pixels to actual size, in order to estimate the flood water depth. The image scale is expressed in centimeters per pixel (cm/pixel) and is determined in one of two ways. (1) Identifiable target objects in the image, such as a white wall, a telecommunications cabinet, or an electric pole, are used to derive the scale. (2) Where the image contains no fixed and obvious target object, the site is surveyed to obtain the conversion between image pixels and actual on-site size.

- Step 4:

- Use image processing and image quantification techniques to estimate the water level.

- Step 5:

- Correct errors in the estimated water level and store the result in the database for future warnings (issued by the implementation unit) and water level notifications (decided by the implementation unit).
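The image scale conversion in Step 3 can be illustrated as follows. This is a sketch under stated assumptions, not the authors' code: it assumes a target object of known height spans a measured number of pixels inside the ROI, and that water depth is read as the vertical pixel distance between a dry baseline row and the detected waterline row. The function names and the numbers in the usage note are hypothetical.

```python
def image_scale_cm_per_pixel(object_height_cm, object_height_px):
    """Image scale (cm/pixel) from a target object of known real height."""
    if object_height_px <= 0:
        raise ValueError("object_height_px must be positive")
    return object_height_cm / object_height_px


def water_depth_cm(dry_baseline_row, waterline_row, scale_cm_per_pixel):
    """Water depth from the ROI rows of the dry baseline and the detected waterline.

    Image rows grow downward, so a rising waterline has a smaller row index.
    A waterline at or below the baseline is treated as zero depth.
    """
    submerged_px = max(0, dry_baseline_row - waterline_row)
    return submerged_px * scale_cm_per_pixel
```

For instance, a 200 cm wall spanning 80 pixels gives a scale of 2.5 cm/pixel, and a waterline 10 pixels above the baseline then corresponds to a depth of 25 cm.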

2.3. Performance of Image Water Level Identification

3. Experimental Results and Discussion

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Station | Images | Resolution | Image Scale (cm/pixel) | \|Error\| < 50 cm (Number) | Reliability |
|---|---|---|---|---|---|
| Baoqiao | 200 | 352 × 288 | 8.55 | 166 | 0.83 |
| Xinan Bridge | 200 | 352 × 240 | 3.76 | 174 | 0.87 |
| Shimen Reservoir | 180 | 352 × 240 | 0.60 | 162 | 0.90 |
| Chiayi County Donggang | 200 | 352 × 240 | 2.32 | 184 | 0.92 |
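The Reliability column is numerically consistent with the fraction of images whose absolute error is below 50 cm. That reading is inferred from the table values rather than stated in the text, so the short check below should be taken as an assumption; the helper name `reliability` is hypothetical.

```python
def reliability(within_tolerance, total_images):
    """Fraction of images with |error| < 50 cm, rounded to two decimals."""
    return round(within_tolerance / total_images, 2)


# (station, images, images with |error| < 50 cm, reported reliability)
rows = [
    ("Baoqiao", 200, 166, 0.83),
    ("Xinan Bridge", 200, 174, 0.87),
    ("Shimen Reservoir", 180, 162, 0.90),
    ("Chiayi County Donggang", 200, 184, 0.92),
]

for station, total, within, reported in rows:
    print(station, reliability(within, total), reported)
```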

| Station | Images | Resolution | Image Scale (cm/pixel) | \|Error\| < 50 cm (Number) | Reliability | RMSE (cm) | MAE (cm) |
|---|---|---|---|---|---|---|---|
| Nangang Bridge | 198 | 704 × 480 | 14.44 | 185 | 0.93 | 95.04 | 30.25 |
| Xinan Bridge | 257 | 704 × 480 | 9.74 | 213 | 0.83 | 104.51 | 49.52 |
| Jiquan Bridge | 285 | 704 × 480 | 2.86 | 264 | 0.93 | 58.96 | 22.85 |
| Baoqiao | 340 | 704 × 480 | 3.45 | 316 | 0.93 | 21.77 | 10.79 |
| Shanggueishan Bridge | 338 | 704 × 480 | 10.06 | 267 | 0.79 | 42.09 | 40.30 |
| Lansheng Bridge | 156 | 704 × 480 | 7.21 | 141 | 0.90 | 41.41 | 21.86 |
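For reference, the RMSE and MAE columns can be computed from paired estimated and observed water levels using the standard definitions sketched below. The sample values in the test are illustrative only and are not taken from the study.

```python
import math


def rmse(estimated, observed):
    """Root-mean-square error between paired estimates and observations."""
    errors = [e - o for e, o in zip(estimated, observed)]
    return math.sqrt(sum(err ** 2 for err in errors) / len(errors))


def mae(estimated, observed):
    """Mean absolute error between paired estimates and observations."""
    errors = [e - o for e, o in zip(estimated, observed)]
    return sum(abs(err) for err in errors) / len(errors)
```

Because squaring weights large errors more heavily, RMSE is never smaller than MAE. A wide gap between the two, as in the Nangang Bridge row, is what a small number of large errors would produce.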

| Location | Hit (Rate) | Miss (Rate) | False (Rate) | Total |
|---|---|---|---|---|
| Nangang Bridge | 196 (0.990) | 0 (0.000) | 2 (0.010) | 198 |
| Xinan Bridge | 256 (0.996) | 0 (0.000) | 1 (0.004) | 257 |
| Jiquan Bridge | 282 (0.990) | 0 (0.000) | 3 (0.010) | 285 |
| Baoqiao | 319 (0.938) | 11 (0.032) | 10 (0.030) | 340 |
| Shanggueishan Bridge | 335 (0.991) | 0 (0.000) | 3 (0.009) | 338 |
| Lansheng Bridge | 143 (0.917) | 9 (0.057) | 4 (0.026) | 156 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Hsu, S.-Y.; Chen, T.-B.; Du, W.-C.; Wu, J.-H.; Chen, S.-C. Integrate Weather Radar and Monitoring Devices for Urban Flooding Surveillance. Sensors 2019, 19, 825. https://doi.org/10.3390/s19040825
