Towards Goal-Directed Navigation Through Combining Learning Based Global and Local Planners

Abstract

1. Introduction

- We present a goal-directed navigation system that integrates a global planner and a local planner, both of which are learning-based.

- We extend standard end-to-end learning to a goal-directed end-to-end learning algorithm that incorporates both the local observation and the goal representation when predicting actions. The proposed algorithm is used to train a global planner that predicts actions conditioned on the goal in goal-directed navigation.

- We use deep reinforcement learning to train a local planner that performs object avoidance. A dueling-architecture-based deep double Q-network (D3QN) is adopted. The planner is trained in simulation and then transferred to the real world.

- We conduct extensive experiments in both simulation and the real world to demonstrate the efficiency of the proposed navigation system.

2. Related Work

2.1. Robot Navigation Based on Supervised Learning

2.2. Robot Navigation Based on Reinforcement Learning

2.3. Combining Global and Local Planners for Robot Navigation

3. Materials and Models

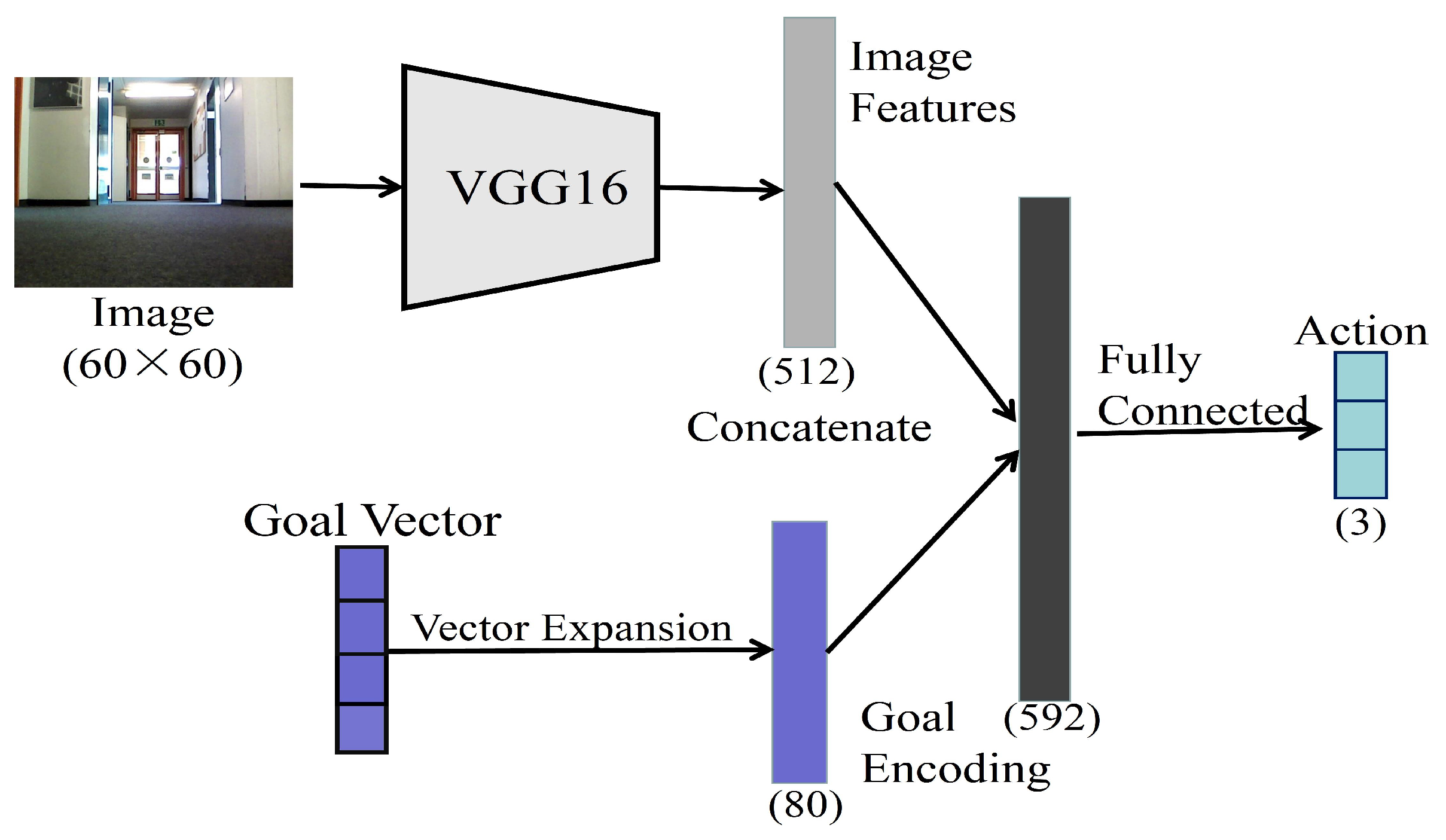

3.1. Goal-Directed End-to-End Learning

3.2. Implementation of the Goal-Directed End-to-End Learning

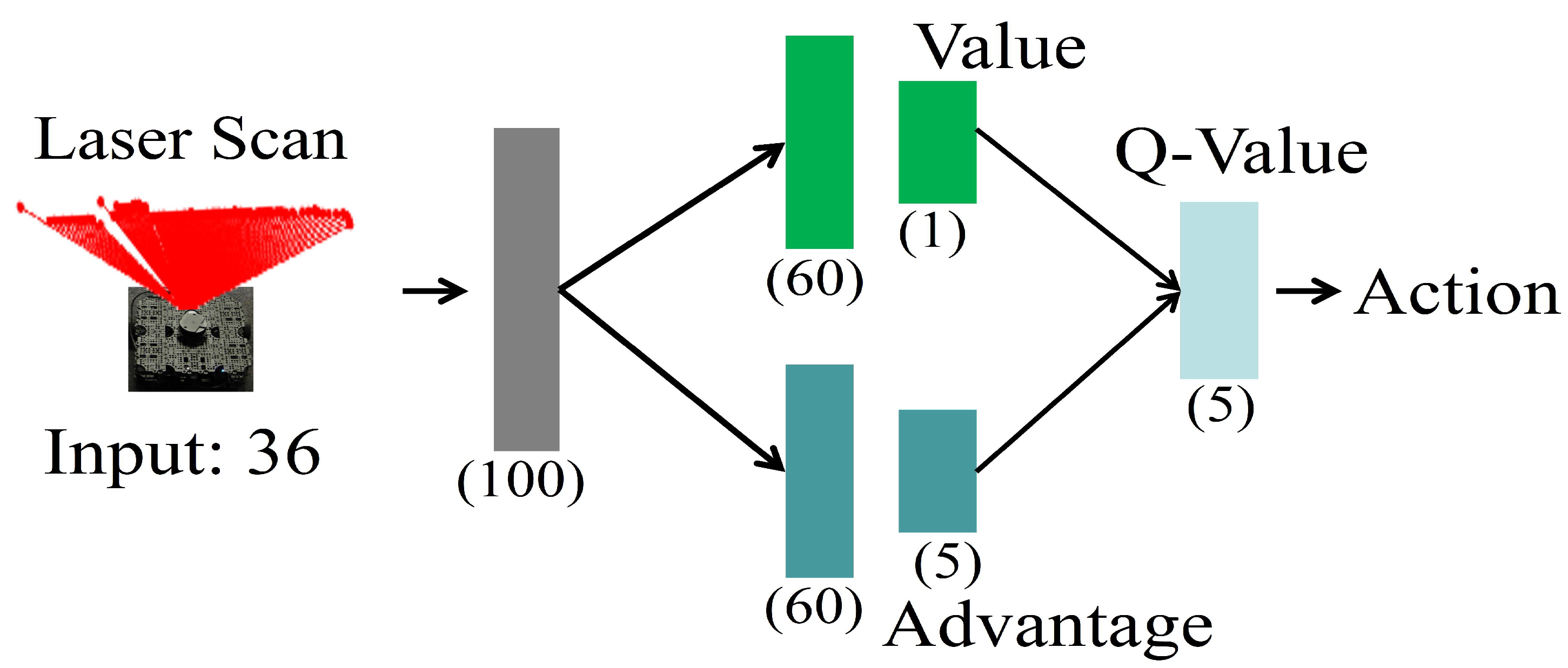

3.3. Reinforcement Learning for Local Object Avoidance

3.4. Deep Q-Learning

3.5. Double Q-Learning

3.6. Dueling Q-Learning

3.7. Implementation of D3QN

3.8. Switching Between Two Different Strategies

4. Experimental Results

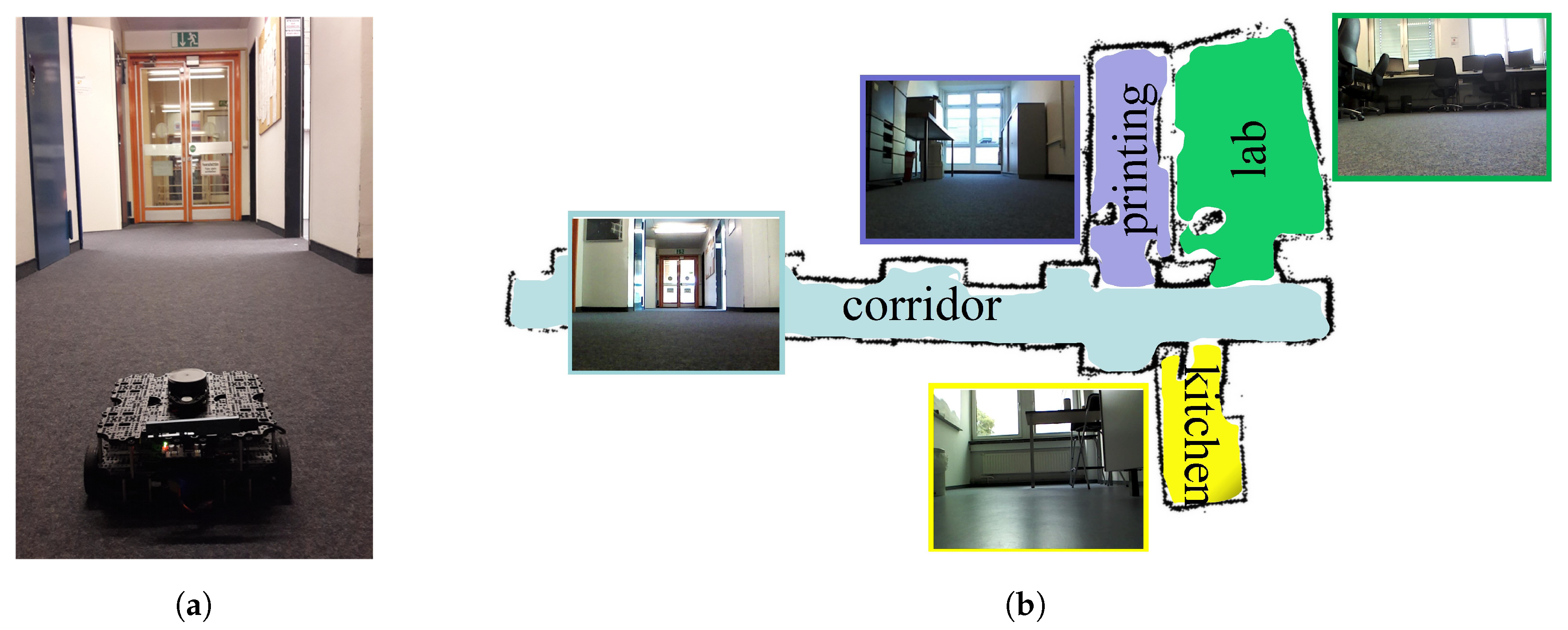

4.1. Experimental Setup

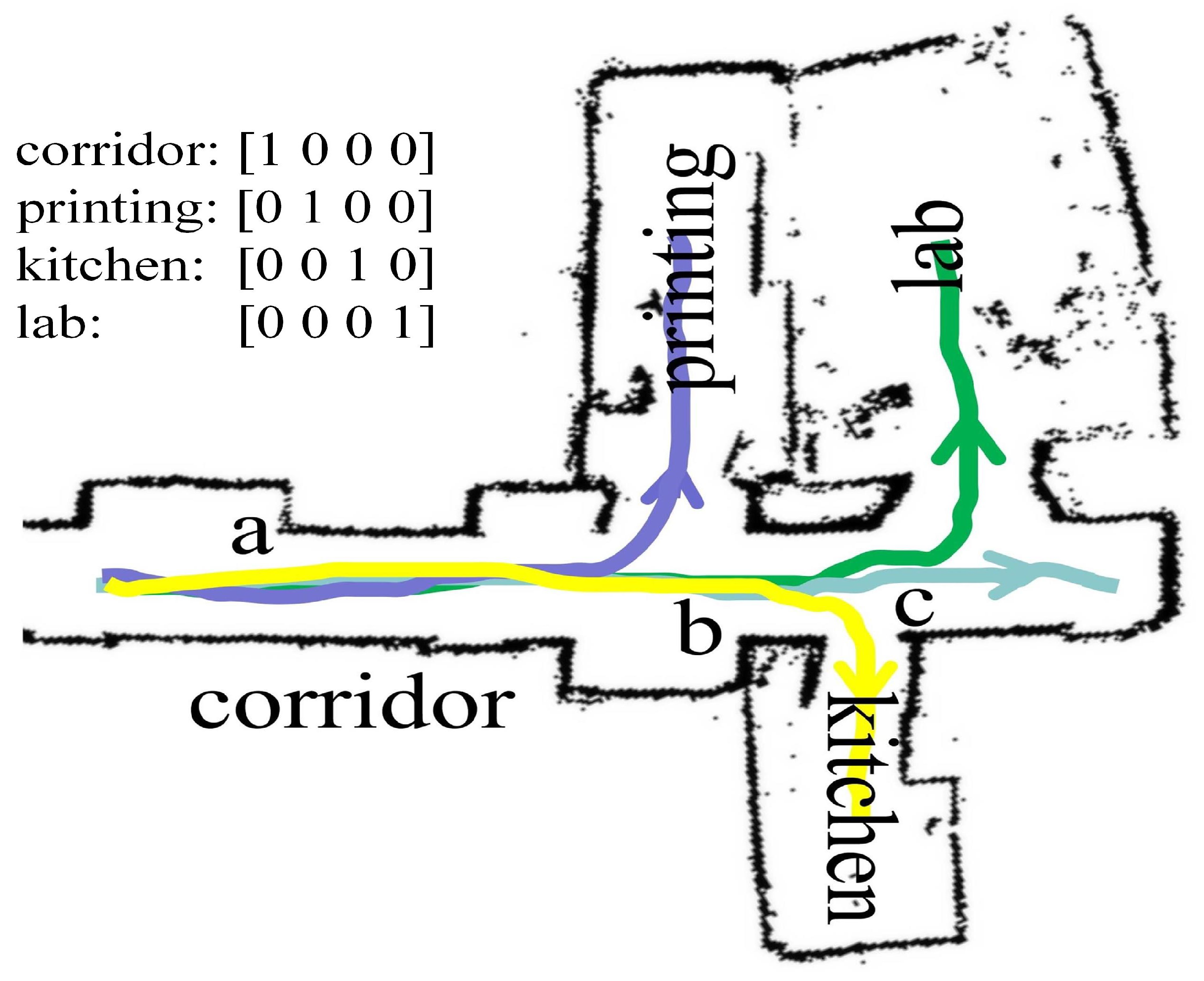

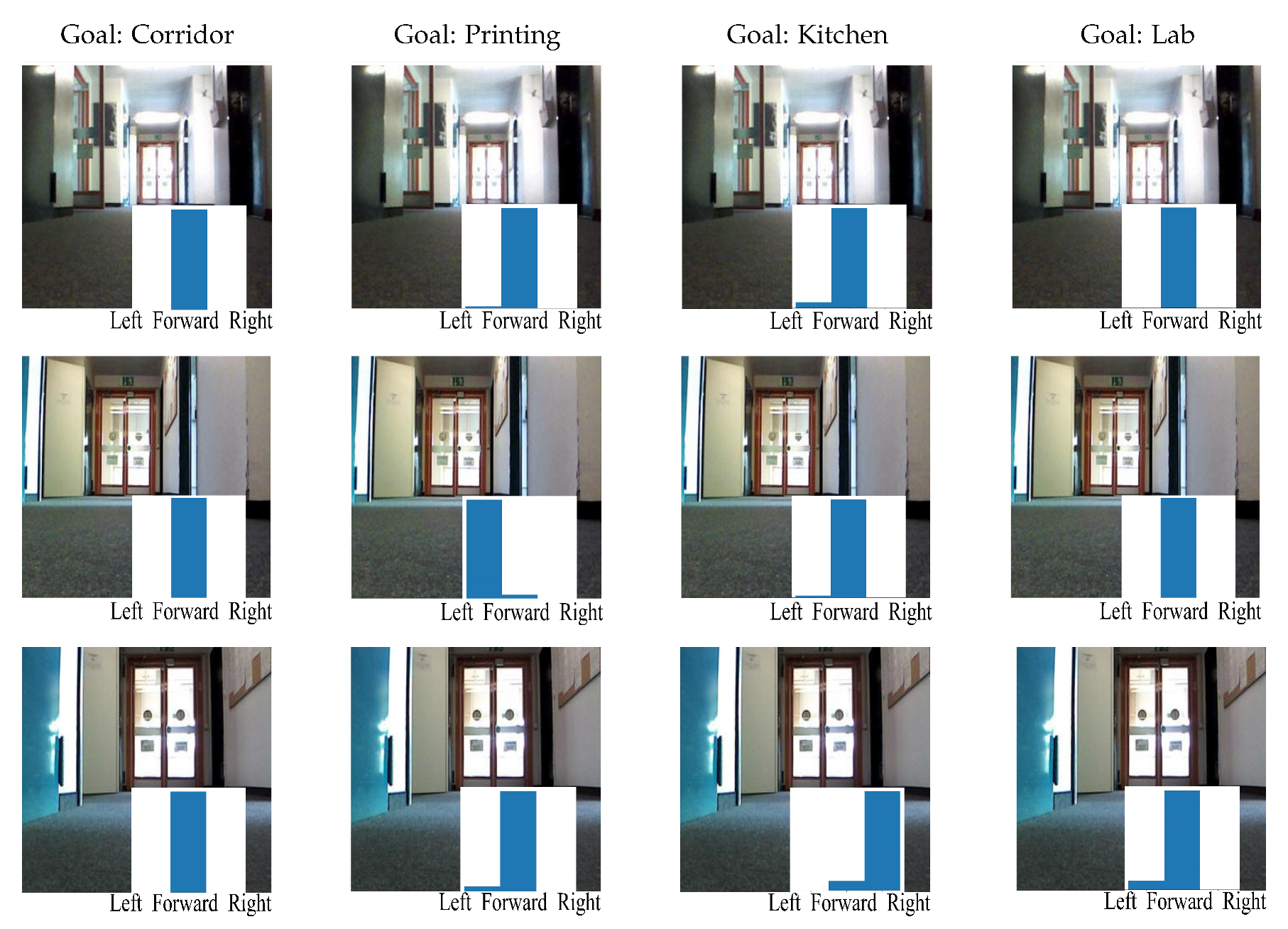

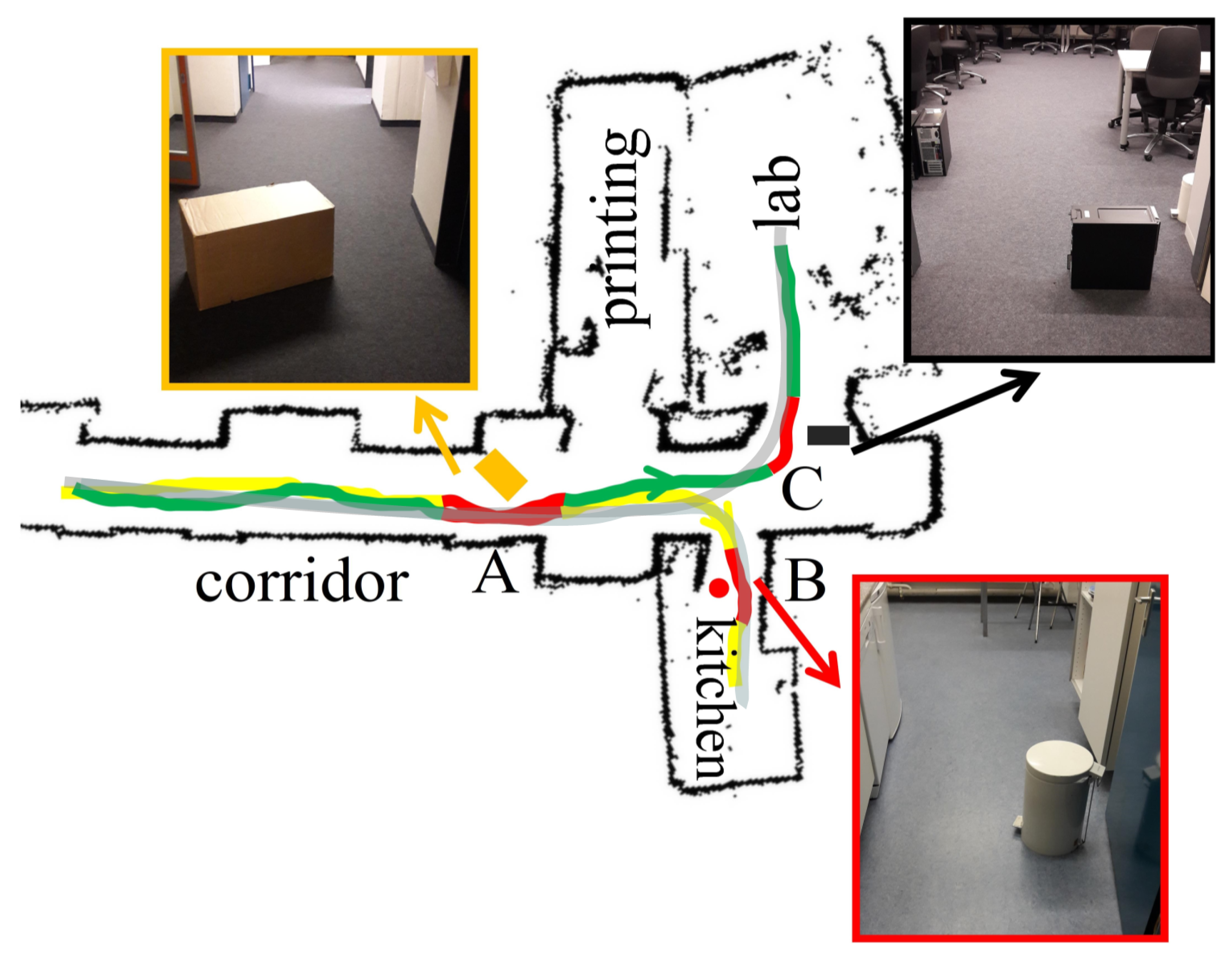

4.2. Global Planner for Navigating Towards the Goal

4.2.1. Data Preparation and Training

4.2.2. Results of the Global Planner

4.3. Local Planner for Object Avoidance

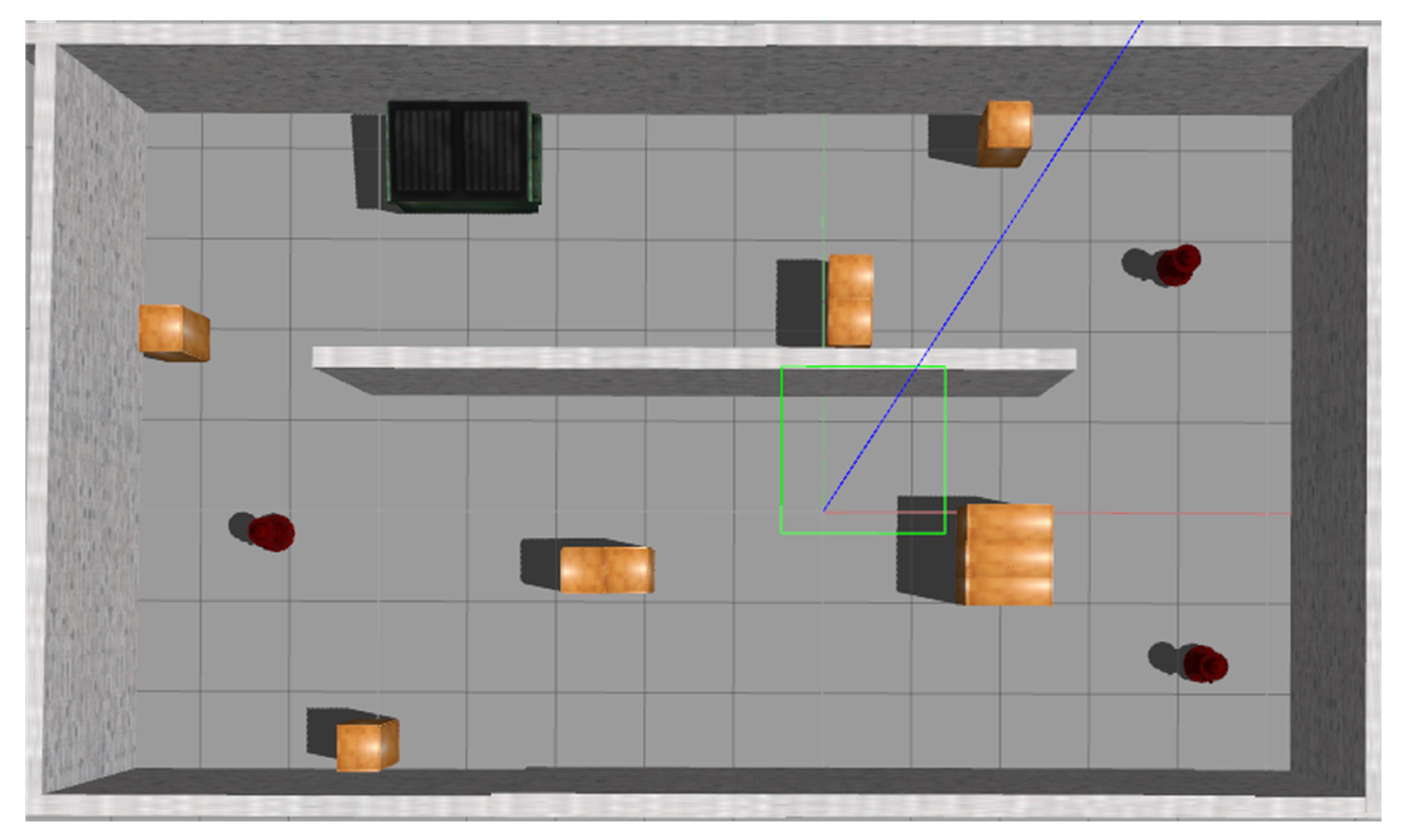

4.3.1. Training in Simulation

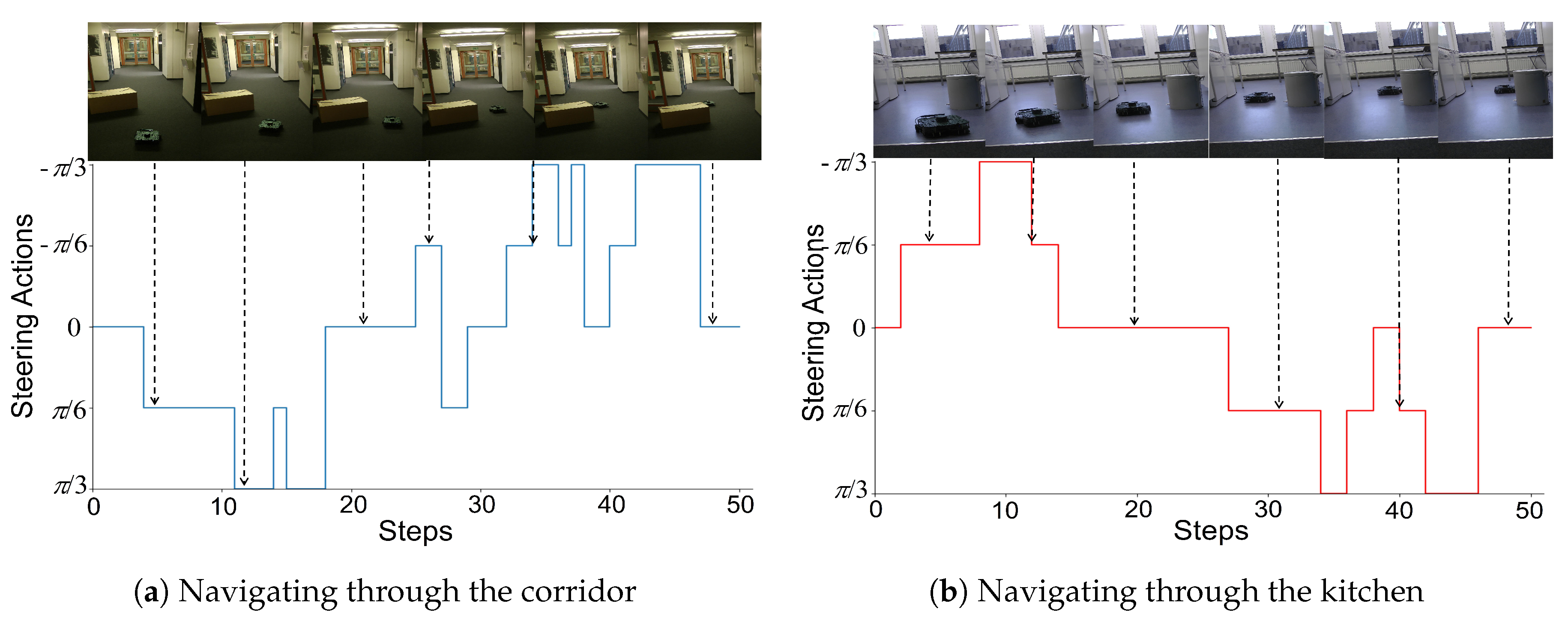

- Action space: In this navigation task, the robot moves forward with a constant step length (0.03 m), and the actions control the robot’s angular velocity in discretized form. There are 5 actions: two left turns of different magnitudes, moving forward, and two right turns of different magnitudes. In contrast to the global planner, which has only 3 actions, the local planner has a finer action resolution, which is better suited to local maneuvers.

- Observations and Goals: The state of the robot is represented by 36 range readings sampled from the raw laser scan. During navigation, the robot’s objective is to learn an action policy that lets it bypass the objects placed in the environment. Since the robot moves with a constant linear velocity, the learning task essentially requires the robot to adjust its angular velocity based on its spatial position relative to the objects.

- Reward: For each step, the immediate reward is 0.1. If a collision is detected, the episode terminates immediately with an additional punishment of −20. Otherwise, the episode lasts until it reaches the maximum number of steps (200 steps in our experiments) and terminates without punishment. Simply rotating on the spot is also punished. The reward function is designed to keep the robot moving in the environment for as long as possible without colliding with walls or objects. The total episode reward is the sum of the immediate rewards over all steps within an episode.
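The reward scheme described above can be sketched as a small function. This is a minimal illustration rather than the authors' implementation: the per-step reward (0.1), the collision punishment (−20) and the 200-step cap come from the text, while the names `step_reward` and `episode_return`, the `spinning` flag, and the value of `SPIN_PENALTY` are assumptions, since the text does not say how rotating on the spot is detected or how strongly it is punished.

```python
from typing import Iterable, Tuple

STEP_REWARD = 0.1          # per-step reward stated in the text
COLLISION_PENALTY = -20.0  # additional punishment on collision, from the text
MAX_STEPS = 200            # maximum episode length, from the text
SPIN_PENALTY = -0.05       # ASSUMPTION: the text gives no value for this penalty


def step_reward(collided: bool, spinning: bool, step_index: int) -> Tuple[float, bool]:
    """Return (reward, done) for one step; step_index counts from 0."""
    reward = STEP_REWARD
    if spinning:
        # ASSUMPTION: the spin penalty is added on top of the step reward.
        reward += SPIN_PENALTY
    if collided:
        # A collision ends the episode with the additional punishment.
        return reward + COLLISION_PENALTY, True
    # Otherwise the episode ends, unpunished, once the step cap is reached.
    done = step_index + 1 >= MAX_STEPS
    return reward, done


def episode_return(events: Iterable[Tuple[bool, bool]]) -> float:
    """Sum rewards over (collided, spinning) pairs until the episode ends."""
    total = 0.0
    for step_index, (collided, spinning) in enumerate(events):
        reward, done = step_reward(collided, spinning, step_index)
        total += reward
        if done:
            break
    return total
```

Under these assumptions, a collision-free episode that runs the full 200 steps accumulates roughly 200 × 0.1 = 20, while an early collision drives the return strongly negative, which is what pushes the policy toward long, collision-free trajectories.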

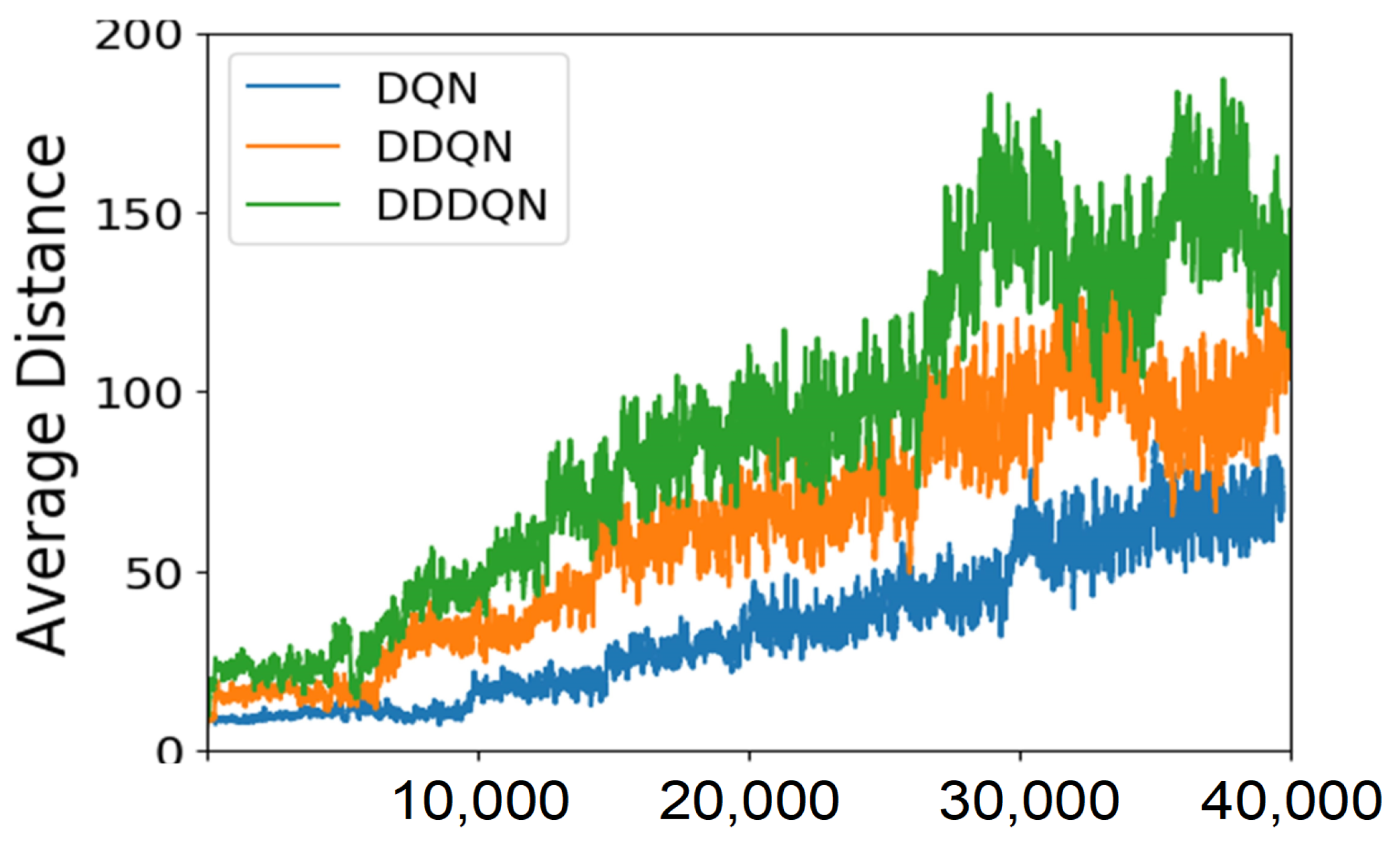

4.3.2. Results in the Simulation

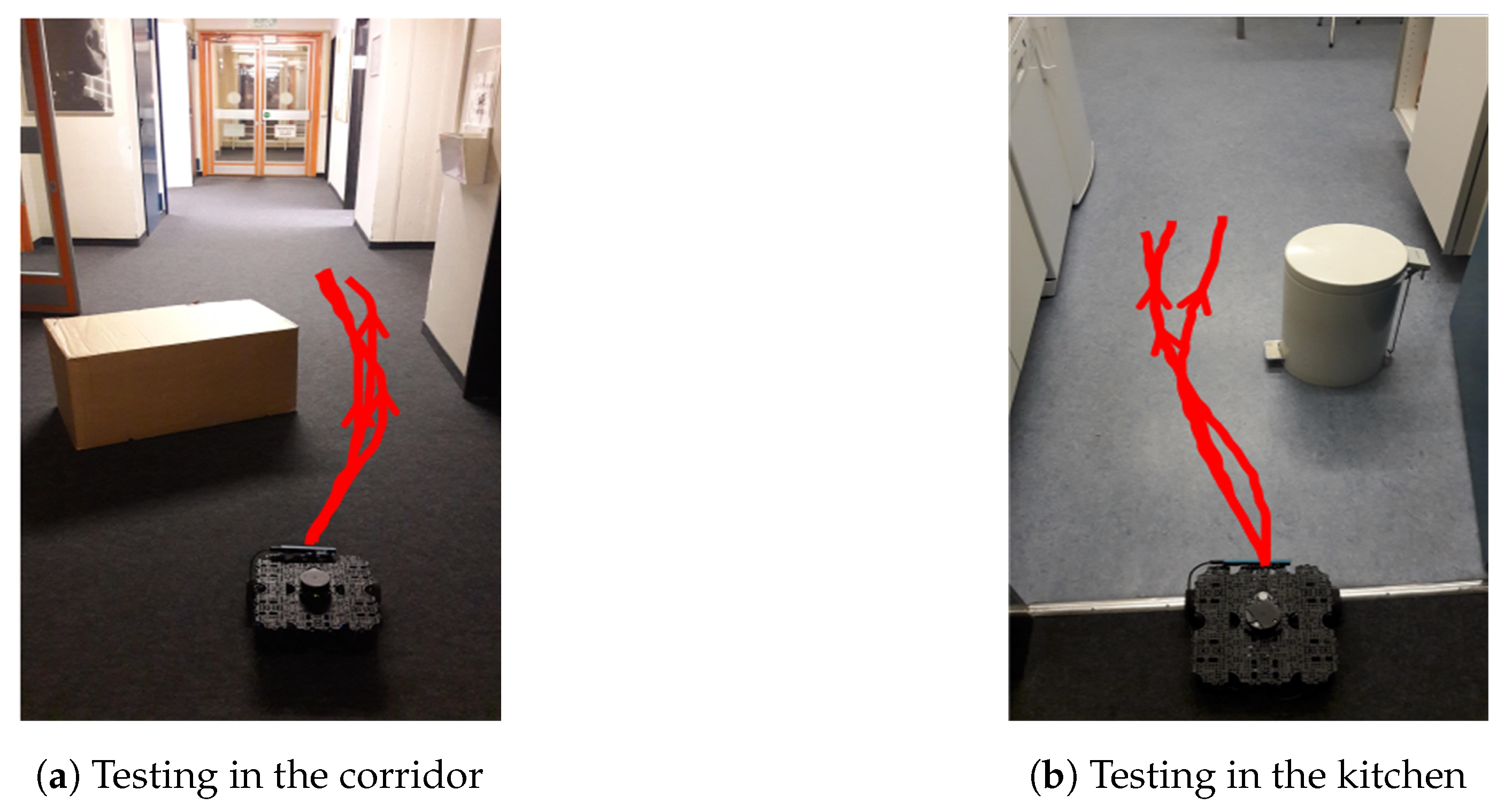

4.3.3. Results in the Real World

4.4. Combining the Global and Local Planners

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Layer Name | Layer Type | Number of Neurons | Activation Type |
|---|---|---|---|
| Input | Dense | 36 | – |
| Shared FC | Dense | 100 | ReLU |
| FC1 for value | Dense | 60 | ReLU |
| FC1 for advantage | Dense | 60 | ReLU |
| FC2 for value | Dense | 1 | Linear |
| FC2 for advantage | Dense | 5 | Linear |
| Output | Dense | 5 | – |
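The layer sizes in the table above can be read as the following forward pass, written in plain Python so that it runs without extra dependencies. It is a sketch under assumptions, not the authors' code: the class name `DuelingQNet`, the helpers `dense` and `init_layer`, and the random weight initialization are illustrative, and the output layer is assumed to combine the two streams with the mean-subtracted rule Q(s, a) = V(s) + (A(s, a) − mean A(s, ·)) commonly used with dueling networks, which the table itself does not specify.

```python
import random
from typing import List, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights, biases)


def init_layer(n_in: int, n_out: int, rng: random.Random) -> Layer:
    """Random weights (illustrative initialization only) and zero biases."""
    scale = (2.0 / n_in) ** 0.5
    weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(x: List[float], weights: List[List[float]], biases: List[float],
          relu: bool) -> List[float]:
    """Fully connected layer with optional ReLU activation."""
    out = [sum(w * xi for w, xi in zip(row, x)) + b
           for row, b in zip(weights, biases)]
    return [max(0.0, v) for v in out] if relu else out


class DuelingQNet:
    """36 laser readings -> shared FC (100) -> value and advantage streams."""

    def __init__(self, n_obs: int = 36, n_actions: int = 5, seed: int = 0):
        rng = random.Random(seed)
        self.shared = init_layer(n_obs, 100, rng)      # Shared FC, ReLU
        self.value_fc1 = init_layer(100, 60, rng)      # FC1 for value, ReLU
        self.adv_fc1 = init_layer(100, 60, rng)        # FC1 for advantage, ReLU
        self.value_fc2 = init_layer(60, 1, rng)        # FC2 for value, linear
        self.adv_fc2 = init_layer(60, n_actions, rng)  # FC2 for advantage, linear

    def q_values(self, scan: List[float]) -> List[float]:
        h = dense(scan, *self.shared, relu=True)
        v = dense(dense(h, *self.value_fc1, relu=True),
                  *self.value_fc2, relu=False)[0]
        a = dense(dense(h, *self.adv_fc1, relu=True),
                  *self.adv_fc2, relu=False)
        mean_a = sum(a) / len(a)
        # ASSUMPTION: mean-subtracted aggregation of value and advantage.
        return [v + (ai - mean_a) for ai in a]


net = DuelingQNet()
q = net.q_values([1.0] * 36)                          # dummy laser scan
greedy_action = max(range(len(q)), key=q.__getitem__)  # index of the best action
```

With this aggregation the five Q-values always average to the state value, so the advantage stream only has to encode how much better or worse each turning action is relative to the others.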

| Model | Corridor (successes/attempts) | Kitchen (successes/attempts) | Printing (successes/attempts) | Lab (successes/attempts) | Success Rate | Required Sensor | Requires Training | Required Map |
|---|---|---|---|---|---|---|---|---|
| Standard | 7/10 | 2/10 | 0/10 | 1/10 | 10% | Camera | Yes | No |
| Goal-directed | 10/10 | 8/10 | 7/10 | 8/10 | 82% | Camera | Yes | Topological |
| SLAM | 10/10 | 10/10 | 10/10 | 10/10 | 100% | LDS | No | Occupancy |

| Planner | Success Rate in Experiment (a) | Success Rate in Experiment (b) | Success Rate | Required Sensor | Requires Training | Required Map |
|---|---|---|---|---|---|---|
| The Global Planner | 0/10 | 2/10 | 10% | Camera | Yes | Topological |
| The Local Planner | 8/10 | 9/10 | 85% | LDS | Yes | No |
| SLAM | 10/10 | 10/10 | 100% | LDS | No | Occupancy |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhou, X.; Gao, Y.; Guan, L. Towards Goal-Directed Navigation Through Combining Learning Based Global and Local Planners. Sensors 2019, 19, 176. https://doi.org/10.3390/s19010176