Modeling the Human Visuo-Motor System to Support Remote-Control Operation

Abstract

1. Introduction

1.1. Motivation

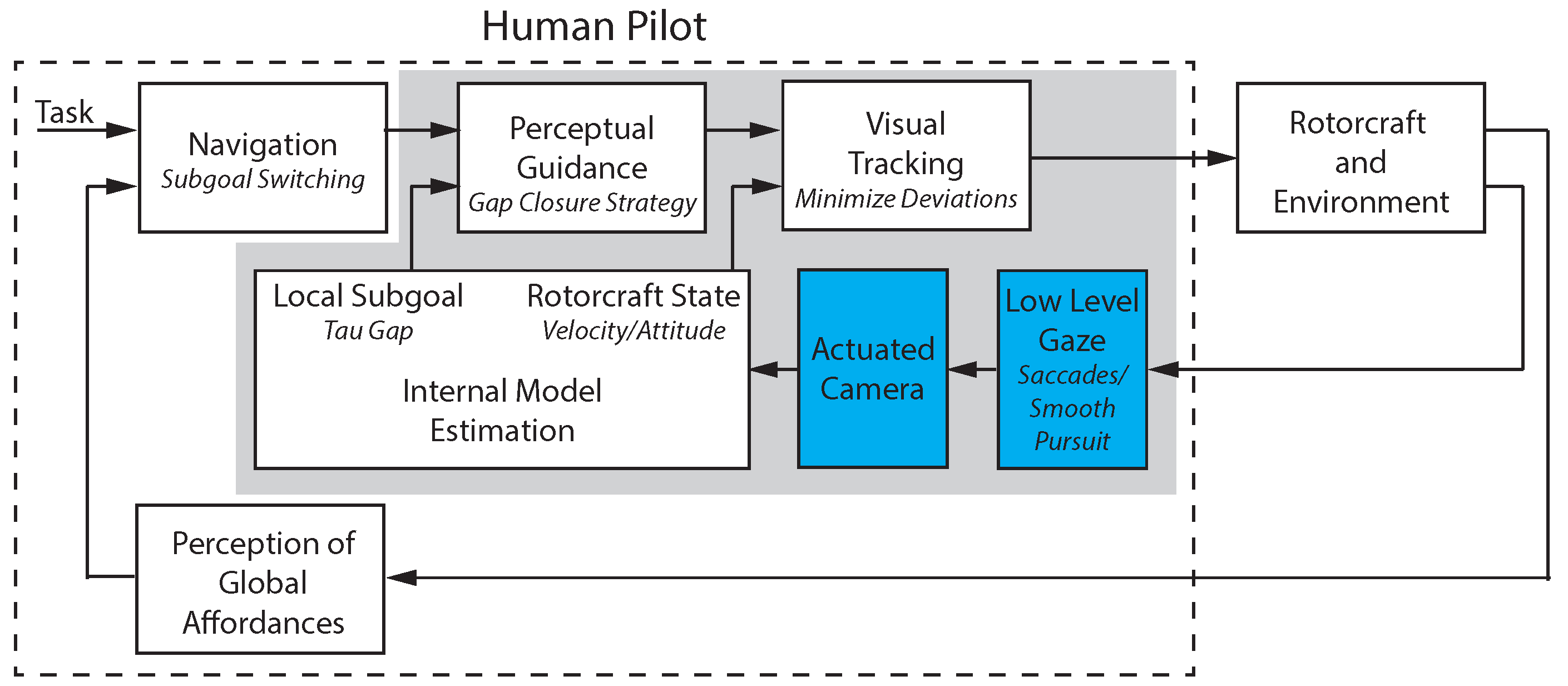

1.1.1. Teleoperation Example Application

1.1.2. Human-in-the-Loop Systems

1.2. Research Objectives

1.3. Paper Organization

2. Related Work

2.1. High-Level Human Models

2.1.1. General Cognitive Models

2.1.2. Human Perception–Action

2.2. Human Guidance Behavior

2.3. Perceptual Guidance

2.4. Human Control Models

2.4.1. Control Theoretic Models of Human Performance

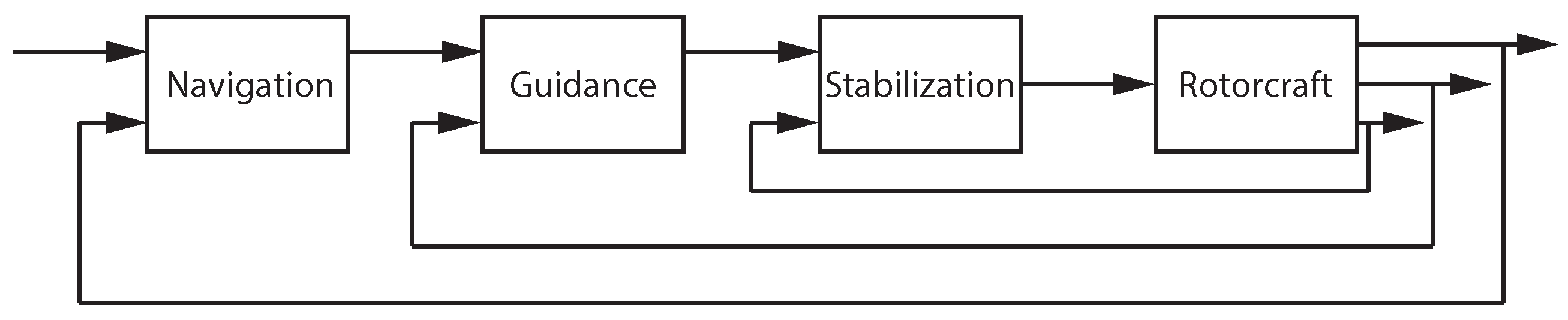

2.4.2. Multi-Loop Control Analysis

2.5. Biological Motor Control Theories

2.6. Gaze Modeling and Classification

2.6.1. Gaze Models Based on Eye–Head Coordination

2.6.2. Gaze Classification

3. Experimental Setup

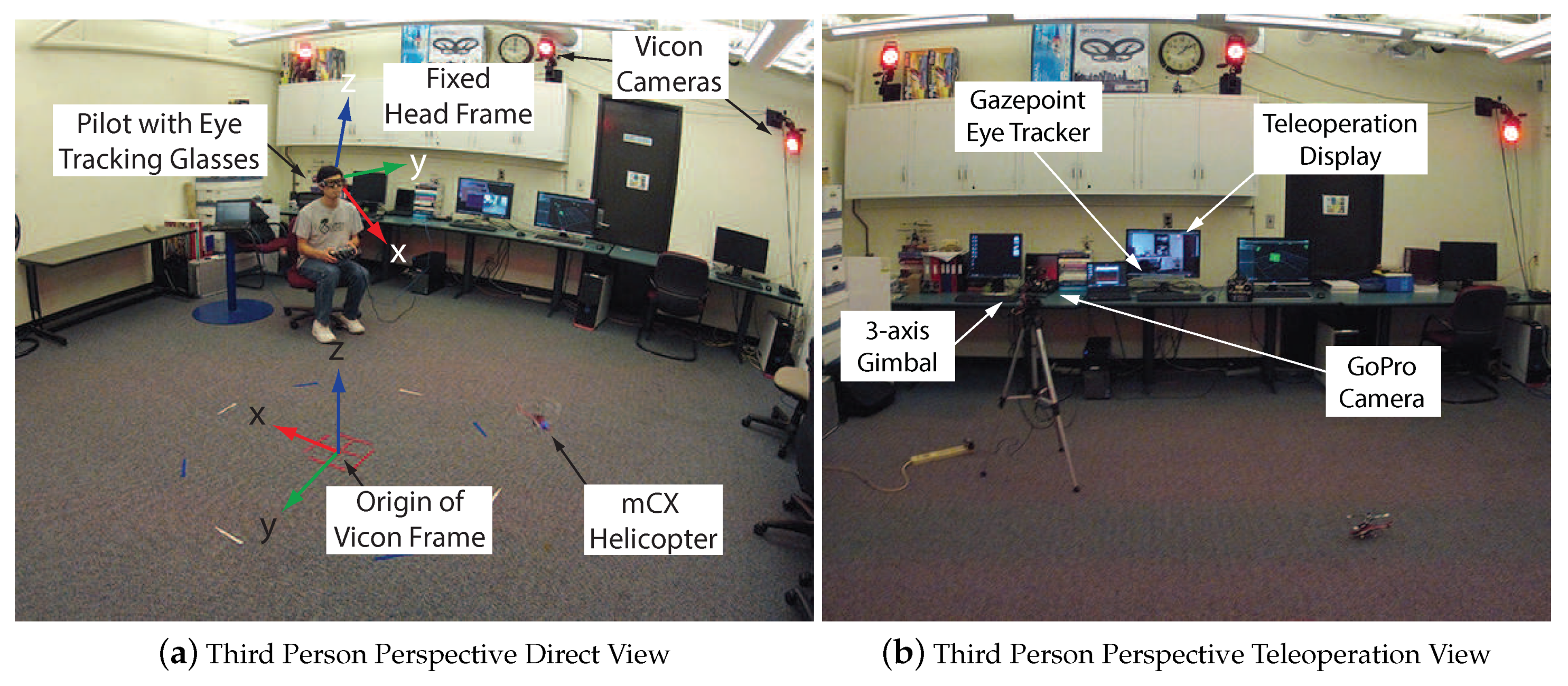

3.1. Experimental Infrastructure

3.2. Experimental Tasks

3.2.1. Hovering

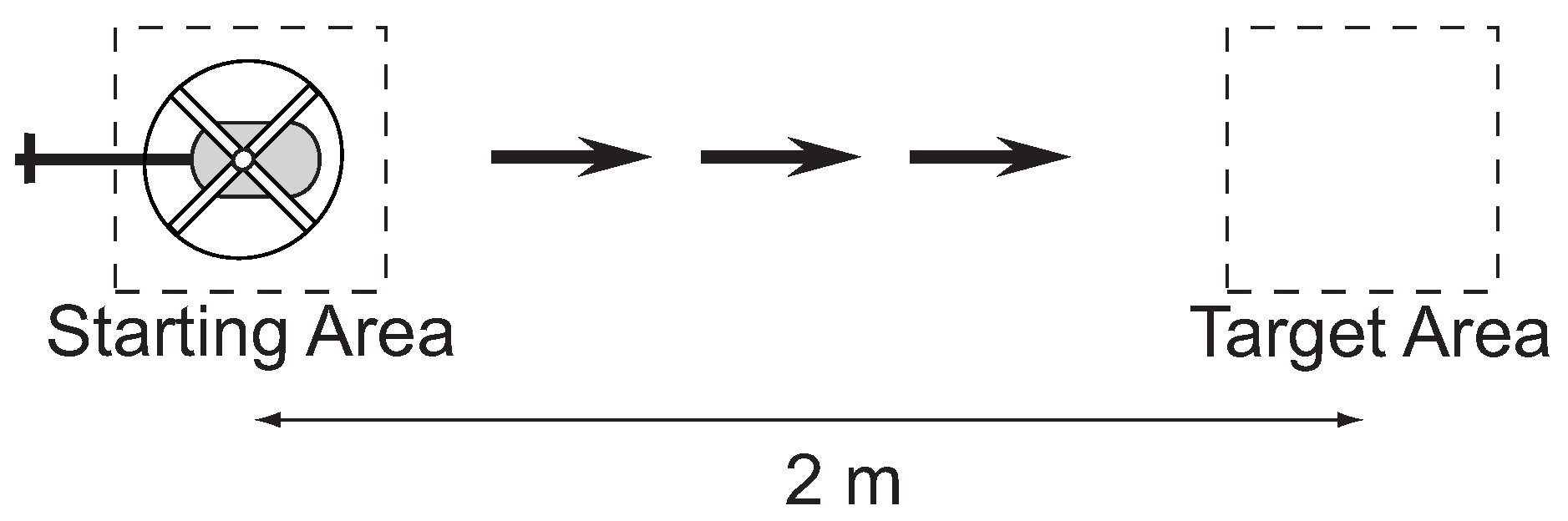

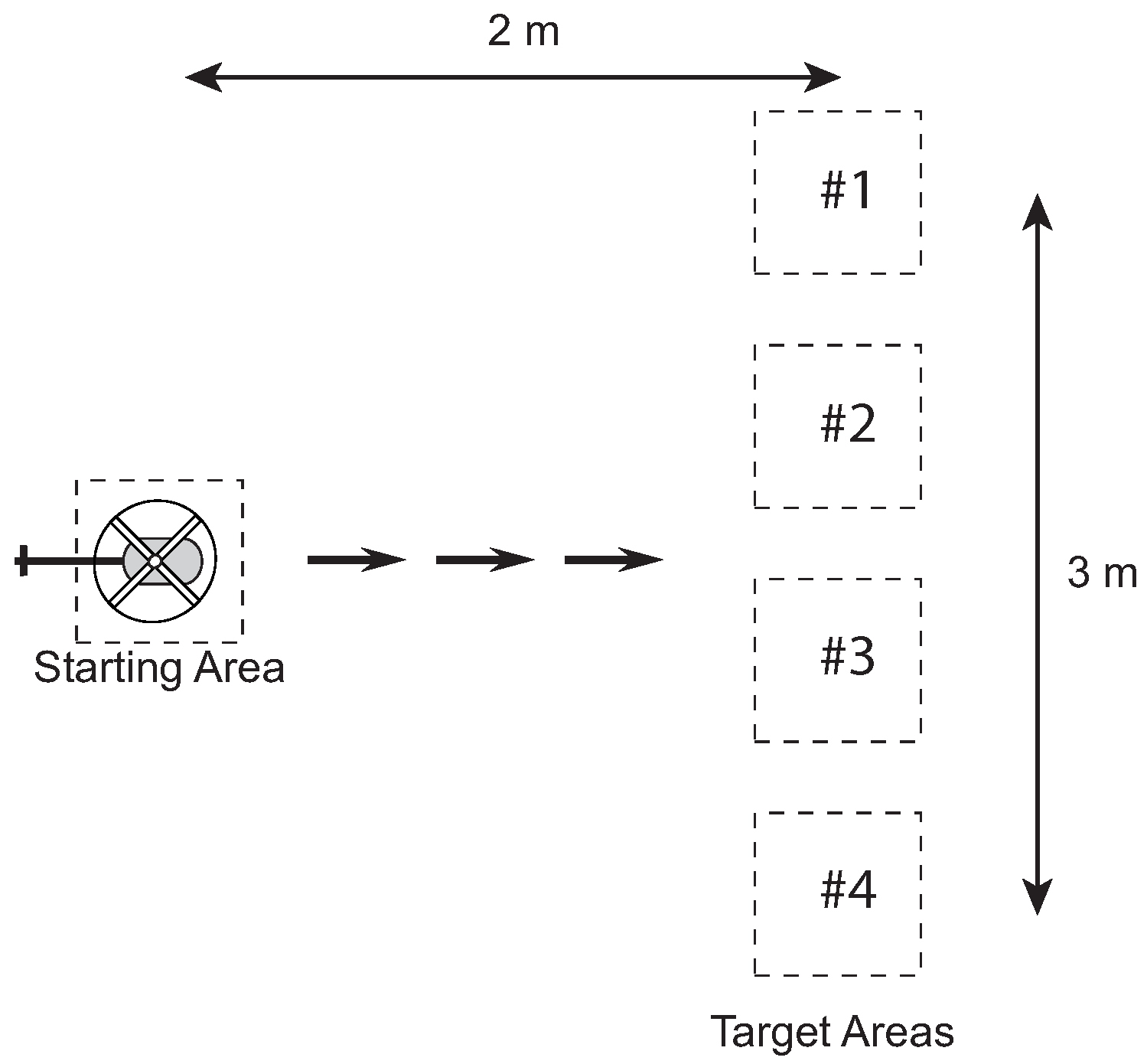

3.2.2. Target Interception

3.3. Characterization Methods

3.3.1. Speed

3.3.2. Accuracy

3.3.3. Workload

3.4. Test Pilots

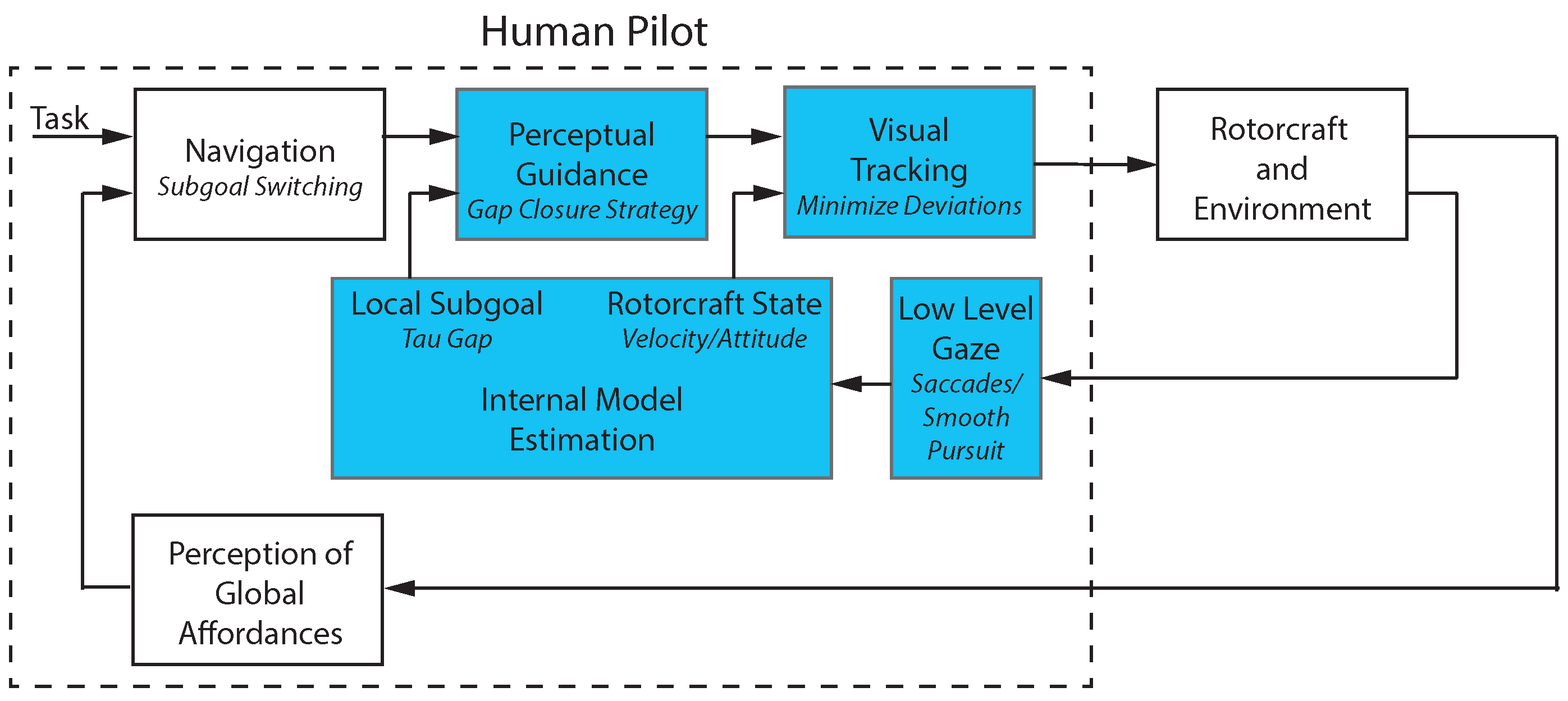

4. Model of Pilot Control

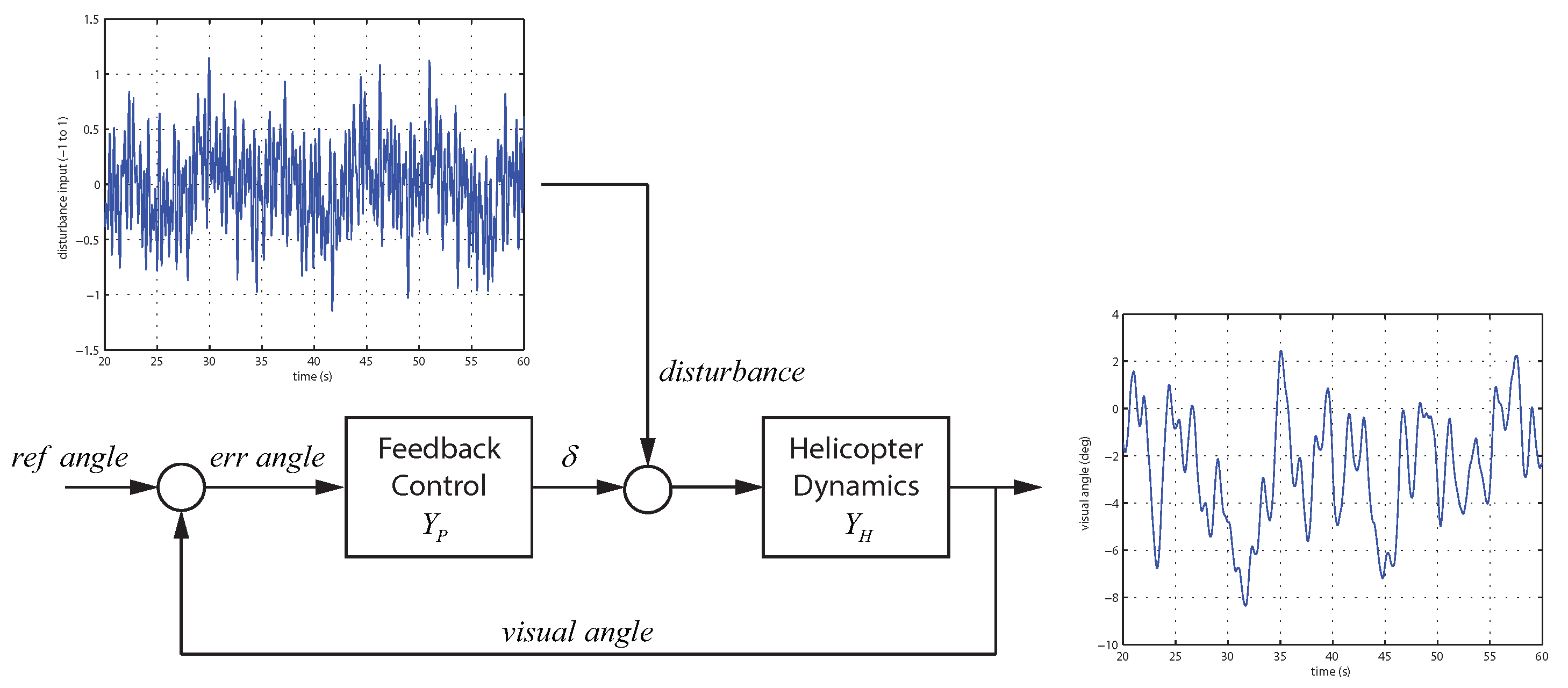

4.1. Visual Tracking: Linear Control

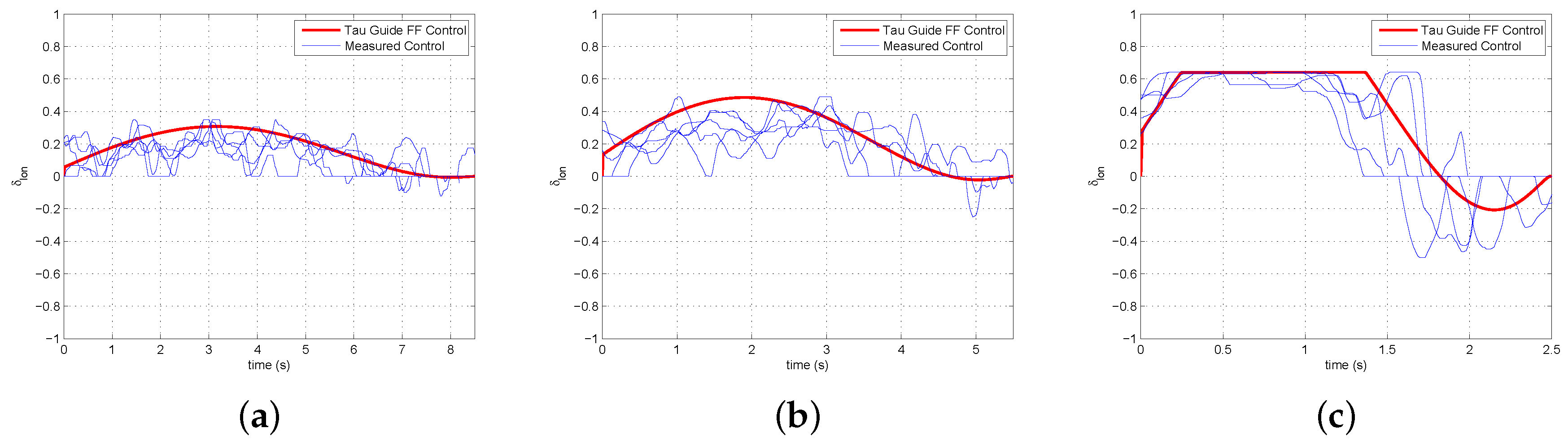

4.1.1. Feed-Forward Control

4.1.2. Feedback Control

4.2. Perceptual Guidance

5. Gaze for Guidance and Control

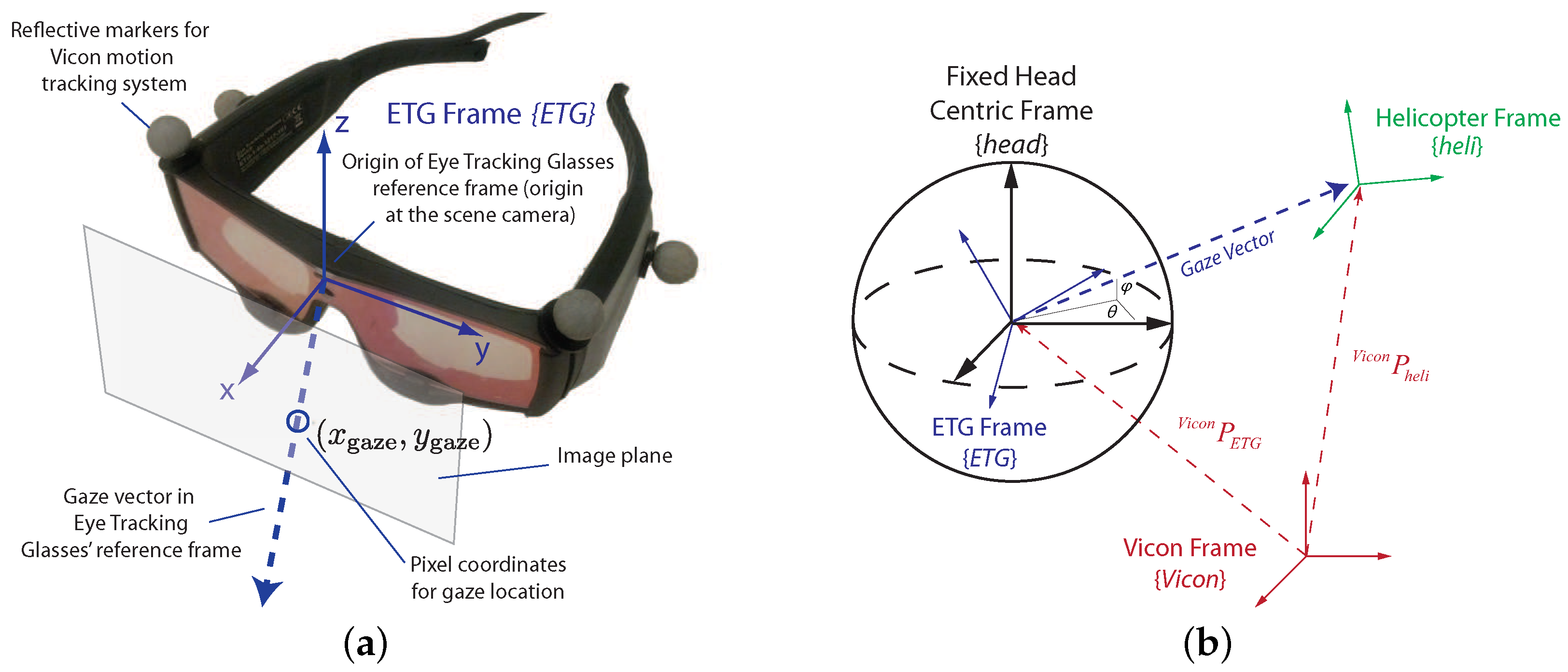

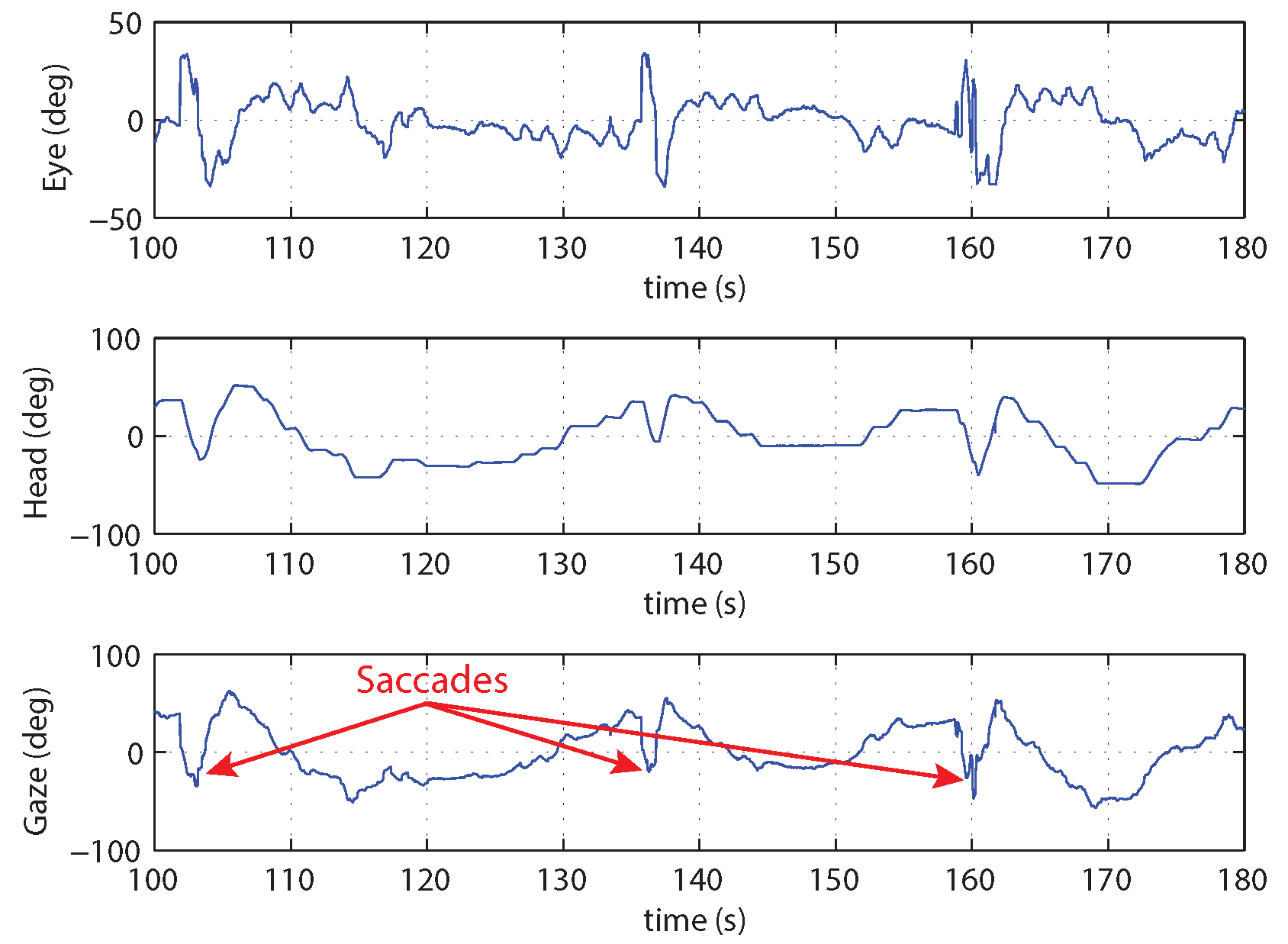

5.1. Gaze Processing

5.1.1. Registration of Gaze and Motion Tracking Measurements

5.1.2. Gaze Classification

5.2. Experiments

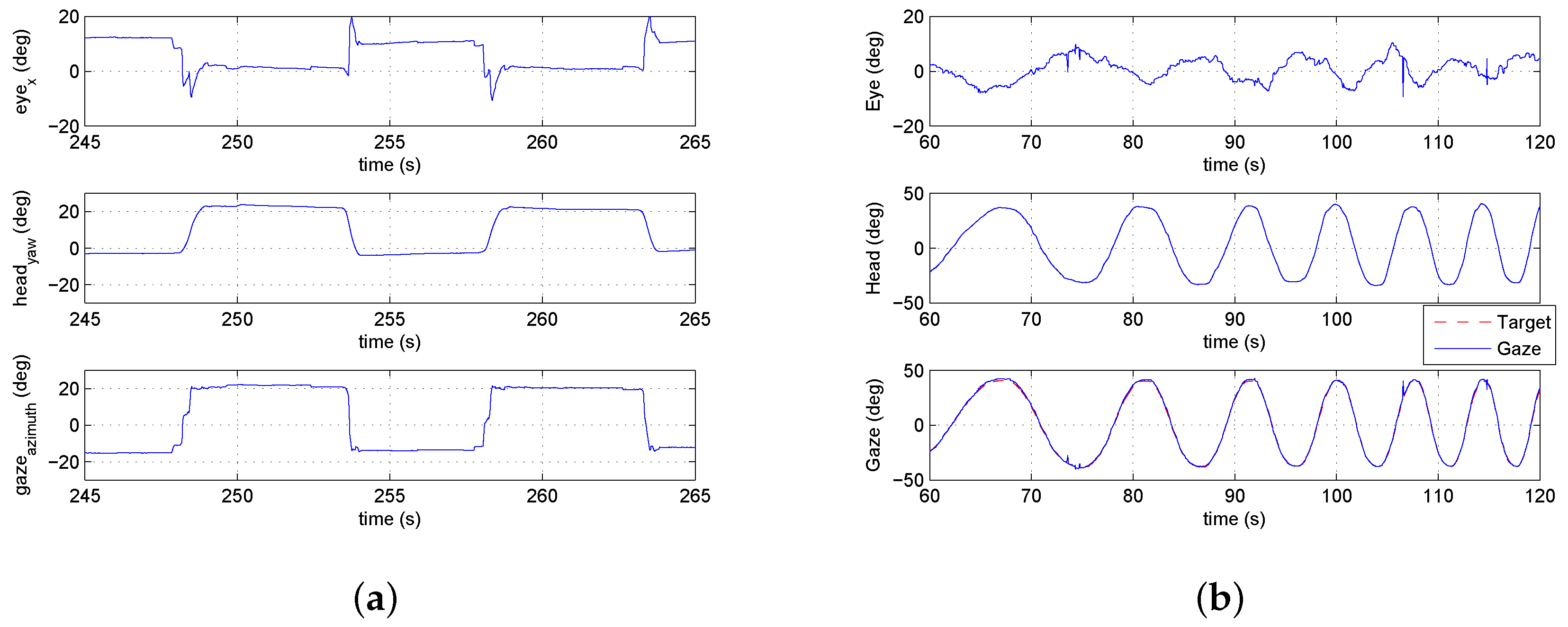

5.2.1. Stabilization—Hover Task

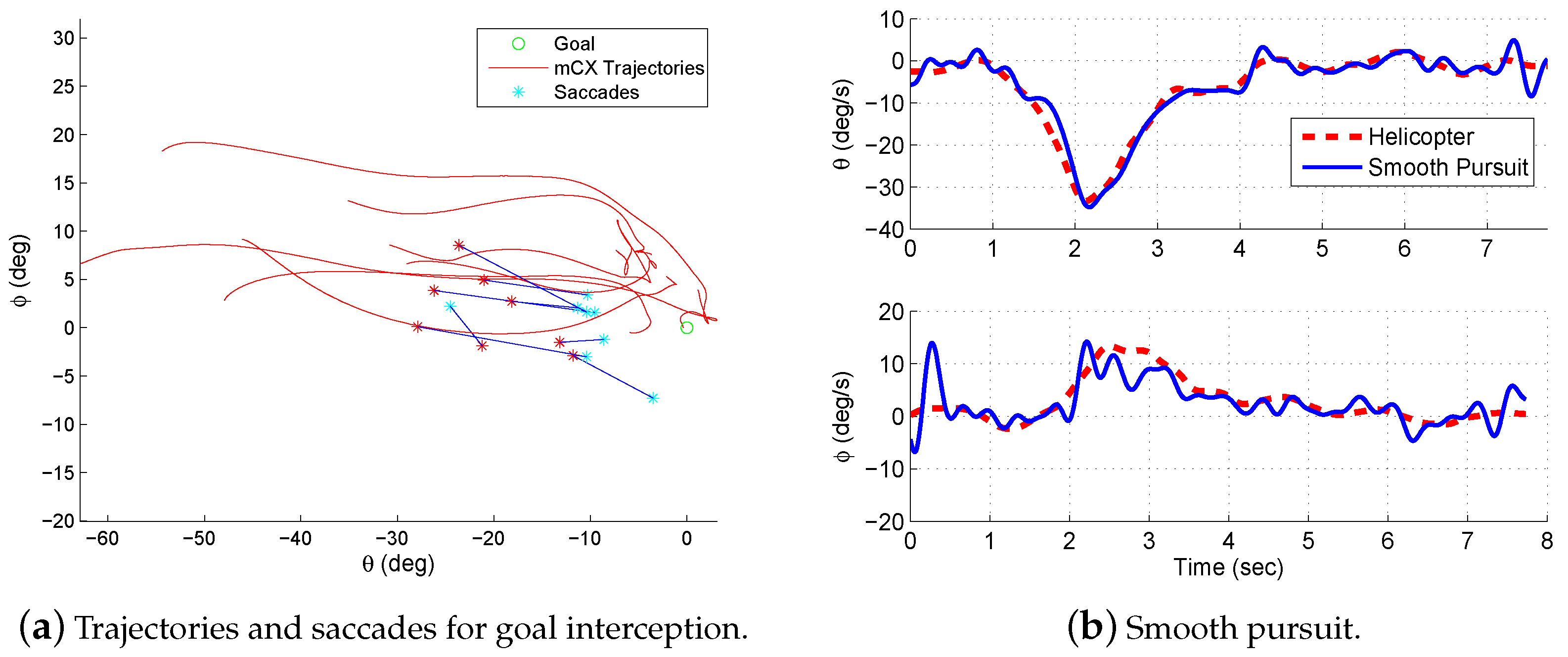

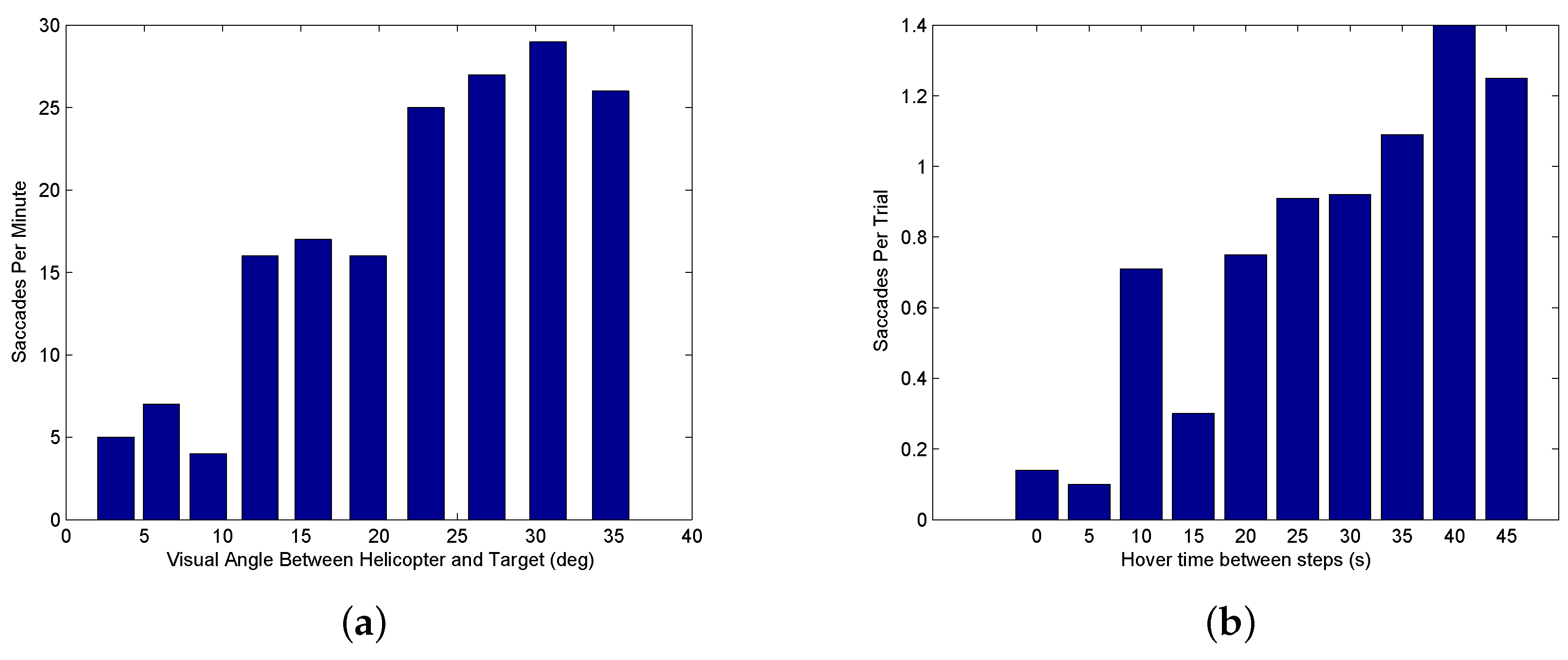

5.2.2. Interception—Step Task

5.3. Models

5.3.1. Gaze Modalities Summary

5.3.2. Pursuit Model

5.3.3. Saccade Model

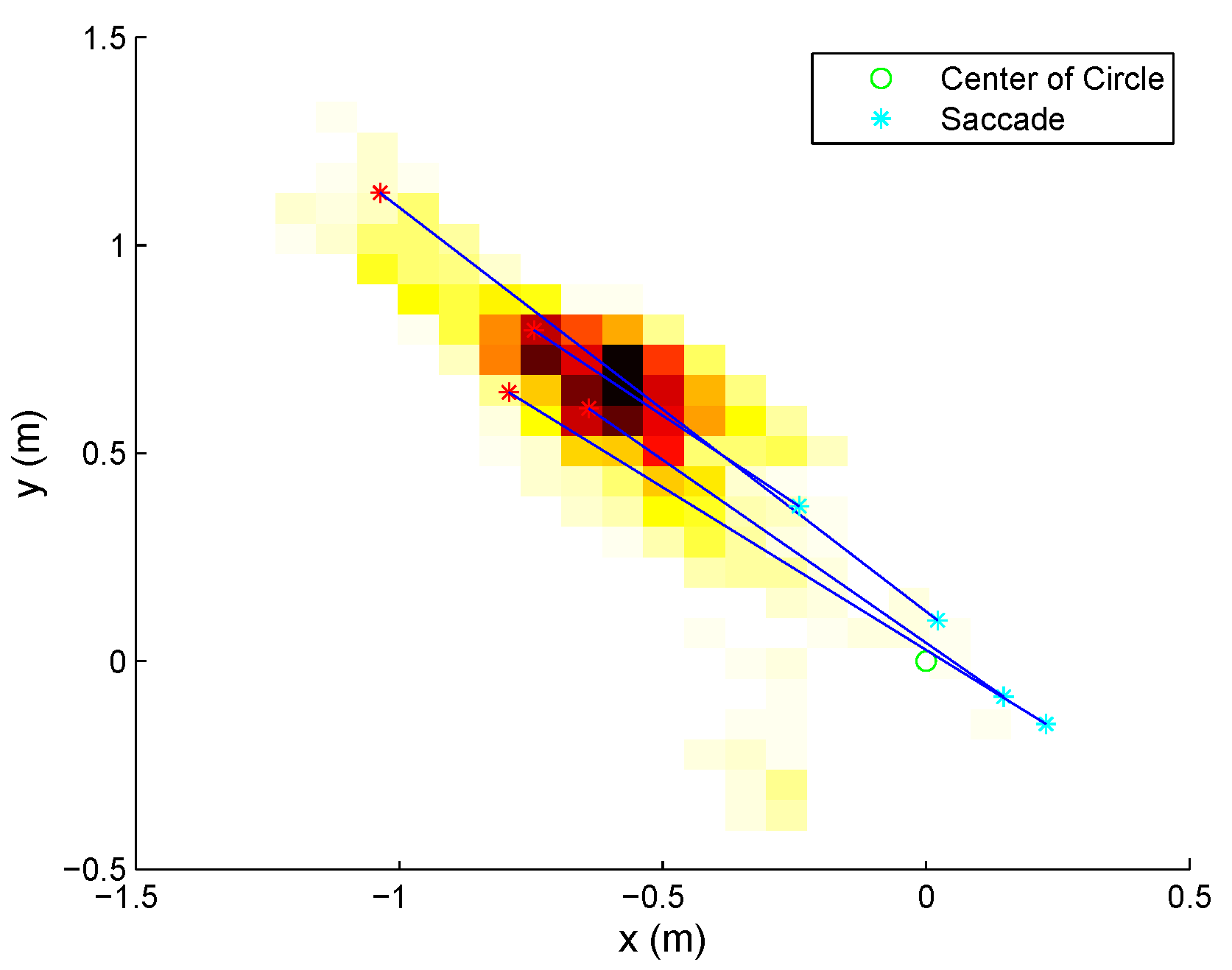

5.4. Integrated Gaze and Control Model

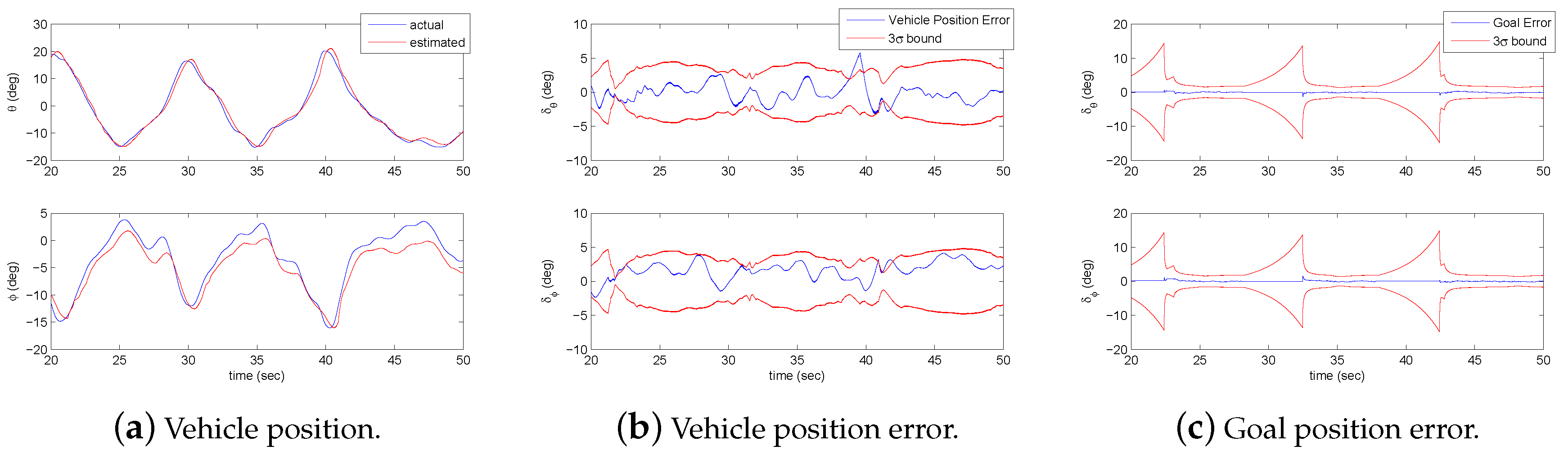

5.5. Estimation of Vehicle and Goal States

6. Application Demonstration

6.1. Background and Overview

6.1.1. Application Overview

6.1.2. Related Work

6.1.3. Approach Overview

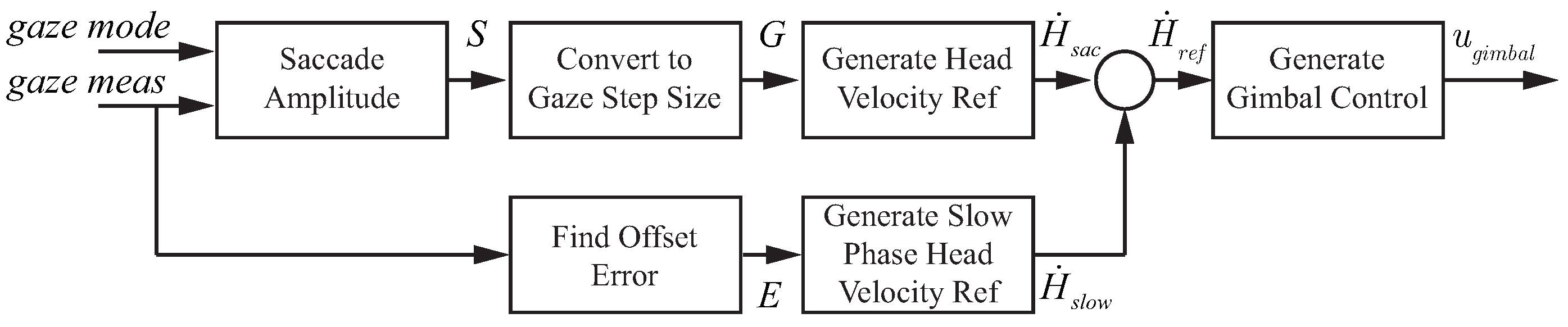

6.2. Gaze-Mediated Camera Control

6.2.1. System Overview

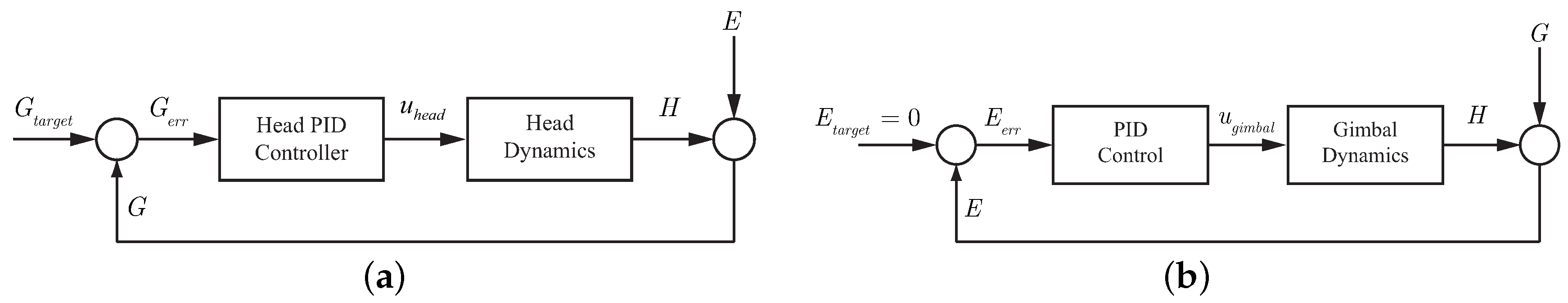

6.2.2. Gimbal Control

Control for Saccades and Fixation

Control for Smooth Pursuit

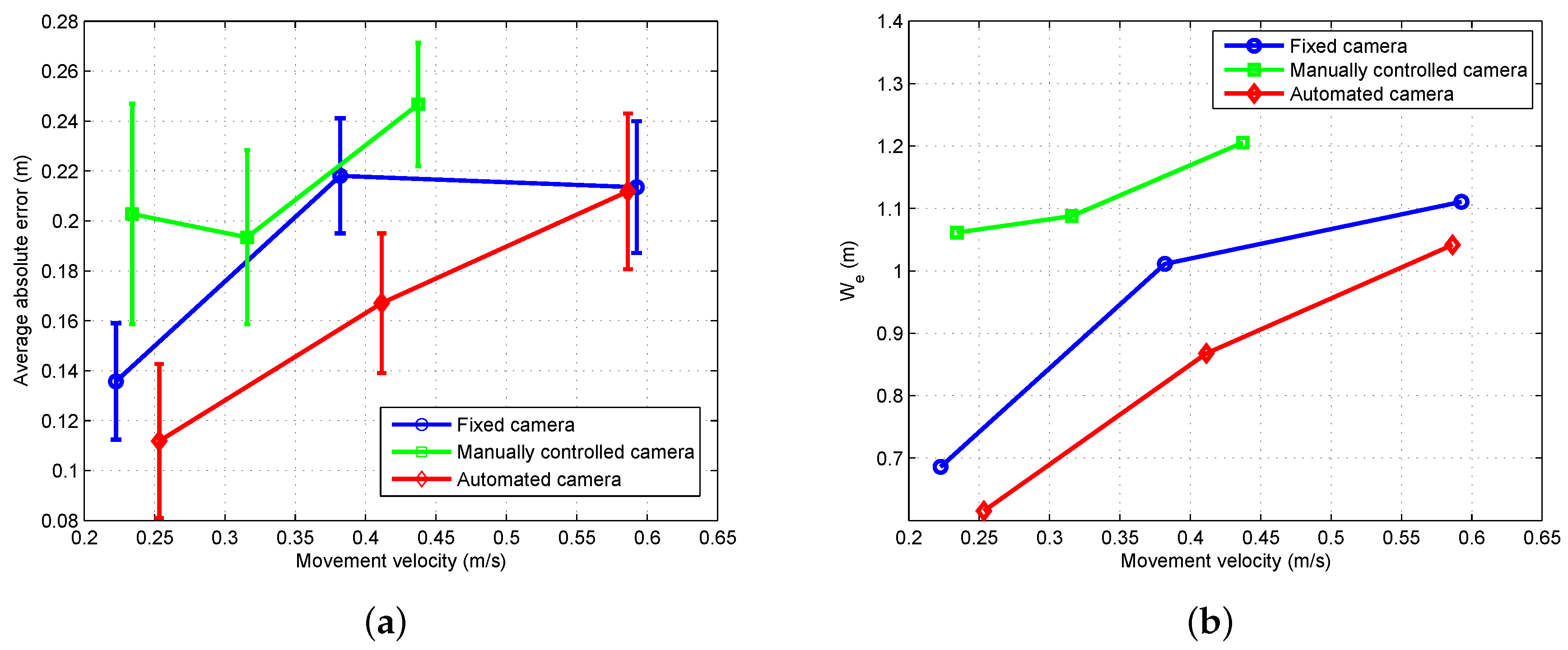

6.2.3. Experimental Results

Environment Sensing with Saccades

Performance Evaluation

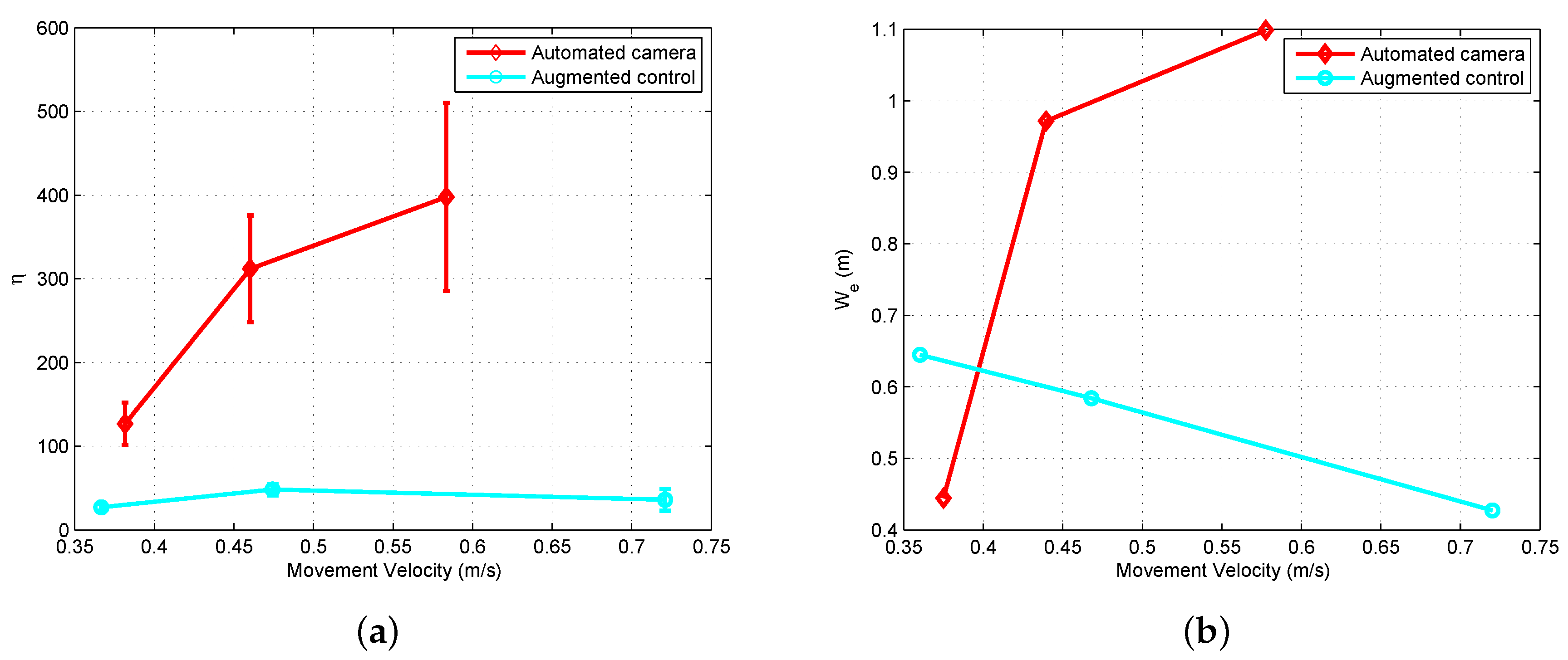

6.3. Augmented Flight Control

6.3.1. Implementation of Augmented Control

6.3.2. Experimental Results

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Humans Are Better at | Machines Are Better at |
|---|---|
| Stability in control response | Fast control response |
| Adaptation to changing environments | Repetitive and precise tasks |
| Pattern recognition and processing large amounts of data | Processing information |
| Inductive reasoning | Deductive reasoning |
| Performing when overloaded | Multi-tasking |
| High-level goal selection and planning | |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Andersh, J.; Mettler, B. Modeling the Human Visuo-Motor System to Support Remote-Control Operation. Sensors 2018, 18, 2979. https://doi.org/10.3390/s18092979
