Automatic Railway Traffic Object Detection System Using Feature Fusion Refine Neural Network under Shunting Mode

Abstract

1. Introduction

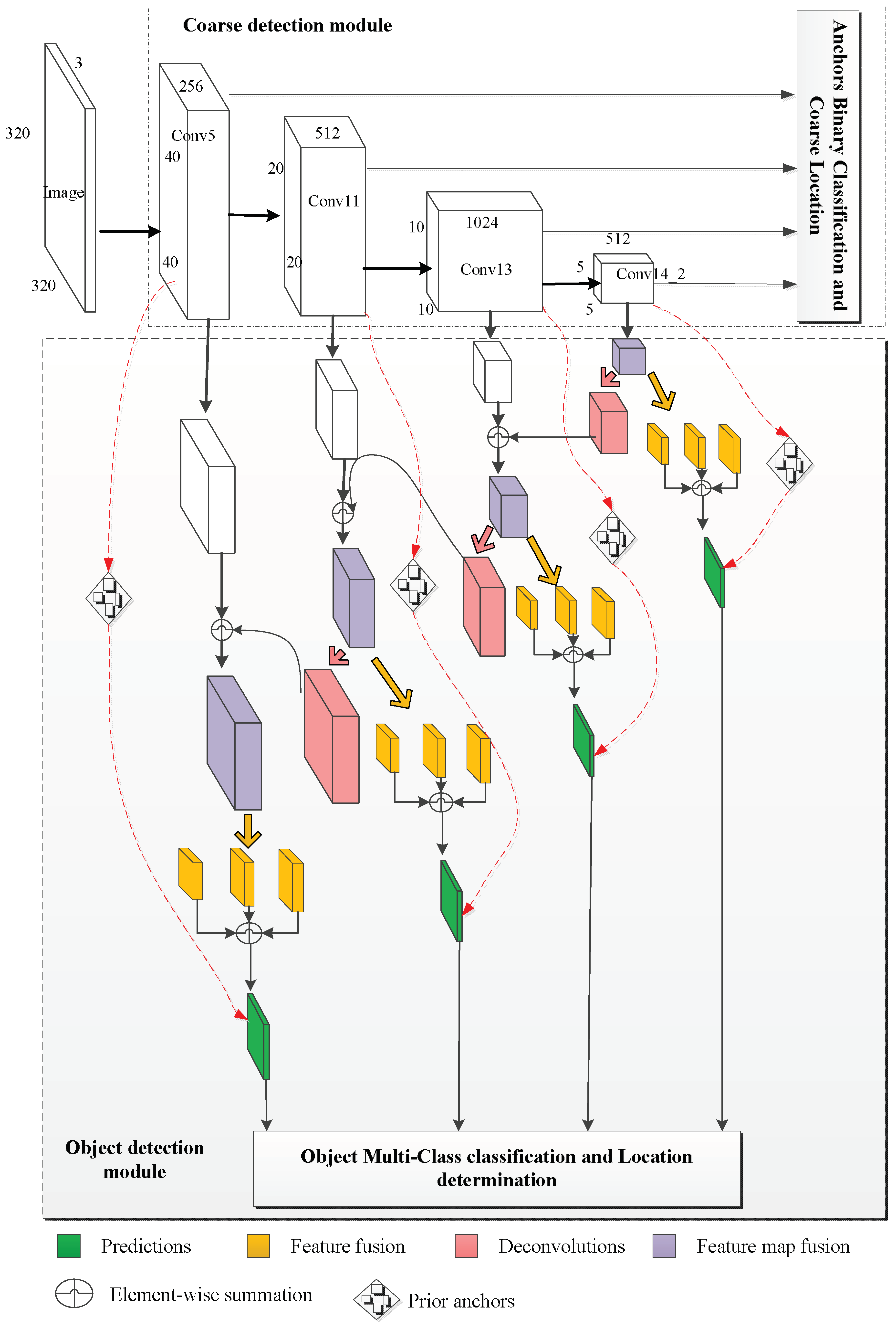

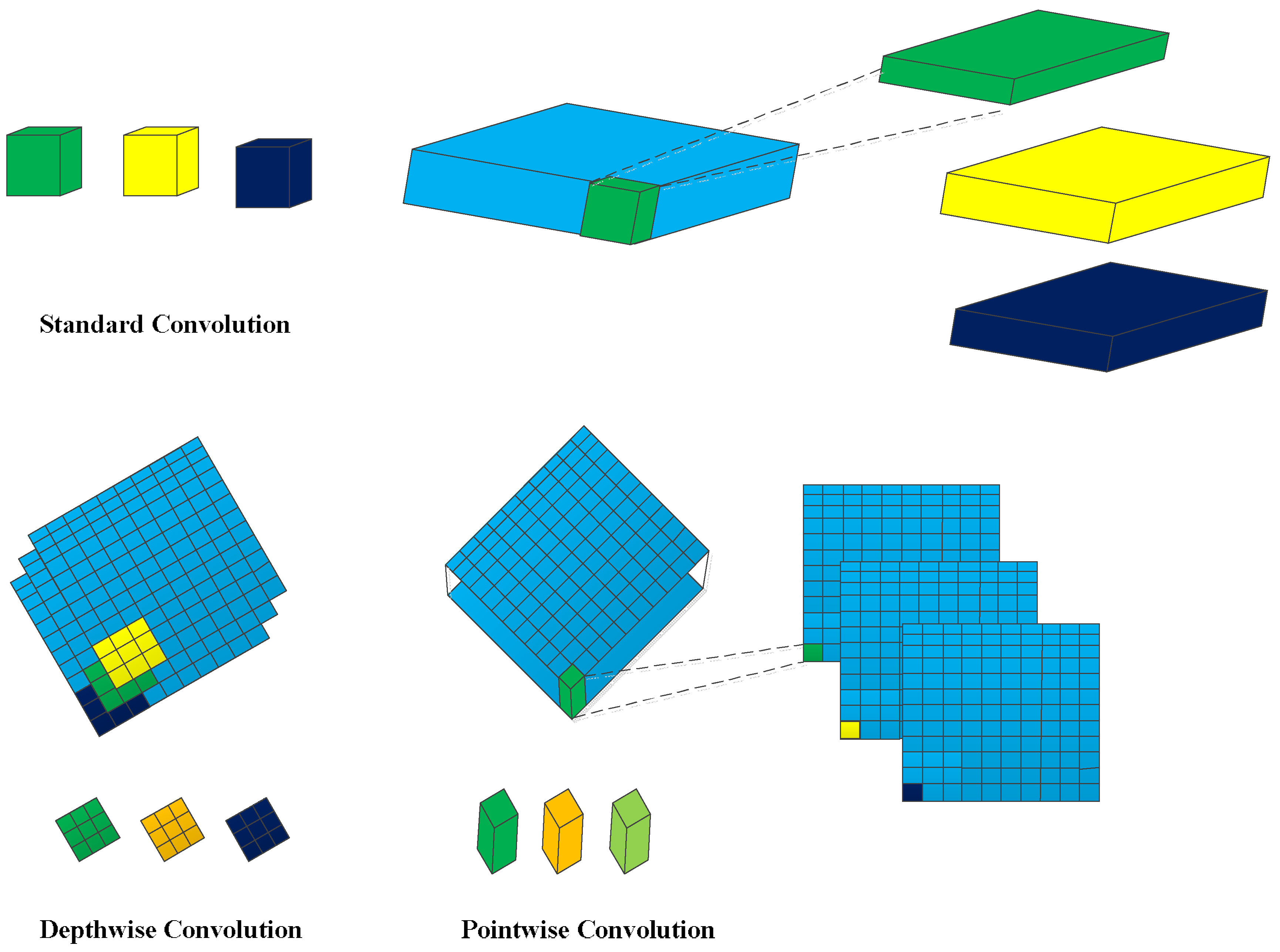

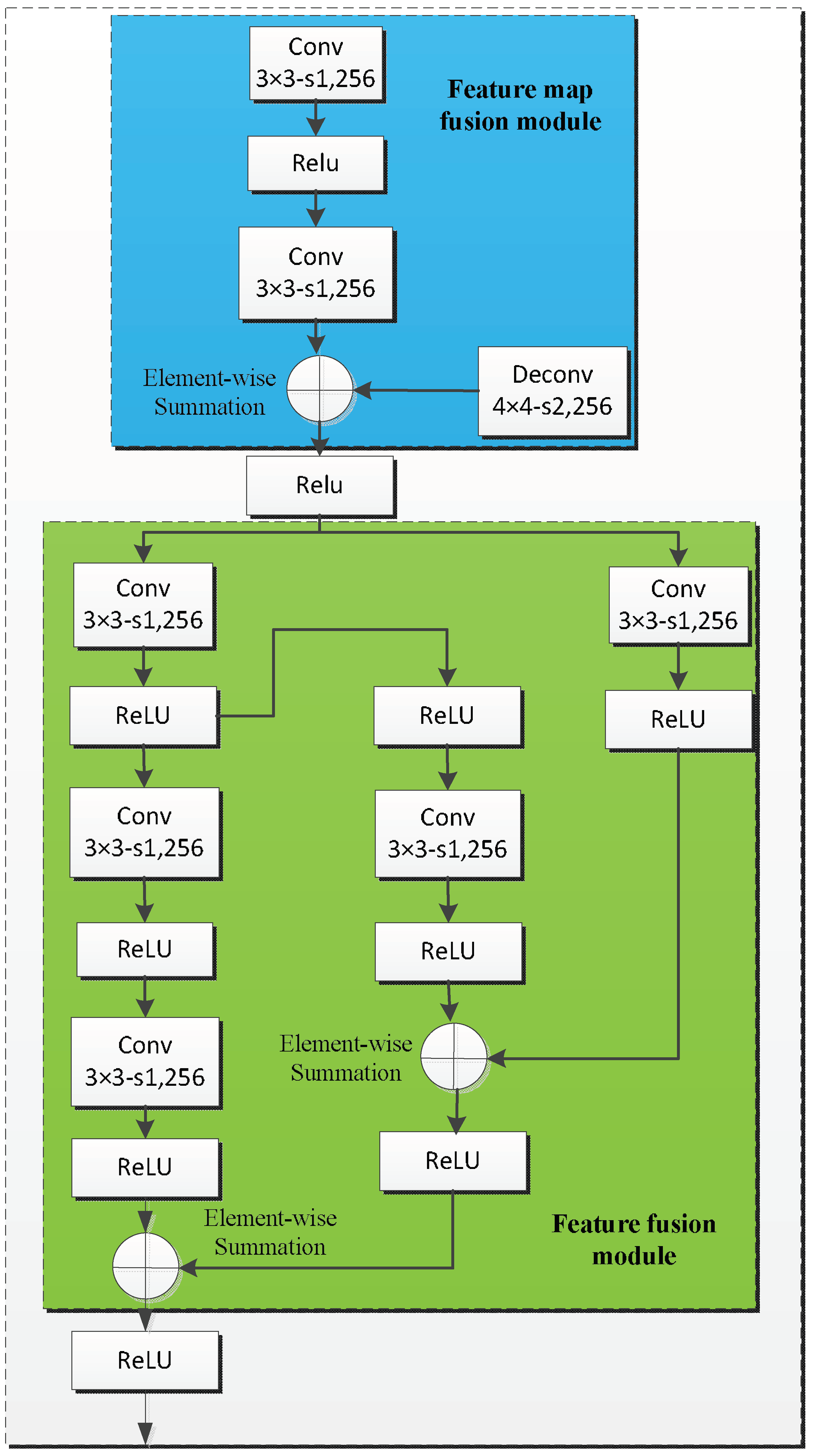

- To balance effectiveness and efficiency, FR-Net introduces three novel parts: depthwise convolution, the coarse detection module, and the object detection module. The coarse detection module provides prior anchors for the object detection module. Taking these prior anchor boxes as input, the object detection module obtains sufficient feature information for detection through two submodules (the feature map fusion module and the feature fusion module). Depthwise convolution addresses efficiency, whereas the other two modules address effectiveness.

- With 320 × 320 input images, FR-Net achieves 0.8953 mAP at 72.3 frames per second (FPS). The experimental results show that FR-Net balances effectiveness and real-time performance well. The robustness experiments show that the proposed model can perform all-weather detection effectively in railway traffic situations. Moreover, the proposed method outperforms SSD on small-object detection.
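The efficiency argument for depthwise convolution can be illustrated with a weight count. The following is a minimal sketch, assuming the standard depthwise-separable factorization (a k × k depthwise convolution followed by a 1 × 1 pointwise convolution, biases ignored); the function names are hypothetical and not taken from the authors' implementation.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a k x k standard convolution (bias ignored)."""
    return k * k * c_in * c_out


def separable_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a k x k depthwise conv followed by a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 conv that mixes channels
    return depthwise + pointwise


def reduction_ratio(k: int, c_in: int, c_out: int) -> float:
    """Separable weights divided by standard weights: 1/c_out + 1/k^2."""
    return separable_conv_params(k, c_in, c_out) / standard_conv_params(k, c_in, c_out)


if __name__ == "__main__":
    # Assumed example layer: 3 x 3 kernel, 512 input and 512 output channels.
    print(standard_conv_params(3, 512, 512))
    print(separable_conv_params(3, 512, 512))
    print(reduction_ratio(3, 512, 512))
```

For a 3 × 3 kernel the ratio approaches 1/9 as the number of output channels grows, which is why the factorization mainly benefits the wide layers of a network.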

2. Related Work

2.1. Railway Obstacle Detection Systems

2.2. Object Detection with CNNs

3. Proposed Method

3.1. Depthwise–Pointwise Convolution

3.2. Coarse Detection Module

3.3. Object Detection Module

4. Training

5. Experiment and Results

5.1. Datasets

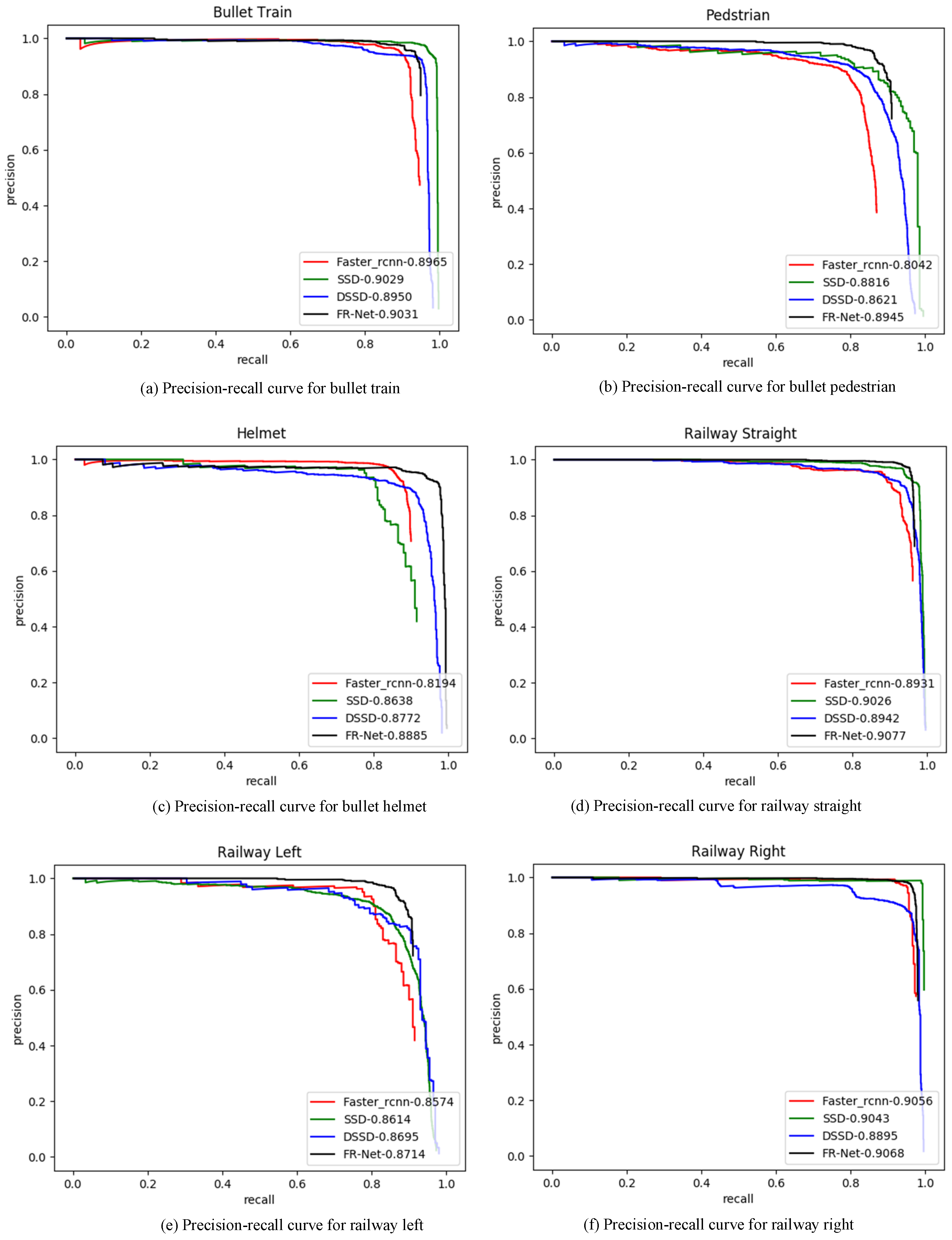

5.2. Effectiveness Performance

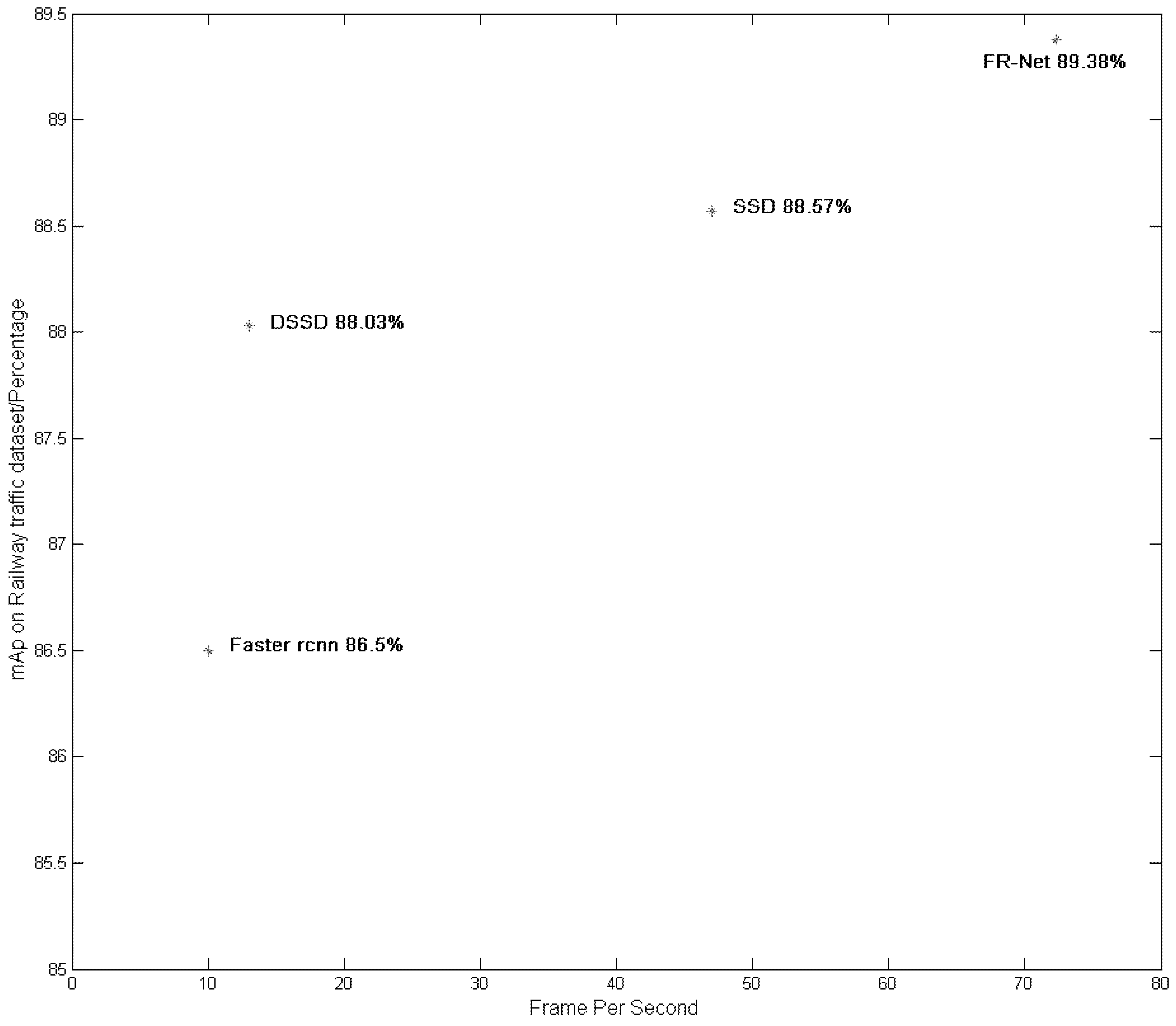

Comparison with State-of-the-Art

5.3. Runtime Performance

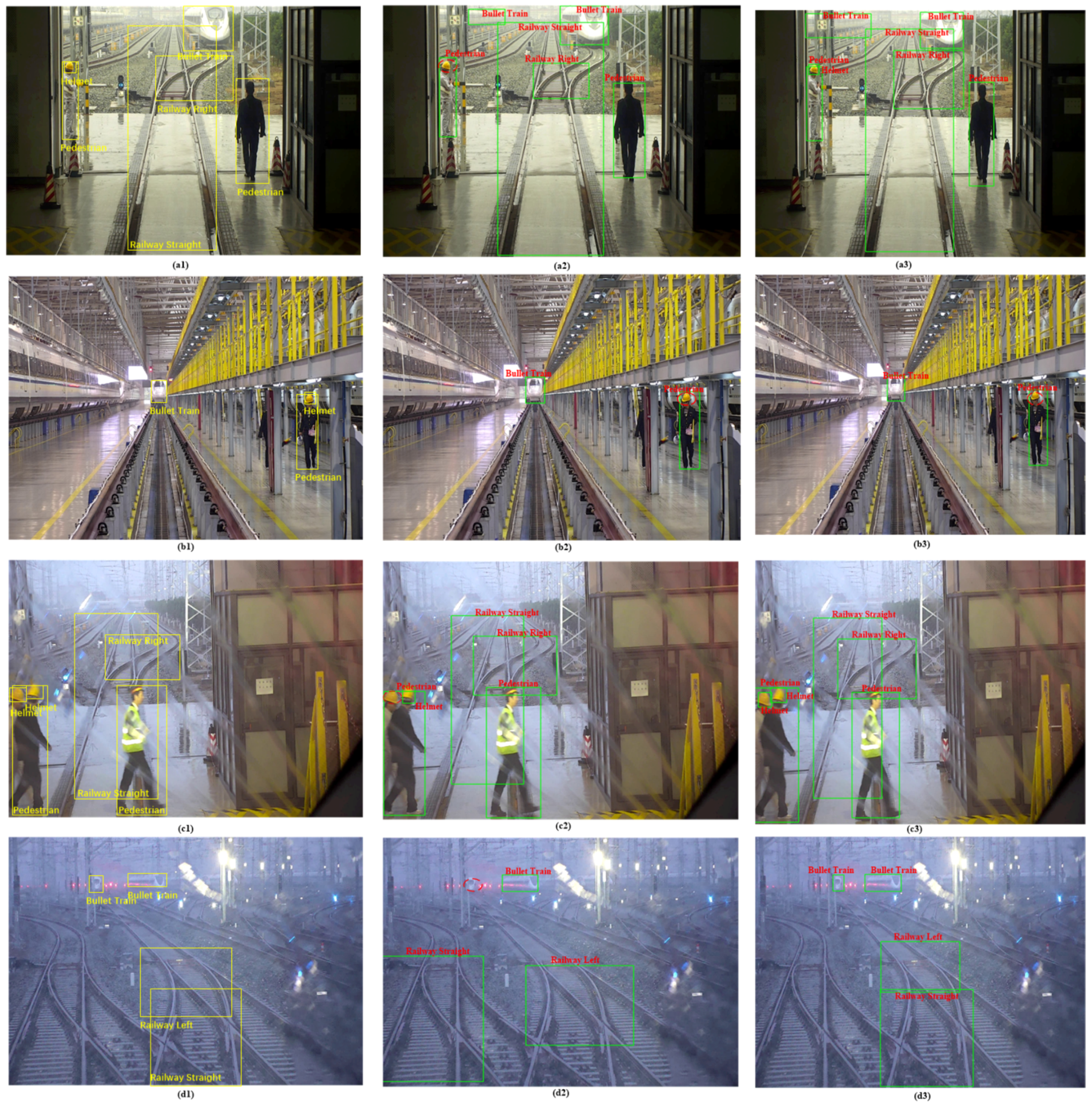

5.4. Visual Results of Small-Object Detection

5.5. Robustness Test

5.6. Ablation Study

5.6.1. Comparison of Various Designs

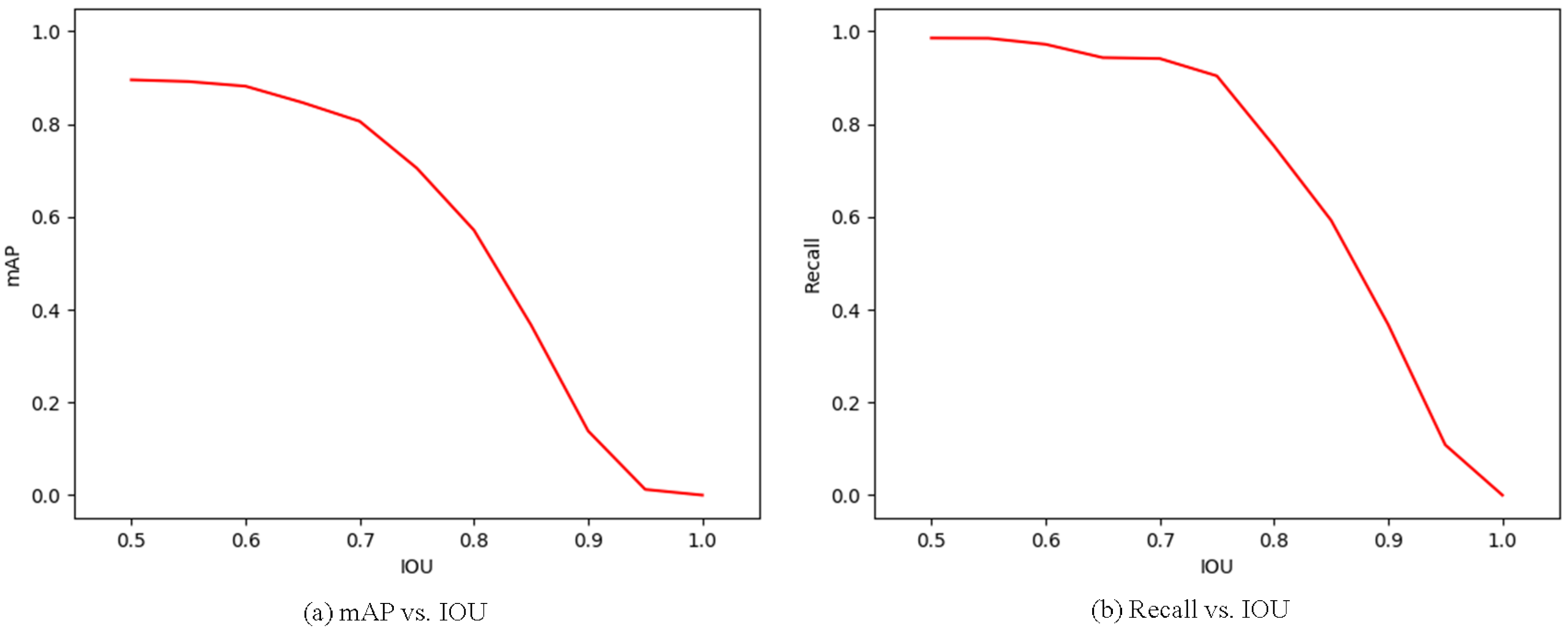

5.6.2. Analysis of mAP and Recall vs. IOU

5.6.3. Performance with Respect to Different Input Sizes

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Type/Stride | Filter Shape | Input Size |
|---|---|---|
| Conv0/s2 | 3 × 3 × 3 × 32 | 320 × 320 × 3 |
| Conv1 dw/s1 | 3 × 3 × 32 dw | 160 × 160 × 32 |
| Conv1/s1 | 1 × 1 × 32 × 64 | 160 × 160 × 32 |
| Conv2 dw/s2 | 3 × 3 × 64 dw | 160 × 160 × 64 |
| Conv2/s1 | 1 × 1 × 64 × 128 | 80 × 80 × 64 |
| Conv3 dw/s1 | 3 × 3 × 128 dw | 80 × 80 × 128 |
| Conv3/s1 | 1 × 1 × 128 × 128 | 80 × 80 × 128 |
| Conv4 dw/s2 | 3 × 3 × 128 dw | 80 × 80 × 128 |
| Conv4/s1 | 1 × 1 × 128 × 256 | 40 × 40 × 128 |
| Conv5 dw/s1 | 3 × 3 × 256 dw | 40 × 40 × 256 |
| Conv5/s1 | 1 × 1 × 256 × 256 | 40 × 40 × 256 |
| Conv6 dw/s2 | 3 × 3 × 256 dw | 40 × 40 × 256 |
| Conv6/s1 | 1 × 1 × 256 × 512 | 20 × 20 × 256 |
| Conv7 dw/s1 | 3 × 3 × 512 dw | 20 × 20 × 512 |
| Conv7/s1 | 1 × 1 × 512 × 512 | 20 × 20 × 512 |
| Conv8 dw/s1 | 3 × 3 × 512 dw | 20 × 20 × 512 |
| Conv8/s1 | 1 × 1 × 512 × 512 | 20 × 20 × 512 |
| Conv9 dw/s1 | 3 × 3 × 512 dw | 20 × 20 × 512 |
| Conv9/s1 | 1 × 1 × 512 × 512 | 20 × 20 × 512 |
| Conv10 dw/s1 | 3 × 3 × 512 dw | 20 × 20 × 512 |
| Conv10/s1 | 1 × 1 × 512 × 512 | 20 × 20 × 512 |
| Conv11 dw/s1 | 3 × 3 × 512 dw | 20 × 20 × 512 |
| Conv11/s1 | 1 × 1 × 512 × 512 | 20 × 20 × 512 |
| Conv12 dw/s2 | 3 × 3 × 512 dw | 20 × 20 × 512 |
| Conv12/s1 | 1 × 1 × 512 × 1024 | 10 × 10 × 512 |
| Conv13 dw/s1 | 3 × 3 × 1024 dw | 10 × 10 × 1024 |
| Conv13/s1 | 1 × 1 × 1024 × 1024 | 10 × 10 × 1024 |
| Conv14_1/s1 | 3 × 3 × 1024 × 256 | 10 × 10 × 1024 |
| Conv14_2/s2 | 3 × 3 × 256 × 512 | 10 × 10 × 256 |
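The Input Size column above steps through the resolutions 320, 160, 80, 40, 20 and 10, halving at each downsampling stage. A minimal sketch of that arithmetic follows, assuming "same"-style padding so that the output size is the input size divided by the stride, rounded up; the padding scheme is an assumption, since the table does not state it, and the helper names are hypothetical.

```python
import math
from typing import List


def same_pad_output(size: int, stride: int) -> int:
    """Spatial output size of a convolution under assumed 'same' padding."""
    return math.ceil(size / stride)


def trace_resolutions(input_size: int, strides: List[int]) -> List[int]:
    """Return the spatial size before the first layer and after each layer."""
    sizes = [input_size]
    for stride in strides:
        sizes.append(same_pad_output(sizes[-1], stride))
    return sizes


if __name__ == "__main__":
    # Five stride-2 stages take a 320-pixel input down to 10 pixels.
    print(trace_resolutions(320, [2, 2, 2, 2, 2]))
    # The same stack applied to a 512-pixel input.
    print(trace_resolutions(512, [2, 2, 2, 2, 2]))
```

Under the same assumption, the final stride-2 layer (Conv14_2) would map its 10 × 10 input to a 5 × 5 output.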

| Class | Number |
|---|---|
| Bullet Train | 3671 |
| Pedestrian | 9371 |
| Railway Straight | 3863 |
| Railway Left | 652 |
| Railway Right | 1804 |
| Helmet | 3089 |

| Method | Backbone | Input Size | Boxes | FPS | Model Size (M) | mAP |
|---|---|---|---|---|---|---|
| SSD | VGG-16 | | 8732 | 47 | 98.6 | 0.8861 |
| Faster-RCNN | VGG-16 | | 300 | 10 | 521 | 0.8632 |
| DSSD-321 | ResNet-101 | | 17,080 | 13 | 623.4 | 0.8813 |
| FR-Net-320 | VGG-16 | | 6375 | 72.3 | 74.2 | 0.8953 |
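The FPS figures in the comparison can be read as per-frame latency, which is often the more direct quantity for a real-time system. The sketch below converts the reported FPS values to milliseconds per frame and computes the speed-up of FR-Net-320 over SSD; the FPS values are copied from the table, while the function names are hypothetical.

```python
def latency_ms(fps: float) -> float:
    """Average time per frame in milliseconds for a given frame rate."""
    return 1000.0 / fps


def speedup(fps_new: float, fps_baseline: float) -> float:
    """How many times faster the new method is than the baseline."""
    return fps_new / fps_baseline


# FPS values as reported in the comparison table.
REPORTED_FPS = {
    "SSD": 47.0,
    "Faster-RCNN": 10.0,
    "DSSD-321": 13.0,
    "FR-Net-320": 72.3,
}

if __name__ == "__main__":
    for method, fps in REPORTED_FPS.items():
        print(f"{method}: {latency_ms(fps):.2f} ms per frame")
    ratio = speedup(REPORTED_FPS["FR-Net-320"], REPORTED_FPS["SSD"])
    print(f"FR-Net-320 vs SSD: {ratio:.2f}x")
```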

| Component | Mobile-Net | SFR-Net | FR-Net |
|---|---|---|---|
| Depthwise–pointwise? | - | | |
| The coarse object detection modules? | - | | |
| The object detection module? | - | | |

| Method | mAP | FPS | Bullet Train | Pedestrian | Railway Straight | Railway Left | Railway Right | Helmet |
|---|---|---|---|---|---|---|---|---|
| Mobile_Net | 0.8692 | 106 | 0.8891 | 0.8315 | 0.9012 | 0.8628 | 0.9069 | 0.8239 |
| SFR-Net | 0.8997 | 26.1 | 0.9067 | 0.8933 | 0.9071 | 0.8841 | 0.9075 | 0.8994 |
| FR-Net | 0.8953 | 72.3 | 0.9031 | 0.8945 | 0.9077 | 0.8714 | 0.9068 | 0.8885 |
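The mAP column in this table is consistent with an unweighted mean of the six per-class average precision values. The following sketch recomputes it from the per-class figures; the values are copied from the table, and the unweighted mean is an assumption about how mAP was aggregated that happens to reproduce the reported numbers.

```python
from typing import Dict, List

# Per-class AP in the table's column order: Bullet Train, Pedestrian,
# Railway Straight, Railway Left, Railway Right, Helmet.
PER_CLASS_AP: Dict[str, List[float]] = {
    "Mobile_Net": [0.8891, 0.8315, 0.9012, 0.8628, 0.9069, 0.8239],
    "SFR-Net": [0.9067, 0.8933, 0.9071, 0.8841, 0.9075, 0.8994],
    "FR-Net": [0.9031, 0.8945, 0.9077, 0.8714, 0.9068, 0.8885],
}


def mean_average_precision(per_class_ap: List[float]) -> float:
    """Unweighted mean of per-class AP (assumed aggregation)."""
    return sum(per_class_ap) / len(per_class_ap)


if __name__ == "__main__":
    for method, aps in PER_CLASS_AP.items():
        print(f"{method}: mAP = {mean_average_precision(aps):.4f}")
```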

| Method | mAP | FPS | Bullet Train | Pedestrian | Railway Straight | Railway Left | Railway Right | Helmet |
|---|---|---|---|---|---|---|---|---|
| FR-Net-512 | 0.9046 | 43.2 | 0.9046 | 0.9017 | 0.9060 | 0.9007 | 0.9073 | 0.9075 |
| FR-Net-320 | 0.8953 | 72.3 | 0.9031 | 0.8945 | 0.9077 | 0.8714 | 0.9068 | 0.8885 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ye, T.; Wang, B.; Song, P.; Li, J. Automatic Railway Traffic Object Detection System Using Feature Fusion Refine Neural Network under Shunting Mode. Sensors 2018, 18, 1916. https://doi.org/10.3390/s18061916