An Effective Palmprint Recognition Approach for Visible and Multispectral Sensor Images

Abstract

1. Introduction

2. Auto-Encoder (AE)

- An AE is a type of neural network and can easily be run in parallel.

- A pretrained AE model, with its initial weights, can be used to produce more robust latent representations of the input data.

- AE learning algorithms, such as online or iterative gradient descent, allow the model to be trained in batches, whereas other dimensionality reduction methods require the whole dataset during the training phase.
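The batch-training property in the last point can be illustrated with a minimal sketch. It assumes a single-hidden-layer AE with logistic sigmoid encoder and decoder and a mean squared error loss, and it swaps in plain mini-batch gradient descent for simplicity. The function names, learning rate, and batch size are illustrative assumptions, not values taken from the paper.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_autoencoder(X, n_hidden, epochs=10, batch_size=32, lr=0.5, seed=0):
    """Train a one-hidden-layer AE with mini-batch gradient descent (illustrative)."""
    rng = np.random.default_rng(seed)
    n_samples, n_features = X.shape
    W1 = rng.normal(0.0, 0.1, (n_features, n_hidden))  # encoder weights
    b1 = np.zeros(n_hidden)
    W2 = rng.normal(0.0, 0.1, (n_hidden, n_features))  # decoder weights
    b2 = np.zeros(n_features)
    losses = []
    for _ in range(epochs):
        order = rng.permutation(n_samples)
        squared_error = 0.0
        # Only one batch is needed in memory at a time.
        for start in range(0, n_samples, batch_size):
            xb = X[order[start:start + batch_size]]
            h = sigmoid(xb @ W1 + b1)       # latent code
            out = sigmoid(h @ W2 + b2)      # reconstruction
            err = out - xb
            squared_error += np.sum(err ** 2)
            # Backpropagate the squared reconstruction error.
            d_out = err * out * (1.0 - out)
            d_h = (d_out @ W2.T) * h * (1.0 - h)
            m = xb.shape[0]
            W2 -= lr * (h.T @ d_out) / m
            b2 -= lr * d_out.mean(axis=0)
            W1 -= lr * (xb.T @ d_h) / m
            b1 -= lr * d_h.mean(axis=0)
        losses.append(squared_error / (n_samples * n_features))
    return (W1, b1), losses

def encode(X, params):
    """Map inputs to the reduced (latent) representation."""
    W1, b1 = params
    return sigmoid(X @ W1 + b1)
```

Calling `encode(X, params)` on new feature vectors yields the reduced features, one row per input. Because each update touches only one batch, the same loop could stream batches from disk instead of holding the full dataset in memory.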

3. Extreme Learning Machine (ELM)

Regularized Extreme Learning Machine (RELM)

4. Proposed Palmprint Recognition Approach

4.1. HOG-SGF Based Feature Extraction

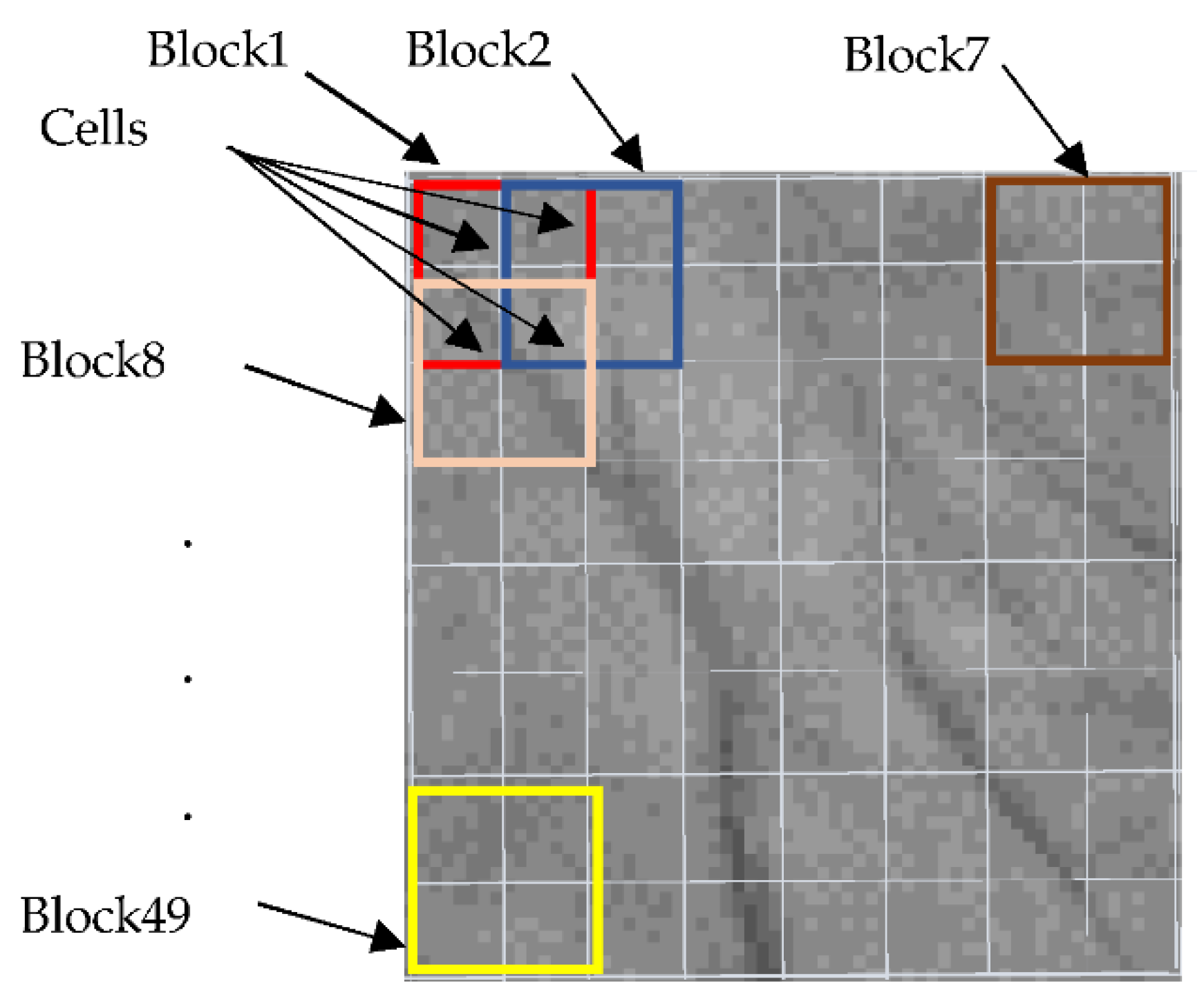

4.1.1. Dividing the Input Image into Cells and Blocks

4.1.2. Computing the Gradients’ Orientation

4.1.3. Constructing the Histograms of the Gradients’ Orientation

4.1.4. Block Normalization and Concatenation

4.1.5. Creating the Kernels of Steerable Gaussian Filter (SGF)

4.1.6. Extracting Mean and Standard Deviation Features from the Filter Responses of an Image

4.1.7. Feature Vector Normalization

4.2. AE Based Feature Reduction

4.3. Palmprint Recognition Using RELM Classifier

Algorithm 1. Palmprint Recognition Using RELM Classifier

Input: the reduced features of the training and testing sets, and the parameter settings

Output: the labels of the testing set

Learning stage:

1: Initialize the weights and biases of RELM randomly

2: Compute the matrix, H, of the hidden layer using Equation (8)

3: Compute the matrix, T, of the hidden layer using Equation (9)

4: Compute the output weights using Equation (13)

Classification stage:

5: Compute the matrix of the hidden layer using Equation (8)

6: Compute the output weights, Y, using Equation (29)

7: Classify the testing user ID using Equation (30), depending on whether this ID belongs to the user IDs in the training set
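The two stages of Algorithm 1 can be sketched in a few lines. Since Equations (8), (9), (13), (29), and (30) are not reproduced here, the sketch assumes the commonly used regularized ELM forms: a sigmoid hidden layer, a target matrix with +1 for the true class and -1 elsewhere, output weights from the ridge solution (I/C + HᵀH)⁻¹HᵀT, and an argmax decision. The function names and the parameter `C` are illustrative assumptions.

```python
import numpy as np

def _hidden(X, W, b):
    # Hidden-layer output matrix H with a sigmoid activation.
    return 1.0 / (1.0 + np.exp(-(X @ W + b)))

def relm_train(X, y, n_hidden, C=1.0, seed=0):
    """Learning stage: random hidden layer, closed-form regularized output weights."""
    rng = np.random.default_rng(seed)
    W = rng.uniform(-1.0, 1.0, (X.shape[1], n_hidden))  # step 1: random weights
    b = rng.uniform(-1.0, 1.0, n_hidden)                # step 1: random biases
    H = _hidden(X, W, b)                                # step 2: hidden-layer matrix
    classes = np.unique(y)
    # Step 3 (assumed form): +1 for the true class, -1 for all others.
    T = np.where(y[:, None] == classes[None, :], 1.0, -1.0)
    # Step 4 (assumed form): ridge-regularized least squares.
    beta = np.linalg.solve(np.eye(n_hidden) / C + H.T @ H, H.T @ T)
    return W, b, beta, classes

def relm_predict(X, model):
    """Classification stage: project test features and pick the strongest output."""
    W, b, beta, classes = model
    H = _hidden(X, W, b)   # step 5: hidden-layer matrix of the test set
    Y = H @ beta           # step 6: network outputs
    return classes[np.argmax(Y, axis=1)]  # step 7 (assumed): argmax decision
```

No iterative tuning of the hidden layer takes place: training reduces to one linear solve, which is consistent with the short training times usually reported for ELM-type classifiers.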

5. Experiment and Discussion

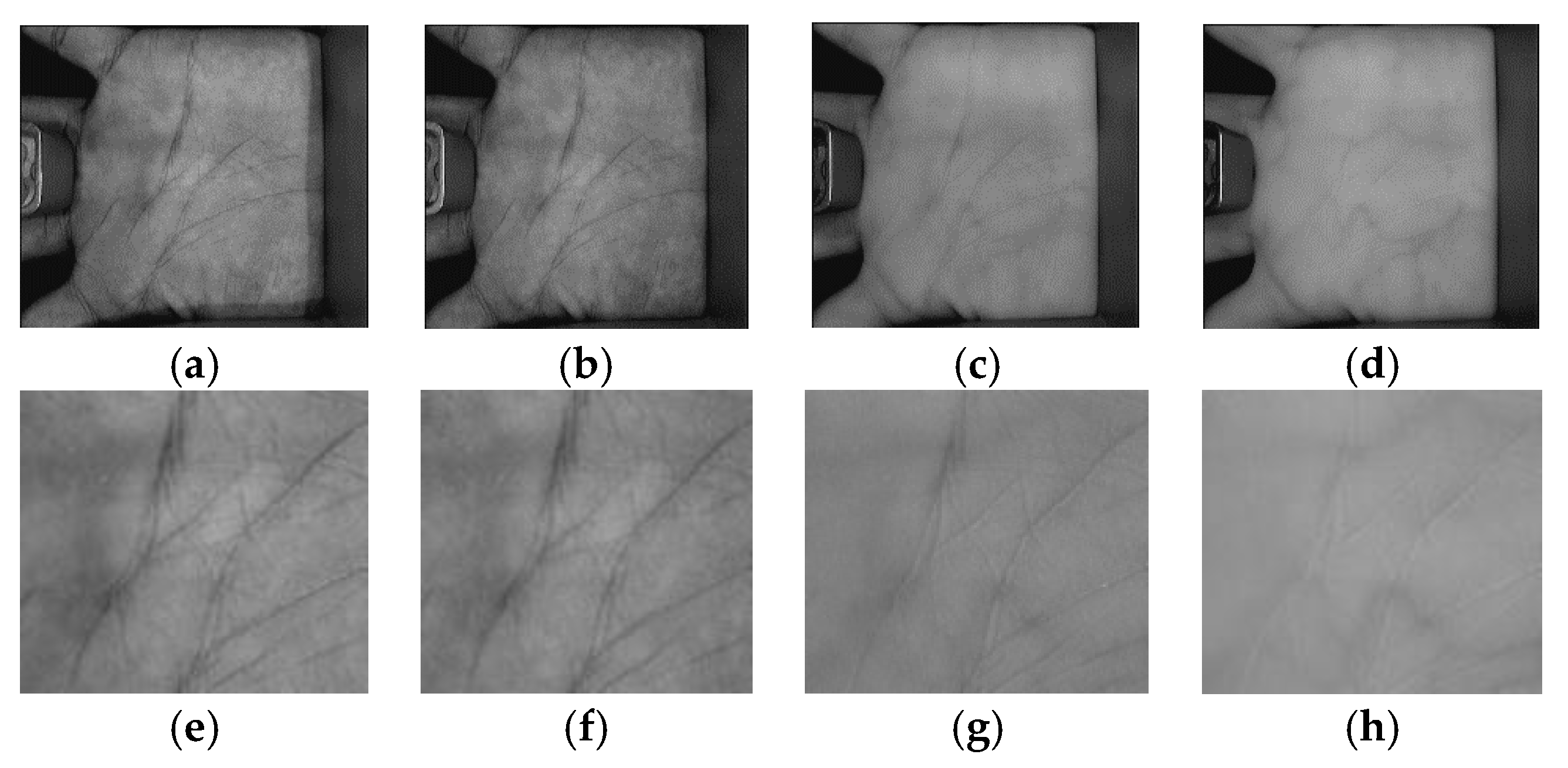

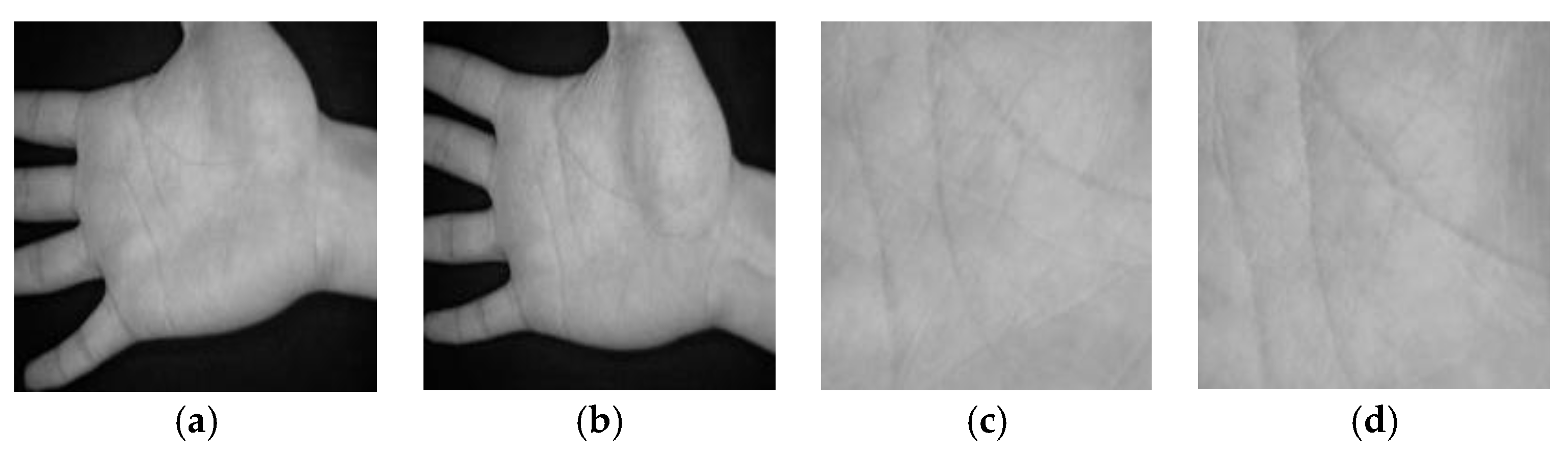

5.1. Description of Palmprint Databases

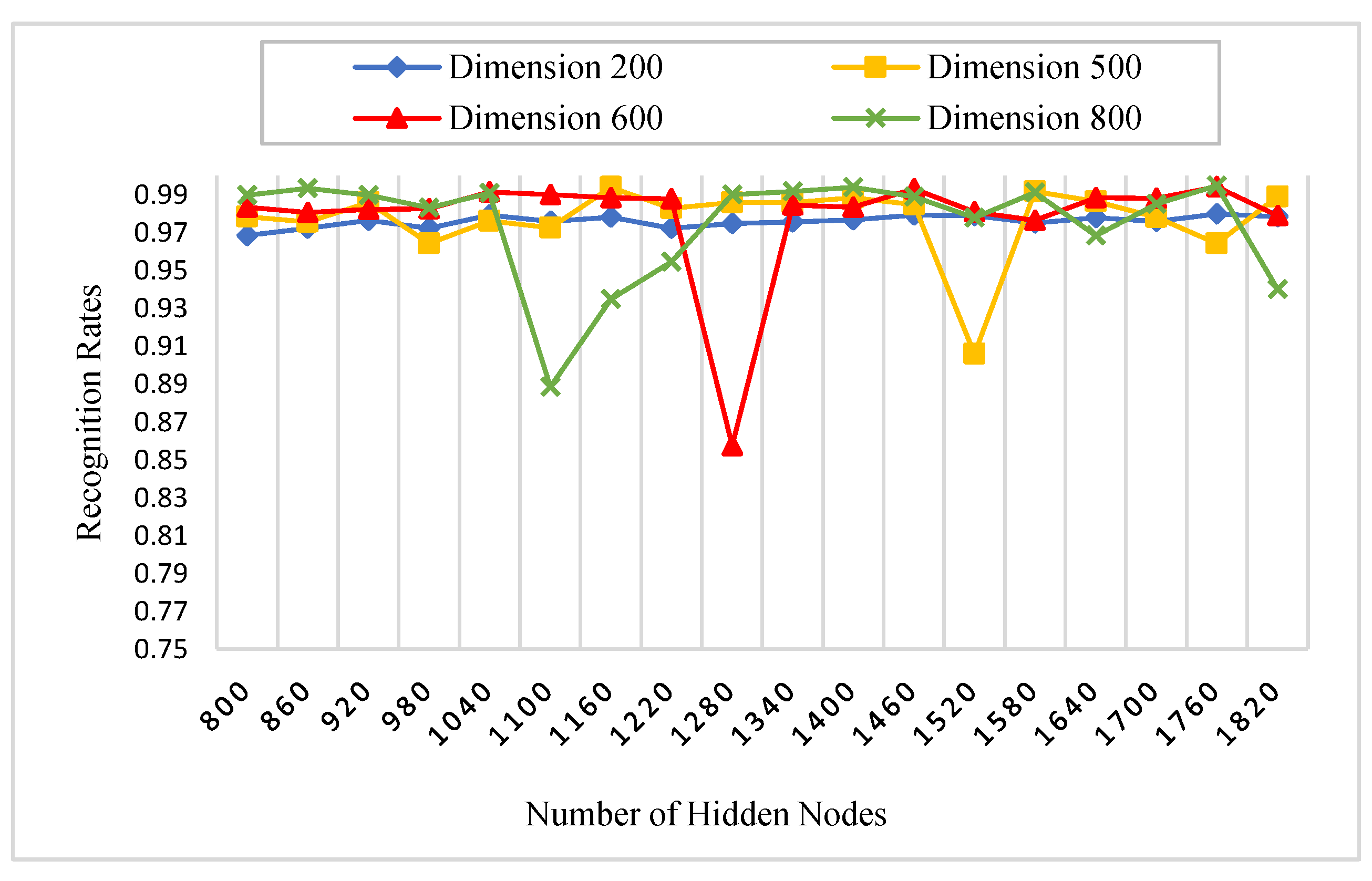

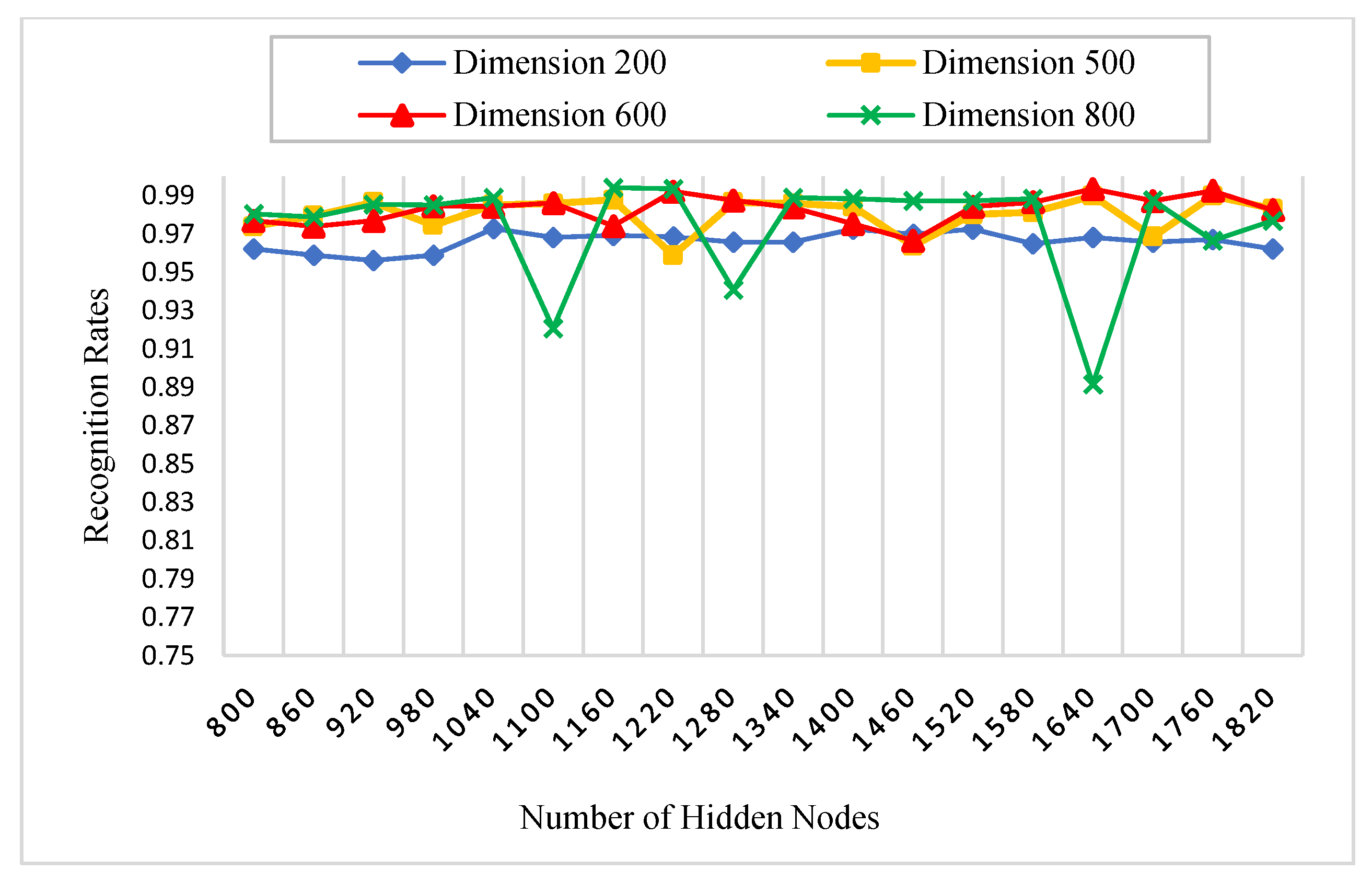

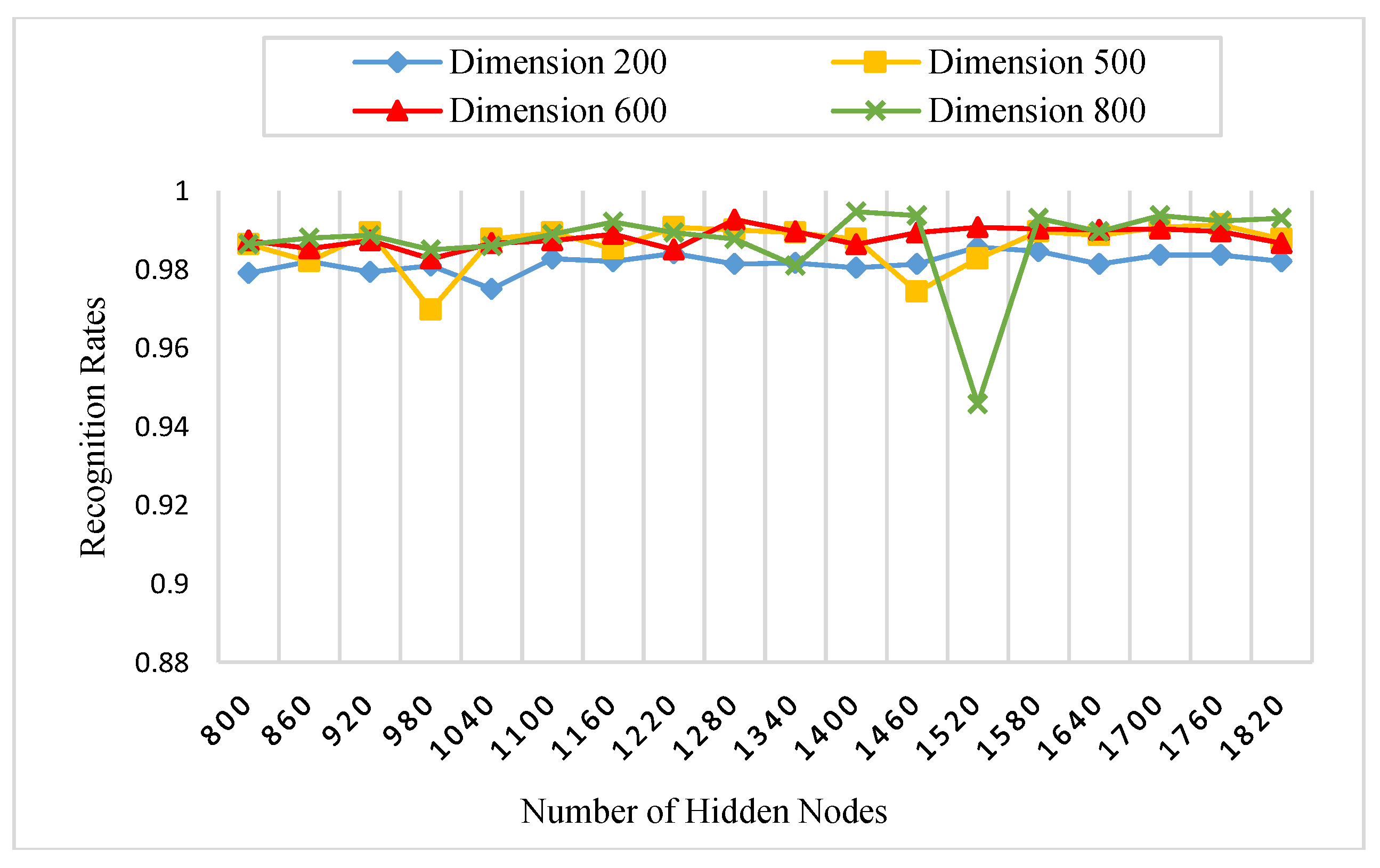

5.2. Parameter Settings

5.3. Experiment on Multispectral Palmprints

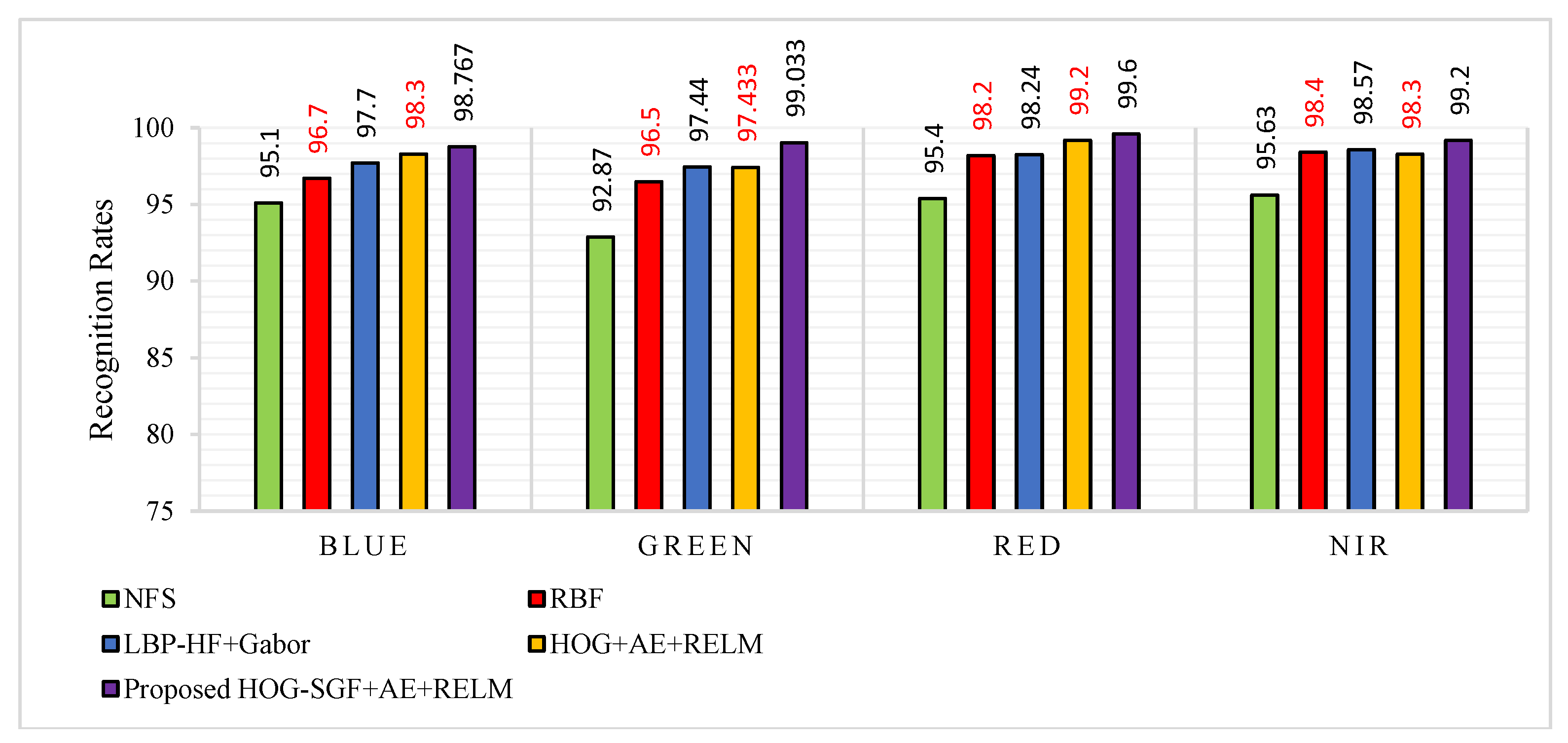

5.3.1. Procedure 1

5.3.2. Procedure 2

5.3.3. Procedure 3

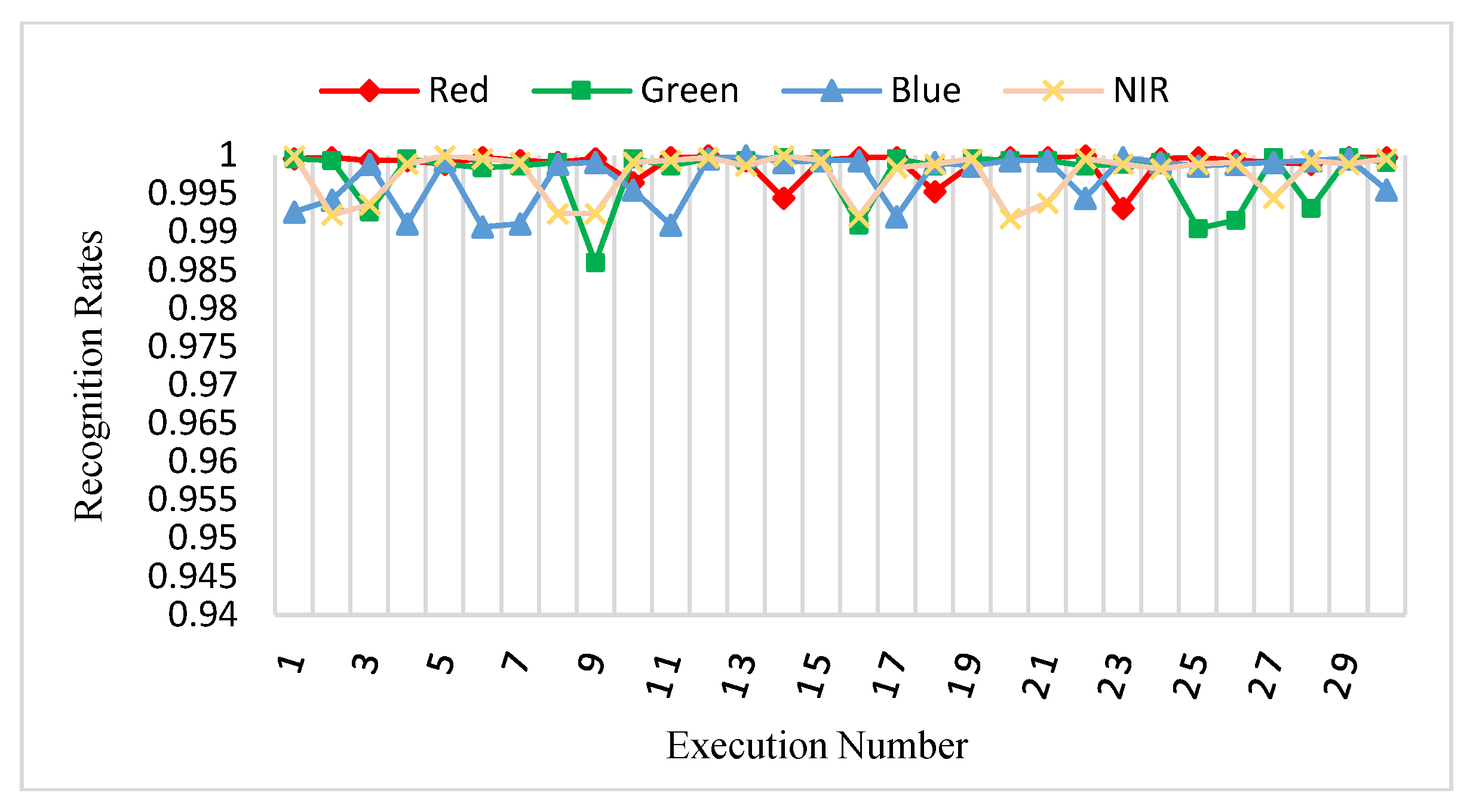

5.3.4. Procedure 4

5.4. Experiment on Grayscale Palmprints

5.5. Computational Efficiency

6. Conclusions and Future Work

Author Contributions

Acknowledgments

Conflicts of Interest

References

- Jain, K. Biometric Recognition: Q & A. Nature 2007, 449, 38–40.

- Fei, L.; Xu, Y.; Zhang, B.; Fang, X.; Wen, J. Low-rank representation integrated with principal line distance for contactless palmprint recognition. Neurocomputing 2016, 218, 264–275.

- Oloyede, M.; Hancke, G. Unimodal and Multimodal Biometric Sensing Systems: A Review. IEEE Access 2016, 4, 7532–7555.

- Algashaam, F.; Nguyen, K.; Alkanhal, M.; Chandran, V.; Boles, W.; Banks, J. Multispectral Periocular Classification with Multimodal Compact Multi-Linear Pooling. IEEE Access 2017, 5, 14572–14578.

- Zhang, D.; Guo, Z.; Lu, G.; Zhang, L.; Zuo, W. An Online System of Multispectral Palmprint Verification. IEEE Trans. Instrum. Meas. 2010, 59, 480–490.

- Hong, D.; Liu, W.; Su, J.; Pan, Z.; Wang, G. A novel hierarchical approach for multispectral palmprint recognition. Neurocomputing 2015, 151, 511–521.

- Han, C.C.; Cheng, H.L.; Lin, C.L.; Fan, K.C. Personal authentication using palm-print features. Pattern Recognit. 2003, 36, 371–381.

- Wu, X.; Wang, K.; Zhang, D. HMMs Based Palmprint Identification. In Biometric Authentication; Springer: Berlin/Heidelberg, Germany, 2004; pp. 775–781.

- Raghavendra, R.; Dorizzi, B.; Rao, A.; Kumar, G.H. Designing efficient fusion schemes for multimodal biometric systems using face and palmprint. Pattern Recognit. 2011, 44, 1076–1088.

- Pan, X.; Ruan, Q.; Wang, Y. Palmprint recognition using Gabor local relative features. Comput. Eng. Appl. 2012, 48, 706–712.

- Badrinath, G.; Kachhi, N.; Gupta, P. Verification system robust to occlusion using low-order Zernike moments of palmprint sub-images. Telecommun. Syst. 2010, 47, 275–290.

- Gan, J.Y.; Zhou, D.P. A novel method for palmprint recognition based on wavelet transform. In Proceedings of the 8th IEEE International Conference on Signal Processing, Guilin, China, 16–20 November 2006; Volume 3.

- Li, J.; Cao, J.; Lu, K. Improve the two-phase test samples representation method for palmprint recognition. Optik Int. J. Light Electron. Opt. 2013, 124, 6651–6656.

- Xu, Y.; Zhu, Q.; Fan, Z.; Qiu, M.; Chen, Y.; Liu, H. Coarse to fine K nearest neighbor classifier. Pattern Recognit. Lett. 2013, 34, 980–986.

- Zhang, S.; Gu, X. Palmprint recognition based on the representation in the feature space. Optik Int. J. Light Electron. Opt. 2013, 124, 5434–5439.

- Xu, X.; Guo, Z.; Song, C.; Li, Y. Multispectral Palmprint Recognition Using a Quaternion Matrix. Sensors 2012, 12, 4633–4647.

- Lai, Z.; Mo, D.; Wong, W.; Xu, Y.; Miao, D.; Zhang, D. Robust Discriminant Regression for Feature Extraction. IEEE Trans. Cybern. 2017, 1–13.

- Wen, J.; Lai, Z.; Zhan, Y.; Cui, J. The L2,1-norm-based unsupervised optimal feature selection with applications to action recognition. Pattern Recognit. 2016, 60, 515–530.

- Wong, W.; Lai, Z.; Wen, J.; Fang, X.; Lu, Y. Low-Rank Embedding for Robust Image Feature Extraction. IEEE Trans. Image Process. 2017, 26, 2905–2917.

- Lu, G.; Zhang, D.; Wang, K. Palmprint recognition using eigenpalms features. Pattern Recognit. Lett. 2003, 24, 1463–1467.

- Du, F.; Yu, P.; Li, H.; Zhu, L. Palmprint recognition using Gabor feature-based bidirectional 2DLDA. In Computer Science for Environmental Engineering and EcoInformatics; Springer: Berlin/Heidelberg, Germany, 2011; pp. 230–235.

- Xu, X.; Lu, L.; Zhang, X.; Lu, H.; Deng, W. Multispectral palmprint recognition using multiclass projection extreme learning machine and digital shearlet transform. Neural Comput. Appl. 2014, 27, 143–153.

- Lu, L.; Zhang, X.; Xu, X.; Shang, D. Multispectral image fusion for illumination-invariant palmprint recognition. PLoS ONE 2017, 12, e0178432.

- El-Tarhouni, W.; Boubchir, L.; Al-Maadeed, N.; Elbendak, M.; Bouridane, A. Multispectral palmprint recognition based on local binary pattern histogram fourier features and gabor filter. In Proceedings of the 6th IEEE European Workshop on Visual Information Processing (EUVIP), Marseille, France, 25–27 October 2016; pp. 1–6.

- Rida, I.; Al-Maadeed, S.; Mahmood, A.; Bouridane, A.; Bakshi, S. Palmprint Identification Using an Ensemble of Sparse Representations. IEEE Access 2018, 6, 3241–3248.

- Kong, A.; Zhang, D.; Kamel, M. Palmprint identification using feature-level fusion. Pattern Recognit. 2006, 39, 478–487.

- Sun, Z.; Tan, T.; Wang, Y.; Li, S.Z. Ordinal palmprint representation for personal identification. In Computer Vision and Pattern Recognit. (CVPR); IEEE: Piscataway, NJ, USA, 2005; pp. 279–284.

- Kong, K.; Zhang, D. Competitive coding scheme for palmprint verification. In Proceedings of the 17th IEEE International Conference on Pattern Recognit (ICPR), Cambridge, UK, 23–26 August 2004; Volume 1, pp. 520–523.

- Bounneche, M.; Boubchir, L.; Bouridane, A.; Nekhoul, B.; Ali-Chérif, A. Multi-spectral palmprint recognition based on oriented multiscale log-Gabor filters. Neurocomputing 2016, 205, 274–286.

- Zhang, L.; Li, L.; Yang, A.; Shen, Y.; Yang, M. Towards contactless palmprint recognition: A novel device, a new benchmark, and a collaborative representation based identification approach. Pattern Recognit. 2017, 69, 199–212.

- Jia, W.; Huang, D.; Zhang, D. Palmprint verification based on robust line orientation code. Pattern Recognit. 2008, 41, 1504–1513.

- Guo, Z.; Zhang, D.; Zhang, L.; Zuo, W. Palmprint verification using binary orientation co-occurrence vector. Pattern Recognit. Lett. 2009, 30, 1219–1227.

- Zhang, D.; Zuo, W.; Yue, F. A Comparative Study of Palmprint Recognition Algorithms. ACM Comput. Surv. 2012, 44, 1–37.

- Fei, L.; Xu, Y.; Zhang, D. Half-orientation extraction of palmprint features. Pattern Recognit. Lett. 2016, 69, 35–41.

- Fei, L.; Xu, Y.; Tang, W.; Zhang, D. Double-orientation code and nonlinear matching scheme for palmprint recognition. Pattern Recognit. 2016, 49, 89–101.

- Hao, Y.; Sun, Z.; Tan, T.; Ren, C. Multispectral palm image fusion for accurate contact-free palmprint recognition. In Proceedings of the 15th IEEE International Conference on Image Processing (ICIP), San Diego, CA, USA, 12–15 October 2008; pp. 281–284.

- Morales, A.; Ferrer, M.; Kumar, A. Towards contactless palmprint authentication. IET Comput. Vis. 2011, 5, 407.

- Doublet, J.; Lepetit, O.; Revenu, M. Contact less hand recognition using shape and texture features. In Proceedings of the 8th IEEE International Conference on Signal Processing (ICSP), Guilin, China, 16–20 November 2006; Volume 3, pp. 1–4.

- Michael, G.O.; Connie, T.; Teoh, A.J. Touch-less palm print biometrics: Novel design and implementation. Image Vis. Comput. 2008, 26, 1551–1560.

- Liou, C.; Huang, J.; Yang, W. Modeling word perception using the Elman network. Neurocomputing 2008, 71, 3150–3157.

- Liou, C.; Cheng, W.; Liou, J.; Liou, D. Autoencoder for words. Neurocomputing 2014, 139, 84–96.

- Japkowicz, N.; Hanson, S.; Gluck, M. Nonlinear Autoassociation Is Not Equivalent to PCA. Neural Comput. 2000, 12, 531–545.

- Huang, G.B.; Zhu, Q.Y.; Siew, C.K. Extreme learning machine: A new learning scheme of feedforward neural networks. In Proceedings of the IEEE International Joint Conference on Neural Networks, Budapest, Hungary, 25–29 July 2004; Volume 2, pp. 985–990.

- Huang, G.; Zhu, Q.; Siew, C. Extreme learning machine: Theory and applications. Neurocomputing 2006, 70, 489–501.

- Huang, G.; Ding, X.; Zhou, H. Optimization method based extreme learning machine for classification. Neurocomputing 2010, 74, 155–163.

- Tikhonov, A. Solution of incorrectly formulated problems and the regularization method. Soviet Math. Dokl. 1963, 4, 1035–1038.

- Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the International Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–26 June 2005; pp. 886–893.

- Teoh, S.S.; Bräunl, T. Performance evaluation of HOG and Gabor features for vision-based vehicle detection. In Proceedings of the IEEE International Conference on Control System Computing and Engineering (ICCSCE), Penang, Malaysia, 27–29 November 2015; pp. 66–71.

- Freeman, W.T.; Adelson, E.H. The design and use of steerable filters. IEEE Trans. Pattern Anal. Mach. Intell. 1991, 13, 891–906.

- CASIA Palmprint Image Database. Available online: http://biometrics.idealtest.org/ (accessed on 20 February 2018).

- Tamrakar, D.; Khanna, P. Kernel discriminant analysis of Block-wise Gaussian Derivative Phase Pattern Histogram for palmprint recognition. J. Vis. Commun. Image Represent. 2016, 40, 432–448.

- Elhamifar, E.; Vidal, R. Sparse subspace clustering: Algorithm, theory, and applications. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2765–2781.

- Zhu, X.; Ghahramani, Z.; Lafferty, J. Semi-supervised learning using Gaussian fields and harmonic functions. In Proceedings of the International Conference on Machine Learning, Washington, DC, USA, 21–24 August 2003; pp. 912–919.

| Method | Parameters |
|---|---|
| HOG-SGF | Image Size = 64 × 64 = 4096 pixels. Block Size = 2 × 2 = 4 cells. Number of Bins (HOG orientations) = 9 bins. Cell Size = 8 × 8 = 64 pixels. Number of Blocks per Image = 7 × 7 = 49 blocks. Number of SGF rotated angles = 24. |
| AE | Number of Hidden Nodes, . Encoder and Decoder Transfer Function is a Logistic Sigmoid Function. Maximum Epochs = 10. L2WeightRegularization = 0.004. Loss Function is a Mean Squared Error function. Training Algorithm is based on a Scaled Conjugate Gradient Function. |
| RELM | A Number of Hidden Nodes is, Regularization parameter is: () = , where An Activation function is a Nonlinear Sigmoid Function, . |
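The HOG layout figures in the parameter table can be checked with a short calculation. The sketch below assumes blocks slide by one cell in each direction (the usual HOG convention, which reproduces the 7 × 7 = 49 blocks listed above) and, for the SGF part, assumes one mean and one standard deviation per rotated angle. Both function names are hypothetical.

```python
def hog_layout(image_size=64, cell_size=8, block_cells=2, n_bins=9, stride_cells=1):
    """Return (cells per side, blocks per side, blocks per image, HOG vector length)."""
    cells_per_side = image_size // cell_size
    # Assumption: overlapping blocks that advance by `stride_cells` cells.
    blocks_per_side = (cells_per_side - block_cells) // stride_cells + 1
    n_blocks = blocks_per_side ** 2
    hog_dim = n_blocks * block_cells ** 2 * n_bins
    return cells_per_side, blocks_per_side, n_blocks, hog_dim

def sgf_stat_dim(n_angles=24, stats_per_angle=2):
    """Assumption: one mean and one standard deviation per filter orientation."""
    return n_angles * stats_per_angle

print(hog_layout())    # (8, 7, 49, 1764)
print(sgf_stat_dim())  # 48
```

Under these assumptions, each 64 × 64 image yields 49 blocks of 4 cells with 9 bins each, giving 1764 HOG values, plus 48 SGF statistics.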

Recognition Rates (%)

| Approach [Ref.] | Blue | Green | Red | NIR |
|---|---|---|---|---|
| TPTSR [13] | 78.13 | 98.02 | 98.58 | 98.34 |
| NFS [14] | 97.30 | 96.37 | 97.97 | 98.17 |
| DWT [16] | 93.83 | 93.50 | 95.20 | 94.60 |
| LBP-HF+Gabor [24] | 98.02 | 98.37 | 98.74 | 98.67 |
| FABEMD+TELM [23] | 96.73 | 96.93 | 97.80 | 97.67 |
| Log-Gabor+DHamm [29] | 99.23 | 99.10 | 99.30 | 99.33 |
| HOG+AE+RELM | 99.167 | 99.033 | 99.633 | 99.167 |
| Proposed HOG-SGF+AE+RELM | 99.47 | 99.40 | 99.70 | 99.47 |

EERs (%)

| Methods [Ref.] | Blue | Green | Red | NIR |
|---|---|---|---|---|
| Competitive code [28] | 0.0170 | 0.0168 | 0.0145 | 0.0137 |
| Palm code [5] | 0.0463 | 0.0507 | 0.0297 | 0.0332 |
| Fusion code [26] | 0.0212 | 0.0216 | 0.0179 | 0.0213 |
| Ordinal code [27] | 0.0202 | 0.0202 | 0.0161 | 0.0180 |
| BDOC–BHOG [6] | 0.0487 | 0.0418 | 0.0160 | 0.0278 |
| RLOC [31] | 0.0203 | 0.0249 | 0.0223 | 0.0208 |
| BOCV [32] | 0.0207 | 0.0232 | 0.0186 | 0.0284 |
| EBOCV [33] | 0.0225 | 0.0303 | 0.0313 | 0.0510 |
| HOC [34] | 0.0147 | 0.0144 | 0.0131 | 0.0139 |
| DOC [35] | 0.0146 | 0.0146 | 0.0119 | 0.0121 |
| BGDPPH [51] | 0.4100 | 0.4600 | 0.2900 | 0.4000 |
| HOG-SGF | 0.0073 | 0.0113 | 0.0025 | 0.0040 |

Recognition Rates (%)

| Approach [Ref.] | Blue | Green | Red | NIR |
|---|---|---|---|---|
| NFS [14] | 95.10 | 92.87 | 95.40 | 95.63 |
| RBF [15] | 96.70 | 96.50 | 98.20 | 98.40 |
| LBP-HF+Gabor [24] | 97.70 | 97.44 | 98.24 | 98.57 |
| HOG+AE+RELM | 98.300 | 97.433 | 99.200 | 98.300 |
| Proposed HOG-SGF+AE+RELM | 98.767 | 99.033 | 99.600 | 99.200 |

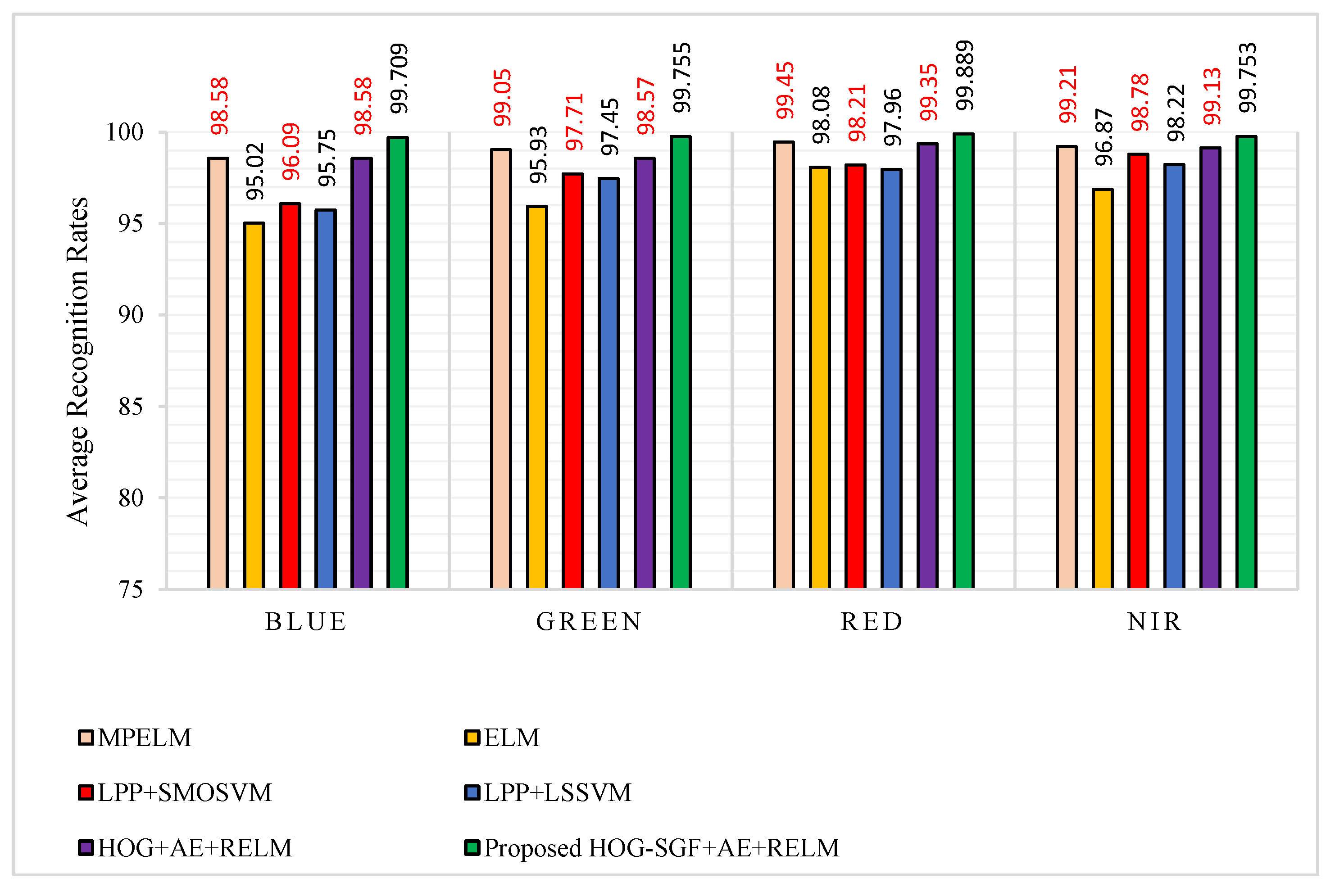

Average Recognition Rates (%)

| Approach [Ref.] | Blue | Green | Red | NIR |
|---|---|---|---|---|
| MPELM [22] | 98.58 | 99.05 | 99.45 | 99.21 |
| ELM [22] | 95.02 | 95.93 | 98.08 | 96.87 |
| LPP+SMOSVM [22] | 96.09 | 97.71 | 98.21 | 98.78 |
| LPP+LSSVM [22] | 95.75 | 97.45 | 97.96 | 98.22 |
| HOG+AE+RELM | 98.58 | 98.57 | 99.35 | 99.13 |
| Proposed HOG-SGF+AE+RELM | 99.709 | 99.755 | 99.889 | 99.753 |

Recognition Rates (%)

| Approach [Ref.] | Blue + NIR | Green + NIR | Red + NIR |
|---|---|---|---|
| FABEMD+TELM [23] | 99.10 | 99.47 | 99.47 |
| Log-Gabor+DHamm [29] | 99.63 | 99.67 | 99.50 |
| Log-Gabor+DKL [29] | 99.60 | 99.63 | 99.47 |
| Proposed HOG-SGF+AE+RELM | 99.90 | 99.77 | 99.80 |

Recognition Rates (%)

| Approach [Ref.] | Blue + NIR | Green + NIR | Red + NIR |
|---|---|---|---|
| MPELM [22] | 99.17 | 99.51 | 99.56 |
| ELM [22] | 97.46 | 97.98 | 98.41 |
| LPP+SMOSVM [22] | 98.38 | 98.51 | 98.93 |
| LPP+LSSVM [22] | 98.62 | 99.05 | 99.21 |
| Proposed HOG-SGF+AE+RELM | 99.99 | 99.90 | 99.95 |

| Approach [Ref.] | Classifier | Accuracy (%), 2 Training Samples | Accuracy (%), 6 Training Samples |
|---|---|---|---|
| Competitive Code [28] | Hamming distance | 77.12 | 90.55 |
| OLOF+SIFT [38] | Euclidean distance | 75.85 | 91.77 |
| SSC [52] | Euclidean distance | 40.70 | 86.60 |
| GFHF [53] | Euclidean distance | 80.61 | 89.52 |
| LRRIPLD [2] | Principal line distance | 86.75 | 95.05 |
| HOG+AE | RELM | 87.52 | 95.67 |
| Proposed HOG-SGF+AE | RELM | 91.95 | 97.75 |

| Approach | Classifier | Feature Extraction Time per Image (s) | Recognition Time per Image (s) | Accuracy (%) |
|---|---|---|---|---|
| CR_CompCode [30] | Euclidean distance | 0.0150 | 0.0247 | 98.78 |
| HOG+AE | RELM | 0.00274 | 0.0088 | 97.2 |
| Proposed HOG-SGF+AE | RELM | 0.00955 | 0.0088 | 98.85 |

| Method | Avg. Time (s) |
|---|---|
| HOG based feature extraction | 0.00274 |
| HOG-SGF based feature extraction | 0.00955 |

| Method | AE’s Hidden Nodes | Avg. Time (s) |
|---|---|---|
| Pre-training of AE Model on 3000 images | 200 | 6.2725 |
| Pre-training of AE Model on 3000 images | 800 | 28.8237 |

| Method | Feature Dimensions | RELM’s Hidden Nodes | Avg. Time (s) |
|---|---|---|---|
| Training of AE+RELM Model on 3000 images | 200 | 800 | 1.18804 |
| Training of AE+RELM Model on 3000 images | 800 | 800 | 1.23685 |
| Training of AE+RELM Model on 3000 images | 200 | 1820 | 3.42992 |
| Training of AE+RELM Model on 3000 images | 800 | 1820 | 4.24177 |
| Testing of AE+RELM Model on one test image | 200 | 800 | 0.00610 |
| Testing of AE+RELM Model on one test image | 800 | 800 | 0.00840 |
| Testing of AE+RELM Model on one test image | 200 | 1820 | 0.00656 |
| Testing of AE+RELM Model on one test image | 800 | 1820 | 0.00875 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Gumaei, A.; Sammouda, R.; Al-Salman, A.M.; Alsanad, A. An Effective Palmprint Recognition Approach for Visible and Multispectral Sensor Images. Sensors 2018, 18, 1575. https://doi.org/10.3390/s18051575
