The Impact of Curviness on Four Different Image Sensor Forms and Structures

Abstract

1. Introduction

2. Background

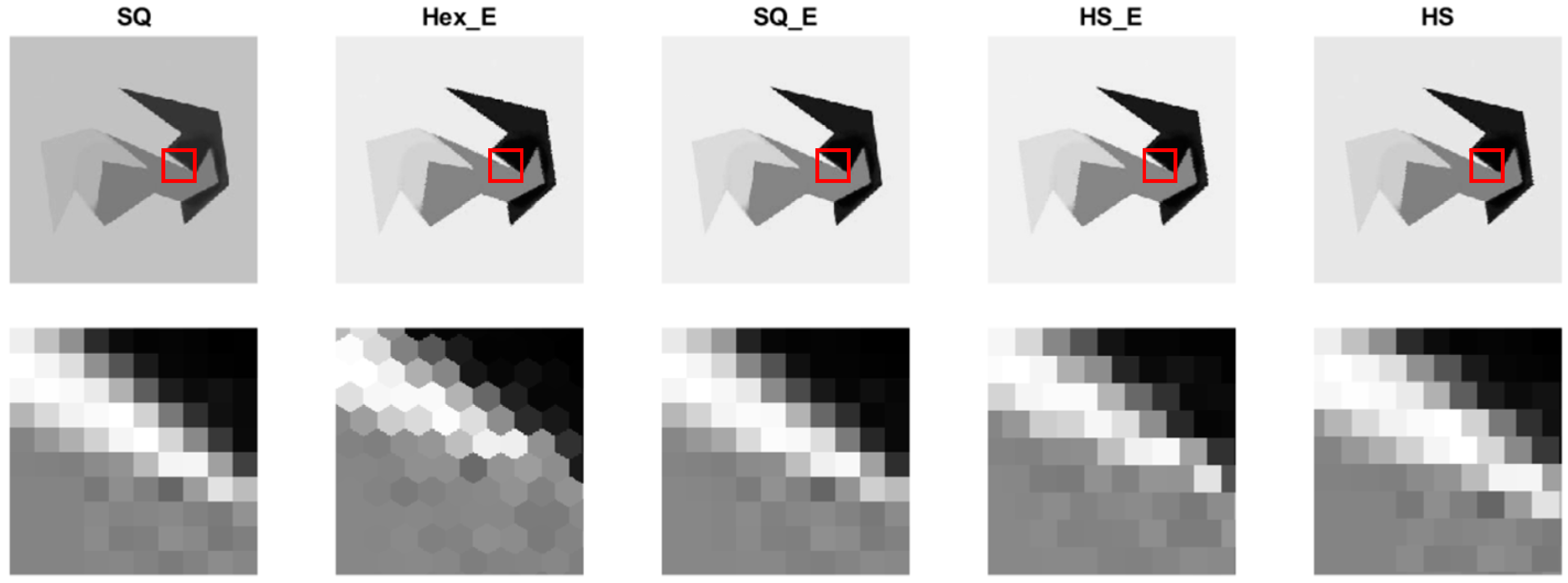

3. Image Generation

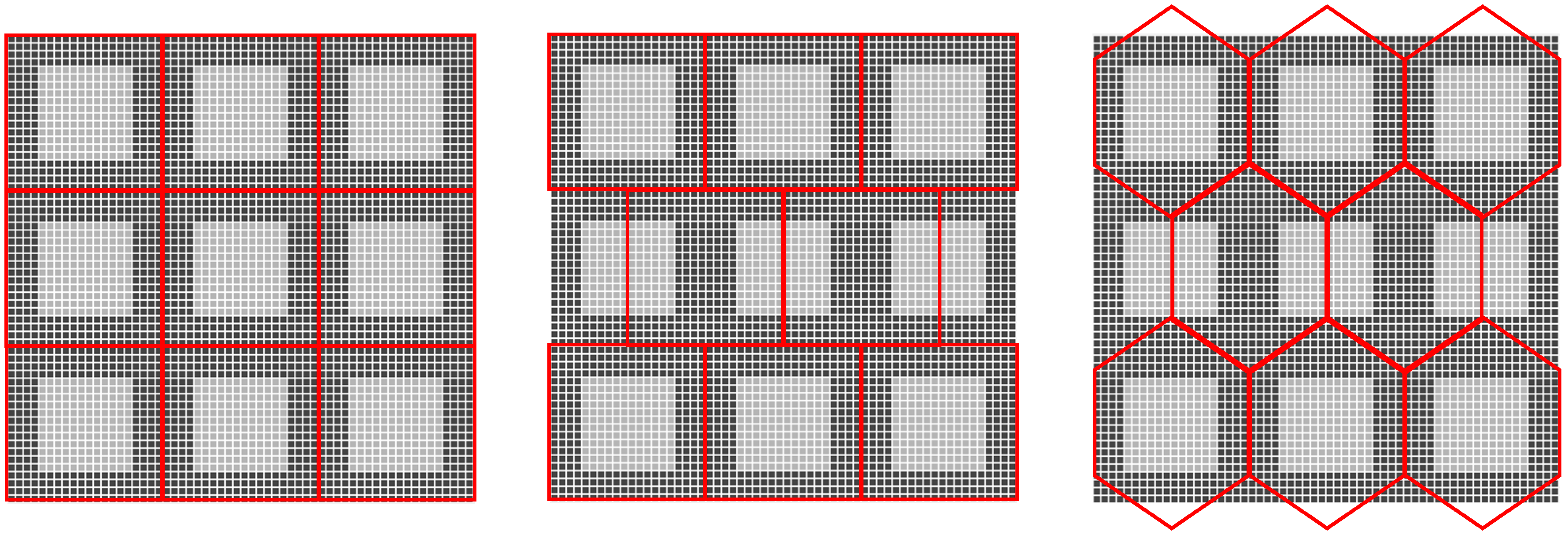

3.1. Generation of the Hexagonal Enriched Image (Hex_E)

3.1.1. A Grid of Virtual Image Sensor Pixels Is Constructed

3.1.2. The Second Step Is to Estimate the Values of Subpixels in the New Grid of Subpixels

3.1.3. In the Third Step, the Subpixels Are Projected Back to a Hexagonal Grid (Shown as Red Grids on the Right of Figure 1), Where the Distance between Every Two Neighboring Hexagonal Pixels Is the Same
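Section 3.1.3 requires that the projected hexagonal grid keep the same distance between neighboring pixels. To illustrate that geometry only, here is a minimal sketch that assumes an offset-row layout: unit center spacing, rows sqrt(3)/2 apart, and every odd row shifted by half a pixel. The helpers `hex_centers`, `nearest_neighbor_distances`, and `neighbor_count` are hypothetical; the authors' subpixel projection itself is not reproduced here.

```python
import math


def hex_centers(rows, cols, spacing=1.0):
    """Return (x, y) centers of a rows x cols hexagonal lattice.

    Odd rows are offset by half the spacing and rows are sqrt(3)/2 * spacing
    apart, so each pixel is exactly `spacing` away from its nearest neighbors.
    """
    dy = spacing * math.sqrt(3) / 2
    centers = []
    for r in range(rows):
        offset = spacing / 2 if r % 2 else 0.0
        for c in range(cols):
            centers.append((c * spacing + offset, r * dy))
    return centers


def nearest_neighbor_distances(centers):
    """Distance from each center to its closest other center."""
    out = []
    for i, (x0, y0) in enumerate(centers):
        out.append(min(math.hypot(x0 - x1, y0 - y1)
                       for j, (x1, y1) in enumerate(centers) if j != i))
    return out


def neighbor_count(centers, index, spacing=1.0, tol=1e-9):
    """Number of centers lying exactly one spacing away from centers[index]."""
    x0, y0 = centers[index]
    return sum(1 for j, (x1, y1) in enumerate(centers)
               if j != index and abs(math.hypot(x0 - x1, y0 - y1) - spacing) < tol)


centers = hex_centers(4, 5)
dists = nearest_neighbor_distances(centers)
print(round(min(dists), 6), round(max(dists), 6))  # 1.0 1.0
print(neighbor_count(centers, 7))  # 6 (interior pixel: row 1, column 2)
```

Every pixel's nearest neighbor lies at the same distance, and an interior pixel has six such neighbors, which is the property the heading describes. A square grid of the same spacing would give only four equidistant neighbors.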

3.2. Generation of the Square Enriched Image (SQ_E)

3.3. Generation of the Half Pixel Shift Image (HS) and Half Pixel Shift Enriched Image (HS_E)
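The half pixel shift idea can be illustrated with a minimal sketch. It assumes the HS image is obtained by displacing every odd row by half a pixel and resampling that row with linear interpolation between horizontal neighbors. This is an assumption made for illustration: the authors' exact HS and HS_E construction (for example, whether enriched subpixels replace interpolation) is not given in this section, and `half_pixel_shift` is a hypothetical name.

```python
def half_pixel_shift(image):
    """Shift every odd row of a 2-D list of intensities by half a pixel.

    The shifted value at column c is the mean of columns c and c + 1, i.e.
    linear interpolation at position c + 0.5; the last column is repeated
    at the right border. Even rows are returned unchanged (as copies).
    """
    shifted = []
    for r, row in enumerate(image):
        if r % 2 == 0:
            shifted.append(list(row))
        else:
            padded = list(row) + [row[-1]]  # replicate the border pixel
            shifted.append([(padded[c] + padded[c + 1]) / 2
                            for c in range(len(row))])
    return shifted


img = [[0, 10, 20, 30],
       [0, 10, 20, 30],
       [5, 5, 5, 5]]
print(half_pixel_shift(img))
# [[0, 10, 20, 30], [5.0, 15.0, 25.0, 30.0], [5, 5, 5, 5]]
```

Shifting alternate rows in this way turns the square sampling lattice into a brick-like, pseudo-hexagonal arrangement while keeping square pixel footprints.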

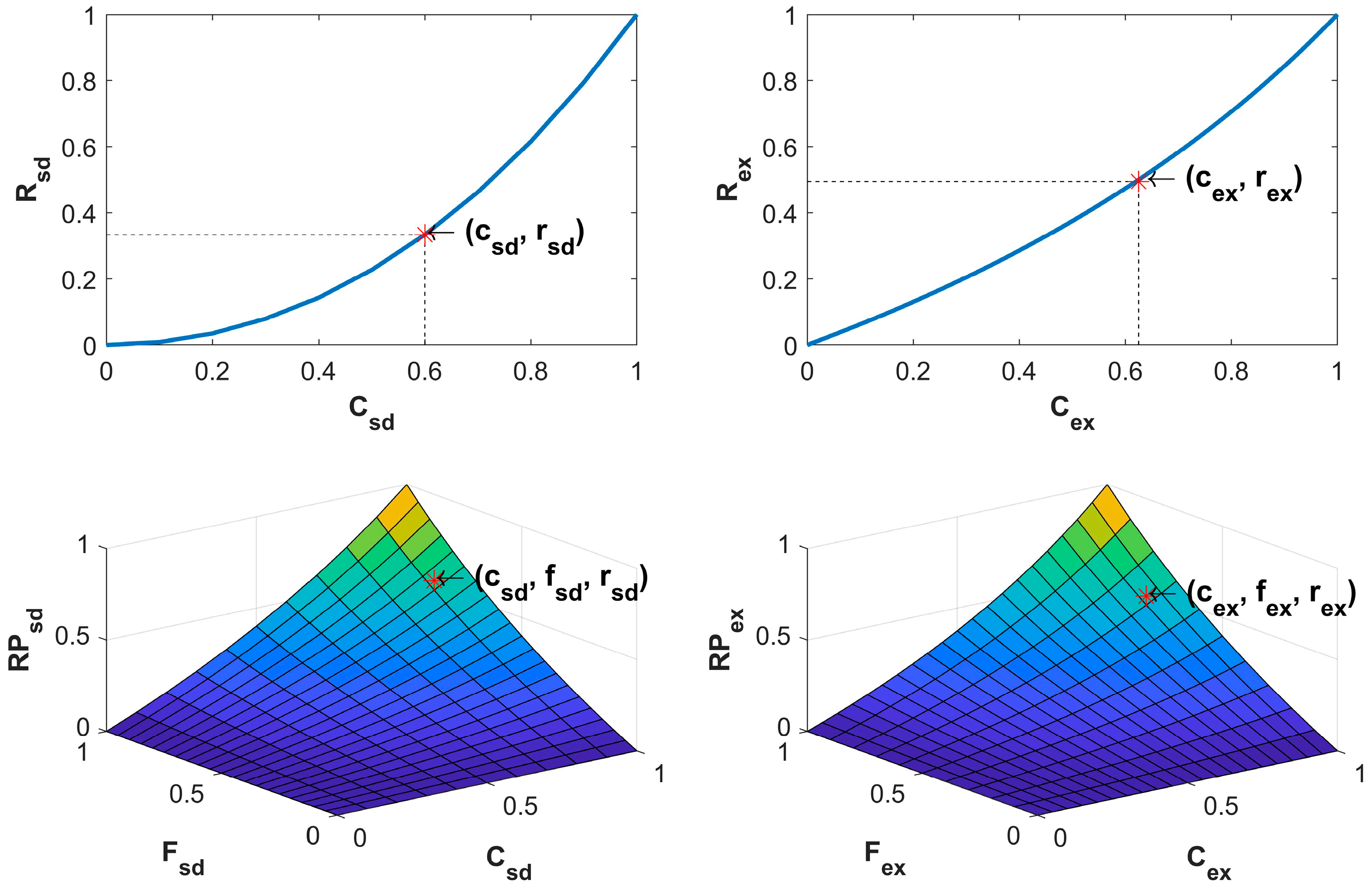

4. Curviness Quantification

4.1. Implementing a First-Order Gradient Operation
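A common way to implement a first-order gradient on a square grid is with central differences. The sketch below is a generic example under that assumption, not necessarily the operator used in the paper. It applies to square sampling only, because hexagonal images need operators defined on the six-neighbor lattice. `gradient` is a hypothetical helper that works on plain nested lists.

```python
import math


def gradient(image):
    """Central-difference gradient of a 2-D list; border pixels are left at 0.

    Returns (magnitude, orientation) grids; orientation is in radians,
    computed with math.atan2(gy, gx).
    """
    h, w = len(image), len(image[0])
    mag = [[0.0] * w for _ in range(h)]
    ang = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (image[y][x + 1] - image[y][x - 1]) / 2
            gy = (image[y + 1][x] - image[y - 1][x]) / 2
            mag[y][x] = math.hypot(gx, gy)
            ang[y][x] = math.atan2(gy, gx)
    return mag, ang


# A horizontal ramp: intensity grows by 2 per column, so the interior
# gradient is (2, 0): magnitude 2, orientation 0 rad.
ramp = [[2 * x for x in range(5)] for _ in range(5)]
mag, ang = gradient(ramp)
print(mag[2][2], ang[2][2])  # 2.0 0.0
```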

4.2. Implementing the Hessian Matrix on SQ and SQ_E Images
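The eigenvalues of the 2x2 Hessian describe local curvature along the two principal directions. Here is a minimal sketch that assumes unit pixel spacing, central second differences, and eigenvalues ordered by decreasing magnitude. The ordering convention is an assumption, and so is the finite-difference scheme, since this section does not state the paper's scheme or any smoothing scale. `hessian_eigenvalues` is a hypothetical helper.

```python
import math


def hessian_eigenvalues(image, x, y):
    """Eigenvalues of the 2x2 Hessian at interior pixel (x, y) of a 2-D list.

    Second derivatives use central differences with unit spacing.
    Returns (lambda1, lambda2) with |lambda1| >= |lambda2|.
    """
    f = image
    fxx = f[y][x + 1] - 2 * f[y][x] + f[y][x - 1]
    fyy = f[y + 1][x] - 2 * f[y][x] + f[y - 1][x]
    fxy = (f[y + 1][x + 1] - f[y + 1][x - 1]
           - f[y - 1][x + 1] + f[y - 1][x - 1]) / 4
    # Closed-form eigenvalues of the symmetric matrix [[fxx, fxy], [fxy, fyy]].
    mean = (fxx + fyy) / 2
    radius = math.sqrt(((fxx - fyy) / 2) ** 2 + fxy ** 2)
    l1, l2 = mean + radius, mean - radius
    return (l1, l2) if abs(l1) >= abs(l2) else (l2, l1)


# f(x, y) = x**2 - y**2 has the Hessian [[2, 0], [0, -2]] everywhere.
saddle = [[x * x - y * y for x in range(5)] for y in range(5)]
print(hessian_eigenvalues(saddle, 2, 2))  # (2.0, -2.0)
```

Using the closed form for a symmetric 2x2 matrix avoids a general eigen-solver and always yields real eigenvalues.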

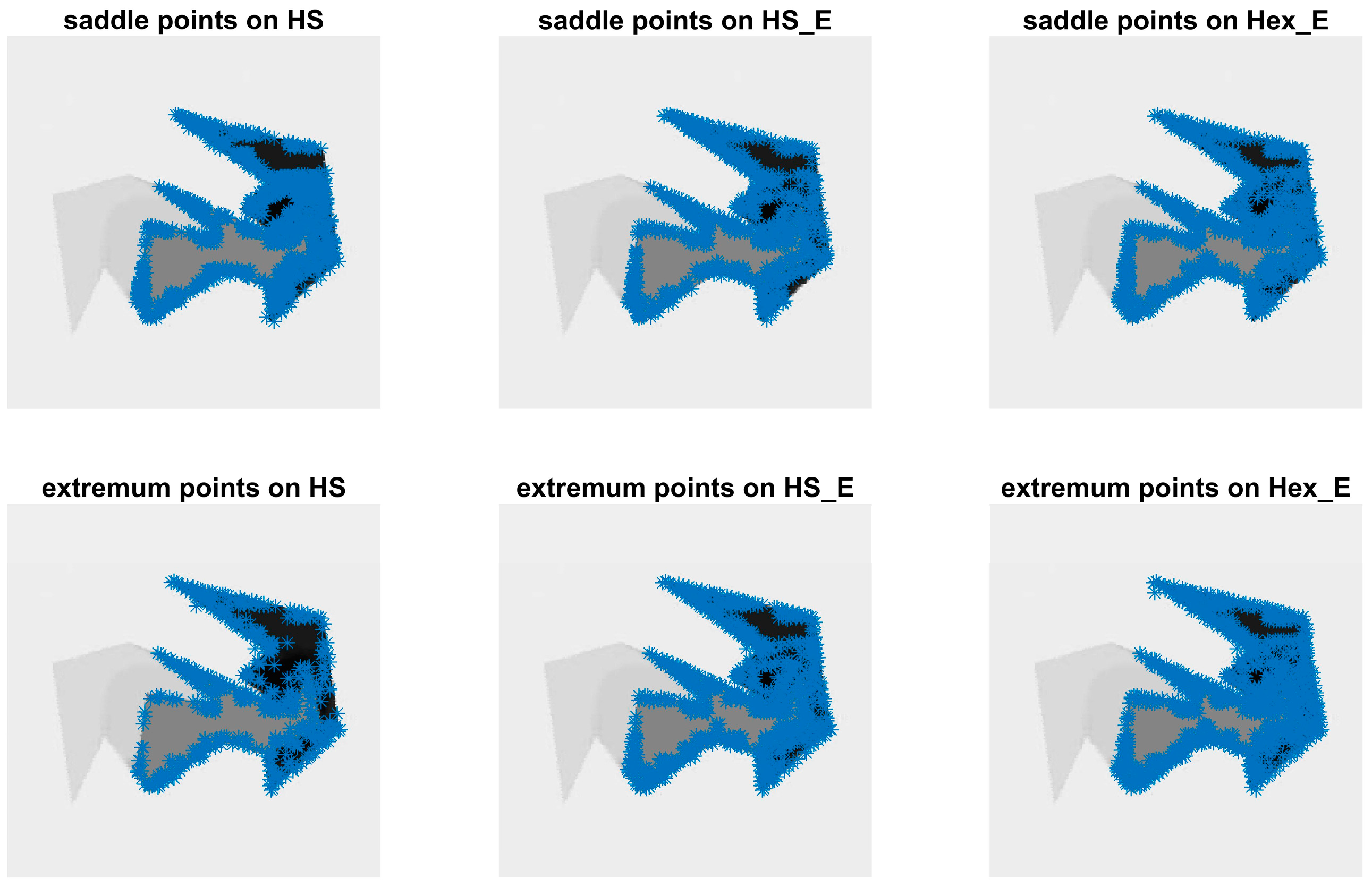

4.3. Implementing a Second-Order Operation to Detect Saddle and Extremum Points
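The standard second-order test separates saddle points from extrema by the sign of the Hessian determinant. Below is a minimal sketch of that test. It assumes the Hessian entries at a critical point have already been estimated, for instance with finite differences. Locating the critical points themselves is outside this example, and so is the specific detector used by the authors. `classify_point` and its `eps` tolerance are hypothetical.

```python
def classify_point(fxx, fyy, fxy, eps=1e-12):
    """Classify a critical point from its Hessian entries.

    det(H) < 0 -> saddle; det(H) > 0 -> maximum if fxx < 0, else minimum;
    det(H) close to 0 -> degenerate (the second-order test is inconclusive).
    """
    det = fxx * fyy - fxy * fxy
    if det < -eps:
        return "saddle"
    if det > eps:
        return "maximum" if fxx < 0 else "minimum"
    return "degenerate"


print(classify_point(2, -2, 0))   # saddle   (x**2 - y**2)
print(classify_point(-2, -2, 0))  # maximum  (-(x**2 + y**2))
print(classify_point(2, 2, 0))    # minimum  (x**2 + y**2)
```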

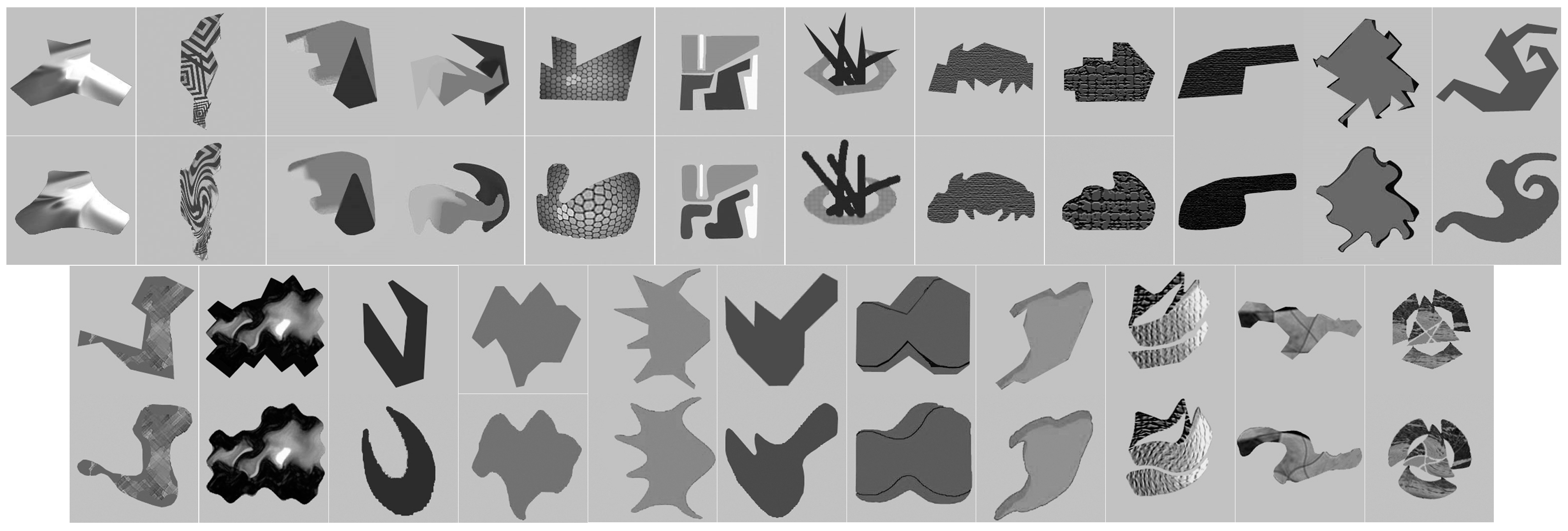

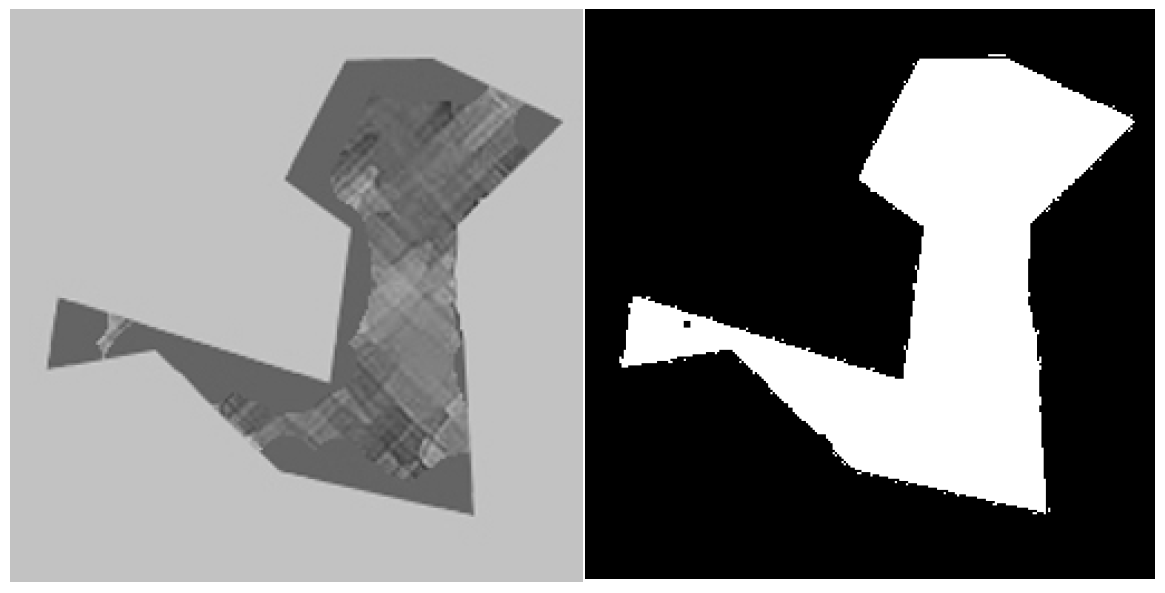

5. Experimental Setup

6. Results and Discussion

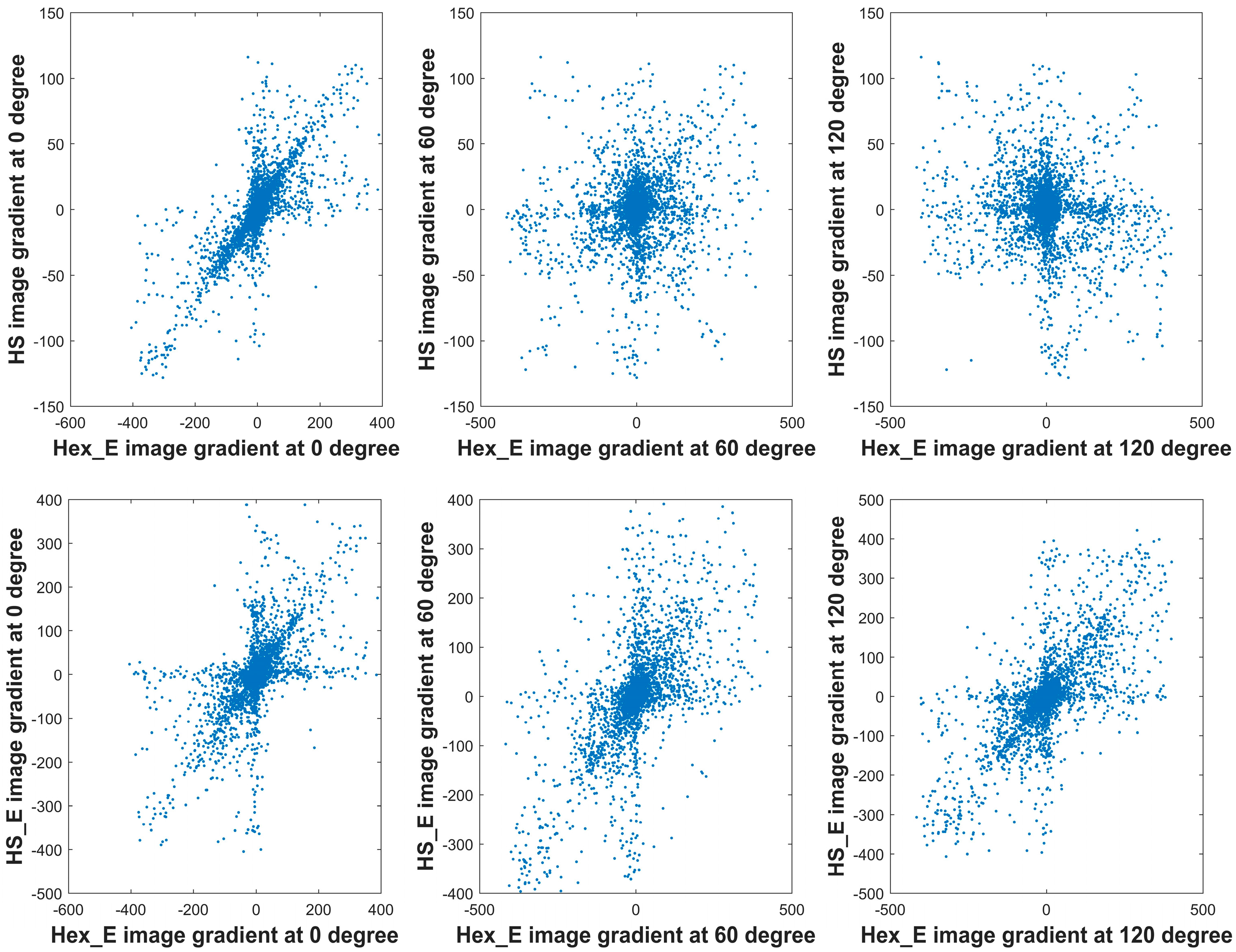

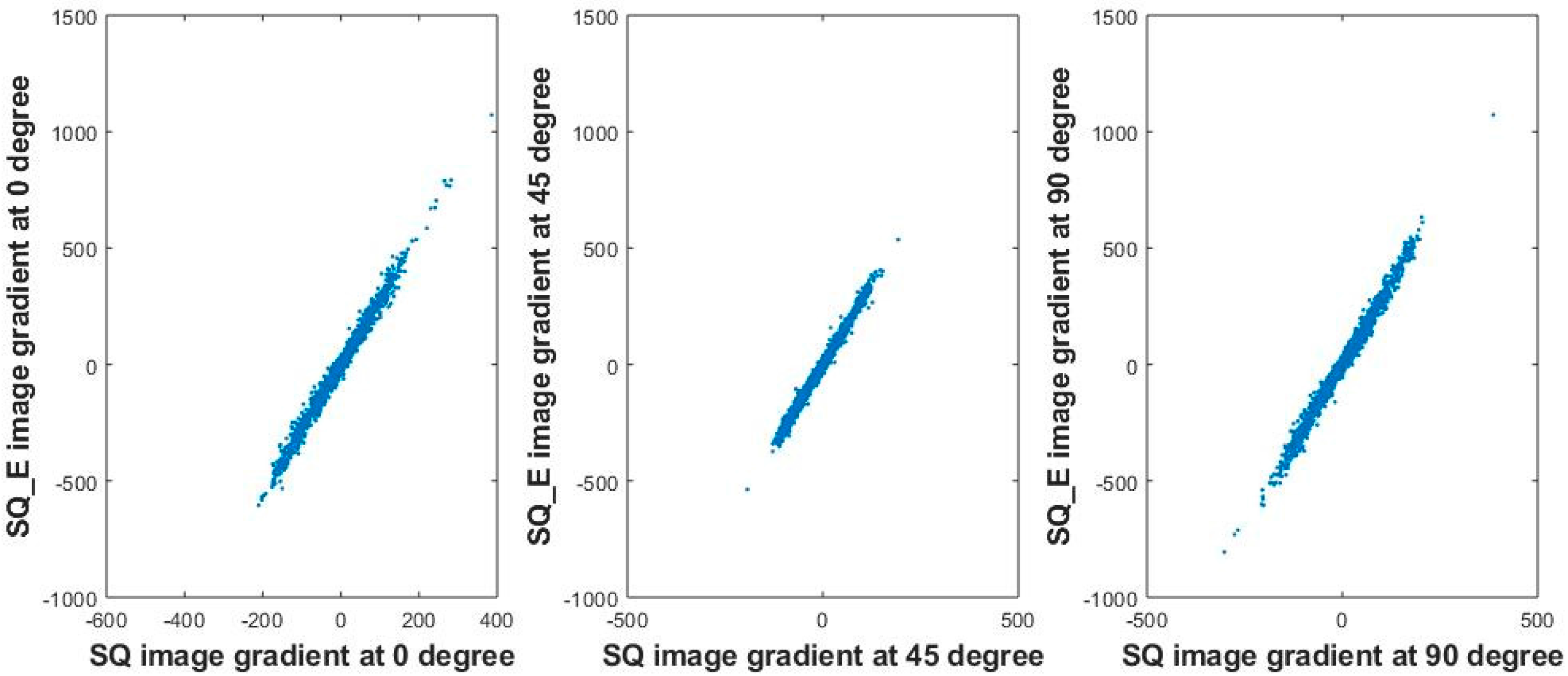

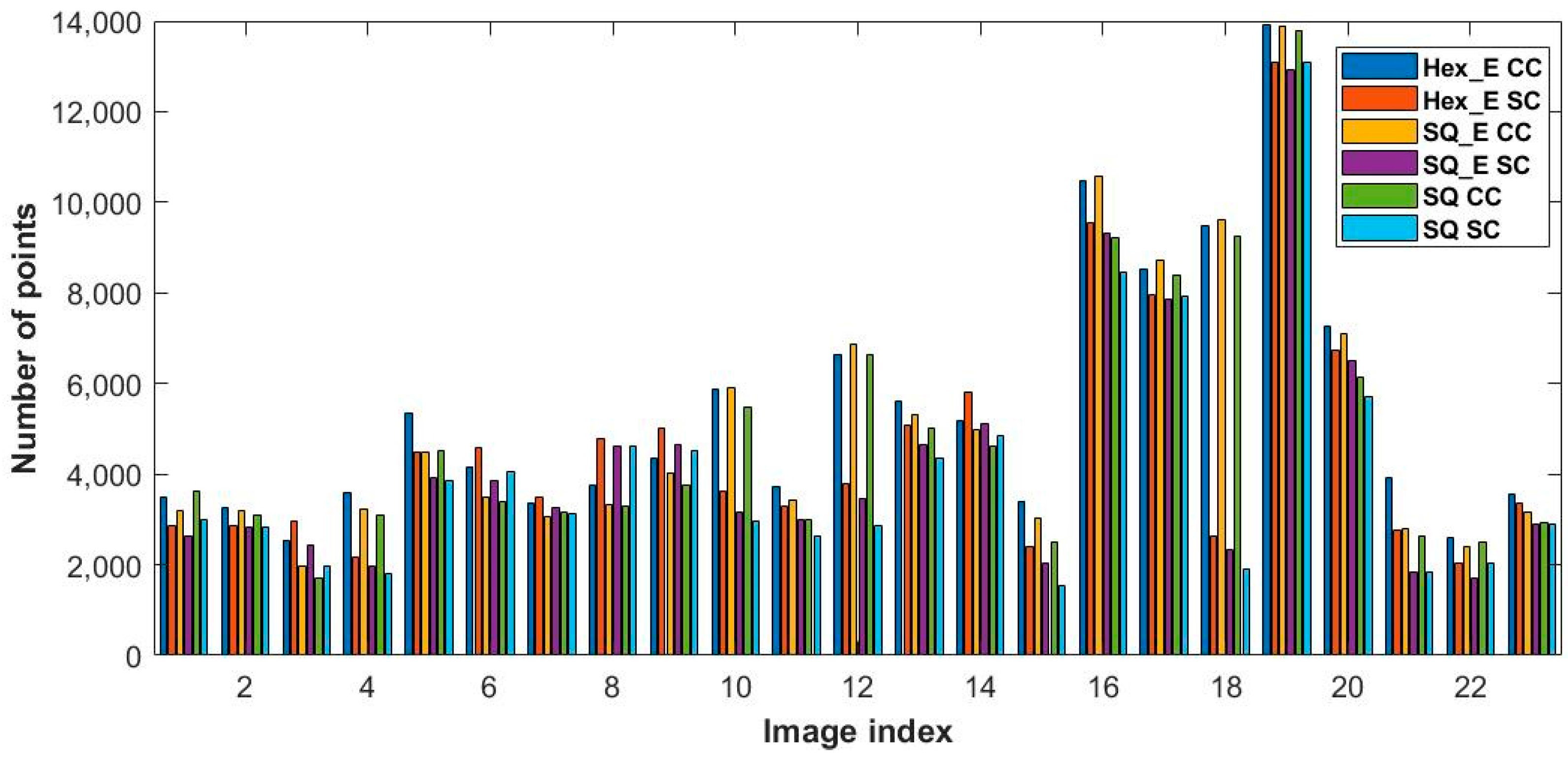

6.1. First-Order Gradient Operation

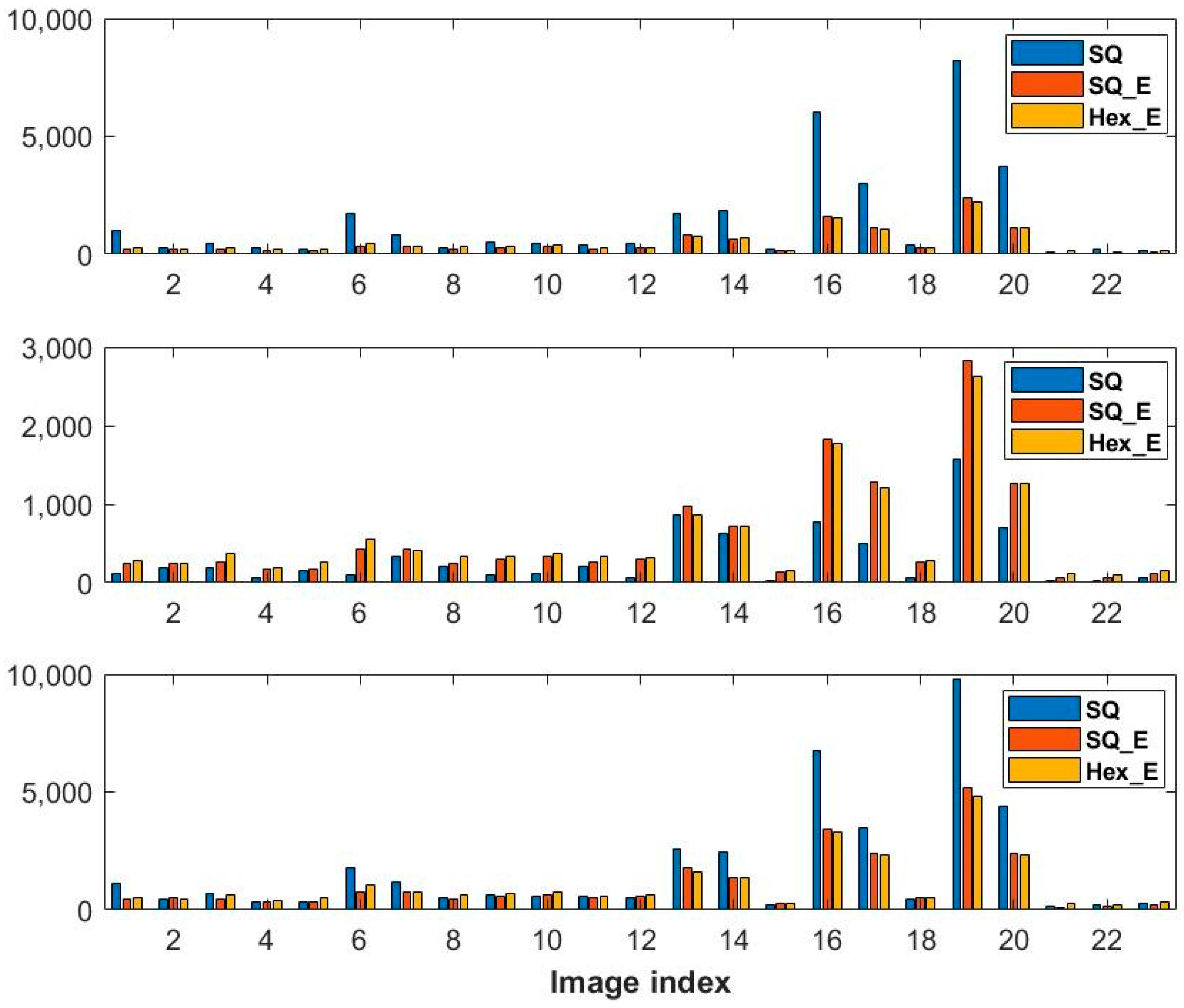

6.2. Hessian Matrix on SQ and SQ_E Images

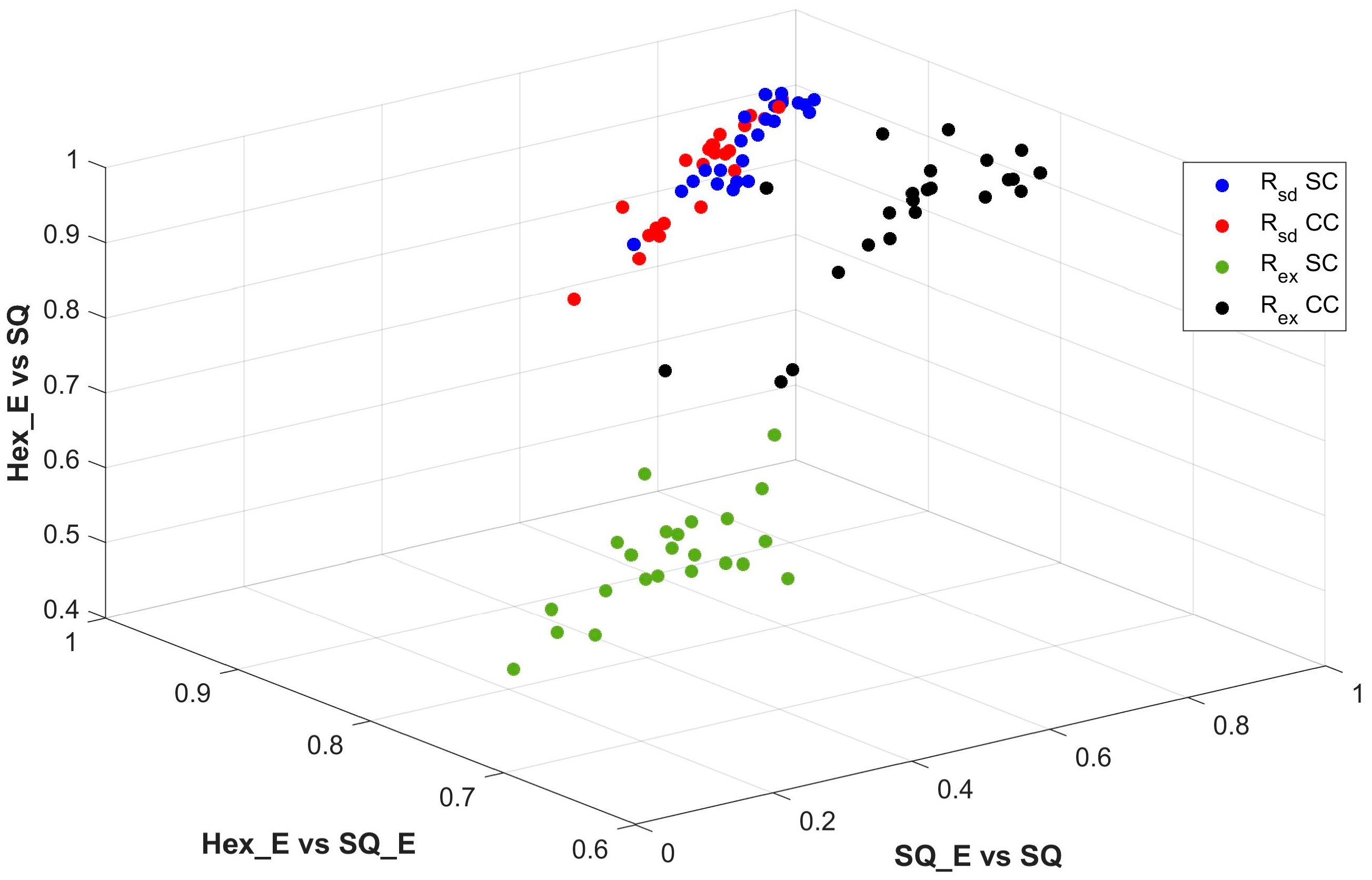

6.3. Saddle and Extremum Points

7. Conclusions

Author Contributions

Conflicts of Interest


| Image | Grid Structure | Pixel Form | Fill Factor | First Eigenvalue (SC) | First Eigenvalue (CC) | Second Eigenvalue (SC) | Second Eigenvalue (CC) |
|---|---|---|---|---|---|---|---|
| SQ_E | Yes | Yes | No | 272.98 | 277.86 | 6.43 | 8.51 |
| HS | No | Yes | Yes | 260.66 | 264.52 | 60.12 | 61.29 |
| HS_E | No | Yes | No | 210.19 | 212.98 | 106.33 | 107.89 |
| Hex_E | No | No | No | 187.28 | 190.26 | 112.32 | 114.14 |

| Image Index | SC | CC | SC | CC | SC | CC |
|---|---|---|---|---|---|---|
| 1 | 49.047 | 50.953 | 44.121 | 55.879 | 45.163 | 54.837 |
| 2 | 48.41 | 51.59 | 48.407 | 51.593 | 48.601 | 51.399 |
| 3 | 49.468 | 50.532 | 23.679 | 76.321 | 28.143 | 71.857 |
| 4 | 49.42 | 50.58 | 32.887 | 67.113 | 46.829 | 53.171 |
| 5 | 47.206 | 52.794 | 38.418 | 61.582 | 37.502 | 62.498 |
| 6 | 49.914 | 50.086 | 46.71 | 53.29 | 47.856 | 52.144 |
| 7 | 47.701 | 52.299 | 36.29 | 63.71 | 46.749 | 53.251 |
| 8 | 49.791 | 50.209 | 14.97 | 85.03 | 46.549 | 53.451 |
| 9 | 49.575 | 50.425 | 15.392 | 84.608 | 48.163 | 51.837 |
| 10 | 49.408 | 50.592 | 16.171 | 83.829 | 48.056 | 51.944 |
| 11 | 49.011 | 50.989 | 36.452 | 63.548 | 49.222 | 50.778 |
| 12 | 49.075 | 50.925 | 14.809 | 85.191 | 43.015 | 56.985 |
| 13 | 48.724 | 51.276 | 16.613 | 83.387 | 45.124 | 54.876 |
| 14 | 48.163 | 51.837 | 26.797 | 73.203 | 44.71 | 55.29 |
| 15 | 48.899 | 51.101 | 16.959 | 83.041 | 46.383 | 53.617 |
| 16 | 49.167 | 50.833 | 18.203 | 81.797 | 46.13 | 53.87 |
| 17 | 49.568 | 50.432 | 25.92 | 74.08 | 45.673 | 54.327 |
| 18 | 48.741 | 51.259 | 37.231 | 62.769 | 45.062 | 54.938 |
| 19 | 49.474 | 50.526 | 28.151 | 71.849 | 48.687 | 51.313 |
| 20 | 49.651 | 50.349 | 14.972 | 85.028 | 44.699 | 55.301 |
| 21 | 48.758 | 51.242 | 44.467 | 55.533 | 42.291 | 57.709 |
| 22 | 48.834 | 51.166 | 46.471 | 53.529 | 45.996 | 54.004 |
| 23 | 48.612 | 51.388 | 17.293 | 82.707 | 46.214 | 53.786 |

| Image Index | SC | CC | SC | CC | SC | CC |
|---|---|---|---|---|---|---|
| 1 | 49.353 | 50.647 | 44.349 | 55.651 | 44.976 | 55.024 |
| 2 | 49.337 | 50.663 | 48.76 | 51.24 | 48.343 | 51.657 |
| 3 | 51.625 | 48.375 | 66.592 | 33.408 | 30.413 | 69.587 |
| 4 | 50.057 | 49.943 | 30.517 | 69.483 | 43.874 | 56.126 |
| 5 | 47.565 | 52.435 | 37.025 | 62.975 | 35.033 | 64.967 |
| 6 | 50.474 | 49.526 | 48.374 | 51.626 | 47.124 | 52.876 |
| 7 | 48.212 | 51.788 | 36.412 | 63.588 | 44.62 | 55.38 |
| 8 | 49.627 | 50.373 | 15.485 | 84.515 | 41.143 | 58.857 |
| 9 | 49.341 | 50.659 | 15.857 | 84.143 | 47.783 | 52.217 |
| 10 | 49.185 | 50.815 | 16.628 | 83.372 | 47.98 | 52.02 |
| 11 | 49.821 | 50.179 | 34.757 | 65.243 | 41.404 | 58.596 |
| 12 | 48.961 | 51.039 | 15.126 | 84.874 | 43.718 | 56.282 |
| 13 | 48.858 | 51.142 | 19.546 | 80.454 | 45.803 | 54.197 |
| 14 | 48.583 | 51.417 | 32.959 | 67.041 | 45.682 | 54.318 |
| 15 | 49.016 | 50.984 | 17.626 | 82.374 | 46.96 | 53.04 |
| 16 | 49.262 | 50.738 | 23.977 | 76.023 | 46.319 | 53.681 |
| 17 | 49.564 | 50.436 | 25.45 | 74.55 | 44.955 | 55.045 |
| 18 | 48.858 | 51.142 | 35.824 | 64.176 | 44.511 | 55.489 |
| 19 | 49.473 | 50.527 | 33.351 | 66.649 | 47.252 | 52.748 |
| 20 | 49.399 | 50.601 | 19.883 | 80.117 | 44.592 | 55.408 |
| 21 | 48.437 | 51.563 | 43.321 | 56.679 | 41.176 | 58.824 |
| 22 | 49.108 | 50.892 | 46.233 | 53.767 | 44.769 | 55.231 |
| 23 | 48.605 | 51.395 | 17.534 | 82.466 | 46.788 | 53.212 |

| Image Index | SQ | SQ | SQ | SQ_E | SQ_E | SQ_E |
|---|---|---|---|---|---|---|
| 1 | 9.475 | 12.085 | 19.567 | 8.504 | 11.644 | 31.7 |
| 2 | 5.942 | 10.548 | 10.423 | 5.117 | 10.378 | 19.319 |
| 3 | 11.581 | 13.929 | 18.852 | 10.244 | 13.112 | 28.209 |
| 4 | 13.036 | 11.043 | 16.944 | 12.121 | 11.14 | 27.646 |
| 5 | 6.784 | 8.598 | 7.894 | 6.535 | 8.884 | 14.992 |
| 6 | 8.807 | 11.69 | 14.253 | 7.345 | 11.121 | 23.045 |
| 7 | 9.523 | 9.535 | 12.147 | 8.68 | 9.189 | 20.22 |
| 8 | 5.967 | 9.278 | 9.236 | 5.789 | 9.207 | 17.652 |
| 9 | 6.01 | 10.419 | 8.565 | 6.001 | 10.462 | 15.91 |
| 10 | 5.019 | 9.401 | 9.651 | 4.979 | 9.293 | 18.443 |
| 11 | 12.695 | 9.951 | 11.685 | 11.886 | 9.932 | 19.111 |
| 12 | 7.973 | 10.289 | 11.85 | 7.81 | 10.494 | 18.112 |
| 13 | 6.344 | 13.968 | 13.87 | 6.164 | 12.58 | 23.444 |
| 14 | 3.615 | 11.937 | 12.011 | 3.496 | 9.546 | 19.285 |
| 15 | 10.371 | 11.426 | 11.641 | 9.43 | 11.762 | 15.419 |
| 16 | 9.994 | 15.302 | 17.45 | 9.42 | 14.25 | 25.318 |
| 17 | 8.455 | 14.049 | 17.837 | 8.149 | 14.028 | 28.25 |
| 18 | 6.252 | 12.369 | 14.174 | 6.28 | 12.709 | 19.231 |
| 19 | 7.297 | 11.696 | 11.081 | 6.889 | 9.639 | 15.6 |
| 20 | 8.232 | 15.834 | 17.783 | 7.755 | 14.707 | 29.075 |
| 21 | 6.396 | 8.167 | 7.962 | 6.236 | 8.013 | 15.206 |
| 22 | 11.36 | 10.291 | 16.176 | 10.966 | 10.115 | 28.117 |
| 23 | 5.759 | 10.059 | 9.716 | 5.709 | 10.14 | 17.785 |

| Image Index | SQ_E & SQ (SC) | SQ_E & SQ (CC) | SQ_E & SQ (SC) | SQ_E & SQ (CC) | Hex_E & SQ_E (SC) | Hex_E & SQ_E (CC) | Hex_E & SQ_E (SC) | Hex_E & SQ_E (CC) | Hex_E & SQ (SC) | Hex_E & SQ (CC) | Hex_E & SQ (SC) | Hex_E & SQ (CC) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.865 | 0.754 | 0.299 | 0.812 | 0.940 | 0.934 | 0.769 | 0.814 | 0.946 | 0.916 | 0.596 | 0.922 |
| 2 | 0.700 | 0.690 | 0.394 | 0.499 | 0.912 | 0.922 | 0.710 | 0.750 | 0.909 | 0.918 | 0.688 | 0.781 |
| 3 | 0.866 | 0.836 | 0.343 | 0.868 | 0.941 | 0.928 | 0.756 | 0.866 | 0.957 | 0.954 | 0.610 | 0.954 |
| 4 | 0.857 | 0.820 | 0.329 | 0.828 | 0.948 | 0.940 | 0.739 | 0.811 | 0.953 | 0.938 | 0.620 | 0.926 |
| 5 | 0.690 | 0.538 | 0.328 | 0.385 | 0.896 | 0.890 | 0.701 | 0.778 | 0.923 | 0.910 | 0.668 | 0.801 |
| 6 | 0.786 | 0.756 | 0.277 | 0.915 | 0.929 | 0.919 | 0.767 | 0.841 | 0.892 | 0.893 | 0.538 | 0.967 |
| 7 | 0.836 | 0.731 | 0.340 | 0.792 | 0.931 | 0.930 | 0.735 | 0.802 | 0.933 | 0.899 | 0.638 | 0.909 |
| 8 | 0.528 | 0.542 | 0.342 | 0.510 | 0.876 | 0.867 | 0.680 | 0.747 | 0.871 | 0.896 | 0.649 | 0.797 |
| 9 | 0.886 | 0.769 | 0.515 | 0.792 | 0.934 | 0.933 | 0.763 | 0.821 | 0.943 | 0.902 | 0.698 | 0.896 |
| 10 | 0.631 | 0.524 | 0.340 | 0.865 | 0.894 | 0.919 | 0.662 | 0.786 | 0.908 | 0.770 | 0.613 | 0.926 |
| 11 | 0.807 | 0.824 | 0.292 | 0.729 | 0.922 | 0.932 | 0.707 | 0.804 | 0.948 | 0.939 | 0.623 | 0.878 |
| 12 | 0.877 | 0.687 | 0.273 | 0.898 | 0.926 | 0.940 | 0.734 | 0.786 | 0.940 | 0.805 | 0.577 | 0.942 |
| 13 | 0.782 | 0.757 | 0.260 | 0.749 | 0.928 | 0.939 | 0.739 | 0.798 | 0.921 | 0.907 | 0.608 | 0.886 |
| 14 | 0.855 | 0.828 | 0.324 | 0.871 | 0.940 | 0.949 | 0.741 | 0.831 | 0.943 | 0.917 | 0.602 | 0.928 |
| 15 | 0.893 | 0.877 | 0.321 | 0.830 | 0.930 | 0.947 | 0.725 | 0.809 | 0.950 | 0.944 | 0.583 | 0.929 |
| 16 | 0.790 | 0.744 | 0.114 | 0.940 | 0.926 | 0.938 | 0.751 | 0.798 | 0.866 | 0.833 | 0.478 | 0.964 |
| 17 | 0.757 | 0.698 | 0.149 | 0.900 | 0.933 | 0.942 | 0.737 | 0.783 | 0.865 | 0.818 | 0.530 | 0.944 |
| 18 | 0.877 | 0.654 | 0.280 | 0.921 | 0.934 | 0.938 | 0.729 | 0.774 | 0.947 | 0.784 | 0.583 | 0.954 |
| 19 | 0.765 | 0.671 | 0.193 | 0.890 | 0.925 | 0.940 | 0.731 | 0.772 | 0.861 | 0.810 | 0.522 | 0.937 |
| 20 | 0.833 | 0.803 | 0.187 | 0.906 | 0.941 | 0.948 | 0.761 | 0.807 | 0.908 | 0.889 | 0.537 | 0.952 |
| 21 | 0.683 | 0.709 | 0.308 | 0.704 | 0.879 | 0.910 | 0.692 | 0.868 | 0.920 | 0.932 | 0.620 | 0.915 |
| 22 | 0.813 | 0.784 | 0.349 | 0.828 | 0.941 | 0.951 | 0.775 | 0.822 | 0.937 | 0.899 | 0.673 | 0.905 |
| 23 | 0.656 | 0.759 | 0.319 | 0.671 | 0.898 | 0.932 | 0.685 | 0.796 | 0.913 | 0.931 | 0.620 | 0.859 |

| Image Index | SQ_E vs. SQ | SQ_E vs. SQ | Hex_E vs. SQ_E | Hex_E vs. SQ_E | Hex_E vs. SQ | Hex_E vs. SQ |
|---|---|---|---|---|---|---|
| 1 | 0.885 | 0.505 | 0.963 | 0.945 | 0.959 | 0.850 |
| 2 | 0.776 | 0.630 | 0.949 | 0.919 | 0.947 | 0.907 |
| 3 | 0.907 | 0.567 | 0.961 | 0.942 | 0.974 | 0.843 |
| 4 | 0.899 | 0.518 | 0.967 | 0.934 | 0.969 | 0.841 |
| 5 | 0.714 | 0.525 | 0.933 | 0.901 | 0.950 | 0.886 |
| 6 | 0.850 | 0.516 | 0.954 | 0.945 | 0.932 | 0.819 |
| 7 | 0.868 | 0.555 | 0.958 | 0.932 | 0.951 | 0.863 |
| 8 | 0.638 | 0.567 | 0.919 | 0.894 | 0.926 | 0.875 |
| 9 | 0.893 | 0.727 | 0.960 | 0.948 | 0.954 | 0.897 |
| 10 | 0.708 | 0.555 | 0.942 | 0.927 | 0.913 | 0.856 |
| 11 | 0.878 | 0.538 | 0.955 | 0.920 | 0.967 | 0.845 |
| 12 | 0.880 | 0.459 | 0.960 | 0.942 | 0.939 | 0.825 |
| 13 | 0.845 | 0.492 | 0.960 | 0.939 | 0.948 | 0.858 |
| 14 | 0.900 | 0.608 | 0.967 | 0.953 | 0.959 | 0.862 |
| 15 | 0.929 | 0.512 | 0.963 | 0.927 | 0.969 | 0.826 |
| 16 | 0.847 | 0.260 | 0.960 | 0.950 | 0.906 | 0.738 |
| 17 | 0.821 | 0.323 | 0.963 | 0.943 | 0.902 | 0.791 |
| 18 | 0.875 | 0.407 | 0.961 | 0.938 | 0.940 | 0.802 |
| 19 | 0.818 | 0.379 | 0.960 | 0.936 | 0.898 | 0.781 |
| 20 | 0.882 | 0.359 | 0.967 | 0.950 | 0.938 | 0.794 |
| 21 | 0.785 | 0.560 | 0.935 | 0.920 | 0.955 | 0.864 |
| 22 | 0.868 | 0.623 | 0.967 | 0.954 | 0.951 | 0.880 |
| 23 | 0.794 | 0.569 | 0.948 | 0.913 | 0.953 | 0.860 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wen, W.; Khatibi, S. The Impact of Curviness on Four Different Image Sensor Forms and Structures. Sensors 2018, 18, 429. https://doi.org/10.3390/s18020429
