A Brief Review of Facial Emotion Recognition Based on Visual Information

Abstract

1. Introduction

1.1. Terminology

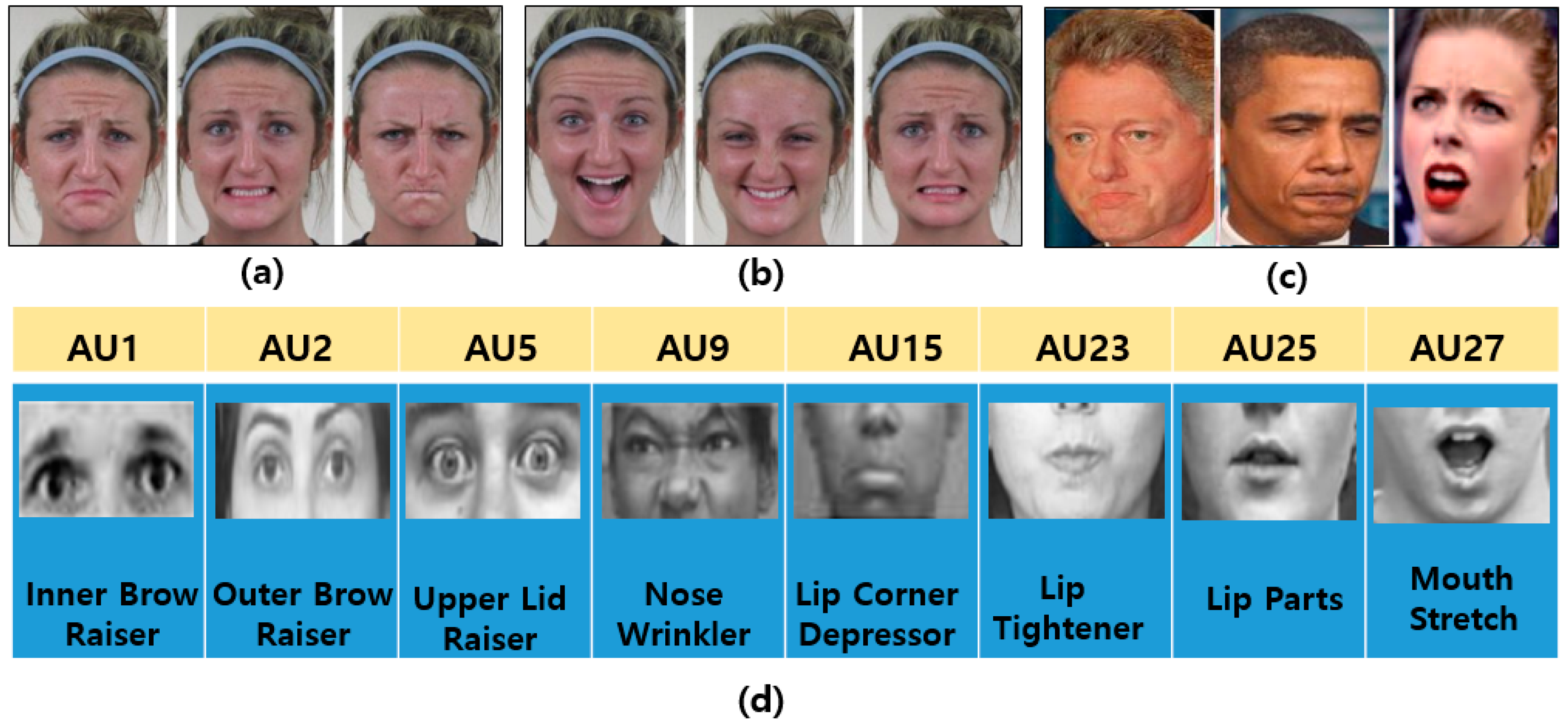

- The facial action coding system (FACS), defined by Ekman and Friesen [14] in 1978, is a system based on facial muscle changes that characterizes the facial actions through which individual human emotions are expressed. FACS encodes the movements of specific facial muscles, called action units (AUs), which reflect distinct momentary changes in facial appearance [15].

- Facial landmarks (FLs) are visually salient points in facial regions, such as the tip of the nose, the ends of the eyebrows, and the mouth, as described in Figure 1b. The relative positions of pairs of landmark points, or the local texture around a landmark, are used as feature vectors for FER. In general, FL detection approaches can be categorized into three types according to how their models are generated: active shape model (ASM) and active appearance model (AAM) approaches, regression-based approaches that combine local and global models, and CNN-based methods. FL models are trained on appearance and shape variations starting from a coarse initialization; the initial shape is then moved step by step to a better position until convergence [16].

- Basic emotions (BEs) are seven basic human emotions: happiness, surprise, anger, sadness, fear, disgust, and neutral, as shown in Figure 3a.

- Micro expressions (MEs) indicate more spontaneous and subtle facial movements that occur involuntarily. They tend to reveal a person’s genuine and underlying emotions within a short period of time. Figure 3c shows some examples of MEs.

- Facial action units (AUs) code the fundamental actions (46 AUs) of individual muscles or groups of muscles typically seen when producing the facial expressions of a particular emotion [17], as shown in Figure 3d. To recognize facial emotions, individual AUs are detected, and the system classifies the expression category according to the combination of detected AUs. For example, if an algorithm annotates an image with AUs 1, 2, 25, and 26, the system classifies it as expressing the “surprised” category, as indicated in Table 1.
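As an illustration of the geometric landmark features described above, the sketch below turns a list of (x, y) landmark coordinates into a vector of pairwise Euclidean distances. Using distances (rather than raw coordinate differences) and normalizing by one reference pair, hypothetically the two outer eye corners stored at indices 0 and 1, are assumptions made for this example; the function name `pairwise_distance_features` is likewise hypothetical.

```python
import math
from itertools import combinations

def pairwise_distance_features(landmarks, ref_pair=(0, 1)):
    """Return the distance between every unordered pair of (x, y) landmarks.

    Each distance is divided by the distance between the two landmarks in
    ref_pair (assumed here to be the outer eye corners), so the feature
    vector does not change when the whole face is scaled.
    """
    ref = math.dist(landmarks[ref_pair[0]], landmarks[ref_pair[1]])
    if ref == 0:
        raise ValueError("reference landmarks coincide")
    return [math.dist(p, q) / ref for p, q in combinations(landmarks, 2)]

# Four toy landmarks give 4 * 3 / 2 = 6 pairwise features.
toy = [(0.0, 0.0), (2.0, 0.0), (1.0, 1.0), (1.0, 3.0)]
features = pairwise_distance_features(toy)
print(len(features))  # 6
```

With n landmarks the vector has n(n − 1)/2 entries; a 68-point landmark model, for example, would yield 2278 features, so selecting a subset of informative pairs keeps the vector compact.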
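The AU-combination rule described above can be sketched as a lookup against Table 1. The snippet copies a subset of the Table 1 rows; scoring each category by the Jaccard overlap between the detected AUs and the category's AU set, so that partial detections still map to the closest category, is an assumption of this sketch and not the decision method of [17]. The name `classify_by_aus` is hypothetical.

```python
# A subset of Table 1: prototypical AUs for several emotion categories.
AU_TABLE = {
    "happy": {12, 25},
    "sad": {4, 15},
    "fearful": {1, 4, 20, 25},
    "angry": {4, 7, 24},
    "surprised": {1, 2, 25, 26},
    "disgusted": {9, 10, 17},
    "happily surprised": {1, 2, 12, 25},
    "happily fearful": {1, 2, 12, 25, 26},
    "sadly surprised": {1, 4, 25, 26},
    "angrily surprised": {4, 25, 26},
    "awed": {1, 2, 5, 25},
}

def jaccard(a, b):
    """Size of the intersection divided by the size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def classify_by_aus(detected_aus):
    """Return the category whose AU set best overlaps the detected AUs."""
    detected = set(detected_aus)
    if not detected:
        return None
    return max(AU_TABLE, key=lambda category: jaccard(detected, AU_TABLE[category]))

print(classify_by_aus([1, 2, 25, 26]))  # surprised
```

An exact match scores 1.0, so the example from the text maps to “surprised”; the closest competitor, “happily fearful”, scores 0.8 because it adds AU 12.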

1.2. Contributions of this Review

- The focus is on providing a general understanding of state-of-the-art FER approaches and helping new researchers understand the essential components and trends in the FER field.

- Various standard databases that include still images and video sequences for FER use are introduced, along with their purposes and characteristics.

- Key aspects of conventional FER and deep-learning-based FER are compared in terms of accuracy and resource requirements. Although deep-learning-based FER generally achieves higher accuracy than conventional FER, it also requires substantial processing capacity, such as a graphics processing unit (GPU) and central processing unit (CPU). For this reason, many conventional FER algorithms are still used in embedded systems, including smartphones.

- A new direction and application for future FER studies are presented.

1.3. Organization of this Review

2. Conventional FER Approaches

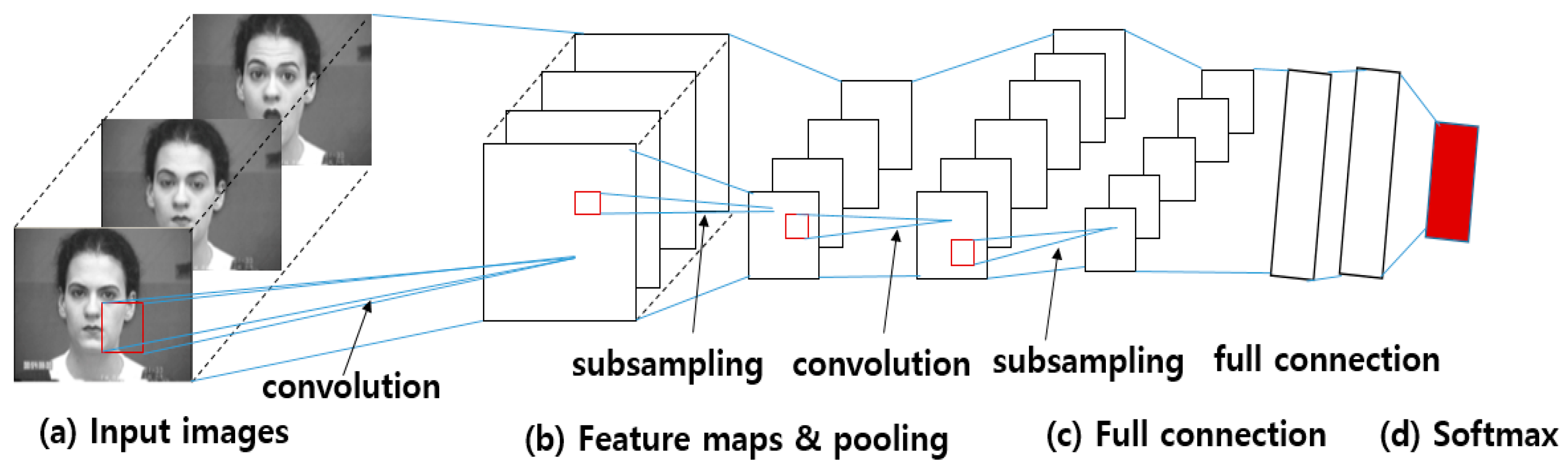

3. Deep-Learning Based FER Approaches

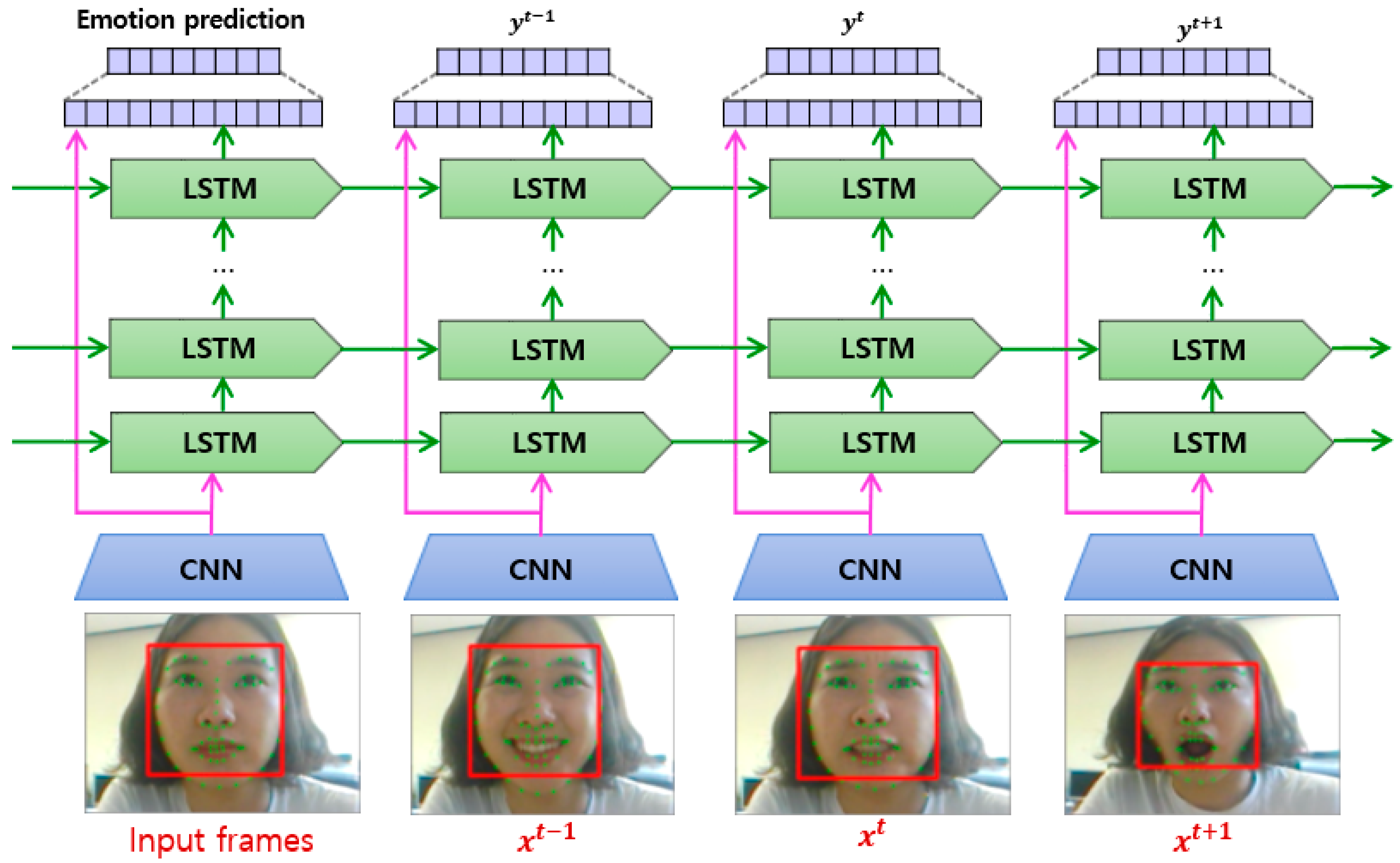

- The cell state is the horizontal line running through the top of the diagram, as shown in Figure 4. An LSTM can remove information from, or add information to, the cell state.

- A forget gate layer decides what information to discard from the cell state.

- An input gate layer decides which values will be updated in the cell, that is, which new information is stored in the cell state.

- An output gate layer filters the cell state to decide which parts of it are emitted as the output (hidden state).
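The gate behavior described above can be made concrete by writing out a single LSTM time step. This is a minimal pure-Python sketch of the standard LSTM formulation (sigmoid gates and a tanh candidate); the weight layout, in which every gate acts on the concatenation of the previous hidden state and the current input, the toy sizes, and the random initialization are assumptions for illustration, and all function names are hypothetical.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def affine(W, b, v):
    """Compute W v + b for a matrix W given as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]

def lstm_step(x, h_prev, c_prev, p):
    """Advance an LSTM cell by one time step and return (h, c)."""
    z = h_prev + x  # list concatenation: [h_{t-1}, x_t]
    f = [sigmoid(a) for a in affine(p["W_f"], p["b_f"], z)]    # forget gate: what to discard
    i = [sigmoid(a) for a in affine(p["W_i"], p["b_i"], z)]    # input gate: which values to update
    g = [math.tanh(a) for a in affine(p["W_c"], p["b_c"], z)]  # candidate values for the cell
    o = [sigmoid(a) for a in affine(p["W_o"], p["b_o"], z)]    # output gate: what to emit
    c = [ft * cp + it * gt for ft, cp, it, gt in zip(f, c_prev, i, g)]  # updated cell state
    h = [ot * math.tanh(ct) for ot, ct in zip(o, c)]                  # new hidden state (output)
    return h, c

def init_params(input_size, hidden_size, seed=0):
    """Small random weights and zero biases for the four gate layers."""
    rng = random.Random(seed)
    n = hidden_size + input_size
    p = {}
    for gate in ("f", "i", "c", "o"):
        p["W_" + gate] = [[rng.uniform(-0.1, 0.1) for _ in range(n)] for _ in range(hidden_size)]
        p["b_" + gate] = [0.0] * hidden_size
    return p

# Run a toy sequence of three 4-dimensional per-frame feature vectors.
params = init_params(input_size=4, hidden_size=3)
h, c = [0.0] * 3, [0.0] * 3
for frame in ([0.1, 0.2, 0.0, 0.5], [0.3, 0.1, 0.4, 0.2], [0.0, 0.6, 0.1, 0.1]):
    h, c = lstm_step(frame, h, c, params)
print(h)
```

Setting the forget-gate bias to a large positive value and the input-gate bias to a large negative value makes the cell carry its previous state forward unchanged, which is a quick way to see the separate roles of the two gates.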

4. Brief Introduction to FER Databases

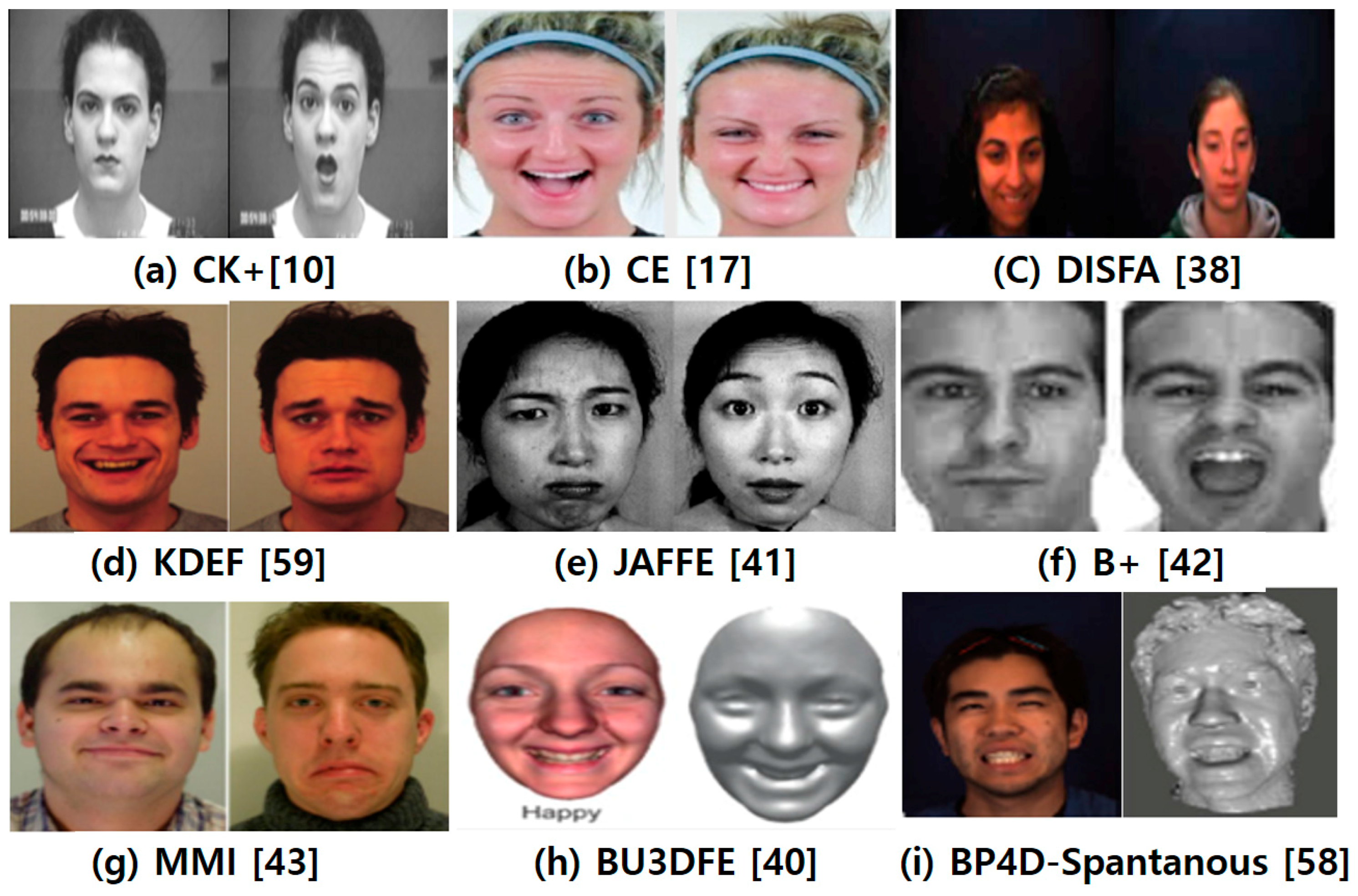

- The Extended Cohn-Kanade Dataset (CK+) [10]: CK+ contains 593 video sequences of both posed and non-posed (spontaneous) emotions, along with additional types of metadata. Its 123 subjects range in age from 18 to 30 years, and most of them are female. Image sequences may be analyzed for both action units and prototypic emotions. The dataset provides protocols and baseline results for facial feature tracking, AUs, and emotion recognition. The images have pixel resolutions of 640 × 480 and 640 × 490 with 8-bit precision for gray-scale values.

- Compound Emotion (CE) [17]: CE contains 5060 images corresponding to 22 categories of basic and compound emotions for its 230 human subjects (130 females and 100 males, mean age of 23). The subjects span many ethnicities and races, including Caucasian, Asian, African, and Hispanic. Facial occlusions are minimized: there are no glasses or facial hair. Male subjects were asked to shave their faces as cleanly as possible, and all participants were asked to uncover their foreheads to fully show their eyebrows. The photographs are color images taken using a Canon IXUS with a pixel resolution of 3000 × 4000.

- Denver Intensity of Spontaneous Facial Action Database (DISFA) [38]: DISFA consists of 130,000 stereo video frames at high resolution (1024 × 768 pixels) of 27 adult subjects (12 females and 15 males) of different ethnicities. The intensities of the AUs (on a 0–5 scale) for all video frames were manually scored by two human FACS experts. The database also includes 66 facial landmark points for each image.

- Binghamton University 3D Facial Expression (BU-3DFE) [40]: Whereas FER has commonly relied on 2D still images of faces, Yin et al. [40] at Binghamton University proposed a database of annotated 3D facial expressions, BU-3DFE. It was designed for research on 3D human faces and facial expressions and for developing a general understanding of human behavior. It contains a total of 100 subjects (56 females and 44 males) displaying six emotions. There are 25 3D facial emotion models per subject in the database, and a set of 83 manually annotated facial landmarks is associated with each model. The original size of each facial image is 1040 pixels × 1329 pixels.

- Japanese Female Facial Expressions (JAFFE) [41]: The JAFFE database contains 213 images of seven facial emotions (six basic facial emotions and one neutral) posed by ten different female Japanese models. Each image was rated on six emotional adjectives by 60 Japanese subjects. The original size of each facial image is 256 pixels × 256 pixels.

- Extended Yale B face (B+) [42]: This database consists of 16,128 facial images of 28 distinct subjects, each image taken under a single light source, covering 576 viewing conditions per subject (nine poses for each of 64 illumination conditions). The original size of each facial image is 320 pixels × 243 pixels.

- MMI [43]: MMI consists of over 2900 video sequences and high-resolution still images of 75 subjects. It is fully annotated for the presence of AUs in the video sequences (event coding) and partially coded at the frame level, indicating for each frame whether an AU is in the neutral, onset, apex, or offset phase. It contains a total of 238 video sequences of 28 subjects, both male and female. The original size of each facial image is 720 pixels × 576 pixels.

- Binghamton-Pittsburgh 3D Dynamic Spontaneous (BP4D-Spontaneous) [58]: BP4D-Spontaneous is a 3D video database of spontaneous facial expressions from a diverse group of 41 young adults (23 women, 18 men) aged 18–29 years. Eleven are Asian, six are African-American, four are Hispanic, and 20 are Euro-American. The facial features were tracked in the 2D and 3D domains using both person-specific and generic approaches. The database promotes the exploration of 3D spatiotemporal features during subtle facial expressions, for a better understanding of the relation between pose and motion dynamics in facial AUs and a deeper understanding of naturally occurring facial actions. The original size of each facial image is 1040 pixels × 1329 pixels.

- The Karolinska Directed Emotional Faces (KDEF) [59]: This database contains 4900 images of human emotional facial expressions. It consists of 70 individuals, each displaying seven different emotional expressions photographed from five different angles, with every expression captured in two separate sessions. The original size of each facial image is 562 pixels × 762 pixels.
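The image and subject counts quoted above can be cross-checked against their stated factors. For KDEF, the factor of 2 reflects the two photo sessions in which each expression was captured, a detail of the database release rather than something derived here.

$$
\begin{aligned}
\text{Extended Yale B: } & 28 \times (9 \times 64) = 28 \times 576 = 16{,}128 \ \text{images} \\
\text{KDEF: } & 70 \times 7 \times 5 \times 2 = 4900 \ \text{images} \\
\text{BP4D-Spontaneous: } & 11 + 6 + 4 + 20 = 23 + 18 = 41 \ \text{subjects}
\end{aligned}
$$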

5. Performance Evaluation of FER

5.1. Subject-Independent and Cross-Database Tasks

5.2. Evaluation Metrics

5.3. Evaluation Results

- A large-scale dataset and massive computing power are required for training as the structure becomes increasingly deep.

- Large numbers of manually collected and labeled datasets are needed.

- Large amounts of memory are required, and both training and testing are time consuming. These memory demands and this computational complexity make deep learning ill-suited for deployment on mobile platforms with limited resources [73].

- Considerable skill and experience are required to select suitable hyperparameters, such as the learning rate, the kernel sizes of the convolutional filters, and the number of layers. These hyperparameters have internal dependencies that make them particularly expensive to tune.

- Although CNNs work quite well in various applications, a solid theory of CNNs is still lacking, so users essentially do not know why or how they work.
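To give a sense of the memory demands noted above, the sketch below counts the trainable parameters of a small, purely hypothetical CNN for 48 × 48 gray-scale faces and seven emotion classes. The architecture (three 3 × 3 convolutions, each assumed to be followed by 2 × 2 pooling, then two fully connected layers) is invented for illustration. Only weights and biases are counted; activations, gradients, and optimizer state, which add substantially to training memory, are not included.

```python
def conv2d_params(kernel, c_in, c_out, bias=True):
    """Weights (kernel x kernel x c_in x c_out) plus one bias per output channel."""
    return kernel * kernel * c_in * c_out + (c_out if bias else 0)

def dense_params(n_in, n_out, bias=True):
    """Weights (n_in x n_out) plus one bias per output unit."""
    return n_in * n_out + (n_out if bias else 0)

# Hypothetical CNN: 48x48x1 input; each conv is assumed to be followed by 2x2 pooling
# (which adds no parameters), so the spatial size goes 48 -> 24 -> 12 -> 6 before flattening.
layers = {
    "conv1 3x3, 1->32": conv2d_params(3, 1, 32),
    "conv2 3x3, 32->64": conv2d_params(3, 32, 64),
    "conv3 3x3, 64->128": conv2d_params(3, 64, 128),
    "fc1 6*6*128->256": dense_params(6 * 6 * 128, 256),
    "fc2 256->7": dense_params(256, 7),
}
total = sum(layers.values())
for name, count in layers.items():
    print(f"{name:22s} {count:>10,d}")
print(total)  # 1274375
print(f"{total * 4 / 2**20:.2f} MiB as float32")  # 4.86 MiB as float32
```

In this toy network the first fully connected layer holds about 93% of the weights (1,179,904 of 1,274,375), which is one reason compact designs often replace it with global pooling.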

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Mehrabian, A. Communication without words. Psychol. Today 1968, 2, 53–56.

- Kaulard, K.; Cunningham, D.W.; Bülthoff, H.H.; Wallraven, C. The MPI facial expression database—A validated database of emotional and conversational facial expressions. PLoS ONE 2012, 7, e32321.

- Dornaika, F.; Raducanu, B. Efficient facial expression recognition for human robot interaction. In Proceedings of the 9th International Work-Conference on Artificial Neural Networks on Computational and Ambient Intelligence, San Sebastián, Spain, 20–22 June 2007; pp. 700–708.

- Bartneck, C.; Lyons, M.J. HCI and the face: Towards an art of the soluble. In Proceedings of the International Conference on Human-Computer Interaction: Interaction Design and Usability, Beijing, China, 22–27 July 2007; pp. 20–29.

- Hickson, S.; Dufour, N.; Sud, A.; Kwatra, V.; Essa, I.A. Eyemotion: Classifying facial expressions in VR using eye-tracking cameras. arXiv, 2017; arXiv:1707.07204v2.

- Chen, C.H.; Lee, I.J.; Lin, L.Y. Augmented reality-based self-facial modeling to promote the emotional expression and social skills of adolescents with autism spectrum disorders. Res. Dev. Disabil. 2015, 36, 396–403.

- Assari, M.A.; Rahmati, M. Driver drowsiness detection using face expression recognition. In Proceedings of the IEEE International Conference on Signal and Image Processing Applications, Kuala Lumpur, Malaysia, 16–18 November 2011; pp. 337–341.

- Zhan, C.; Li, W.; Ogunbona, P.; Safaei, F. A real-time facial expression recognition system for online games. Int. J. Comput. Games Technol. 2008, 2008.

- Mourão, A.; Magalhães, J. Competitive affective gaming: Winning with a smile. In Proceedings of the ACM International Conference on Multimedia, Barcelona, Spain, 21–25 October 2013; pp. 83–92.

- Lucey, P.; Cohn, J.F.; Kanade, T.; Saragih, J.; Ambadar, Z.; Matthews, I. The extended Cohn-Kanade Dataset (CK+): A complete dataset for action unit and emotion-specified expression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, San Francisco, CA, USA, 13–18 June 2010; pp. 94–101.

- Kahou, S.E.; Michalski, V.; Konda, K. Recurrent neural networks for emotion recognition in video. In Proceedings of the ACM on International Conference on Multimodal Interaction, Seattle, WA, USA, 9–13 November 2015; pp. 467–474.

- Walecki, R.; Rudovic, O. Deep structured learning for facial expression intensity estimation. Image Vis. Comput. 2017, 259, 143–154.

- Kim, D.H.; Baddar, W.; Jang, J.; Ro, Y.M. Multi-objective based Spatio-temporal feature representation learning robust to expression intensity variations for facial expression recognition. IEEE Trans. Affect. Comput. 2017, PP.

- Ekman, P.; Friesen, W.V. Facial Action Coding System: Investigator’s Guide, 1st ed.; Consulting Psychologists Press: Palo Alto, CA, USA, 1978; pp. 1–15. ISBN 9993626619.

- Hamm, J.; Kohler, C.G.; Gur, R.C.; Verma, R. Automated facial action coding system for dynamic analysis of facial expressions in neuropsychiatric disorders. J. Neurosci. Methods 2011, 200, 237–256.

- Jeong, M.; Kwak, S.Y.; Ko, B.C.; Nam, J.Y. Driver facial landmark detection in real driving situation. IEEE Trans. Circuits Syst. Video Technol. 2017, 99, 1–15.

- Tao, S.Y.; Martinez, A.M. Compound facial expressions of emotion. Natl. Acad. Sci. 2014, 111, E1454–E1462.

- Benitez-Quiroz, C.F.; Srinivasan, R.; Martinez, A.M. EmotioNet: An accurate, real-time algorithm for the automatic annotation of a million facial expressions in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 5562–5570.

- Kolakowaska, A. A review of emotion recognition methods based on keystroke dynamics and mouse movements. In Proceedings of the 6th International Conference on Human System Interaction, Gdansk, Poland, 6–8 June 2013; pp. 548–555.

- Kumar, S. Facial expression recognition: A review. In Proceedings of the National Conference on Cloud Computing and Big Data, Shanghai, China, 4–6 November 2015; pp. 159–162.

- Ghayoumi, M. A quick review of deep learning in facial expression. J. Commun. Comput. 2017, 14, 34–38.

- Suk, M.; Prabhakaran, B. Real-time mobile facial expression recognition system—A case study. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Columbus, OH, USA, 24–27 June 2014; pp. 132–137.

- Ghimire, D.; Lee, J. Geometric feature-based facial expression recognition in image sequences using multi-class AdaBoost and support vector machines. Sensors 2013, 13, 7714–7734.

- Happy, S.L.; George, A.; Routray, A. A real time facial expression classification system using local binary patterns. In Proceedings of the 4th International Conference on Intelligent Human Computer Interaction, Kharagpur, India, 27–29 December 2012; pp. 1–5.

- Siddiqi, M.H.; Ali, R.; Khan, A.M.; Park, Y.T.; Lee, S. Human facial expression recognition using stepwise linear discriminant analysis and hidden conditional random fields. IEEE Trans. Image Proc. 2015, 24, 1386–1398.

- Khan, R.A.; Meyer, A.; Konik, H.; Bouakaz, S. Framework for reliable, real-time facial expression recognition for low resolution images. Pattern Recognit. Lett. 2013, 34, 1159–1168.

- Ghimire, D.; Jeong, S.; Lee, J.; Park, S.H. Facial expression recognition based on local region specific features and support vector machines. Multimed. Tools Appl. 2017, 76, 7803–7821.

- Torre, F.D.; Chu, W.-S.; Xiong, X.; Vicente, F.; Ding, X.; Cohn, J. IntraFace. In Proceedings of the IEEE International Conference on Automatic Face and Gesture Recognition, Ljubljana, Slovenia, 4–8 May 2015; pp. 1–8.

- Polikovsky, S.; Kameda, Y.; Ohta, Y. Facial micro-expressions recognition using high speed camera and 3D-gradient descriptor. In Proceedings of the 3rd International Conference on Crime Detection and Prevention, London, UK, 3 December 2009; pp. 1–6.

- Sandbach, G.; Zafeiriou, S.; Pantic, M.; Yin, L. Static and dynamic 3D facial expression recognition: A comprehensive survey. Image Vis. Comput. 2012, 30, 683–697.

- Zhao, G.; Huang, X.; Taini, M.; Li, S.Z.; Pietikäinen, M. Facial expression recognition from near-infrared videos. Image Vis. Comput. 2011, 29, 607–619.

- Shen, P.; Wang, S.; Liu, Z. Facial expression recognition from infrared thermal videos. Intell. Auton. Syst. 2013, 12, 323–333.

- Szwoch, M.; Pieniążek, P. Facial emotion recognition using depth data. In Proceedings of the 8th International Conference on Human System Interactions, Warsaw, Poland, 25–27 June 2015; pp. 271–277.

- Gunawan, A.A.S. Face expression detection on Kinect using active appearance model and fuzzy logic. Procedia Comput. Sci. 2015, 59, 268–274.

- Wei, W.; Jia, Q.; Chen, G. Real-time facial expression recognition for affective computing based on Kinect. In Proceedings of the IEEE 11th Conference on Industrial Electronics and Applications, Hefei, China, 5–7 June 2016; pp. 161–165.

- Tian, Y.; Luo, P.; Luo, X.; Wang, X.; Tang, X. Pedestrian detection aided by deep learning semantic tasks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 8–10 June 2015; pp. 5079–5093.

- Deshmukh, S.; Patwardhan, M.; Mahajan, A. Survey on real-time facial expression recognition techniques. IET Biom. 2016, 5, 155–163.

- Mavadati, S.M.; Mahoor, M.H.; Bartlett, K.; Trinh, P.; Cohn, J. DISFA: A spontaneous facial action intensity database. IEEE Trans. Affect. Comput. 2013, 4, 151–160.

- Maalej, A.; Amor, B.B.; Daoudi, M.; Srivastava, A.; Berretti, S. Shape analysis of local facial patches for 3D facial expression recognition. Pattern Recognit. 2011, 44, 1581–1589.

- Yin, L.; Wei, X.; Sun, Y.; Wang, J.; Rosato, M.J. A 3D facial Expression database for facial behavior research. In Proceedings of the International Conference on Automatic Face and Gesture Recognition, Southampton, UK, 10–12 April 2006; pp. 211–216.

- Lyons, M.J.; Akamatsu, S.; Kamachi, M.; Gyoba, J. Coding facial expressions with Gabor wave. In Proceedings of the IEEE International Conference on Automatic Face and Gesture Recognition, Nara, Japan, 14–16 April 1998; pp. 200–205.

- B+. Available online: https://computervisiononline.com/dataset/1105138686 (accessed on 29 November 2017).

- MMI. Available online: https://mmifacedb.eu/ (accessed on 29 November 2017).

- Walecki, R.; Rudovic, O.; Pavlovic, V.; Schuller, B.; Pantic, M. Deep structured learning for facial action unit intensity estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3405–3414.

- LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Jackel, L.D. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1989, 1, 541–551.

- Ko, B.C.; Lee, E.J.; Nam, J.Y. Genetic algorithm based filter bank design for light convolutional neural network. Adv. Sci. Lett. 2016, 22, 2310–2313.

- Breuer, R.; Kimmel, R. A deep learning perspective on the origin of facial expressions. arXiv, 2017; arXiv:1705.01842.

- Jung, H.; Lee, S.; Yim, J.; Park, S.; Kim, J. Joint fine-tuning in deep neural networks for facial expression recognition. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–12 December 2015; pp. 2983–2991.

- Zhao, K.; Chu, W.S.; Zhang, H. Deep region and multi-label learning for facial action unit detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 3391–3399.

- Olah, C. Understanding LSTM Networks. Available online: http://colah.github.io/posts/2015-08-Understanding-LSTMs/ (accessed on 29 November 2017).

- Donahue, J.; Hendricks, L.A.; Rohrbach, M.; Venugopalan, S.; Guadarrama, S.; Saenko, K.; Darrell, T. Long-term recurrent convolutional networks for visual recognition and description. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 677–691.

- Ng, H.W.; Nguyen, V.D.; Vonikakis, V.; Winkler, S. Deep learning for emotion recognition on small datasets using transfer learning. In Proceedings of the 17th ACM International Conference on Multimodal Interaction, Emotion Recognition in the Wild Challenge, Seattle, WA, USA, 9–13 November 2015; pp. 1–7.

- Chu, W.S.; Torre, F.D.; Cohn, J.F. Learning spatial and temporal cues for multi-label facial action unit detection. In Proceedings of the 12th IEEE International Conference on Automatic Face and Gesture Recognition, Washington, DC, USA, 30 May–3 June 2017; pp. 1–8.

- Hasani, B.; Mahoor, M.H. Facial expression recognition using enhanced deep 3D convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Hawaii, HI, USA, 21–26 July 2017; pp. 1–11.

- Graves, A.; Mayer, C.; Wimmer, M.; Schmidhuber, J.; Radig, B. Facial expression recognition with recurrent neural networks. In Proceedings of the International Workshop on Cognition for Technical Systems, Santorini, Greece, 6–7 October 2008; pp. 1–6.

- Jain, D.K.; Zhang, Z.; Huang, K. Multi angle optimal pattern-based deep learning for automatic facial expression recognition. Pattern Recognit. Lett. 2017, 1, 1–9.

- Yan, W.J.; Li, X.; Wang, S.J.; Zhao, G.; Liu, Y.J.; Chen, Y.H.; Fu, X. CASME II: An improved spontaneous micro-expression database and the baseline evaluation. PLoS ONE 2014, 9, e86041.

- Zhang, X.; Yin, L.; Cohn, J.; Canavan, S.; Reale, M.; Horowitz, A.; Liu, P.; Girard, J. BP4D-Spontaneous: A high resolution spontaneous 3D dynamic facial expression database. Image Vis. Comput. 2014, 32, 692–706.

- KDEF. Available online: http://www.emotionlab.se/resources/kdef (accessed on 27 November 2017).

- The Large MPI Facial Expression Database. Available online: https://www.b-tu.de/en/graphic-systems/databases/the-large-mpi-facial-expression-database (accessed on 2 December 2017).

- Kohavi, R. A study of cross-validation and bootstrap for accuracy estimation and model selection. In Proceedings of the Fourteenth International Joint Conference on Artificial Intelligence, San Mateo, CA, USA, 20–25 August 1995; pp. 1137–1143.

- Ding, X.; Chu, W.S.; Torre, F.D.; Cohn, J.F.; Wang, Q. Facial action unit event detection by cascade of tasks. In Proceedings of the IEEE International Conference Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 2400–2407.

- Huang, M.H.; Wang, Z.W.; Ying, Z.L. A new method for facial expression recognition based on sparse representation plus LBP. In Proceedings of the International Congress on Image and Signal Processing, Yantai, China, 16–18 October 2010; pp. 1750–1754.

- Zhen, W.; Zilu, Y. Facial expression recognition based on local phase quantization and sparse representation. In Proceedings of the IEEE International Conference on Natural Computation, Chongqing, China, 29–31 May 2012; pp. 222–225.

- Zhang, S.; Zhao, X.; Lei, B. Robust facial expression recognition via compressive sensing. Sensors 2012, 12, 3747–3761.

- Zhao, G.; Pietikainen, M. Dynamic texture recognition using local binary patterns with an application to facial expressions. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 915–928.

- Jiang, B.; Valstar, M.F.; Pantic, M. Action unit detection using sparse appearance descriptors in space-time video volumes. In Proceedings of the IEEE International Conference and Workshops on Automatic Face & Gesture Recognition, Santa Barbara, CA, USA, 21–25 March 2011; pp. 314–321.

- Lee, S.H.; Baddar, W.J.; Ro, Y.M. Collaborative expression representation using peak expression and intra class variation face images for practical subject-independent emotion recognition in videos. Pattern Recognit. 2016, 54, 52–67.

- Liu, M.; Li, S.; Shan, S.; Wang, R.; Chen, X. Deeply learning deformable facial action parts model for dynamic expression analysis. In Proceedings of the Asian Conference on Computer Vision, Singapore, 1–5 November 2014; pp. 143–157.

- Liu, M.; Li, S.; Shan, S.; Chen, X. Au-aware deep networks for facial expression recognition. In Proceedings of the IEEE International Conference and Workshops on Automatic Face and Gesture Recognition, Shanghai, China, 22–26 April 2013; pp. 1–6.

- Liu, M.; Li, S.; Shan, S.; Chen, X. AU-inspired deep networks for facial expression feature learning. Neurocomputing 2015, 159, 126–136.

- Mollahosseini, A.; Chan, D.; Mahoor, M.H. Going deeper in facial expression recognition using deep neural networks. In Proceedings of the IEEE Winter Conference on Application of Computer Vision, Lake Placid, NY, USA, 7–9 March 2016; pp. 1–10.

- Gu, J.; Wang, Z.; Kuen, J.; Ma, L.; Shahroudy, A.; Shuai, B.; Liu, T.; Wang, X.; Wang, L.; Wang, G.; et al. Recent advances in convolutional neural networks. Pattern Recognit. 2017, 1, 1–24.

| Category | AUs | Category | AUs |
|---|---|---|---|
| Happy | 12, 25 | Sadly disgusted | 4, 10 |
| Sad | 4, 15 | Fearfully angry | 4, 20, 25 |
| Fearful | 1, 4, 20, 25 | Fearfully surprised | 1, 2, 5, 20, 25 |
| Angry | 4, 7, 24 | Fearfully disgusted | 1, 4, 10, 20, 25 |
| Surprised | 1, 2, 25, 26 | Angrily surprised | 4, 25, 26 |
| Disgusted | 9, 10, 17 | Disgustedly surprised | 1, 2, 5, 10 |
| Happily sad | 4, 6, 12, 25 | Happily fearful | 1, 2, 12, 25, 26 |
| Happily surprised | 1, 2, 12, 25 | Angrily disgusted | 4, 10, 17 |
| Happily disgusted | 10, 12, 25 | Awed | 1, 2, 5, 25 |
| Sadly fearful | 1, 4, 15, 25 | Appalled | 4, 9, 10 |
| Sadly angry | 4, 7, 15 | Hatred | 4, 7, 10 |
| Sadly surprised | 1, 4, 25, 26 | - | - |

| Reference | Emotions Analyzed | Visual Features | Decision Methods | Database |
|---|---|---|---|---|
| Compound emotion [17] | Seven emotions and 22 compound emotions | | Nearest-mean classifier, Kernel subclass discriminant analysis | CE [17] |
| EmotioNet [18] | 23 basic and compound emotions | | Kernel subclass discriminant analysis | CE [17], CK+ [10], DISFA [38] |
| Real-time mobile [22] | Seven emotions | | SVM | CK+ [10] |
| Ghimire and Lee [23] | Seven emotions | | Multi-class AdaBoost, SVM | CK+ [10] |
| Global Feature [24] | Six emotions | | Principal component analysis (PCA) | Self-generated |
| Local region specific feature [33] | Seven emotions | | SVM | CK+ [10] |
| IntraFace [34] | Seven emotions, 17 AUs detected | | A linear SVM | CK+ [10] |
| 3D facial expression [39] | Six prototypical emotions | | Multiboosting and SVM | BU-3DFE [40] |
| Stepwise approach [31] | Six prototypical emotions | | Hidden conditional random fields (HCRFs) | CK+ [10], JAFFE [41], B+ [42], MMI [43] |

| Reference | Emotions Analyzed | Recognition Algorithm | Database |
|---|---|---|---|
| Hybrid CNN-RNN [11] | Seven emotions | | EmotiW [52] |
| Kim et al. [13] | Six emotions | | MMI [43], CASME II [57] |
| Breuer and Kimmel [47] | Eight emotions, 50 AU detection | | CK+ [10], NovaEmotions [47] |
| Joint Fine-Tuning [48] | Seven emotions | | CK+ [10], MMI [43] |
| DRML [49] | 12 AUs for BP4D, eight AUs for DISFA | | DISFA [38], BP4D [58] |
| Multi-level AU [53] | 12 AU detection | | BP4D [58] |
| 3D Inception-ResNet [54] | 23 basic and compound emotions | | CK+ [10], DISFA [38] |
| Candide-3 [55] | Six emotions | | CK+ [10] |
| Multi-angle FER [56] | Six emotions | | CK+ [10], MMI [43] |

| Database | Data Configuration | Web Link |
|---|---|---|
| CK+ [10] | | http://www.consortium.ri.cmu.edu/ckagree/ |
| CE [17] | | http://cbcsl.ece.ohio-state.edu/dbform_compound.html |
| DISFA [38] | | http://www.engr.du.edu/mmahoor/DISFA.htm |
| BU-3DFE [40] | | http://www.cs.binghamton.edu/~lijun/Research/3DFE/3DFE_Analysis.html |
| JAFFE [41] | | http://www.kasrl.org/jaffe_info.html |
| B+ [42] | | http://vision.ucsd.edu/content/extended-yale-face-database-b-b |
| MMI [43] | | https://mmifacedb.eu/ |
| BP4D-Spontaneous [58] | | http://www.cs.binghamton.edu/~lijun/Research/3DFE/3DFE_Analysis.html |
| KDEF [59] | | http://www.emotionlab.se/resources/kdef |

| Type | Brief Description of Main Algorithms | Input | Accuracy (%) |
|---|---|---|---|
| Conventional (handcrafted-feature) FER approaches | | | |
| | | Still frame | 59.18 |
| | | Still frame | 62.72 |
| | | Still frame | 61.89 |
| | | Sequence | 61.19 |
| | | Sequence | 64.11 |
| | | Still frame | 70.12 |
| Average | | | 63.20 |
| Deep-learning-based FER approaches | | | |
| | | Sequence | 63.40 |
| | | Sequence | 70.24 |
| | | Still frame | 69.88 |
| | | Still frame | 75.85 |
| | | Still frame | 77.90 |
| | | Sequence | 78.61 |
| Average | | | 72.65 |
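Each average in the accuracy comparison above is the arithmetic mean of the six accuracies in its group, which a few lines of Python reproduce from the listed values.

```python
from statistics import mean

# Accuracies (%) copied from the table, in row order.
conventional = [59.18, 62.72, 61.89, 61.19, 64.11, 70.12]
deep_learning = [63.40, 70.24, 69.88, 75.85, 77.90, 78.61]

conv_avg = mean(conventional)
deep_avg = mean(deep_learning)
print(f"{conv_avg:.2f}")  # 63.20
print(f"{deep_avg:.2f}")  # 72.65
print(f"{deep_avg - conv_avg:.1f}")  # 9.4
```

The gap of roughly 9.4 percentage points averages over different methods, inputs, and protocols, so it indicates a trend rather than a controlled head-to-head comparison.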

© 2018 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Ko, B.C. A Brief Review of Facial Emotion Recognition Based on Visual Information. Sensors 2018, 18, 401. https://doi.org/10.3390/s18020401
