Radar HRRP Target Recognition Based on Stacked Autoencoder and Extreme Learning Machine

Abstract

1. Introduction

2. Theoretical Background

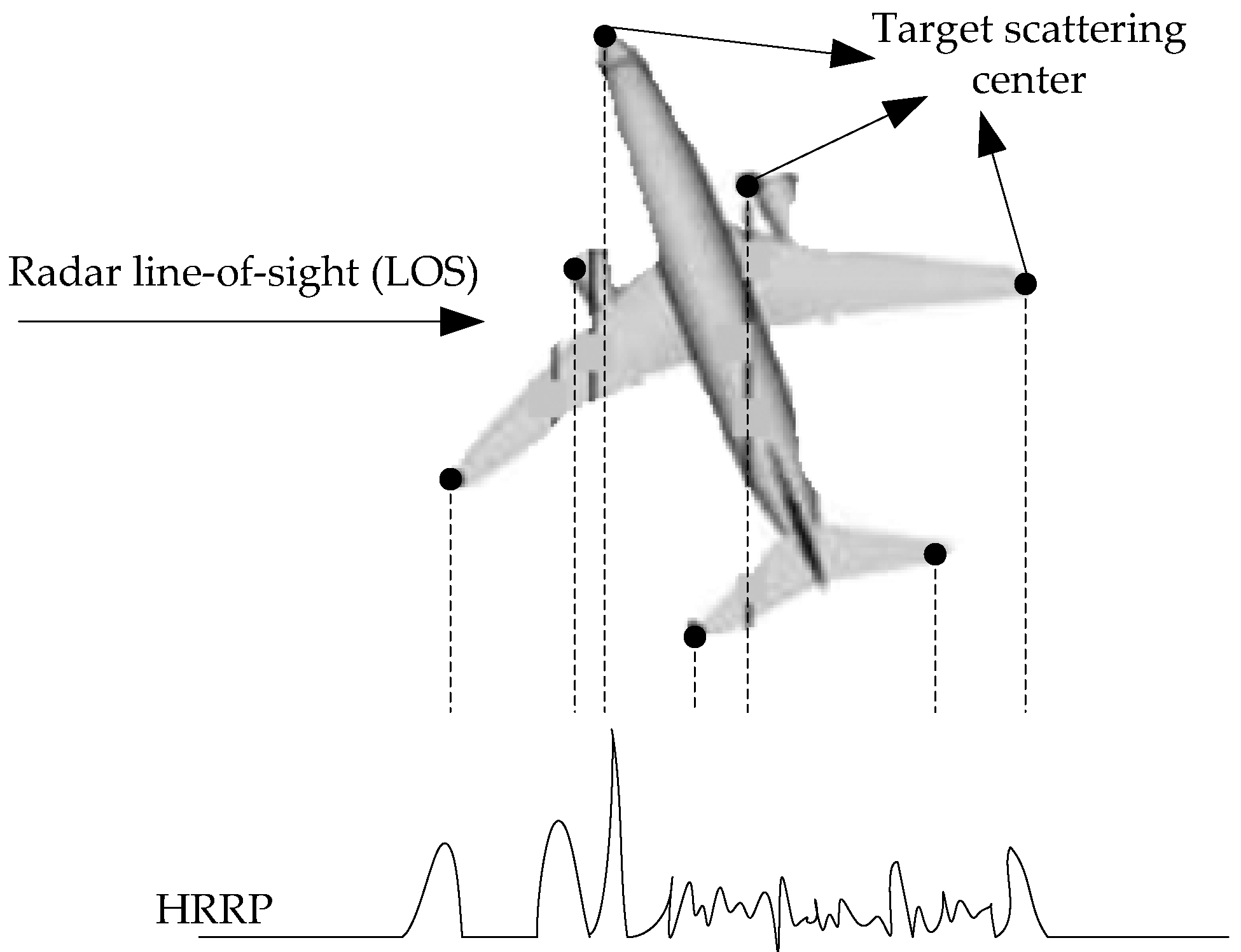

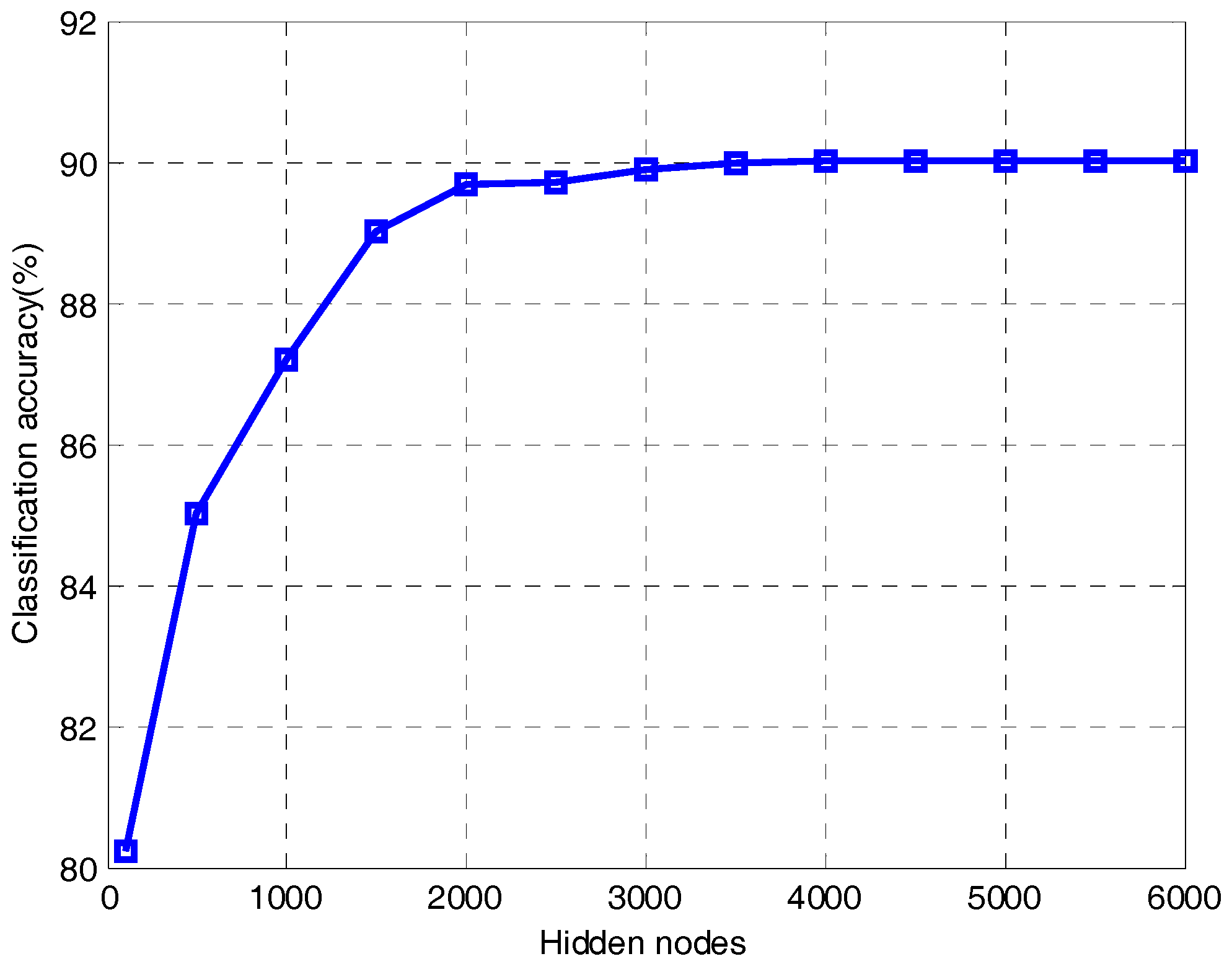

2.1. Description of HRRP

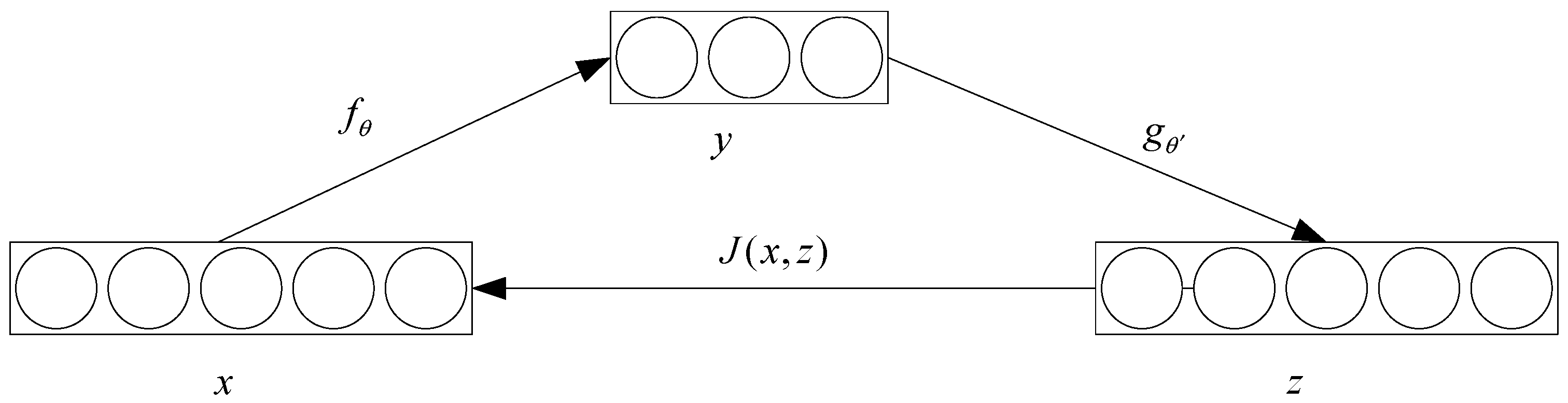

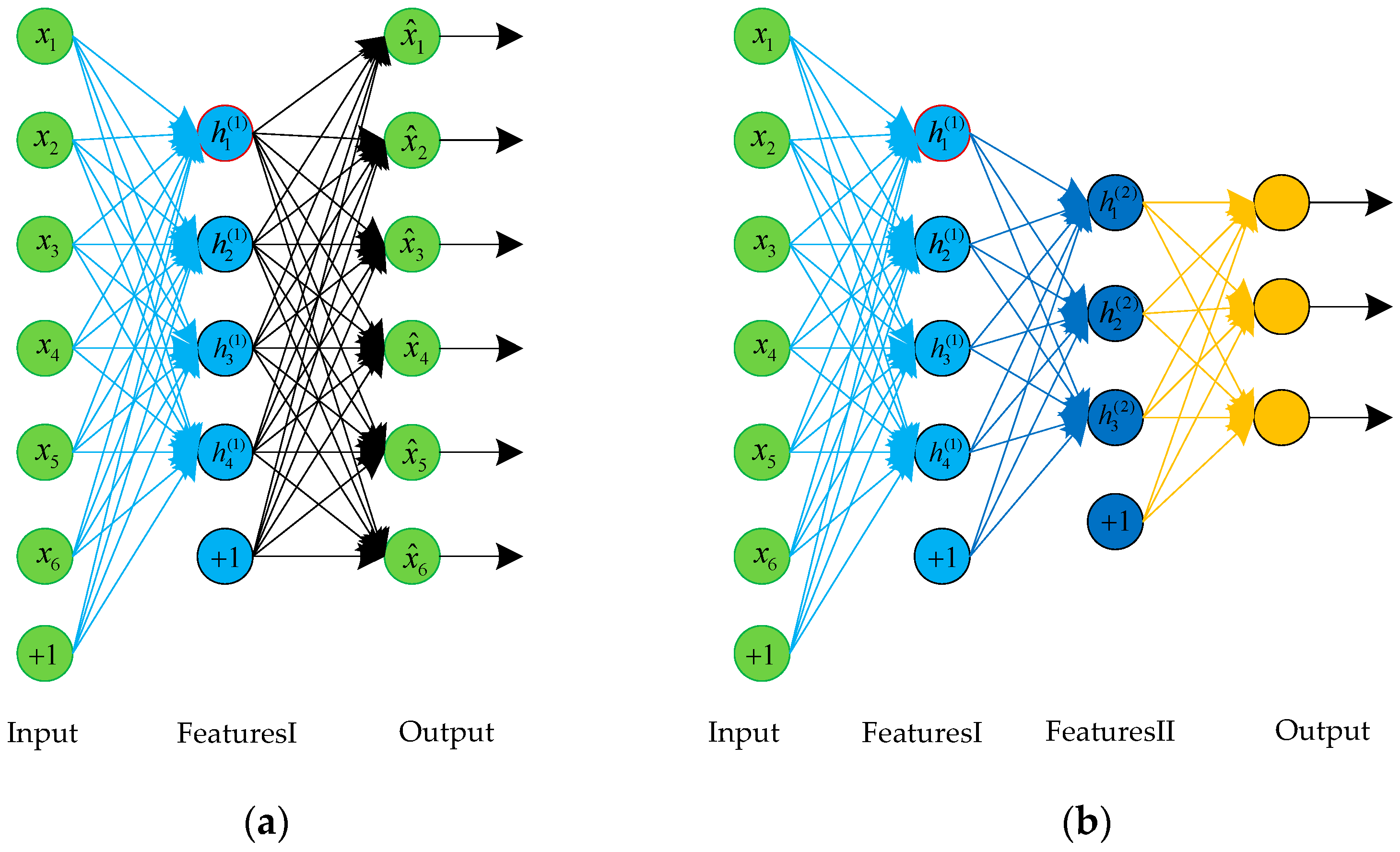

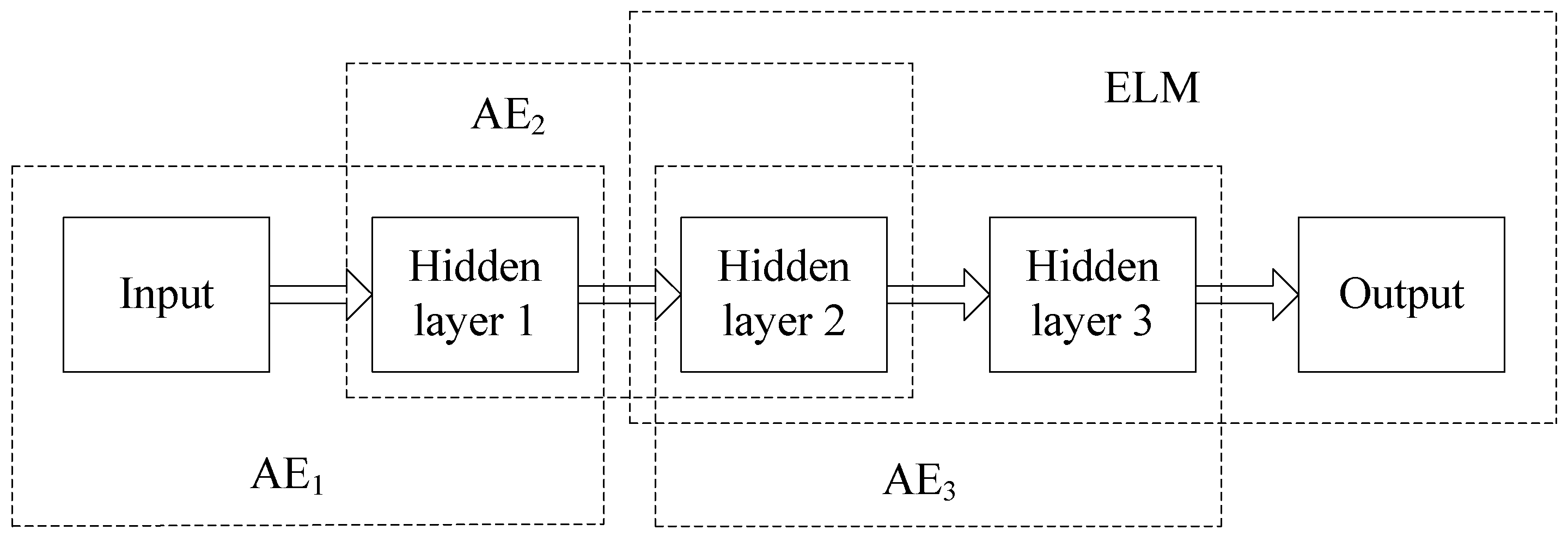

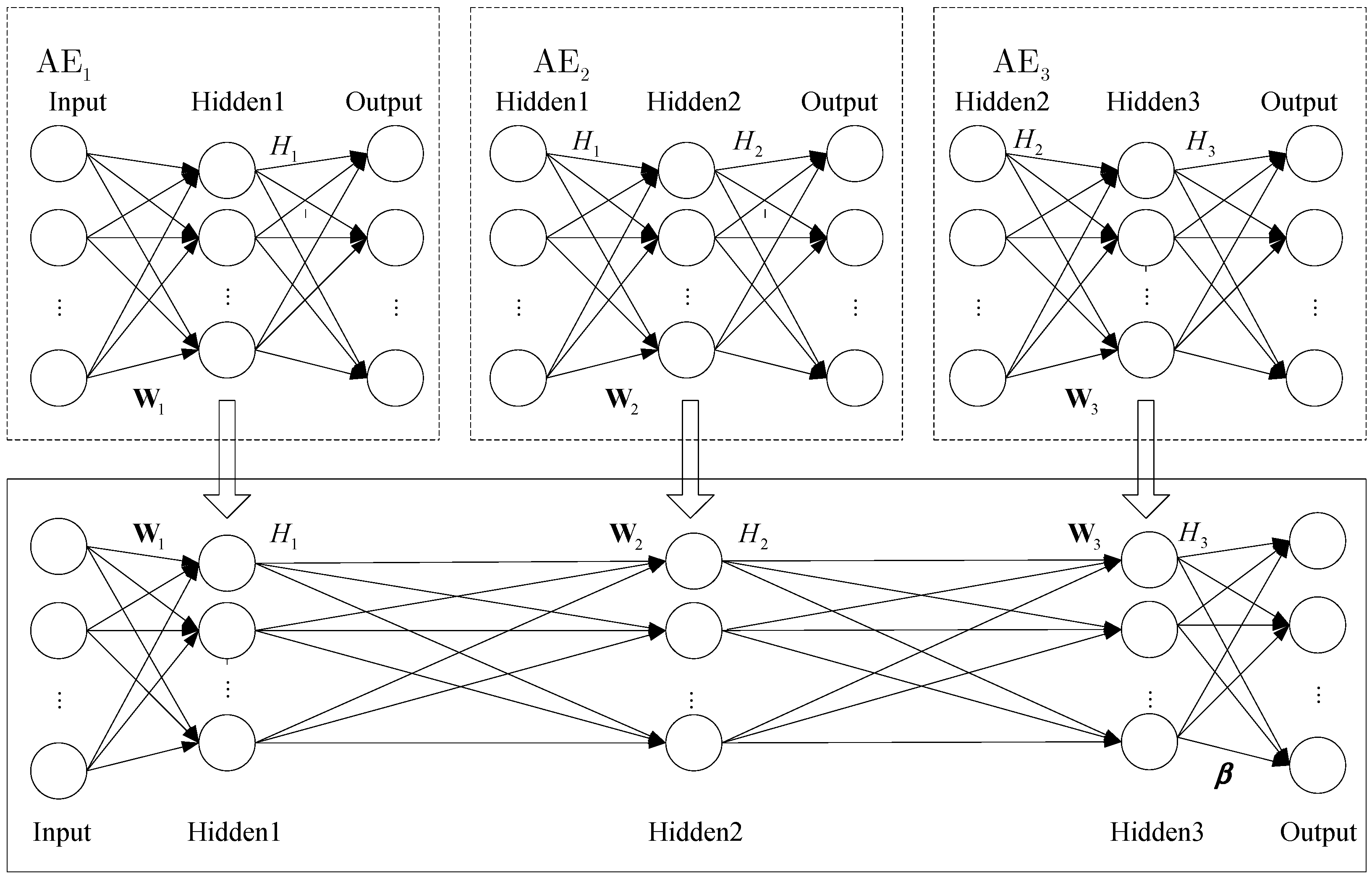

2.2. Stacked Autoencoder

2.3. Extreme Learning Machine

| Algorithm 1: ELM |
|---|
| Input: a training set of sample-target pairs, an activation function, and the number of hidden nodes. |
| Output: the output weight vector. |
| (1): Assign random values to the input weights and the hidden layer biases; |
| (2): Calculate the hidden layer output matrix according to Equation (7); |
| (3): Calculate the output weight vector according to Equation (9). |
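The three steps of Algorithm 1 can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: it assumes a sigmoid activation, input weights and biases drawn uniformly from [-1, 1], and that Equation (9) is the usual Moore-Penrose pseudoinverse solution of the ELM literature. The function names `elm_train`, `elm_predict`, and `make_toy_data` are hypothetical helpers introduced here, and the toy data are synthetic, not HRRP measurements.

```python
import numpy as np


def sigmoid(z):
    """Logistic activation (assumed; Algorithm 1 leaves the choice open)."""
    return 1.0 / (1.0 + np.exp(-z))


def elm_train(X, T, n_hidden, seed=None):
    """Train a single-hidden-layer ELM.

    X: (n_samples, n_features) inputs; T: (n_samples, n_outputs) targets.
    """
    rng = np.random.default_rng(seed)
    # Step (1): random input weights and hidden-layer biases (never updated).
    W = rng.uniform(-1.0, 1.0, size=(X.shape[1], n_hidden))
    b = rng.uniform(-1.0, 1.0, size=n_hidden)
    # Step (2): hidden-layer output matrix H.
    H = sigmoid(X @ W + b)
    # Step (3): least-squares output weights via the pseudoinverse (assumed form).
    beta = np.linalg.pinv(H) @ T
    return W, b, beta


def elm_predict(X, W, b, beta):
    """Network outputs for new inputs; take argmax over columns for a class label."""
    return sigmoid(X @ W + b) @ beta


def make_toy_data(seed=0, n_per_class=50):
    """Two well-separated 2-D Gaussian blobs with one-hot targets (synthetic)."""
    rng = np.random.default_rng(seed)
    x0 = rng.normal(loc=-2.0, scale=0.5, size=(n_per_class, 2))
    x1 = rng.normal(loc=2.0, scale=0.5, size=(n_per_class, 2))
    X = np.vstack([x0, x1])
    labels = np.repeat([0, 1], n_per_class)
    return X, np.eye(2)[labels]
```

Only the output weights are fitted, and they are obtained in closed form, which is why ELM training avoids the iterative gradient updates of a back-propagation network.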

3. Stacked Autoencoder-Regularized Extreme Learning Machine

3.1. Regularized ELM Based on the Class Information of the Target

| Algorithm 2: Regularized ELM |
|---|
| Input: a training set of sample-target pairs, an activation function, and the number of hidden nodes. |
| Output: the output weight vector. |
| (1): Calculate the class-information terms of the training set, then combine them according to Equation (15); |
| (2): Assign random values to the input weights and the hidden layer biases; |
| (3): Calculate the hidden layer output matrix according to Equation (7); |
| (4): Calculate the output weight vector according to Equation (19). |
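For orientation, the sketch below shows how a regularization term changes the last step of ELM training. It is not Algorithm 2 itself: the class-information term of Equation (15) and the exact form of Equation (19) are not reproduced here, so the code substitutes the standard ridge penalty (identity matrix scaled by 1/C) known from the regularized ELM literature. The function names, the `tanh` activation, and the parameter `C` are assumptions made for illustration.

```python
import numpy as np


def hidden_output(X, W, b):
    """Hidden-layer output matrix H (tanh activation assumed)."""
    return np.tanh(X @ W + b)


def ridge_elm_train(X, T, n_hidden, C=1.0, seed=0):
    """Ridge-regularized ELM: beta = (H^T H + I / C)^{-1} H^T T.

    A class-aware variant would replace the identity matrix with a matrix
    built from class information; that substitution is NOT shown here.
    """
    rng = np.random.default_rng(seed)
    # Random, fixed input weights and biases, as in the basic ELM.
    W = rng.uniform(-1.0, 1.0, size=(X.shape[1], n_hidden))
    b = rng.uniform(-1.0, 1.0, size=n_hidden)
    H = hidden_output(X, W, b)
    # Regularized normal equations; solve() avoids forming an explicit inverse.
    A = H.T @ H + np.eye(n_hidden) / C
    beta = np.linalg.solve(A, H.T @ T)
    return W, b, beta
```

A small `C` penalizes large output weights heavily and shrinks their norm, while a large `C` approaches the unregularized least-squares solution.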

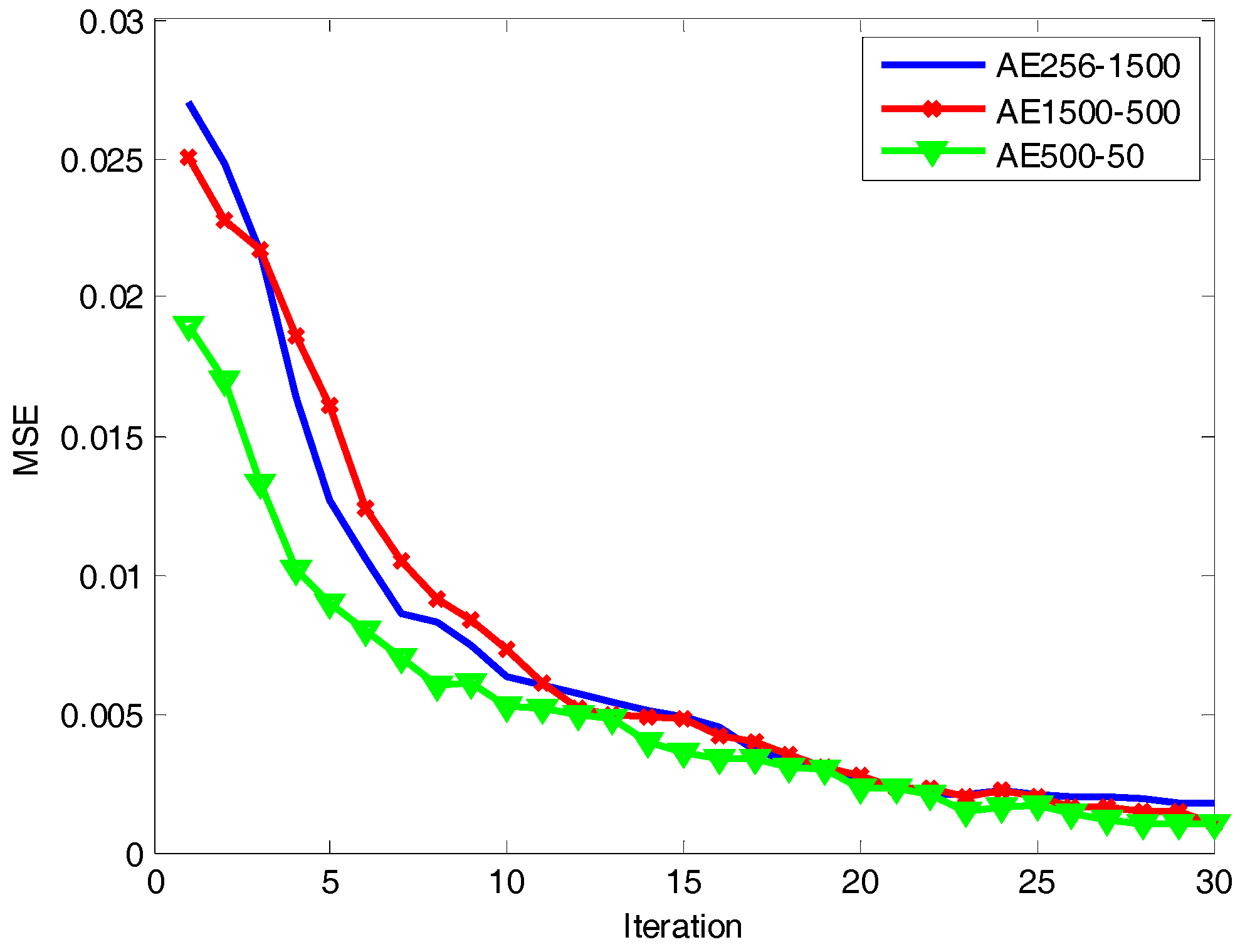

3.2. SAE-ELM

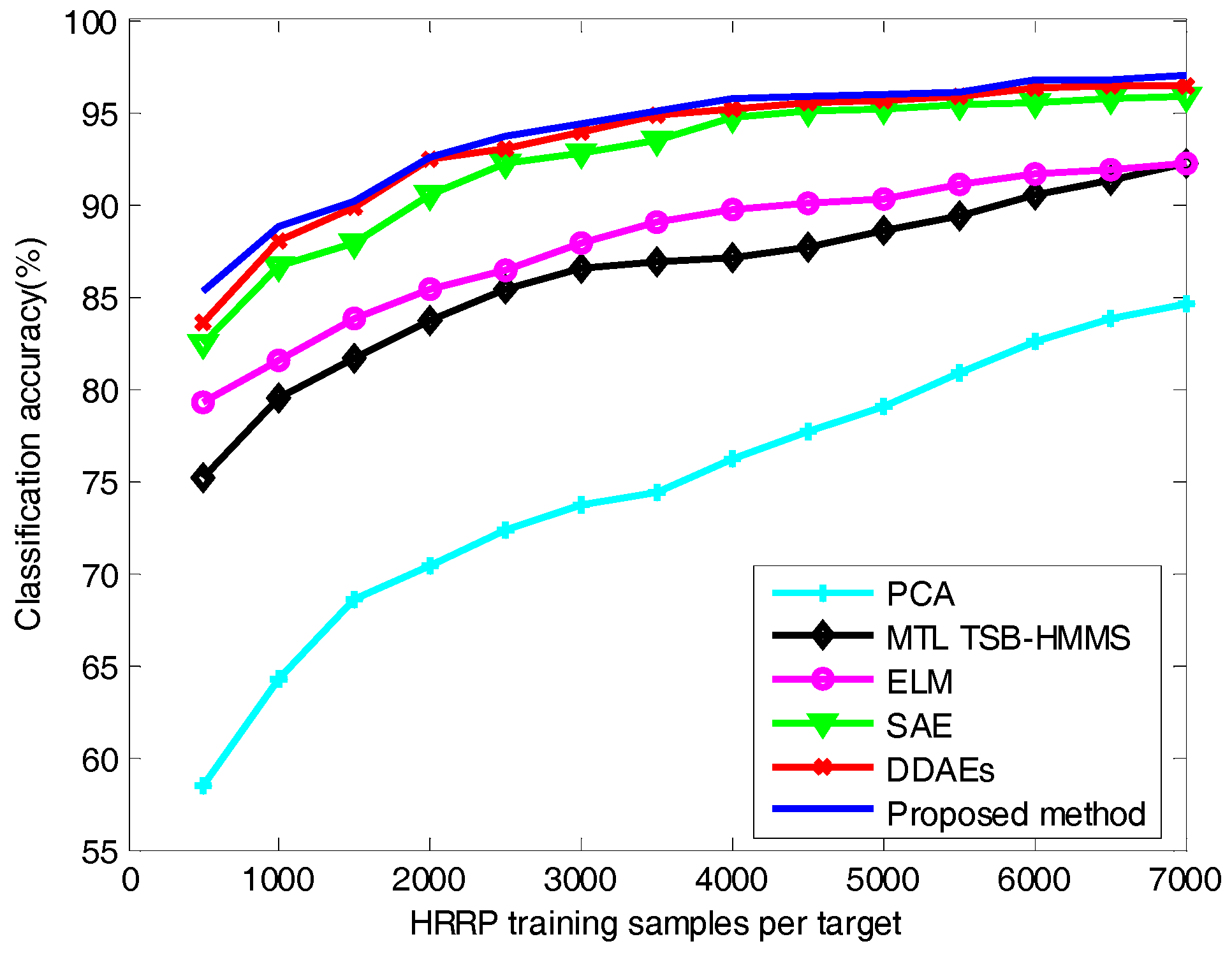

4. Experimental Results and Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


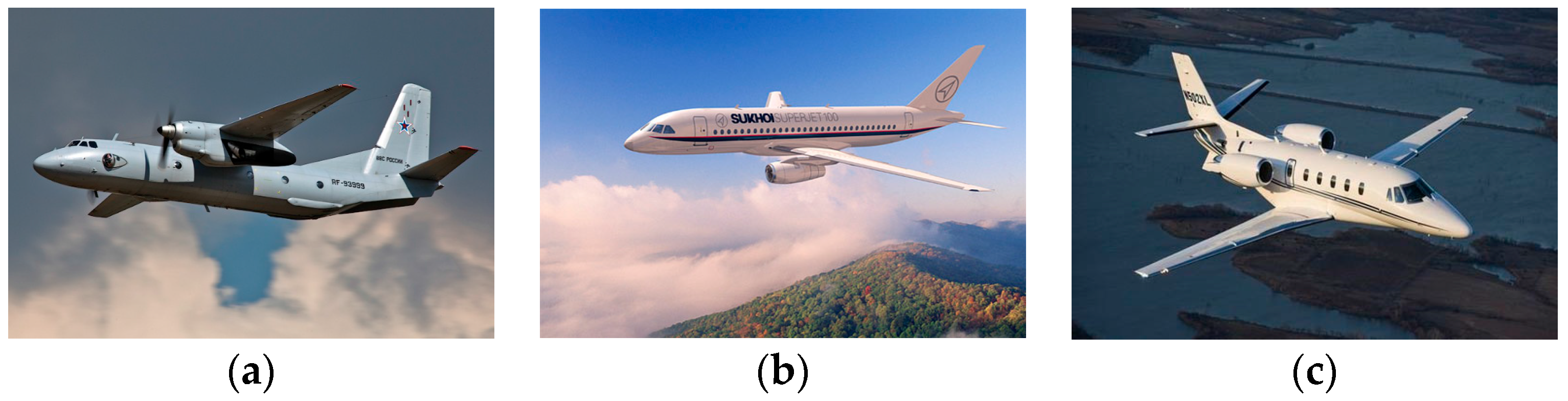

| Radar Parameter | Value |
|---|---|
| Center frequency | 5520 MHz |
| Bandwidth | 400 MHz |

| Airplane | Length (m) | Width (m) | Height (m) |
|---|---|---|---|
| An-26 | 23.80 | 29.20 | 9.83 |
| Yak-42 | 36.38 | 34.88 | 9.83 |
| Citation business jet | 14.40 | 15.90 | 4.57 |
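The bandwidth in the table fixes the nominal range resolution of the profiles through the standard relation ΔR = c / (2B). The short sketch below works this out for 400 MHz and estimates how many range cells a fuselage length would span. The cell count is an upper-bound illustration that assumes the target is viewed along its length; the function names are hypothetical, and nothing here is taken from the paper's own processing chain.

```python
C_LIGHT = 299_792_458.0  # speed of light in m/s


def range_resolution(bandwidth_hz):
    """Nominal radar range resolution in metres: c / (2 * B)."""
    return C_LIGHT / (2.0 * bandwidth_hz)


def cells_along_length(length_m, bandwidth_hz):
    """Number of range cells spanned by a target of the given radial extent."""
    return length_m / range_resolution(bandwidth_hz)


if __name__ == "__main__":
    bandwidth = 400e6  # Hz, from the radar parameter table
    print(f"range resolution: {range_resolution(bandwidth):.3f} m")
    for name, length in [("An-26", 23.80), ("Yak-42", 36.38), ("Citation", 14.40)]:
        print(f"{name}: about {cells_along_length(length, bandwidth):.0f} cells")
```

At 400 MHz the resolution is a little under 0.4 m, so each aircraft in the table occupies tens of range cells, which is what makes its scatterer structure visible in an HRRP.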

| Method | Classification Accuracy (%) |
|---|---|
| PCA | 74.38 |
| MTL TSB-HMMS | 86.87 |
| ELM | 89.01 |
| SAE | 93.51 |
| DDAEs | 94.79 |
| Proposed method | 95.01 |

| Method | Training Time (s) |
|---|---|
| SAE | 624.73 |
| DDAEs | 625.14 |
| Proposed method | 106.67 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhao, F.; Liu, Y.; Huo, K.; Zhang, S.; Zhang, Z. Radar HRRP Target Recognition Based on Stacked Autoencoder and Extreme Learning Machine. Sensors 2018, 18, 173. https://doi.org/10.3390/s18010173
