A Robust Vehicle Localization Approach Based on GNSS/IMU/DMI/LiDAR Sensor Fusion for Autonomous Vehicles

Abstract

1. Introduction

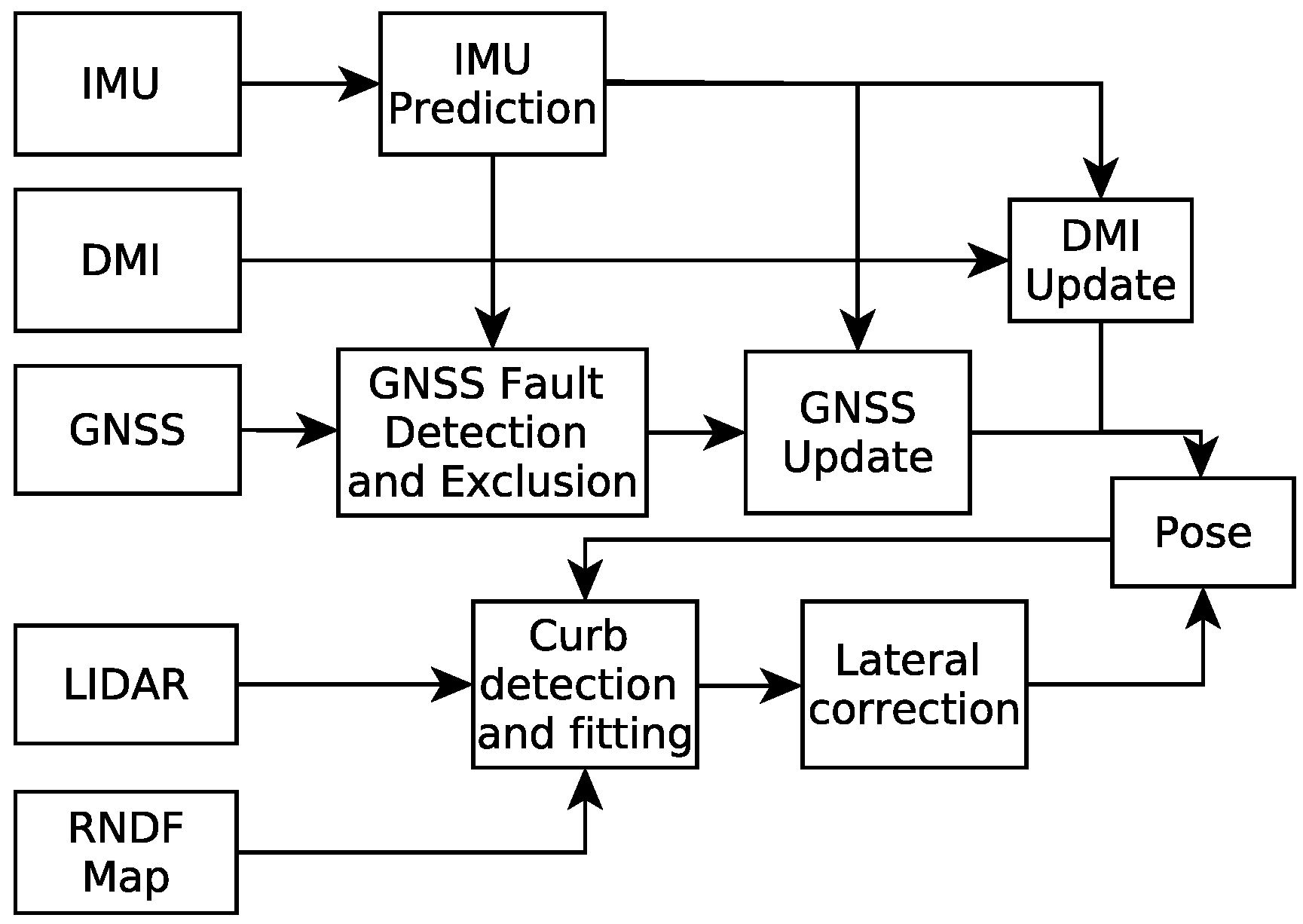

2. UKF-Based Localization Approach

- Earth-Centered-Earth-Fixed (ECEF) coordinate system (E): The origin is at the center of the Earth. The positive Z-axis passes through the Earth’s north pole, the X-axis passes through the intersection of the equator and the prime meridian, and the Y-axis completes the right-handed system;

- Global coordinate system (G): The North-East-Down (NED) coordinate system is used as G, with the X-axis pointing north, the Y-axis pointing east and the Z-axis pointing down, forming a right-handed coordinate system;

- Body coordinate system (B): The coordinate system of the vehicle, with the X-axis pointing forwards, the Y-axis pointing left and the Z-axis pointing up;

- Sensor coordinate system (S): The three orthogonal axes of the mounted sensors. We assume that S coincides with B after sensor-to-body alignment calibration [24].
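The relation between the E and G frames can be illustrated with a short sketch. It assumes the WGS-84 ellipsoid and a local NED frame anchored at a reference geodetic position; the function names `geodetic_to_ecef` and `ecef_to_ned` are illustrative and do not come from the paper.

```python
import math

# WGS-84 ellipsoid constants (assumed datum for this sketch)
WGS84_A = 6378137.0           # semi-major axis [m]
WGS84_E2 = 6.69437999014e-3   # first eccentricity squared


def geodetic_to_ecef(lat_deg, lon_deg, h):
    """Convert geodetic latitude/longitude [deg] and height [m] to ECEF (E frame)."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    # Prime-vertical radius of curvature at this latitude
    n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * math.sin(lat) ** 2)
    x = (n + h) * math.cos(lat) * math.cos(lon)
    y = (n + h) * math.cos(lat) * math.sin(lon)
    z = (n * (1.0 - WGS84_E2) + h) * math.sin(lat)
    return x, y, z


def ecef_to_ned(p_ecef, ref_lat_deg, ref_lon_deg, ref_h):
    """Express an ECEF point in the NED frame (G frame) anchored at the reference position."""
    lat, lon = math.radians(ref_lat_deg), math.radians(ref_lon_deg)
    x0, y0, z0 = geodetic_to_ecef(ref_lat_deg, ref_lon_deg, ref_h)
    dx, dy, dz = p_ecef[0] - x0, p_ecef[1] - y0, p_ecef[2] - z0
    sl, cl = math.sin(lat), math.cos(lat)
    so, co = math.sin(lon), math.cos(lon)
    # Rows of the ECEF-to-NED rotation matrix
    north = -sl * co * dx - sl * so * dy + cl * dz
    east = -so * dx + co * dy
    down = -cl * co * dx - cl * so * dy - sl * dz
    return north, east, down


# A point 10 m above the reference lies at roughly (0, 0, -10) in NED,
# because the Z-axis of G points down.
point = geodetic_to_ecef(1.3, 103.8, 30.0)
print(ecef_to_ned(point, 1.3, 103.8, 20.0))
```

Note that B is defined with the Z-axis up while G has the Z-axis down, so an additional axis flip is needed when comparing body-frame and NED quantities.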

2.1. Process Model

2.2. Measurement Model

2.2.1. Measurement Model of GNSS

2.2.2. Measurement Model of DMI

2.3. Implementation of UKF

2.3.1. Time Update

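- Calculate the sigma points:

  $$\chi_{t-1} = \left[\hat{x}_{t-1},\;\; \hat{x}_{t-1} + \sqrt{(n+\lambda)P_{t-1}},\;\; \hat{x}_{t-1} - \sqrt{(n+\lambda)P_{t-1}}\right]$$

  where $\chi_{t-1}$ are the sigma points of the state vector $x$ at the previous time step $t-1$, $n$ is the dimension of the state vector and $\lambda = \alpha^2(n+\kappa) - n$. The parameter $\alpha$ determines the spread of the sigma points and $\kappa$ is a secondary scaling parameter, which is usually set to one. Note that the initial condition $(\hat{x}_0, P_0)$ must be known.

- Time update process:

  $$\chi_{t|t-1}^{(i)} = f\left(\chi_{t-1}^{(i)}\right), \qquad \hat{x}_t^- = \sum_{i=0}^{2n} W_i^{(m)} \chi_{t|t-1}^{(i)}$$

  $$P_t^- = \sum_{i=0}^{2n} W_i^{(c)} \left(\chi_{t|t-1}^{(i)} - \hat{x}_t^-\right)\left(\chi_{t|t-1}^{(i)} - \hat{x}_t^-\right)^T + Q$$

  where $f$ is the process model, $Q$ is the process noise covariance, $\hat{x}_t^-$ and $P_t^-$ are the predicted mean and covariance, respectively, and $W_i^{(m)}$ and $W_i^{(c)}$ are the weights of the mean and covariance associated with the $i$-th point, given by [27]:

  $$W_0^{(m)} = \frac{\lambda}{n+\lambda}, \qquad W_0^{(c)} = \frac{\lambda}{n+\lambda} + \left(1 - \alpha^2 + \beta\right), \qquad W_i^{(m)} = W_i^{(c)} = \frac{1}{2(n+\lambda)}, \quad i = 1, \ldots, 2n$$

  where $n$ is the state dimension, $\lambda$ is the sigma-point scaling parameter and $\beta$ is a parameter used to incorporate any prior knowledge about the distribution of the state (for Gaussian distributions, $\beta = 2$ is optimal).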
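The time update can be sketched in a few lines of plain Python. This is a minimal illustration of the standard additive-noise unscented transform from [27], not the authors' implementation: the constant-velocity process model, the default `alpha` value and all function names are assumptions made for the example, while `kappa = 1` and `beta = 2` follow the values mentioned in the text.

```python
import math


def cholesky(a):
    """Lower-triangular factor L with L * L^T = a (a symmetric positive definite)."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = a[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            low[i][j] = math.sqrt(s) if i == j else s / low[j][j]
    return low


def sigma_points(mean, cov, alpha=1e-3, kappa=1.0, beta=2.0):
    """Return the 2n+1 sigma points with their mean and covariance weights."""
    n = len(mean)
    lam = alpha ** 2 * (n + kappa) - n
    # Matrix square root of (n + lambda) * P; its columns offset the mean
    root = cholesky([[(n + lam) * c for c in row] for row in cov])
    points = [list(mean)]
    for sign in (1.0, -1.0):
        for j in range(n):
            points.append([mean[i] + sign * root[i][j] for i in range(n)])
    w_mean = [lam / (n + lam)] + [0.5 / (n + lam)] * (2 * n)
    w_cov = list(w_mean)
    w_cov[0] += 1.0 - alpha ** 2 + beta
    return points, w_mean, w_cov


def time_update(mean, cov, f, q, **scaling):
    """Propagate the sigma points through f and recombine them (additive noise q)."""
    points, w_mean, w_cov = sigma_points(mean, cov, **scaling)
    propagated = [f(p) for p in points]
    n = len(mean)
    x_pred = [sum(w * p[i] for w, p in zip(w_mean, propagated)) for i in range(n)]
    p_pred = [[q[i][j] + sum(w * (p[i] - x_pred[i]) * (p[j] - x_pred[j])
                             for w, p in zip(w_cov, propagated))
               for j in range(n)] for i in range(n)]
    return x_pred, p_pred


def constant_velocity(state, dt=1.0):
    """Hypothetical process model: state = [position, velocity]."""
    pos, vel = state
    return [pos + vel * dt, vel]


x_pred, p_pred = time_update([0.0, 1.0], [[1.0, 0.0], [0.0, 1.0]],
                             constant_velocity, [[0.0, 0.0], [0.0, 0.0]])
print(x_pred, p_pred)
```

Because the toy model is linear, the result agrees with the ordinary Kalman prediction (mean close to `[1, 1]`, covariance close to `[[2, 1], [1, 1]]`), which is a convenient sanity check before plugging in a nonlinear process model.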

2.3.2. Measurement Update of GNSS

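- Calculate the sigma points:

  $$\chi_t^- = \left[\hat{x}_t^-,\;\; \hat{x}_t^- + \sqrt{(n+\lambda)P_t^-},\;\; \hat{x}_t^- - \sqrt{(n+\lambda)P_t^-}\right]$$

  where $\hat{x}_t^-$ and $P_t^-$ are the predicted mean and covariance from the time update at time $t$, respectively, and $n$ and $\lambda$ are the state dimension and the sigma-point scaling parameter.

- Perform measurement update:

  $$Z_t^{(i)} = h\left(\chi_t^{-(i)}\right), \qquad \hat{z}_t^- = \sum_{i=0}^{2n} W_i^{(m)} Z_t^{(i)}$$

  $$P_{zz} = \sum_{i=0}^{2n} W_i^{(c)} \left(Z_t^{(i)} - \hat{z}_t^-\right)\left(Z_t^{(i)} - \hat{z}_t^-\right)^T + R, \qquad P_{xz} = \sum_{i=0}^{2n} W_i^{(c)} \left(\chi_t^{-(i)} - \hat{x}_t^-\right)\left(Z_t^{(i)} - \hat{z}_t^-\right)^T$$

  $$K_t = P_{xz} P_{zz}^{-1}, \qquad \nu_t = z_t - \hat{z}_t^-, \qquad \hat{x}_t = \hat{x}_t^- + K_t \nu_t, \qquad P_t = P_t^- - K_t P_{zz} K_t^T$$

  where $Z_t^{(i)}$ are the sigma points projected through the measurement function $h$, $\hat{z}_t^-$ is the predicted measurement produced by the weighted sigma points, $W_i^{(m)}$ and $W_i^{(c)}$ are the mean and covariance weights of the $i$-th sigma point, $R$ is the measurement noise covariance, $P_{zz}$ and $P_{xz}$ are the predicted measurement covariance and the state-measurement cross-covariance matrix, respectively, $K_t$ is the Kalman gain, $\nu_t$ is the innovation and $\hat{x}_t$ and $P_t$ are the updated state and covariance at time $t$, respectively.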
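A compact sketch of this measurement update is given below. To stay short it assumes a scalar measurement, so that inverting the measurement covariance reduces to a division; a three-dimensional GNSS position would need a matrix inverse or sequential scalar updates instead. The function name, the hand-built sigma points and the identity measurement function are illustrative assumptions.

```python
import math


def ukf_scalar_update(points, w_mean, w_cov, x_pred, p_pred, h, z, r):
    """UKF measurement update for one scalar measurement z with noise variance r."""
    n = len(x_pred)
    projected = [h(p) for p in points]                        # sigma points through h
    z_pred = sum(w * zi for w, zi in zip(w_mean, projected))  # predicted measurement
    # Predicted measurement variance and state-measurement cross-covariance
    p_zz = r + sum(w * (zi - z_pred) ** 2 for w, zi in zip(w_cov, projected))
    p_xz = [sum(w * (p[i] - x_pred[i]) * (zi - z_pred)
                for w, p, zi in zip(w_cov, points, projected)) for i in range(n)]
    gain = [c / p_zz for c in p_xz]                           # Kalman gain
    innovation = z - z_pred
    x_new = [x_pred[i] + gain[i] * innovation for i in range(n)]
    p_new = [[p_pred[i][j] - gain[i] * p_zz * gain[j] for j in range(n)]
             for i in range(n)]
    return x_new, p_new


# One-dimensional example with alpha = 1, kappa = 1, beta = 2, so lambda = 1
# and n + lambda = 2 (values chosen only to keep the numbers simple).
x_pred, p_pred = [0.0], [[1.0]]
spread = math.sqrt(2.0 * p_pred[0][0])
points = [[0.0], [spread], [-spread]]
w_mean = [0.5, 0.25, 0.25]
w_cov = [0.5 + (1.0 - 1.0 + 2.0), 0.25, 0.25]

x_new, p_new = ukf_scalar_update(points, w_mean, w_cov, x_pred, p_pred,
                                 h=lambda s: s[0], z=1.0, r=1.0)
print(x_new, p_new)
```

With a linear measurement function the result matches the linear Kalman filter: the gain is 0.5, so the state moves halfway towards the measurement and the variance is halved.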

2.3.3. Measurement Update of DMI

2.4. Automatic Detection of the Degradation of GNSS Performance

3. Correction of Lateral Localization Errors

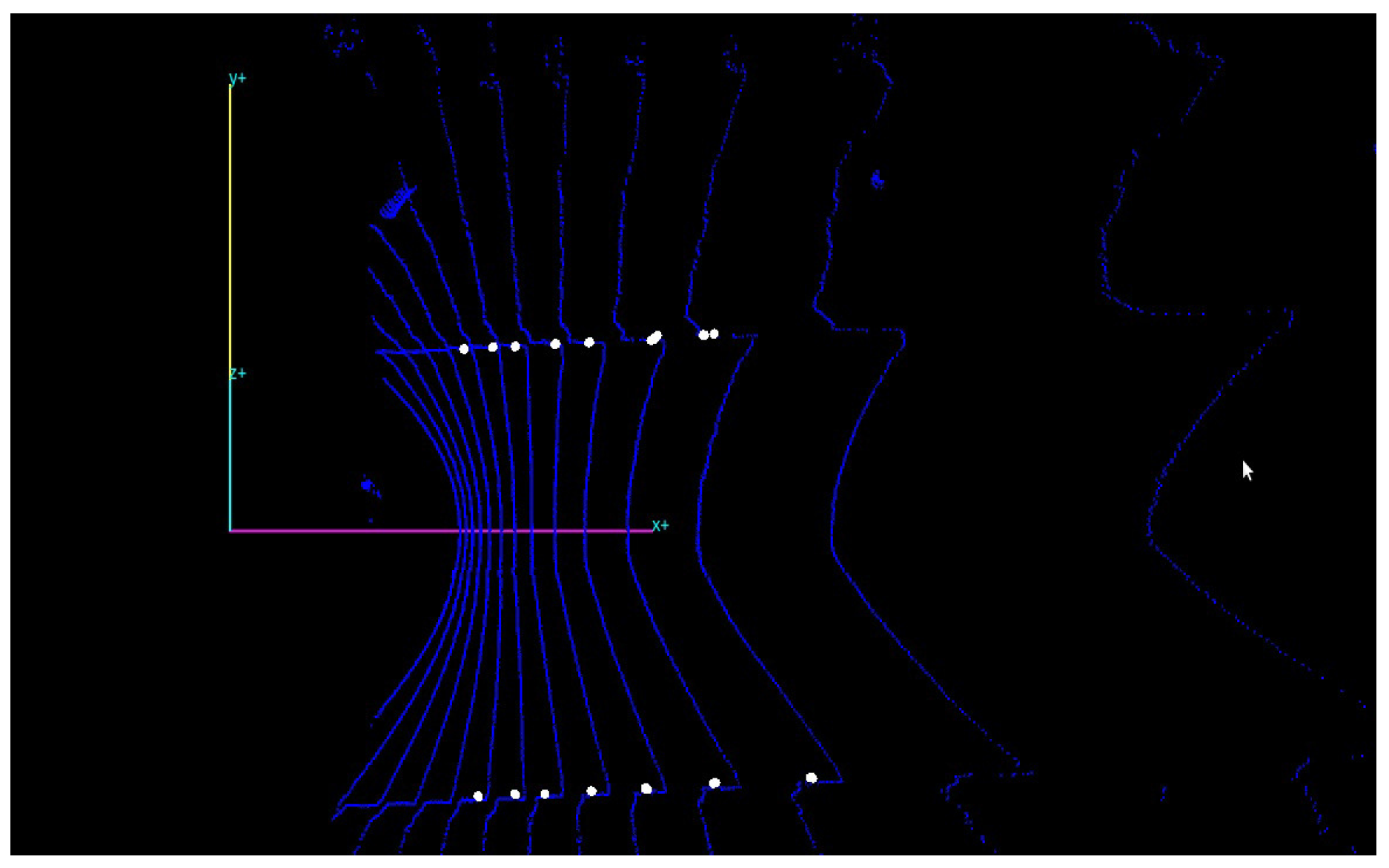

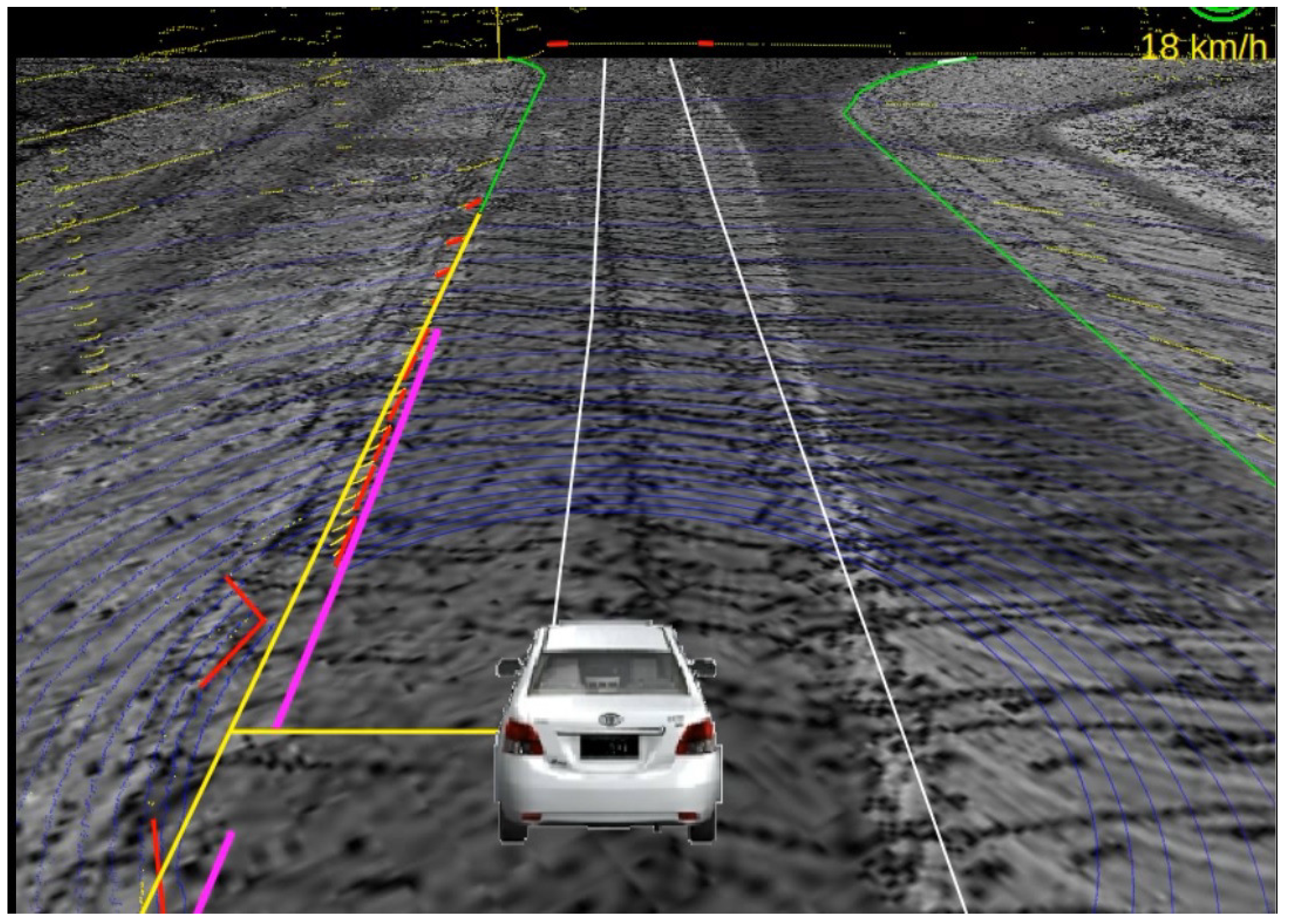

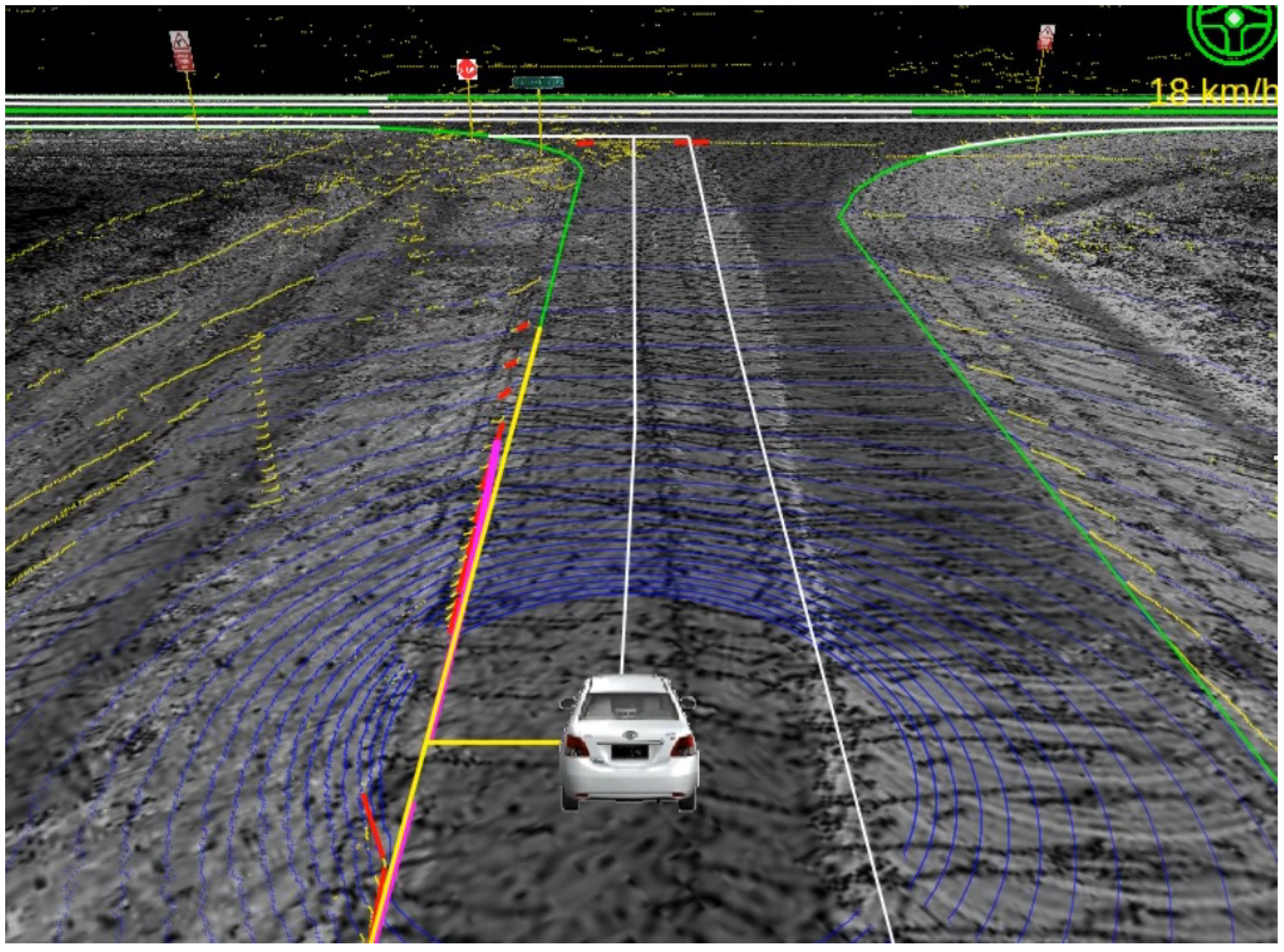

3.1. Curb Detection

3.1.1. Curb Detection Principle

3.1.2. Algorithm for Curb Detection

Algorithm 1 Framework of curb detection.

- Require: Point clouds collected by a 3D LiDAR;
- Step 1: Given the input point cloud, select the area of interest;
- Step 2: Calculate the vector difference of adjacent points in each beam;
- Step 3: Select curb-like points and filter out noise;
- Step 4: Separate higher obstacles by comparing the height with a threshold;
- Return: Curb points.
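The four steps of Algorithm 1 can be illustrated for a single LiDAR beam. The sketch below is only one plausible reading of the framework: the criterion for a "curb-like" point (a height jump between adjacent points), the region of interest, the thresholds and the assumption that z is measured relative to the road surface are all hypothetical choices, not values from the paper.

```python
def detect_curb_points(beam, roi_x=(0.0, 20.0), roi_y=(-8.0, 8.0),
                       min_step=0.05, max_height=0.30):
    """Return curb candidates from one beam.

    beam: list of (x, y, z) points ordered along the scan ring, in the body
    frame, with z assumed to be the height above the road surface [m].
    """
    # Step 1: keep only the area of interest around the vehicle
    roi = [p for p in beam
           if roi_x[0] <= p[0] <= roi_x[1] and roi_y[0] <= p[1] <= roi_y[1]]
    curb = []
    # Step 2: difference between adjacent points of the beam
    for prev, cur in zip(roi, roi[1:]):
        dz = cur[2] - prev[2]
        # Step 3: small height changes are treated as road surface or noise
        if abs(dz) < min_step:
            continue
        # Step 4: reject jumps that end on something taller than a curb
        upper = cur if dz > 0 else prev
        if upper[2] <= max_height:
            curb.append(upper)
    return curb


# Flat road, a 15 cm curb at y = 3 m, then a wall at y = 5 m
beam = [(5.0, 0.0, 0.0), (5.0, 1.0, 0.0), (5.0, 2.0, 0.0),
        (5.0, 3.0, 0.15), (5.0, 4.0, 0.15), (5.0, 5.0, 1.5)]
print(detect_curb_points(beam))
```

In this toy beam only the first point on top of the curb is returned; the jump onto the wall is rejected by the height threshold.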

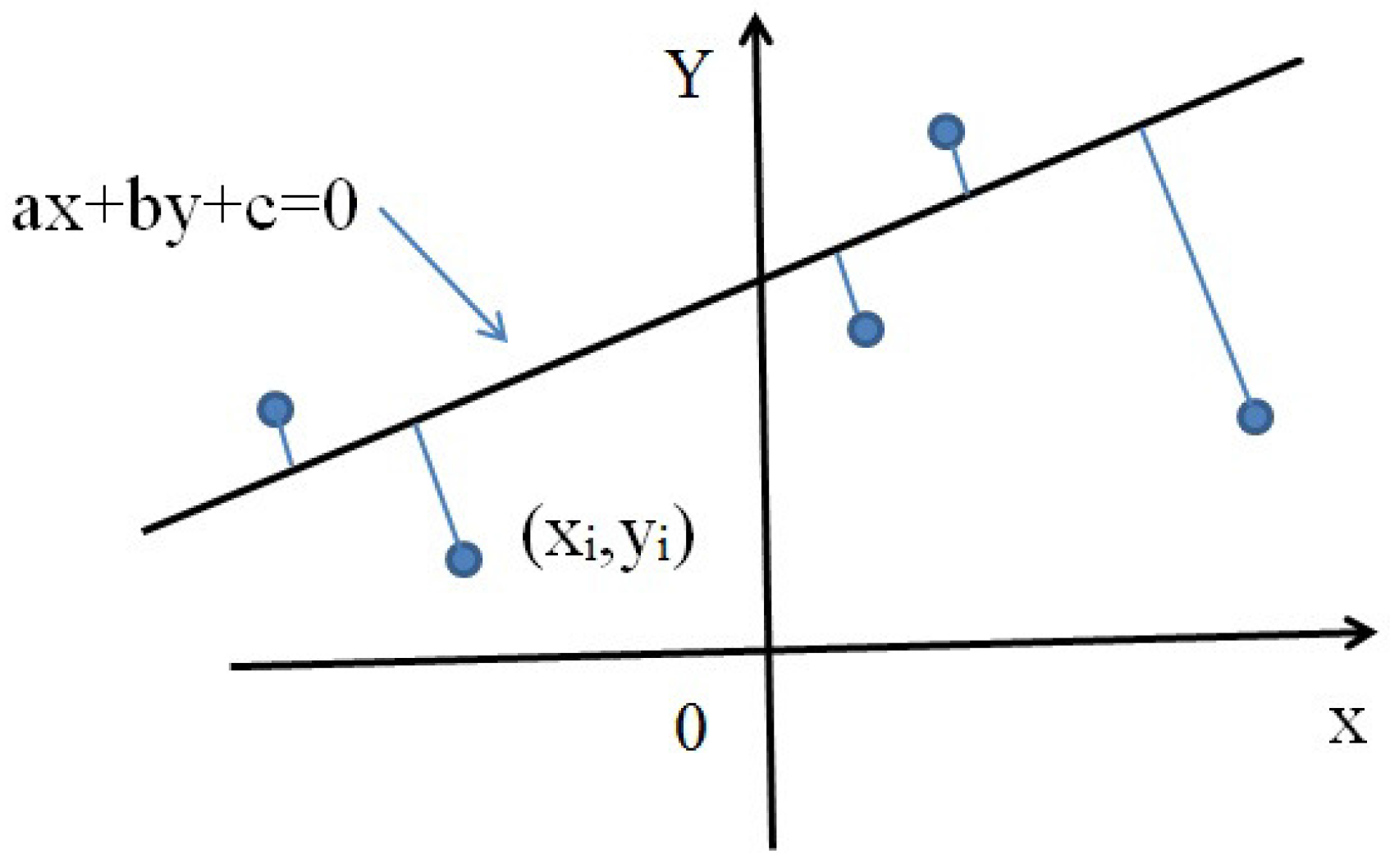

3.2. Curb Line Fitting Using RANSAC

Algorithm 2 Framework of curb fitting.

- Require: Detected curb points (the set S);
- Step 1: Randomly select a sample of s curb points from S, and instantiate the model from this subset;
- Step 2: Determine the set of curb points $S_i$ that are within a distance threshold t of the model. The set $S_i$ is the consensus set of the sample and defines the inliers of S;
- Step 3: If the size of $S_i$ is greater than some threshold T, re-estimate the model using all of the points in $S_i$ and terminate;
- Step 4: If the size of $S_i$ is less than T, select a new subset and repeat the above;
- Step 5: After N trials, the largest consensus set $S_i$ is selected, and the model is re-estimated using all of the points in the subset $S_i$;
- Return: Curb model.

Algorithm 3 Pseudo-code of curb fitting.

- Require: M 3D points (only the X and Y coordinates are used);
- Step 1: Initialize the parameters of the algorithm (the number of iterations N and the inlier threshold T used below);
- Step 2: Repeat for N iterations:
  - a. Select two points randomly from the M points;
  - b. Compute the parameters that define the line passing through those two points;
  - c. Count the number of inliers for the current line;
  - d. If the number of inliers is greater than or equal to T, terminate;
  - e. Keep the line having the maximum number of inliers;
- Step 3: Draw the line having the maximum number of inliers;
- Return: Line/curve parameters of curbs.
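Algorithm 3 translates almost directly into code. The following sketch fits a straight line in the X-Y plane with RANSAC; the default iteration count, the distance threshold, the fixed random seed and the normalized line representation `a*x + b*y + c = 0` are assumptions chosen for the example. Like Algorithm 3, it keeps the best two-point line and omits the least-squares re-estimation of Algorithm 2.

```python
import math
import random


def ransac_line(points, n_iter=100, dist_thresh=0.1, min_inliers=None, seed=0):
    """Fit a 2D line a*x + b*y + c = 0 (with a^2 + b^2 = 1) to noisy points.

    points: sequence of (x, y) or (x, y, z) tuples; only x and y are used.
    Returns the best line parameters and the list of its inliers.
    """
    rng = random.Random(seed)
    best_line, best_inliers = None, []
    for _ in range(n_iter):
        # Step 2a: pick two distinct points at random
        p, q = rng.sample(list(points), 2)
        # Step 2b: line through p and q, written with a unit normal (a, b)
        a, b = q[1] - p[1], p[0] - q[0]
        norm = math.hypot(a, b)
        if norm == 0.0:
            continue  # coincident points define no line
        a, b = a / norm, b / norm
        c = -(a * p[0] + b * p[1])
        # Step 2c: points closer than the threshold are inliers
        inliers = [s for s in points if abs(a * s[0] + b * s[1] + c) <= dist_thresh]
        # Step 2e: remember the line with the most inliers so far
        if len(inliers) > len(best_inliers):
            best_line, best_inliers = (a, b, c), inliers
        # Step 2d: optional early termination once enough inliers are found
        if min_inliers is not None and len(best_inliers) >= min_inliers:
            break
    return best_line, best_inliers


# Curb running parallel to the X-axis at y = 2 m, plus three outliers
curb = [(float(x), 2.0) for x in range(20)]
outliers = [(3.0, 5.0), (7.0, -1.0), (12.0, 8.0)]
line, inliers = ransac_line(curb + outliers)
print(line, len(inliers))
```

The unit-normal form is used instead of slope and intercept so that curbs running parallel to the Y-axis do not cause a division by zero.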

3.3. Lateral Correction Based on the Kalman Filter

3.3.1. Lateral Error Estimation

- State space: The space of real numbers

- State Vector:

- System equation:

- Observation:

- Prediction equation:

- Updating equations:
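The structure listed above corresponds to a scalar Kalman filter. As a rough sketch of how such a filter behaves, the example below assumes a random-walk model for the lateral error and a direct, noisy observation of it; both models, the noise variances and the sample observations are assumptions made for illustration and are not taken from the paper.

```python
def predict(e_est, p_est, q):
    """Prediction under an assumed random-walk model: the estimate is kept
    and its variance grows by the process noise variance q."""
    return e_est, p_est + q


def update(e_pred, p_pred, z, r):
    """Scalar Kalman update with observation z and measurement noise variance r."""
    gain = p_pred / (p_pred + r)
    e_new = e_pred + gain * (z - e_pred)
    p_new = (1.0 - gain) * p_pred
    return e_new, p_new


# Hypothetical lateral-error observations [m], e.g. derived from curb distances
e, p = 0.0, 1.0
for z in (0.42, 0.38, 0.45):
    e, p = predict(e, p, q=0.01)
    e, p = update(e, p, z, r=0.04)
print(e, p)
```

The estimate settles near the observed offsets while its variance shrinks with every update, which is the behaviour expected from the prediction and updating equations.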

3.3.2. Lateral Adjustment

4. Experimental Results

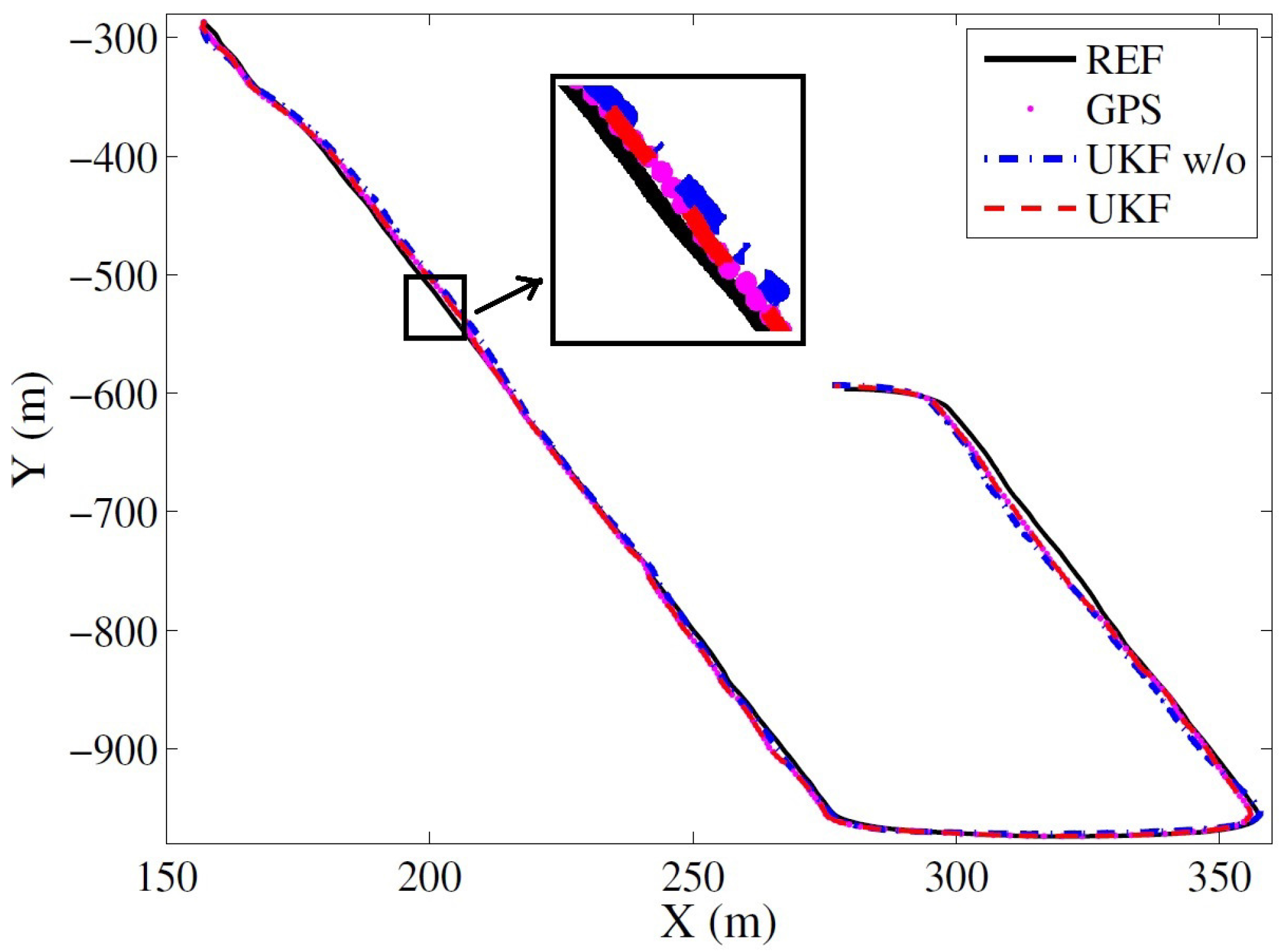

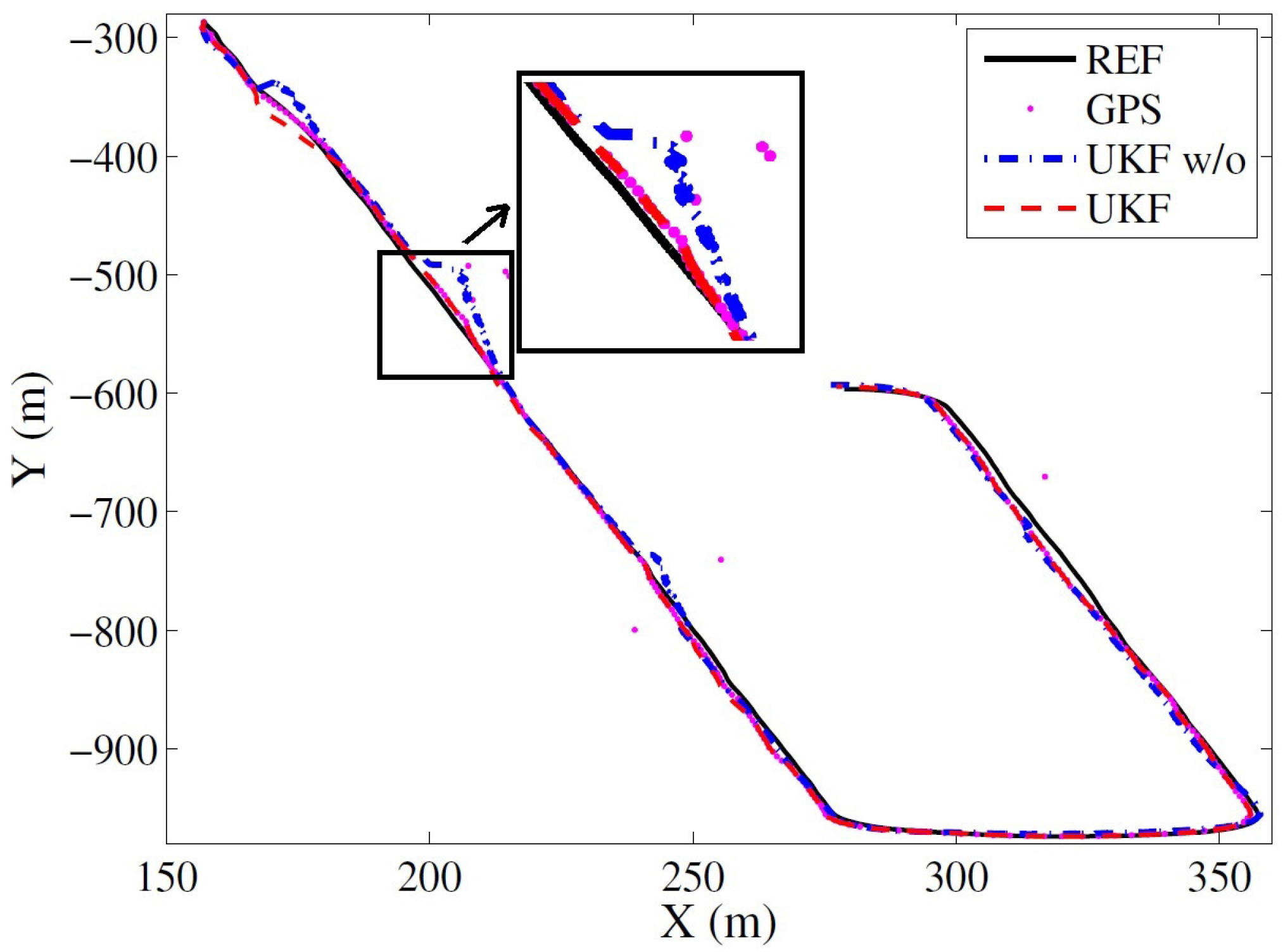

Result of GNSS/INS/DMI-Based Localization

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Montemerlo, M.; Becker, J.; Bhat, S.; Dahlkamp, H.; Dolgov, D.; Ettinger, S.; Haehnel, D.; Hilden, T.; Hoffmann, G.; Huhnke, B.; et al. Junior: The Stanford entry in the urban challenge. J. Field Robot. 2008, 25, 569–597.

- Ronnback, S. Development of an INS/GPS Navigation Loop for an UAV. Master’s Thesis, Lulea Tekniska Universitet, Lulea, Sweden, 2000.

- Zhao, Y.M. GPS/IMU Integrated System for Land Vehicle Navigation Based on MEMS; Royal Institute of Technology: Stockholm, Sweden, 2011.

- Pitt, M.K.; Shephard, N. Filtering via simulation: Auxiliary particle filters. J. Am. Stat. Assoc. 1999, 94, 590–599.

- Arulampalam, S.; Maskell, S.; Gordon, N.; Clapp, T. A tutorial on particle filter for on-line nonlinear/non-Gaussian Bayesian tracking. IEEE Trans. Signal Process. 2002, 50, 174–188.

- Nemra, A.; Aouf, N. Robust INS/GPS sensor fusion for UAV localization using SDRE nonlinear filtering. IEEE Sens. J. 2010, 10, 789–797.

- Vu, A.; Ramanandan, A.; Chen, A.; Farrell, J.A.; Barth, M. Real-time computer vision/DGPS-aided inertial navigation system for lane-level vehicle navigation. IEEE Trans. Intell. Transp. Syst. 2012, 13, 899–913.

- Suhr, J.K.; Jang, J.; Min, D.; Jung, H.G. Sensor fusion-based low-cost vehicle localization system for complex urban environments. IEEE Trans. Intell. Transp. Syst. 2017, 18, 1078–1086.

- Oh, S.M. Multisensor fusion for autonomous UAV navigation based on the Unscented Kalman Filter with Sequential Measurement Updates. In Proceedings of the 2010 IEEE Conference Multisensor Fusion and Integration for Intelligent Systems (MFI), Salt Lake City, UT, USA, 5–7 September 2010; pp. 217–222.

- Zhou, J.C.; Yang, Y.H.; Zhang, J.Y.; Edwan, E.; Loffeld, O. Tightly-coupled INS/GPS using Quaternion-based Unscented Kalman filter. In Proceedings of the AIAA Guidance, Navigation, and Control Conference, Portland, OR, USA, 8–11 August 2011.

- Enkhtur, M.; Cho, S.Y.; Kim, K.H. Modified Unscented Kalman Filter for a multirate INS/GPS integrated navigation system. ETRI J. 2013, 35, 943–946.

- Tao, Z.; Bonnifait, P.; Fremont, V.; Ibanez-Guzman, J. Mapping and localization using gps, lane markings and proprioceptive sensors. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 406–412.

- Schindler, A. Vehicle self-localization with high-precision digital maps. In Proceedings of the IEEE Intelligent Vehicle Symposium, Gold Coast, Australia, 23–26 June 2013; pp. 141–146.

- Gruyer, D.; Belaroussi, R.; Revilloud, M. Map-aided localization with lateral perception. In Proceedings of the 2014 IEEE Intelligent Vehicles Symposium (IV), Dearborn, MI, USA, 8–11 June 2014; pp. 674–680.

- Pandey, G.; Mcbride, J.R.; Eustice, R.M. Ford campus vision and LiDAR data set. Int. J. Rob. Res. 2011, 30, 1543–1552.

- Zhao, G.Q.; Yuan, J.S. Curb detection and tracking using 3D-LIDAR scanner. In Proceedings of the 19th IEEE International Conference on Image Processing (ICIP), Orlando, FL, USA, 30 September–3 October 2012.

- Huang, A.S.; Teller, S. Lane boundary and curb estimation with lateral uncertainties. In Proceedings of the 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems, St. Louis, MO, USA, 10–15 October 2009; pp. 1729–1734.

- Hervieu, A.; Soheilian, B. Road side detection and reconstruction using LIDAR sensor. In Proceedings of the 2013 IEEE on the Intelligent Vehicles Symposium (IV), Gold Coast, Australia, 23–26 June 2013; pp. 1247–1252.

- Yeonsik, K.; Chiwon, R.; Seung-Beum, S.; Bongsob, S. A Lidar-based decision-making method for road boundary detection using multiple Kalman filters. IEEE Trans. Ind. Electron. 2012, 59, 4360–4368.

- Kim, Z. Robust lane detection and tracking in challenging scenarios. IEEE Trans. Intell. Transp. Syst. 2008, 9, 16–26.

- Kodagoda, K.; Wijesoma, W.; Balasuriya, A. CuTE: Curb tracking and estimation. IEEE Trans. Control Syst. Technol. 2006, 14, 951–957.

- Siegemund, J.; Pfeiffer, D.; Franke, U.; Forstne, W. Curb reconstruction using conditional random fields. In Proceedings of the IEEE Intelligent Vehicles Symposium, San Diego, CA, USA, 21–24 June 2010.

- Shin, Y.; Jung, C.; Chung, W. Drivable road region detection using a single laser range finder for outdoor patrol robots. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), San Diego, CA, USA, 21–24 June 2010.

- Meng, X.; Zhang, Z.; Sun, S.; Wu, J.; Wong, W. Biomechanical model-based displacement estimation in micro-sensor motion capture. Meas. Sci. Technol. 2012, 23, 055101.

- Kuipers, J. Quaternions and Rotation Sequences: A Primer with Applications to Orbits, Aerospace, and Virtual Reality; Princeton University Press: Princeton, NJ, USA, 1999.

- Choukroun, D.; Bar-Itzhack, I.; Oshman, Y. Novel quaternion Kalman filter. IEEE Trans. Aerosp. Electron. Syst. 2006, 42, 174–190.

- Wan, E.A.; der Merwe, R.V. The Unscented Kalman Filter for Nonlinear Estimation. In Proceedings of the 2000 IEEE Adaptive Systems for Signal Processing, Communications, and Control Symposium (AS-SPCC’2000), Lake Louise, AB, Canada, 4 October 2000; pp. 153–158.

- Sukkarieh, S.; Nebot, E.; Durrant-Whyte, H. A high integrity IMU/GPS navigation loop for autonomous land vehicle applications. IEEE Trans. Robot. Autom. 1999, 15, 572–578.

- Galanis, G.; Anadranistakis, M. A one-dimensional Kalman filter for the correction of near surface temperature forecasts. Meteorol. Appl. 2002, 9, 437–441.

- Blanco, J.; Moreno, F.; Gonzalez, J. A Collection of Outdoor Robotic Datasets with centimeter-accuracy Ground Truth. Auton. Robots 2009, 27, 174–190.

- Wang, H.; Wang, B.; Liu, B.B.; Meng, X.L.; Yang, G.H. Pedestrian recognition and tracking using 3D LiDAR for autonomous vehicle. Robot. Auton. Syst. 2017, 88, 71–78.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Meng, X.; Wang, H.; Liu, B. A Robust Vehicle Localization Approach Based on GNSS/IMU/DMI/LiDAR Sensor Fusion for Autonomous Vehicles. Sensors 2017, 17, 2140. https://doi.org/10.3390/s17092140
