User

i initially selects a subset

of assignments and a bid

according to his valuation he preferences. Each user

i initially computes his marginal utility

, thereby obtaining his marginal utility per bid

. Then he interacts with the AI by using the OT technology in [

17], thereby receiving

, where the subscript 0 denotes the cardinality of the current winners’ set is equal to 0, and

is the rank-preserving-encrypted value of

. Then each user

i encrypts it as

by applying the platform’s Paillier encryption key

and a randomly value

. Then user

i commits

, where

is a bit string randomly generated for the proof of correctness. Finally, the user

i signs the commitment

and the encrypted value

. Then he adds

to the list

on the bulletin board and sends

to platform. Receiving all users’ values

, the platform decrypts and sorts them, thereby obtaining the user

i with the maximal encrypted marginal utility per bid. Moreover, the platform enters the following winner determination phase. The more detailed illustration is given in

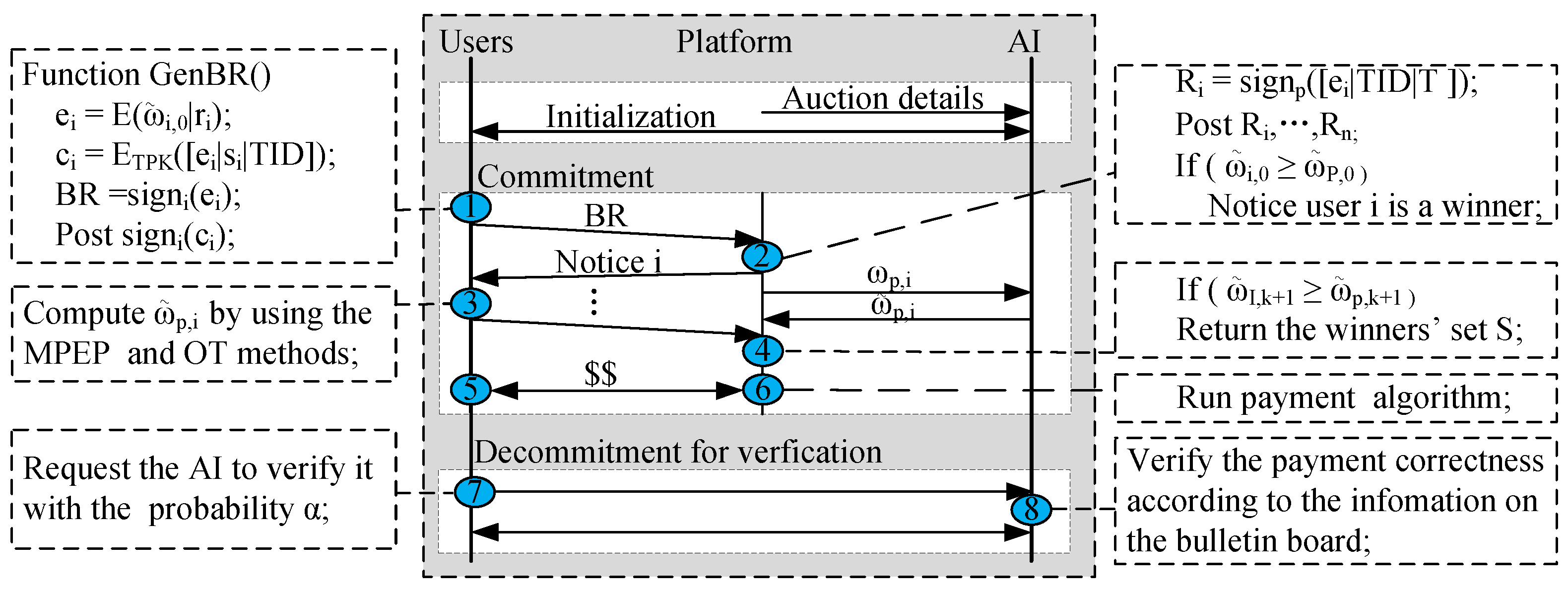

Figure 4.
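The submission round can be sketched in Python as follows. This is a toy illustration under stated assumptions, not the mechanism's implementation. The Paillier parameters use tiny primes. The AI's rank-preserving encoding is replaced by a hypothetical affine map a·x + b with a > 0. The marginal utilities per bid are made-up fixed-point numbers. The sketch shows the property the platform relies on: after Paillier decryption it sees only rank-preserving values, and these still sort in the same order as the underlying marginal utilities per bid. OT, commitments, and signatures are omitted.

```python
import math
import secrets

# --- Toy Paillier cryptosystem (g = n + 1). Tiny primes: illustration only. ---
def paillier_keygen(p=1009, q=1013):
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lambda = lcm(p-1, q-1)
    mu = pow(lam, -1, n)                                 # valid because g = n + 1
    return n, (n, lam, mu)                               # public key, private key

def paillier_encrypt(n, m):
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1                 # random value r in Z_n^*
        if math.gcd(r, n) == 1:
            break
    return ((1 + m * n) * pow(r, n, n2)) % n2            # (n+1)^m * r^n mod n^2

def paillier_decrypt(priv, c):
    n, lam, mu = priv
    x = pow(c, lam, n * n)
    return ((x - 1) // n) * mu % n                       # L(x) * mu mod n

# --- Hypothetical rank-preserving encoding held by the AI: x -> a*x + b, a > 0. ---
def rank_preserving(x, a=7, b=13):
    return a * x + b

# Marginal utility per bid of each user in fixed point (x100); made-up numbers.
mu_per_bid = {"u1": 240, "u2": 310, "u3": 170}

pub_n, priv = paillier_keygen()
# Each user encrypts the value returned by the AI under the platform's Paillier key.
submissions = {u: paillier_encrypt(pub_n, rank_preserving(v)) for u, v in mu_per_bid.items()}
# The platform decrypts and sees only rank-preserving values, yet can still sort them.
recovered = {u: paillier_decrypt(priv, c) for u, c in submissions.items()}
top_user = max(recovered, key=recovered.get)
print(sorted(recovered, key=recovered.get, reverse=True), top_user)  # ['u2', 'u1', 'u3'] u2
```

The affine map is the simplest order-preserving function. It is used here only so that the sorting step is visible; a real rank-preserving scheme would hide the slope and offset far more carefully.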

(a) Winner Determination: First, the platform applies the homomorphic encryption scheme to compute the utility according to Algorithm 3. By using the OT technology in [17], the platform then interacts with the AI and receives the corresponding encrypted value. The platform commits to this value using a bit string randomly generated for the proof of correctness, signs the commitment, and adds it to its list on the bulletin board. If the loop condition in line 3 of Algorithm 5 holds, the platform notifies user i that he is a winner. The user then returns an acknowledgement together with his bid and sensing preference, both encrypted under the platform's public key. Upon receiving the acknowledgement, the platform adds user i to the winners' set S (see line 5 of Algorithm 5). It then notifies each user not in S to compute his encrypted marginal utility per bid with respect to the updated S, using the same method as before. These users also add their signed commitments to their corresponding lists on the bulletin board defined above. Once the platform has received all of these values, it sorts them and thereby learns which user has the maximal encrypted marginal utility per bid. The process is repeated until the loop condition fails, and the resulting winners' set consists of k users.
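The commitments posted to the bulletin board can be illustrated with a simple hash-based commitment. This sketch rests on several assumptions. It uses SHA-256 over a random bit string concatenated with a 32-byte encoding of a non-negative value; the concrete commitment scheme is not specified in this section. Signatures are omitted. The `bulletin_board` dictionary and the `commit`, `verify`, and `post` helpers are hypothetical stand-ins for the public append-only board.

```python
import hashlib
import secrets
from collections import defaultdict

def commit(value: int, nbits: int = 256):
    """Hash commitment: publish H(rho || value) and keep the random bit string rho."""
    rho = secrets.token_bytes(nbits // 8)
    digest = hashlib.sha256(rho + value.to_bytes(32, "big")).hexdigest()
    return digest, rho

def verify(digest: str, rho: bytes, value: int) -> bool:
    """Anyone can later check an opening (rho, value) against the posted digest."""
    return hashlib.sha256(rho + value.to_bytes(32, "big")).hexdigest() == digest

# Hypothetical bulletin board: one append-only list of commitments per party.
bulletin_board = defaultdict(list)

def post(party: str, value: int) -> bytes:
    digest, rho = commit(value)
    bulletin_board[party].append(digest)  # only the digest is made public
    return rho                            # the opening stays private until an audit

rho = post("platform", 2183)
print(verify(bulletin_board["platform"][-1], rho, 2183))  # True
print(verify(bulletin_board["platform"][-1], rho, 2184))  # False
```

The random bit string makes the digest hiding. The collision resistance of SHA-256 makes it binding, so a party cannot later open the commitment to a different value during the proof of correctness.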

| Algorithm 5 Winner Determination for Sensing Submodular Jobs |
|---|
| Input: The user set and the budget constraint B. |
| Output: The winners' set S. |
| 1: $S \leftarrow \emptyset$; for every user, the platform recovers the rank-preserving-encrypted marginal utility per bid by using the decryption algorithm, and sorts all these values in decreasing order, thereby obtaining the user i with the maximal encrypted marginal utility per bid; |
| 2: The platform obtains the encrypted utility value by using the OT technology in [17] and Algorithm 3, and adds a signed commitment to its list on the bulletin board; |
| 3: while the winner condition for user i holds do |
| 4: The platform notifies user i that he is a winner; |
| 5: Upon receiving an acknowledgement, the platform adds user i to the winners' set, i.e., $S \leftarrow S \cup \{i\}$; |
| 6: Notify each user not in S to compute his encrypted marginal utility per bid by the same method as the initial computation; after obtaining all these values, the platform finds the user i with the maximal encrypted marginal utility per bid; |
| 7: The platform obtains the encrypted utility value by using the OT technology in [17] and Algorithm 3, and adds a signed commitment to its list on the bulletin board; |
| 8: end while |
| 9: return S |
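Without encryption, Algorithm 5 is a greedy selection by marginal utility per bid. The plaintext sketch below assumes a coverage utility V, the number of distinct assignments covered by the selected users, which is submodular. The loop condition in line 3 of Algorithm 5 cannot be recovered from this section. The sketch therefore uses a hypothetical proportional-share rule, b_i ≤ (B/2)·Δ_i / V(S ∪ {i}), where Δ_i is user i's marginal utility. Rules of this kind appear in budget-feasible mechanisms. Treat it as a placeholder, not the paper's condition. The users, assignment subsets, and bids are made up.

```python
from fractions import Fraction

def coverage(sets_by_user, S):
    """Submodular utility V(S): number of distinct assignments covered by users in S."""
    covered = set()
    for u in S:
        covered |= sets_by_user[u]
    return len(covered)

def marginal(sets_by_user, S, i):
    """Marginal utility of user i with respect to the current winners' set S."""
    return coverage(sets_by_user, S | {i}) - coverage(sets_by_user, S)

def greedy_winners(sets_by_user, bids, B):
    S = set()
    remaining = set(sets_by_user)
    while remaining:
        # User with the maximal marginal utility per bid (ties broken by name).
        i = max(sorted(remaining),
                key=lambda u: Fraction(marginal(sets_by_user, S, u), bids[u]))
        mu_i = marginal(sets_by_user, S, i)
        # Hypothetical stopping rule (stand-in for the loop condition):
        # continue only while b_i <= (B/2) * mu_i / V(S + {i}).
        if mu_i == 0 or bids[i] > Fraction(B, 2) * mu_i / coverage(sets_by_user, S | {i}):
            break
        S.add(i)          # S <- S ∪ {i}
        remaining.remove(i)
    return S

sets = {"u1": {1, 2, 3}, "u2": {3, 4}, "u3": {5}, "u4": {1, 2}}
bids = {"u1": 3, "u2": 2, "u3": 4, "u4": 1}
print(sorted(greedy_winners(sets, bids, 20)))  # ['u2', 'u4']
```

With B = 20 the sketch selects u4 and then u2. It stops at u3 because u3's bid of 4 exceeds its proportional share of 2.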

(b) Payment Determination: At this stage, the rank-preserving-encrypted values from the above algorithm no longer preserve rank under multiplication. To address this challenge, we introduce homomorphic encryption schemes, which allow encrypted values to be multiplied without disclosing either the values or the result of the computation. At time T, the platform computes the payment of each winner i as follows. The platform initializes a candidate user set and a second set, which we call the referenced winners' set to distinguish it from the winners' set above. Each candidate user j computes his marginal utility and obtains his encrypted marginal utility per bid by using the AI's homomorphic encryption public key. He commits to this value by the method above, and signs both the commitment and the encrypted value. He then adds the signed commitment to the list on the bulletin board that holds user j's commitments for the computation of user i's payment, and sends the signed encrypted value to the platform. After receiving the values of all candidate users, the platform sorts them and obtains the user with the maximal encrypted marginal utility per bid, i.e., the j-th referenced winner. The platform then notifies this user that he is a referenced winner and requests the corresponding value from user i. Upon receiving the request, the user computes this value by applying the above MPEP and the AI's encryption public key, signs it, and sends it to the platform. The homomorphic property of the encryption lets the platform combine these ciphertexts. In the same way, it obtains the encrypted marginal utility per bid when there are j referenced winners. Since user i is a true winner, the platform knows his bid and sensing preference and can therefore compute the remaining value itself. With these values, the platform obtains the encrypted quantities needed for the interim payment. The interim payment itself is determined by the homomorphic encryption comparison operation. The platform then adds the selected user to the referenced winners' set. This process is repeated until the stopping condition in line 11 of Algorithm 6 is met, which yields the payment of winner i. The other winners' payments are computed in the same way as winner i's. The details are given in Algorithm 6.
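The homomorphic property used in the payment phase can be checked with a toy Paillier instance. Paillier is additively homomorphic. Multiplying two ciphertexts adds the plaintexts, and raising a ciphertext to a known integer k multiplies the plaintext by k. This plaintext-by-ciphertext product is the kind of multiplication available once the platform knows a winner's bid. Paillier does not multiply two ciphertexts together. The tiny primes and the affine map standing in for rank-preserving encryption are assumptions for illustration only.

```python
import math
import secrets

# Toy Paillier (g = n + 1) with tiny primes, for illustration only.
p, q = 1009, 1013
n, n2 = p * q, (p * q) ** 2
lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
mu = pow(lam, -1, n)

def enc(m):
    r = secrets.randbelow(n - 1) + 1
    while math.gcd(r, n) != 1:                     # r must lie in Z_n^*
        r = secrets.randbelow(n - 1) + 1
    return (1 + m * n) * pow(r, n, n2) % n2

def dec(c):
    return (pow(c, lam, n2) - 1) // n * mu % n

a, b, k = 240, 310, 5
sum_ct = enc(a) * enc(b) % n2    # E(a) * E(b) decrypts to a + b
scaled_ct = pow(enc(a), k, n2)   # E(a)^k decrypts to k * a (k known in the clear)
print(dec(sum_ct), dec(scaled_ct))  # 550 1200

# Contrast: a hypothetical affine rank-preserving map keeps order,
# but f(a) * k != f(a * k), so products of encoded values carry no meaning.
def f(x):
    return 7 * x + 13

print(f(a) * k == f(a * k))  # False
```

Plaintexts and results must stay below n. Real deployments use moduli of at least 2048 bits.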

| Algorithm 6 Payment Determination for Sensing Submodular Jobs |
|---|
| Input: The user set, the budget constraint B, and the winners' set S. |
| Output: (S, p). |
| 1: for each user do |
| 2: initialize the user's payment; |
| 3: end for |
| 4: for all users $i \in S$ do |
| 5: initialize the candidate user set, and initialize the referenced winners' set as empty; |
| 6: repeat |
| 7: Every candidate user computes his encrypted marginal utility per bid by using the AI's homomorphic encryption public key, sends it to the platform, and adds a signed commitment to the corresponding list on the bulletin board; after receiving these encrypted values, the platform sorts them in decreasing order, thereby obtaining the user with the maximal encrypted marginal utility per bid; |
| 8: Notify this user that he is a referenced winner, and request the corresponding value from user i; |
| 9: As described in Section 5.3.2, the platform computes the required encrypted values by applying the Paillier cryptosystem and its homomorphic property in [46], and obtains the interim payment; |
| 10: Add the selected user to the referenced winners' set and update the candidate user set; |
| 11: until either of the two stopping conditions holds |
| 12: The platform requests the payment of user i from the AI, which obtains it by decryption with the AI's private key; |
| 13: end for |
| 14: return (S, p) |
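The payment loop of Algorithm 6 resembles a critical-value (threshold) payment computed against a run of the greedy rule with winner i removed. The plaintext sketch below is hypothetical and makes three assumptions. First, the interim payment at each step is the largest bid with which user i would still outrank the selected referenced winner j, namely b_j·Δ_i / Δ_j. Second, the final payment is the maximum interim payment. Third, the budget cap and the exact stopping conditions of line 11 are not modeled; the loop simply stops when candidates run out or a marginal utility reaches zero. It uses the same coverage utility and made-up data as a plain greedy example and omits all encryption.

```python
from fractions import Fraction

def coverage(sets_by_user, S):
    """Submodular utility: number of distinct assignments covered by users in S."""
    covered = set()
    for u in S:
        covered |= sets_by_user[u]
    return len(covered)

def marginal(sets_by_user, S, i):
    return coverage(sets_by_user, S | {i}) - coverage(sets_by_user, S)

def threshold_payment(sets_by_user, bids, i):
    """Hypothetical critical-value payment for winner i (budget cap ignored)."""
    candidates = set(sets_by_user) - {i}  # candidate user set: everyone except i
    referenced = set()                    # referenced winners' set, initially empty
    payment = Fraction(0)
    while candidates:
        # Referenced winner: maximal marginal utility per bid (ties broken by name).
        j = max(sorted(candidates),
                key=lambda u: Fraction(marginal(sets_by_user, referenced, u), bids[u]))
        mu_j = marginal(sets_by_user, referenced, j)
        mu_i = marginal(sets_by_user, referenced, i)
        if mu_j == 0 or mu_i == 0:
            break
        # Interim payment: largest bid with which i would still beat j at this step.
        payment = max(payment, Fraction(bids[j] * mu_i, mu_j))
        referenced.add(j)
        candidates.remove(j)
    return payment

sets = {"u1": {1, 2, 3}, "u2": {3, 4}, "u3": {5}, "u4": {1, 2}}
bids = {"u1": 3, "u2": 2, "u3": 4, "u4": 1}
print(threshold_payment(sets, bids, "u4"), threshold_payment(sets, bids, "u2"))  # 2 6
```

In this example each payment is at least the winner's bid: u4 bids 1 and is paid 2, and u2 bids 2 and is paid 6. This is the individual-rationality property a threshold payment is meant to provide.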