Noncontact Sleep Study by Multi-Modal Sensor Fusion

Abstract

1. Introduction

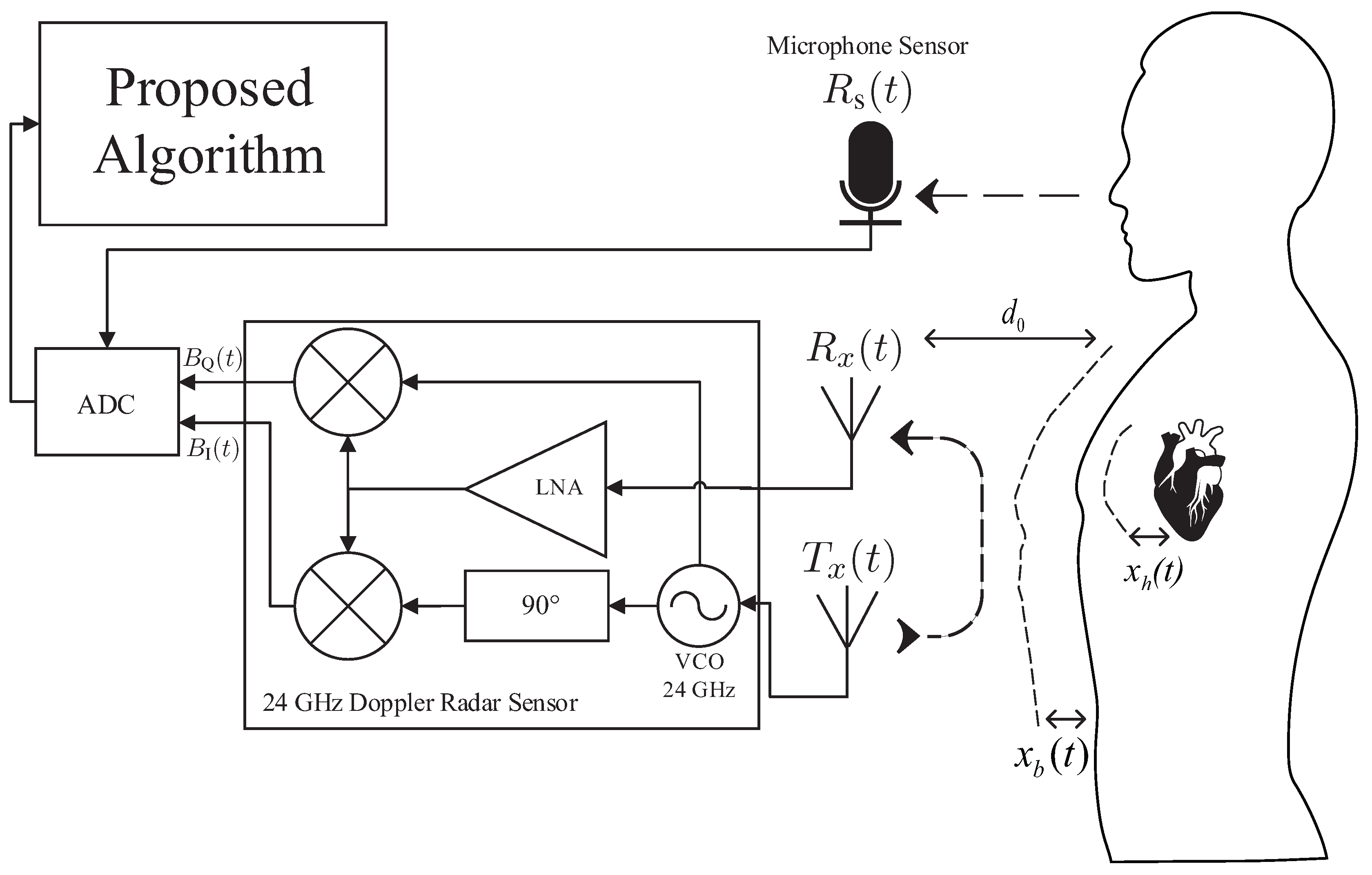

2. Proposed Sleep Stage Analysis Algorithm

2.1. Pre-Processing of the Radar and Sound Signal

2.2. Step 1: Feature Extraction

2.2.1. Forty-Two Statistical Features of the Radar Signal

2.2.2. Two Spectral Features of the Radar Signal

2.2.3. Skewness and Kurtosis Features of the Radar Signal

2.2.4. Three Singular Value Decomposition Features of the Radar Signal

2.2.5. Three Principal Component Analysis Features of the Radar Signal

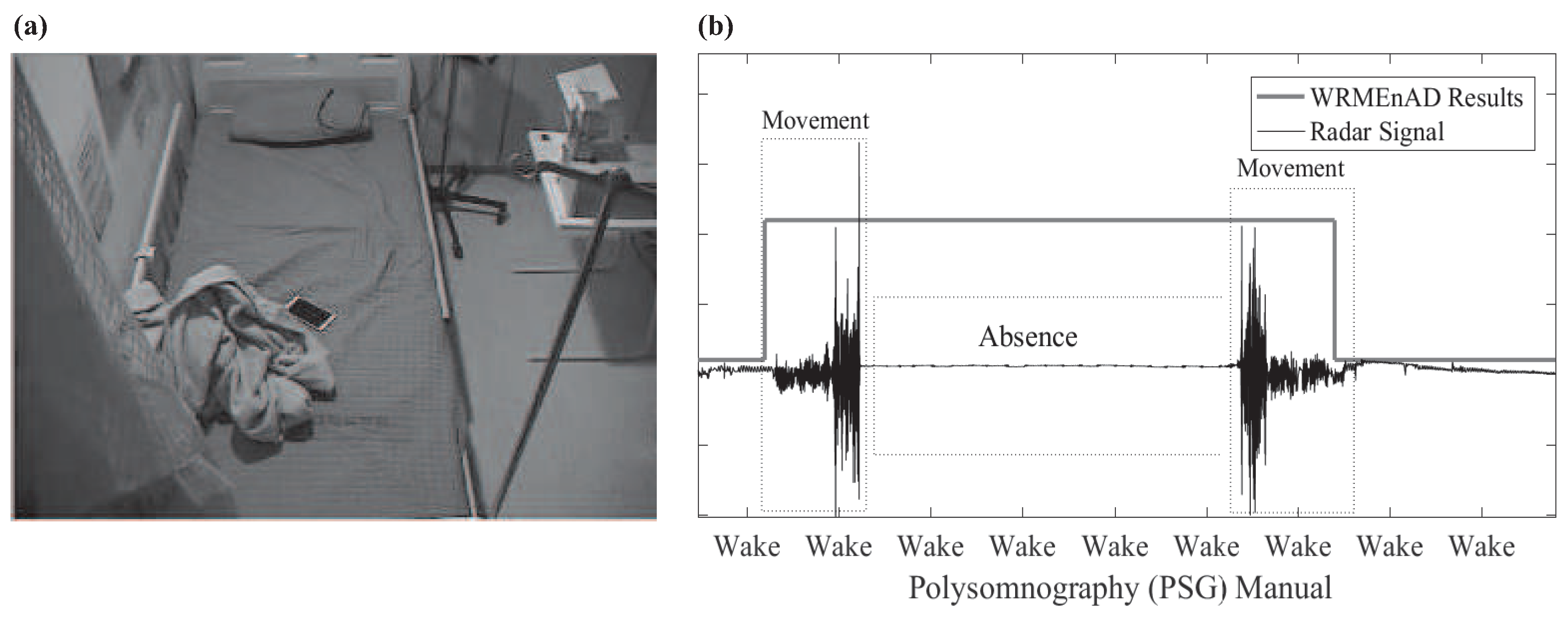

2.2.6. Wake Related Movement Existence and Absence Detection Flag

2.2.7. Sound Features

2.3. Step 2: Random Forest Classification and Final Decision Preparation

2.3.1. Wake/Sleep Random Forest Classification

2.3.2. Personal-Adjusted Threshold

2.3.3. Snore Event-Based Context-Awareness Method

2.3.4. NREM/REM Random Forest Classification

2.4. Step 3: Post-Processing and Final Sleep Stage Decision

2.4.1. Final Sleep Stage Decision

2.4.2. Post-Processing 1: Sound-Based Heuristic Wake Period Detection

2.4.3. Post-Processing 2: Heuristic Knowledge-Based REM Outlier Elimination

3. Experiments and Results

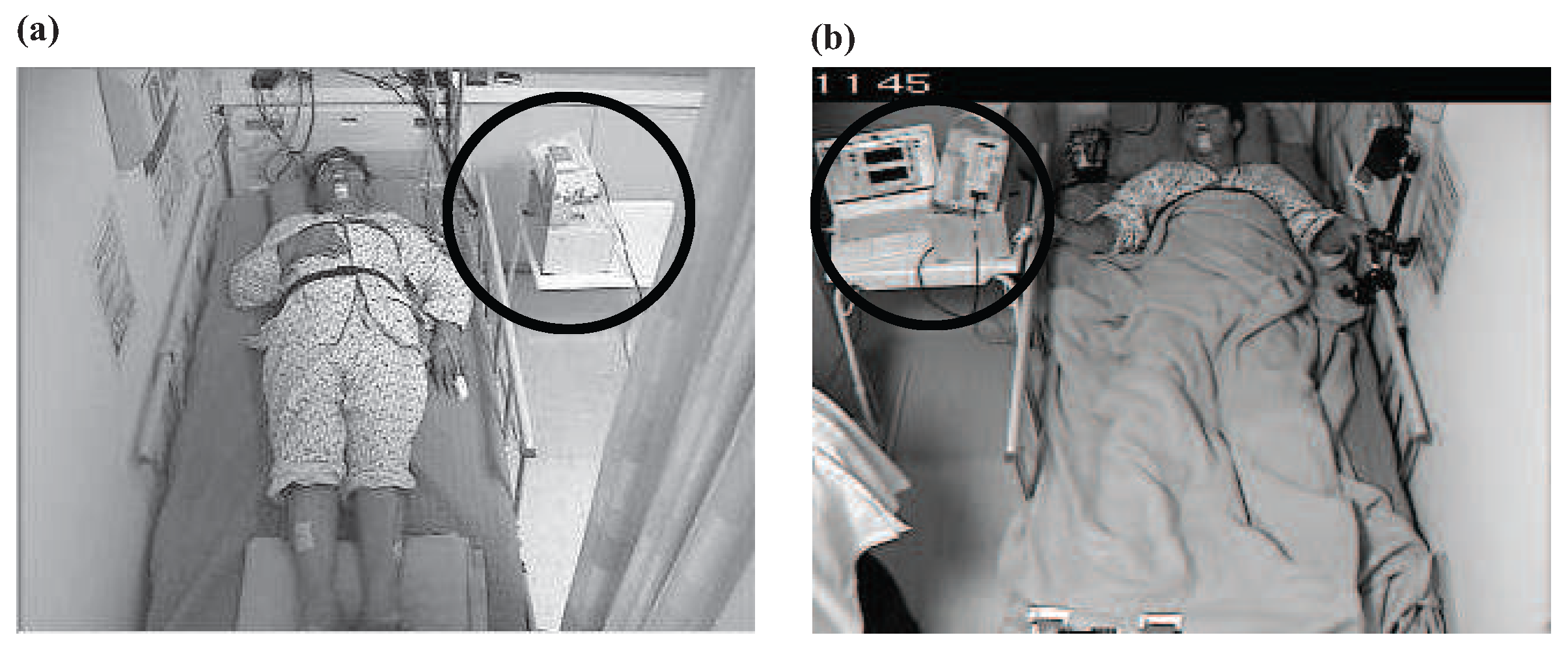

3.1. Experiment

3.1.1. Subjects

3.1.2. Polysomnography

3.1.3. Experimental Environment

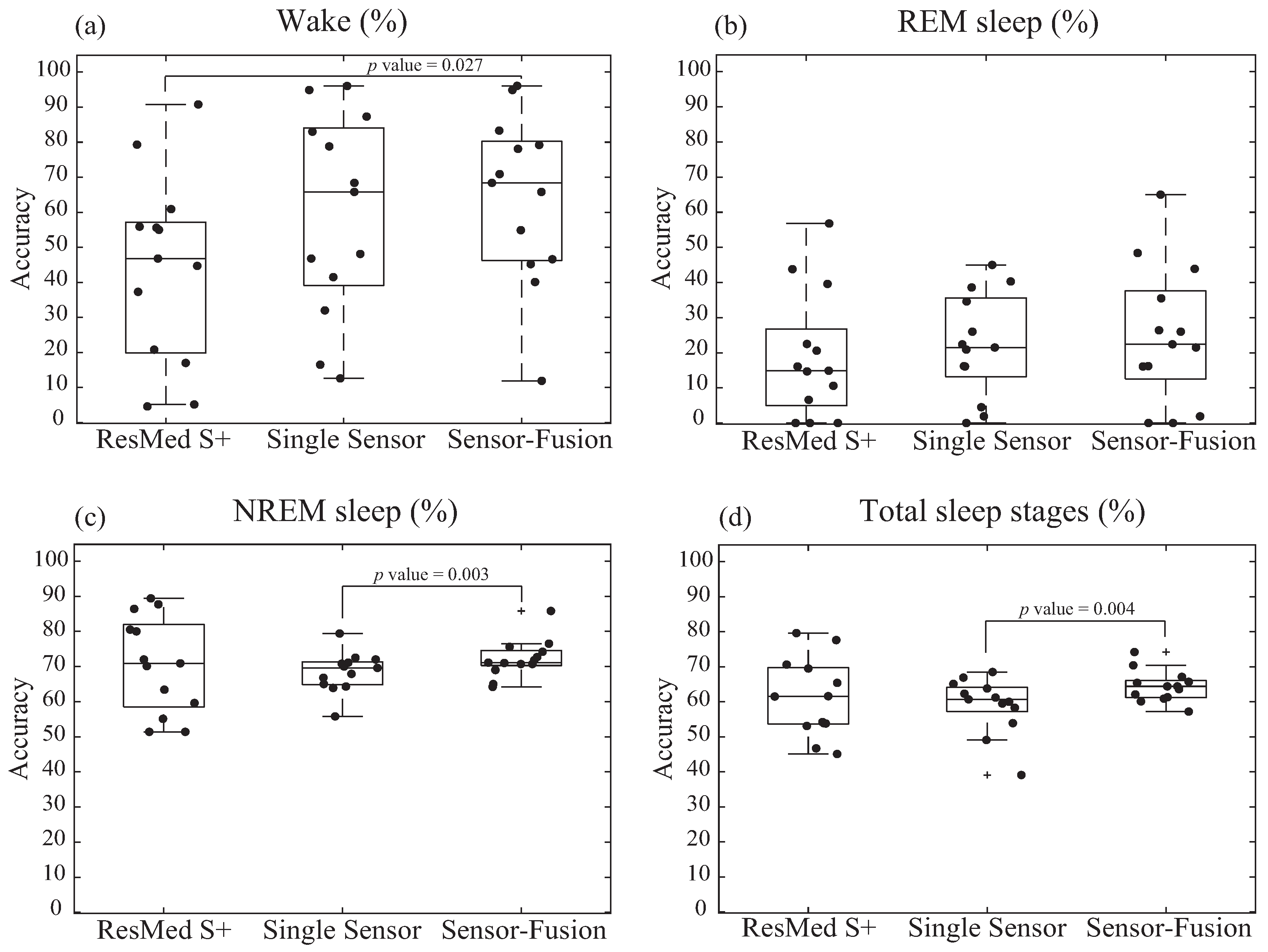

3.2. Results

3.2.1. Data Analysis

3.2.2. Diagnostic Accuracy of Sleep Stages Tested by Noncontact Devices

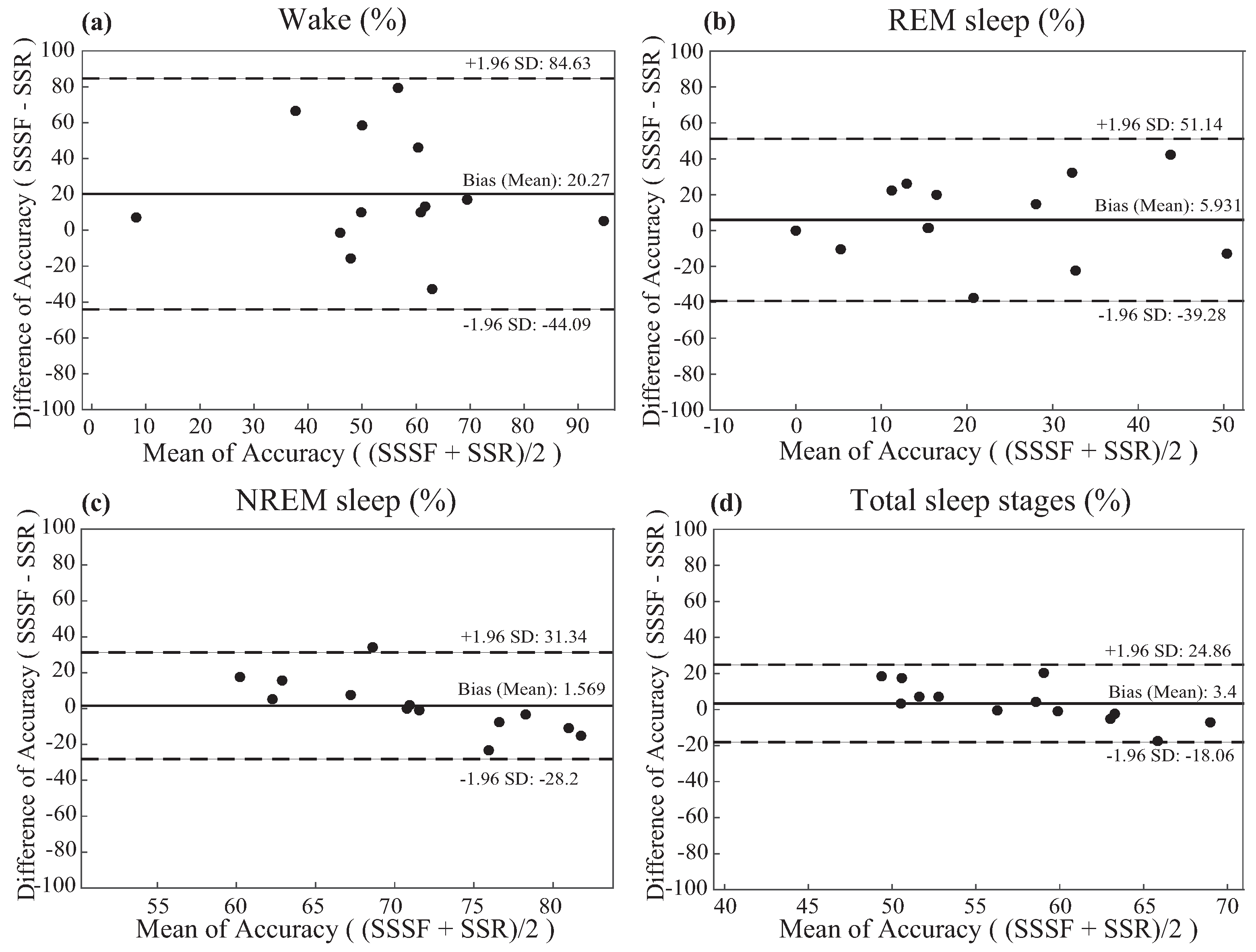

3.2.3. Bland and Altman Analysis of the Results

4. Discussion and Limitation

4.1. Discussion

4.2. Limitation

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Ethical Statements


| | Training (11 Patients) | Test (13 Patients) | p-Value |
|---|---|---|---|
| Baseline Information | | | |
| Age (years) | 43.45 ± 13.06 | 40.27 ± 16.3 | 0.35 |
| Sex (male %) | 81.8% | 92.3% | 0.24 |
| Body Mass Index (BMI) (kg/m²) | 24.75 ± 3.76 | 26.9 ± 4.1 | 0.11 |
| Medication Status (%) | 63.64% | 46.15% | 0.13 |
| Epworth Sleepiness Scale (ESS) | 8.2 ± 3.89 | 5.54 ± 2.74 | 0.07 |
| Pittsburgh Sleep Quality Index (PSQI) | 8.9 ± 4.37 | 6.73 ± 3.39 | 0.13 |
| PSG Information | | | |
| Total Sleep Time (min) | 312.95 ± 38.57 | 298.05 ± 56.37 | 0.21 |
| Sleep Efficiency (%) | 80.67 ± 6.73 | 79.71 ± 11.15 | 0.15 |
| Deep Sleep (%) | 0.92 ± 2.63 | 3.91 ± 6.38 | 0.09 |
| REM (%) | 17.11 ± 5.94 | 13.76 ± 5.81 | 0.13 |
| Arousal Index (events/h) | 38.85 ± 18.6 | 41.59 ± 28.61 | 0.49 |
| Snoring (%) | 51.44 ± 32.04 | 47.51 ± 22.04 | 0.49 |
| AHI (events/h) | 26.75 ± 22.92 | 44.27 ± 32.95 | 0.1 |
| RDI (events/h) | 30.76 ± 23.48 | 47.96 ± 32.31 | 0.11 |

| Patient # | ResMed S+: Wake | ResMed S+: REM | ResMed S+: NREM | ResMed S+: Total Accuracy | Proposed (Single-Sensor): Wake | Proposed (Single-Sensor): REM | Proposed (Single-Sensor): NREM | Proposed (Single-Sensor): Total Accuracy | Proposed (Sensor-Fusion): Wake | Proposed (Sensor-Fusion): REM | Proposed (Sensor-Fusion): NREM | Proposed (Sensor-Fusion): Total Accuracy |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| P1 | 20.8% | 43.8% | 72.0% | 61.6% | 83.0% | 21.5% | 71.1% | 61.2% | 79.2% | 21.5% | 71.1% | 60.9% |
| P2 | 4.5% | 22.5% | 80.0% | 53.9% | 32.0% | 45.0% | 65.0% | 53.9% | 70.9% | 65.0% | 76.5% | 74.2% |
| P3 | 55.6% | 14.7% | 51.4% | 45.1% | 41.5% | 16.1% | 79.4% | 60.0% | 40.1% | 16.1% | 85.8% | 63.6% |
| P4 | 37.3% | 56.8% | 89.4% | 77.6% | 87.3% | 38.6% | 72.0% | 68.5% | 83.3% | 43.9% | 74.2% | 70.4% |
| P5 | 17.0% | 39.6% | 87.7% | 79.6% | 96.2% | 1.9% | 64.3% | 62.3% | 96.2% | 1.9% | 64.2% | 62.1% |
| P6 | 46.8% | 20.6% | 63.4% | 54.2% | 46.8% | 34.6% | 70.0% | 60.7% | 45.2% | 35.5% | 71.0% | 61.3% |
| P7 | 4.6% | 16.1% | 86.4% | 70.6% | 16.5% | 40.3% | 66.8% | 58.3% | 11.9% | 48.4% | 75.6% | 65.4% |
| P8 | 55.0% | 14.9% | 70.1% | 61.5% | 68.4% | 16.2% | 70.8% | 65.1% | 68.4% | 16.2% | 71.8% | 65.7% |
| P9 | 79.3% | 6.6% | 51.4% | 53.8% | 48.1% | 20.9% | 67.9% | 49.1% | 46.6% | 26.4% | 69.0% | 57.2% |
| P10 | 55.9% | 0.0% | 80.5% | 69.5% | 65.8% | 22.4% | 72.5% | 66.9% | 65.8% | 22.4% | 72.7% | 67.1% |
| P11 | 44.7% | 0.0% | 59.6% | 53.1% | 12.6% | 0.0% | 55.8% | 39.1% | 54.9% | 0.0% | 65.0% | 60.1% |
| P12 | 92.1% | 10.6% | 70.9% | 65.4% | 100.0% | 4.5% | 63.9% | 59.5% | 97.4% | 0.0% | 70.7% | 64.4% |
| P13 | 60.9% | 0.0% | 55.1% | 46.7% | 78.8% | 26.0% | 69.6% | 63.8% | 78.1% | 26.0% | 70.7% | 64.4% |
| Total Average | 43.8% | 21.5% | 71.9% | 60.9% | 59.8% | 22.2% | 68.4% | 59.2% | 60.0% | 26.0% | 71.9% | 64.4% |
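As a quick arithmetic check on the "Total Average" row, the per-patient total accuracies in the table above can be averaged directly. The sketch below assumes the reported averages are simple unweighted means over the 13 test patients, which the table itself does not state; the helper name `unweighted_means` is hypothetical and used only for illustration.

```python
from statistics import mean

# Per-patient total accuracy (%) for P1..P13, copied from the table above.
total_accuracy = {
    "ResMed S+": [61.6, 53.9, 45.1, 77.6, 79.6, 54.2, 70.6,
                  61.5, 53.8, 69.5, 53.1, 65.4, 46.7],
    "Single-Sensor": [61.2, 53.9, 60.0, 68.5, 62.3, 60.7, 58.3,
                      65.1, 49.1, 66.9, 39.1, 59.5, 63.8],
    "Sensor-Fusion": [60.9, 74.2, 63.6, 70.4, 62.1, 61.3, 65.4,
                      65.7, 57.2, 67.1, 60.1, 64.4, 64.4],
}

# "Total Average" row as reported in the table.
reported = {"ResMed S+": 60.9, "Single-Sensor": 59.2, "Sensor-Fusion": 64.4}


def unweighted_means(columns):
    """Return the plain per-patient mean for each method (assumed averaging rule)."""
    return {name: mean(values) for name, values in columns.items()}


if __name__ == "__main__":
    for name, value in unweighted_means(total_accuracy).items():
        gap = value - reported[name]
        print(f"{name}: recomputed={value:.2f}%  reported={reported[name]:.1f}%  gap={gap:+.2f}")
```

Under that assumption, the recomputed means agree with the reported row to within about 0.1 percentage points. The small gaps are consistent with the per-patient values having been rounded before tabulation, although an epoch-weighted average cannot be ruled out from the table alone.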

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Chung, K.-y.; Song, K.; Shin, K.; Sohn, J.; Cho, S.H.; Chang, J.-H. Noncontact Sleep Study by Multi-Modal Sensor Fusion. Sensors 2017, 17, 1685. https://doi.org/10.3390/s17071685