Rapid Global Calibration Technology for Hybrid Visual Inspection System

Abstract

1. Introduction

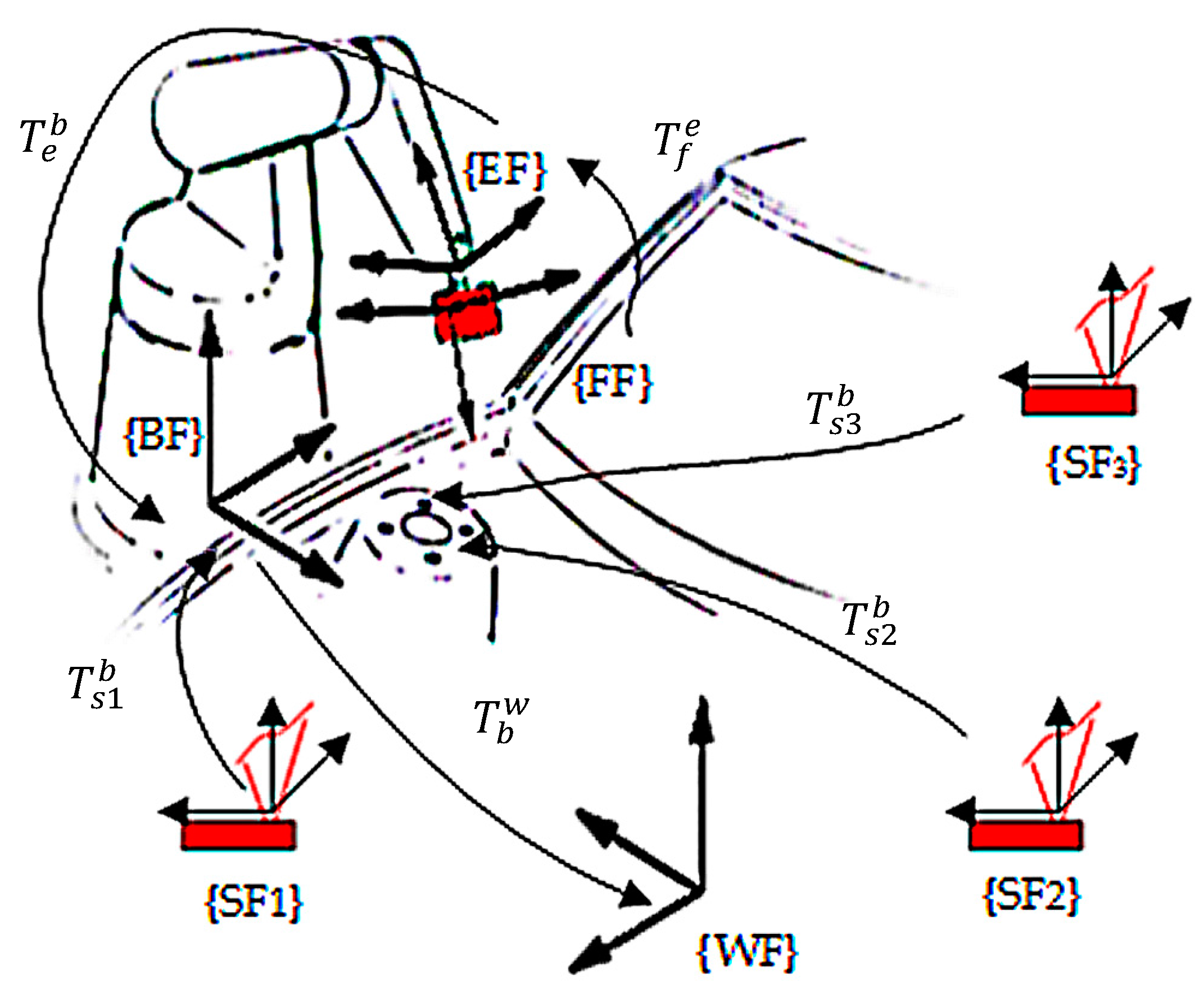

2. Principle of the Hybrid Visual Inspection System

2.1. System Principle

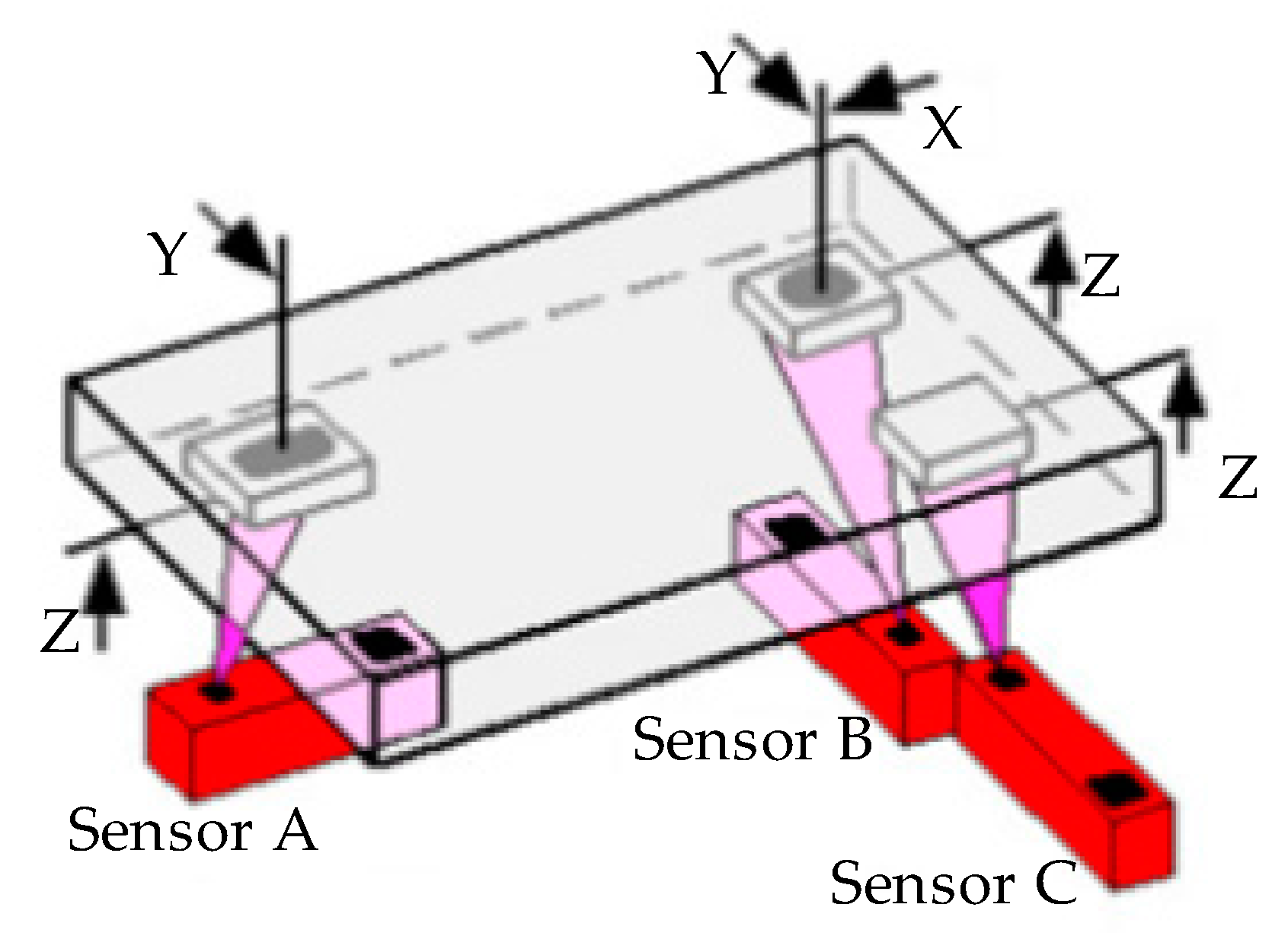

2.2. Reference Point System (RPS) and Workpiece Frame Definition

- (3) Locating points in the Z axis (plane), which measure Z translation and rotation about the X and Y axes:
    - Sensor A: slot center Z value
    - Sensor B: hole center Z value
    - Sensor C: range Z value
- (2) Locating points in the Y axis (line), which measure Y translation and rotation about the Z axis:
    - Sensor A: slot center Y value
    - Sensor B: hole center Y value
- (1) Locating point in the X axis (point), which measures X translation:
    - Sensor B: hole center X value
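The 3-2-1 layout above supplies six measured values, enough to fix all six rigid-body degrees of freedom of the workpiece. The following Python sketch illustrates that idea. It assumes a small-angle model in which each locating point p is displaced by d = t + ω × p, stacks the three Z, two Y and one X deviations into a 6 × 6 linear system, and solves for the translation t = (tx, ty, tz) and the small rotation ω = (ωx, ωy, ωz). The function names (`rps_matrix`, `solve_rps`) and the locating-point coordinates are hypothetical. This illustrates the locating principle only and is not the calibration procedure of this paper.

```python
"""Sketch (hypothetical geometry): recover a small rigid-body displacement
of the workpiece frame from the six 3-2-1 locating measurements."""

from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def z_row(p: Point) -> List[float]:
    # dz = tz + wx*py - wy*px   (small-angle model d = t + w x p)
    x, y, _ = p
    return [0.0, 0.0, 1.0, y, -x, 0.0]


def y_row(p: Point) -> List[float]:
    # dy = ty + wz*px - wx*pz
    x, _, z = p
    return [0.0, 1.0, 0.0, -z, 0.0, x]


def x_row(p: Point) -> List[float]:
    # dx = tx + wy*pz - wz*py
    _, y, z = p
    return [1.0, 0.0, 0.0, 0.0, z, -y]


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting for a small square system."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[piv][col]) < 1e-12:
            raise ValueError("degenerate locating-point layout")
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    u = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * u[c] for c in range(r + 1, n))
        u[r] = (m[r][n] - s) / m[r][r]
    return u


def rps_matrix(a: Point, b: Point, c: Point) -> List[List[float]]:
    # Row order: Z(A), Z(B), Z(C), Y(A), Y(B), X(B), matching the 3-2-1 list.
    return [z_row(a), z_row(b), z_row(c), y_row(a), y_row(b), x_row(b)]


def solve_rps(a: Point, b: Point, c: Point, meas: Sequence[float]) -> List[float]:
    """Return [tx, ty, tz, wx, wy, wz] (mm, rad) from the six deviations."""
    return solve(rps_matrix(a, b, c), list(meas))


if __name__ == "__main__":
    A, B, C = (-500.0, 0.0, 0.0), (500.0, 0.0, 0.0), (0.0, 400.0, 0.0)  # mm
    true_u = [0.1, -0.2, 0.05, 1e-4, -2e-4, 3e-4]
    meas = [sum(r * u for r, u in zip(row, true_u)) for row in rps_matrix(A, B, C)]
    print(solve_rps(A, B, C, meas))  # approximately equal to true_u
```

Under this model the Y rows also contain a −ωx·pz term and the X row contains ωy·pz − ωz·py, so the six unknowns are solved jointly rather than axis by axis. These coupling terms vanish when the locating points lie in the z = 0 plane, as in the example. Three collinear Z points make the system singular, which the sketch reports as a `ValueError`.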

3. Rapid Global Calibration Method

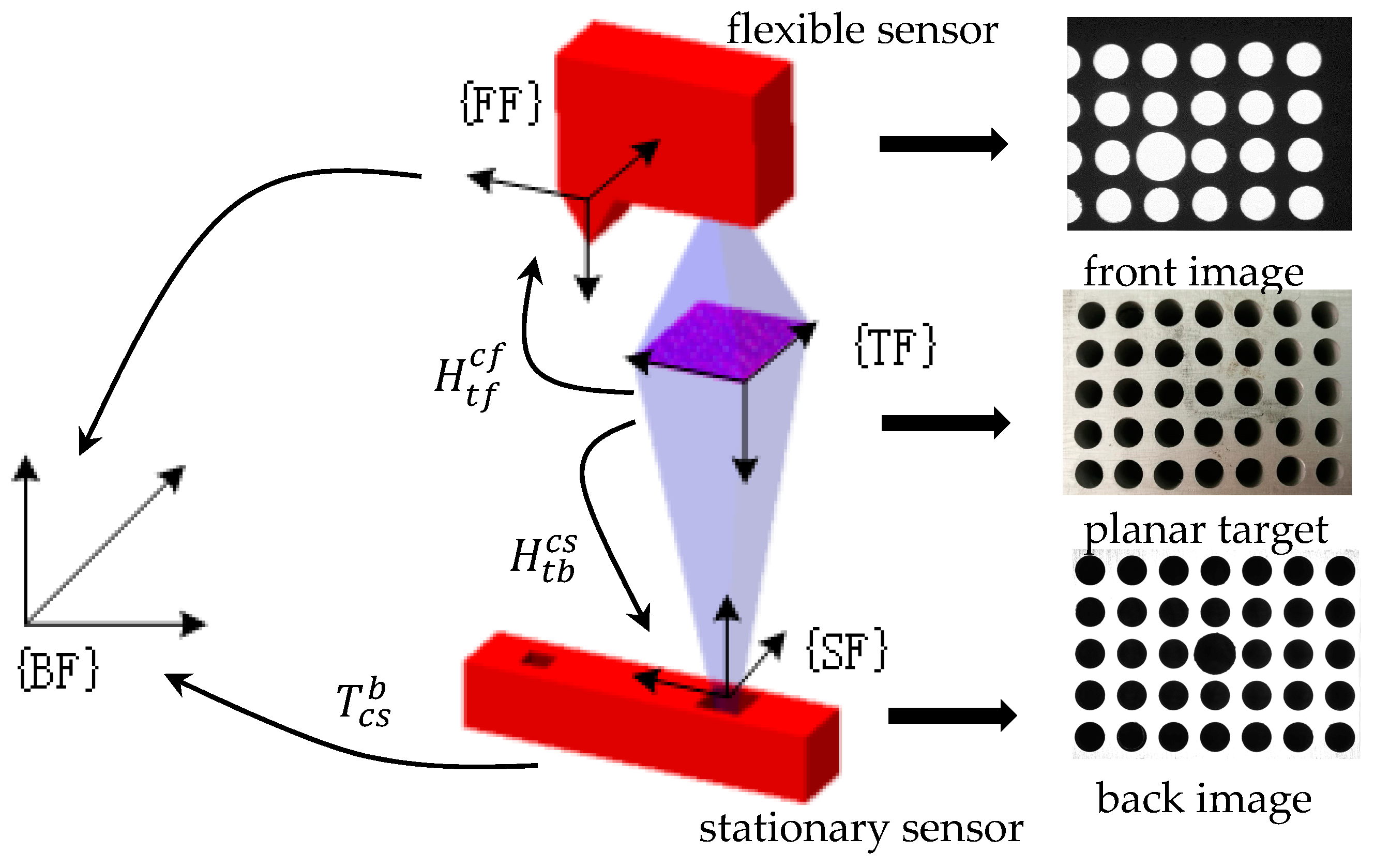

3.1. Flexible Sensor Global Calibration

3.2. Stationary Sensors Global Calibration

3.2.1. Camera Pinhole Model and Homography

3.2.2. Principle of Stationary Sensor Global Calibration

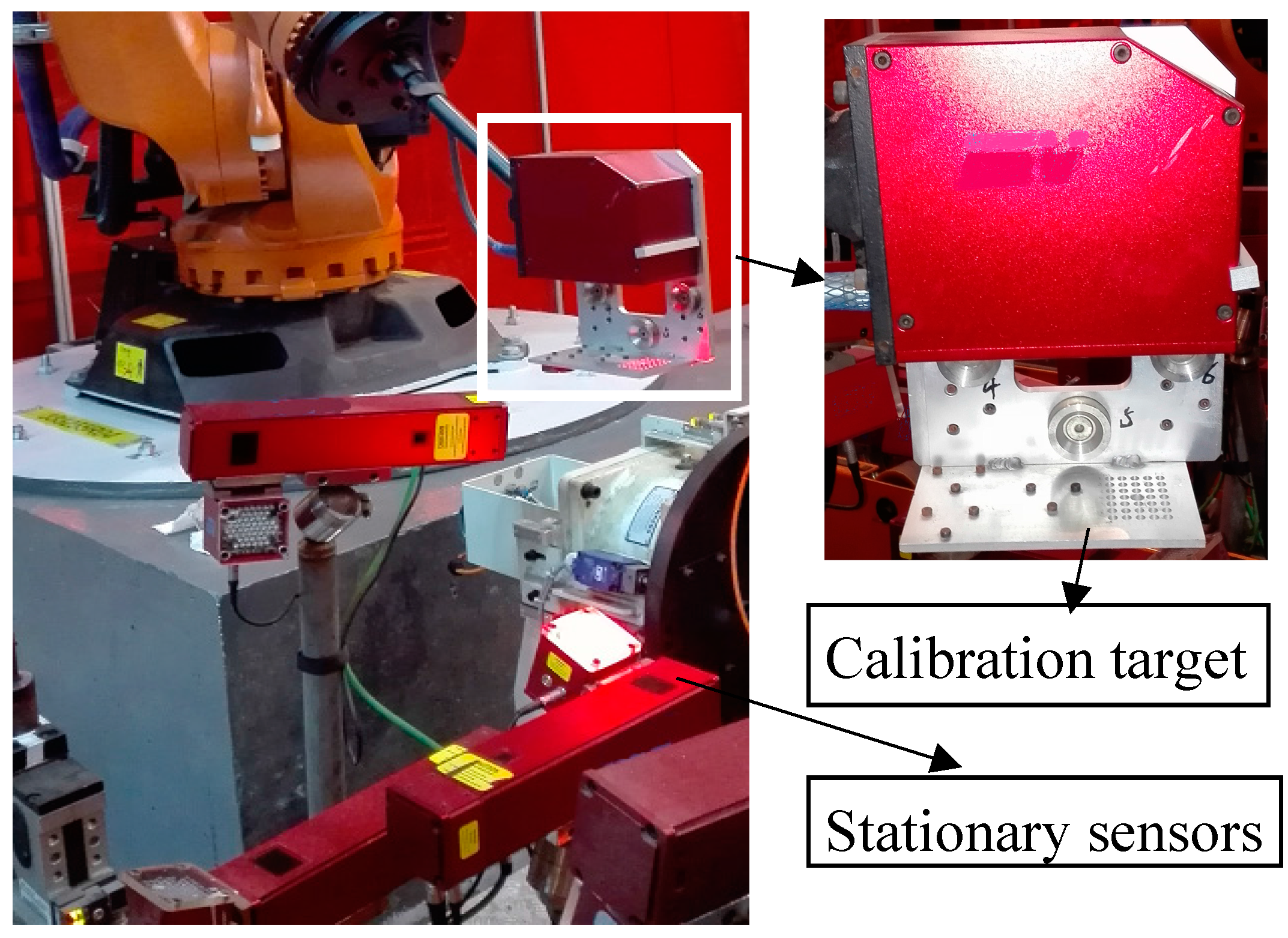

4. Experiment and Analysis

4.1. Experiment Setup

4.2. Calibration Results

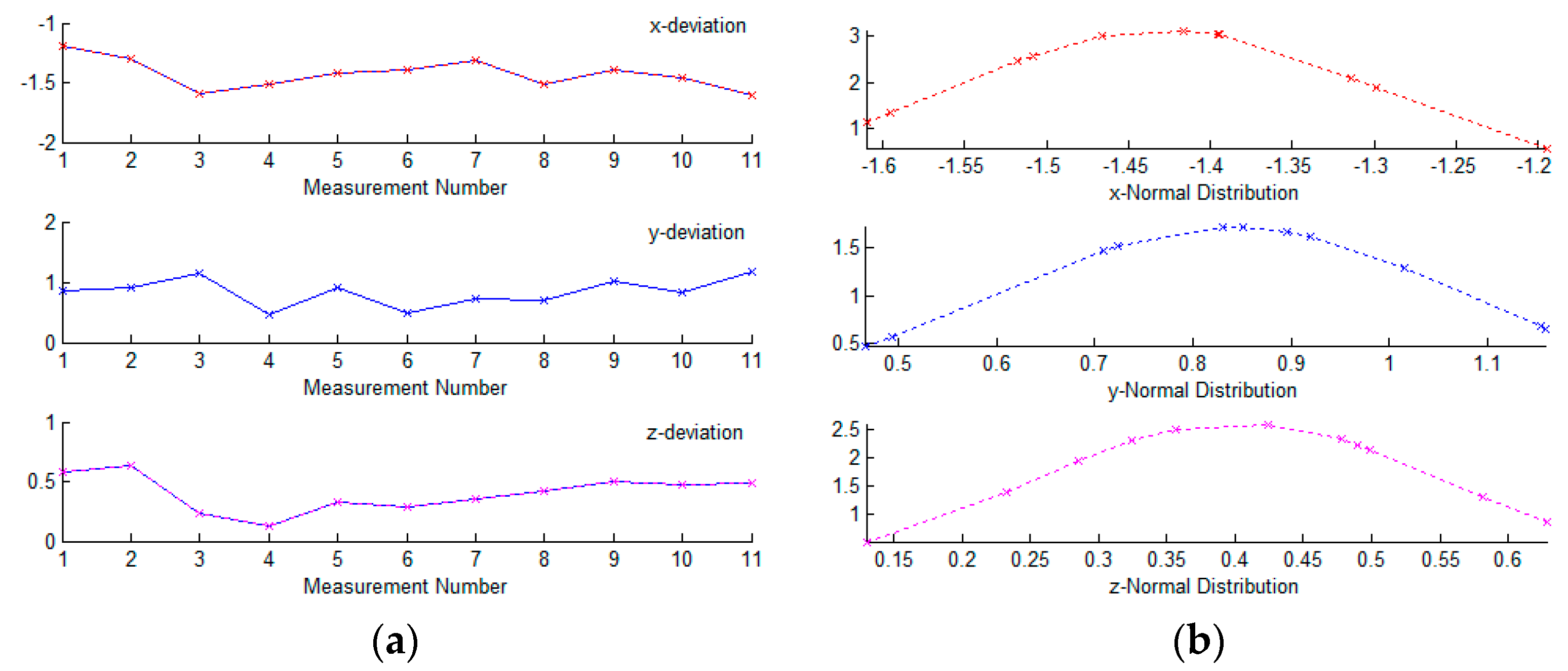

4.3. Accuracy Validation Experiments

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Deviation range (mm) | Count | Percentage | Correlation coefficient range | Count | Percentage |
|---|---|---|---|---|---|
| [−0.1, 0.1] | 37 | 21.26% | > 0.9 | 28 | 16.09% |
| [−0.2, −0.1] & [0.1, 0.2] | 82 | 47.13% | [0.8, 0.9] | 58 | 33.33% |
| [−0.3, −0.2] & [0.2, 0.3] | 49 | 28.16% | [0.7, 0.8] | 63 | 36.21% |
| [−0.5, −0.3] & [0.3, 0.5] | 6 | 3.45% | [0.6, 0.7] | 10 | 5.74% |
| < −0.5 & > 0.5 | 0 | 0% | < 0.6 | 15 | 8.62% |
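Each percentage in the table is the bin count divided by the column total, and both count columns sum to 174. The following short Python check recomputes the percentages from the counts. The constant and function names are illustrative only.

```python
# Recompute the Percentage columns from the Count columns of the table.
DEVIATION_COUNTS = [37, 82, 49, 6, 0]      # deviation bins, top to bottom
CORRELATION_COUNTS = [28, 58, 63, 10, 15]  # correlation-coefficient bins, top to bottom


def percentages(counts):
    """Return each bin's share of the column total, in percent, rounded to 2 decimals."""
    total = sum(counts)
    return [round(100.0 * c / total, 2) for c in counts]


if __name__ == "__main__":
    print(sum(DEVIATION_COUNTS), percentages(DEVIATION_COUNTS))
    print(sum(CORRELATION_COUNTS), percentages(CORRELATION_COUNTS))
```

Rounding to two decimals reproduces every listed value except the [0.6, 0.7] bin, which evaluates to 5.75% (10/174) where the table lists 5.74%.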

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Liu, T.; Yin, S.; Guo, Y.; Zhu, J. Rapid Global Calibration Technology for Hybrid Visual Inspection System. Sensors 2017, 17, 1440. https://doi.org/10.3390/s17061440
