1. Introduction

Image denoising is an indispensable part of digital image processing on account of the continuous need for high-quality images. When images are obtained by a sensor system, noise inevitably arises in the images. This noise can be amplified by electronic sensor gain and by any post-processing that increases the intensity range. Therefore, noise suppression must be conducted in advance. A denoising algorithm is necessary to improve image quality as well as to provide rectified input images for further processing in tasks such as segmentation, feature extraction, and texture analysis.

Many denoising filters have been presented in the literature. In the spatial domain, patch-based approaches have become very popular in recent years. The nonlocal means (NLM) filter is one of the most renowned denoising methods [1]. As an averaging-based filter that directly smooths pixel values in the spatial domain, the NLM filter is an effective denoising method. It computes the weight of each neighboring pixel from the similarity between the patch centered on the pixel being denoised and the patch centered on that neighbor. The nonlocal property of images (self-similarity) has also been adopted for denoising methods based on patch-based difference (PBD) refinement [2]. An improved patch-based denoising method, the block-matching and 3D filtering (BM3D) algorithm, was presented in [3].
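The patch-based weighting described above can be illustrated with a minimal sketch of a basic NLM filter for a single pixel of a grayscale image. This is not the implementation from [1]; the function name, the patch and search-window radii, the smoothing parameter `h`, and the use of the mean squared patch difference are assumptions chosen for illustration.

```python
import numpy as np

def nlm_denoise_pixel(img, row, col, patch_radius=1, search_radius=3, h=10.0):
    """Denoise one pixel of a 2-D grayscale image with a basic nonlocal means filter.

    All parameter names and default values are illustrative assumptions.
    """
    pad = patch_radius
    # Reflect-pad so that patches near the image border are fully defined.
    padded = np.pad(np.asarray(img, dtype=np.float64), pad, mode="reflect")

    def patch(r, c):
        # Patch centered on pixel (r, c), given in original image coordinates.
        return padded[r:r + 2 * pad + 1, c:c + 2 * pad + 1]

    center = patch(row, col)
    rows, cols = img.shape
    weighted_sum = 0.0
    weight_total = 0.0
    for r in range(max(0, row - search_radius), min(rows, row + search_radius + 1)):
        for c in range(max(0, col - search_radius), min(cols, col + search_radius + 1)):
            # Patch-based difference: mean squared difference between the two patches.
            pbd = np.mean((center - patch(r, c)) ** 2)
            # Similar patches (small difference) receive weights close to 1.
            weight = np.exp(-pbd / (h * h))
            weighted_sum += weight * float(img[r, c])
            weight_total += weight
    # The center patch always contributes weight 1, so the total is never zero.
    return weighted_sum / weight_total
```

Because the output is a normalized weighted average, it always lies between the smallest and largest pixel values in the search window; a larger `h` flattens the weights and smooths more strongly.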

To restore degraded image sequence data, denoising methods extended to the temporal domain have also been presented in recent years [4,5,6,7,8,9,10,11]. In the denoising process for monochromatic data, explicit motion representation refers to the detection of the true motion trajectories of a physical point via motion estimation and compensation. Many temporal filters, such as the locally adaptive linear minimum-mean-squared-error spatio-temporal filter (3D-LLMMSE) [4] and the multi-hypothesis motion-compensated filter (MHMCF) [5,6], adopt this approach. More recently, motion-compensated denoising algorithms based on a 3D spatiotemporal volume for random and fixed-pattern noise [10] and on regularized optical flow [11] were presented. This motion-compensated filtering approach can be effective in preventing motion blurring during video denoising.

Furthermore, the denoising algorithm can be used with a color filter array (CFA) pattern, which is found in most cameras that use single sensors to obtain color images. The CFA pattern usually has three alternating color channels. Because each color channel shares a sensor plane at the sacrifice of the under-sampled spatial resolution in each channel, demosaicing must be performed to obtain a full resolution image in each channel [

12,

13,

14,

15,

16,

17]. The most renowned CFA is the Bayer pattern [18], in which the green channel occupies half of all pixels and the red and blue channels occupy a quarter each. Recently, several other CFA patterns have been developed with the objective of enhancing sensitivity [

19,

20,

21,

22]. In addition, demosaicing methods for various CFA patterns have been presented recently [

23,

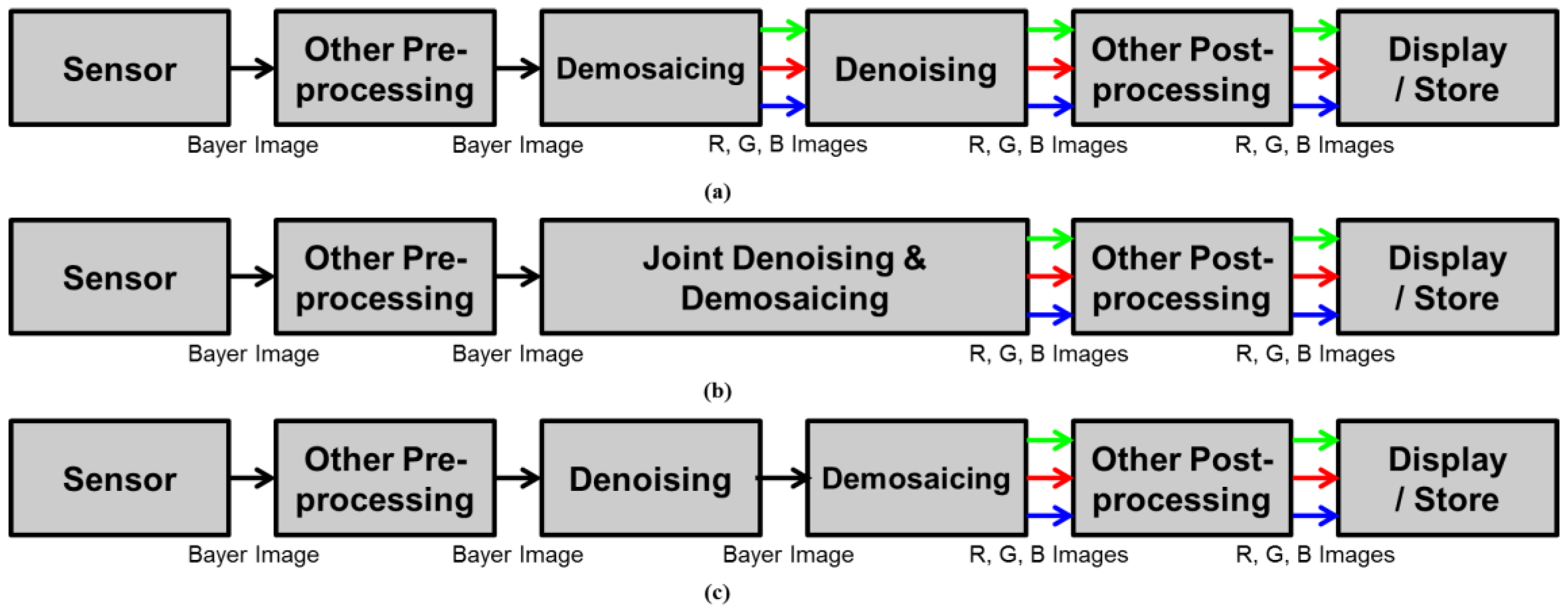

24]. The denoising filter is typically applied separately after demosaicing for the three color channels, as shown in

Figure 1a. In this case, there is no need to consider the under-sampled resolution of each channel. However, demosaicing changes the statistical characteristics of the noise and introduces spatial correlations [

25]. Moreover, demosaicing relies on chromatic aberration, which influences the spatial and spectral correlation through artifacts such as blur and mis-registration [

26]. Thus, the noise spreads spatially, which makes the denoising task after demosaicing more complicated.

As a unified estimation procedure, denoising and demosaicing are conducted simultaneously in [27,28,29], as shown in Figure 1b. These approaches try to obtain more efficient and cost-effective solutions by jointly considering denoising and demosaicing. A total least squares based filter is adopted in [27] to remove both signal-dependent and signal-independent noise and to conduct demosaicing. Denoisaicing, a portmanteau of denoising and demosaicing, is presented in [28]. In [29], total variation minimization is utilized to suppress sensor noise and demosaicing error.

Moreover, denoising for the three interpolated channels incurs high computational costs. To address these disadvantages, some denoising methods have been directly used with the CFA [

30,

31,

32], as shown in

Figure 1c. In the proposed approach, a denoising algorithm is also performed in the CFA pattern before the demosaicing process, as shown in

Figure 1c. By employing these typical structures, many demosaicing methods have been developed for the Bayer pattern [

12,

13,

14,

15,

16]. Most demosaicing algorithms are based on the assumption that the differences or ratios among the channels are smoother than the intensities of the channels, which is known as the inter-channel correlation [

12,

13,

14,

15]. In other words, the high frequencies of the red, green, and blue channels are assumed to be similar. The high frequencies among the different channels can be jointly exploited during an interpolation procedure to overcome the degraded spatial resolution caused by under-sampling. Most demosaicing algorithms consider the inter-channel correlation to suppress color artifacts and interpolation errors.
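The inter-channel correlation assumption can be illustrated numerically. The sketch below uses a synthetic one-dimensional scan line in which the red and green channels share the same high-frequency texture and differ only by a slowly varying offset; the signal model, the amplitudes, and the roughness measure are hypothetical choices for illustration, not data from the paper.

```python
import numpy as np

def gradient_energy(signal):
    """Mean squared first difference, used here as a simple roughness measure."""
    return float(np.mean(np.diff(signal) ** 2))

# Synthetic scan line (hypothetical data): both channels carry the same
# high-frequency texture on top of different, slowly varying base levels.
x = np.linspace(0.0, 1.0, 256)
texture = 20.0 * np.sin(2 * np.pi * 40 * x)
red = 120.0 + 30.0 * x + texture
green = 100.0 + 10.0 * x + texture

# Roughness of a single channel is dominated by the shared texture.
print("red channel:      ", gradient_energy(red))
# In the color difference the shared texture cancels, leaving a smooth ramp.
print("red minus green:  ", gradient_energy(red - green))
```

Under this model the color difference is much smoother than either channel, which is the property that interpolation on the under-sampled grid exploits.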

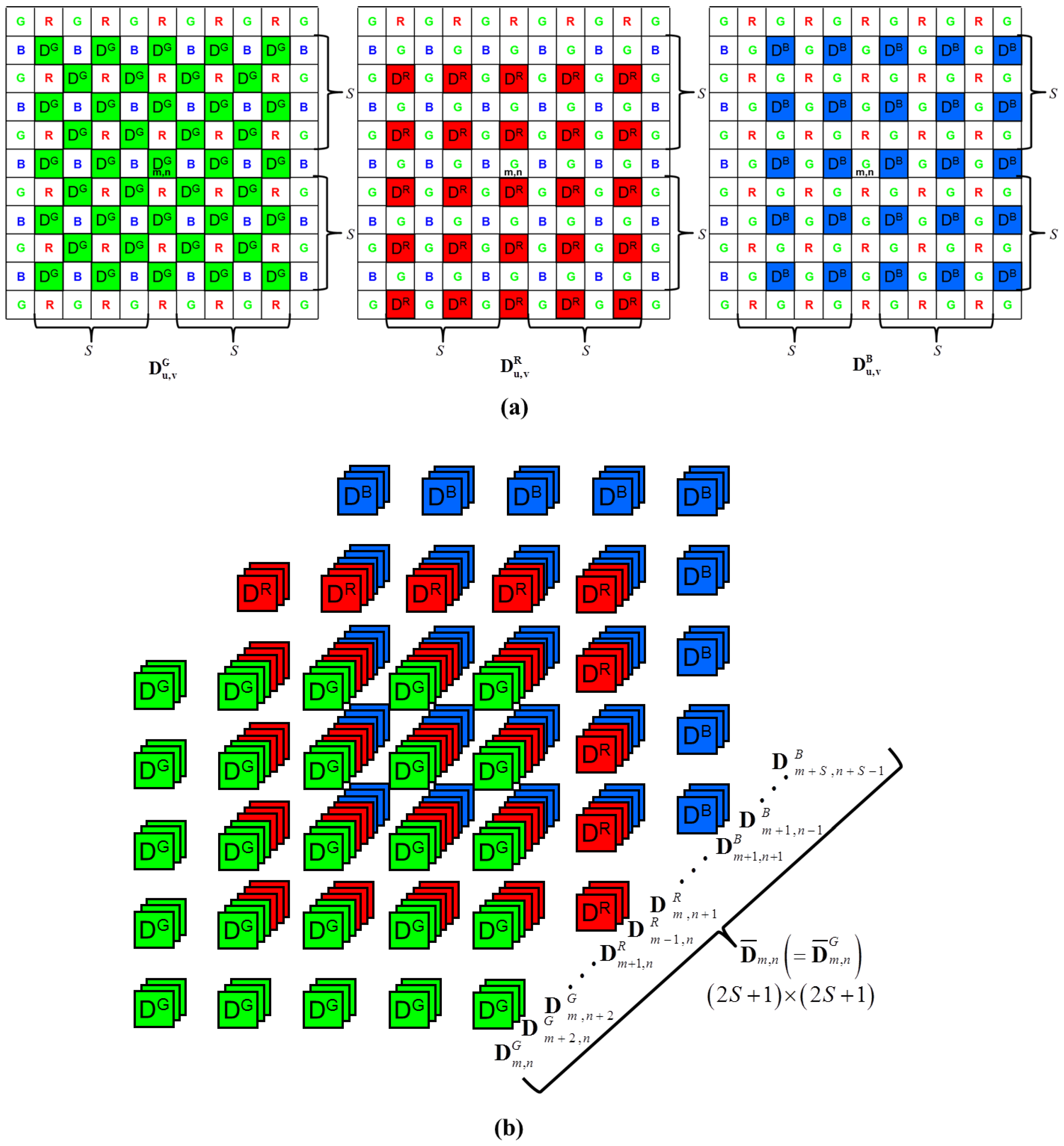

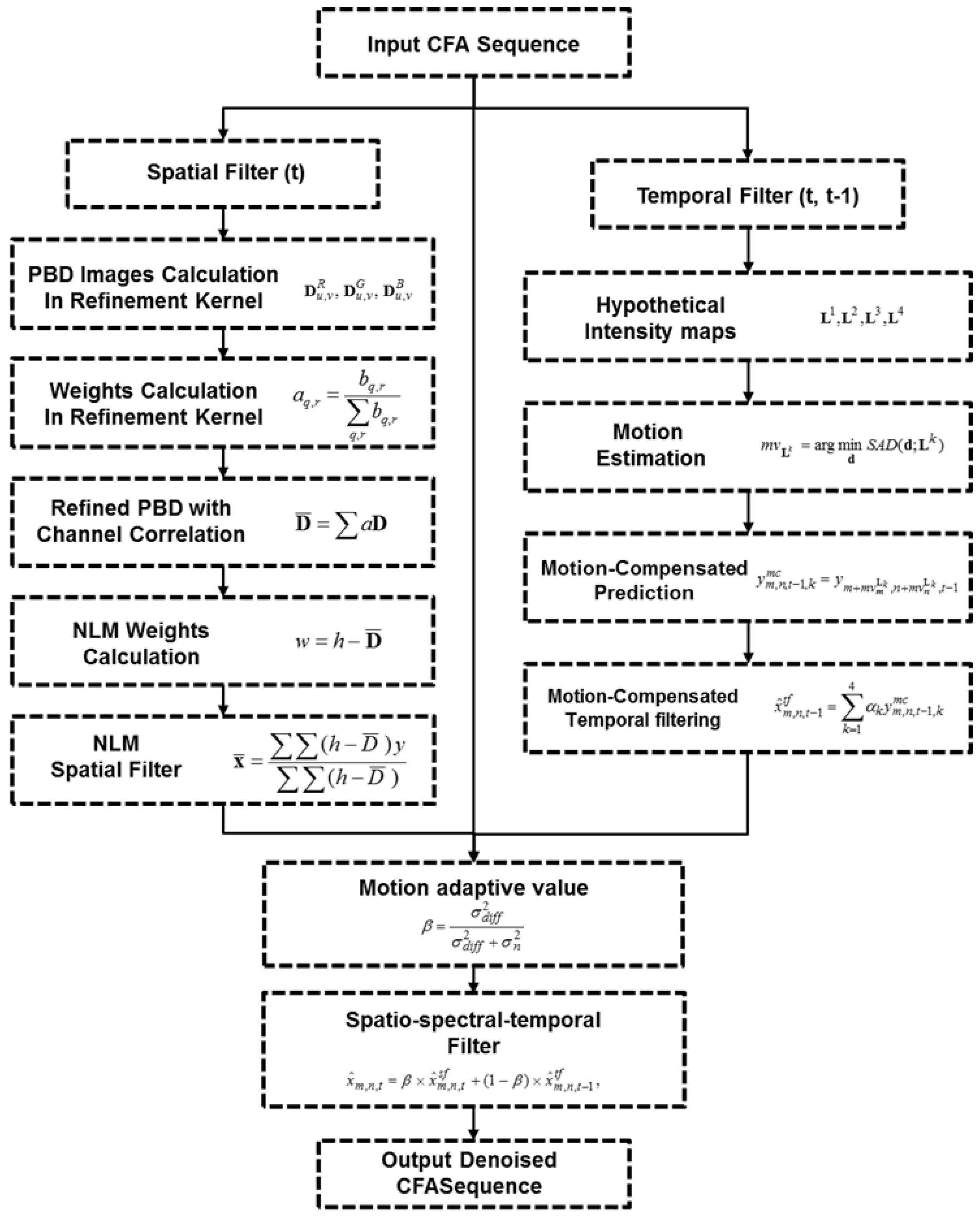

As in demosaicing, consideration of the inter-channel correlation is key to performing the denoising process in the CFA sequence. In the proposed method, the spatio-spectral-temporal correlation is considered for CFA denoising. The contribution of this paper is twofold. First, a spatial filter considering spatio-spectral correlation is proposed to effectively remove noise while preserving edge details. Denoising in the spatial domain must preserve clarity along edges and details. However, edge estimation on an alternating under-sampled grid can be very difficult. Hence, the spatio-spectral correlation must be considered in the spatial filter, and the inter-channel correlation must be analyzed to detect the edges and details in the under-sampled pattern. Owing to the alternating under-sampling of the CFA pattern, the inter-channel correlation is difficult to consider in the direct denoising process. Conventional methods that remove noise directly from the CFA do not take the inter-channel correlation into account, and spatial resolution degradation therefore occurs when they are applied to the CFA pattern. To overcome the spatial resolution degradation while removing noise, both inter-channel and intra-channel correlations are used in the direct denoising process. In the proposed spatial filter, the patch is computed from the pixel values of all the color channels, as in a monochromatic NLM filter. The PBD values of the pixel locations are obtained from the intra-channels. Then, PBD refinement is conducted by considering both the intra-channel and inter-channel correlations in the CFA pattern.

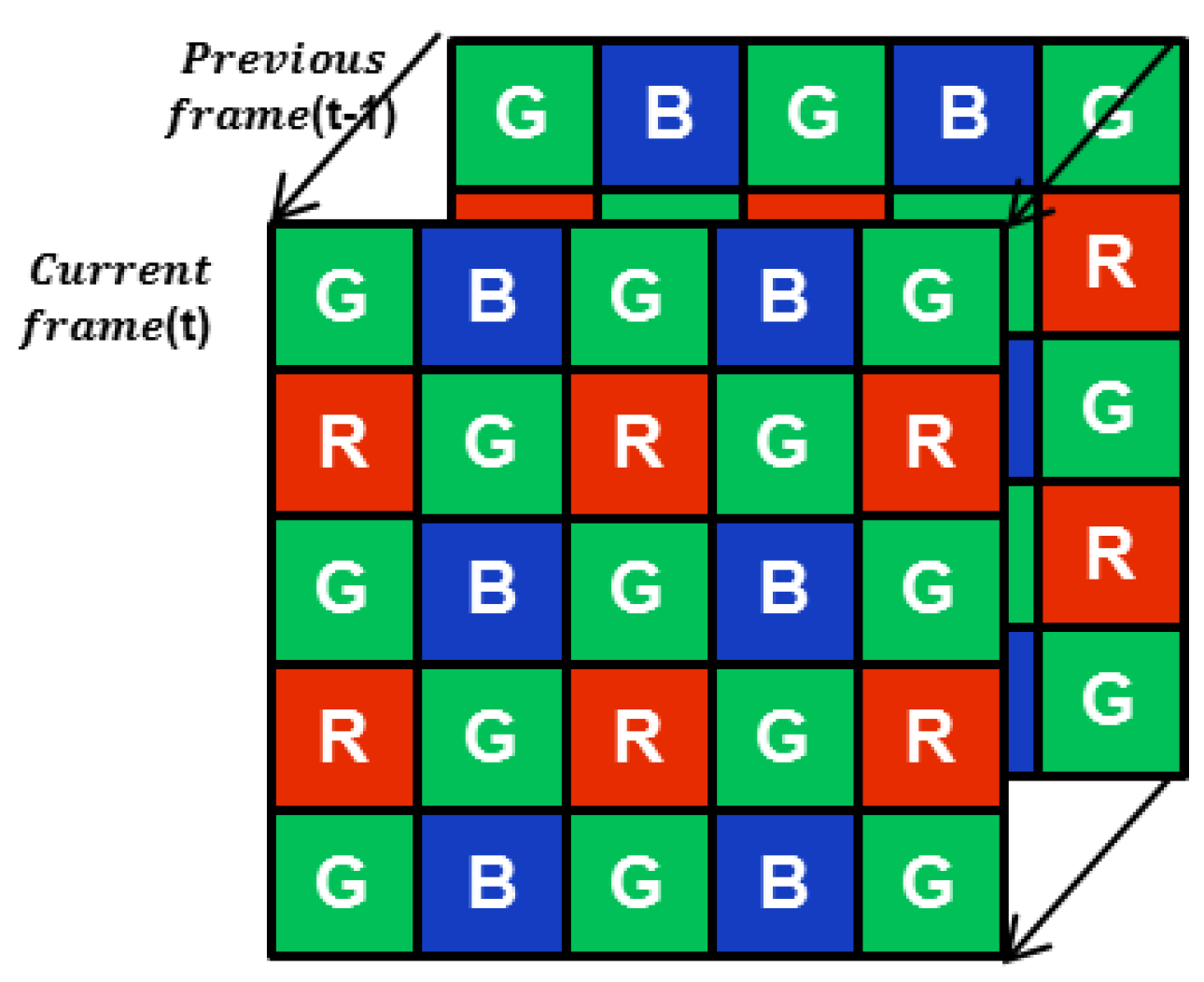

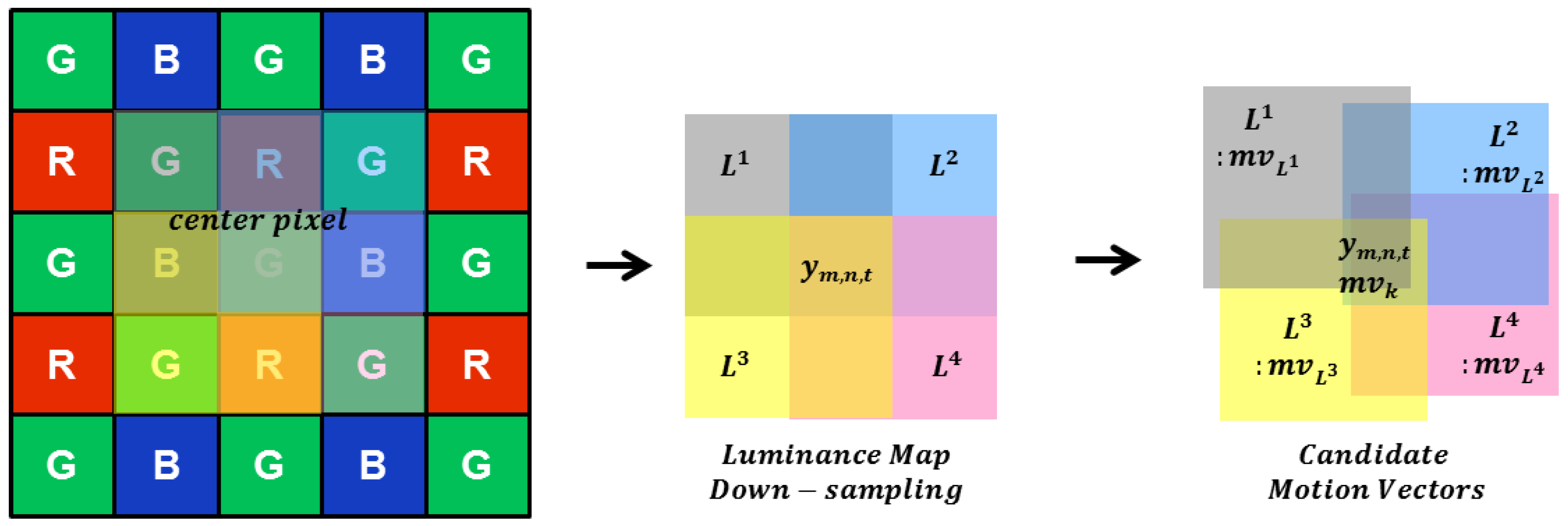

Second, a motion-compensated temporal filter is proposed that removes the noise in the temporal domain without motion artifacts and considers the tempo-spectral correlation. Most existing denoising algorithms that consider motion information have been proposed for monochromatic sequence data and are therefore difficult to apply directly in the CFA sequence. Owing to the alternating under-sampling of the CFA pattern, motion estimation and compensation are difficult to perform in temporal filtering. In the proposed temporal filter, the inter-channel correlation is considered in the motion estimation and compensation processes. To find the motion trajectory in a CFA sequence, a candidate motion vector is estimated from the CFA pattern by using hypothetical intensity maps. To consider the inter-channel correlation, these proposed hypothetical intensity maps are formed by pixels neighboring the center pixel. After determining the motion trajectories from the candidate motion vectors, the proposed motion-compensated temporal filter is directly performed on the CFA sequence. A motion adaptive spatio-spectral-temporal filter is proposed to eliminate motion blurring while maintaining the advantages of the temporal filter. It consists of a filter that operates in both the spatial and temporal domains, and the filtered results of these domains are combined using a motion adaptive value. The motion adaptive detection value is estimated by the variance of the absolute difference calculated from the CFA sequence. It adaptively controls the ratio of the results of the spatial and temporal filters. Then, the denoised CFA sequence without motion blurring is acquired.
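The motion adaptive combination of the spatial and temporal filter outputs can be sketched as follows. The text states only that the detection value is estimated from the variance of the absolute difference of the CFA sequence and that it controls the ratio of the two results; the local window, the mapping from variance to a mixing ratio, and all names and default values below are assumptions made for illustration, not the formulation used in the proposed method.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def motion_adaptive_blend(spatial, temporal, current, previous, window=5, scale=25.0):
    """Blend spatially and temporally filtered CFA frames pixel by pixel.

    `window` (odd) and `scale` are hypothetical tuning parameters.
    """
    # Co-sited pixels in consecutive CFA frames share the same color filter,
    # so the frame difference compares like with like.
    abs_diff = np.abs(np.asarray(current, dtype=np.float64)
                      - np.asarray(previous, dtype=np.float64))
    half = window // 2
    padded = np.pad(abs_diff, half, mode="reflect")
    # Local variance of the absolute difference: zero where the difference is
    # locally constant (static regions), large around moving edges.
    local_var = sliding_window_view(padded, (window, window)).var(axis=(-2, -1))
    # Assumed mapping of the variance to a mixing ratio in [0, 1).
    alpha = local_var / (local_var + scale)
    # Static regions (alpha near 0) follow the temporal filter;
    # moving regions (alpha near 1) fall back to the spatial filter.
    return (alpha * np.asarray(spatial, dtype=np.float64)
            + (1.0 - alpha) * np.asarray(temporal, dtype=np.float64))
```

With this choice, a perfectly static pair of frames returns the temporal result unchanged, and the spatial result takes over gradually as the local difference statistics indicate motion.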

The remainder of this paper is organized as follows:

Section 2 provides the background of the proposed method. In addition, we explain the proposed algorithm for use with the CFA sequence. The motivation and novelty of the proposed method are described in

Section 3. In

Section 4, the experimental results are given, including comparisons with conventional methods for test images with varying noise levels. We conclude the paper in

Section 5.

4. Experimental Results

We experimentally compared the numerical and visual performance of the proposed method with state-of-the-art denoising methods for a noisy CFA sequence. Three pipelines were considered: single-frame denoising [31] followed by demosaicing [15]; demosaicing [15] followed by video denoising (VBM3D) [9]; and joint denoising and demosaicing for video CFA data [32]. The single-frame denoising method [31] exploits characteristics of the human visual system and sensor noise statistics. In the demosaicing method [15], LMMSE filtering that considers spatial and spectral correlations is used to reconstruct a full color image. The state-of-the-art grayscale video denoising method, VBM3D [9], was applied after the demosaicing method [15]. In [32], the denoising algorithm operates in the PCA-transformed domain to suppress the CFA image noise; demosaicing is then applied to the denoised CFA data.

The performance of the proposed method was tested with a real CFA sequence captured by a Bayer pattern camera and with a well-known common video sequence used to simulate a CFA sequence, as shown in Figure 9.

The original spatial resolution of each image was 1280 × 720 pixels. The original sequence shown in Figure 9a was captured by using a CFA-patterned camera. We then evaluated the denoising methods by adding Gaussian noise to the CFA sequence. The original sequence in Figure 9b is a full color sequence. The video data can be accessed at https://media.xiph.org/video/derf/. We down-sampled the sequence using the Bayer pattern and added Gaussian noise to evaluate the denoising methods. In this experiment, we attempted to remove the effect of demosaicing and evaluate the performance of the denoising method. In addition, gamma correction was applied under the same conditions for all the cases: original, noisy, and denoised sequences.
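The simulation step (Bayer down-sampling of a full color frame followed by additive Gaussian noise) can be sketched as below. The RGGB channel layout, the function names, and the fixed random seed are assumptions; the text specifies only that the Bayer pattern and Gaussian noise were used.

```python
import numpy as np

def bayer_mosaic(rgb):
    """Sample an H x W x 3 RGB frame onto a single-plane Bayer CFA.

    An RGGB layout is assumed here; the actual layout used in the
    experiments is not stated in the text.
    """
    height, width, _ = rgb.shape
    cfa = np.empty((height, width), dtype=np.float64)
    cfa[0::2, 0::2] = rgb[0::2, 0::2, 0]  # red: even rows, even columns
    cfa[0::2, 1::2] = rgb[0::2, 1::2, 1]  # green: even rows, odd columns
    cfa[1::2, 0::2] = rgb[1::2, 0::2, 1]  # green: odd rows, even columns
    cfa[1::2, 1::2] = rgb[1::2, 1::2, 2]  # blue: odd rows, odd columns
    return cfa

def add_gaussian_noise(cfa, sigma, seed=0):
    """Add zero-mean Gaussian noise with standard deviation `sigma` to a CFA frame."""
    rng = np.random.default_rng(seed)
    return cfa + rng.normal(0.0, sigma, size=cfa.shape)
```

A noisy test frame would then be obtained as `add_gaussian_noise(bayer_mosaic(frame), sigma)`, where `frame` and `sigma` stand for one full color frame and the chosen noise level. No clipping to the valid intensity range is applied in this sketch.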

For color images, the image quality of the denoised and demosaiced results depends on the demosaicing method. The demosaicing method of [15] was used with [9] and [31], as well as with the proposed denoising method, for which denoising and demosaicing were conducted separately. Although denoising and demosaicing were performed together in [32], a method similar to that described in [15] was used in the internal joint process.

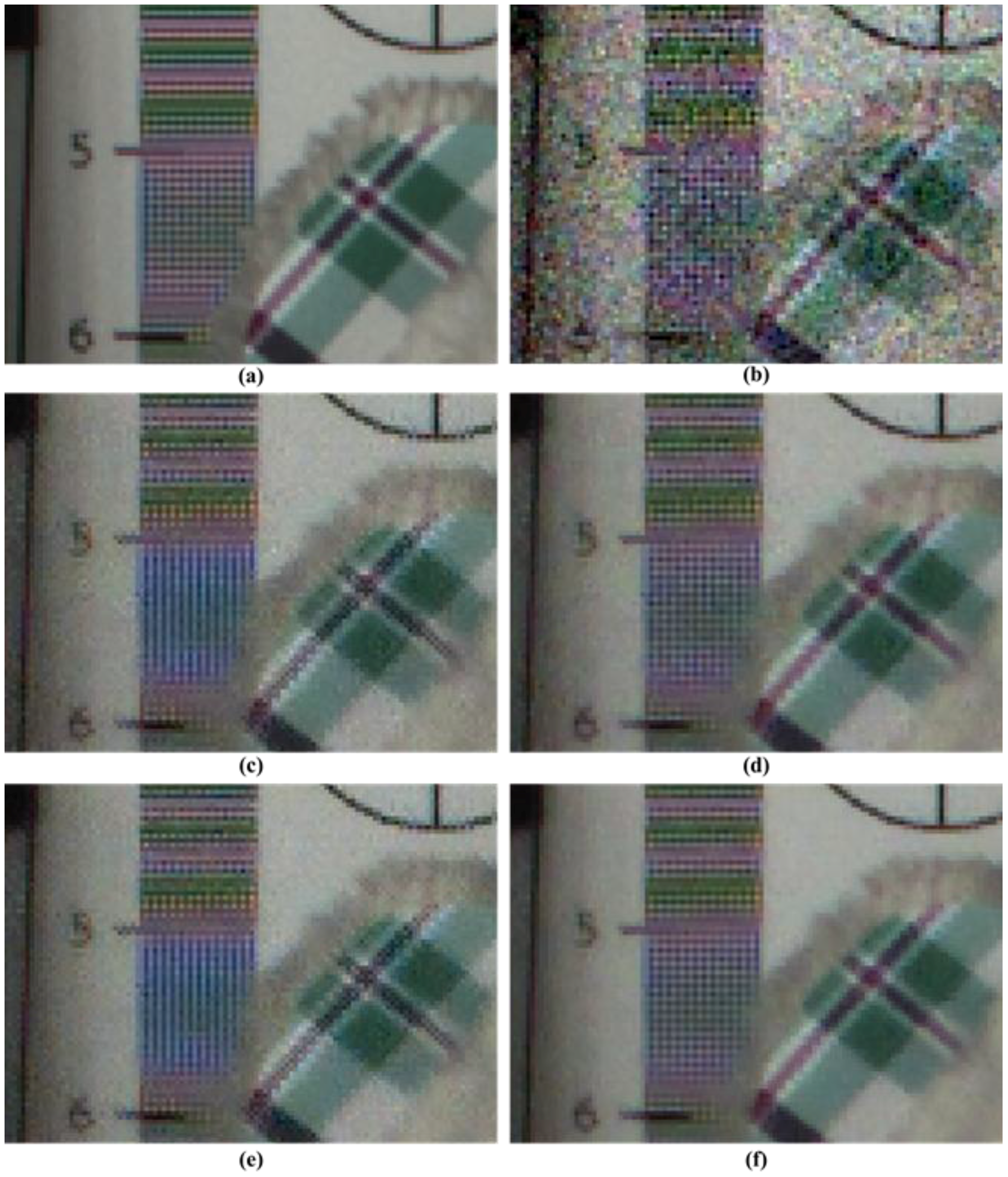

A visual comparison of the results is important for the algorithm evaluation. Figure 10 and Figure 11 illustrate a magnified view of some challenging parts of the restored “Moving Train” sequence. The visual results of the proposed method are better than those of the conventional methods. Although the method from [31] effectively removes noise and preserves edges or textures, color artifacts appear, especially in the characters, as shown in Figure 10c. More residual noise also remains in Figure 10c and Figure 11c than in the results of the proposed method. The proposed method produces fewer color artifacts than the denoising method [31].

Although the VBM3D denoising method [9] demonstrates improved visual performance compared with the other methods, noise and ringing artifacts remain in the edge and detail regions, as shown in Figure 10d and Figure 11d, because demosaicing before denoising changes the statistical characteristics of the noise and spreads it spatially. The method described in [32] has severe motion artifacts around the edges and details in the region of motion, as shown in Figure 11e, because it does not consider the inter-channel correlation in the video denoising process that uses motion information. The high-frequency regions, which are difficult to denoise, including small details and textures, are preserved significantly better and the color artifacts are reduced in the proposed method, as shown in Figure 10f. Additionally, moving details are better preserved than in the other methods, as shown in Figure 11f.

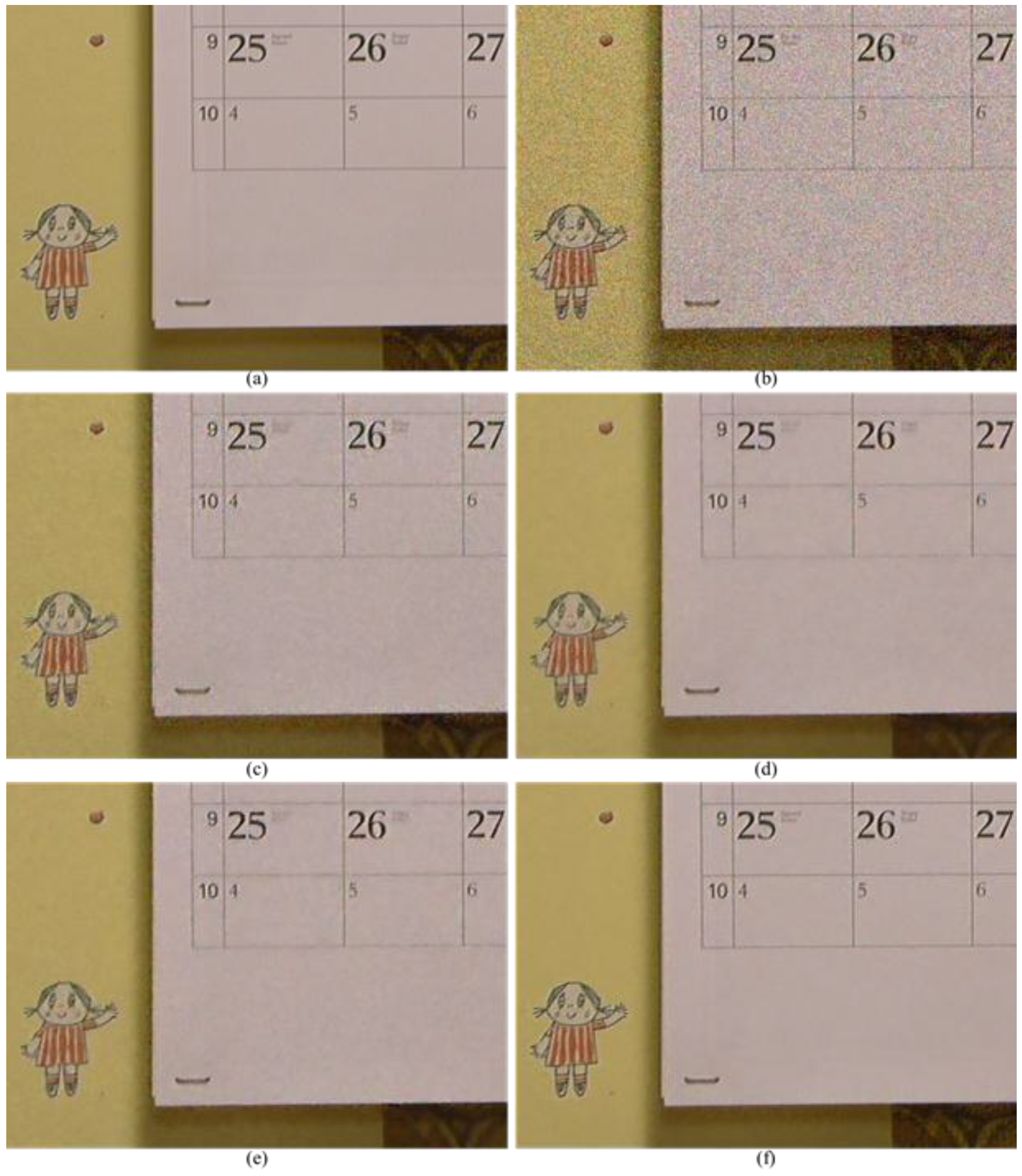

Figure 12 presents comparisons of the denoising results for the relatively common video data “Calendar”. Results similar to those for the captured set were obtained in the simulation tests. The method discussed in [31] exhibits ringing artifacts around the edges and over-smoothed results in the detail regions, as shown in Figure 12c. Although the denoised results are clear and definite in [9] and [32], over-shooting and color artifacts appear around the strong edges, as shown in Figure 12d,e. With the proposed method, shown in Figure 12f, the denoised results are clearer than those of the other methods. The proposed method performs better on considerably noisy images that contain high-frequency regions, which are difficult to restore.

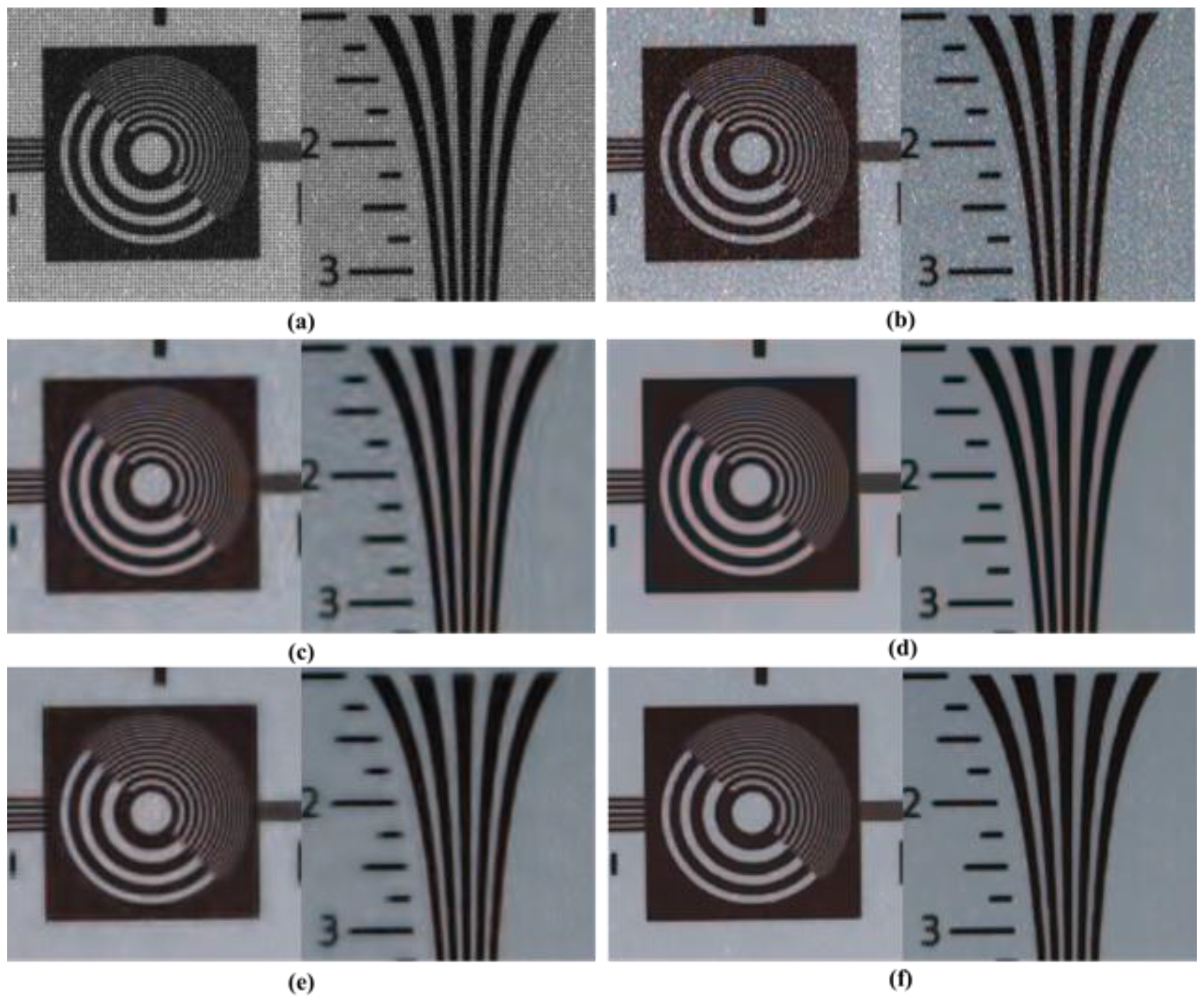

Figure 13 shows the denoising results obtained using different methods for a real static scene of an original CFA image under noisy circumstances. The region shown in Figure 13 is difficult for both denoising and demosaicing. Results similar to those of the simulation tests for the sequences in Figure 9 were obtained. The methods discussed in [31] and [32] leave ringing artifacts around the edges and over-smooth the detail regions. Although the denoised results are neat and definite for the method proposed in [9], false color and artifacts appear around the strong edges. The denoised results obtained using the proposed method are more definite than those obtained using the other methods. The proposed method also performs better on considerably noisy images that contain high-frequency regions, which are difficult to restore.

The numerical evaluation is presented in Table 1 and Table 2, which list the average peak signal to noise ratio (PSNR) and color peak signal to noise ratio (CPSNR) values of the algorithms at each noise level for the denoised results of the CFA sequence.

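The color peak signal to noise ratio (CPSNR) is defined as:

$$\mathrm{CPSNR} = 10 \log_{10} \left( \frac{255^2}{\mathrm{CMSE}} \right)$$

where CMSE is represented as:

$$\mathrm{CMSE} = \frac{1}{3(N-2B)(M-2B)} \sum_{i=B+1}^{N-B} \sum_{j=B+1}^{M-B} \left[ \left( I_R(i,j) - \hat{I}_R(i,j) \right)^2 + \left( I_G(i,j) - \hat{I}_G(i,j) \right)^2 + \left( I_B(i,j) - \hat{I}_B(i,j) \right)^2 \right]$$

where $I_R$, $I_G$, and $I_B$ are the true pixel values of the original color images for the R, G, and B channels, respectively, and $\hat{I}_R$, $\hat{I}_G$, and $\hat{I}_B$ are the estimated pixel values of the denoised images for the R, G, and B channels, respectively. $N$ and $M$ are the row and column sizes of the images, and $B$ is the size of the border, which is not calculated for the CPSNR.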
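The CPSNR computation described above can be sketched as follows: the squared error is averaged over the three color channels and over all pixels except a border of fixed width. The function name and the default peak value of 255 (8-bit data) are assumptions.

```python
import numpy as np

def cpsnr(original, denoised, border=0, peak=255.0):
    """Color PSNR in dB between two H x W x 3 images, ignoring a border.

    `peak` defaults to 255, which assumes 8-bit image data.
    """
    orig = np.asarray(original, dtype=np.float64)
    den = np.asarray(denoised, dtype=np.float64)
    if border > 0:
        # Exclude `border` rows and columns on every side from the evaluation.
        orig = orig[border:-border, border:-border, :]
        den = den[border:-border, border:-border, :]
    # Mean squared error over all remaining pixels of the R, G, and B channels.
    cmse = np.mean((orig - den) ** 2)
    if cmse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(peak ** 2 / cmse))
```

Averaging `cpsnr` over all frames of a sequence gives a per-sequence figure of the kind reported at each noise level.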

We evaluated the effectiveness of the proposed algorithm by using structural similarity (SSIM) measurements [34] for another representation of the performance. Various experimental setups were employed to examine the performance under the different noise levels and various motion scenarios. The SSIMs of the different scenes and noise levels were then calculated. The SSIM is defined as follows:

$$\mathrm{SSIM}(x, \hat{x}) = \frac{\left( 2 \mu_x \mu_{\hat{x}} + C_1 \right) \left( 2 \sigma_{x\hat{x}} + C_2 \right)}{\left( \mu_x^2 + \mu_{\hat{x}}^2 + C_1 \right) \left( \sigma_x^2 + \sigma_{\hat{x}}^2 + C_2 \right)}$$

where $\mu_{\hat{x}}$ is the mean value of the denoised signal, $\mu_x$ is the mean value of the original signal, ($\sigma_{\hat{x}}$, $\sigma_x$) are the standard deviations of the signals, and $\sigma_{x\hat{x}}$ is their covariance. $C_1$ and $C_2$ are the variables used to stabilize the division with a weak denominator. The SSIM index is a decimal value between −1 and 1, and 1 is only reachable in case of two identical sets of data. Two different scenes in

Figure 9 were chosen. For these different scenes, the average SSIMs at two noise levels were calculated, and the results are presented in

Table 3 and

Table 4. In general, our method outperformed other approaches in terms of average SSIM measurements. That is, the denoising result of the proposed method bears the most similarity to the original image.
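The SSIM index can be sketched for a single window covering the whole image, as below. This is a simplification: SSIM as introduced in [34] is normally evaluated over local windows and then averaged. The function name is hypothetical, and the constants follow the commonly used choice $C_1 = (0.01 L)^2$ and $C_2 = (0.03 L)^2$ with dynamic range $L = 255$, which is an assumption about the data.

```python
import numpy as np

def global_ssim(original, denoised, peak=255.0, k1=0.01, k2=0.03):
    """Single-window SSIM between two images of equal shape.

    Treats the whole image as one window; `peak` assumes 8-bit data.
    """
    x = np.asarray(original, dtype=np.float64).ravel()
    y = np.asarray(denoised, dtype=np.float64).ravel()
    # Small constants that stabilize the division when a denominator is weak.
    c1 = (k1 * peak) ** 2
    c2 = (k2 * peak) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    numerator = (2.0 * mu_x * mu_y + c1) * (2.0 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)
```

Identical inputs give a value of 1, and any difference in mean, contrast, or structure lowers the index.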

In general, our method outperformed the other approaches in terms of the PSNR values. The proposed method produced superior results compared to the methods described in [31] and [32], which directly denoise the CFA image. VBM3D [9], which provides state-of-the-art results among the considered denoising techniques for grayscale sequences, performed second best. In summary, the proposed method outperformed the competing methods in terms of visual quality and numerical results. This is because the proposed method simultaneously and efficiently considers inter-channel and intra-channel correlations to suppress noise and preserve edges and fine details without color artifacts and motion artifacts.

In the general framework, the motion-compensated spatial-temporal filter is performed in the demosaiced full color sequence. A denoising filter in that framework is generally applied to the luminance domain. In the proposed framework, the denoising filter is applied to the CFA sequence before demosaicing. The proposed method can prevent color artifacts and the spread of noise during the denoising process by considering the spatio-tempo-spectral correlation. However, in the general framework, demosaicing performed on a noisy CFA can change the statistical characteristics of the noise and degrade the spatial resolution. If the proposed method is implemented in a general framework, noise will be removed without considering the inter-channel correlation. The spectral correlation in the CFA sequence should be modified and applied to the denoising process in the general framework.