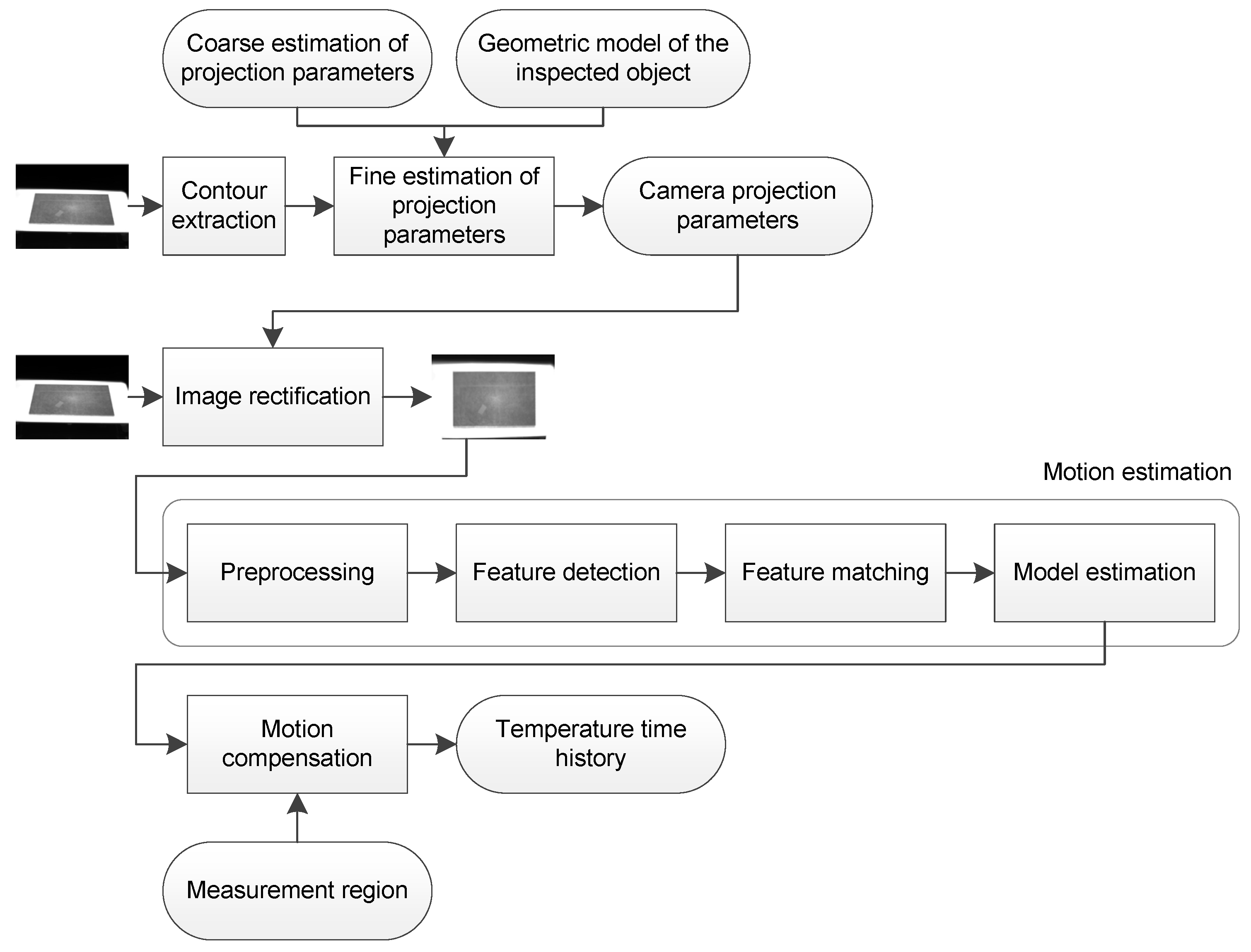

2.1. Image Acquisition

The first step required to monitor the temperature is the acquisition of the infrared images, which is carried out using an infrared camera. These devices measure infrared radiation in a particular wavelength band, typically from 8 to 12 μm or from 2 to 5 μm. The measured infrared radiation is converted into temperature based on the properties of the inspected material, including the emissivity, and on the conditions in which the image was acquired, including reflected temperature, ambient temperature, distance, and relative humidity. The accuracy of the calculated temperature values is greatly affected by errors in the estimation of these parameters.
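As a rough illustration of why emissivity and reflected temperature matter, the sketch below inverts a simplified gray-body model. It assumes broadband Stefan-Boltzmann behaviour and ignores atmospheric transmission; commercial cameras instead apply band-limited calibration curves, so this is only a didactic approximation. The function name and the example values are hypothetical.

```python
def object_temperature(t_apparent_k: float, emissivity: float, t_reflected_k: float) -> float:
    """Correct an apparent (blackbody-equivalent) temperature for emissivity and reflections.

    Simplified broadband gray-body model (assumption, not a camera calibration):
        T_app^4 = eps * T_obj^4 + (1 - eps) * T_refl^4
    Atmospheric attenuation is ignored and the Stefan-Boltzmann constant cancels out.
    All temperatures are in kelvin.
    """
    if not 0.0 < emissivity <= 1.0:
        raise ValueError("emissivity must be in (0, 1]")
    radiance_obj = (t_apparent_k**4 - (1.0 - emissivity) * t_reflected_k**4) / emissivity
    if radiance_obj <= 0.0:
        raise ValueError("reflected contribution exceeds the measured radiation")
    return radiance_obj**0.25


# Hypothetical reading: a low-emissivity metal surface appears colder than it really is.
t_obj = object_temperature(t_apparent_k=330.0, emissivity=0.3, t_reflected_k=293.15)
```

Because the corrected radiance is divided by the emissivity, small errors in a low emissivity value or in the reflected temperature change the result considerably, which is the sensitivity described above.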

The most common cameras used to acquire infrared images in industrial applications are long-wavelength infrared cameras based on uncooled microbolometers, which operate in the range from 8 to 12 μm and do not require cooling. However, their acquisition rate is low compared with high-end mid-wavelength infrared cameras, which operate in the range from 2 to 5 μm. These cameras are usually based on cooled semiconductor detectors that provide much better temperature resolution and higher speed, but they are also more expensive and require more maintenance. Thus, both camera types have their advantages and disadvantages. For fast-moving objects, the proposed procedure requires high-end cameras to operate correctly and avoid blurred images. However, the proposed monitoring procedure itself can be applied using any type of camera.

Vibrations or camera motion not only shift the monitored object in the image, but can also blur the image. Cooled cameras require short integration times (around 1 to 1.5 ms at room temperature), whereas a microbolometer detector usually requires an integration time about ten times longer. Therefore, depending on the speed of the relative movement between the camera and the monitored object, motion blur could appear in the acquired images. This work does not deal with this issue. It is assumed that moving objects are imaged sharply and that edges can be detected accurately, either because the objects move slowly or because a high-end camera based on cooled semiconductor detectors is used. If motion blur cannot be avoided, motion deblurring should be applied to the images before applying the proposed procedure. This issue is studied in detail in [20] for visible images. Reference [21] proposes a procedure for motion deblurring of infrared images from a microbolometer camera.
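The effect of the integration time can be quantified with a back-of-the-envelope estimate: the blur length in pixels is approximately the distance travelled during the exposure divided by the size of one pixel projected onto the object. The sketch below uses the 25 μm pitch and 18 mm focal length of the camera described later in this section, and assumes a hypothetical speed of 200 mm/s at a distance of 1200 mm; the helper names are illustrative only.

```python
def pixel_footprint_mm(pitch_mm: float, distance_mm: float, focal_mm: float) -> float:
    """Size of one detector pixel projected onto a front-parallel object (pinhole model)."""
    return pitch_mm * distance_mm / focal_mm


def blur_length_px(speed_mm_s: float, integration_s: float, footprint_mm: float) -> float:
    """Approximate motion blur in pixels: distance moved during the exposure / pixel footprint."""
    return speed_mm_s * integration_s / footprint_mm


footprint = pixel_footprint_mm(pitch_mm=0.025, distance_mm=1200.0, focal_mm=18.0)  # about 1.67 mm
cooled = blur_length_px(200.0, 1.5e-3, footprint)      # 1.5 ms integration: about 0.18 px
bolometer = blur_length_px(200.0, 15e-3, footprint)    # ten times longer: about 1.8 px
```

Under these assumptions the cooled camera keeps the blur well below one pixel, while the longer integration time spreads each edge over roughly two pixels.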

2.2. Image Rectification

In this work image rectification is used to calculate a front-parallel projection of the images that can be used to estimate the motion between images accurately. Image rectification requires an estimation of the parameters that control camera projection. These parameters can be classified as extrinsic or intrinsic camera parameters.

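Extrinsic parameters control the transformation of points in world coordinates to points in camera coordinates, and include three rotations and three translations. This transformation can be expressed as

$$\begin{pmatrix} x_c \\ y_c \\ z_c \end{pmatrix} = \mathbf{R}\begin{pmatrix} x_w \\ y_w \\ z_w \end{pmatrix} + \mathbf{t}, \tag{1}$$

where $\mathbf{R}$ is the $3\times 3$ rotation matrix defined by the three rotations and $\mathbf{t}$ is the translation vector.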

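Intrinsic parameters determine the projection of points from camera coordinates to pixels in the image, and include the focal length ($f$), the size of the pixels (width and height: $S_x$ and $S_y$), and the position of the central pixel ($C_x$ and $C_y$). This projection can be described as the combination of a perspective projection from 3D to 2D, expressed as

$$u = f\,\frac{x_c}{z_c}, \qquad v = f\,\frac{y_c}{z_c}, \tag{2}$$

and a 2D affine transformation, expressed as

$$c = \frac{u}{S_x} + C_x, \qquad r = \frac{v}{S_y} + C_y, \tag{3}$$

where $(u, v)$ are metric coordinates in the image plane and $(r, c)$ are the pixel row and column.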

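The transformation of a 3D point $(x_w, y_w, z_w)$ in world coordinates into a 2D point $(r, c)$ in pixel coordinates can be expressed as

$$\lambda\begin{pmatrix} c \\ r \\ 1 \end{pmatrix} = \begin{pmatrix} f/S_x & 0 & C_x \\ 0 & f/S_y & C_y \\ 0 & 0 & 1 \end{pmatrix}\begin{pmatrix} \mathbf{R} & \mathbf{t} \end{pmatrix}\begin{pmatrix} x_w \\ y_w \\ z_w \\ 1 \end{pmatrix}, \tag{4}$$

where $r$ and $c$ stand for pixel row and column in the image, $\mathbf{R}$ and $\mathbf{t}$ are the extrinsic rotation and translation, and $\lambda = z_c$ is a scale factor.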
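A minimal NumPy sketch of this projection chain is shown below. It uses the manufacturer values given later for the FLIR T450sc (18 mm focal length, 25 μm pitch) and assumes, hypothetically, that the central pixel is the image centre (160, 120) of the 320 × 240 detector; the pose is arbitrary, the function names are illustrative, and lens distortion is ignored as in the model above.

```python
import numpy as np


def intrinsic_matrix(f_mm, sx_mm, sy_mm, cx_px, cy_px):
    """Intrinsic matrix combining the perspective projection (2) and the affine map (3)."""
    return np.array([[f_mm / sx_mm, 0.0, cx_px],
                     [0.0, f_mm / sy_mm, cy_px],
                     [0.0, 0.0, 1.0]])


def project(point_w, K, R, t):
    """Project a 3D world point to pixel (row, column) following (4)."""
    p_c = R @ np.asarray(point_w, dtype=float) + t   # (1): world -> camera coordinates
    h = K @ p_c                                      # homogeneous (lambda*c, lambda*r, lambda)
    return h[1] / h[2], h[0] / h[2]                  # (row, column)


K = intrinsic_matrix(18.0, 0.025, 0.025, 160.0, 120.0)
R = np.eye(3)                           # camera looking straight at the plane (illustrative)
t = np.array([0.0, 0.0, 1000.0])        # 1 m in front of the camera (illustrative)
r, c = project([10.0, 0.0, 0.0], K, R, t)   # 10 mm off the optical axis: about (120.0, 167.2)
```

A 10 mm offset at 1 m corresponds to 0.18 mm on the sensor, that is, 7.2 pixels of 25 μm to the right of the central pixel.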

In order to estimate the optimal values for the projection camera parameters, observations of a known target are required. Feature extraction from the images provides the position of known reference points in the calibration target. The parameters of the camera projection model are then estimated from this set of reference points by using direct or iterative methods. This approach requires calibration targets with features of known dimensions. For visible cameras, accurate calibration targets can be printed using off-the-shelf printers. However, infrared cameras require calibration targets with features that are distinguishable in terms of infrared radiation.

A recent work on infrared camera calibration estimates the projection parameters in (4) without using specific calibration targets [22]. In that work, the projection parameters are estimated with iterative approximations based on the position of the edges in the image. This is a great advantage for infrared images, because objects of interest in infrared images can be easily distinguished from the environment due to temperature differences. The procedure does not consider lens distortion, but provides accuracy acceptable for common infrared applications. The method is easy to apply and does not require specific calibration targets, because it is based on information that can be extracted from objects in the image. Moreover, it can be applied to a single image of a known object. Therefore, this is the method used to estimate the camera projection parameters in this work.

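The considered rectification procedure assumes that the area where the measurement is performed is flat. Therefore, the points extracted from the images lie on the same plane. This plane, the measurement plane, appears in many applications where infrared thermography is used, such as building inspection or non-destructive testing, where the inspected specimens are usually flat. Considering this plane as $z_w = 0$, all world points have a $z_w$ coordinate equal to zero. Thus, (4) can be expressed as

$$\lambda\begin{pmatrix} c \\ r \\ 1 \end{pmatrix} = \mathbf{K}\begin{pmatrix} \mathbf{r}_1 & \mathbf{r}_2 & \mathbf{t} \end{pmatrix}\begin{pmatrix} x_w \\ y_w \\ 1 \end{pmatrix} = \mathbf{H}\begin{pmatrix} x_w \\ y_w \\ 1 \end{pmatrix}, \tag{5}$$

where $\mathbf{K}$ is the $3\times 3$ intrinsic matrix in (4), $\mathbf{r}_1$ and $\mathbf{r}_2$ are the first two columns of $\mathbf{R}$, and $\mathbf{H}$ is the $3\times 3$ homography that maps points of the measurement plane to pixels.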
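Because every point of the measurement plane has $z_w = 0$, the third column of the rotation matrix drops out and the whole projection collapses to a single $3\times 3$ matrix. The sketch below builds that homography from an assumed pose and checks it against the full 3D projection; the tilt angle and translation are arbitrary illustrative values, and the function names are hypothetical.

```python
import numpy as np


def homography_from_pose(K, R, t):
    """H = K [r1 r2 t]: maps (x_w, y_w, 1) on the plane z_w = 0 to (lambda*c, lambda*r, lambda)."""
    return K @ np.column_stack((R[:, 0], R[:, 1], t))


def plane_to_pixel(H, x_w, y_w):
    """Apply H to a point of the measurement plane and return (row, column)."""
    h = H @ np.array([x_w, y_w, 1.0])
    return h[1] / h[2], h[0] / h[2]


K = np.array([[720.0, 0.0, 160.0], [0.0, 720.0, 120.0], [0.0, 0.0, 1.0]])
a = np.deg2rad(30.0)                                   # illustrative tilt about the x axis
R = np.array([[1.0, 0.0, 0.0],
              [0.0, np.cos(a), -np.sin(a)],
              [0.0, np.sin(a), np.cos(a)]])
t = np.array([10.0, -20.0, 1200.0])
H = homography_from_pose(K, R, t)

# The full 3D projection of (50, 30, 0) must land on the same pixel.
h_full = K @ (R @ np.array([50.0, 30.0, 0.0]) + t)
r, c = plane_to_pixel(H, 50.0, 30.0)
```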

The iterative method proposed to estimate the coefficients of H requires a coarse estimation of the coefficients. The initial coarse estimation of the intrinsic parameters is provided by the manufacturer of the particular camera used (focal length, detector pitch and IR resolution). The initial estimation of the extrinsic parameters consists of the estimation of the displacement (vertical, horizontal and distance) and rotation (pan, tilt and roll) of the measurement plane relative to the camera.

The estimation of the projection parameters continues from the coarse estimation of $\mathbf{H}$. A contour of the inspected object is extracted from the image and transformed into world coordinates using $\mathbf{H}^{-1}$. The proposed method to extract the contour of the inspected object is the Canny edge detector [23]. Then, correspondences are estimated by matching each contour point, once transformed to world coordinates, with the closest point of the object model. Incorrect correspondences bias the procedure, so they must be filtered using robust statistics. The final step is the estimation of a homography from the remaining correspondences. The procedure is repeated until convergence is reached. In each iteration, the distance from the extracted contour to the real shape of the object is reduced. The result is a homography that describes the projection parameters accurately. This transformation can be directly applied to the original infrared image in order to obtain the rectified image in world coordinates.
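The loop described above can be sketched in NumPy as follows. This is not the implementation of [22]; it is an ICP-style approximation under stated assumptions: the object model is an axis-aligned rectangle of known size in world units, correspondences are the closest points on its boundary, outliers are rejected with a simple hypothetical rule (distance larger than both `k` times the median distance and a minimum `gate` in world units), and each homography is fitted with the normalized direct linear transform (DLT). Image points are stored as (column, row) to match the homogeneous vector in (5). All names are illustrative.

```python
import numpy as np


def to_world(H, img_pts):
    """Back-project (column, row) contour points to the measurement plane with H^-1."""
    h = np.column_stack((img_pts, np.ones(len(img_pts)))) @ np.linalg.inv(H).T
    return h[:, :2] / h[:, 2:3]


def closest_on_rectangle(pts, width, height):
    """Closest point on the boundary of the model [0, width] x [0, height]."""
    x = np.clip(pts[:, 0], 0.0, width)
    y = np.clip(pts[:, 1], 0.0, height)
    sides = np.stack([np.column_stack((np.zeros_like(y), y)),          # side x = 0
                      np.column_stack((np.full_like(y, width), y)),    # side x = width
                      np.column_stack((x, np.zeros_like(x))),          # side y = 0
                      np.column_stack((x, np.full_like(x, height)))])  # side y = height
    dist = np.linalg.norm(sides - pts[None], axis=2)                   # shape (4, n)
    return sides[np.argmin(dist, axis=0), np.arange(len(pts))]


def fit_homography(src, dst):
    """Normalized DLT: H such that dst ~ H src (arrays of shape (n, 2), n >= 4)."""
    def normalizer(p):
        c = p.mean(axis=0)
        s = np.sqrt(2.0) / np.mean(np.linalg.norm(p - c, axis=1))
        return np.array([[s, 0.0, -s * c[0]], [0.0, s, -s * c[1]], [0.0, 0.0, 1.0]]), (p - c) * s
    Ts, a = normalizer(src)
    Td, b = normalizer(dst)
    rows = []
    for (x, y), (u, v) in zip(a, b):
        rows.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y, -u])
        rows.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y, -v])
    _, _, vt = np.linalg.svd(np.asarray(rows))
    H = np.linalg.inv(Td) @ vt[-1].reshape(3, 3) @ Ts   # undo the normalization
    return H / H[2, 2]


def contour_residual(H, contour_px, width, height):
    """Mean distance (world units) between the back-projected contour and the model."""
    w = to_world(H, contour_px)
    return float(np.mean(np.linalg.norm(w - closest_on_rectangle(w, width, height), axis=1)))


def refine_homography(H0, contour_px, width, height, k=3.0, gate=10.0, iters=100, tol=1e-10):
    """Iteratively refine a coarse world-to-image homography from an extracted contour."""
    H = H0 / H0[2, 2]
    for _ in range(iters):
        world = to_world(H, contour_px)                          # contour in world units
        model = closest_on_rectangle(world, width, height)       # closest-point correspondences
        dist = np.linalg.norm(world - model, axis=1)
        keep = dist <= max(k * np.median(dist), gate)            # reject gross outliers only
        H_new = fit_homography(model[keep], contour_px[keep])    # world -> image
        if np.max(np.abs(H_new - H)) < tol * np.max(np.abs(H)):
            return H_new
        H = H_new
    return H
```

Closest-point correspondences are only approximately right while the estimate is coarse, which is why the fit is repeated: each pass moves the back-projected contour closer to the model, so the next set of correspondences is more accurate.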

In order to illustrate this procedure, a solid object is manually moved while temperature monitoring is performed. The goal is to measure the temperature at a particular location regardless of the movement. This experiment simulates the temperature monitoring of material that is moved, for example hot metal stones on a conveyor belt, or that is affected by vibrations. The next section extends the tests to images acquired in real environments. In this example, a metal test piece is used. The dimensions of the test piece are 300 mm × 199 mm × 5 mm. A visible image of the test piece can be seen in Figure 2. The test piece is placed on a hot plate (electric griddle) at approximately 150 °C. The experiment is performed when the test piece is at around 100 °C.

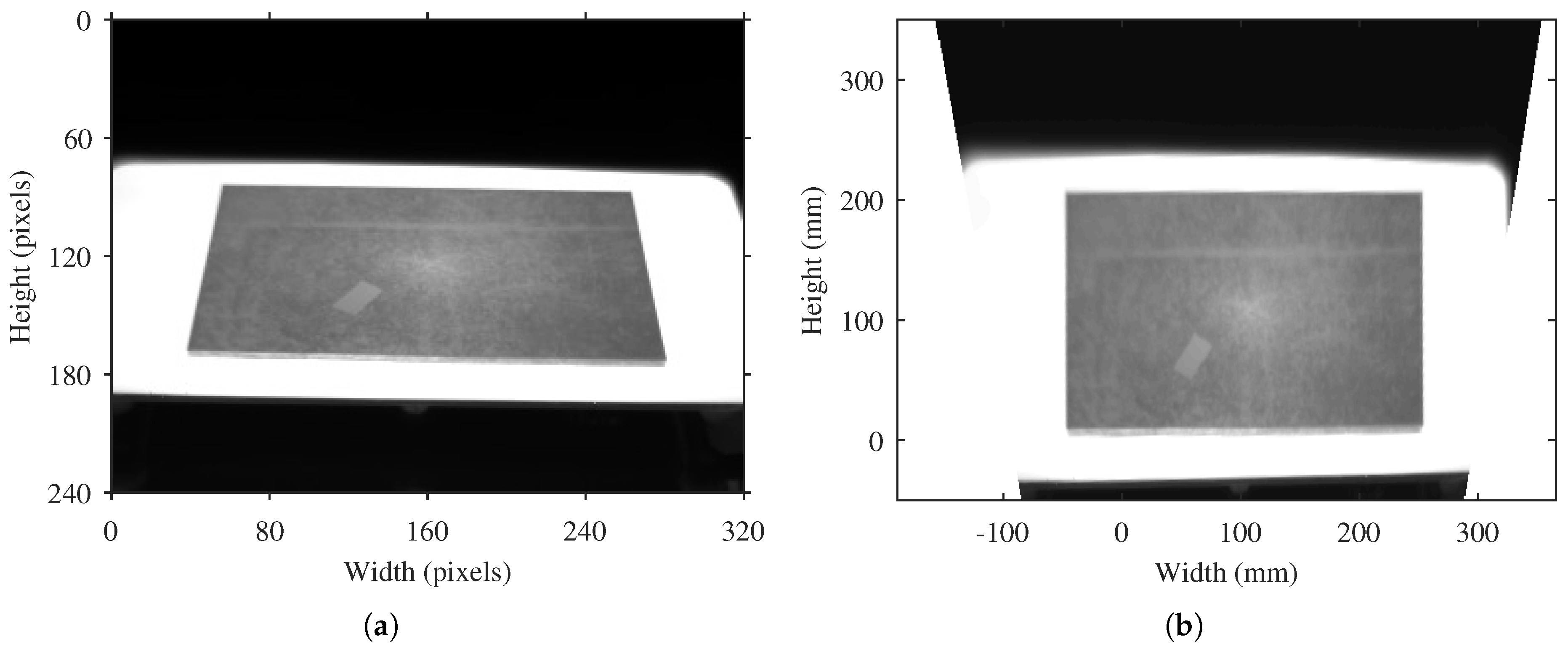

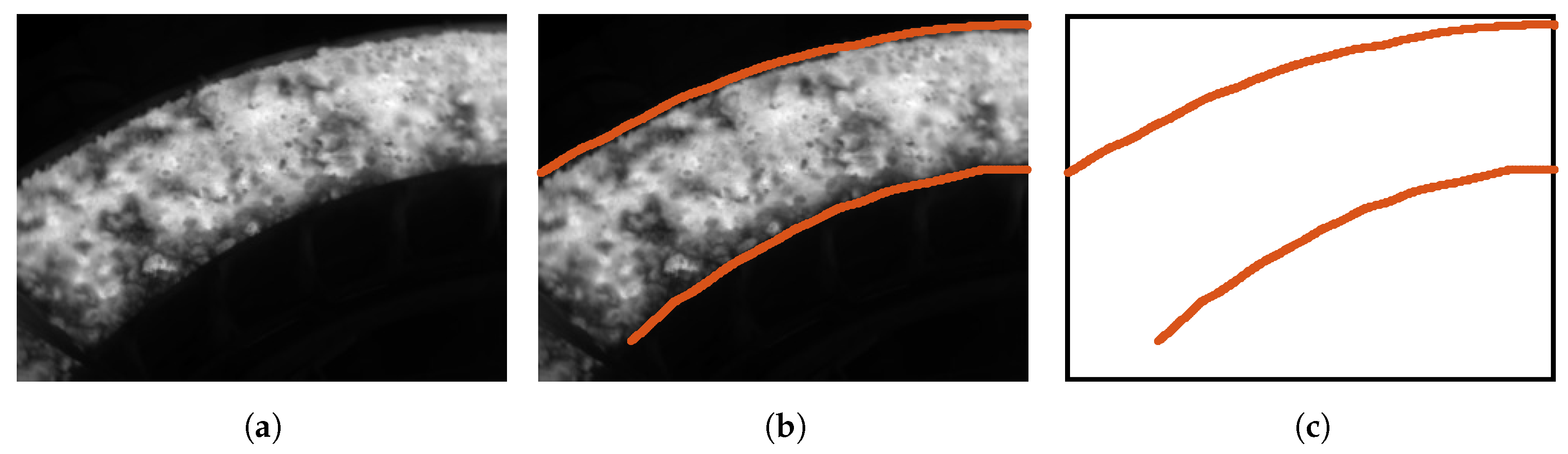

An infrared image of the test piece placed on the hot plate can be seen in Figure 3a. This image is the first of a sequence of images acquired while the test piece is moved within the measurement plane to simulate movements of the material or vibrations. A piece of electrical tape is stuck on the surface for later tests. The temperature of the electrical tape is nearly identical to that of the underlying test piece, and the tape is used for temperature monitoring. The electrical tape can be clearly distinguished in the images because its emissivity is higher than that of the metal surface of the test piece. Therefore, at the same temperature, it emits more infrared radiation.

In order to extract the contour of the test piece, an edge detector is applied to the image. The result can be seen in Figure 3b. The extracted contour in Figure 3c is used for the estimation of the projection parameters.

The infrared camera used in this experiment is a FLIR T450sc (FLIR Systems, Wilsonville, OR, USA). The manufacturer provides information that can be used to obtain a coarse estimation of the projection parameters: 18 mm focal length, 25 μm detector pitch and 320 × 240 image resolution. For the extrinsic parameters, the following initial values are roughly estimated: 3° pan, 54° tilt, 180 mm horizontal and 180 mm vertical displacement, and 1200 mm distance. This camera can acquire raw infrared images and lossless videos. Therefore, the images used in the tests are not corrupted by compression artifacts, such as those introduced by JPEG compression. The technical specifications of this camera are given in Table 1.

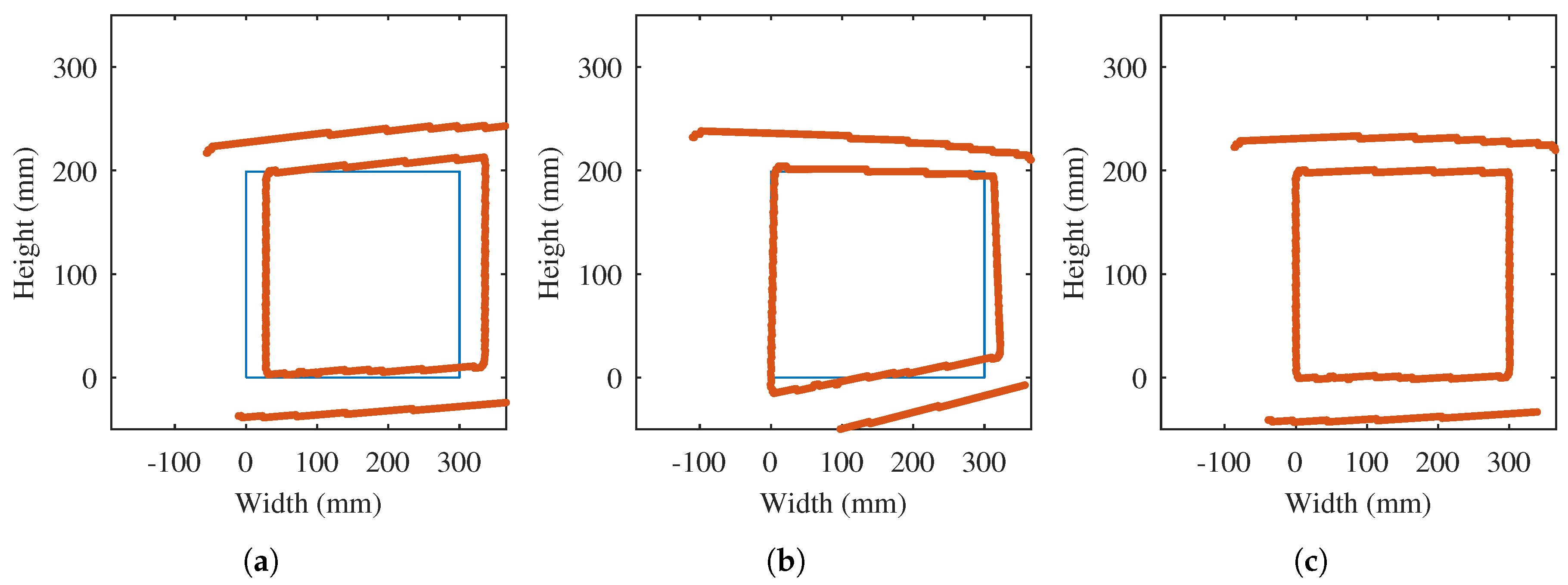
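A coarse initial homography can be assembled from the manufacturer data and the rough extrinsic estimates listed above. The sketch below is only illustrative: the pan/tilt/roll rotation order, the sign conventions, the zero roll, the use of the displacements and distance directly as the translation vector, and the principal point at the image centre are all hypothetical assumptions, since the text does not specify them.

```python
import numpy as np


def rotation_pan_tilt_roll(pan_deg, tilt_deg, roll_deg):
    """Hypothetical convention: R = Rz(roll) @ Rx(tilt) @ Ry(pan), angles in degrees."""
    p, t, r = np.deg2rad([pan_deg, tilt_deg, roll_deg])
    ry = np.array([[np.cos(p), 0.0, np.sin(p)], [0.0, 1.0, 0.0], [-np.sin(p), 0.0, np.cos(p)]])
    rx = np.array([[1.0, 0.0, 0.0], [0.0, np.cos(t), -np.sin(t)], [0.0, np.sin(t), np.cos(t)]])
    rz = np.array([[np.cos(r), -np.sin(r), 0.0], [np.sin(r), np.cos(r), 0.0], [0.0, 0.0, 1.0]])
    return rz @ rx @ ry


# Manufacturer data for the FLIR T450sc quoted in the text.
f_mm, pitch_mm, width_px, height_px = 18.0, 0.025, 320, 240
K0 = np.array([[f_mm / pitch_mm, 0.0, width_px / 2],    # principal point assumed at the centre
               [0.0, f_mm / pitch_mm, height_px / 2],
               [0.0, 0.0, 1.0]])

# Rough extrinsics from the text: 3 deg pan, 54 deg tilt (roll assumed 0),
# 180 mm horizontal and vertical displacement, 1200 mm distance.
R0 = rotation_pan_tilt_roll(3.0, 54.0, 0.0)
t0 = np.array([180.0, 180.0, 1200.0])
H0 = K0 @ np.column_stack((R0[:, 0], R0[:, 1], t0))     # coarse H for the plane z_w = 0
H0 /= H0[2, 2]
```

The focal length expressed in pixels is simply $f/S_x = 18\ \text{mm} / 25\ \mu\text{m} = 720$; this matrix plays the role of the coarse estimate of $\mathbf{H}$ that the iterative procedure refines.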

The initial values of the projection parameters are used to transform the extracted contour to world coordinates. The result can be seen in Figure 4a, which also includes the shape of the inspected object (the test piece is 300 mm × 199 mm). As can be seen, the transformed contour does not match the real shape of the object, because the initial values are only an approximation.

The fine estimation of the projection parameters is carried out by minimizing the distance from the extracted contour in world units to the real shape of the object. The procedure runs iteratively until convergence. In each iteration the approximation improves, that is, the estimation of the projection parameters is more accurate.

Figure 4b shows the results after 5 iterations.

Figure 4c shows the results when convergence is reached, where an accurate estimation of the projection parameters is obtained.

Not all the points in the extracted contour produce valid correspondences. As can be seen in Figure 4, the edges of the base plate are not part of the shape of the test piece. Therefore, these points are discarded [24].

The final valid correspondences are used to accurately estimate the projection parameters, that is, the homography that describes the projection of points from world coordinates to image coordinates, and vice versa. The estimated homography is used to rectify the infrared image. In this procedure, the image is interpolated on a rectangular grid in order to calculate a front-parallel projection of the image using the projection parameters described by the homography. This procedure is available in most image processing packages as a projective transformation of an image [25]. The result can be seen in Figure 5, where an image in pixel coordinates is transformed into a front-parallel projection in real-world units. Because the coordinates of the image in Figure 5b are expressed in real-world units, useful geometric information can be easily extracted from the image.
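In practice, a library routine such as OpenCV's `cv2.warpPerspective` normally performs this step. To make the inverse mapping explicit, the sketch below resamples the image on a world grid with plain NumPy and bilinear interpolation. The grid limits and the rectified pixel size `step` are hypothetical parameters, and the homography is assumed to map world points to (column, row) as in (5).

```python
import numpy as np


def rectify(image, H, x_range, y_range, step):
    """Resample a 2D `image` on a regular grid of the measurement plane (inverse mapping).

    H maps world (x, y, 1) to image (column, row, 1); x_range and y_range are (start, stop)
    in world units and `step` is the size of one rectified pixel. Values are bilinearly
    interpolated; grid points that fall outside the image are set to NaN.
    """
    xs = np.arange(x_range[0], x_range[1], step)
    ys = np.arange(y_range[0], y_range[1], step)
    X, Y = np.meshgrid(xs, ys)                                   # world grid, shape (ny, nx)
    h = H @ np.vstack((X.ravel(), Y.ravel(), np.ones(X.size)))
    c, r = h[0] / h[2], h[1] / h[2]                              # source pixel coordinates
    rows, cols = image.shape
    c0, r0 = np.floor(c).astype(int), np.floor(r).astype(int)
    ok = (c0 >= 0) & (r0 >= 0) & (c0 < cols - 1) & (r0 < rows - 1)
    c0, r0 = c0[ok], r0[ok]
    fc, fr = c[ok] - c0, r[ok] - r0                              # fractional offsets
    img = np.asarray(image, dtype=float)
    out = np.full(X.size, np.nan)
    out[ok] = ((1 - fr) * (1 - fc) * img[r0, c0] + (1 - fr) * fc * img[r0, c0 + 1]
               + fr * (1 - fc) * img[r0 + 1, c0] + fr * fc * img[r0 + 1, c0 + 1])
    return out.reshape(X.shape)
```

Mapping each output (world) pixel back into the source image, rather than pushing source pixels forward, guarantees that every rectified pixel receives exactly one interpolated value.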

2.3. Motion Estimation

Motion estimation is required to compensate for the movement of the monitored material in the sequence of images. This movement must be described with a mathematical model, so motion estimation consists of estimating the coefficients of this model. Once the model is estimated, it can be used to compensate for the movement of the object by applying the inverse transformation to the objects that have moved in the image.

2.3.1. Mathematical Model

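Modeling the movement between two images is complex, as an image provides a 2D representation of a 3D scene. However, rectified images provide a major advantage in this respect, as pixels represent world coordinates in the measurement plane. This way, the mathematical model required to describe the movement is greatly simplified, yet accurate and complete. In a rectified image, the movement of the objects is 2D. Thus, it can be modeled using a 2D rigid transformation. This transformation has three coefficients: the rotation angle $\theta$, and the horizontal and vertical translations $t_x$ and $t_y$. This transformation can be expressed as

$$\begin{pmatrix} x' \\ y' \end{pmatrix} = \begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix}\begin{pmatrix} x \\ y \end{pmatrix} + \begin{pmatrix} t_x \\ t_y \end{pmatrix}, \tag{6}$$

where $(x, y)$ and $(x', y')$ are the coordinates of a point in the measurement plane before and after the movement.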
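In code, (6) is conveniently stored as a $3\times 3$ homogeneous matrix, so that the inverse needed for compensation and the composition of several movements become simple matrix operations. A minimal NumPy sketch (the function names are illustrative):

```python
import numpy as np


def rigid_matrix(theta, tx, ty):
    """Homogeneous form of (6): rotation by theta (radians), then translation (tx, ty)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, tx], [s, c, ty], [0.0, 0.0, 1.0]])


def apply_rigid(M, pts):
    """Apply a homogeneous rigid transform to an (n, 2) array of points."""
    pts = np.asarray(pts, dtype=float)
    return pts @ M[:2, :2].T + M[:2, 2]


def invert_rigid(M):
    """Inverse of a rigid transform: rotation R^T and translation -R^T t."""
    R, t = M[:2, :2], M[:2, 2]
    Mi = np.eye(3)
    Mi[:2, :2] = R.T
    Mi[:2, 2] = -R.T @ t
    return Mi


M = rigid_matrix(np.pi / 2, 10.0, 5.0)
moved = apply_rigid(M, [[1.0, 0.0]])    # (1, 0) rotated by 90 deg is (0, 1), then shifted: (10, 6)
```

Using $\mathbf{R}^T$ instead of a general matrix inverse exploits the orthogonality of the rotation and keeps the inverse exactly rigid.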

This simple motion model is one of the many advantages of working with rectified images.

2.3.2. Feature Detection

This step detects salient and distinctive features in the images. The features must be well distributed over the image, and the same features must be efficiently detectable in consecutive images. The goal is to find matching features between consecutive images, that is, features that identify the same point of the scene in both images. Generally, these features are detected at distinctive locations in the images, such as region corners or line intersections.

Feature detection includes two parts: the detection of the points of interest, and the description of these points. Points of interest are stable and repeatable positions in the image; in visible images, they can correspond to corners. A feature vector is then calculated for each of these points, containing, for example, derivatives or moment invariants. One of the most widely used methods for feature detection is SURF (Speeded Up Robust Features) [26]. This method is based on a Hessian detector and uses Haar wavelet responses to calculate the features of the detected points.

SURF does not provide good results when applied to raw infrared images, because in most cases the contrast in the region of interest is insufficient to detect the features required to estimate the movement. Moreover, when the image contains information not only about the moving material but also about non-moving objects, such as the background, features can also be detected in non-moving areas. This mixture of features cannot be used to estimate the movement. Therefore, a preprocessing stage is proposed.

The first step of the preprocessing stage is to extract the region of interest from the image, that is, the part where the moving material is located. This step is application dependent, but can be carried out in most cases using thresholding techniques [27]. The moving material inspected using infrared thermography usually has a different temperature from the rest of the image. Thus, thresholding the image based on the temperature level is an effective solution that works for most applications. The example presented in Figure 3 is slightly different, because three parts can be distinguished in the image based on temperature: the background, the hot plate and the test piece. In this case, an effective approach is to apply thresholding twice: a first thresholding to distinguish the plate and the test piece from the background, and then a second thresholding, applied only to the region extracted by the first one, to distinguish the plate from the test piece.

The second step of the preprocessing stage is the enhancement of the contrast in the image. This step enables SURF to extract meaningful features from the region of interest. Applying SURF to the raw image can result in a low number of features, concentrated on the corners of the material, that do not provide the information required to estimate the movement correctly. One of the most common methods to enhance contrast in images is CLAHE (Contrast Limited Adaptive Histogram Equalization) [28], which is the method proposed for contrast enhancement in this work.

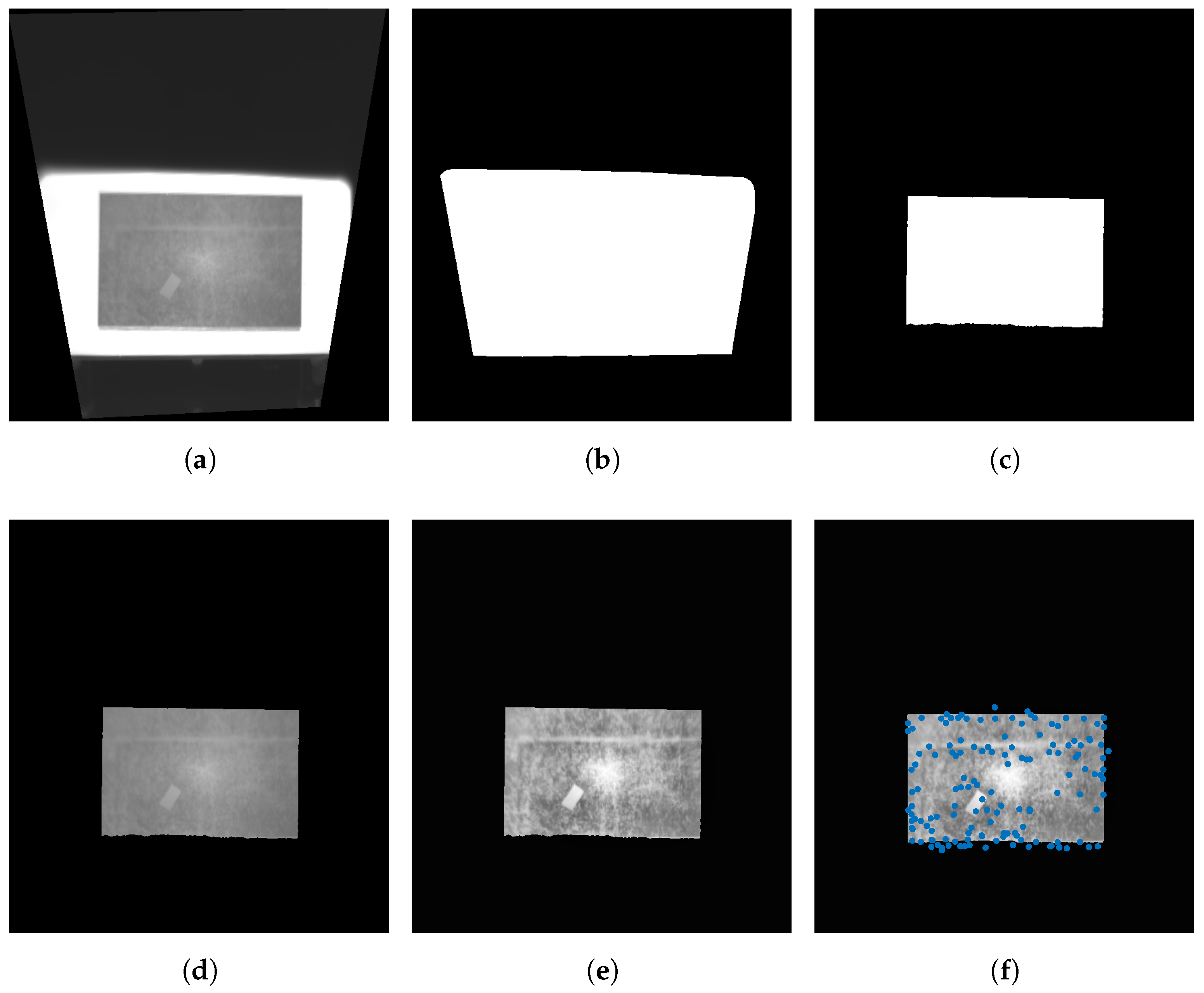

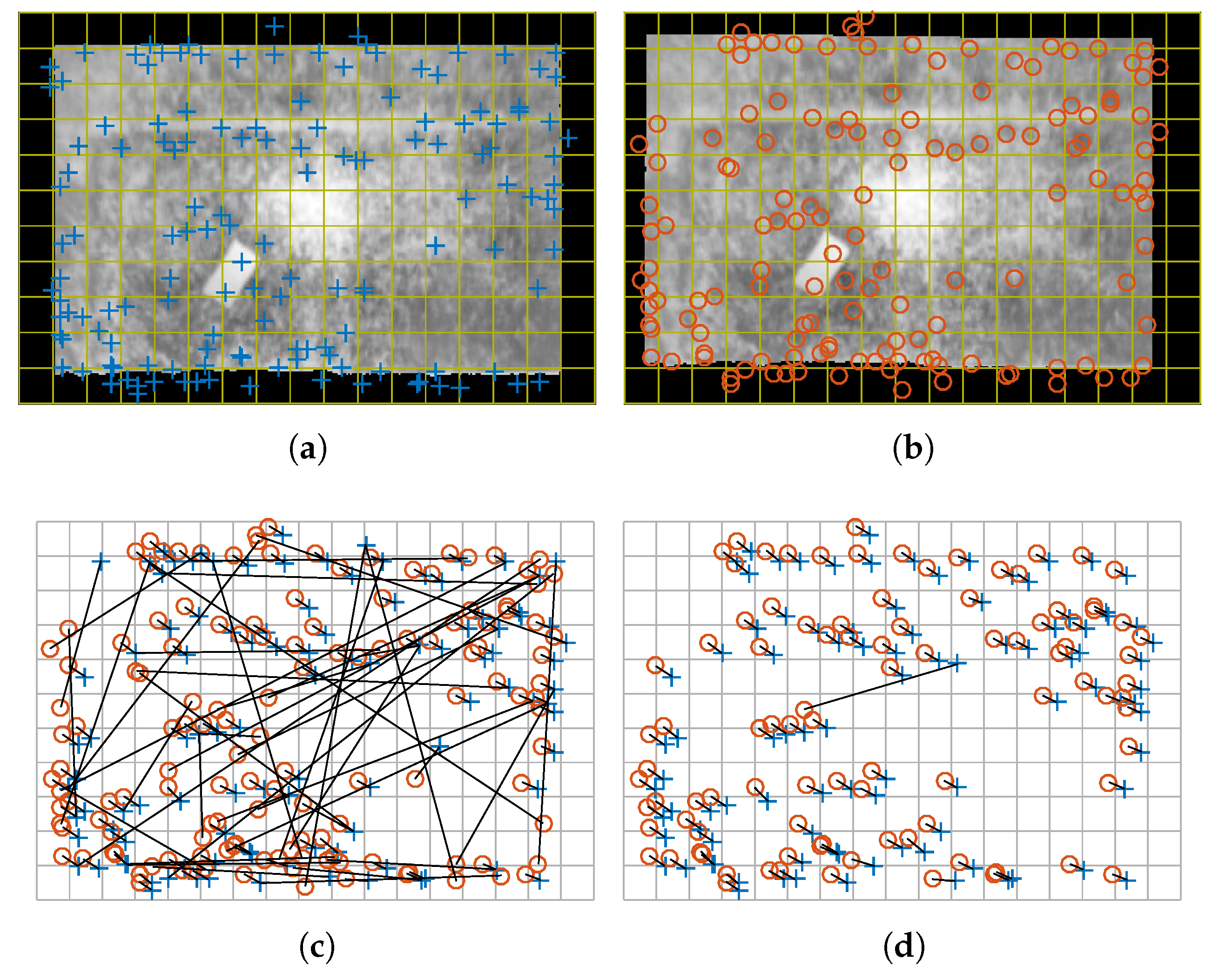

Figure 6 shows the results of the feature detection procedure for the test piece used in the previous example.

Figure 6b shows the results of the first thresholding, where a region that includes the hot plate and the test piece is obtained, distinguishing them from the background. The result is a binary image, where the white part represents the foreground and the black part the background, which is ignored in the next steps. The results of the second thresholding are shown in Figure 6c. In this case, the obtained regions distinguish the test piece from the plate. The white area in this image represents the region of interest for the considered problem: the region in the image where the test piece is located. Applying this region to the original image in Figure 6a produces the result shown in Figure 6d. This image is obtained by multiplying the images in Figure 6a,c (in some references, this is described as applying the logical AND operator to the images).

Figure 6e shows the result of the next step in the preprocessing: contrast enhancement using CLAHE. The resulting image can now be used to detect the features required to estimate the movement. The location of the features for the example can be seen in Figure 6f.

As can be seen in Figure 6f, some features are located outside the boundary of the test piece. This is because features are calculated based on derivatives that use windows of pixels around the pixel at which the derivative is calculated. Cropping the image around the test piece would solve this problem, but some interesting features in the corners could be missed.

2.3.3. Feature Matching

Feature matching looks for correspondences between two sets of features. Features from the two considered images are compared and linked by minimizing the sum of squared differences between their feature vectors. The result is a set of putative correspondences, in most cases containing outliers.

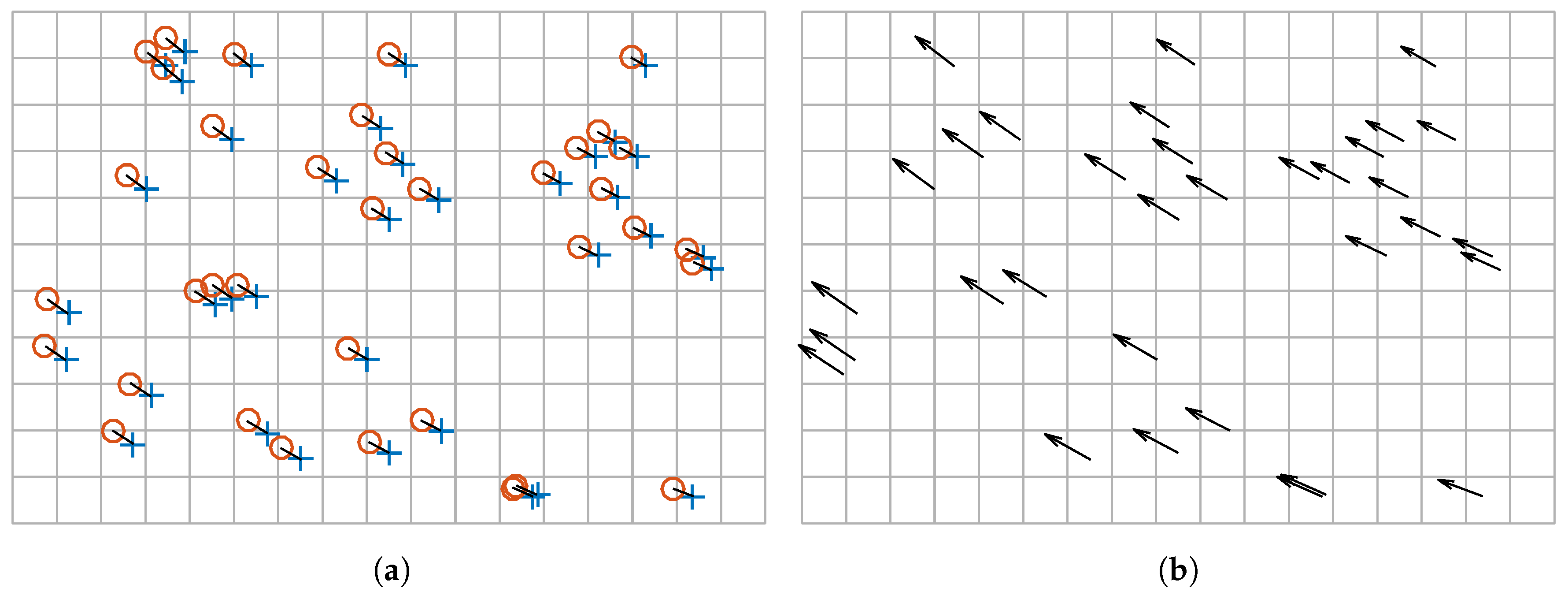

Figure 7 shows an example of feature matching. In this example, a second image of the same test piece, acquired later, is shown. When the second image is acquired, the test piece has been moved slightly to the left and upwards. The movement of the test piece is performed within the measurement plane; thus, the same projection parameters are used to rectify the second image. The feature detection procedure, including preprocessing and enhancement, is applied to the two images. The results are shown in Figure 7a,b. The goal of the feature matching procedure is to find the corresponding features between the two images. The initial result of the feature matching procedure can be seen in Figure 7c. A line connects the matched features between the two images, indicating the estimated movement from the crosses to the circles. The initial result includes many outliers that do not provide correct information about the movement of the test piece; ideally, all the matched features should indicate the same movement. Part of these outliers can be removed using heuristics. For example, the distances between feature vectors can be sorted, and only a percentage of the matches with the smallest distances selected as valid, in order to reject ambiguous matches. Matches in which multiple features in the first image correspond to the same feature in the second image can also be removed to reduce the number of outliers. Using these two heuristics, the number of outliers in the result of the feature matching procedure is reduced, as can be seen in Figure 7d. However, no heuristic can guarantee that the result of the matching is free of outliers. In the example, there are still clearly visible outliers.
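The SSD matching and the two heuristics described above can be sketched as follows. Descriptors are assumed to be rows of a NumPy array (for example, SURF descriptors computed elsewhere), `keep_fraction` is a hypothetical parameter, and this is not the matcher of any particular library.

```python
import numpy as np


def match_features(desc1, desc2, keep_fraction=0.5):
    """Match descriptor rows of desc1 to desc2 by minimum sum of squared differences (SSD).

    Two heuristics reduce outliers: only the `keep_fraction` best matches are kept, and any
    feature of image 2 claimed by more than one kept feature of image 1 is discarded.
    Returns a list of (index_in_image1, index_in_image2) pairs, best matches first.
    """
    d1 = np.asarray(desc1, dtype=float)
    d2 = np.asarray(desc2, dtype=float)
    ssd = ((d1[:, None, :] - d2[None, :, :]) ** 2).sum(axis=2)   # all pairwise SSDs
    best = ssd.argmin(axis=1)                                    # nearest neighbour in image 2
    cost = ssd[np.arange(len(d1)), best]
    n_keep = max(1, int(np.ceil(keep_fraction * len(d1))))
    kept = np.argsort(cost, kind="stable")[:n_keep]              # heuristic 1: smallest SSDs
    targets, counts = np.unique(best[kept], return_counts=True)
    unique = set(targets[counts == 1].tolist())                  # heuristic 2: one-to-one only
    return [(int(i), int(best[i])) for i in kept if int(best[i]) in unique]
```

For example, if two features of the first image both match feature 0 of the second image, both matches are dropped, because at most one of them can be correct. As the text notes, neither heuristic guarantees an outlier-free result.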

When using infrared images, features can also change over time, because temperature differences diminish due to heat diffusion. Therefore, it is not possible to find matching features between images acquired far apart in time. In this work, feature matching is applied between consecutively acquired images, where the features are expected to remain constant. However, temperature changes could still generate some outliers, which need to be considered in the model estimation.

2.3.4. Model Estimation

The movement model is described using a 2D rigid transformation. The coefficients of this model must be estimated using the result of the feature matching procedure: a set of point correspondences. These correspondences provide information about the movement of the material in the image. In this work, the method used to estimate a rigid transformation is a fast 2D method [29].

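Considering two sets of $n$ points $\mathcal{P} = \{\mathbf{p}_i\}$ and $\mathcal{Q} = \{\mathbf{q}_i\}$ in $\mathbb{R}^2$, where $\mathbf{p}_i$ and $\mathbf{q}_i$ represent the 2D coordinates of the $i$-th point in $\mathcal{P}$ and $\mathcal{Q}$, the rigid transformation that maps $\mathcal{P}$ into $\mathcal{Q}$ can be described as

$$\mathbf{q}_i = \mathbf{R}\,\mathbf{p}_i + \mathbf{t}, \tag{7}$$

where $\mathbf{R}$ is the rotation and $\mathbf{t}$ the translation.

Solving (7) requires minimizing $E$, which is obtained using the least squares error criterion and can be defined as

$$E = \sum_{i=1}^{n}\left\|\mathbf{R}\,\mathbf{p}_i + \mathbf{t} - \mathbf{q}_i\right\|^2. \tag{8}$$

The value of $\mathbf{t}$ that minimizes $E$ must satisfy

$$\frac{\partial E}{\partial \mathbf{t}} = 2\sum_{i=1}^{n}\left(\mathbf{R}\,\mathbf{p}_i + \mathbf{t} - \mathbf{q}_i\right) = \mathbf{0}. \tag{9}$$

Therefore, $\mathbf{t}$ can be calculated using

$$\mathbf{t} = \bar{\mathbf{q}} - \mathbf{R}\,\bar{\mathbf{p}}, \tag{10}$$

where $\bar{\mathbf{p}} = \frac{1}{n}\sum_{i=1}^{n}\mathbf{p}_i$ and $\bar{\mathbf{q}} = \frac{1}{n}\sum_{i=1}^{n}\mathbf{q}_i$ are the centroids of $\mathcal{P}$ and $\mathcal{Q}$.

Substituting the centered points $\mathbf{p}'_i = \mathbf{p}_i - \bar{\mathbf{p}}$ and $\mathbf{q}'_i = \mathbf{q}_i - \bar{\mathbf{q}}$ in (8) yields

$$E = \sum_{i=1}^{n}\left\|\mathbf{R}\,\mathbf{p}'_i - \mathbf{q}'_i\right\|^2. \tag{11}$$

The angle of rotation $\theta$ defines the rotation matrix. The rotation of point $\mathbf{p}'_i = (x'_i, y'_i)^T$ using this angle is

$$\mathbf{R}\,\mathbf{p}'_i = \begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix}\begin{pmatrix} x'_i \\ y'_i \end{pmatrix} = \begin{pmatrix} x'_i\cos\theta - y'_i\sin\theta \\ x'_i\sin\theta + y'_i\cos\theta \end{pmatrix}. \tag{12}$$

Substituting (12) in (11) gives an equation where $E$ only depends on $\theta$. Setting $\partial E/\partial\theta = 0$ and solving for $\theta$ results in

$$\theta = \operatorname{atan2}\!\left(\sum_{i=1}^{n}\left(x'_i\,v'_i - y'_i\,u'_i\right),\; \sum_{i=1}^{n}\left(x'_i\,u'_i + y'_i\,v'_i\right)\right), \tag{13}$$

where $\mathbf{q}'_i = (u'_i, v'_i)^T$.

In order to calculate the translation $\mathbf{t}$, the value of $\mathbf{R}$ must be substituted in (10).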
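The closed-form solution translates directly into a few lines of NumPy: center both point sets, obtain the angle from the two sums in (13), and recover the translation with (10). The function name is illustrative.

```python
import numpy as np


def estimate_rigid_2d(p, q):
    """Least-squares 2D rigid transform q ~ R p + t via centroids (10) and the angle (13)."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    p_bar, q_bar = p.mean(axis=0), q.mean(axis=0)       # centroids
    pc, qc = p - p_bar, q - q_bar                       # centered points
    num = np.sum(pc[:, 0] * qc[:, 1] - pc[:, 1] * qc[:, 0])
    den = np.sum(pc[:, 0] * qc[:, 0] + pc[:, 1] * qc[:, 1])
    theta = np.arctan2(num, den)                        # (13)
    R = np.array([[np.cos(theta), -np.sin(theta)],
                  [np.sin(theta), np.cos(theta)]])
    t = q_bar - R @ p_bar                               # (10)
    return theta, R, t
```

Using `atan2` rather than a plain arctangent of the ratio resolves the quadrant, so rotations of any angle in $(-\pi, \pi]$ are recovered.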

The method used to estimate the rigid transformation between correspondences should only be applied when there are no outliers in the data. Correspondence outliers would lead to major errors in the resulting estimated transformation. Therefore, the method to estimate the rigid transformation cannot be applied to the matched features directly.

The proposed solution for the estimation of the rigid transformation using noisy correspondences is MLESAC [30]. This robust estimator is an enhanced version of the Random Sample Consensus (RANSAC) algorithm [31], which is widely applied to estimate mathematical models robustly. The algorithm randomly samples the available correspondences and estimates rigid transformations using the previously described method. Each hypothesis uses only the minimum number of correspondences required to estimate a rigid transformation, that is, two point correspondences. Among all the putative solutions, the one that maximizes the likelihood is chosen.
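MLESAC scores each hypothesis by the likelihood of all residuals under a mixture of a Gaussian inlier distribution and a uniform outlier distribution, instead of simply counting inliers as RANSAC does. The sketch below is a simplified version under stated assumptions, not the implementation used in this work: the inlier standard deviation `sigma` (in world units) is a fixed hypothetical parameter, the outlier density is uniform over the bounding box of the target points, the mixing weight is estimated with a few EM steps, and the model is refitted on the inliers at the end. The closed-form estimator of (10) and (13) is repeated so that the block is self-contained.

```python
import numpy as np


def fit_rigid(p, q):
    """Closed-form least-squares rigid transform q ~ R p + t (centroids + atan2 angle)."""
    pb, qb = p.mean(axis=0), q.mean(axis=0)
    pc, qc = p - pb, q - qb
    theta = np.arctan2(np.sum(pc[:, 0] * qc[:, 1] - pc[:, 1] * qc[:, 0]),
                       np.sum(pc[:, 0] * qc[:, 0] + pc[:, 1] * qc[:, 1]))
    R = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
    return R, qb - R @ pb


def mlesac_rigid(p, q, sigma=1.0, iters=500, em_steps=5, seed=0):
    """MLESAC-style robust estimation of a 2D rigid transform from putative matches."""
    p, q = np.asarray(p, dtype=float), np.asarray(q, dtype=float)
    rng = np.random.default_rng(seed)
    area = max(float(np.prod(q.max(axis=0) - q.min(axis=0))), 1.0)
    p_out = 1.0 / area                                     # uniform outlier density
    best_nll, best_inliers = np.inf, None
    for _ in range(iters):
        i, j = rng.choice(len(p), size=2, replace=False)   # minimal sample: two matches
        if np.allclose(p[i], p[j]):
            continue
        R, t = fit_rigid(p[[i, j]], q[[i, j]])
        e2 = np.sum((p @ R.T + t - q) ** 2, axis=1)        # squared residuals of all matches
        p_in = np.exp(-e2 / (2 * sigma**2)) / (2 * np.pi * sigma**2)
        gamma = 0.5
        for _ in range(em_steps):                          # EM update of the inlier fraction
            z = gamma * p_in / (gamma * p_in + (1 - gamma) * p_out)
            gamma = float(np.clip(z.mean(), 1e-6, 1 - 1e-6))
        nll = -np.sum(np.log(gamma * p_in + (1 - gamma) * p_out))   # negative log-likelihood
        if nll < best_nll:
            best_nll, best_inliers = nll, z > 0.5
    R, t = fit_rigid(p[best_inliers], q[best_inliers])     # least-squares refit on inliers
    return R, t, best_inliers
```

Scoring by likelihood rather than by an inlier count rewards hypotheses whose inlier residuals are small, not merely below a threshold, which is the main difference from plain RANSAC.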

Figure 8 shows the results of the motion estimation procedure for the considered example. As can be seen in Figure 8a, only some of the correspondences in Figure 7d are considered inliers in the robust estimation of the movement model. The final result, represented in Figure 8b, is an accurate estimation of the movement of the test piece between the two images. The result of the robust estimation is a 2D rigid transformation that accurately describes the movement of the material in the measurement plane.

2.4. Motion Compensation

Motion compensation can be applied in two equivalent ways. The first possible approach is to move the pixels in the second image according to the inverse of the estimated movement. This approach requires image reinterpolation.

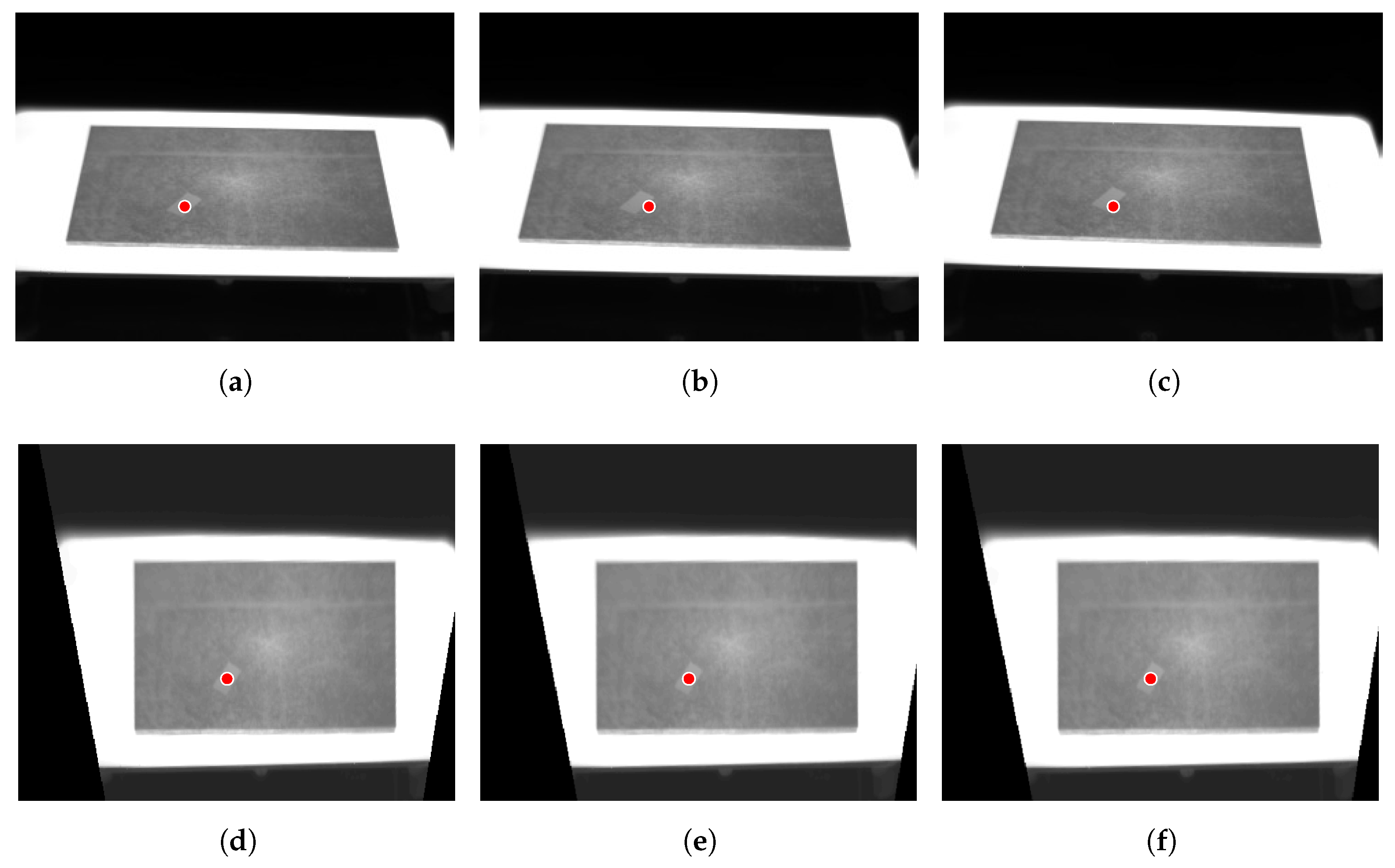

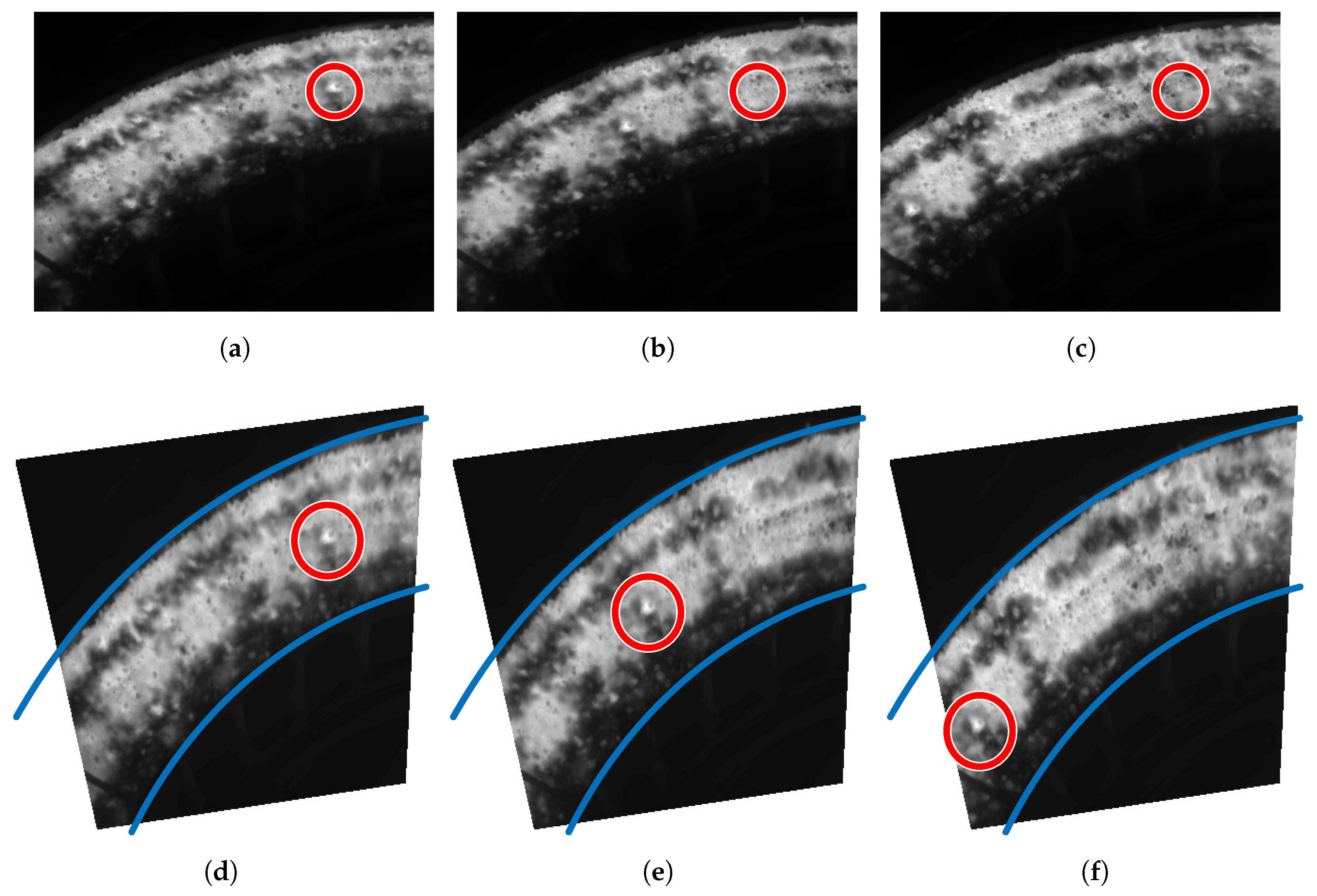

Figure 9 illustrates the image reinterpolation approach. The first row shows the raw infrared images acquired while the test piece is moved. In the first image (Figure 9a), a circular measurement region is placed on the electrical tape stuck on the test piece. As expected, when the test piece is moved, the measurement region no longer coincides with the center of the tape. The second row shows the images after motion compensation. In this case, the circular measurement region always stays at the same position relative to the electrical tape, regardless of the movement.

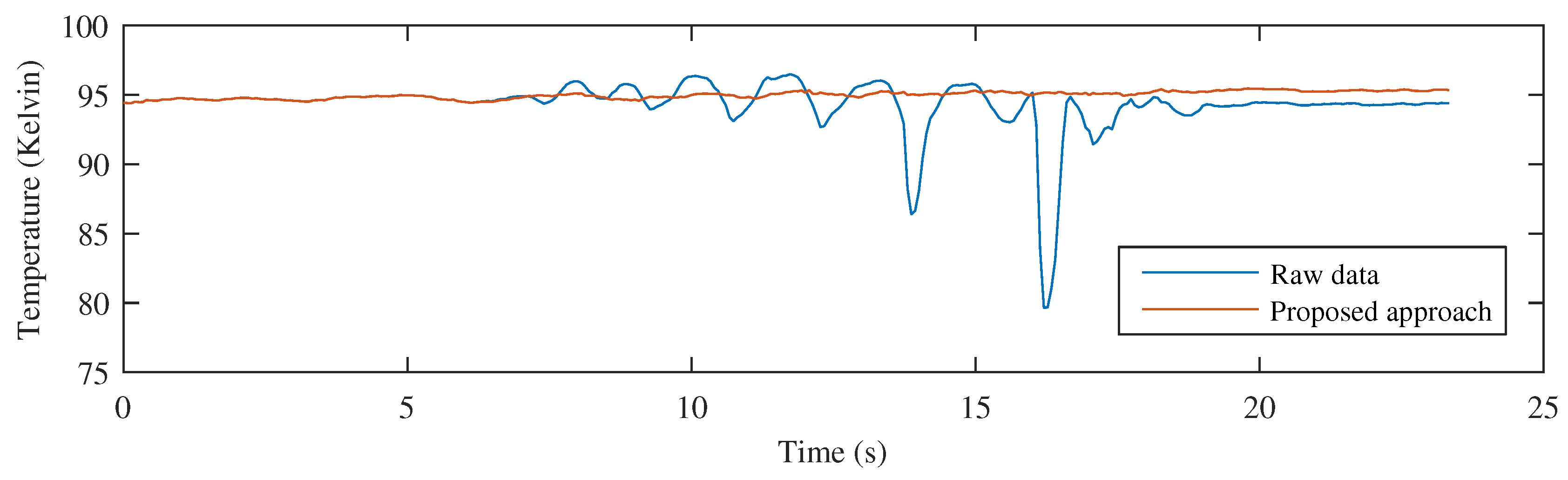

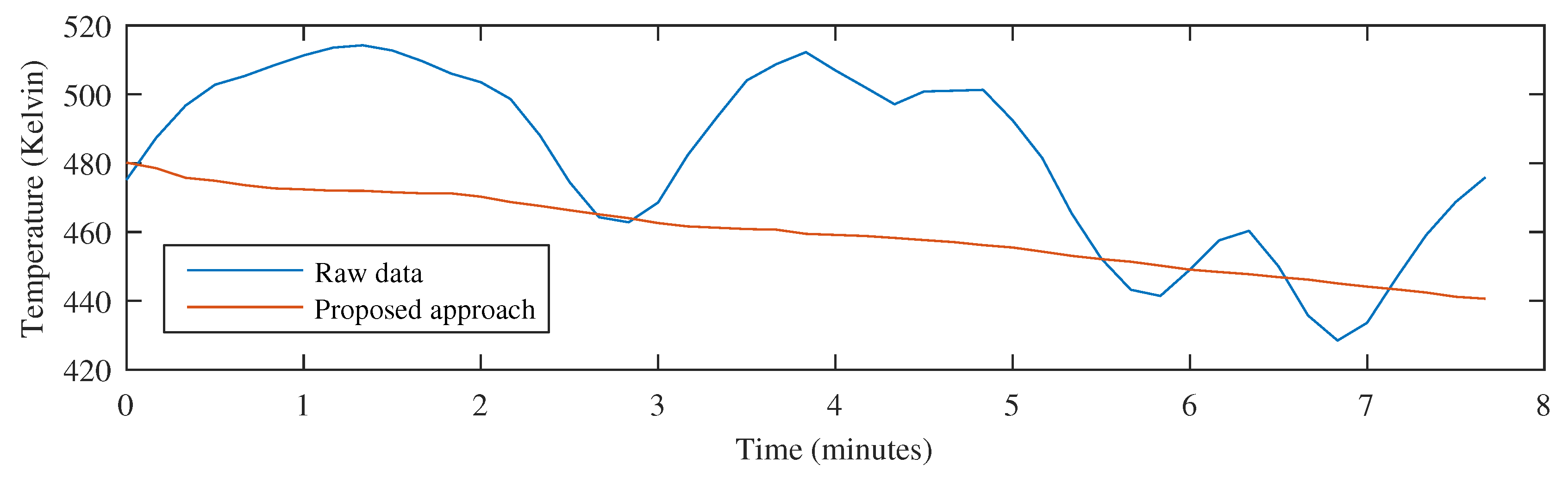

Monitoring the temperature in the circular measurement region of the previous example provides the results shown in Figure 10. When the raw images are used, the circular measurement region fails to stay at the center of the tape, as can be seen in Figure 9. Therefore, the resulting signal does not provide the correct temperature of the tape over time. However, when the movement is compensated using the proposed approach, the position of the measurement region is always correct, resulting in an accurate signal representing the temperature time history of the inspected material.

The second approach to motion compensation is to move the measurement regions according to the estimated movement of the monitored material. This approach does not require image reinterpolation; thus, it is faster and produces the same results.

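The motion estimation procedure produces a 2D rigid transformation $\mathbf{T}_{i-1,i}$ between every two consecutively acquired images, $I_{i-1}$ and $I_i$. Expressed as $3\times 3$ homogeneous matrices, the obtained transformations can be composed to obtain the transformation from the first image to the current image $i$ using

$$\mathbf{T}_{1,i} = \mathbf{T}_{i-1,i}\,\mathbf{T}_{i-2,i-1}\cdots\mathbf{T}_{1,2}. \tag{14}$$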

Using (14), any point in one image can be transformed to its corresponding position in any other image of the sequence, by applying the composed transformation or its inverse. Therefore, it can be used to compensate for the movement of the material.
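Moving a measurement region instead of the pixels amounts to accumulating the pairwise transformations and applying the running product to the region's centre. A minimal sketch, assuming each pairwise transformation maps points of image $i-1$ to image $i$; the helper names are illustrative.

```python
import numpy as np


def rigid(theta, tx, ty):
    """Homogeneous 2D rigid transformation (rotation theta in radians, then translation)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, tx], [s, c, ty], [0.0, 0.0, 1.0]])


def accumulate(pairwise):
    """Compose T_{1,2}, T_{2,3}, ... into [T_{1,1} = I, T_{1,2}, T_{1,3}, ...] as in (14)."""
    total = [np.eye(3)]
    for T in pairwise:
        total.append(T @ total[-1])      # T_{1,i} = T_{i-1,i} T_{1,i-1}
    return total


def track_region(center_xy, pairwise):
    """Position of a measurement-region centre, defined in image 1, in every image."""
    p = np.array([center_xy[0], center_xy[1], 1.0])
    return [(M @ p)[:2] for M in accumulate(pairwise)]


# The piece moves 1 unit along x, then 2 units along y.
trajectory = track_region((5.0, 5.0), [rigid(0.0, 1.0, 0.0), rigid(0.0, 0.0, 2.0)])
```

Only one small matrix product per frame is needed, which is why this approach is faster than reinterpolating every image. Note that small errors in each pairwise estimate accumulate along the product.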