Detecting and Classifying Human Touches in a Social Robot Through Acoustic Sensing and Machine Learning

Abstract

1. Introduction

2. Related Work

2.1. Tactile Human–Robot Interaction

2.2. Interaction through Acoustic Sensing

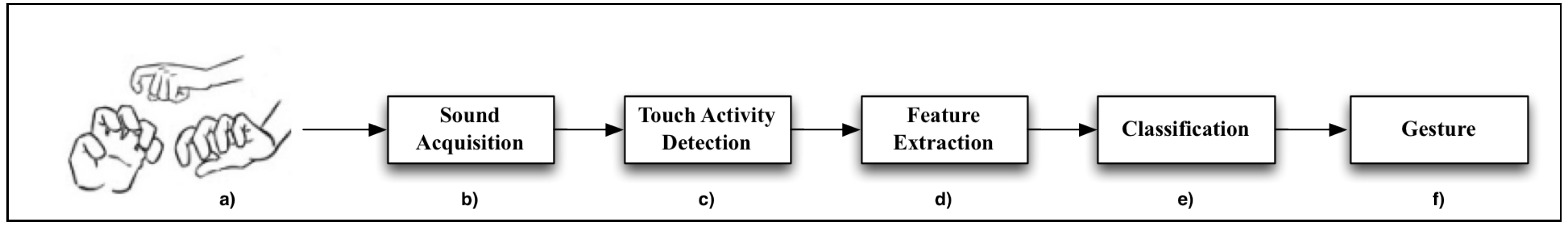

3. System Phases

4. System Setup

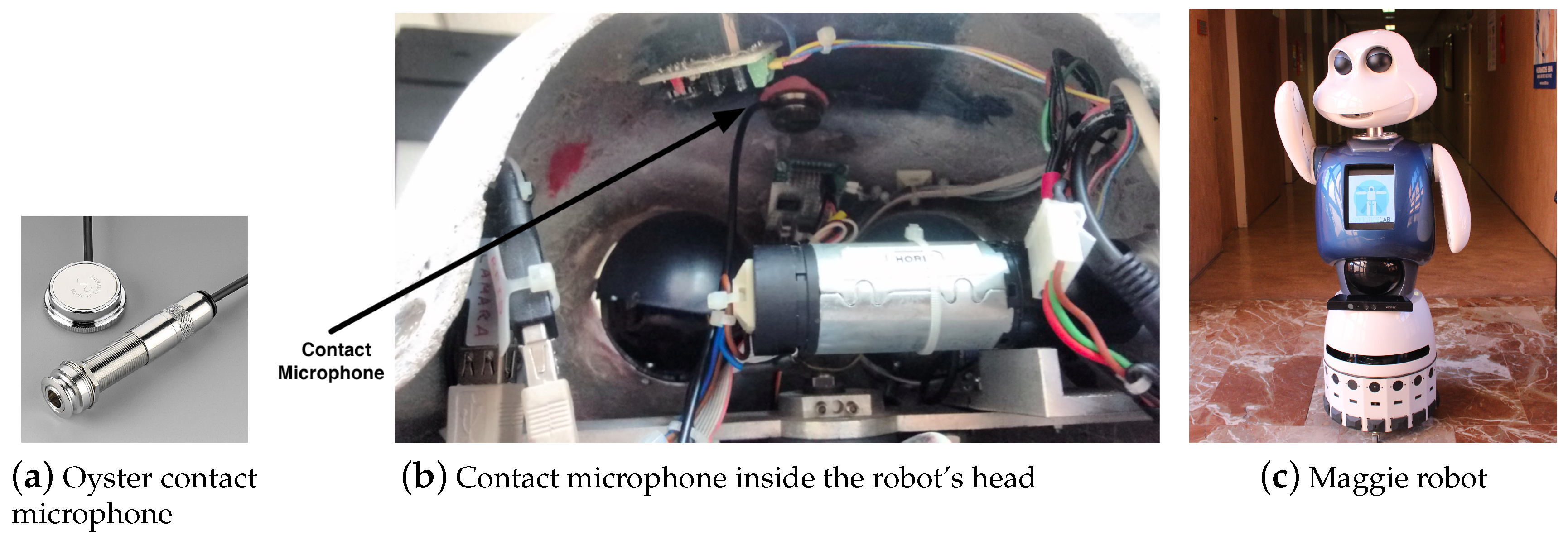

4.1. Contact Microphones

4.2. Integration of Contact Microphones in Our Social Robot

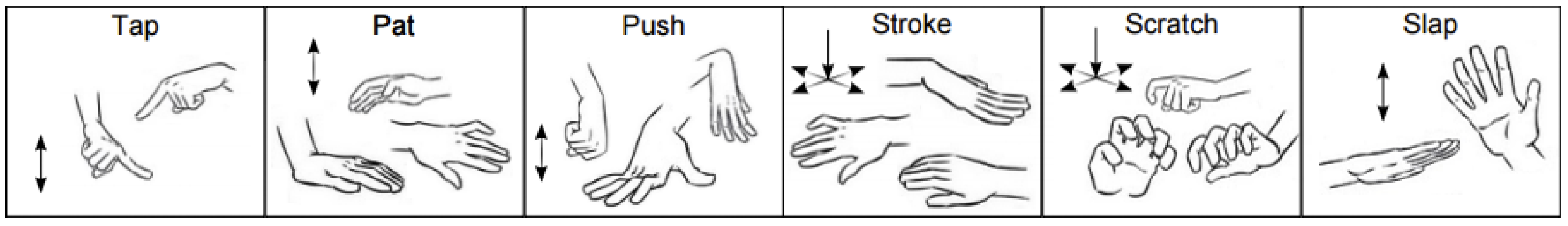

4.3. Set of Touch Gestures

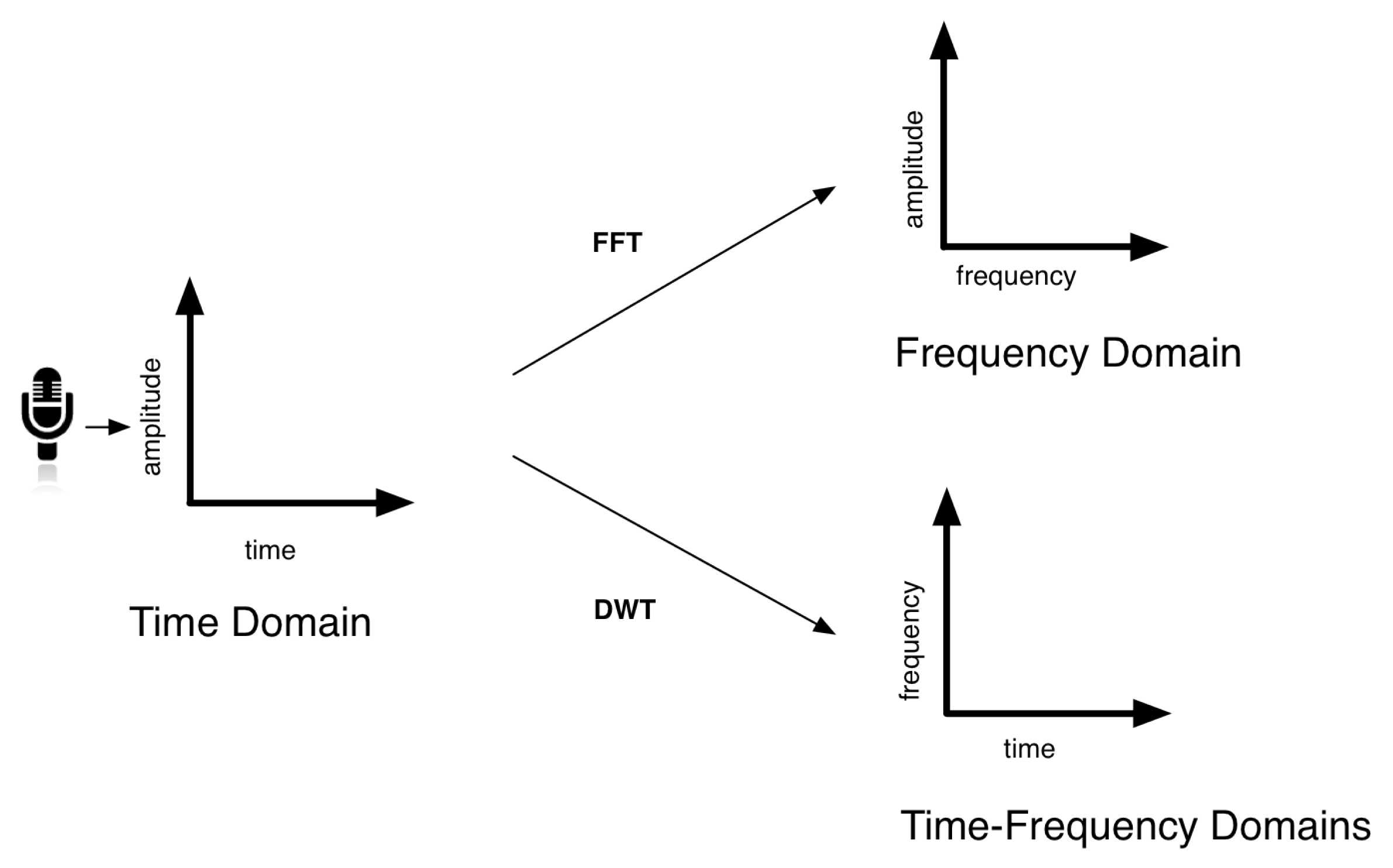

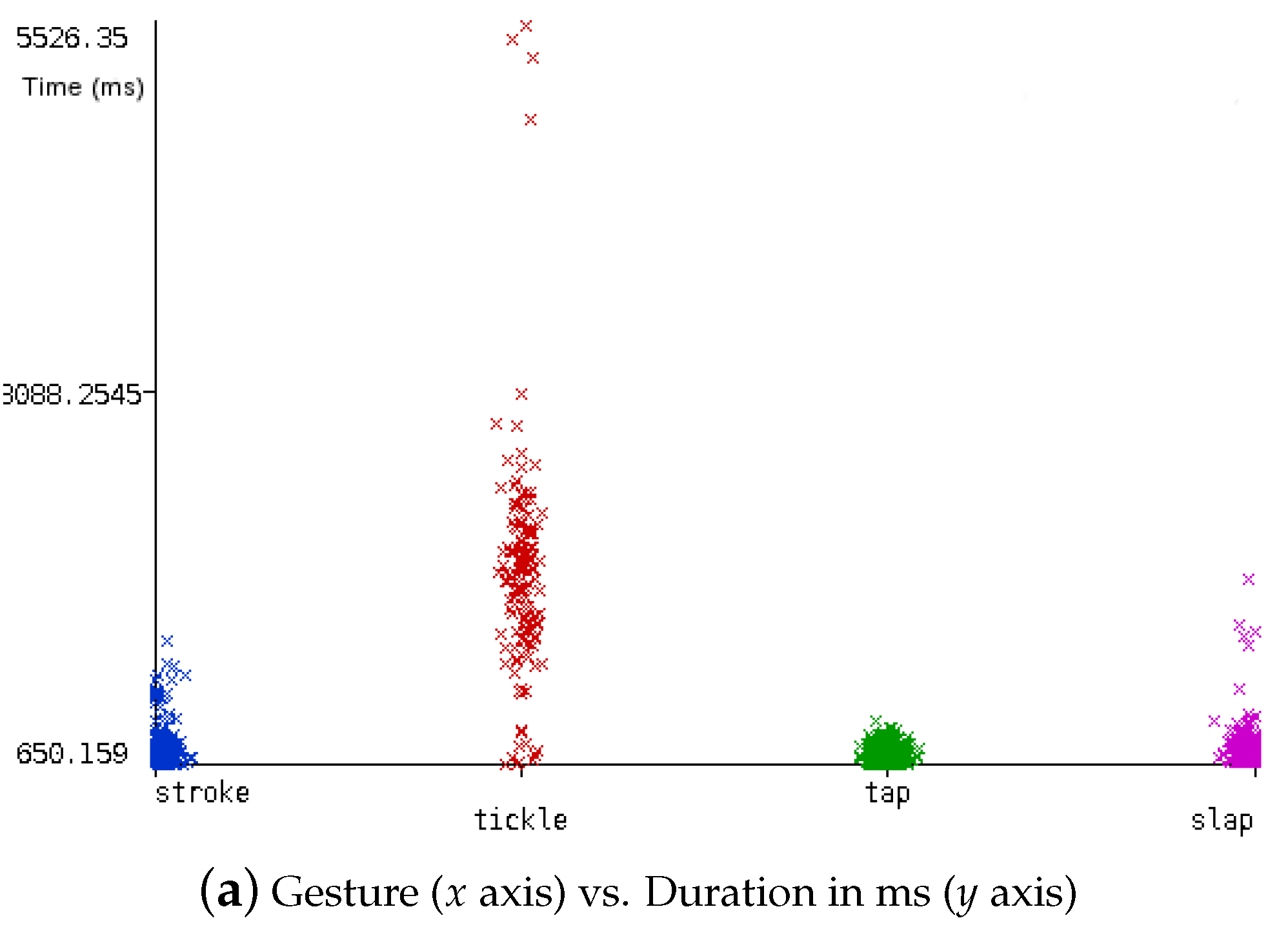

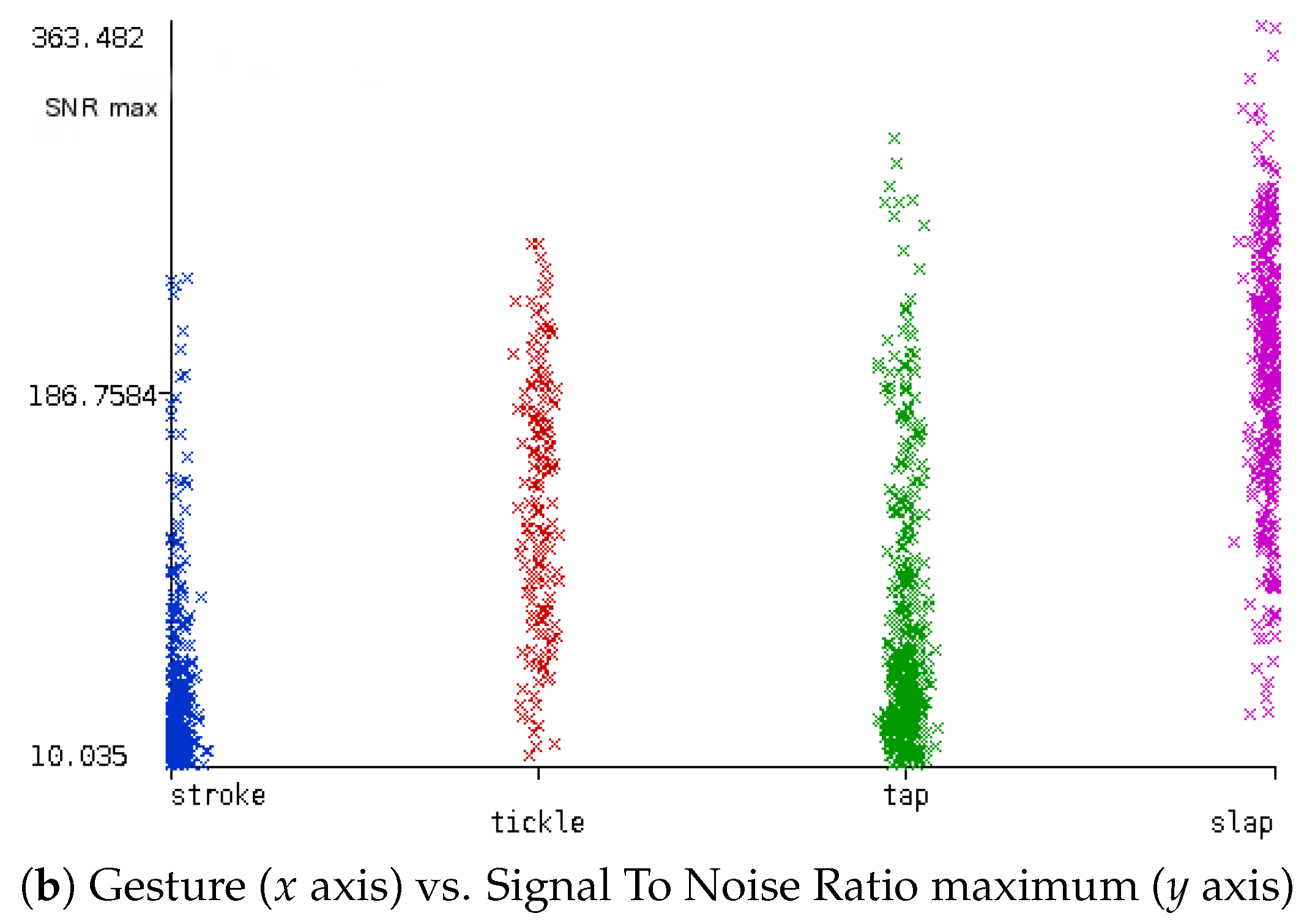

5. Data Analysis

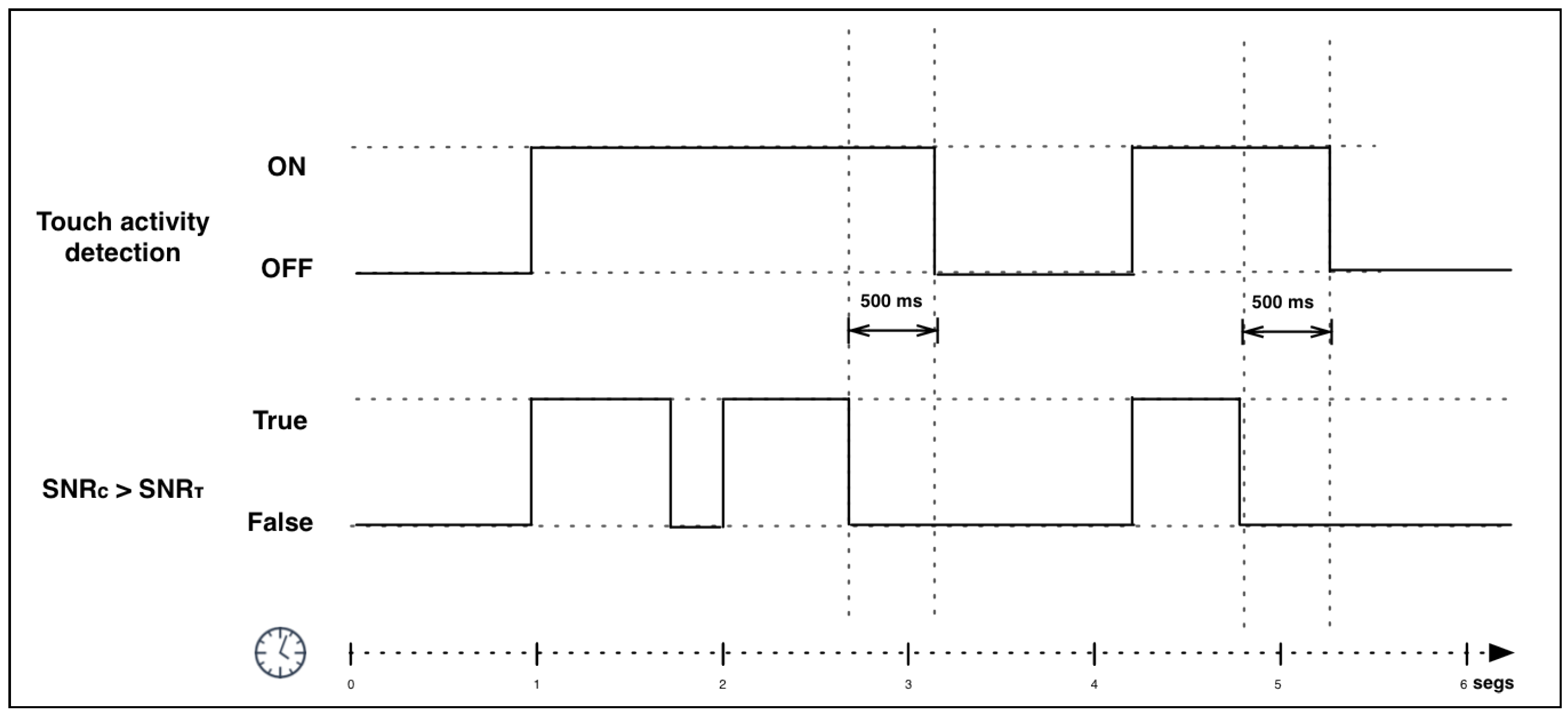

5.1. Building the Dataset

5.2. System Validation

5.3. System Testing

6. Discussion

7. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix A

| Sensor Technology | Advantages | Disadvantages |
|---|---|---|
| Resistive | Wide dynamic range; durability; good overload tolerance. | Hysteresis in some designs; elastomer needs to be optimized for both mechanical and electrical properties; limited spatial resolution compared to vision sensors; large numbers of wires may have to be brought away from the sensor; monotonic response, but often not linear. |
| Piezoelectric | Wide dynamic range; durability; good mechanical properties of piezo/pyroelectric materials; temperature as well as force sensing capability. | Difficulty of separating piezoelectric from pyroelectric effects; inherently dynamic (output decays to zero for constant load); difficulty of scanning elements; good solutions are complex. |
| Capacitive | Wide dynamic range; linear response; robust. | Susceptible to noise; some dielectrics are temperature sensitive; capacitance decreases with physical size, ultimately limiting spatial resolution. |
| Magnetic transducer | Wide dynamic range; large displacements possible; simple. | Poor spatial resolution; mechanical problems when sensing on slopes. |
| Mechanical transducer | Well-known technology; good for probe applications. | Complex for array constructions; limited spatial resolution. |
| Optical transducer | Very high resolution; compatible with vision sensing technology; no electrical interference problems; processing electronics can be remote from sensor; low cabling requirements. | Dependence on elastomer in some designs; some hysteresis. |

Appendix B

| Feature | Description | Domain |
|---|---|---|
| Pitch | Frequency perceived by the human ear. | Time, Frequency, Time-Frequency |
| Flux | Sum, across one analysis window, of the squared difference between the magnitude spectra of successive signal frames. In other words, it measures how much the magnitude spectrum of the signal varies over time. | Frequency |
| RollOff-95 | Frequency below which 95% of the signal energy is contained. | Frequency |
| Centroid | Center of mass (magnitude-weighted mean frequency) of the signal spectrum, that is, the frequency around which the spectrum is concentrated. It is frequently used to characterize the tone or timbre of a sound. | Frequency |
| Zero-crossing rate (ZCR) | Number of times the signal crosses the abscissa (zero amplitude). | Time |
| Root Mean Square (RMS) | Root-mean-square amplitude of the signal, a measure of its volume. | Time |
| Signal-to-noise ratio (SNR) | Relates the touch signal to the noise signal. | Time |
| Duration | Duration of the contact in time. | Time |
| Number of contacts per minute | A touch gesture may consist of several touches. | Time |
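Several of the features in the table above can be sketched in a few lines of code. The following is a minimal illustration, not the authors' extraction pipeline: the sample rate, frame length, and synthetic 1 kHz tone are assumptions chosen for the example, the function names are hypothetical, and a naive DFT stands in for an FFT so that the block needs only the standard library. The centroid is computed as the magnitude-weighted mean frequency, the usual definition of the spectral centroid.

```python
import cmath
import math


def rms(frame):
    """Root-mean-square amplitude of a frame of samples."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))


def zero_crossings(frame):
    """Number of sign changes between consecutive samples."""
    return sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))


def magnitude_spectrum(frame):
    """Magnitudes of DFT bins 0..N/2 (naive O(N^2) transform, fine for a sketch)."""
    n = len(frame)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(frame)))
        for k in range(n // 2 + 1)
    ]


def centroid(mags, sample_rate, n):
    """Magnitude-weighted mean frequency in Hz."""
    total = sum(mags)
    if total == 0:
        return 0.0
    return sum(k * sample_rate / n * m for k, m in enumerate(mags)) / total


def rolloff(mags, sample_rate, n, fraction=0.95):
    """Lowest bin frequency at which the cumulative energy reaches `fraction`."""
    energy = [m * m for m in mags]
    threshold = fraction * sum(energy)
    running = 0.0
    for k, e in enumerate(energy):
        running += e
        if running >= threshold:
            return k * sample_rate / n
    return (len(mags) - 1) * sample_rate / n


def flux(prev_mags, mags):
    """Sum of squared differences between two successive magnitude spectra."""
    return sum((b - a) ** 2 for a, b in zip(prev_mags, mags))


# Assumed test signal: a 1 kHz sine sampled at 8 kHz, 64 samples per frame.
# The half-sample phase offset keeps every sample away from exactly zero.
SAMPLE_RATE = 8000
N = 64
tone = [math.sin(2 * math.pi * 1000 * (i + 0.5) / SAMPLE_RATE) for i in range(N)]
silence = [0.0] * N

tone_mags = magnitude_spectrum(tone)
features = {
    "rms": rms(tone),
    "zero_crossings": zero_crossings(tone),
    "centroid_hz": centroid(tone_mags, SAMPLE_RATE, N),
    "rolloff95_hz": rolloff(tone_mags, SAMPLE_RATE, N),
    "flux_from_silence": flux(magnitude_spectrum(silence), tone_mags),
}
print(features)
```

For a pure tone, the centroid and the 95% roll-off both coincide with the tone frequency, which makes the example easy to check by hand. Real contact-microphone recordings would spread energy over many bins, and a production system would use an FFT library rather than this quadratic-time transform.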

| Gesture | Contact Area | Intensity | Duration | Intention | Example |
|---|---|---|---|---|---|
| Stroke | med-large | low | med-long | empathy, compassion |  |
| Tickle | med | med | med-long | fun, joy |  |
| Tap | small | low | short | advise, warn |  |
| Slap | small | high | short | discipline, punishment, sanction |  |

| Classifier | F-Score |
|---|---|
| RF | 1 |
| MLP | 0.93 |
| LMT | 0.82 |
| CNN | 0.81 |
| SVM | 0.80 |
| DL4J | 0.76 |

| Classifier | F-Score |
|---|---|
| LMT | 0.81 |
| RF | 0.79 |
| DTNB | 0.78 |
| MLP | 0.75 |
| CNN | 0.74 |
| DL4J | 0.73 |
| SVM | 0.72 |

| Gesture | Stroke | Tickle | Tap | Slap |
|---|---|---|---|---|
| Stroke | 94 | 21 | 33 | 15 |
| Tickle | 6 | 122 | 5 | 11 |
| Tap | 8 | 0 | 146 | 7 |
| Slap | 7 | 0 | 4 | 155 |
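To relate a confusion matrix like the one above to an F-score, the following sketch derives per-gesture precision, recall, and F-score from the counts, plus a support-weighted average. It is an illustration only: the table does not state its orientation, so the code assumes that rows are the actual gestures and columns the predicted ones, and the function names are hypothetical.

```python
GESTURES = ["Stroke", "Tickle", "Tap", "Slap"]

# Counts copied from the confusion matrix above.
# ASSUMPTION: rows are actual gestures, columns are predicted gestures.
CONFUSION = [
    [94, 21, 33, 15],
    [6, 122, 5, 11],
    [8, 0, 146, 7],
    [7, 0, 4, 155],
]


def per_class_scores(matrix, labels):
    """Precision, recall, F-score and support for each class."""
    scores = {}
    for i, label in enumerate(labels):
        true_pos = matrix[i][i]
        support = sum(matrix[i])                   # row total: actual instances
        predicted = sum(row[i] for row in matrix)  # column total: predictions
        precision = true_pos / predicted if predicted else 0.0
        recall = true_pos / support if support else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        scores[label] = {
            "precision": precision,
            "recall": recall,
            "f1": f1,
            "support": support,
        }
    return scores


def weighted_f1(scores):
    """Average of per-class F-scores, weighted by class support."""
    total = sum(s["support"] for s in scores.values())
    return sum(s["f1"] * s["support"] for s in scores.values()) / total


def accuracy(matrix):
    """Fraction of instances on the diagonal."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / sum(sum(row) for row in matrix)


scores = per_class_scores(CONFUSION, GESTURES)
overall_f1 = weighted_f1(scores)
overall_accuracy = accuracy(CONFUSION)

for label, s in scores.items():
    print(f"{label:7s} precision={s['precision']:.2f} "
          f"recall={s['recall']:.2f} f1={s['f1']:.2f} n={s['support']}")
print(f"weighted F-score={overall_f1:.2f} accuracy={overall_accuracy:.2f}")
```

Under the row-as-actual assumption, the support-weighted F-score comes out at about 0.81, and Stroke is the weakest class, with a recall of 94/163 (about 0.58) because many strokes are labelled as Tap or Tickle. If the matrix is in fact transposed, precision and recall swap for each class and the weighted average shifts slightly.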

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Alonso-Martín, F.; Gamboa-Montero, J.J.; Castillo, J.C.; Castro-González, Á.; Salichs, M.Á. Detecting and Classifying Human Touches in a Social Robot Through Acoustic Sensing and Machine Learning. Sensors 2017, 17, 1138. https://doi.org/10.3390/s17051138