Hybrid Orientation Based Human Limbs Motion Tracking Method

Abstract

1. Introduction

2. Materials and Methods

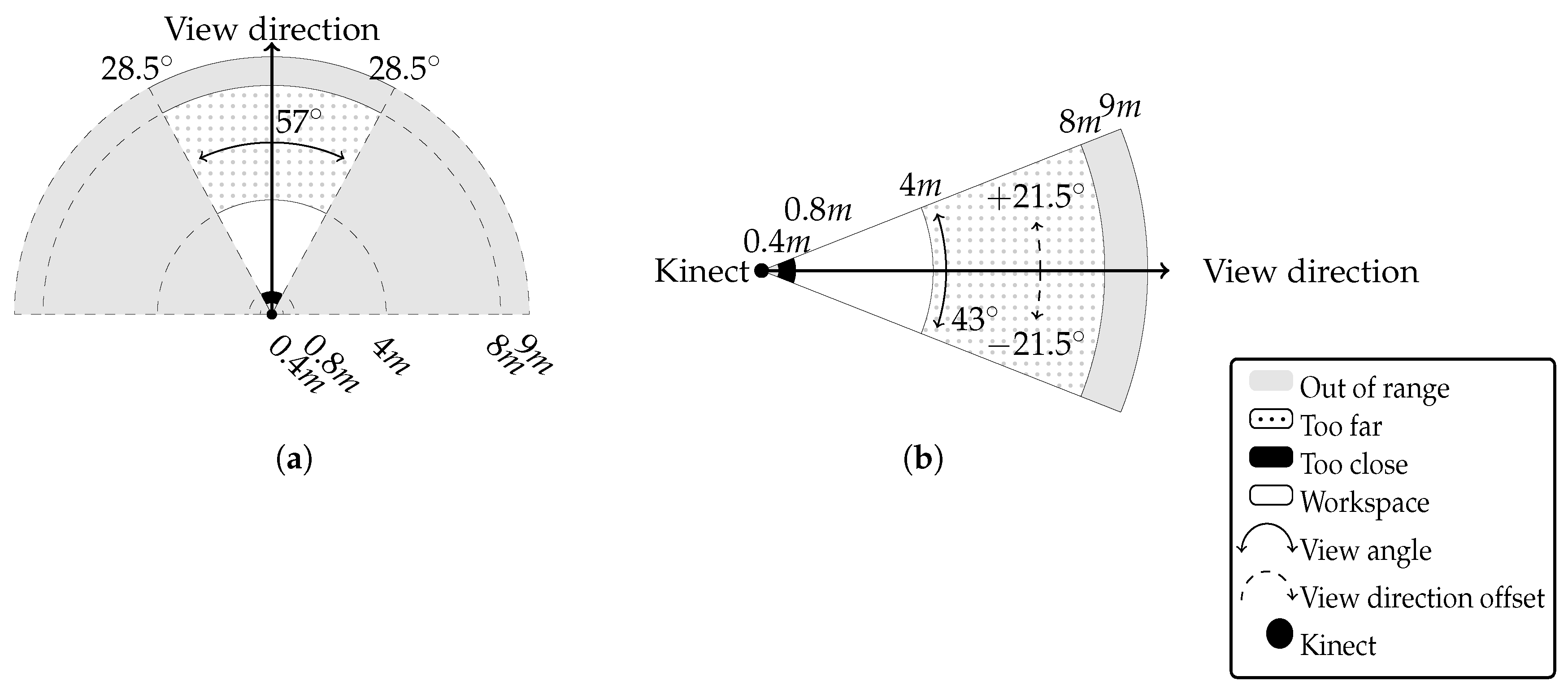

2.1. Microsoft Kinect Controller Characteristics

2.2. IMU Characteristics

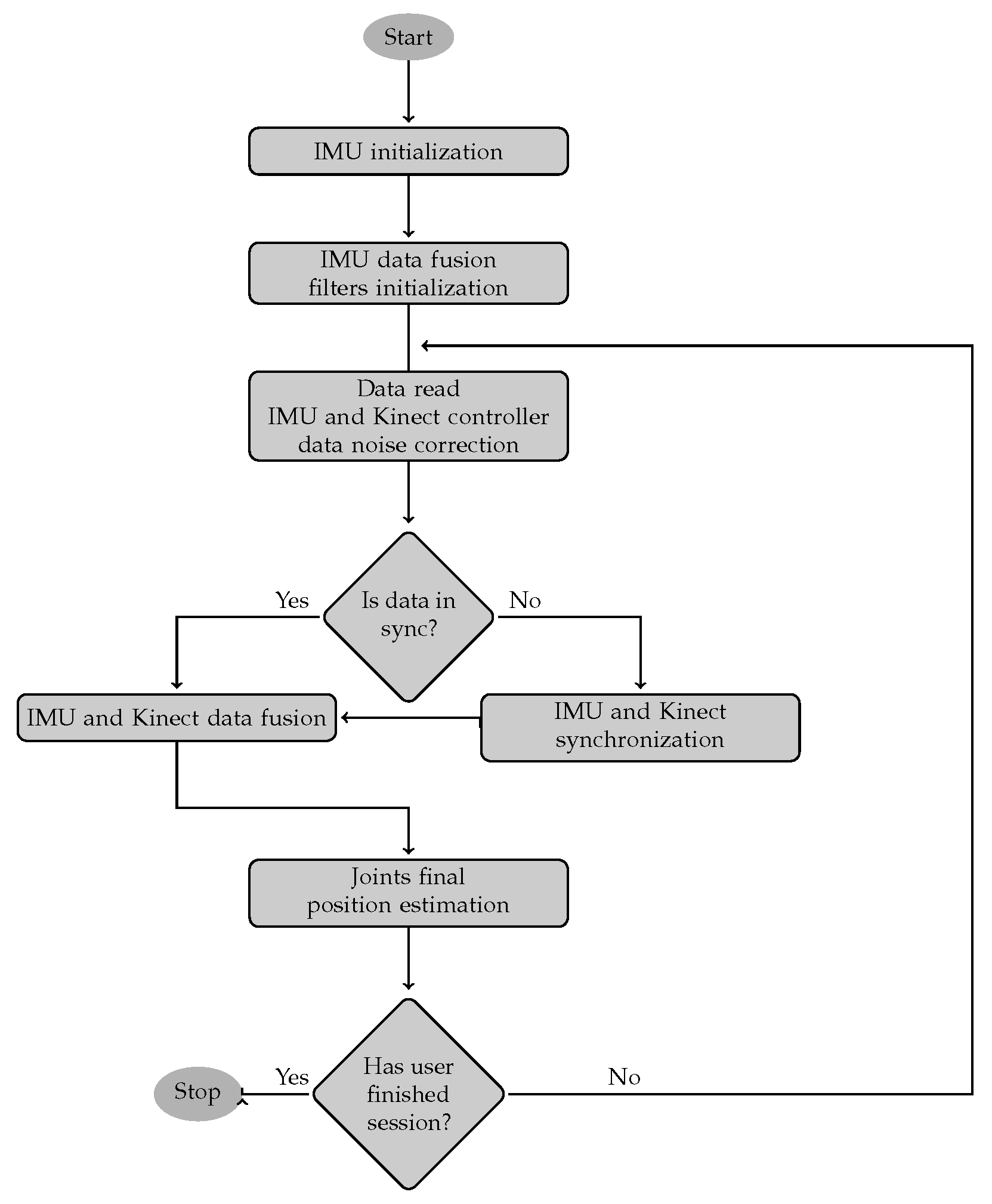

2.3. Hybrid, Orientation Based, Human Limbs Motion Tracking Method

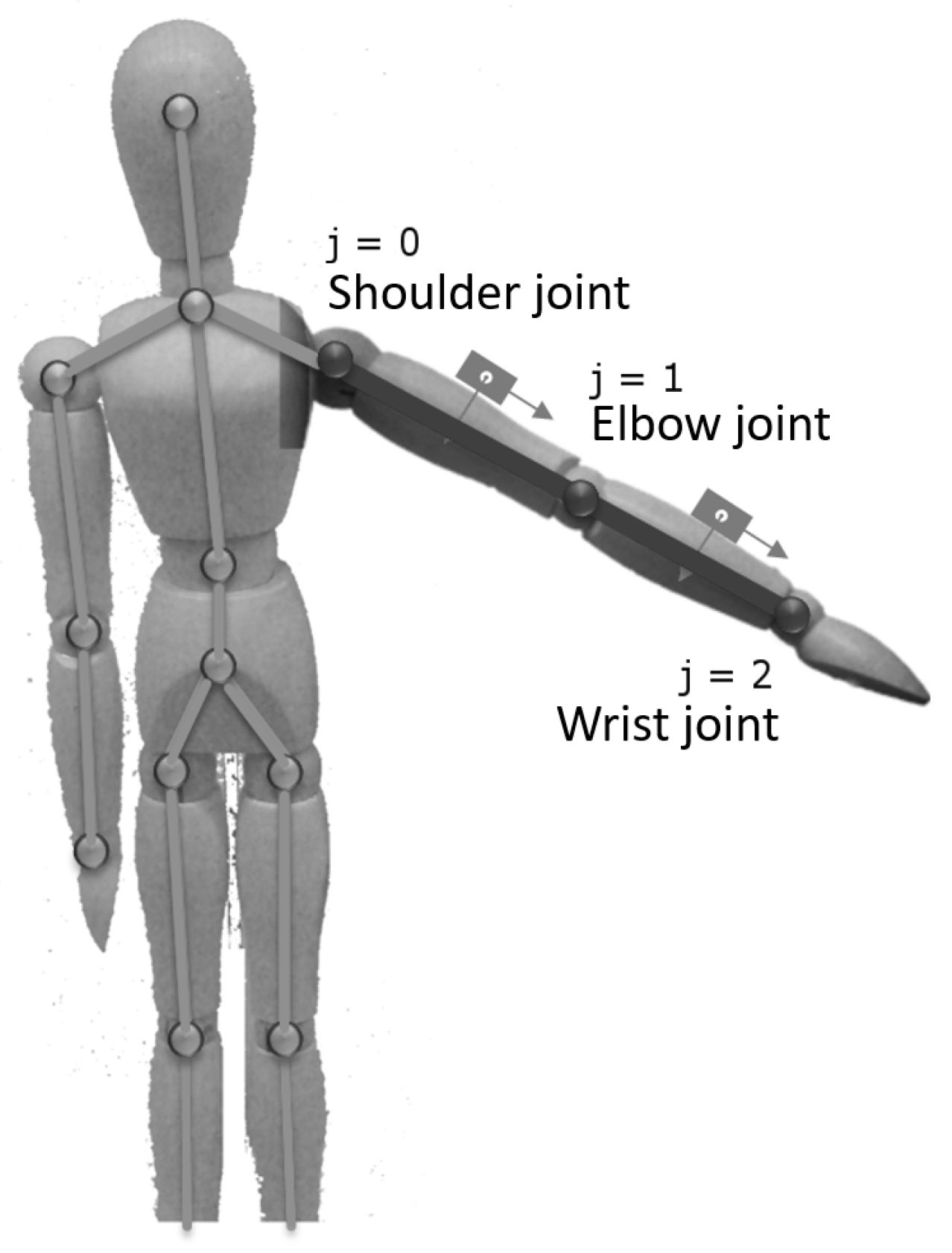

2.3.1. Coordination Space

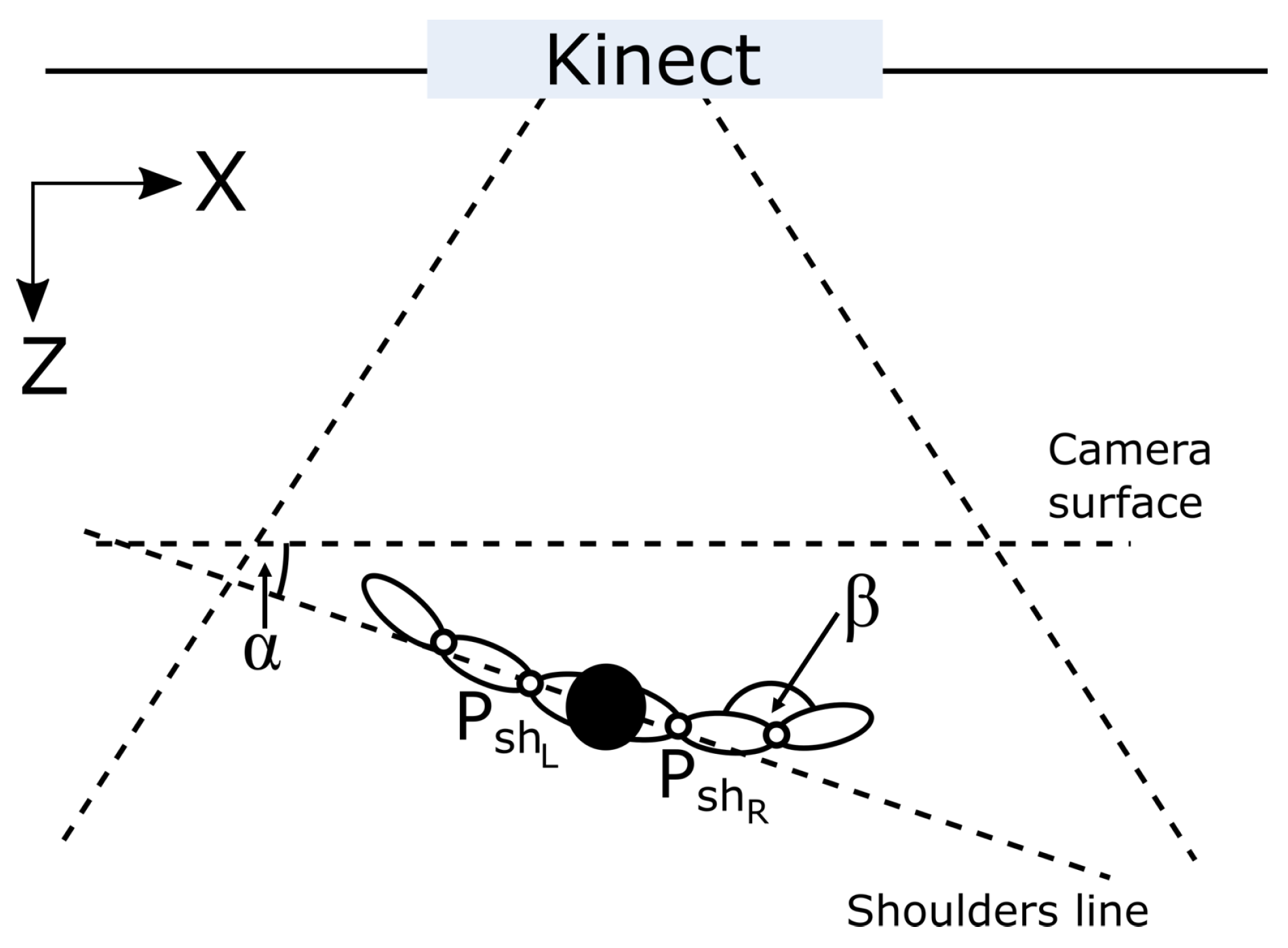

2.3.2. IMU Initialization

2.3.3. IMU Data Fusion Filters Initialization

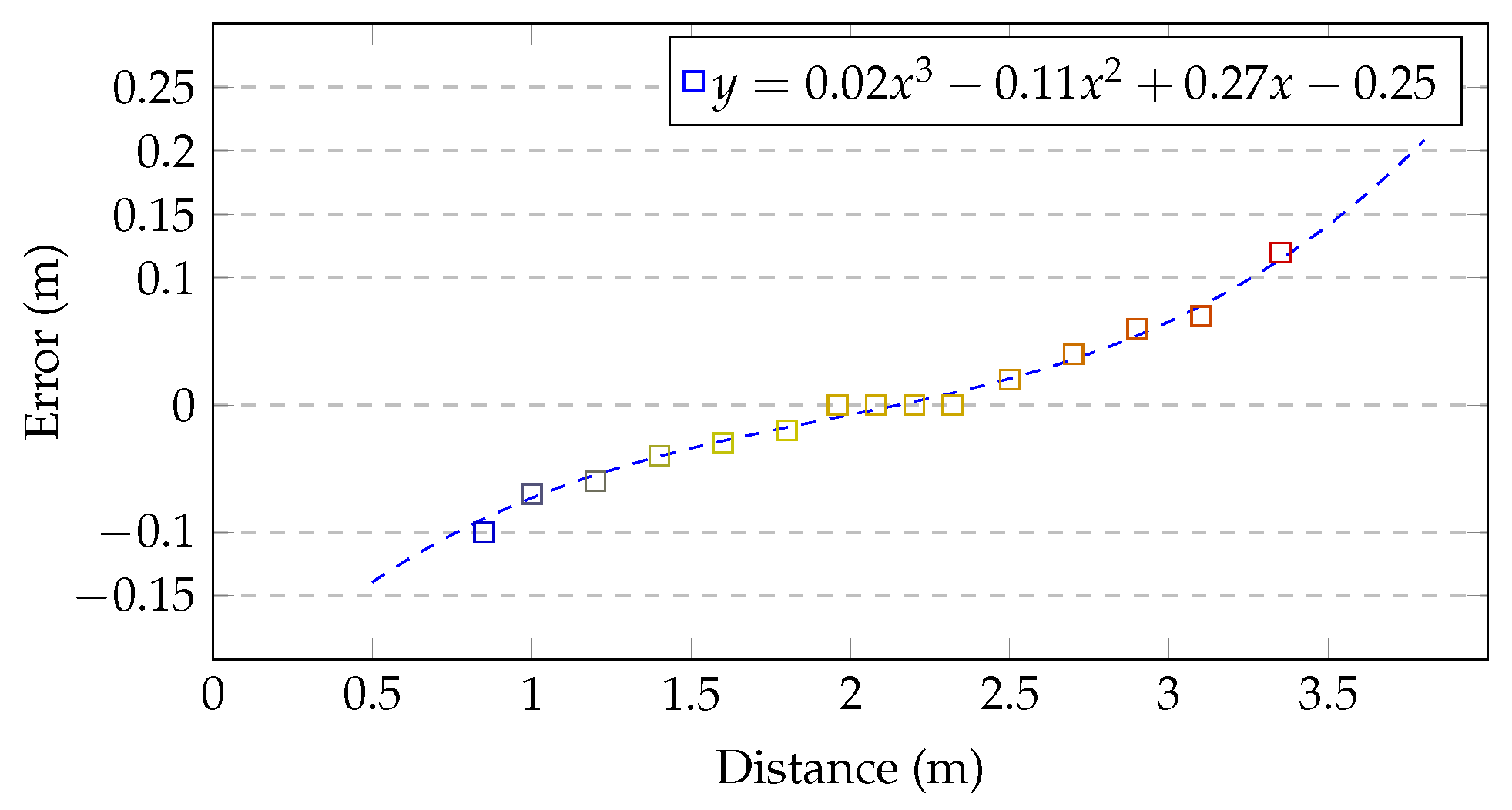

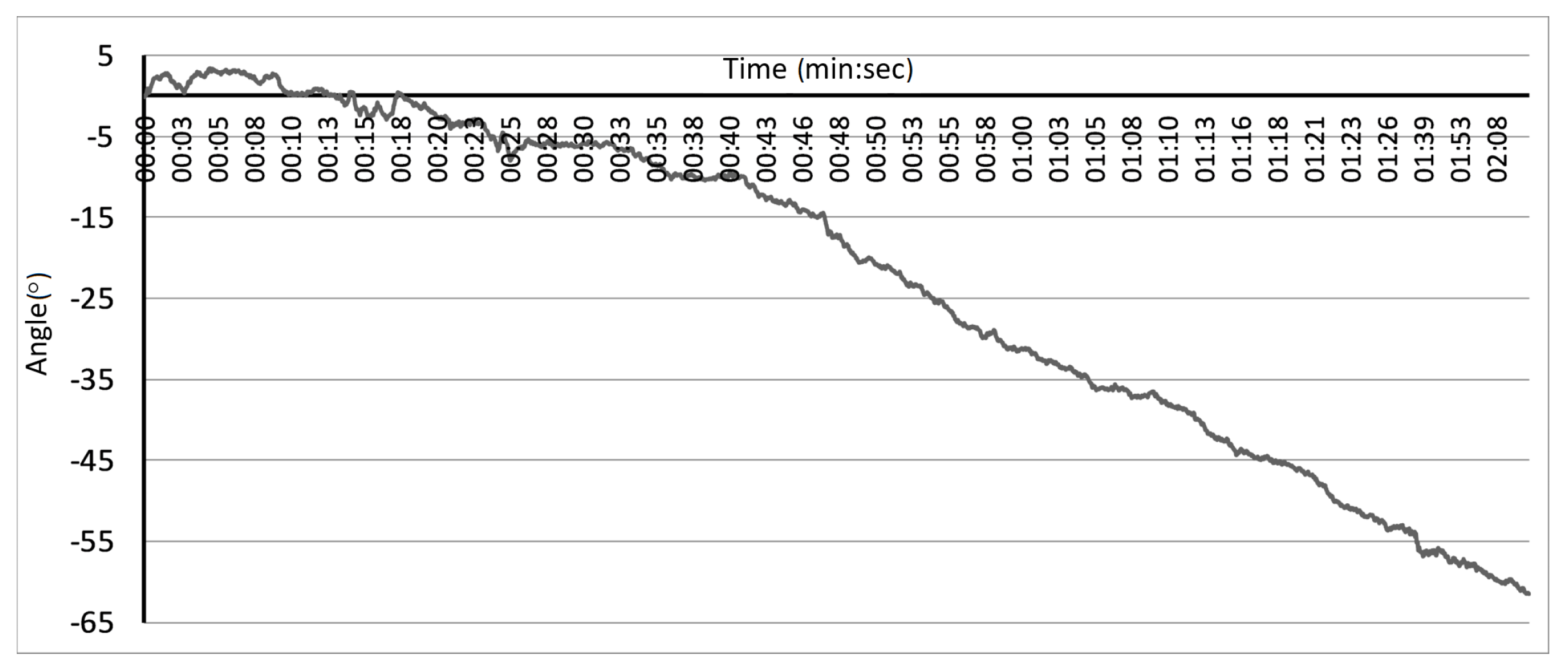

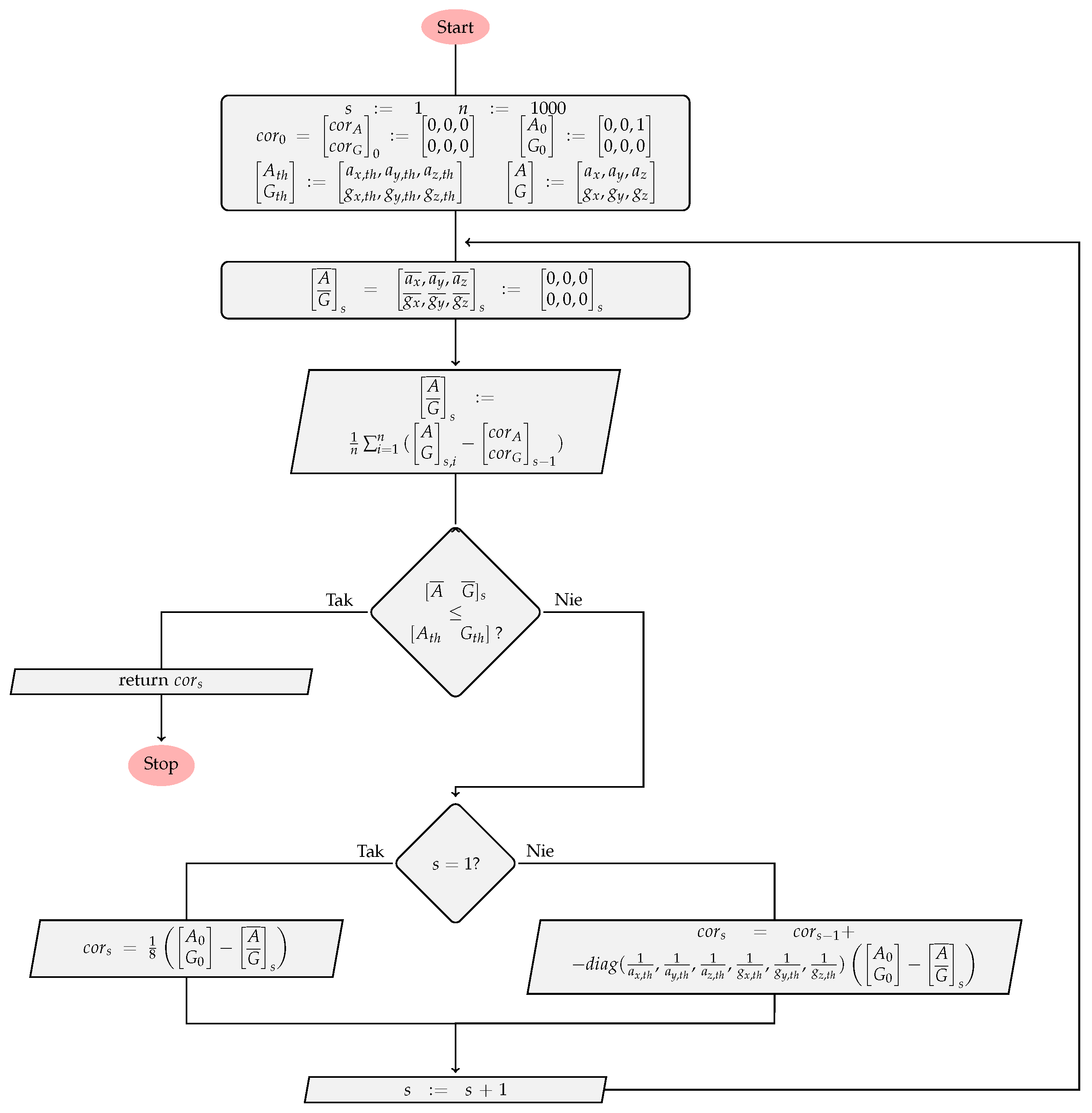

2.3.4. IMU and Kinect Controller Data Noise Correction

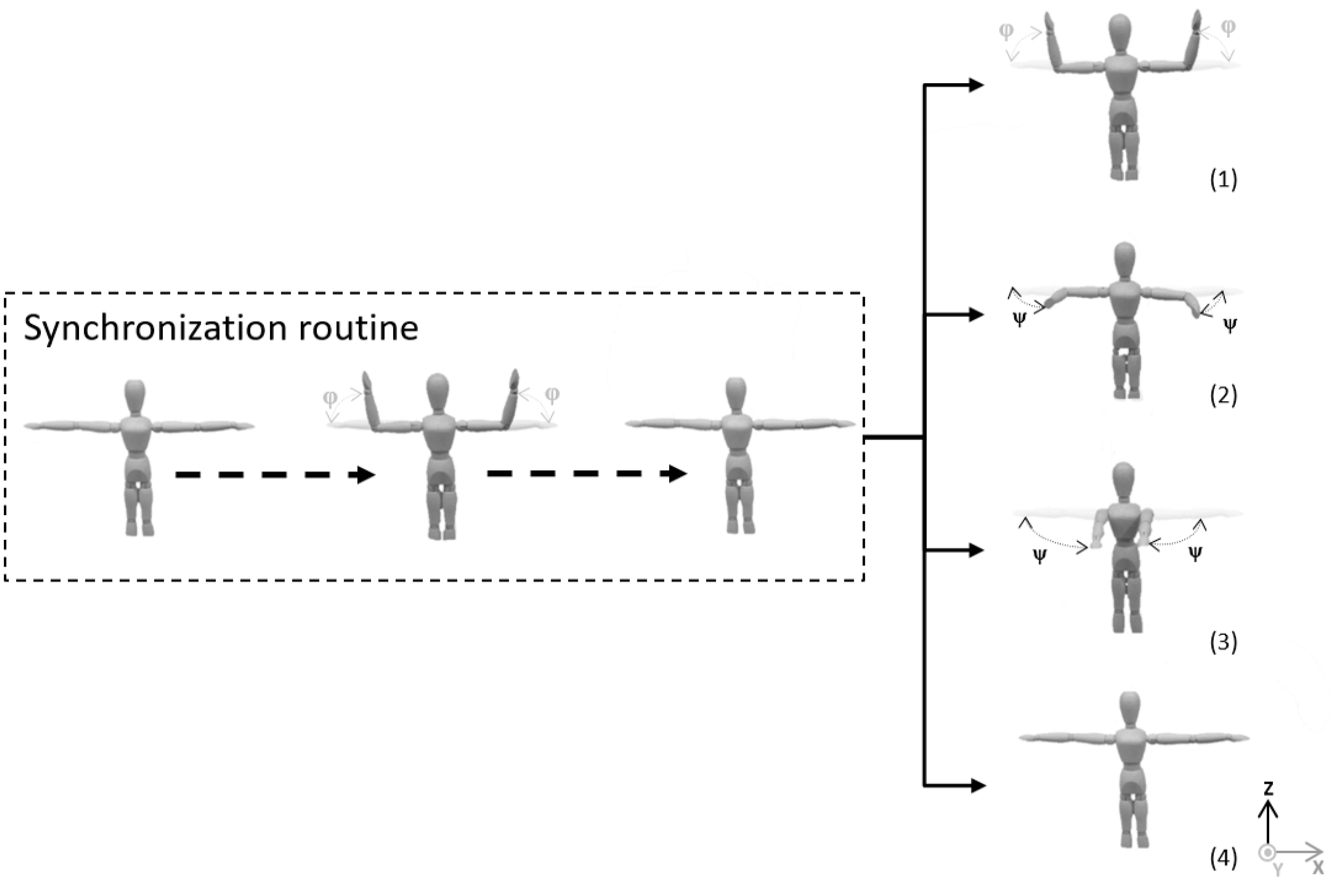

2.3.5. IMU and Kinect Synchronization

2.3.6. IMU and Kinect Data Fusion

2.3.7. Joints Final Position Estimation

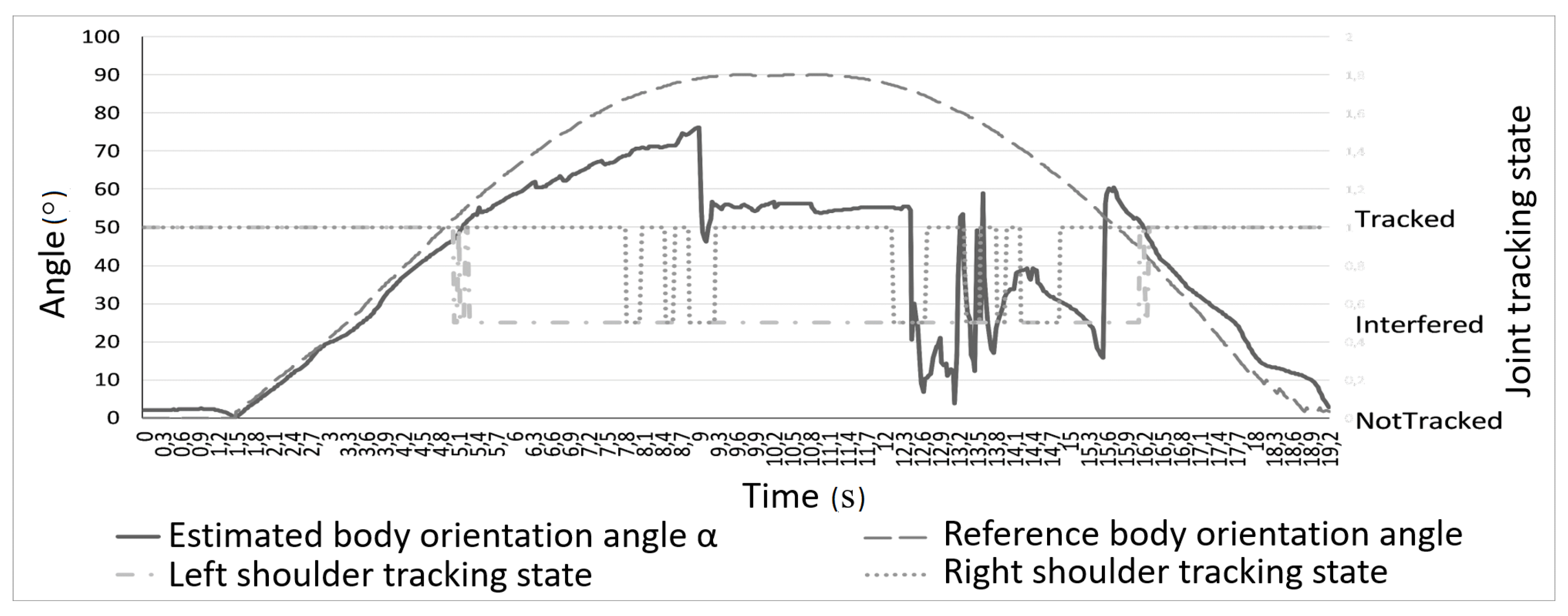

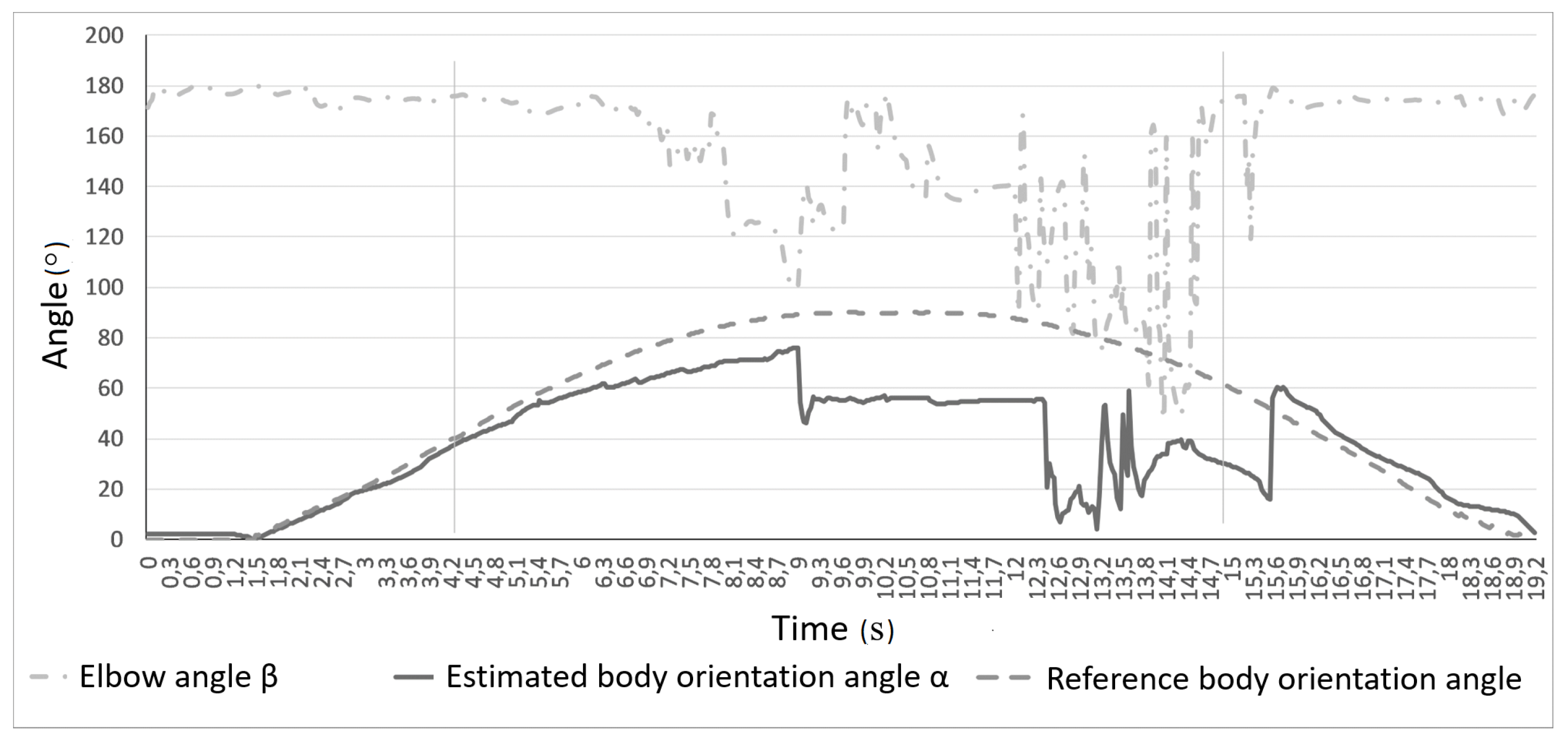

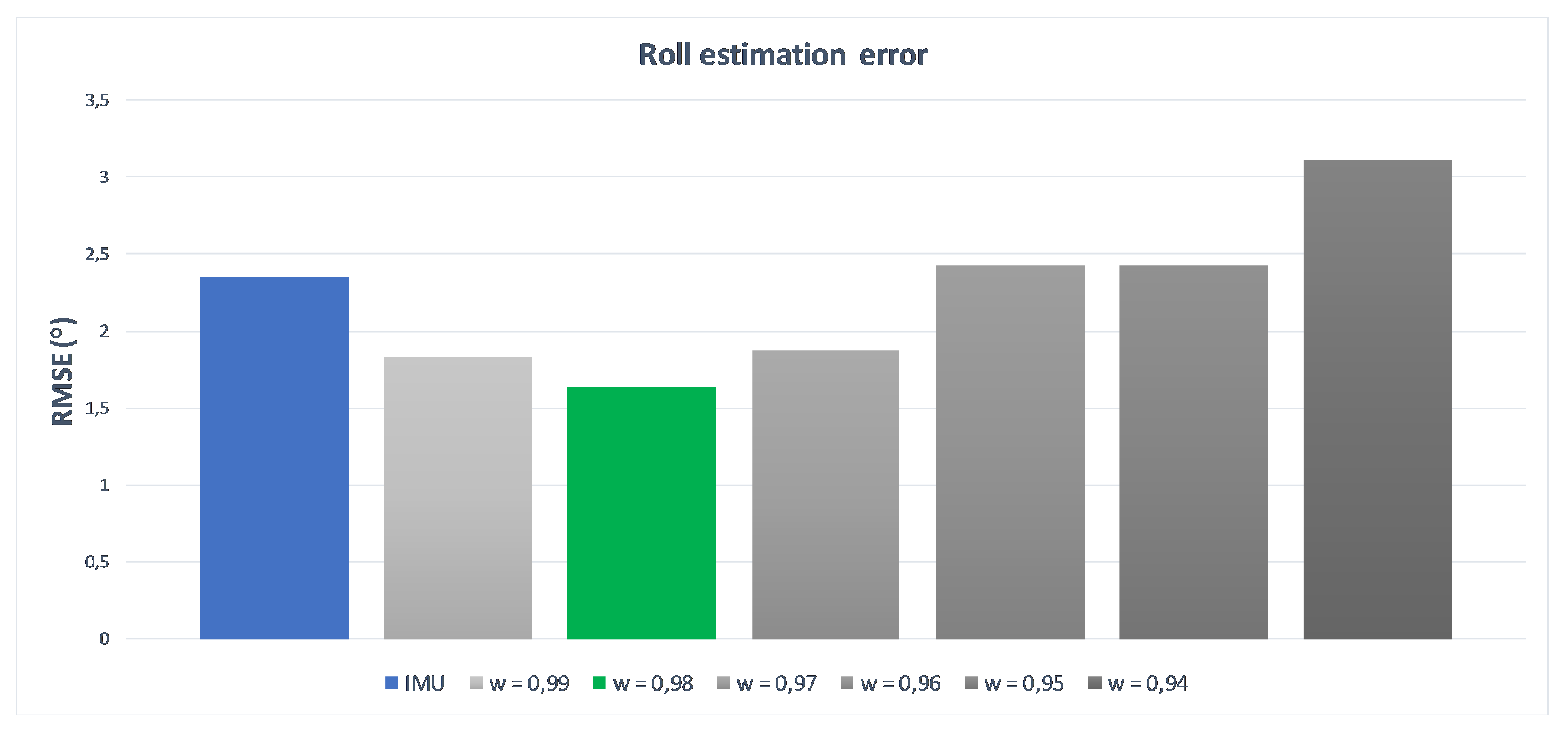

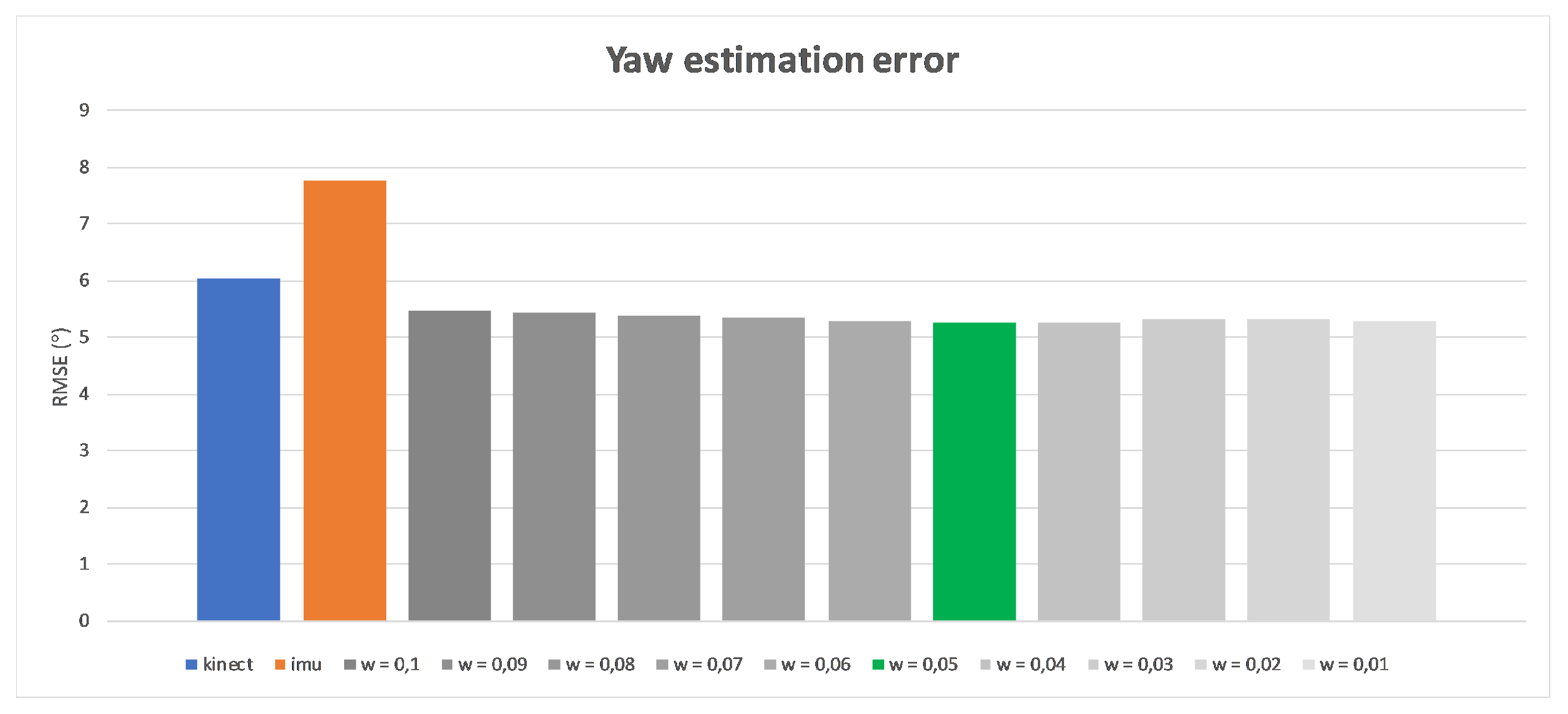

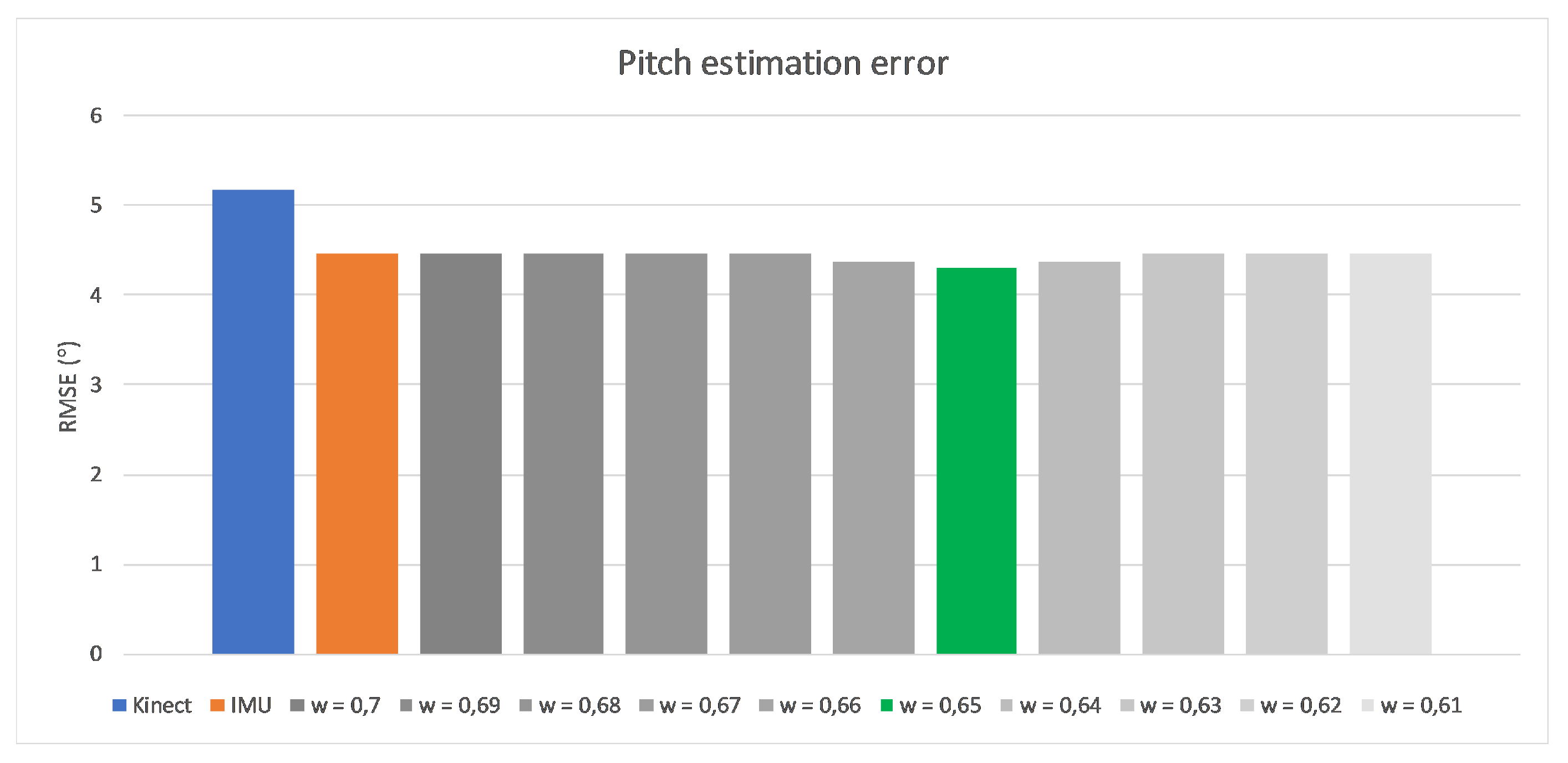

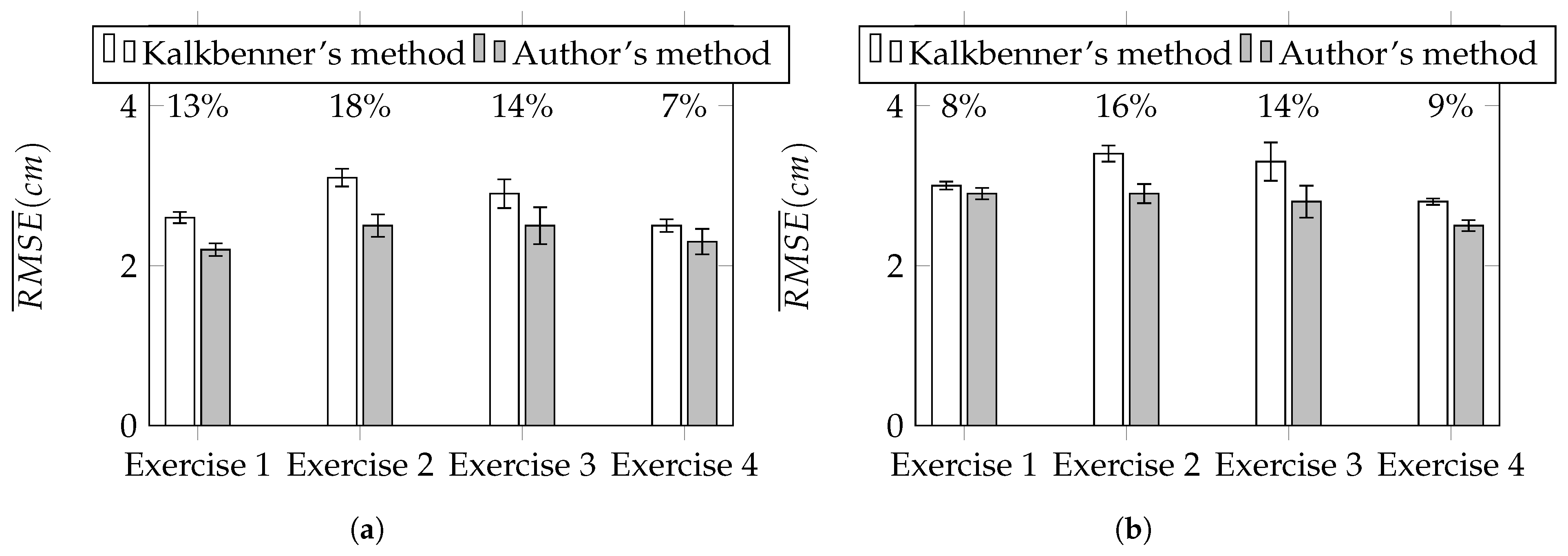

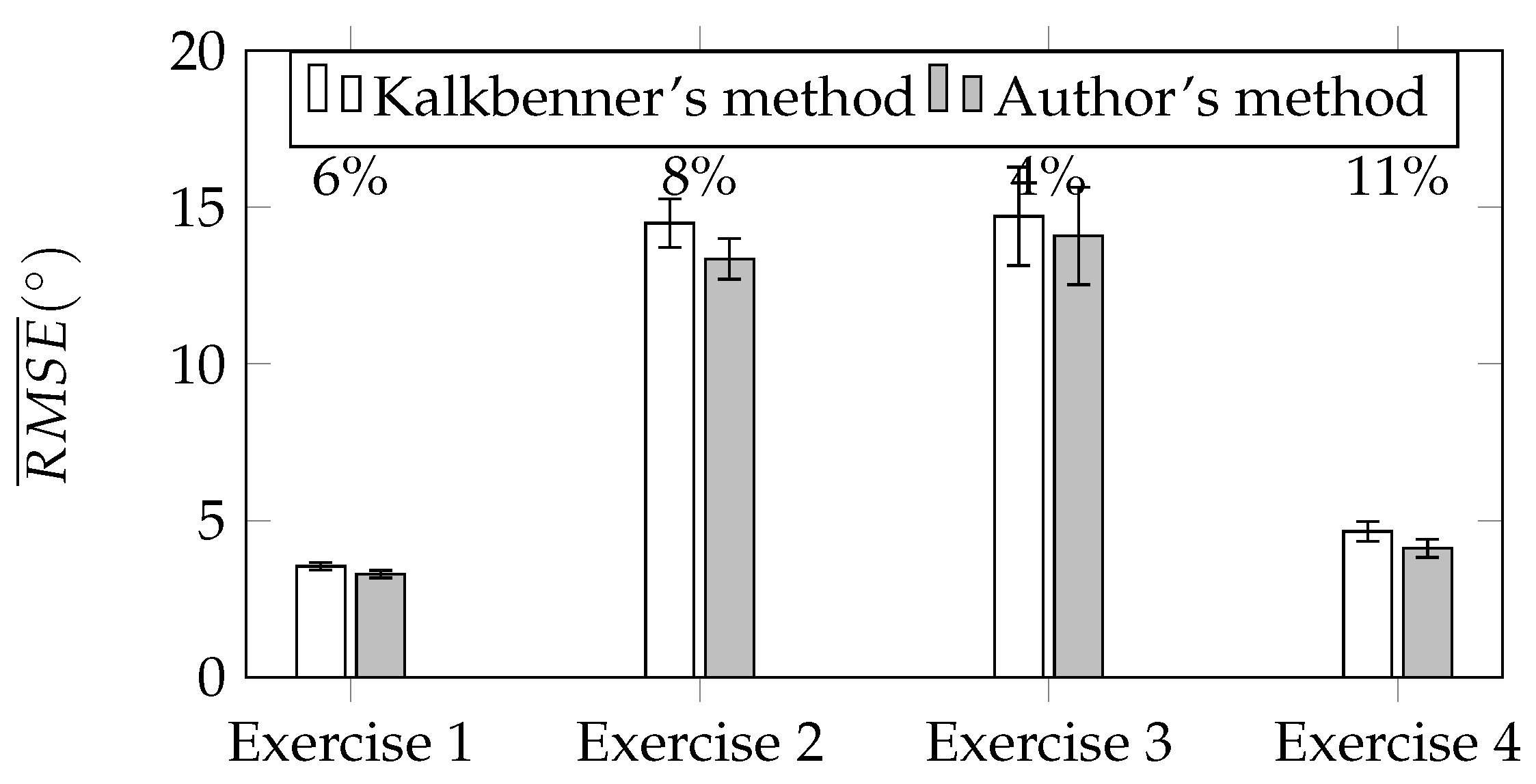

3. Results

4. Discussion and Conclusions

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| RMSE | Root Mean Squared Error |
| IMU | Inertial Measurement Units |
| LPF | Low Pass Filter |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Glonek, G.; Wojciechowski, A. Hybrid Orientation Based Human Limbs Motion Tracking Method. Sensors 2017, 17, 2857. https://doi.org/10.3390/s17122857
