A Laser Line Auto-Scanning System for Underwater 3D Reconstruction

Abstract

1. Introduction

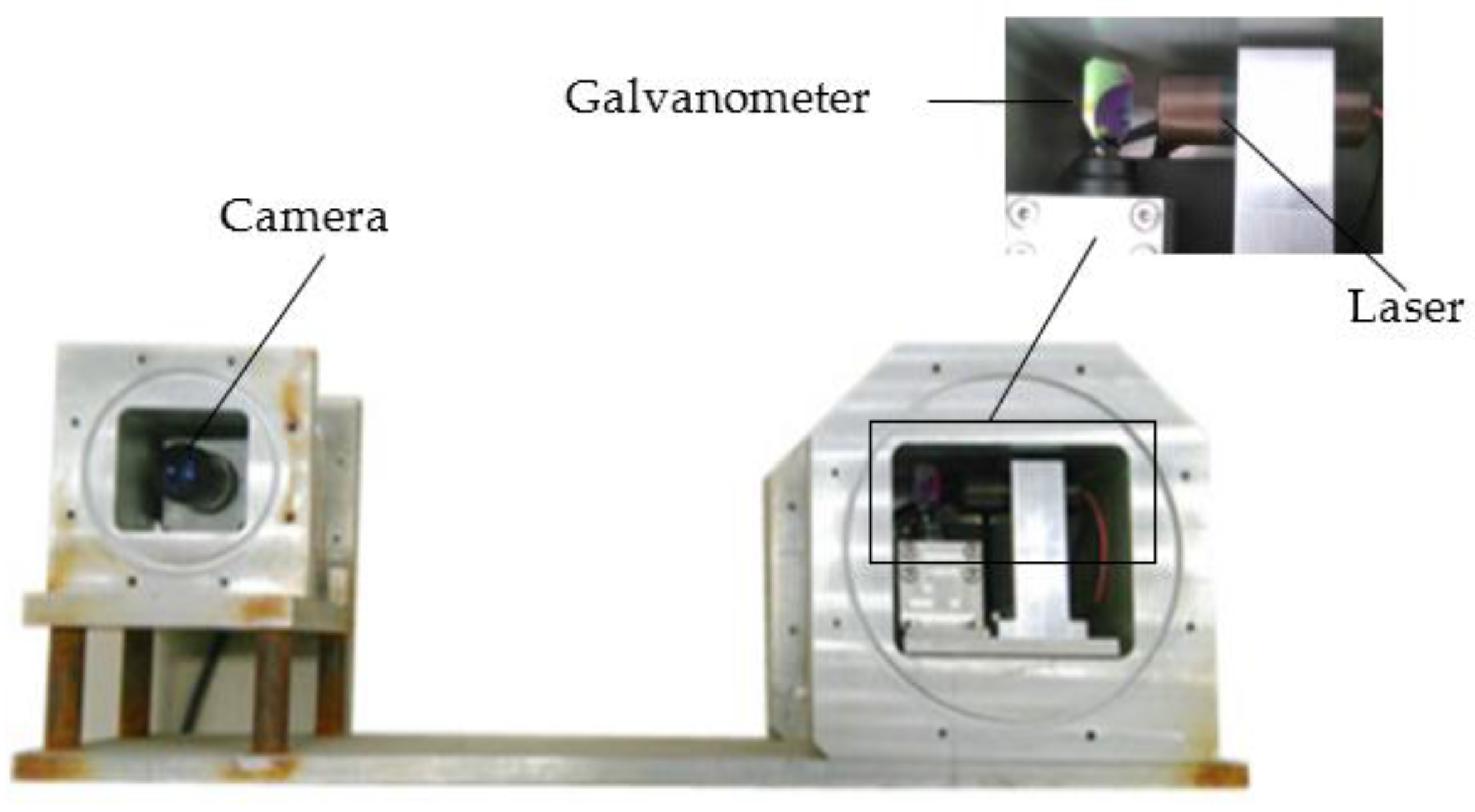

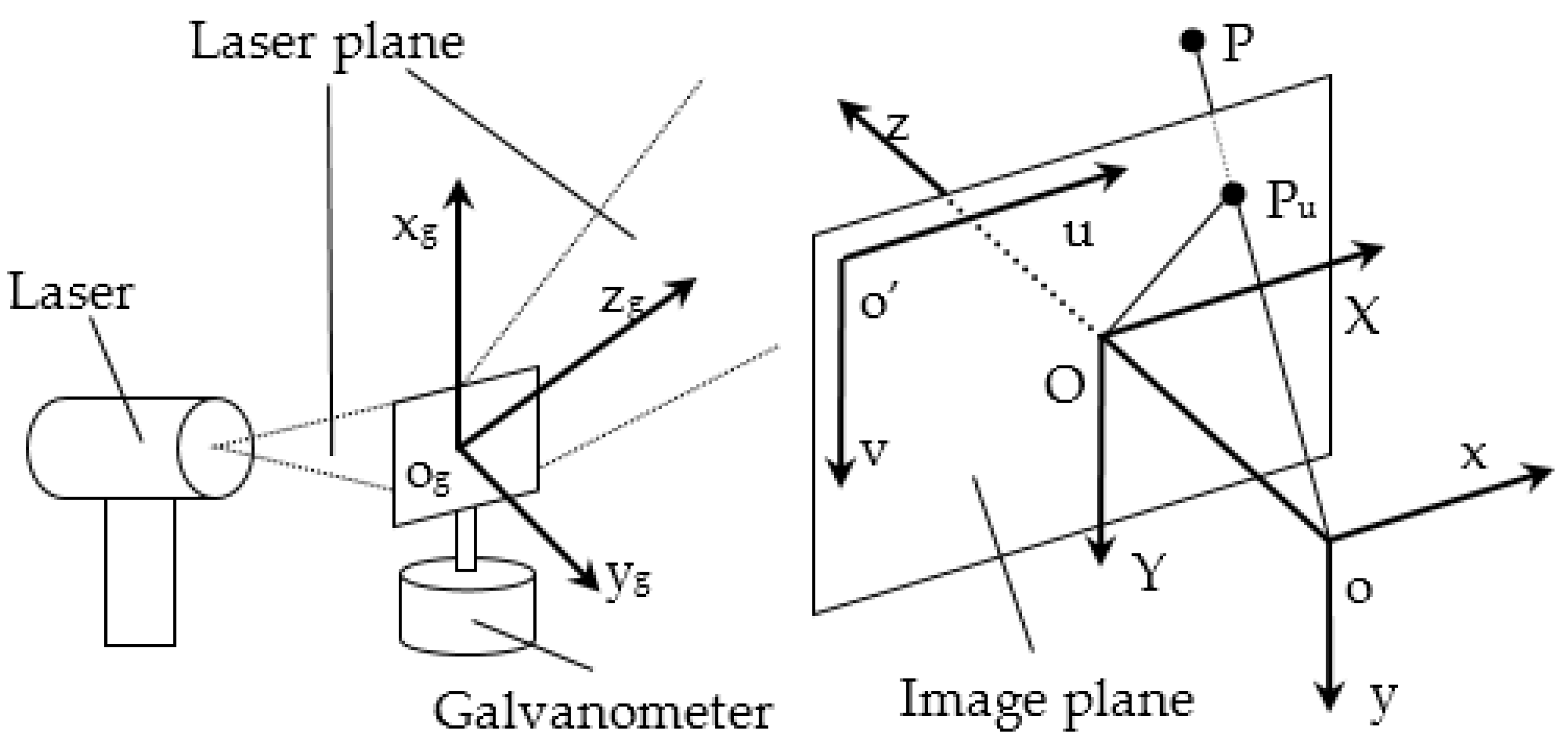

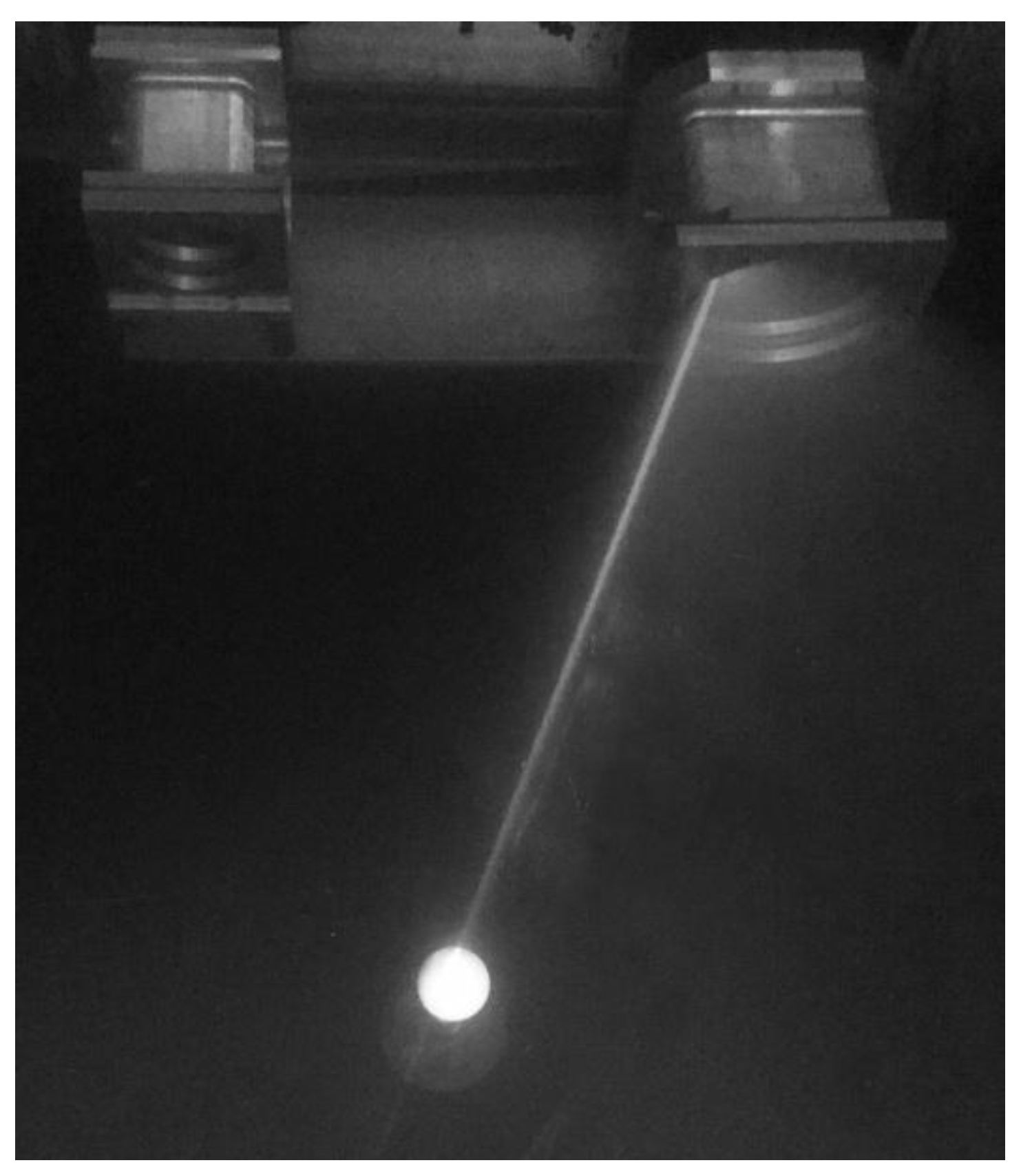

2. System Composition and Structure

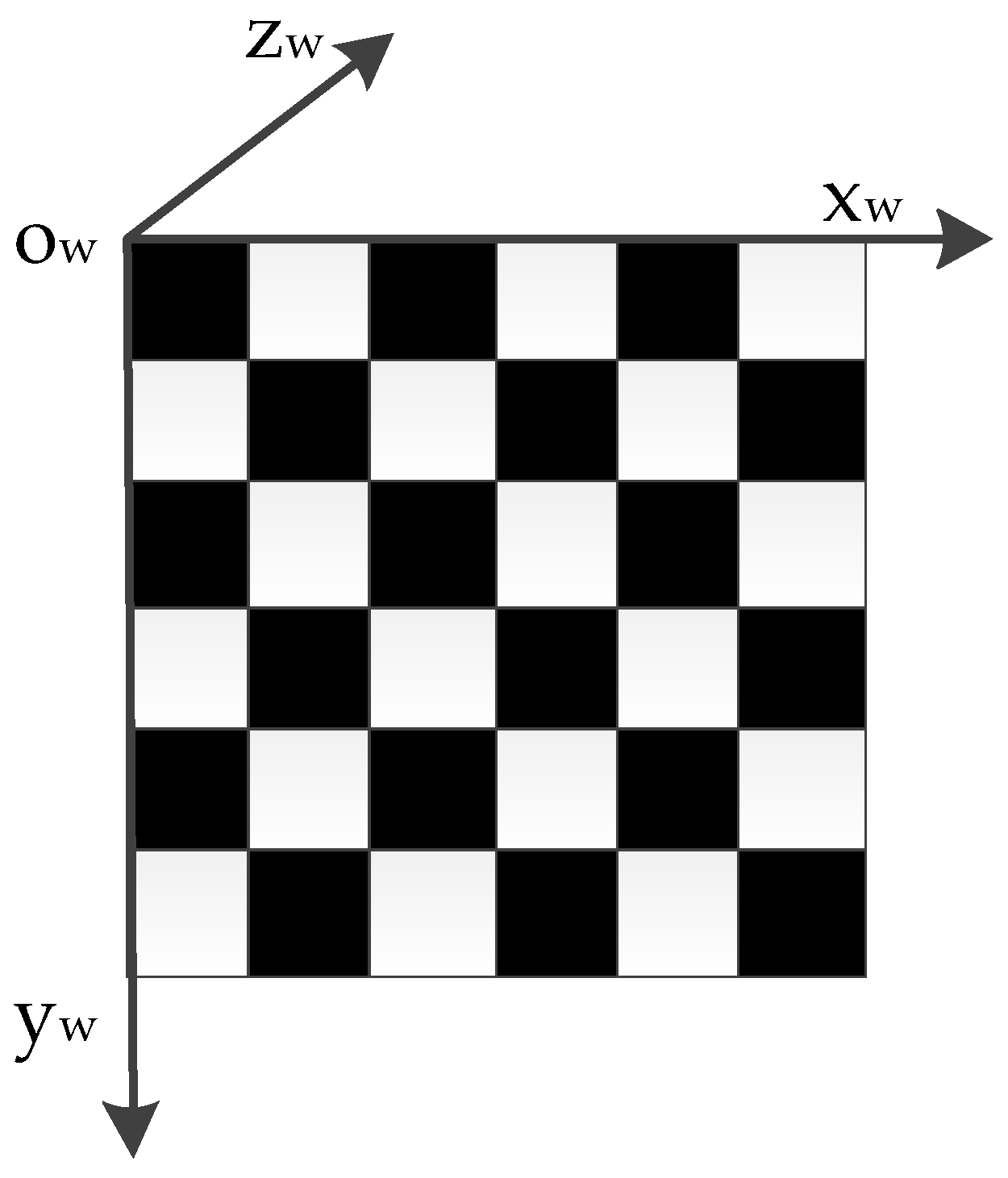

3. System Calibration

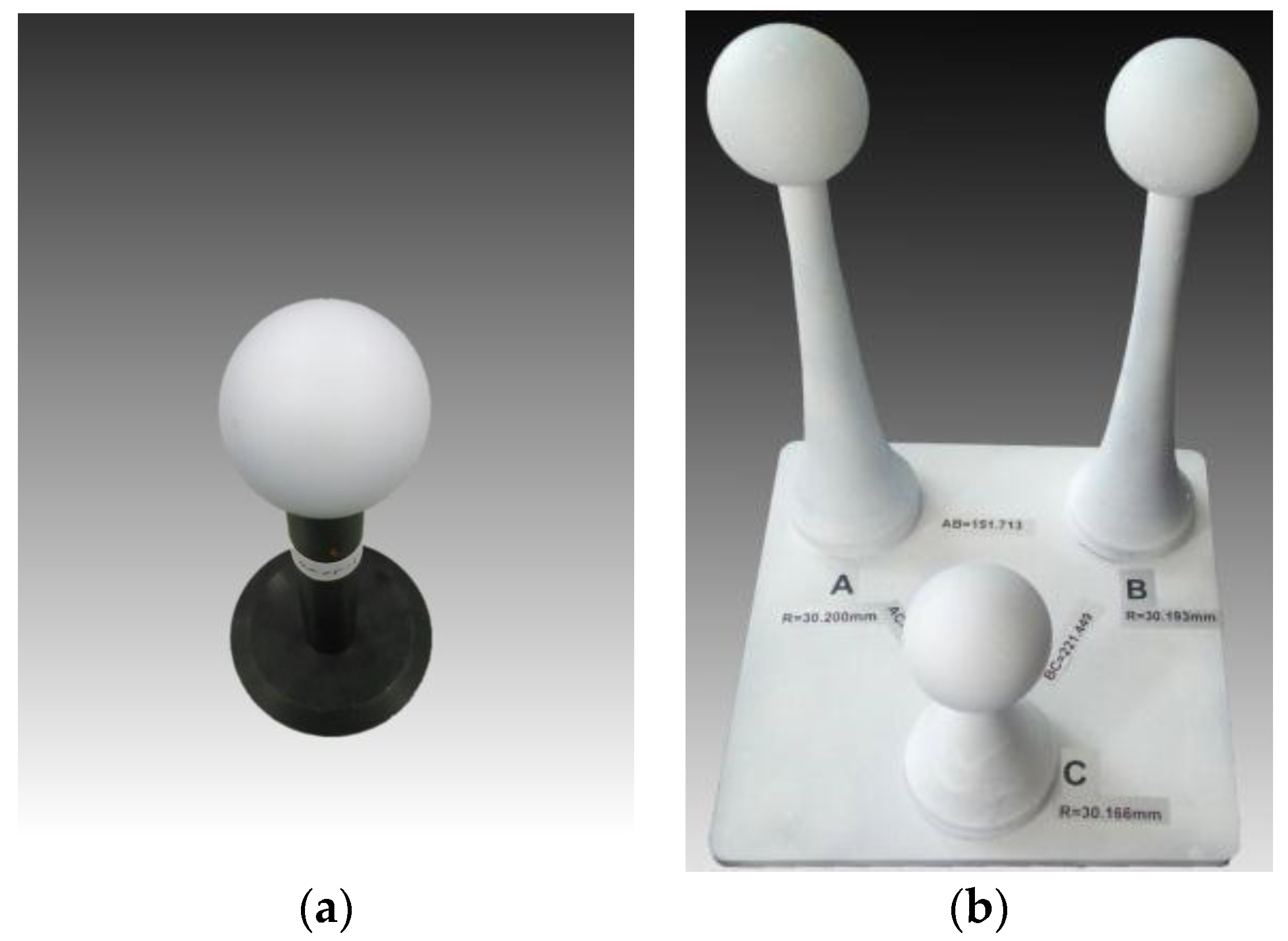

- Step 1: The target was placed within the measurement range and a target image was captured. Camera parameters such as gain and contrast were then set so that only the strongest laser spots were captured as saturated pixels, and the corresponding laser stripe image was captured. Because the points inside the black squares were already sufficient for fitting the laser lines, the points inside the white squares could be ignored. The target image, together with the target images obtained in the next two steps, was used to calibrate the camera internal parameters, including the radial and decentering distortion parameters, with the Zhang method [39]. The laser stripe image captured at the same target position was used to calculate the coordinates of the laser points in the camera coordinate system, using the rotation matrix and translation matrix obtained when calibrating the camera internal parameters [40].

- Step 2: With the laser plane emission angle held constant, the position and orientation of the target were changed several times within the field of view of the system, and Step 1 was repeated each time. All laser spots obtained up to this point belonged to a single laser plane and were used to fit the equation of that laser plane in the camera coordinate system.

- Step 3: The laser plane emission angle was changed by rotating the galvanometer, and Steps 1 and 2 were repeated. This yielded several laser plane equations, which were used to calculate the equation of the galvanometer rotating axis.
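
The plane-fitting and axis-estimation steps above can be illustrated with a short numerical sketch. The fitting formulas are not given in this section, so the following is only one plausible approach, assuming NumPy, a total-least-squares plane fit by SVD, and a least-squares line common to all fitted planes. The function names `fit_plane` and `fit_common_axis` and the synthetic geometry are hypothetical and chosen for illustration.

```python
import numpy as np


def fit_plane(points):
    """Total-least-squares plane through 3D points.

    Returns a unit normal n and offset d such that n . x + d = 0.
    """
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)
    # The right singular vector of the smallest singular value is the normal.
    _, _, vt = np.linalg.svd(pts - centroid, full_matrices=False)
    normal = vt[-1]
    return normal, -normal.dot(centroid)


def fit_common_axis(planes):
    """Least-squares line shared by several planes given as (n, d) pairs.

    Returns the axis point closest to the origin and a unit direction.
    """
    normals = np.array([n for n, _ in planes], dtype=float)
    offsets = np.array([d for _, d in planes], dtype=float)
    # The axis direction is (nearly) perpendicular to every plane normal.
    _, _, vt = np.linalg.svd(normals)
    direction = vt[-1]
    # Solve n_i . p = -d_i, plus direction . p = 0 to pin the point on the axis.
    a = np.vstack([normals, direction])
    b = np.append(-offsets, 0.0)
    point, *_ = np.linalg.lstsq(a, b, rcond=None)
    return point, direction


# Synthetic check (assumed geometry, not measured data): three laser planes
# that all contain an axis through (30, 0, 100) running along y.
axis_point = np.array([30.0, 0.0, 100.0])
axis_dir = np.array([0.0, 1.0, 0.0])
planes = []
for angle in np.radians([-10.0, 0.0, 10.0]):
    in_plane = np.array([np.sin(angle), 0.0, np.cos(angle)])
    s, t = np.meshgrid(np.linspace(-50, 50, 5), np.linspace(100, 500, 5))
    pts = axis_point + s.reshape(-1, 1) * axis_dir + t.reshape(-1, 1) * in_plane
    planes.append(fit_plane(pts))

point, direction = fit_common_axis(planes)
print("axis point:", point)
print("axis direction:", direction)
```

The sign of each fitted normal is arbitrary, which does not affect the result because every plane equation is used in the form n . p = -d. With real data the planes will not intersect in exactly one line, and the least-squares solution then returns the line that best fits all of them.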

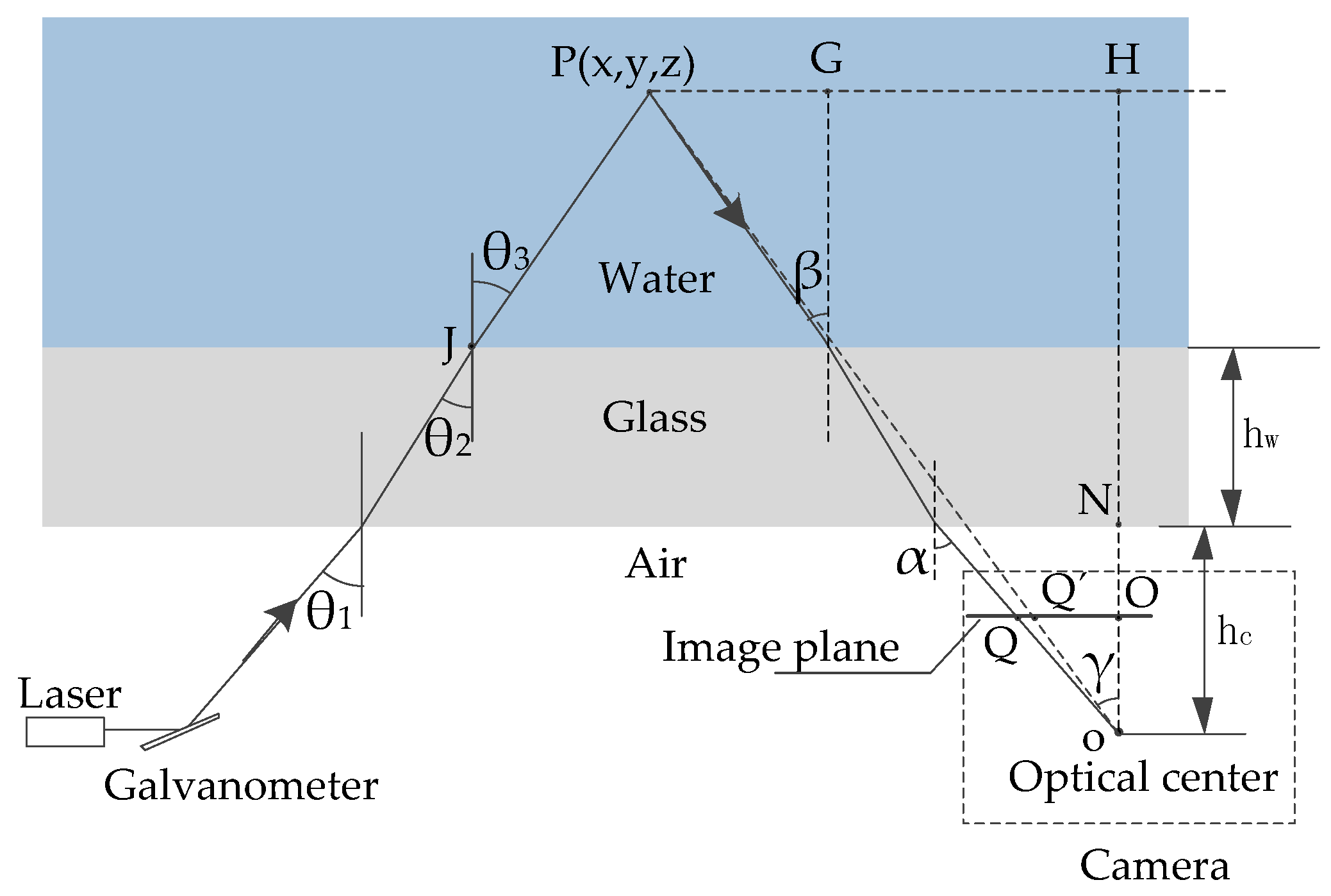

4. Compensation for the Refraction Caused by Air-Glass-Water Interface

5. Experiments and Results

6. Discussion and Outlook

- System machining and assembly. In this paper, we assume that the galvanometer rotating axis coincides exactly with the line of intersection between the laser plane and the galvanometer mirror. Assembly error violates this assumption and affects the measurement accuracy. In practice, the effect is reduced by using a virtual axis obtained with the optimization algorithm. Other machining and assembly parameters can also introduce errors into the triangulation calculation.

- Parameters of the camera and laser. Different ambient lighting changes the contrast and sharpness of the image. The camera gain, offset, and contrast parameters, as well as the laser intensity, should be adjusted accordingly to improve image quality and thus accuracy. The results are stable in dark environments.

- The angle between the light plane and the spherical surface. When the angle is small, the light stripe formed where the laser plane intersects the sphere becomes wider and shorter. Both effects strongly influence the extraction of the light stripe center.

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Prats, M.; Garcia, J.C.; Fernandez, J.J. Advances in the specification and execution of underwater autonomous manipulation tasks. In Proceedings of the MTS/IEEE Oceans, Santander, Spain, 6–9 June 2011; pp. 1–5.

- Prats, M.; Fernandez, J.J.; Sanz, P.J. Combining template tracking and laser peak detection for 3D reconstruction and grasping in underwater environments. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Algarve, Portugal, 7–12 October 2012; pp. 106–112.

- Marani, G.; Choi, S.K.; Yuh, J. Underwater autonomous manipulation for intervention missions AUVs. Ocean Eng. 2009, 36, 15–23.

- Cieslak, P.; Ridao, P.; Giergiel, M. Autonomous underwater panel operation by GIRONA500 UVMS: A practical approach to autonomous underwater manipulation. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 529–536.

- Coiras, E.; Petillot, Y.; Lane, D.M. Multiresolution 3-D reconstruction from side-scan sonar images. IEEE Trans. Image Process. 2007, 16, 382–390.

- Tetlow, S.; Allwood, R.L. The use of a laser stripe illuminator for enhanced underwater viewing. Proc. SPIE 1994, 2258, 547–555.

- Roman, C.; Inglis, G.; Rutter, J. Application of structured light imaging for high resolution mapping of underwater archaeological sites. In Proceedings of the MTS/IEEE Oceans, Sydney, Australia, 24–27 May 2010; pp. 1–9.

- Meline, A.; Triboulet, J.; Jouvencel, B. Comparative study of two 3D reconstruction methods for underwater archaeology. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Algarve, Portugal, 7–12 October 2012; pp. 740–745.

- Eric, M.; Kovacic, R.; Berginc, G.; Pugelj, M.; Stopinsek, Z.; Solina, F. The Impact of the Latest 3D Technologies on the Documentation of Underwater Heritage Sites. In Proceedings of the IEEE Digital Heritage International Congress, Marseille, France, 28 October–1 November 2013; pp. 281–288.

- Bruno, F.; Gallo, A.; Muzzupappa, M.; Davidde Petriaggi, B.; Caputo, P. 3D documentation and monitoring of the experimental cleaning operations in the underwater archaeological site of Baia (Italy). In Proceedings of the IEEE Digital Heritage International Congress, Marseille, France, 28 October–1 November 2013; pp. 105–112.

- Costa, C.; Loy, A.; Cataudella, S.; Davis, D.; Scardi, M. Extracting fish size using dual underwater cameras. Aquacult. Eng. 2006, 35, 218–227.

- Dunbrack, R.L. In situ measurement of fish body length using perspective-based remote stereo-video. Fish. Res. 2006, 82, 327–331.

- Rosenblum, L.; Kamgar-Parsi, B. 3D reconstruction of small underwater objects using high-resolution sonar data. In Proceedings of the 1992 Symposium on Autonomous Underwater Vehicle Technology, Washington, DC, USA, 2–3 June 1992; pp. 228–235.

- Pathak, K.; Birk, A.; Vaskevicius, N. Plane-based registration of sonar data for underwater 3D mapping. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; pp. 4880–4885.

- Massot-Campos, M.; Oliver-Codina, G. Optical sensors and methods for underwater 3D reconstruction. Sensors 2015, 15, 31525–31557.

- Leone, A.; Diraco, G.; Distante, C. Stereoscopic system for 3D seabed mosaic reconstruction. In Proceedings of the International Conference on Image Processing (ICIP), Atlanta, GA, USA, 8–11 October 2006; pp. II541–II544.

- Drap, P.; Scaradozzi, D.; Gambogi, P.; Long, L.; Gauche, F. Photogrammetry for virtual exploration of underwater archeological sites. In Proceedings of the XXI International Scientific Committee for Documentation of Cultural Heritage (CIPA) Symposium, Athens, Greece, 1–6 October 2007.

- Bruno, F.; Bianco, G.; Muzzupappa, M.; Barone, S.; Razionale, A. Experimentation of structured light and stereo vision for underwater 3D reconstruction. ISPRS J. Photogramm. Remote Sens. 2011, 66, 508–518.

- Bianco, G.; Gallo, A.; Bruno, F.; Muzzupappa, M. A comparative analysis between active and passive techniques for underwater 3D reconstruction of close-range objects. Sensors 2013, 13, 11007–11031.

- Salvi, J.; Fernandez, S.; Pribanic, T.; Llado, X. A state of the art in structured light patterns for surface profilometry. Pattern Recognit. 2010, 43, 2666–2680.

- Rumbaugh, L.; Li, Y.; Bollt, E.; Jemison, W. A 532 nm chaotic lidar transmitter for high resolution underwater ranging and imaging. In Proceedings of the MTS/IEEE OCEANS, San Diego, CA, USA, 23–27 September 2013; pp. 1–6.

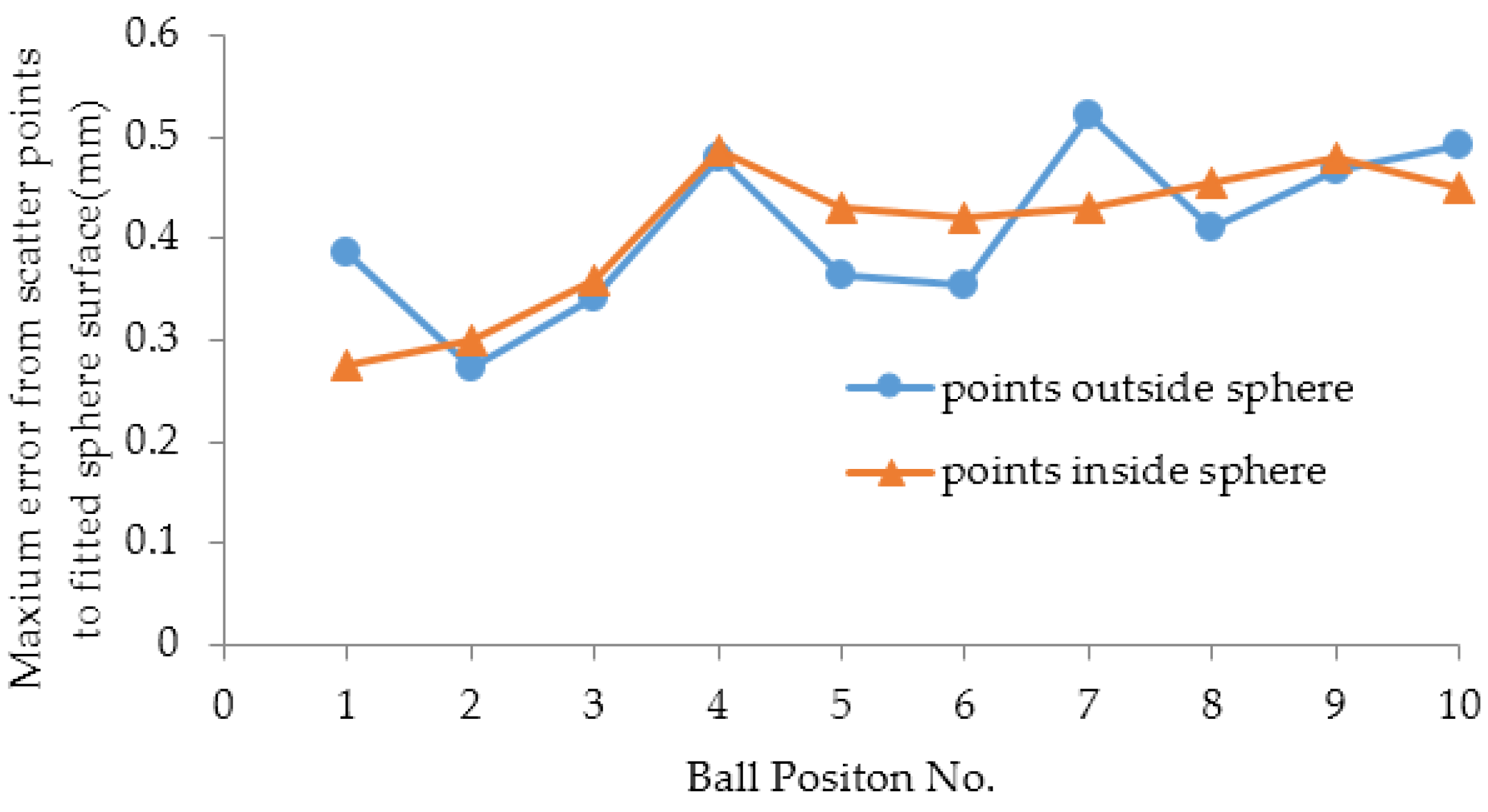

- Van, G.N.; Cuypers, S.; Bleys, P.; Kruth, J.P. A performance evaluation test for laser line scanners on CMMs. Opt. Lasers Eng. 2009, 47, 336–342.

- Mahmud, M.; Joannic, D.; Roy, M.; Isheil, A.; Fontaine, J.F. 3D part inspection path planning of a laser scanner with control on the uncertainty. Comput. Aided Des. 2011, 43, 345–355.

- Jaffe, J.S.; Dunn, C. A model-based comparison of underwater imaging systems. In Proceedings of the Ocean Optics IX, Orlando, FL, USA, 4 April 1988; pp. 344–350.

- Cain, C.; Leonessa, A. Laser based rangefinder for underwater applications. In Proceedings of the 2012 American Control Conference (ACC), Montreal, QC, Canada, 27–29 June 2012; pp. 6190–6195.

- Bodenmann, A.; Thornton, B.; Nakajima, R.; Yamamoto, H.; Ura, T. Wide area 3D seafloor reconstruction and its application to sea fauna density mapping. In Proceedings of the MTS/IEEE OCEANS, San Diego, CA, USA, 23–27 September 2013; pp. 4–8.

- Massot-Campos, M.; Oliver-Codina, G. Underwater laser-based structured light system for one-shot 3D reconstruction. In Proceedings of the IEEE Sensors, Valencia, Spain, 2–5 November 2014; pp. 1138–1141.

- Kondo, H.; Maki, T.; Ura, T.; Nose, Y.; Sakamaki, T.; Inaishi, M. Structure tracing with a ranging system using a sheet laser beam. In Proceedings of the 2004 International Symposium on Underwater Technology, Taipei, Taiwan, 20–23 April 2004; pp. 83–88.

- Maire, F.D.; Prasser, D.; Dunbabin, M.; Dawson, M. A vision based target detection system for docking of an autonomous underwater vehicle. In Proceedings of the 2009 Australasian Conference on Robotics and Automation, Sydney, Australia, 2–4 December 2009.

- Bräuer-Burchardt, C.; Heinze, M.; Schmidt, I. Underwater 3D surface measurement using fringe projection based scanning devices. Sensors 2015, 16, 13.

- Nakatani, T.; Li, S.; Ura, T.; Bodenmann, A.; Sakamaki, T. 3D visual modeling of hydrothermal chimneys using a rotary laser scanning system. In Proceedings of the 2011 IEEE Symposium on Underwater Technology and Workshop on Scientific Use of Submarine Cables and Related Technologies, Tokyo, Japan, 5–8 April 2011; pp. 1–5.

- Prats, M.; Fernandez, J.J.; Sanz, P.J. An approach for semi-autonomous recovery of unknown objects in underwater environments. In Proceedings of the 13th International Conference on Optimization of Electrical and Electronic Equipment (OPTIM), Brasov, Romania, 24–26 May 2012; pp. 1452–1457.

- Peñalver, A.; Fernández, J.J.; Sales, J.; Sanz, P.J. Multi-view underwater 3D reconstruction using a stripe laser light and an eye-in-hand camera. In Proceedings of the MTS/IEEE OCEANS, Genova, Italy, 18–21 May 2015; pp. 1–6.

- 2G Robotics. ULS-100 Underwater Laser Scanner for Short Range Scans. Available online: http://www.2grobotics.com/products/underwater-laser-scanner-uls-100/ (accessed on 30 August 2016).

- 2G Robotics. ULS-200 Underwater Laser Scanner for Mid Range Scans. Available online: http://www.2grobotics.com/products/underwater-laser-scanner-uls-200/ (accessed on 30 August 2016).

- 2G Robotics. ULS-500 Underwater Laser Scanner for Long Range Scans. Available online: http://www.2grobotics.com/products/underwater-laser-scanner-uls-500/ (accessed on 30 August 2016).

- Savante. SLV-50 Laser Vernier Caliper. Available online: http://www.savante.co.uk/wp-content/uploads/2015/02/Savante-SLV-50.pdf (accessed on 30 August 2016).

- Inglis, G.; Smart, C.; Vaughn, I.; Roman, C. A pipeline for structured light bathymetric mapping. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Algarve, Portugal, 7–12 October 2012; pp. 4425–4432.

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

- Bradski, G.; Kaehler, A. Camera model and calibration. In Learning OpenCV, 1st ed.; Kaiqi, W., Ed.; Tsinghua University Press: Beijing, China, 2009; pp. 427–432.

- Siemens PLM Software. NX 11. Available online: http://www.plm.automation.siemens.com/zh_cn/products/nx/index.shtml (accessed on 14 September 2016).

- Ekkel, T.; Schmik, J.; Luhmann, T.; Hastedt, H. Precise laser-based optical 3D measurement of welding seams under water. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 117.

| Ball Position No. | Fitted Sphere Center xg | Fitted Sphere Center yg | Fitted Sphere Center zg | Fitted Radius | Number of Points |
|---|---|---|---|---|---|
| 1 | 18.6293 | −71.9997 | 667.2722 | 20.2712 | 3635 |
| 2 | 18.6899 | −79.499 | 721.507 | 20.2547 | 2978 |
| 3 | 19.5748 | −39.5651 | 772.5898 | 20.2187 | 2870 |
| 4 | 19.7713 | −39.3427 | 817.5533 | 20.2018 | 2380 |
| 5 | 19.8753 | −29.6658 | 858.9471 | 20.1116 | 1958 |
| 6 | 19.9782 | −23.9347 | 912.6583 | 20.0975 | 2017 |
| 7 | 20.3525 | −16.948 | 958.4314 | 20.0857 | 1701 |
| 8 | 21.1155 | −23.5845 | 998.197 | 20.0794 | 1527 |
| 9 | 21.6605 | −32.4656 | 1021.3342 | 20.1154 | 1433 |
| 10 | 23.3907 | −37.6898 | 1067.269 | 20.1003 | 1176 |
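
The fitted sphere centers and radii in the table above come from fitting a sphere to the reconstructed surface points. The fitting method is not stated in this section, so the following is only a minimal sketch of one common choice, an algebraic least-squares sphere fit, assuming NumPy. The function name `fit_sphere` and the synthetic test sphere are hypothetical and are not the measured data.

```python
import numpy as np


def fit_sphere(points):
    """Algebraic least-squares sphere fit; returns (center, radius).

    Uses |x|^2 = 2 c . x + (r^2 - |c|^2), which is linear in c and in
    k = r^2 - |c|^2. Points are centered first for better conditioning.
    """
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)
    q = pts - centroid
    a = np.hstack([2.0 * q, np.ones((len(q), 1))])
    b = (q ** 2).sum(axis=1)
    sol, *_ = np.linalg.lstsq(a, b, rcond=None)
    local_center = sol[:3]
    radius = np.sqrt(sol[3] + local_center.dot(local_center))
    return local_center + centroid, radius


# Synthetic check: only a cap of the sphere is sampled, as a scanner
# looking along +z would see it. Center and radius are made-up values.
true_center = np.array([20.0, -70.0, 700.0])
true_radius = 20.0
theta, phi = np.meshgrid(np.linspace(0.1, np.pi / 3, 10),
                         np.linspace(0.0, 2.0 * np.pi, 20, endpoint=False))
directions = np.stack([np.sin(theta) * np.cos(phi),
                       np.sin(theta) * np.sin(phi),
                       -np.cos(theta)], axis=-1).reshape(-1, 3)
cap_points = true_center + true_radius * directions

center, radius = fit_sphere(cap_points)
print("center:", center)
print("radius:", radius)
```

An algebraic fit of this kind minimizes an algebraic residual, not the geometric distance to the sphere, so with noisy data a geometric refinement may give slightly different values.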

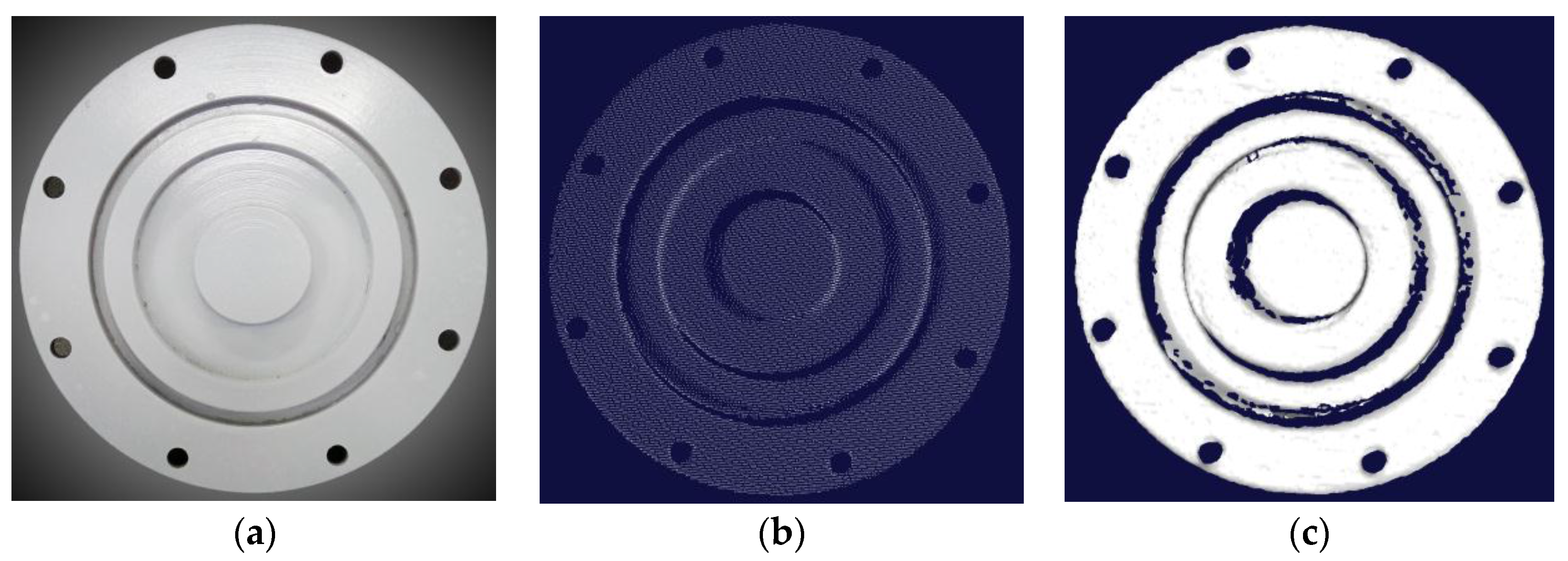

| Position No. | Value Type | Radius A (mm) | Radius B (mm) | Radius C (mm) | Distance AB (mm) | Distance AC (mm) | Distance BC (mm) |
|---|---|---|---|---|---|---|---|
| - | Standard Values | 30.200 | 30.193 | 30.166 | 151.713 | 220.056 | 221.449 |
| 1 | Measured Values | 30.612 | 30.939 | 31.089 | 151.682 | 219.197 | 222.09 |
| 1 | Errors | 0.412 | 0.746 | 0.923 | −0.031 | −0.859 | 0.641 |
| 2 | Measured Values | 29.968 | 30.461 | 30.631 | 150.699 | 218.179 | 220.716 |
| 2 | Errors | −0.232 | 0.268 | 0.465 | −1.014 | −1.877 | −0.733 |
| 3 | Measured Values | 30.133 | 30.245 | 30.519 | 150.694 | 219.372 | 222.438 |
| 3 | Errors | −0.067 | 0.052 | 0.353 | −1.019 | −0.684 | 0.989 |
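
The error rows in the table above are consistent with the signed difference between each measured value and the corresponding standard value. As a small worked check, the sketch below recomputes them from the tabulated numbers in plain Python. The names `STANDARD`, `MEASURED`, `errors`, and `max_abs_error` are hypothetical helpers introduced only for this illustration.

```python
# Values transcribed from the table above (all in mm).
STANDARD = {"A": 30.200, "B": 30.193, "C": 30.166,
            "AB": 151.713, "AC": 220.056, "BC": 221.449}
MEASURED = {
    1: {"A": 30.612, "B": 30.939, "C": 31.089,
        "AB": 151.682, "AC": 219.197, "BC": 222.09},
    2: {"A": 29.968, "B": 30.461, "C": 30.631,
        "AB": 150.699, "AC": 218.179, "BC": 220.716},
    3: {"A": 30.133, "B": 30.245, "C": 30.519,
        "AB": 150.694, "AC": 219.372, "BC": 222.438},
}


def errors(measured, standard=STANDARD):
    """Signed error (measured minus standard), rounded to 3 decimals."""
    return {key: round(measured[key] - standard[key], 3) for key in standard}


def max_abs_error(keys):
    """Largest absolute error over all positions for the given quantities."""
    return max(abs(errors(m)[k]) for m in MEASURED.values() for k in keys)


for position, measured in MEASURED.items():
    print(position, errors(measured))
print("largest radius error (mm):", max_abs_error(("A", "B", "C")))
print("largest distance error (mm):", max_abs_error(("AB", "AC", "BC")))
```

Over the three positions, the radius errors stay below 1 mm in magnitude and the center-distance errors stay below 2 mm.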

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Chi, S.; Xie, Z.; Chen, W. A Laser Line Auto-Scanning System for Underwater 3D Reconstruction. Sensors 2016, 16, 1534. https://doi.org/10.3390/s16091534
