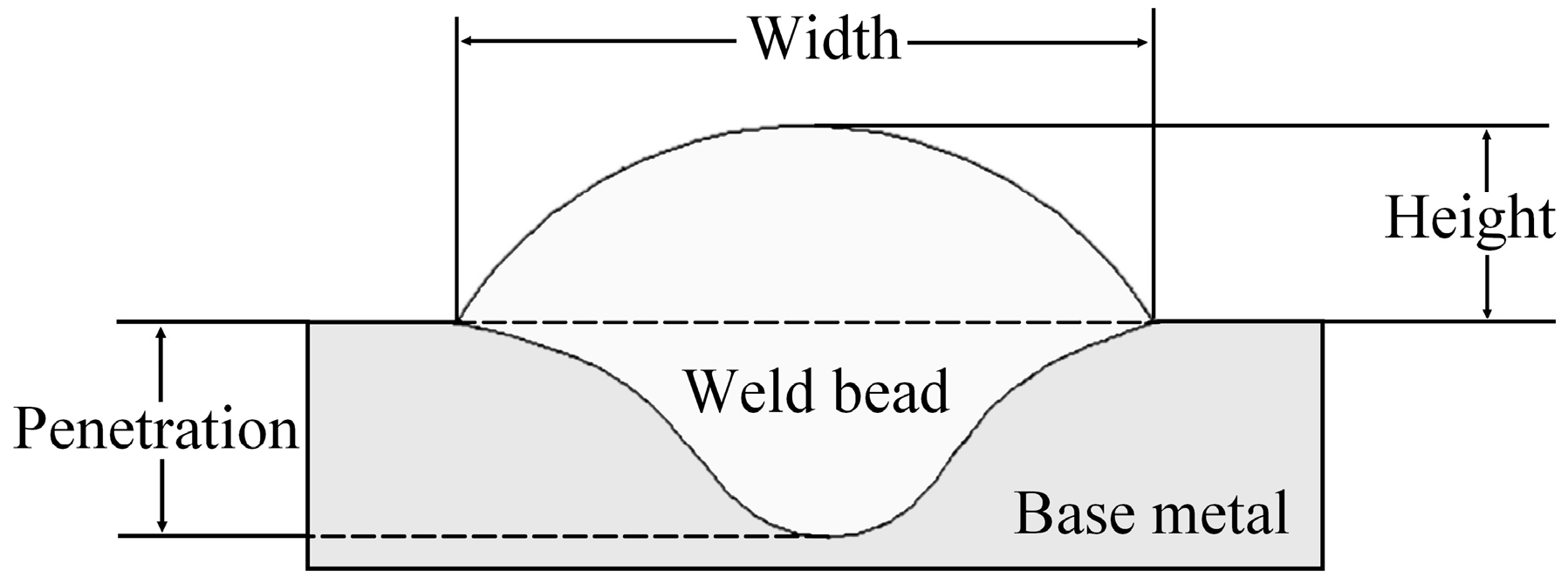

Real-Time Measurement of Width and Height of Weld Beads in GMAW Processes

Abstract

1. Introduction

2. Materials and Methods

2.1. Measurement Process

- Calibration process;

- Image processing;

- Image acquisition.

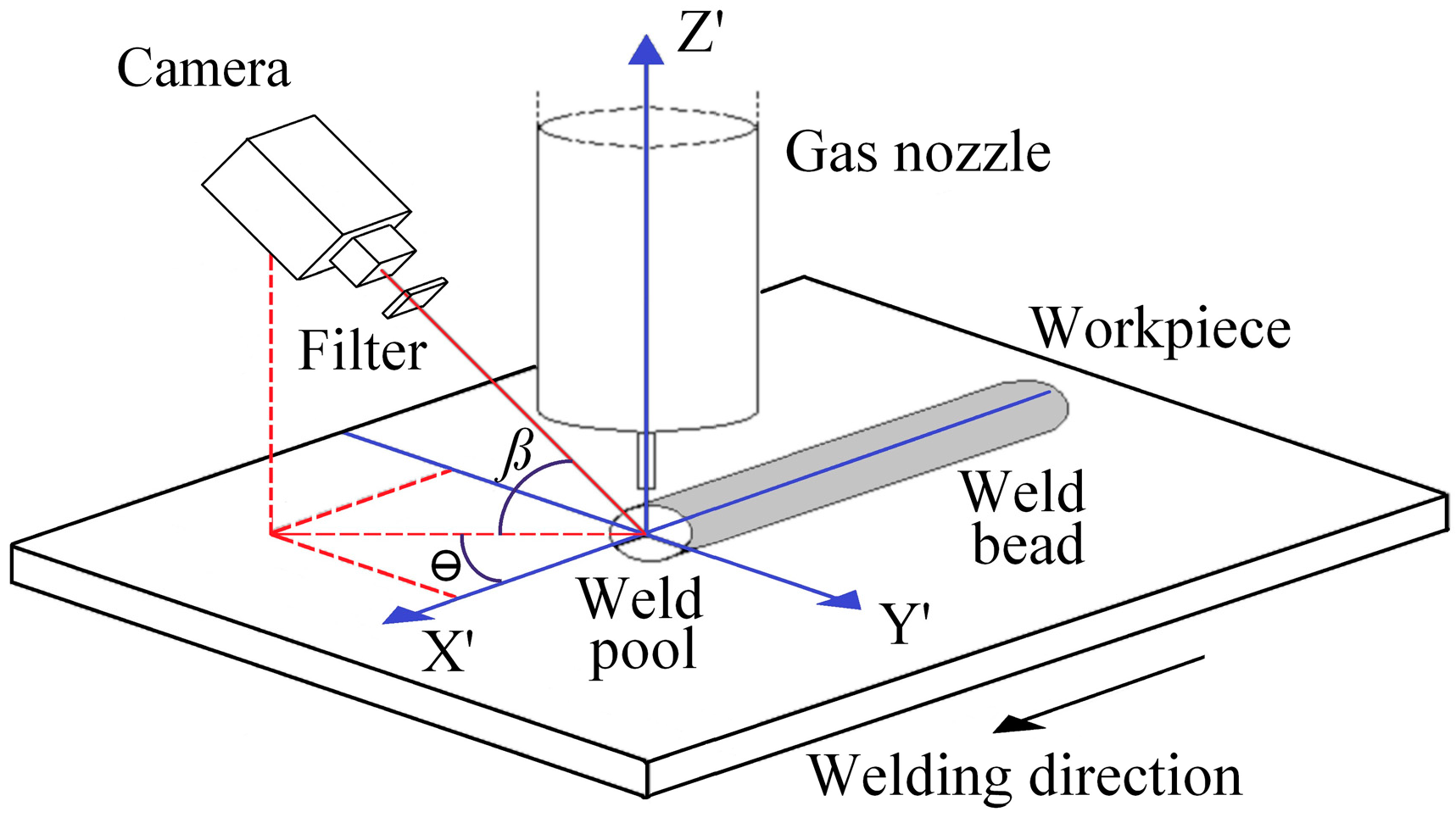

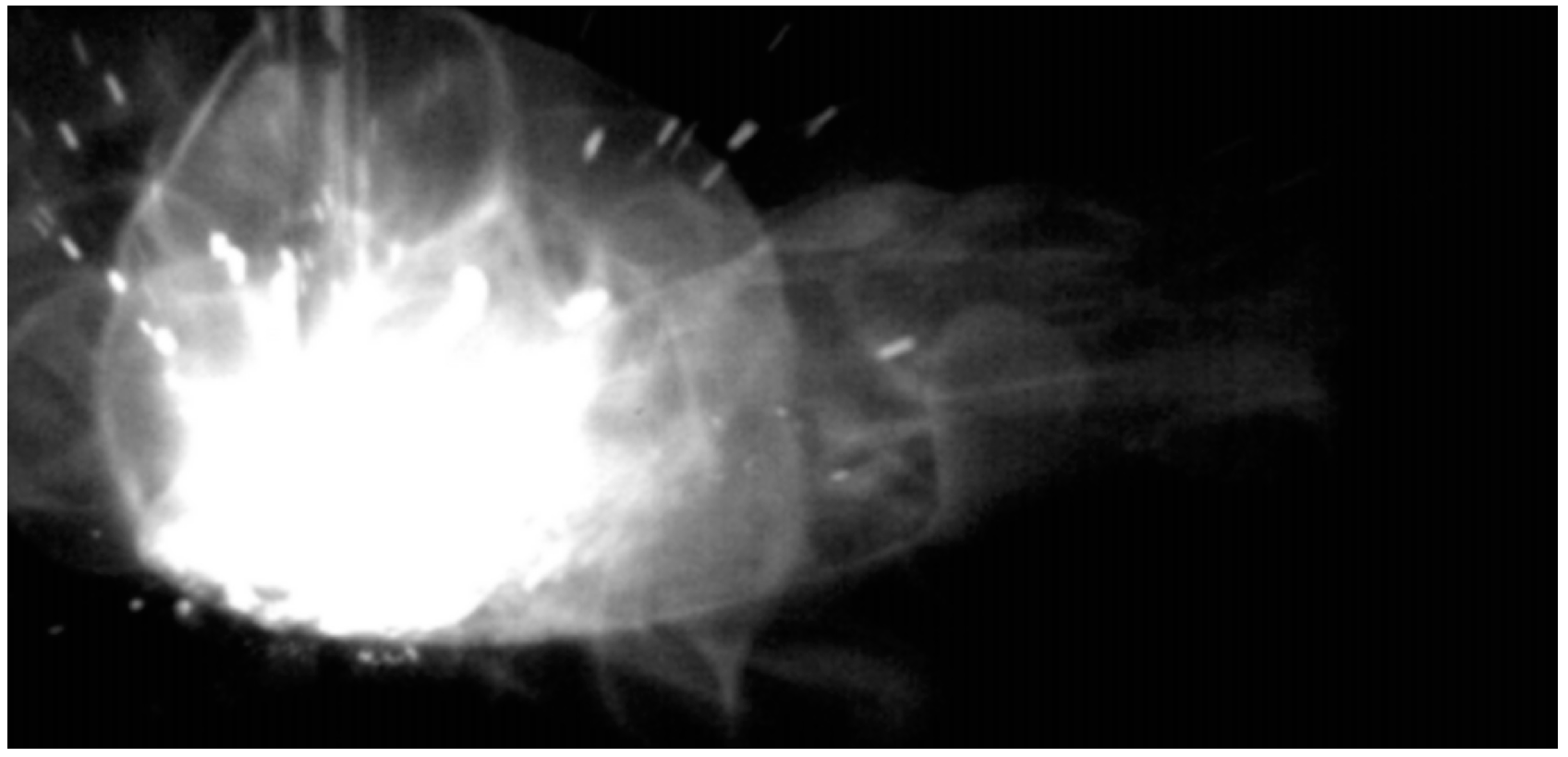

2.1.1. Image Acquisition

2.1.2. Calibration Process

2.1.3. Image Processing Methodology

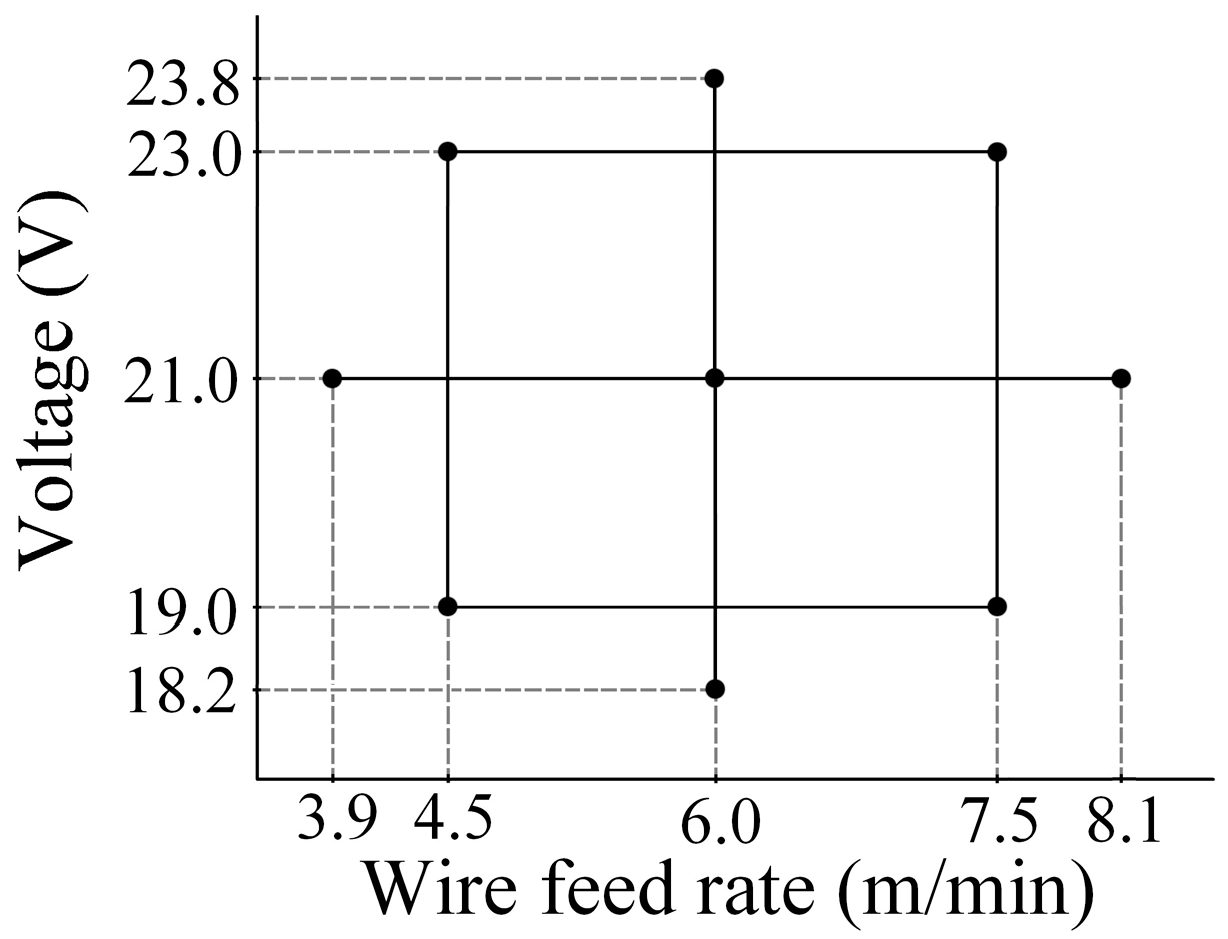

2.2. Validation Process

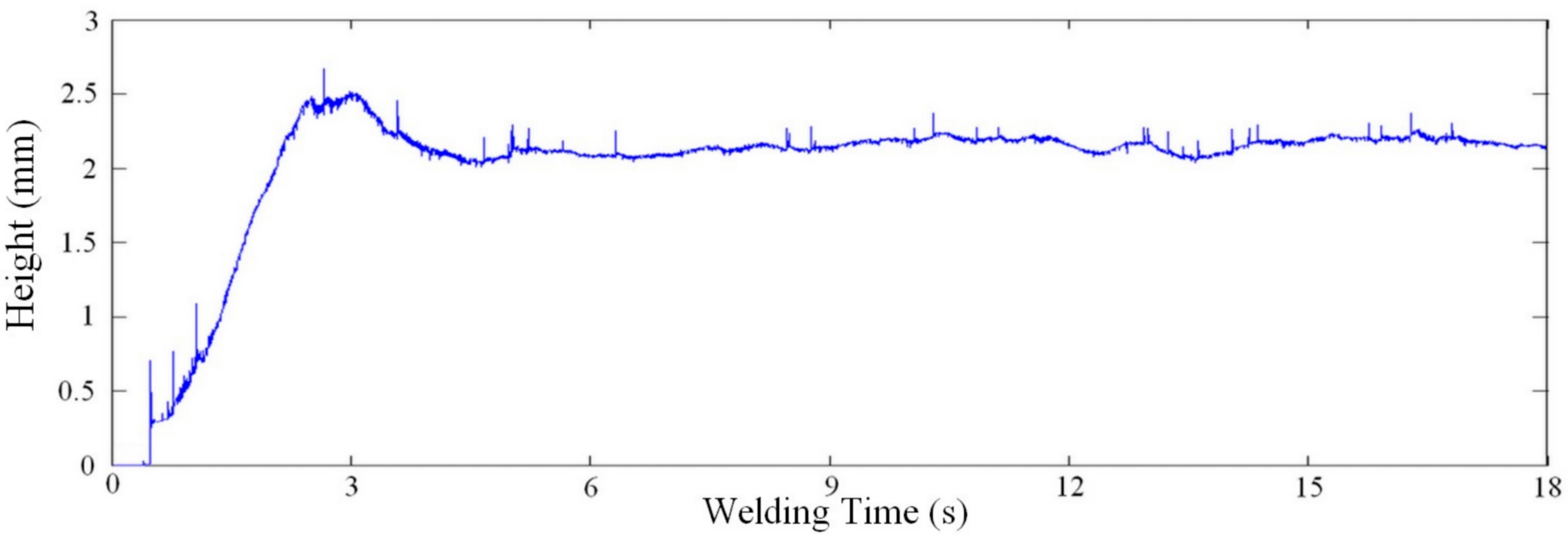

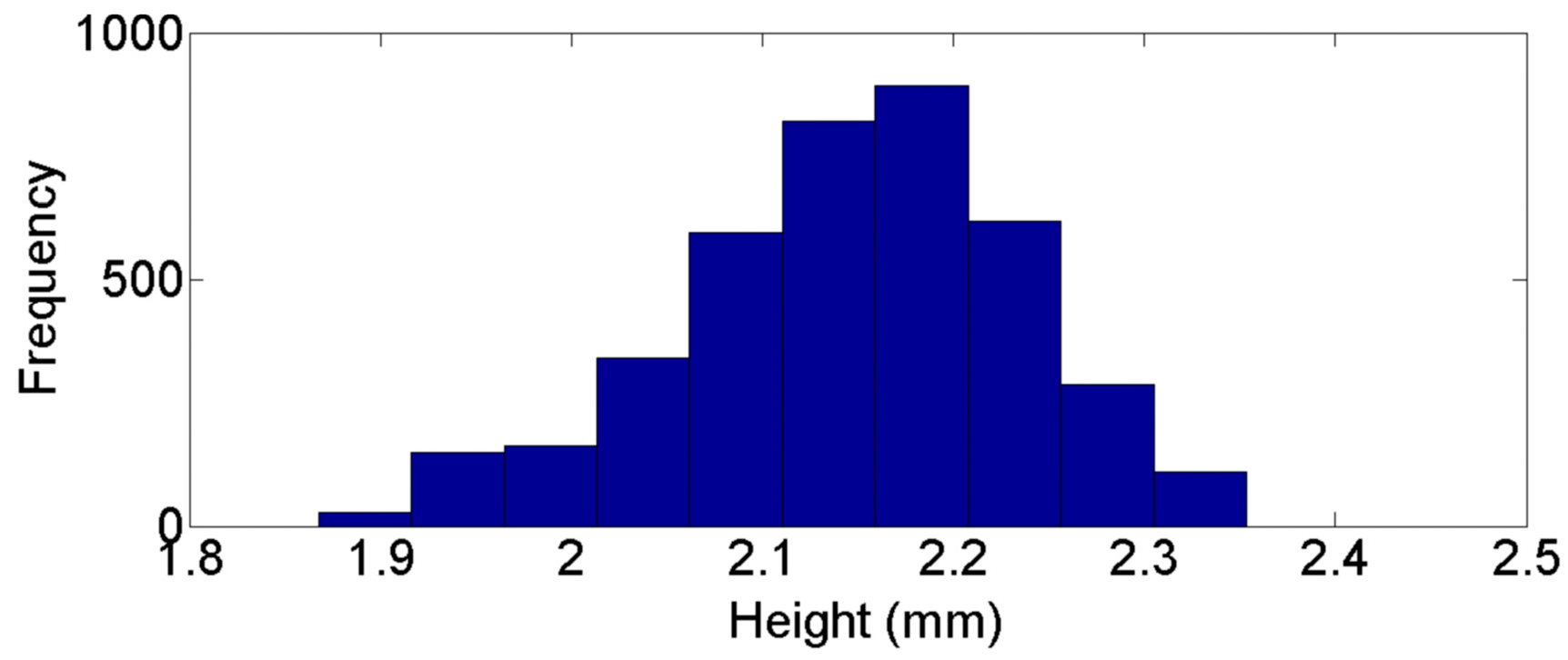

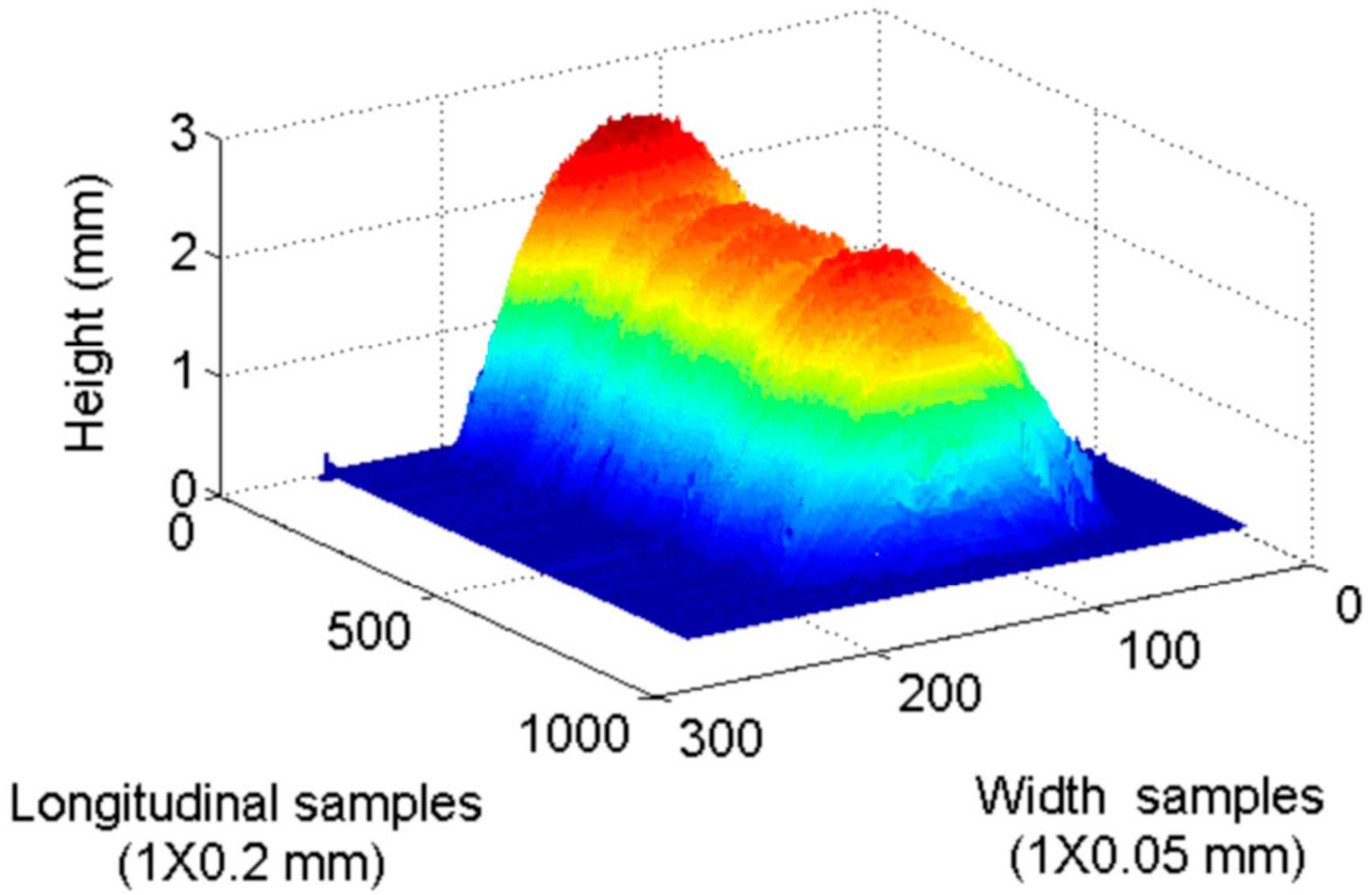

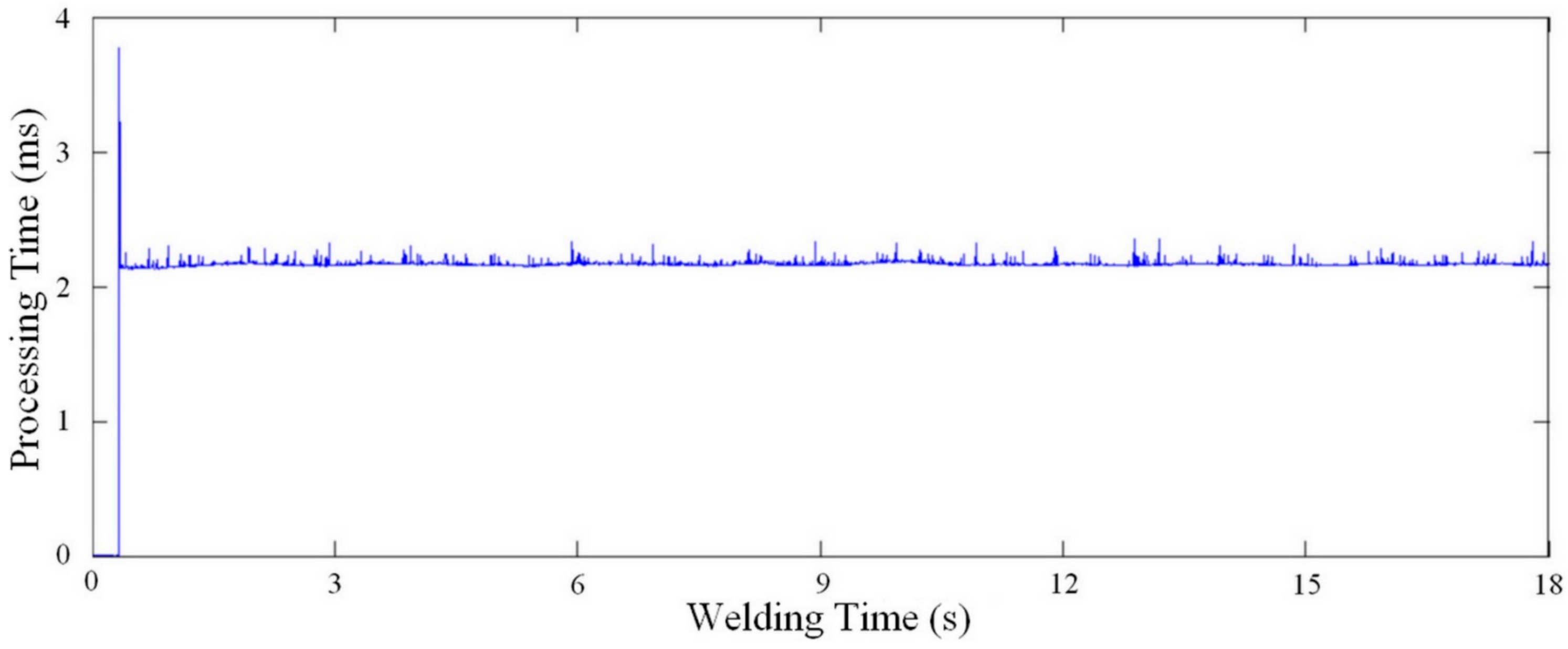

3. Results and Discussions

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Wang, Z.; Zhang, Y.; Wu, L. Adaptive interval model control of weld pool surface in pulsed gas metal arc welding. Automatica 2012, 48, 233–238.

- Li, X.; Shao, Z.; Zhang, Y.; Kvidahl, L. Monitoring and Control of Penetration in GTAW and Pipe Welding. Weld J. 2013, 92, 190–196.

- Karadeniz, K.; Ozsarac, U.; Yildiz, C. The effect of process parameters on penetration in gas metal arc welding processes. Mater. Des. 2007, 28, 649–656.

- Kanti, K.; Rao, P. Prediction of bead geometry in pulsed GMA welding using back propagation neural network. J. Mater. Process Technol. 2008, 200, 300–305.

- Kita, A.; Ume, I. Measuring On-Line and Off-Line Noncontact Ultrasound Time of Flight Weld Penetration Depth. Weld J. 2007, 86, 9–17.

- Rokhlin, S.; Guu, A. Computerized Radiographic Sensing and Control of an Arc Welding Process. Weld J. 1990, 89, 83–97.

- Zhang, Y.M.; Wu, L.; Walcott, B.L.; Chen, D.H. Determining Joint Penetration in GTAW with Vision Sensing of Weld Face Geometry. Weld J. 1993, 72, 463s–469s.

- Zhang, Y.M.; Kovacevic, R.; Wu, L. Dynamic Analysis and Identification of Gas Tungsten Arc Welding Process for Full Penetration Control. J. Eng. Ind. 1996, 118, 123–136.

- Zhang, Y.M.; Kovacevic, R. Real-Time Sensing of Sag Geometry during GTA Welding. J. Manuf. Sci. Eng. 1997, 119, 151–160.

- Zhang, Y.M.; Kovacevic, R.; Li, L. Adaptive Control of Full Penetration GTA Welding. IEEE Trans. Control Syst. Technol. 1996, 4, 394–403.

- Huang, W.; Kovacevic, R. Development of a real-time laser-based machine vision system to monitor and control welding processes. Int. J. Adv. Manuf. Technol. 2012, 63, 235–248.

- Ma, X.; Zhang, Y. Gas Metal Arc Weld Pool Surface Imaging: Modeling and Processing. Weld J. 2011, 5, 85–90.

- Ma, X.; Zhang, Y.; Gray, A.; Male, A. Image processing for measurement of three-dimensional gas metal arc weld pool surface: Algorithms are explored for processing the image of a grid laser pattern reflected from the dynamic GMA weld pool surface. In Proceedings of the IEEE International Conference on Computer Science, Zhangjiajie, China, 25–27 May 2012.

- Zhang, W.J.; Liu, Y.; Zhang, Y.M. Real-time measurement of the weld pool surface in GTAW process. In Proceedings of the 2013 IEEE International Instrumentation and Measurement Technology Conference (I2MTC), Minneapolis, MN, USA, 6–9 May 2013.

- Abdullah, B. Monitoring of TIG welding using laser and diode illumination sources: A comparison study. In Proceedings of the International Conference on Electronic Design, Penang, Malaysia, 1–3 December 2008.

- Mota, C.P.; Machado, M.V.; Finzi-Neto, R.M.; Vilarinho, L.O. Near-infrared vision system for arc-welding monitoring. Soldag. Insp. 2013, 18, 19–30.

- Cruz, J.G.; Torres, E.M.; Sadek, C.A. A methodology for modeling and control of weld bead width in the GMAW process. J. Braz. Soc. Mech. Sci. 2015, 37, 1529–1541.

- Xiong, J.; Zhang, G.; Qiu, Z.; Li, Y. Vision-sensing and bead width control of a single-bead multi-layer part: Material and energy savings in GMAW-based rapid manufacturing. J. Clean. Prod. 2013, 41, 82–88.

- Xiong, J.; Zhang, G. Online measurement of bead geometry in GMAW-based additive manufacturing using passive vision. Meas. Sci. Technol. 2013, 24, 1–7.

- Zhang, G.; Yan, Z.; Wu, L. Reconstructing a three-dimensional P-GMAW weld pool shape from a two-dimensional visual image. Meas. Sci. Technol. 2006, 17, 1877–1882.

- Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision, 2nd ed.; Cambridge University Press: New York, NY, USA, 2004; pp. 32–91.

- Pinto-Lopera, J.E. Uso de Agentes Inteligentes no Controle Simultâneo da Largura e do Reforço dos Cordões de Solda no Processo GMAW-S. Ph.D. Thesis, University of Brasilia, Brasilia, Brazil, March 2016.

| Wire Feed Rate (m/min) | Voltage (V) | Width, Vision System µ (mm) | Width, Vision System s (mm) | Width, 3D Scanner µ (mm) | Width, 3D Scanner s (mm) |
|---|---|---|---|---|---|
| 3.9 | 21.0 | 6.16 | 0.38 | 6.18 | 0.37 |
| 4.5 | 23.0 | 6.87 | 0.36 | 6.92 | 0.48 |
| 4.5 | 19.0 | 5.69 | 0.27 | 5.72 | 0.28 |
| 6.0 | 23.8 | 7.80 | 0.35 | 7.85 | 0.46 |
| 6.0 | 21.0 | 6.79 | 0.34 | 6.83 | 0.38 |
| 6.0 | 18.2 | 5.84 | 0.43 | 5.87 | 0.60 |
| 7.5 | 23.0 | 8.16 | 0.50 | 8.22 | 0.53 |
| 7.5 | 19.0 | 6.23 | 0.77 | 6.30 | 0.78 |
| 8.1 | 21.0 | 7.70 | 0.45 | 7.71 | 0.29 |

| Wire Feed Rate (m/min) | Voltage (V) | Height, Vision System µ (mm) | Height, Vision System s (mm) | Height, 3D Scanner µ (mm) | Height, 3D Scanner s (mm) |
|---|---|---|---|---|---|
| 3.9 | 21.0 | 1.59 | 0.17 | 1.58 | 0.14 |
| 4.5 | 23.0 | 1.67 | 0.14 | 1.66 | 0.10 |
| 4.5 | 19.0 | 1.69 | 0.11 | 1.70 | 0.09 |
| 6.0 | 23.8 | 1.97 | 0.15 | 1.95 | 0.17 |
| 6.0 | 21.0 | 2.15 | 0.09 | 2.16 | 0.12 |
| 6.0 | 18.2 | 2.31 | 0.16 | 2.29 | 0.18 |
| 7.5 | 23.0 | 2.39 | 0.17 | 2.37 | 0.17 |
| 7.5 | 19.0 | 2.87 | 0.20 | 2.85 | 0.22 |
| 8.1 | 21.0 | 2.48 | 0.18 | 2.50 | 0.17 |
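To make the agreement between the two instruments easier to read, the short Python sketch below subtracts the 3D-scanner mean from the vision-system mean for every wire feed rate and voltage combination in the width and height tables. The values are transcribed from those tables; the function names are illustrative only and are not part of the authors' software.

```python
# Mean bead dimensions (mm) transcribed from the width and height tables:
# (wire feed rate m/min, voltage V, vision-system mean, 3D-scanner mean)
WIDTH = [
    (3.9, 21.0, 6.16, 6.18),
    (4.5, 23.0, 6.87, 6.92),
    (4.5, 19.0, 5.69, 5.72),
    (6.0, 23.8, 7.80, 7.85),
    (6.0, 21.0, 6.79, 6.83),
    (6.0, 18.2, 5.84, 5.87),
    (7.5, 23.0, 8.16, 8.22),
    (7.5, 19.0, 6.23, 6.30),
    (8.1, 21.0, 7.70, 7.71),
]
HEIGHT = [
    (3.9, 21.0, 1.59, 1.58),
    (4.5, 23.0, 1.67, 1.66),
    (4.5, 19.0, 1.69, 1.70),
    (6.0, 23.8, 1.97, 1.95),
    (6.0, 21.0, 2.15, 2.16),
    (6.0, 18.2, 2.31, 2.29),
    (7.5, 23.0, 2.39, 2.37),
    (7.5, 19.0, 2.87, 2.85),
    (8.1, 21.0, 2.48, 2.50),
]


def mean_differences(rows):
    """Vision-system mean minus 3D-scanner mean (mm), rounded to 0.01 mm."""
    return [round(vision - scanner, 2) for _, _, vision, scanner in rows]


def max_abs_difference(rows):
    """Largest absolute disagreement between the two instruments (mm)."""
    return max(abs(d) for d in mean_differences(rows))


if __name__ == "__main__":
    for name, rows in (("width", WIDTH), ("height", HEIGHT)):
        print(name, mean_differences(rows), max_abs_difference(rows))
```

For these data every width difference is negative, with the largest magnitude being 0.07 mm (7.5 m/min, 19.0 V), while the height differences stay within ±0.02 mm.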

| Wire Feed Rate (m/min) | Voltage (V) | Welch’s t-Test, Width | Welch’s t-Test, Height |
|---|---|---|---|
| 3.9 | 21.0 | 1.1361 | 1.4677 |
| 4.5 | 23.0 | 2.2514 | 2.0040 |
| 4.5 | 19.0 | 2.2676 | 2.2807 |
| 6.0 | 23.8 | 2.3471 | 2.5113 |
| 6.0 | 21.0 | 2.2441 | 1.8011 |
| 6.0 | 18.2 | 1.0838 | 2.3702 |
| 7.5 | 23.0 | 2.4013 | 2.4802 |
| 7.5 | 19.0 | 1.8946 | 1.9353 |
| 8.1 | 21.0 | 0.6760 | 2.4637 |
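Welch’s t-test compares two means without assuming that the two samples share the same variance. The Python sketch below computes the statistic and the Welch–Satterthwaite degrees of freedom from summary statistics of the kind listed in the width and height tables (mean µ, standard deviation s, sample size n). The number of measurements behind each mean is not stated in this text, so `N_SAMPLES` is a hypothetical placeholder and the printed value is not expected to reproduce the tabulated figures. Because the tabulated values are all positive even where the vision-system mean is the smaller one, they appear to be magnitudes |t|.

```python
import math


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom,
    computed from summary statistics. Variances are NOT assumed equal."""
    v1 = sd1 ** 2 / n1  # squared standard error of sample 1
    v2 = sd2 ** 2 / n2  # squared standard error of sample 2
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    dof = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, dof


if __name__ == "__main__":
    # First width row (3.9 m/min, 21.0 V): vision system vs. 3D scanner.
    # N_SAMPLES is a HYPOTHETICAL placeholder; the real sample size is not
    # given here, so this output will not match the table.
    N_SAMPLES = 100
    t, dof = welch_t(6.16, 0.38, N_SAMPLES, 6.18, 0.37, N_SAMPLES)
    print(f"|t| = {abs(t):.4f}, degrees of freedom = {dof:.1f}")
```

If SciPy is available, `scipy.stats.ttest_ind_from_stats(..., equal_var=False)` runs the same test from summary statistics and also returns a p-value.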

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Pinto-Lopera, J.E.; S. T. Motta, J.M.; Absi Alfaro, S.C. Real-Time Measurement of Width and Height of Weld Beads in GMAW Processes. Sensors 2016, 16, 1500. https://doi.org/10.3390/s16091500
