This section presents the results of the offline analysis on the collected trace set and of the online evaluation on LG Nexus 5 smartphones. For the offline analysis, we first determine the key parameters of MGRA, i.e., the feature vector and the training set number. We then compare two alternative SVM kernels and a random forest classifier to justify the choice of SVM with the RBF kernel. Next, we compare the classification accuracy of MGRA with two state-of-the-art methods: uWave [8] and 6DMG [9]. For the online evaluation, we compare the energy consumption and computation time of MGRA, uWave and 6DMG.

7.1. Parameter Customization

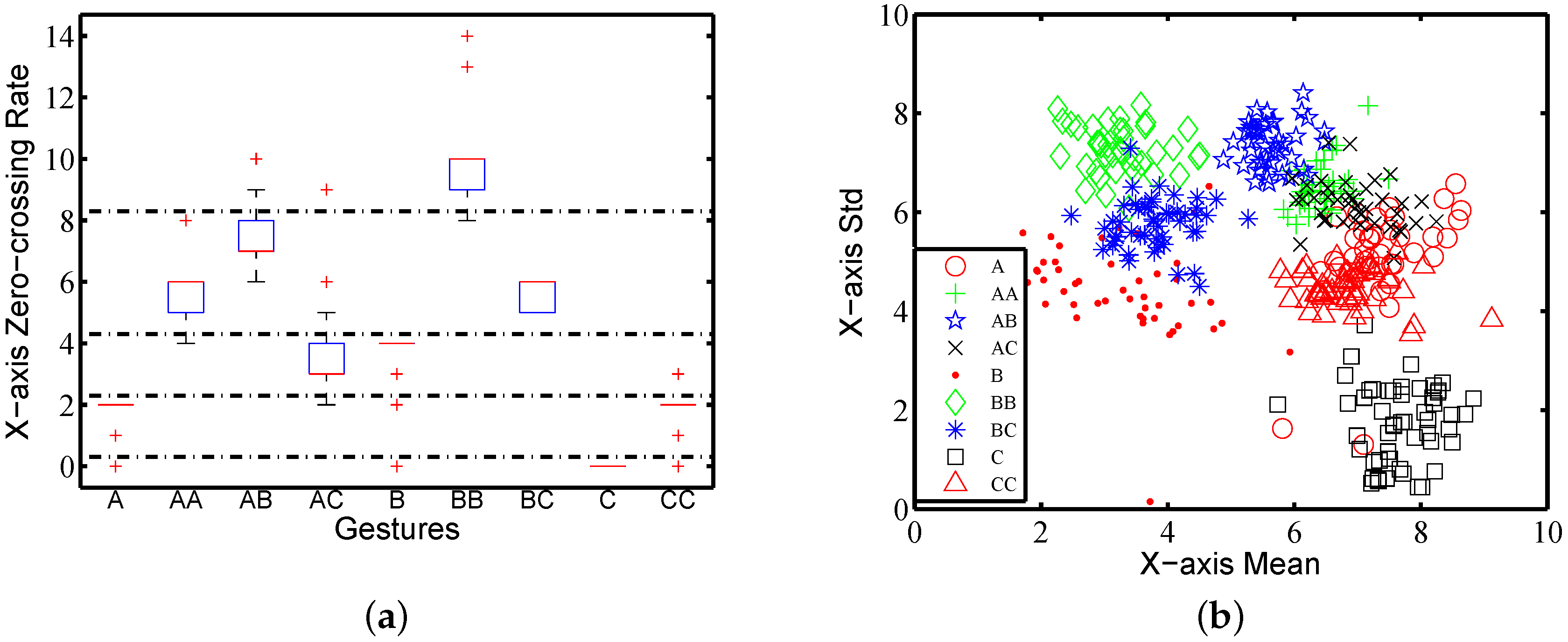

The choice of feature vector strongly affects SVM classification.

Section 5 only provides the feature impact order as

. However, the length of the feature vector still has to be determined. Moreover, a larger number of training traces yields better classification accuracy, but constructing a large training set places a heavy burden on end users.

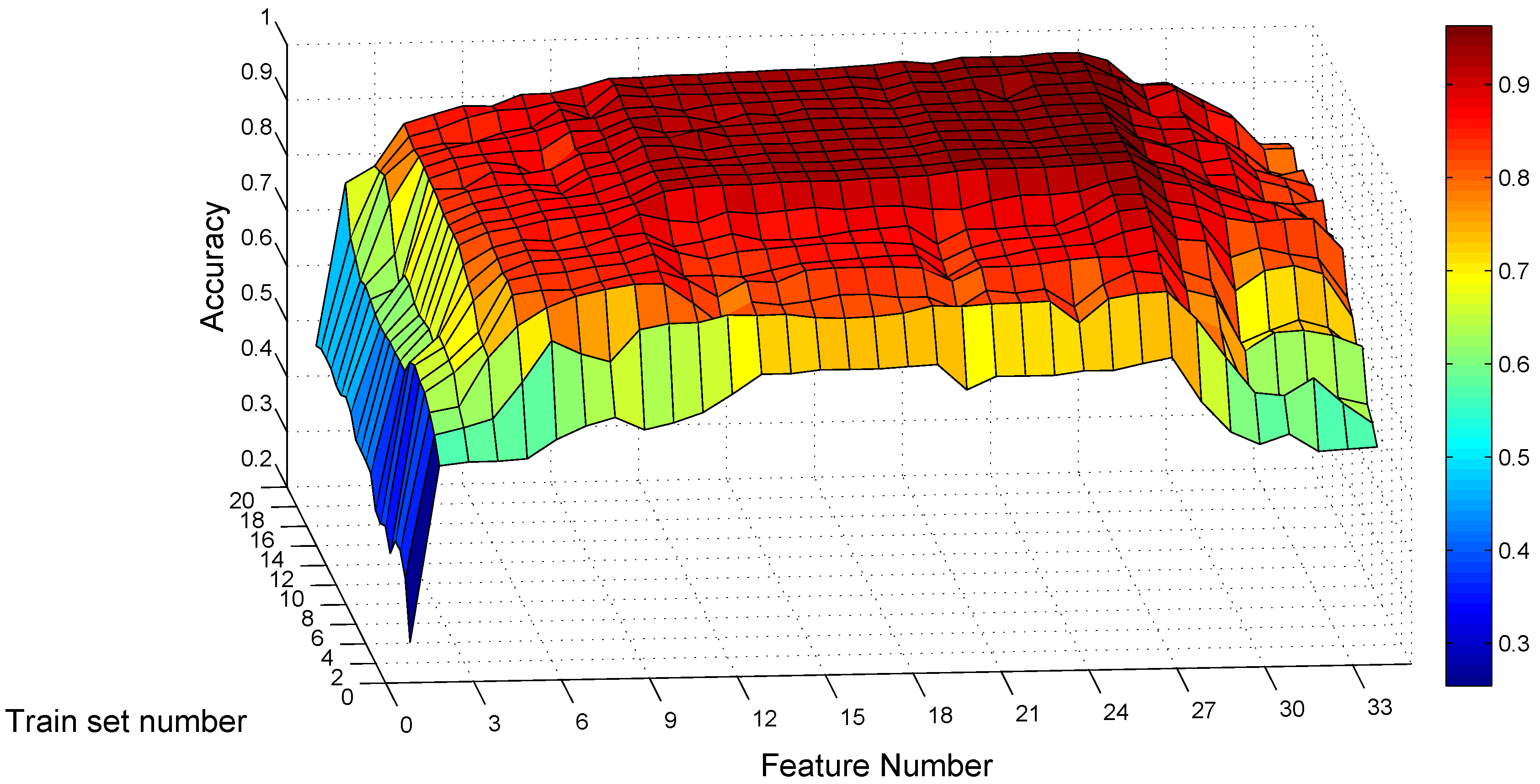

Hence, we conduct a grid search over these two parameters on the static traces, with the feature number varying from 1 to 34 following the feature impact order and the training set number varying from 1 to 20. For each combination of the two parameters, we train the SVM model of each subject with the corresponding number of traces per gesture class, randomly selected from that subject's Confusion Set traces under static scenarios. We use only static traces for training because, although end users may wish to use MGRA in mobile scenarios, they are unlikely to want to collect training traces while moving. After the model is constructed, the subject's remaining static traces are used to test that subject's own classification model. This evaluation process is repeated five times for each combination.
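The evaluation protocol above can be sketched as follows. This is a minimal illustration under stated assumptions, not MGRA's implementation: each trace is assumed to be a feature vector already sorted by the feature impact order, the SVM is replaced by a hypothetical `train_and_score` callback, and the toy `nearest_centroid_score` exists only to make the sketch runnable.

```python
import random
import statistics
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical representation: each trace is a feature vector whose entries
# are already sorted by the feature impact order.
Trace = Sequence[float]
Split = Dict[str, List[Trace]]
# Hypothetical callback standing in for "train an SVM on the first k features
# of the training traces and return its accuracy on the test traces".
Scorer = Callable[[Split, Split, int], float]


def grid_search(traces_by_class: Split, max_features: int, max_train: int,
                train_and_score: Scorer, repeats: int = 5,
                seed: int = 0) -> Dict[Tuple[int, int], float]:
    """Mean accuracy for every (feature number k, training number n) pair."""
    rng = random.Random(seed)
    results: Dict[Tuple[int, int], float] = {}
    for k in range(1, max_features + 1):
        for n in range(1, max_train + 1):
            scores = []
            for _ in range(repeats):
                train: Split = {}
                test: Split = {}
                for label, traces in traces_by_class.items():
                    # Randomly pick n traces for training; the rest are test traces.
                    shuffled = list(traces)
                    rng.shuffle(shuffled)
                    train[label], test[label] = shuffled[:n], shuffled[n:]
                scores.append(train_and_score(train, test, k))
            results[(k, n)] = statistics.mean(scores)
    return results


def nearest_centroid_score(train: Split, test: Split, k: int) -> float:
    """Toy stand-in for the SVM: nearest class centroid on the first k features."""
    centroids = {label: [statistics.mean(t[i] for t in ts) for i in range(k)]
                 for label, ts in train.items()}

    def predict(trace: Trace) -> str:
        return min(centroids, key=lambda c: sum(
            (trace[i] - centroids[c][i]) ** 2 for i in range(k)))

    pairs = [(label, t) for label, ts in test.items() for t in ts]
    correct = sum(predict(t) == label for label, t in pairs)
    return correct / len(pairs) if pairs else 0.0
```

In the setting described above, `max_features` would be 34, `max_train` 20 and `repeats` 5, evaluated separately for each subject.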

Figure 11 shows the average recognition accuracy of static traces over eight subjects for each parameter combination. It confirms that more training traces yield better classification accuracy, although the improvement is small once the number of training traces exceeds 10. The maximum recognition accuracy under static scenarios is

achieved with 20 training traces per gesture class and 27 features. For the combination of 10 training traces and 27 features, the average recognition accuracy is

, no more than

lower than the maximum. Hence, MGRA uses 10 training traces per gesture class.
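The choice of 10 training traces amounts to picking the smallest training number whose accuracy stays within a tolerance of the best one. A minimal sketch, in which `smallest_sufficient` and the `tolerance` value are hypothetical (the actual margin is the one read from Figure 11):

```python
from typing import Dict


def smallest_sufficient(acc_by_n: Dict[int, float], tolerance: float) -> int:
    """Smallest training number whose accuracy is within `tolerance` of the best."""
    best = max(acc_by_n.values())
    return min(n for n, acc in acc_by_n.items() if best - acc <= tolerance)
```

For example, on the made-up accuracies `{5: 0.80, 10: 0.93, 15: 0.94, 20: 0.95}` with a tolerance of 0.03, the rule returns 10.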

We further examine how the number of features affects recognition while keeping

.

Table 5 shows the confusion matrices of one subject for feature numbers

. With only the standard deviation of the composite acceleration (

), the average classification accuracy is only 44.82%. For example,

Table 5a shows that 55% of the traces of gesture

are recognized as gesture

, and the other 45% are recognized as gesture

. After adding the time feature (

), posture

(

) and

(

), only 7.5% and 5% of gesture

are recognized incorrectly as gestures

and

, as shown in Table 5b. With four features, the average accuracy increases to

.

After seven more features are added, Table 5c shows that only

of gesture

classified as gesture

. None of gesture

is recognized as gesture

. This experiment shows that MGRA with 11 features correctly distinguishes between

and

. It also illustrates that gesture

is more confusable with

than

, confirming that two gestures are harder to classify when they share common parts. The average recognition accuracy is

for 11 features.

With 27 features, the recognition error is small for every gesture class, as shown in Table 5d. Moreover, all errors lie at the intersections of gesture pairs sharing a common symbol, except that only

of gesture

A is recognized incorrectly as gesture

B. The average recognition accuracy reaches

for this subject with the 27-item feature vector.
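The per-gesture and average accuracies quoted from these confusion matrices can be computed as below. This sketch assumes rows hold the true gestures and columns the predicted gestures, with raw trace counts; when every gesture class has the same number of test traces, the mean of the per-class accuracies equals the overall fraction of correctly classified traces. The matrix in the test is made up.

```python
from typing import List


def per_class_accuracy(confusion: List[List[int]]) -> List[float]:
    """Diagonal count divided by row total (rows = true class, columns = predicted)."""
    return [row[i] / sum(row) for i, row in enumerate(confusion)]


def average_accuracy(confusion: List[List[int]]) -> float:
    """Unweighted mean of the per-class accuracies."""
    accs = per_class_accuracy(confusion)
    return sum(accs) / len(accs)
```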

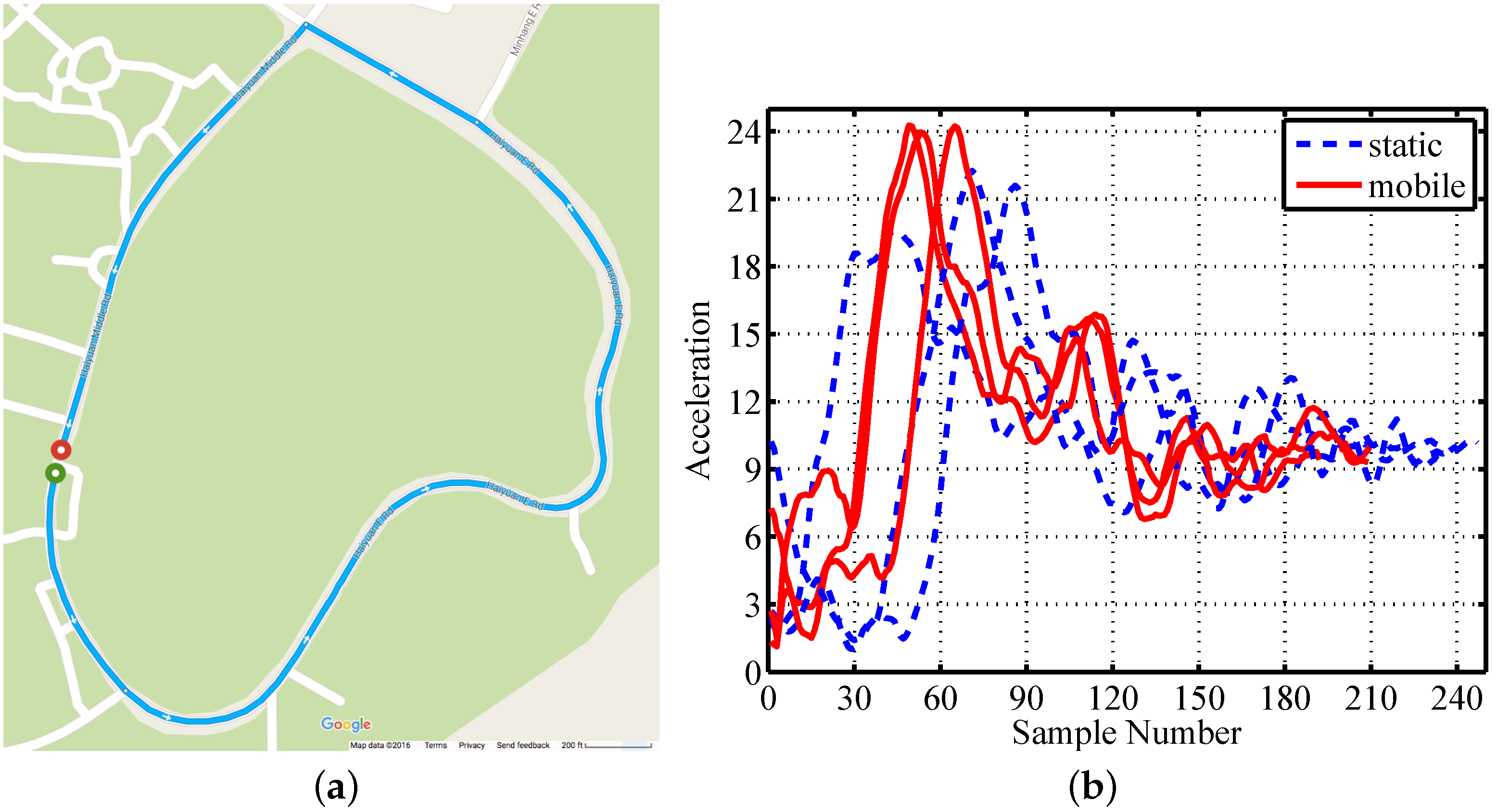

Before determining the feature number, we must also consider the traces collected under mobile scenarios, where the acceleration of the car is added. For each parameter combination, we test the models constructed from static traces with the traces collected under mobile scenarios. One may ask why we do not construct a separate SVM model from mobile traces. The reason is that, in practice, it is inconvenient for potential users to collect many training gestures under mobile scenarios; even collecting training traces in a car for our subjects required someone else to drive. In contrast, simply performing a gesture to invoke a command on the smartphone under mobile scenarios is easy for the user, compared with interacting through the touch screen.

Figure 12 depicts the classification results for the same subject as Figure 11, obtained by testing his static models with mobile traces. The highest accuracy of

appears when the training set number is 20 and the feature number is 27. For the combination of

and

, the recognition accuracy is

. The confusion matrix of

and

tested with mobile traces is shown in Table 6. Most errors again lie at the intersections of gestures sharing a common symbol, except that a small fraction of gesture

B is classified as gesture

A and

, gesture

as

and gestures

C and

as

B. Comparing Table 6 with Table 5d, most error cells in Table 6 do not appear in Table 5d. This indicates that accuracy decreases when the classification model constructed from static traces is tested with mobile traces. However, the average recognition accuracy is

, still acceptable for this subject.

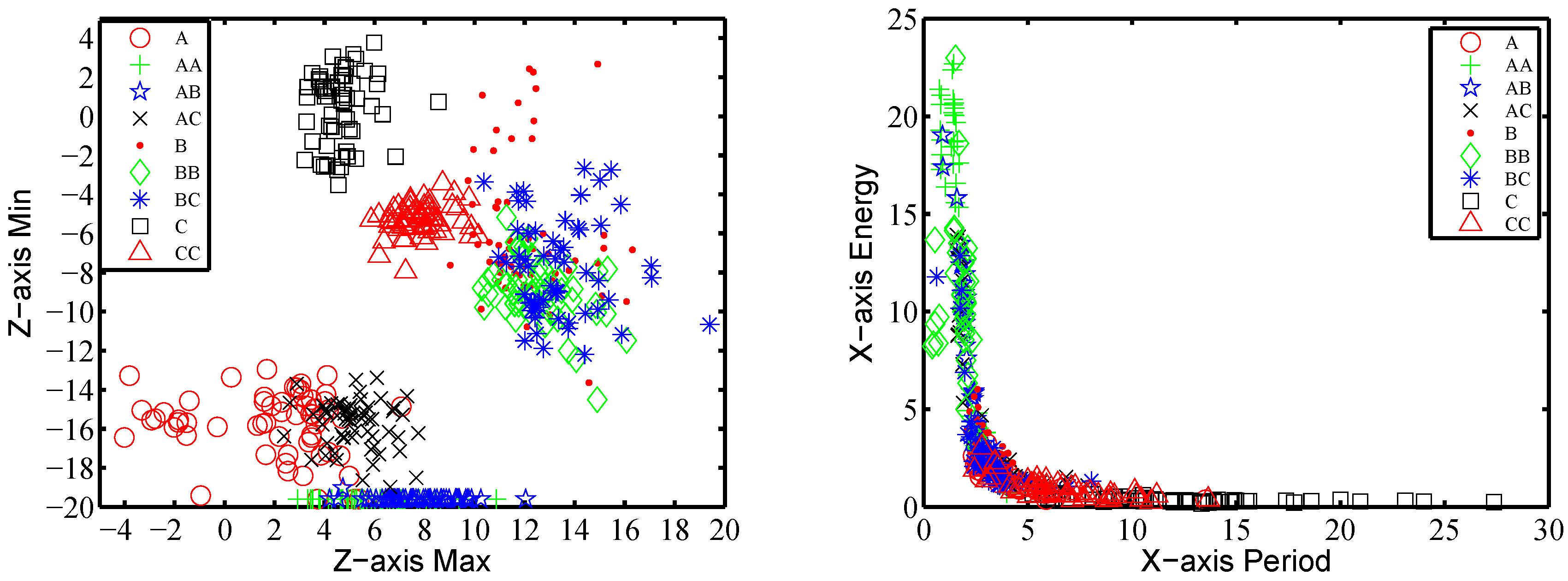

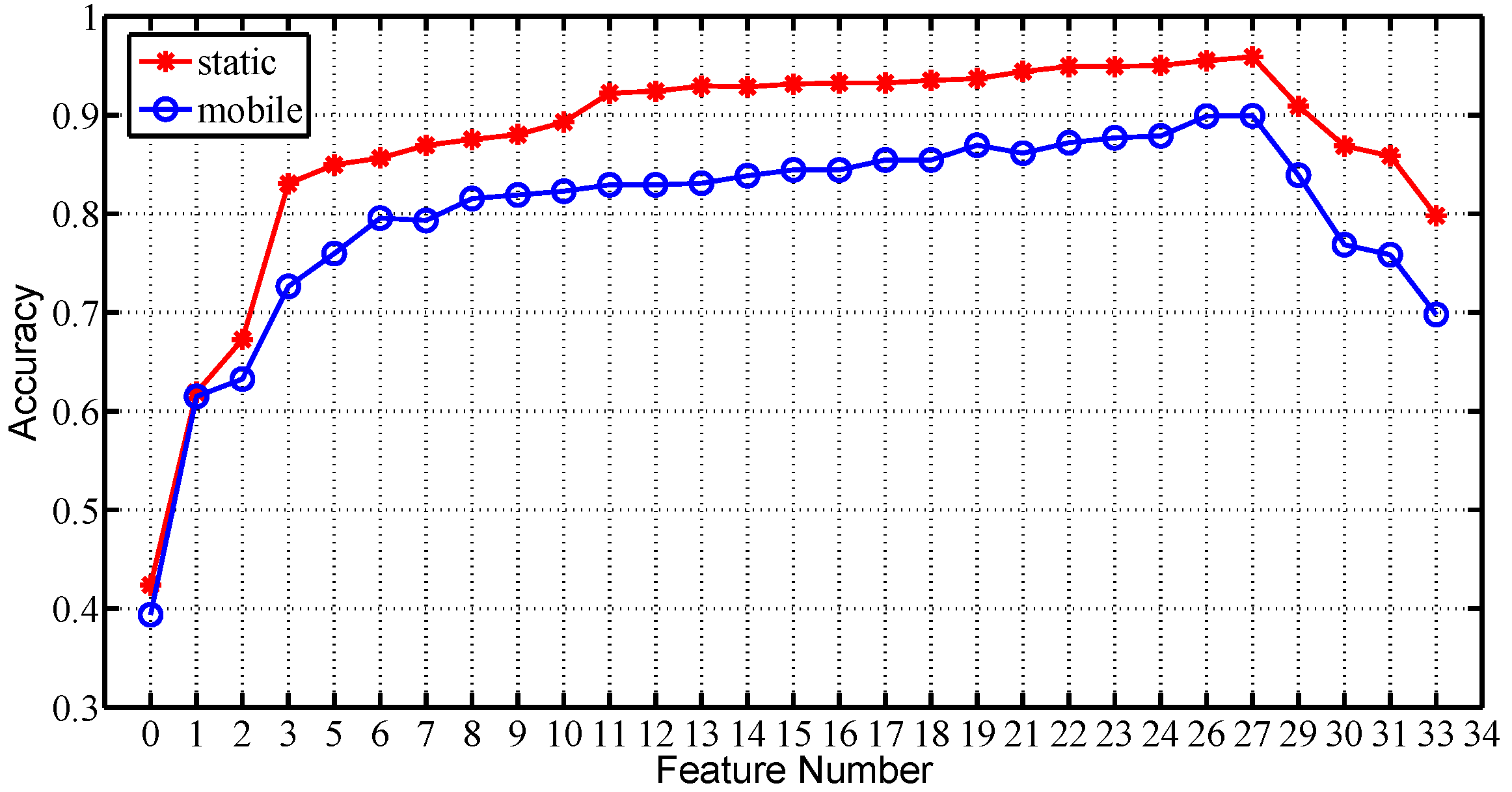

With 10 training traces per gesture class, we further plot the average recognition accuracy over all subjects for every feature number under the two scenarios in Figure 13. It shows that recognition accuracy reaches its maximum when

, for the test traces under both static and mobile scenarios. Therefore, we choose

as the feature vector of MGRA.

7.2. Comparison with SVM Kernels and Random Forest

We choose RBF as the SVM kernel for MGRA, based on an assumption grounded in the central limit theorem. To justify this choice, we further examine the Fisher and polynomial kernels under both static and mobile scenarios. The feature vector is chosen as for the comparison.
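For reference, the RBF and polynomial kernels compared here are commonly defined as below; these follow the LIBSVM-style definitions, exp(-gamma * ||x - y||^2) and (gamma * x.y + coef0)^degree. The `gamma`, `degree` and `coef0` values used for MGRA are not given in this section, so the defaults are placeholders. The Fisher kernel is omitted because it is derived from a generative model rather than being a closed-form function of two feature vectors.

```python
import math
from typing import Sequence


def rbf_kernel(x: Sequence[float], y: Sequence[float], gamma: float) -> float:
    """exp(-gamma * squared Euclidean distance between x and y)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, y)))


def polynomial_kernel(x: Sequence[float], y: Sequence[float], degree: int = 3,
                      gamma: float = 1.0, coef0: float = 0.0) -> float:
    """(gamma * <x, y> + coef0) ** degree."""
    return (gamma * sum(a * b for a, b in zip(x, y)) + coef0) ** degree
```

The RBF kernel depends only on the distance between two feature vectors and always lies in (0, 1], whereas the polynomial kernel grows with the magnitude of the dot product.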

Previous research has also demonstrated the classification performance of random forest on activity and gesture recognition [19]. We therefore also evaluate random forest as the classifier, using all 34 features under both scenarios.

Table 7 shows the confusion matrices of the Fisher kernel, the polynomial kernel and random forest under static scenarios for the same subject as in Table 5d. First, most errors lie at the intersections of gestures sharing a common symbol, which is consistent with the error distribution in Table 5d.

Table 7a also shows that the Fisher kernel achieves 100% accuracy on the repetition gestures, namely

,

and

. However, the accuracy for all other gestures is lower than that in Table 5d.

Comparing the polynomial kernel with the RBF kernel, the classification accuracy of gestures

A,

,

,

,

and

C is higher than 95% in both Table 5d and Table 7b. The misclassification between gestures

and

B exceeds 7% in Table 7b for the polynomial kernel, whereas the RBF kernel corrects it in Table 5d. Table 8 shows the average classification accuracy over all subjects for the three SVM kernels under static scenarios. The RBF kernel achieves the highest accuracy, and the other two are close to or above 90% on static traces.

However, for the Fisher and polynomial kernels, the accuracy decreases markedly when the model trained under static scenarios is applied to traces from mobile scenarios, as shown in Table 9a,b. For gestures

and

B, the classification accuracy is no more than 60% for both the Fisher and the polynomial kernels. Gesture

is misclassified as

and

B at a high rate by both kernels, because these three gestures share common parts. For gesture

B, 27.5% and 32.5% of the traces are misclassified as gesture

by the Fisher and polynomial kernels, respectively. These errors arise because the subject performs both gestures as two circles. The only difference is that gesture

B includes two vertical circles, while gesture

contains two horizontal circles. Referring back to Table 6, the RBF kernel misclassifies only 2.5% of gesture

B as

under mobile scenarios. The average classification accuracy over all subjects for the three kernels under mobile scenarios is also listed in Table 8. It shows that the Fisher and polynomial kernels are not robust to scenario changes, whereas the RBF kernel retains an accuracy very close to 90%.

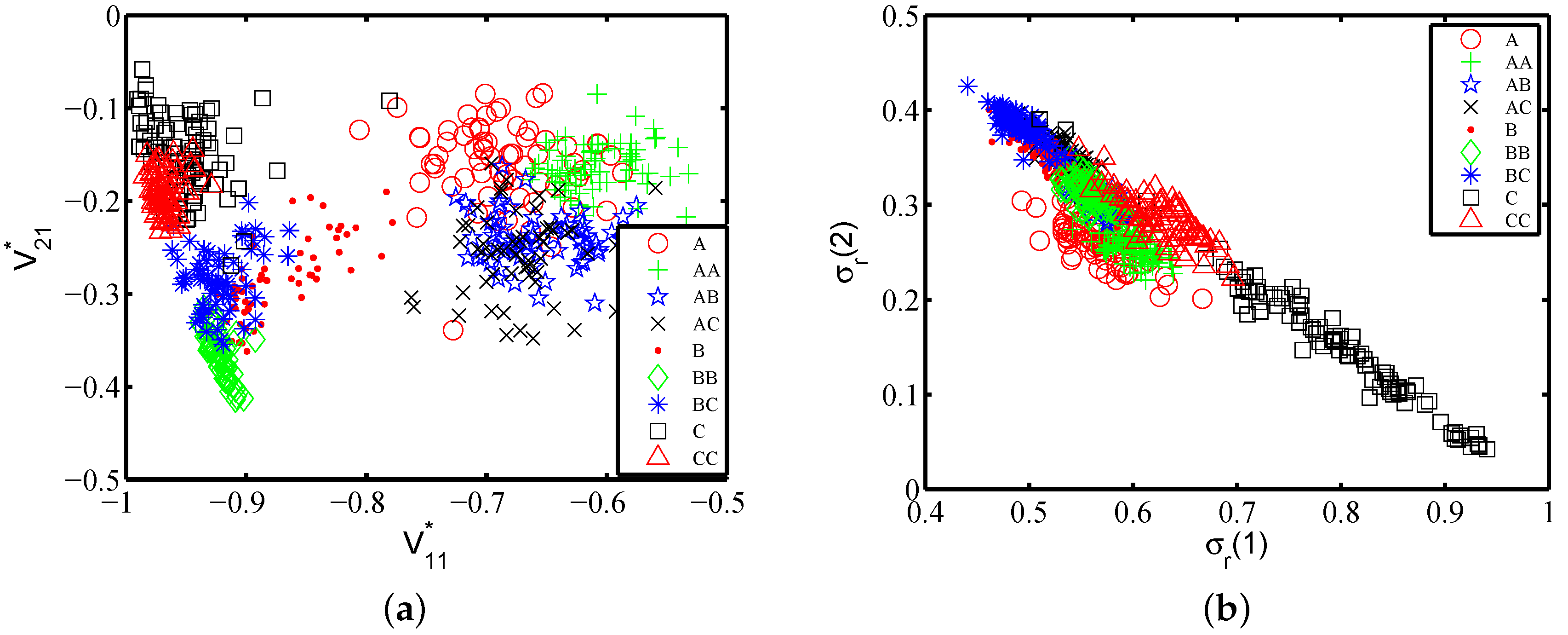

Applying random forest as the classifier, we get the confusion matrices for the same subject, listed in

Table 7c and

Table 9c, under both scenarios, respectively. The results show that random forest is also not robust to scenario change. The average accuracy results are lower under both scenarios, when comparing random forest to SVM with the polynomial and RBF kernels, shown in

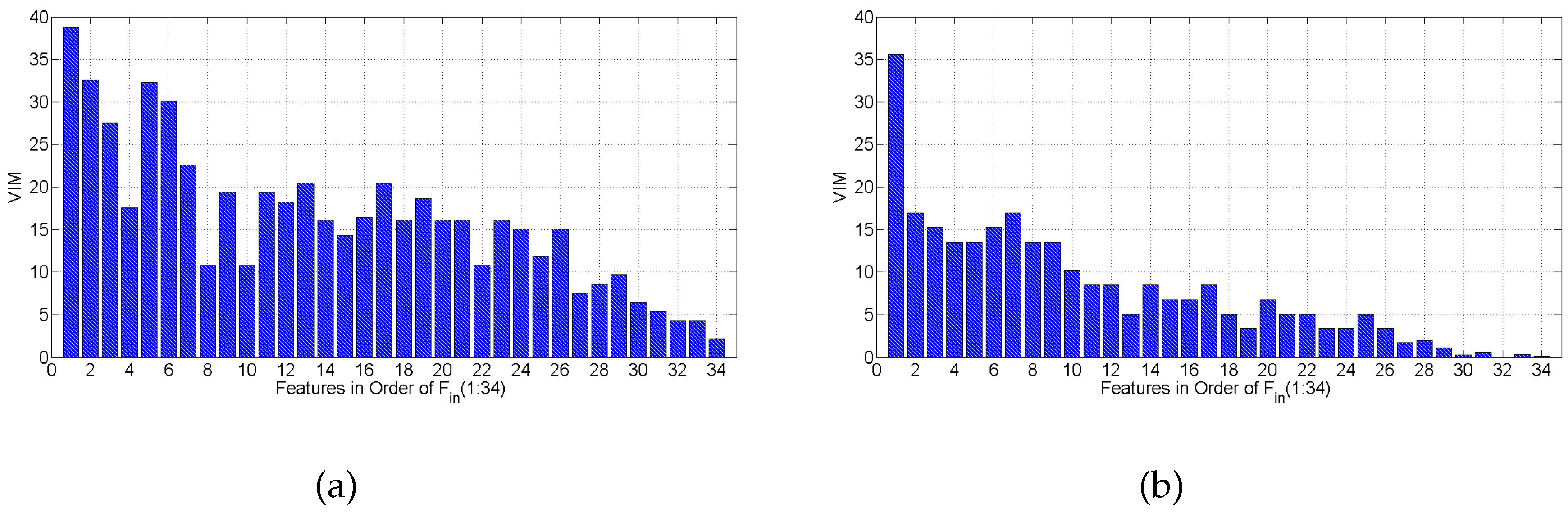

Table 8. When digging into the Variable Importance Measure (VIM) of the random forest average on all subjects,

Figure 14 shows that

are still the most important features for random forest, except that

are a little more important than

under static scenarios, and

outperforms

slightly under mobile scenarios. We further evaluate random forest on the feature set

. The average classification accuracy is 86.17% and 68.32% under static and mobile scenarios, respectively, a decrease of no more than 3.5% compared with the accuracy obtained with all 34 features. This confirms that the feature selection results of mRMR and Algorithm 1 are independent of the classification method and scenario.
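This section does not state how the VIM in Figure 14 is computed. One common definition for random forests is permutation importance: the drop in accuracy when one feature's values are shuffled across samples. A minimal sketch of that definition, assuming a generic `predict` function in place of a trained forest (all names here are hypothetical):

```python
import random
from typing import Callable, List, Sequence

Predict = Callable[[Sequence[float]], int]


def accuracy(predict: Predict, X: List[Sequence[float]], y: List[int]) -> float:
    return sum(predict(x) == t for x, t in zip(X, y)) / len(y)


def permutation_importance(predict: Predict, X: List[Sequence[float]],
                           y: List[int], repeats: int = 5,
                           seed: int = 0) -> List[float]:
    """Mean accuracy drop per feature when that feature's column is shuffled."""
    rng = random.Random(seed)
    base = accuracy(predict, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(repeats):
            column = [x[j] for x in X]
            rng.shuffle(column)
            # Replace feature j with its shuffled values, keep the others intact.
            X_perm = [list(x[:j]) + [v] + list(x[j + 1:]) for x, v in zip(X, column)]
            drops.append(base - accuracy(predict, X_perm, y))
        importances.append(sum(drops) / repeats)
    return importances
```

A feature the model never uses gets an importance of exactly zero, since shuffling it leaves every prediction unchanged.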

Having compared the three SVM kernels and random forest, we conclude that SVM with the RBF kernel is a suitable classifier for MGRA.

7.3. Accuracy Comparison with uWave and 6DMG

In this section, we compare MGRA, uWave [8] and 6DMG [9] in terms of classification accuracy on both Confusion Set and Easy Set.

uWave uses DTW as its core and originally records only one gesture trace as the template, so its recognition accuracy depends directly on the choice of the template. For a fair comparison, we let uWave use 10 training traces per gesture in two ways. The first method selects a template from the 10 training traces of each gesture class, which can be treated as the training process for uWave. The selection criterion is that the chosen trace has maximum similarity to the other nine traces, i.e., its average DTW distance to the other nine traces is minimal. We call this method best-uWave. The second method compares the test gesture with all 10 traces of each gesture class, calculates the mean distance from the input gesture to each of the nine gesture classes, and assigns the gesture to the class with the smallest mean distance. We call this method 10-uWave. 10-uWave has no training process, but its classification is time-consuming.
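The two uWave variants described above can be sketched with a classic DTW. This is an illustration under stated assumptions, not uWave's code: samples are assumed to be acceleration tuples compared with Euclidean distance, any preprocessing uWave applies to the raw series is omitted, and all function names are hypothetical. `select_template` implements the best-uWave selection criterion, and `classify_mean` implements the 10-uWave mean-distance rule.

```python
import math
from typing import Dict, List, Sequence

Sample = Sequence[float]   # e.g. one (ax, ay, az) accelerometer reading
Trace = Sequence[Sample]


def dtw_distance(a: Trace, b: Trace) -> float:
    """Classic DTW: O(len(a) * len(b)) dynamic programming, Euclidean cost."""
    n, m = len(a), len(b)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def select_template(traces: List[Trace]) -> Trace:
    """best-uWave training: trace with minimum average DTW distance to the others."""
    def avg_dist(i: int) -> float:
        others = [dtw_distance(traces[i], t) for k, t in enumerate(traces) if k != i]
        return sum(others) / len(others)
    return traces[min(range(len(traces)), key=avg_dist)]


def classify_best(test: Trace, templates: Dict[str, Trace]) -> str:
    """best-uWave classification: nearest single template per class."""
    return min(templates, key=lambda label: dtw_distance(test, templates[label]))


def mean_distance(test: Trace, traces: List[Trace]) -> float:
    return sum(dtw_distance(test, t) for t in traces) / len(traces)


def classify_mean(test: Trace, traces_by_class: Dict[str, List[Trace]]) -> str:
    """10-uWave: class with the smallest mean DTW distance over all its traces."""
    return min(traces_by_class, key=lambda label: mean_distance(test, traces_by_class[label]))
```

With nine classes and 10 traces each, `classify_mean` runs 90 DTW comparisons per test gesture, while `classify_best` runs only nine.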

For 6DMG, we extract 41 time domain features from both acceleration and gyroscope samples in the gesture traces. The number of hidden states is set to eight, experimentally chosen as the best from the range of (2, 10). 6DMG uses 10 traces per gesture class for training.
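HMM-based recognizers commonly train one HMM per gesture class and assign a test trace to the class whose model gives the highest likelihood; we assume that setup here for illustration. The sketch shows that decision rule with the forward algorithm on a simplified discrete-emission HMM (the class name `DiscreteHMM` is hypothetical). 6DMG works on continuous features, and training (e.g., Baum-Welch) is omitted; the number of states, eight in the text, is simply the length of `start`. For long traces, a practical implementation would scale the forward variables or work in log space to avoid underflow.

```python
from typing import Dict, Sequence


class DiscreteHMM:
    def __init__(self, start: Sequence[float], trans: Sequence[Sequence[float]],
                 emit: Sequence[Sequence[float]]):
        self.start = start   # start[i] = P(first state = i)
        self.trans = trans   # trans[i][j] = P(next state = j | state = i)
        self.emit = emit     # emit[i][o] = P(observation = o | state = i)

    def likelihood(self, obs: Sequence[int]) -> float:
        """P(obs | model) computed with the forward algorithm."""
        n = len(self.start)
        alpha = [self.start[i] * self.emit[i][obs[0]] for i in range(n)]
        for o in obs[1:]:
            alpha = [sum(alpha[i] * self.trans[i][j] for i in range(n)) * self.emit[j][o]
                     for j in range(n)]
        return sum(alpha)


def classify(obs: Sequence[int], models: Dict[str, DiscreteHMM]) -> str:
    """Pick the gesture class whose HMM assigns the highest likelihood."""
    return max(models, key=lambda label: models[label].likelihood(obs))
```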

We first compare the methods on the test traces from static scenarios. Table 10 shows the confusion matrices of best-uWave, 10-uWave and 6DMG for the same subject as in Table 5d. Comparing Table 5d with Table 10, most classification errors of MGRA still occur with best-uWave, 10-uWave and 6DMG, except that best-uWave corrects MGRA’s error of

on gesture

A being recognized as gesture

B. 6DMG corrects MGRA’s misclassification of

of gesture

as gesture

and

of gesture

as

. Both best-uWave and 10-uWave reduce MGRA’s error from

to

in recognizing gesture

as

. Conversely, MGRA corrects most of the errors made by best-uWave, 10-uWave and 6DMG. For example, the confusion cell of gesture

recognized as

is

,

and

for best-uWave, 10-uWave and 6DMG, respectively, in Table 10, and MGRA eliminates this error completely. The average accuracy of best-uWave, 10-uWave and 6DMG for this subject under the static scenario is

,

and

, respectively, lower than

of MGRA.

Next, we compare performance on the same subject's test traces under mobile scenarios, shown in Table 11. Comparing Table 6 with Table 11, most recognition errors of MGRA persist for best-uWave, 10-uWave and 6DMG, although a small fraction are reduced. Conversely, MGRA corrects or reduces most of the errors in Table 11. For example, the cell of gesture

recognized as

B is

,

and

, respectively, for best-uWave, 10-uWave and 6DMG. Referring back to Table 6, MGRA misclassified only

of gesture

as

B. The reason for gesture

being misrecognized as

B is the same as why gesture

B was misrecognized as

, as discussed in Section 7.2. Therefore, MGRA clearly outperforms best-uWave, 10-uWave and 6DMG under mobile scenarios; the average recognition accuracies of MGRA, best-uWave, 10-uWave and 6DMG for this subject are

,

,

and

, respectively.

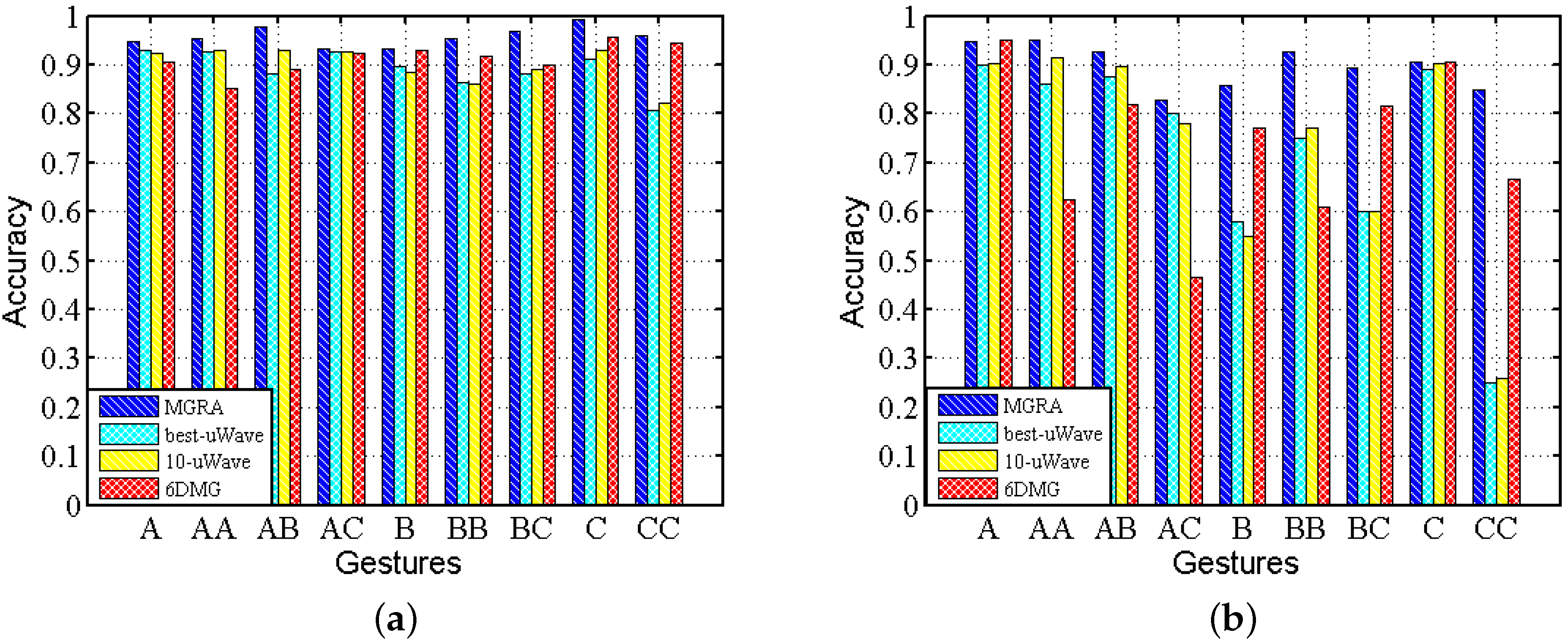

We calculate the average accuracy over all subjects for the static and mobile test traces separately and depict the results in Figure 15. MGRA not only achieves higher classification accuracy but also performs more stably across gestures and scenarios. Best-uWave, 10-uWave and 6DMG achieve high accuracy on static traces, but their accuracy drops by about

when tested with mobile traces. Moreover, the recognition accuracy of uWave and 6DMG is gesture-dependent, especially under mobile scenarios.

To account for the impact of the gesture set on recognition, we further compare recognition accuracy on another gesture set, Easy Set, using the same evaluation process as for Confusion Set.

Table 12 shows the confusion matrices for one subject on Easy Set. Table 12a shows that MGRA achieves higher accuracy on static traces from Easy Set than the result in Table 5d from Confusion Set. Only

of gesture

C is classified incorrectly as

B, and 5% of gesture 美 is classified incorrectly as 游. The average accuracy is

.

Table 12b shows that MGRA also achieves a higher average accuracy of

on Easy Set traces under mobile scenarios. Recall that MGRA's features were enumerated and selected entirely on Confusion Set; the high classification accuracy on Easy Set therefore confirms the gesture scalability of MGRA.

Table 13 reports the average accuracy over all subjects and all gestures. All approaches achieve higher accuracy on Easy Set than on Confusion Set. For MGRA, the recognition accuracy decreases by only

and

, from static to mobile test traces for the two gesture sets, respectively, whereas the other approaches drop by more than

. This confirms that MGRA adapts better to mobile scenarios than uWave and 6DMG. On Easy Set, MGRA maintains an accuracy higher than

under both static and mobile scenarios. This indicates that if end users put some effort into gesture design, MGRA can achieve high recognition accuracy in both static and mobile scenarios.

One might ask why 6DMG, which exploits readings from both the accelerometer and the gyroscope, performs worse than MGRA. There are two main reasons. First, MGRA extracts features from the time domain, the frequency domain and SVD analysis, whereas 6DMG extracts features only from the time domain. Second, MGRA applies mRMR to determine the feature impact order under both static and mobile scenarios and finds the best intersection of the two orders. mRMR supports classification accuracy by selecting features with the highest relevance to the target class and minimal redundancy among themselves.
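The mRMR criterion mentioned above can be illustrated with a greedy selection loop. This sketch uses the difference form of mRMR (relevance minus mean redundancy, both measured by mutual information in nats) on already-discretized features; how MGRA discretizes its features and merges the static and mobile orders (Algorithm 1) is not reproduced here, and the function names are hypothetical.

```python
import math
from collections import Counter
from typing import List, Sequence


def mutual_information(x: Sequence[int], y: Sequence[int]) -> float:
    """Empirical mutual information (nats) between two discrete sequences."""
    n = len(x)
    px, py, pxy = Counter(x), Counter(y), Counter(zip(x, y))
    return sum((c / n) * math.log((c / n) / ((px[a] / n) * (py[b] / n)))
               for (a, b), c in pxy.items())


def mrmr_order(features: List[Sequence[int]], labels: Sequence[int], k: int) -> List[int]:
    """Greedily pick k feature indices maximizing relevance - mean redundancy."""
    remaining = list(range(len(features)))
    selected: List[int] = []
    while remaining and len(selected) < k:
        def score(f: int) -> float:
            relevance = mutual_information(features[f], labels)
            if not selected:
                return relevance
            redundancy = sum(mutual_information(features[f], features[s])
                             for s in selected) / len(selected)
            return relevance - redundancy
        best = max(remaining, key=score)  # ties resolve to the earliest index
        selected.append(best)
        remaining.remove(best)
    return selected
```

In the test below, feature 2 duplicates feature 0, so once feature 0 is chosen its redundancy cancels its relevance and the independent feature 1 is preferred.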

7.4. Online Evaluation

Energy consumption is one of the major concerns for smartphone applications [20], and real-time response is also important for user-friendly interaction with mobile devices. Therefore, we compare the costs of MGRA, best-uWave, 10-uWave and 6DMG on the LG Nexus 5. We measure energy consumption with PowerTutor [21] and record the training and classification time of the four recognition methods.

Table 14 shows the cost comparison among the four recognition approaches. MGRA has the shortest classification time and the lowest classification energy cost. Moreover, MGRA's training time is less than 1 min, since it extracts only 27 features in total and trains a multi-class SVM model.

The training and classification times of best-uWave and 10-uWave are much higher, because both use DTW on the raw time series as their core. A raw series contains 200∼400 real values, far more than the 27 feature items of MGRA. Moreover, DTW relies on dynamic programming, whose time complexity is quadratic in the series length. 10-uWave has a much longer classification time than best-uWave, because the test gesture must be compared with all 10 templates of each gesture class, i.e., 90 gesture templates.
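To make the quadratic cost of DTW concrete, here is a back-of-envelope count of dynamic-programming cell updates. It assumes both series have n = 400 samples (the upper end of the range above) and ignores per-cell constant factors; best-uWave compares a test gesture with one template per class (9 in total) and 10-uWave with 90.

```latex
\begin{aligned}
\text{cells per DTW comparison} &= n^2 = 400^2 = 1.6 \times 10^{5},\\
\text{best-uWave (9 templates)} &= 9 \times 1.6 \times 10^{5} = 1.44 \times 10^{6},\\
\text{10-uWave (90 templates)} &= 90 \times 1.6 \times 10^{5} = 1.44 \times 10^{7}.
\end{aligned}
```

These counts indicate only the relative scale of the work; the measured times and energy are those reported in Table 14.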

The training time and energy of 6DMG are much greater than those of MGRA, since 6DMG extracts 41 features per gesture trace and trains the HMM model. The classification time and energy of 6DMG are also greater than those of MGRA, because 6DMG requires both the accelerometer and the gyroscope.