Cutting State Diagnosis for Shearer through the Vibration of Rocker Transmission Part with an Improved Probabilistic Neural Network

Abstract

1. Introduction

2. Related Works

2.1. Feature Extraction Methods

2.2. Optimization and Improvement of PNN

2.3. Discussion

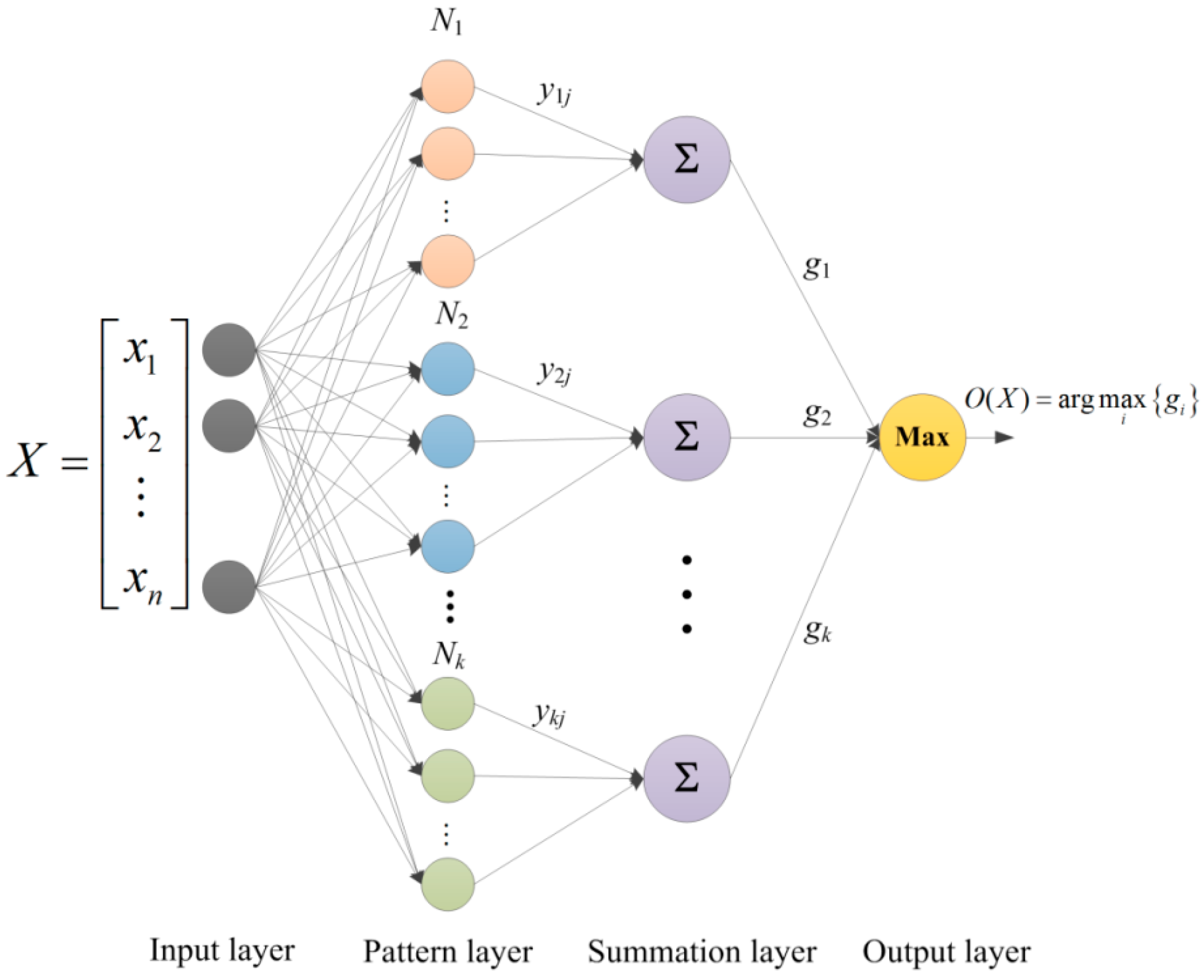

3. Probabilistic Neural Network and Parameter Optimization

3.1. Probabilistic Neural Network

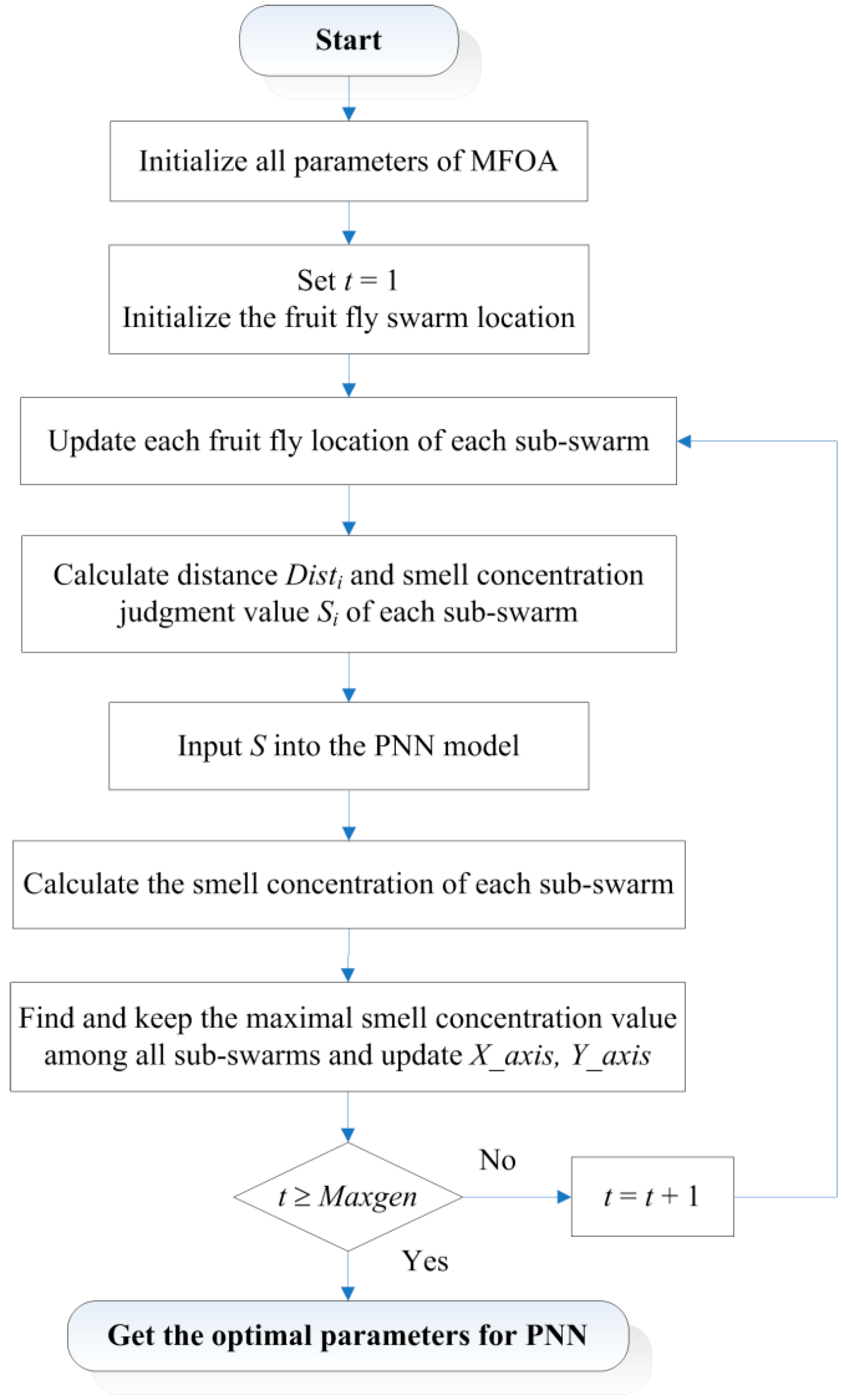

3.2. Modified Fruit Fly Optimization Algorithm

3.3. Parameters Optimization for PNN Using MFOA

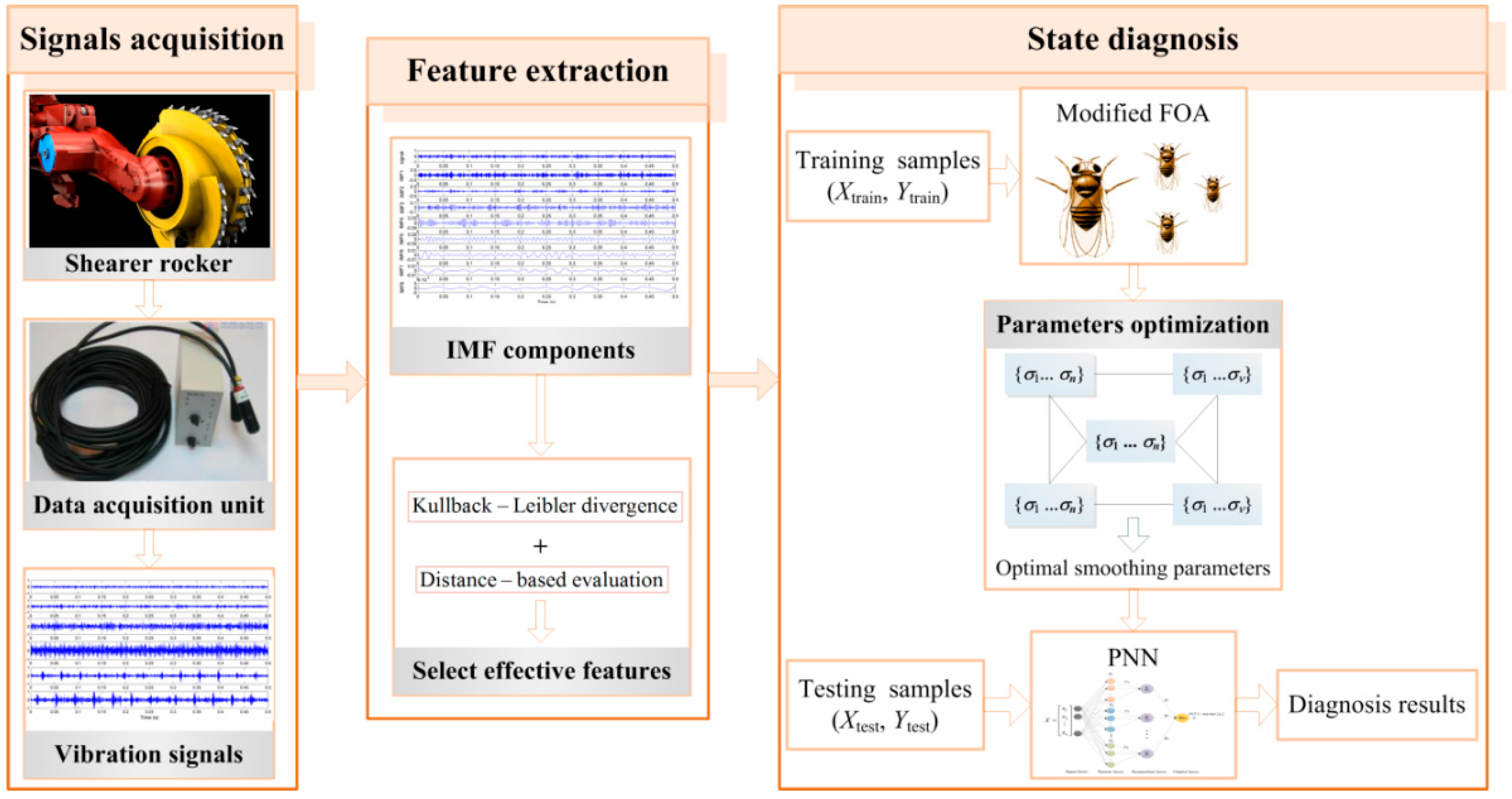

4. Diagnosis Process for Shearer Cutting State

4.1. Vibration Signals Acquisition of Rocker Transmission Part

4.2. Feature Extraction

4.2.1. KLD-Based False Components Identification

4.2.2. Distance-Based Feature Selection

4.3. State Diagnosis Process

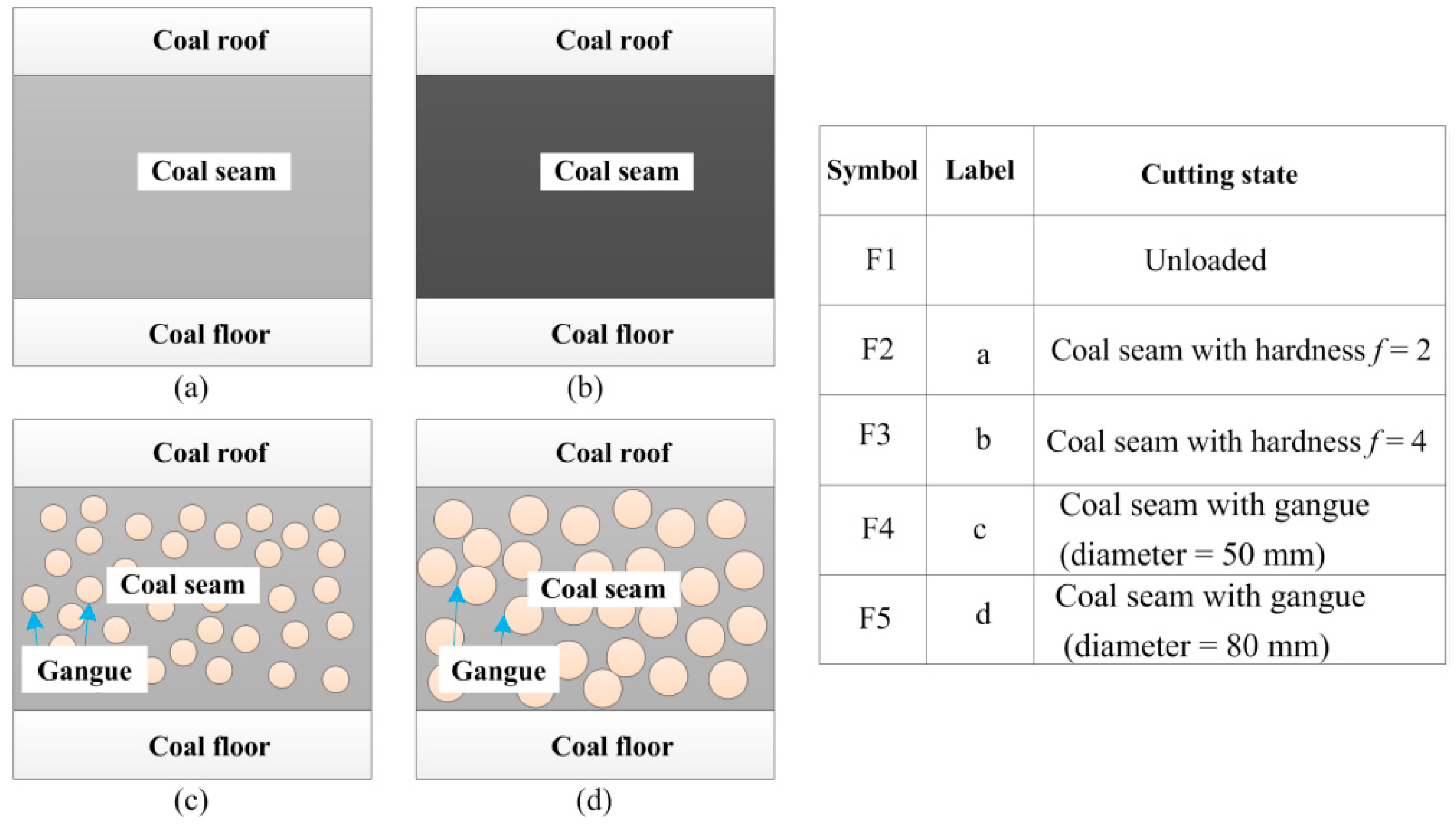

5. Simulation Studies

5.1. Samples Preparation

5.2. Simulation Results of Proposed Method

5.3. Comparison with Other Methods

5.4. Further Studies for Different Parameter Settings

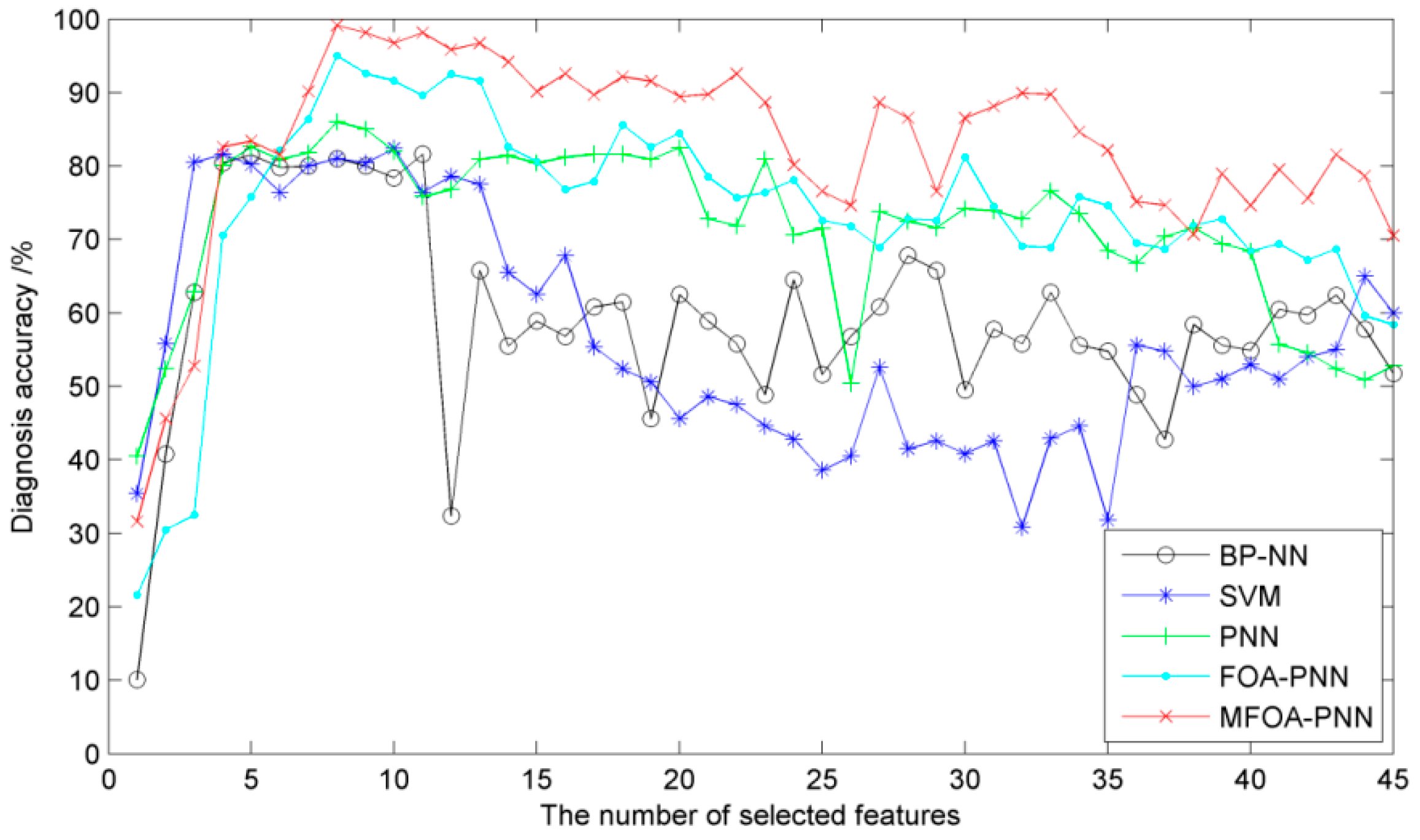

5.4.1. The Number of Selected Features

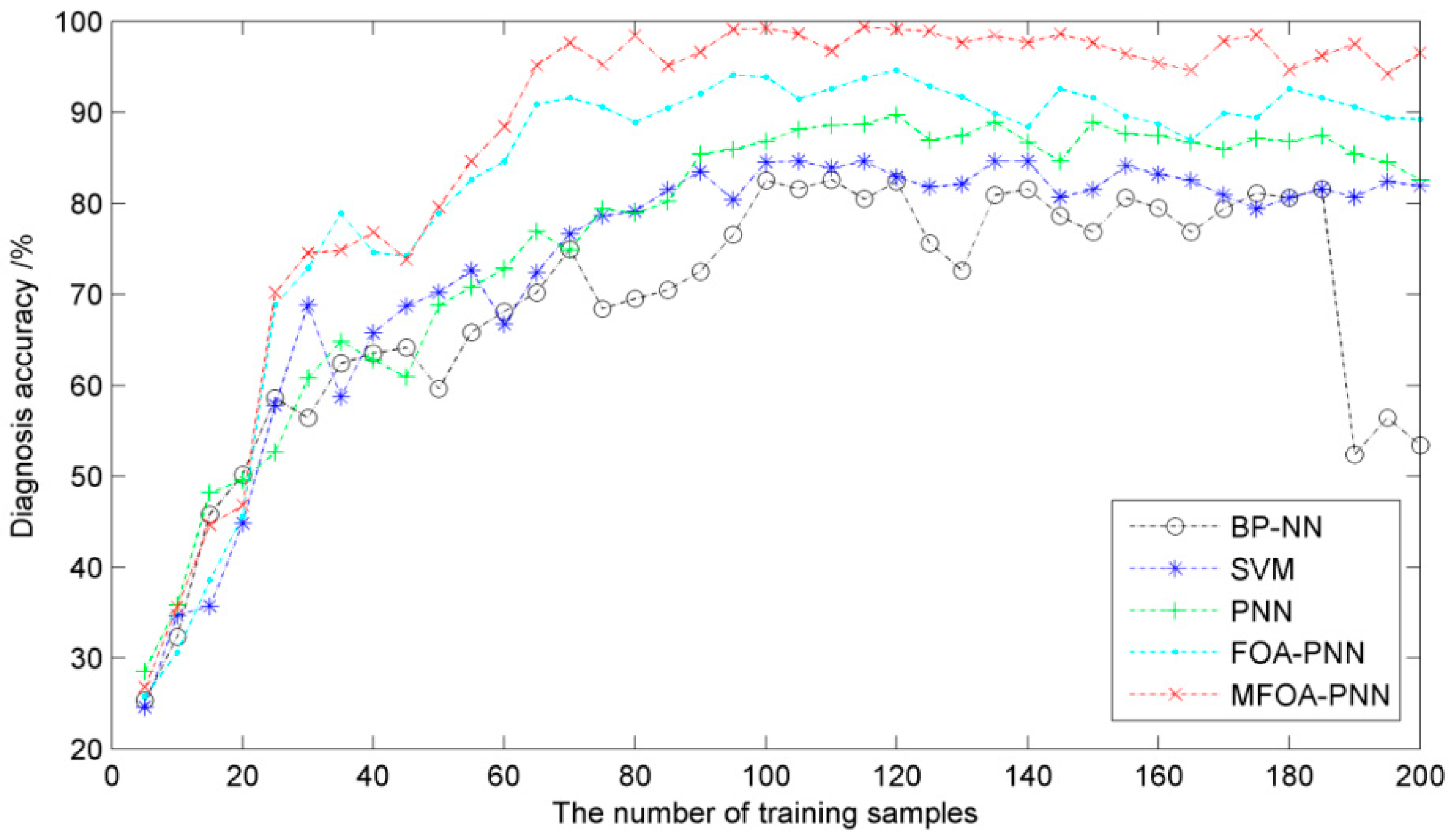

5.4.2. The Number of Training Samples

6. Conclusions and Future Work

Acknowledgments

Author Contributions

Conflicts of Interest


| Feature ID | 5 | 9 | 16 | 21 | 28 | 35 | 36 | 39 |
|---|---|---|---|---|---|---|---|---|
| Feature type | f5 of signal | f9 of signal | f7 of IMF1 | f3 of IMF2 | f1 of IMF3 | f8 of IMF3 | f9 of IMF3 | f3 of IMF4 |
| βi | 5.54 | 4.28 | 3.86 | 3.74 | 4.82 | 3.75 | 4.81 | 3.96 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Si, L.; Wang, Z.; Liu, X.; Tan, C.; Zhang, L. Cutting State Diagnosis for Shearer through the Vibration of Rocker Transmission Part with an Improved Probabilistic Neural Network. Sensors 2016, 16, 479. https://doi.org/10.3390/s16040479
