Analysis of Camera Arrays Applicable to the Internet of Things

Abstract

1. Introduction

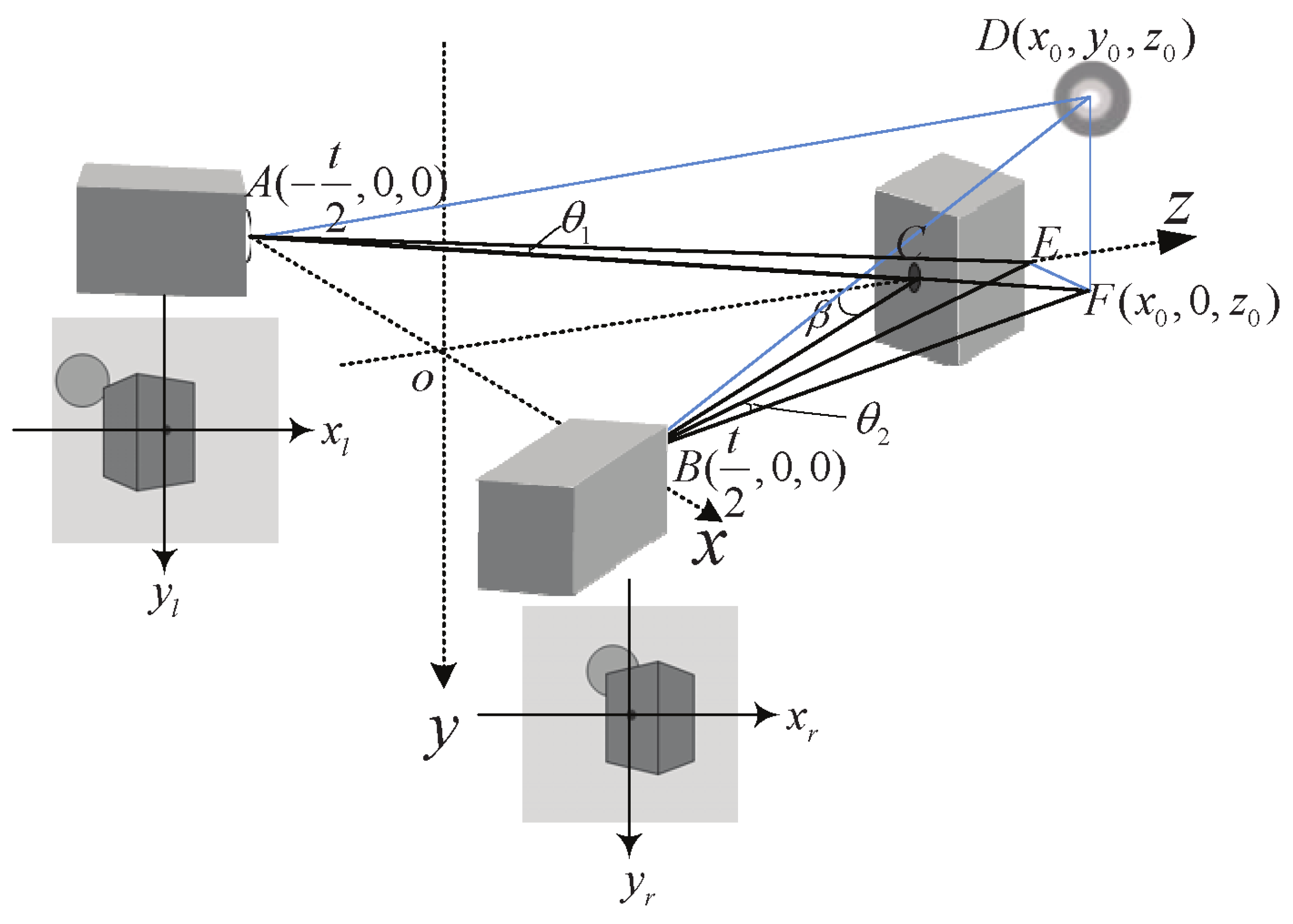

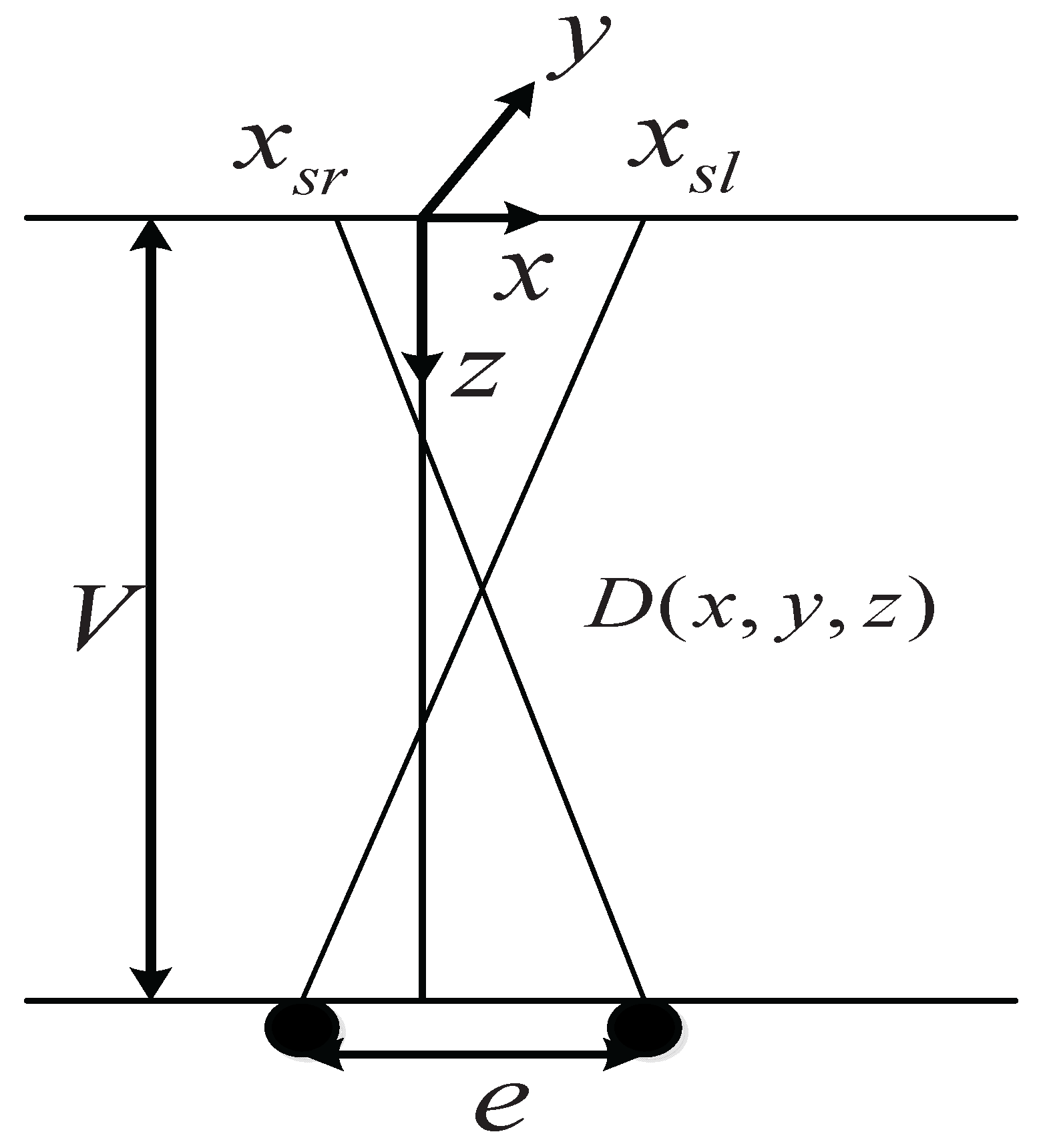

2. Camera Array Models

2.1. Converged Camera Array Model

2.2. Parallel Camera Array Model

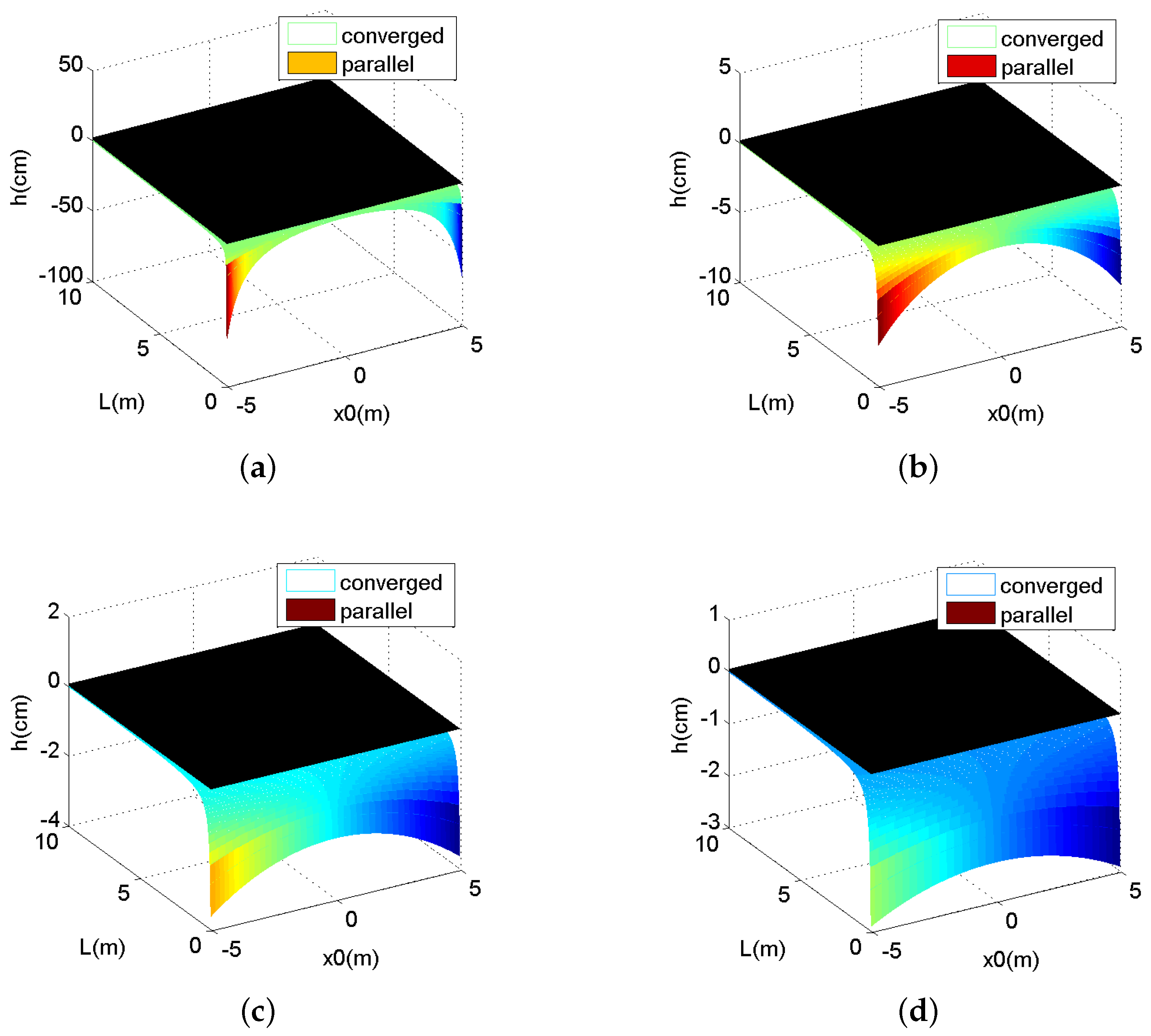

2.3. Model Analysis

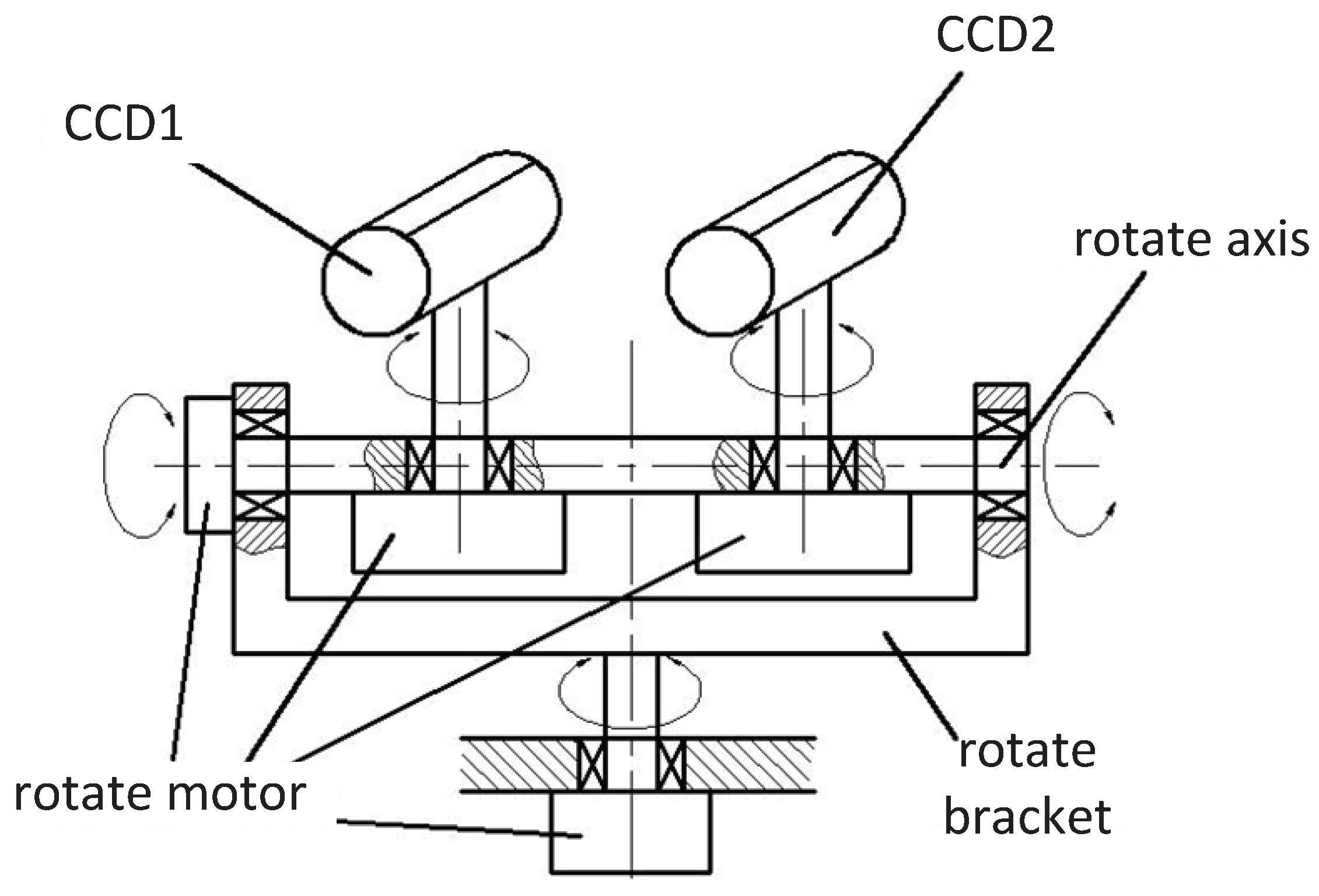

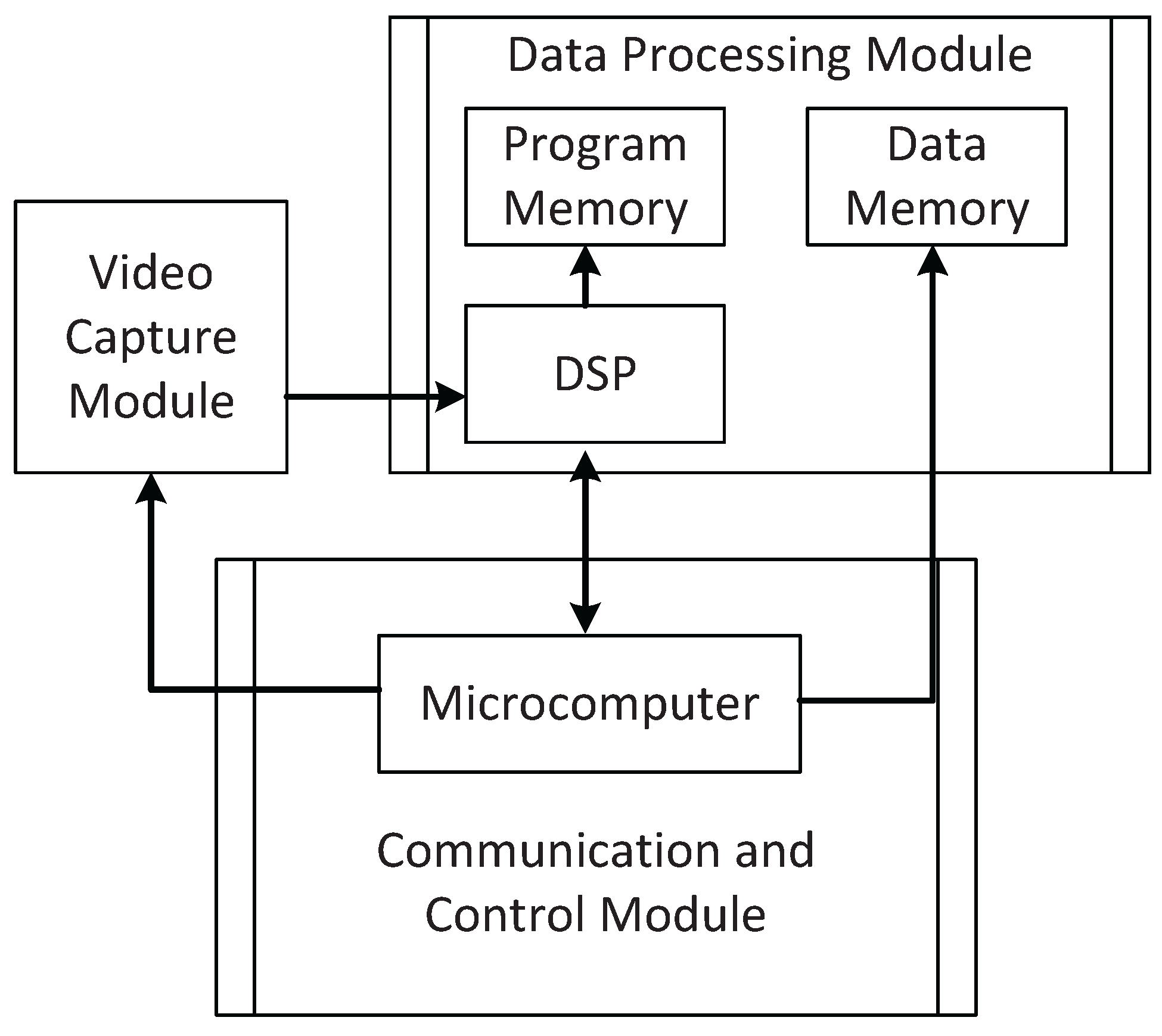

3. Auto-Converged Camera Array Realization

4. Auto-Converged Experiment and Measurement

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Figure panels: 1 m, 2 m, 3 m, 4 m, 5 m, 6 m, 7 m, 8 m, 9 m, 10 m.

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Yang, J.; Xu, R.; Lv, Z.; Song, H. Analysis of Camera Arrays Applicable to the Internet of Things. Sensors 2016, 16, 421. https://doi.org/10.3390/s16030421