Performance Analysis of Visible Light Communication Using CMOS Sensors

Abstract

1. Introduction

2. Fundamentals of the System

2.1. Operation of CMOS Sensors and Camera

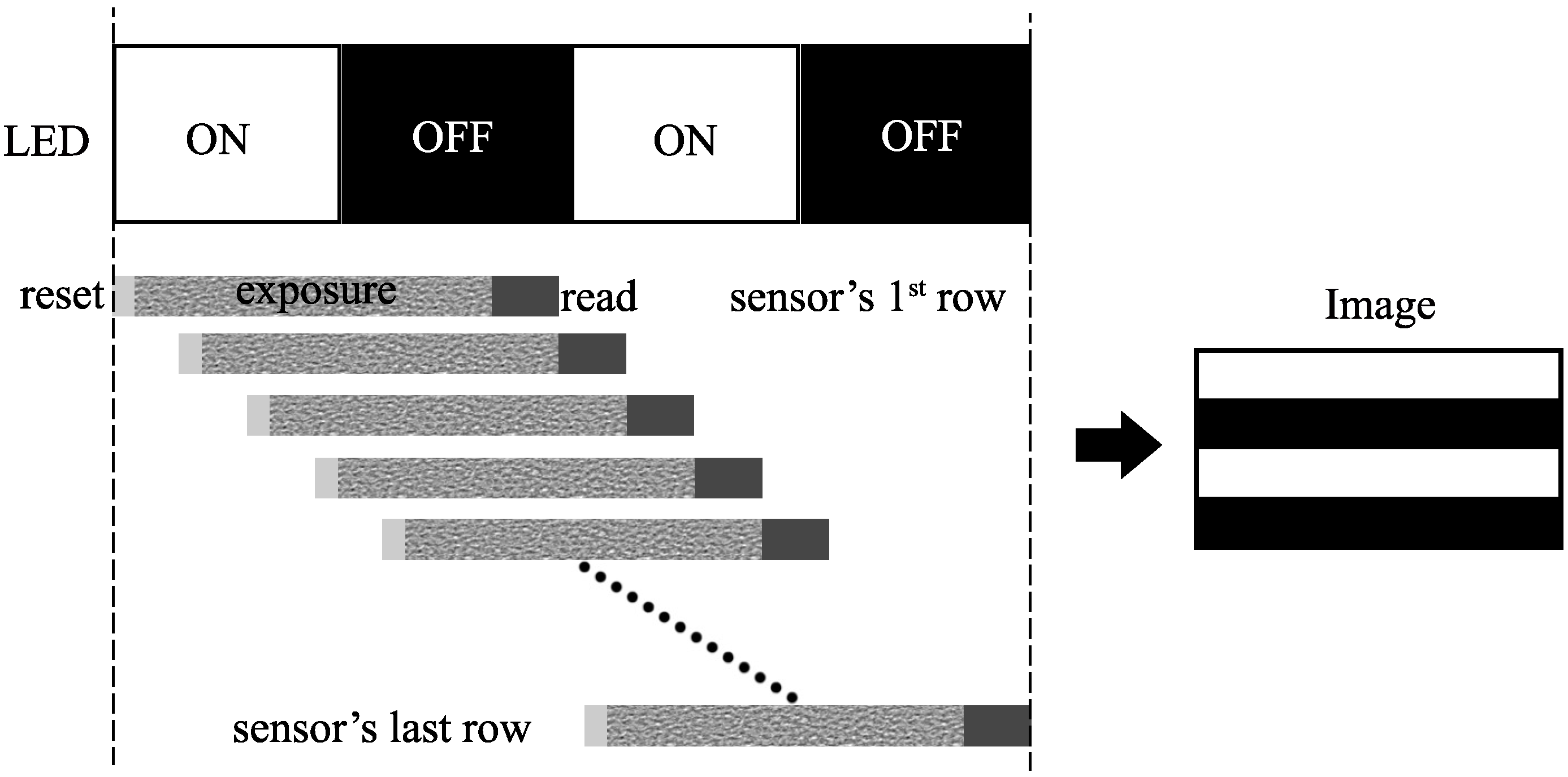

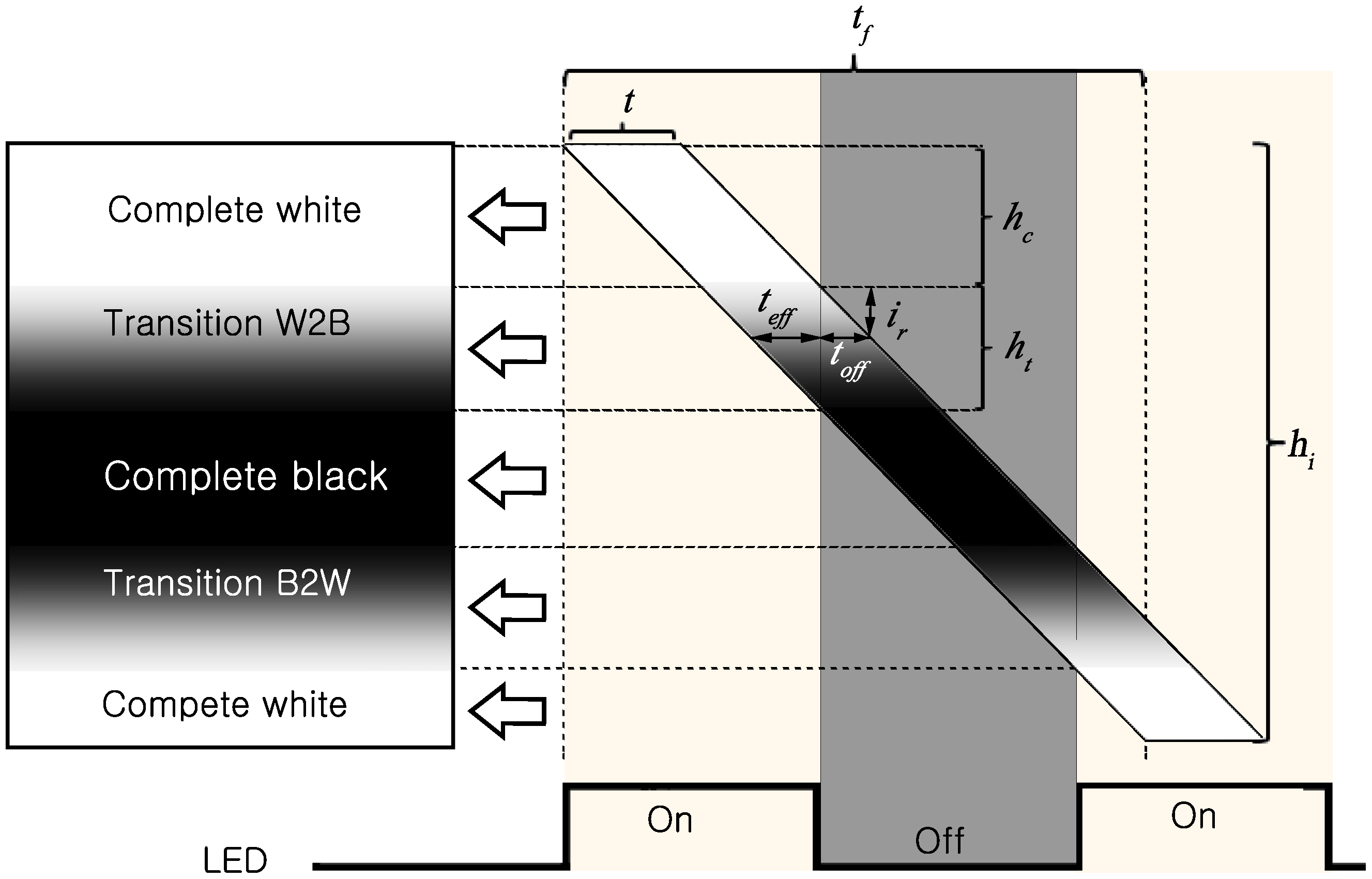

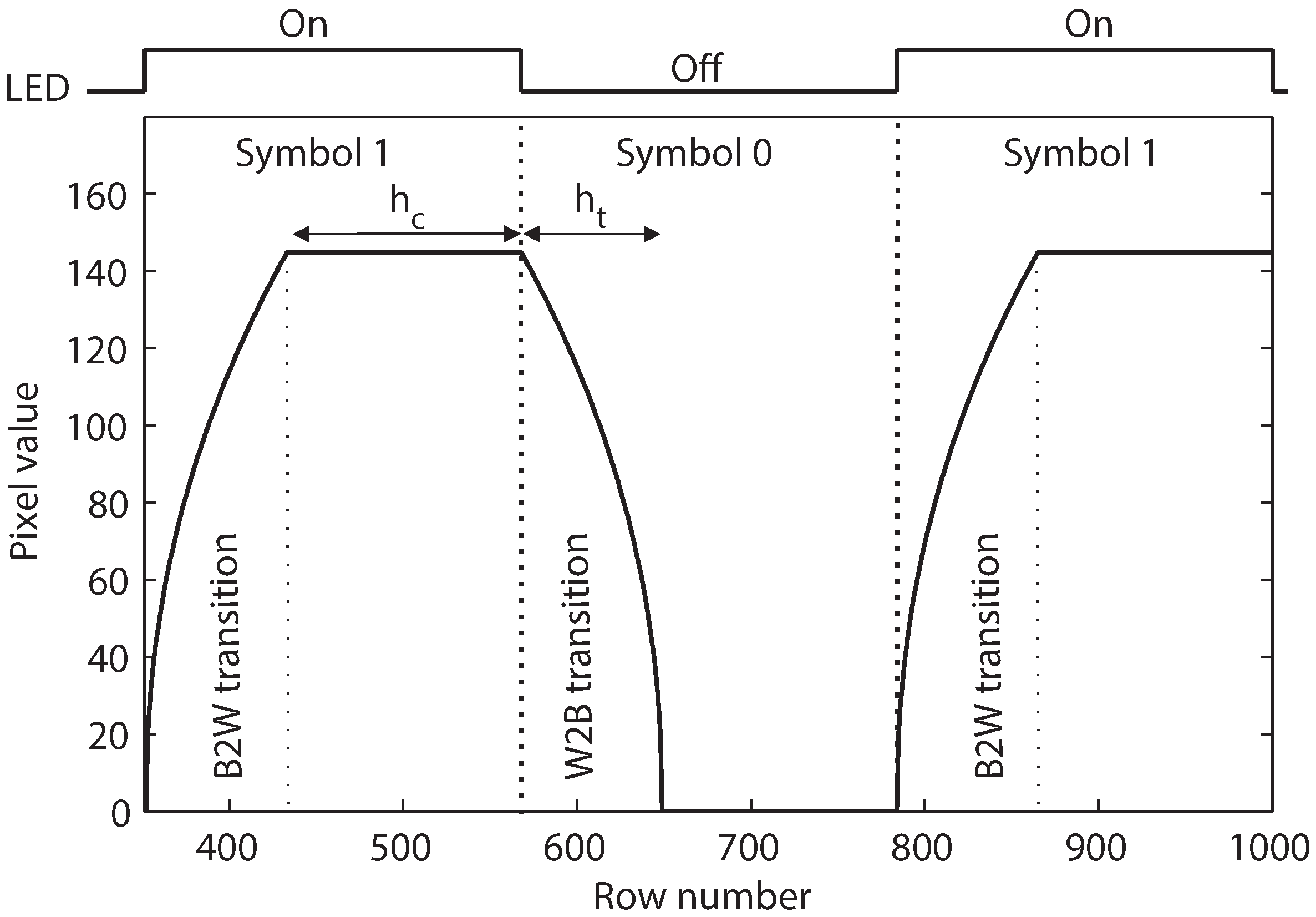

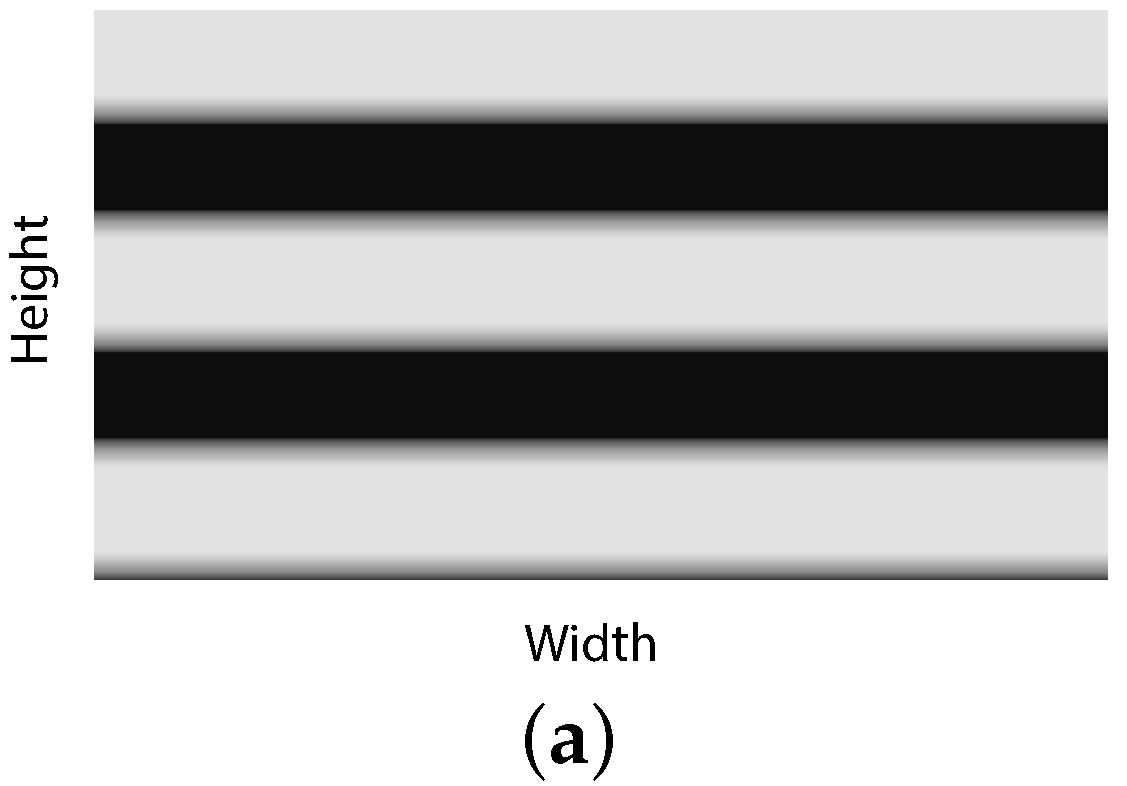

2.1.1. Rolling Shutter Mechanism and Its Advantage in VLC

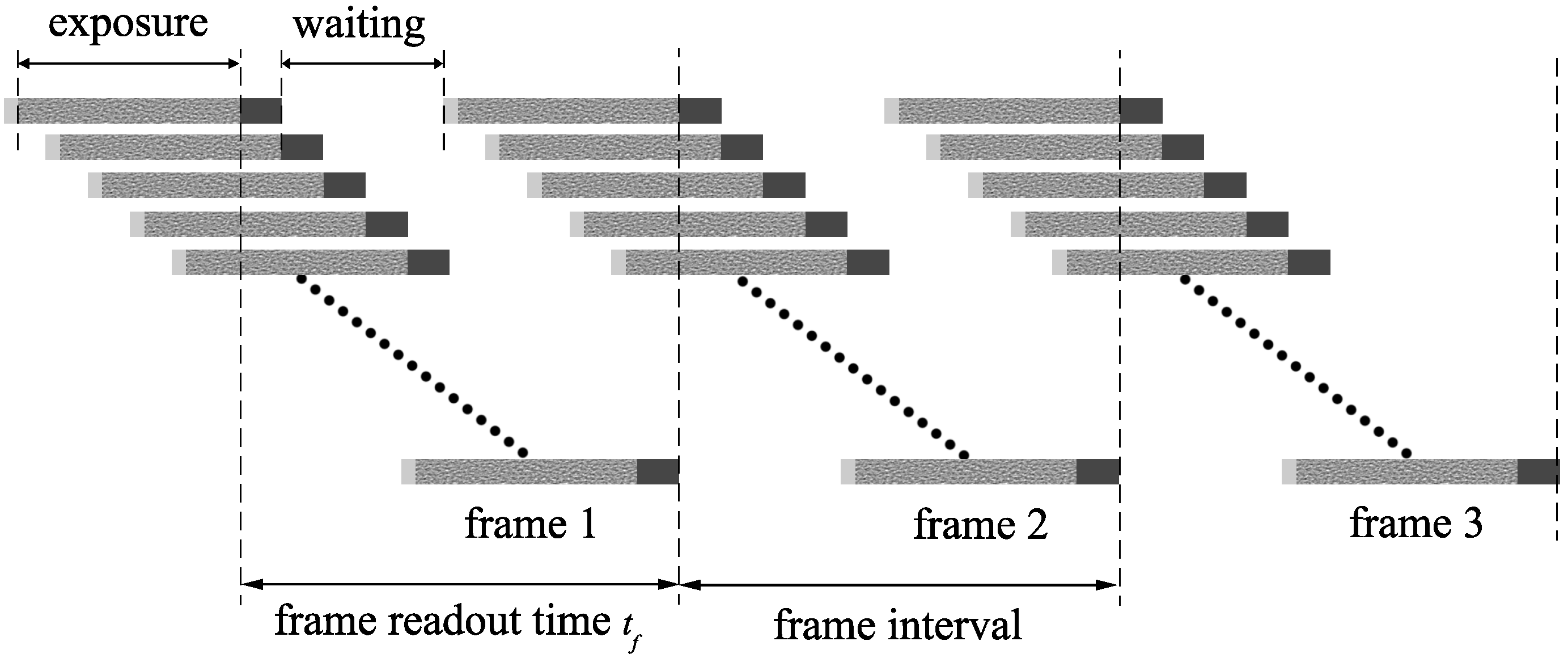

2.1.2. Frame Rate in a CMOS Sensor Camera

2.2. Calculating Pixel Value

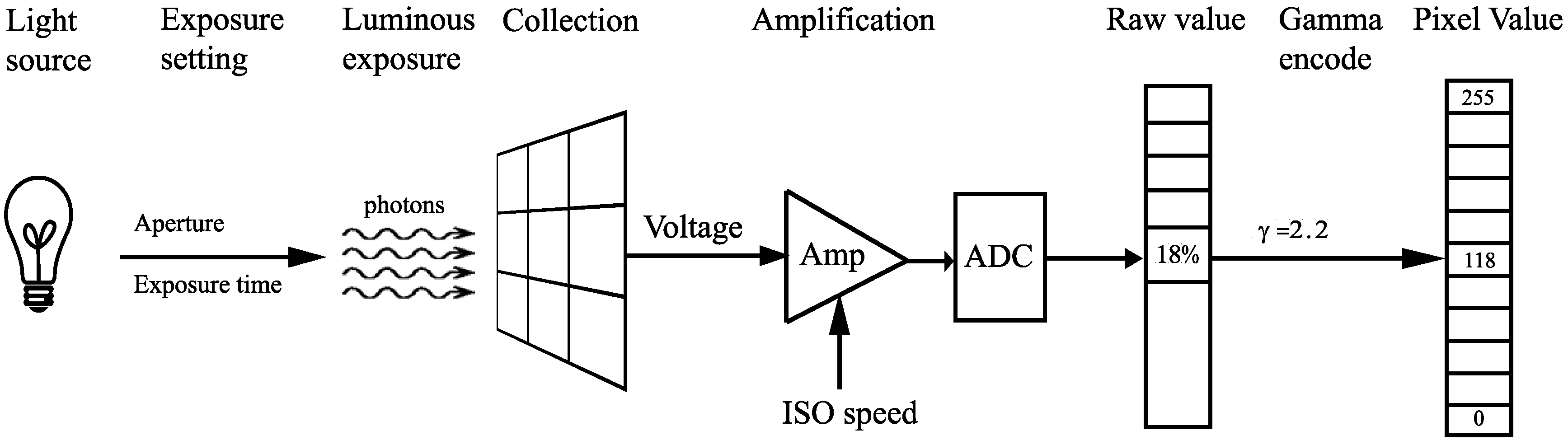

2.2.1. The Whole Process from Receiving Light to Calculating the Pixel Value in the Image Sensor

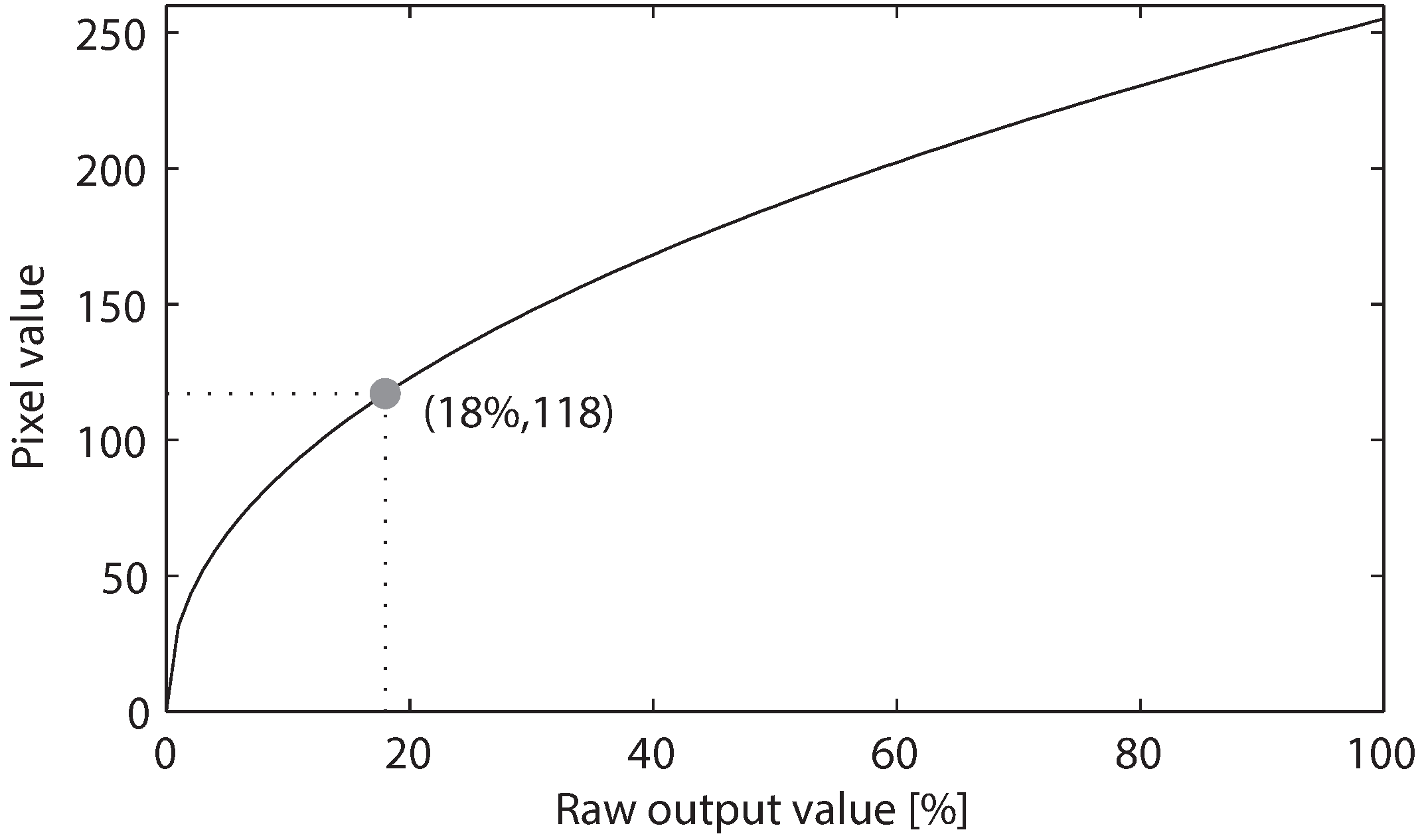

2.2.2. Calculating the Pixel Value from the Raw Output Value

2.2.3. Calculating the Pixel Value from the Luminous Exposure Ratio

2.2.4. Calculating Luminous Exposure Ratio from Exposure Difference

2.2.5. Photometry of LED and Ambient Light

Measurement of Radiated Light Intensity

Measurement of Incident Light Intensity

2.2.6. Calculating Indicated Exposure Value from Given Camera Settings and Light Source Intensity

Indicated Exposure Value for Radiated Light

Indicated Exposure Value for Incident Light

Indicated Exposure Value for the Combined Light Source

3. Performance Analysis of the System

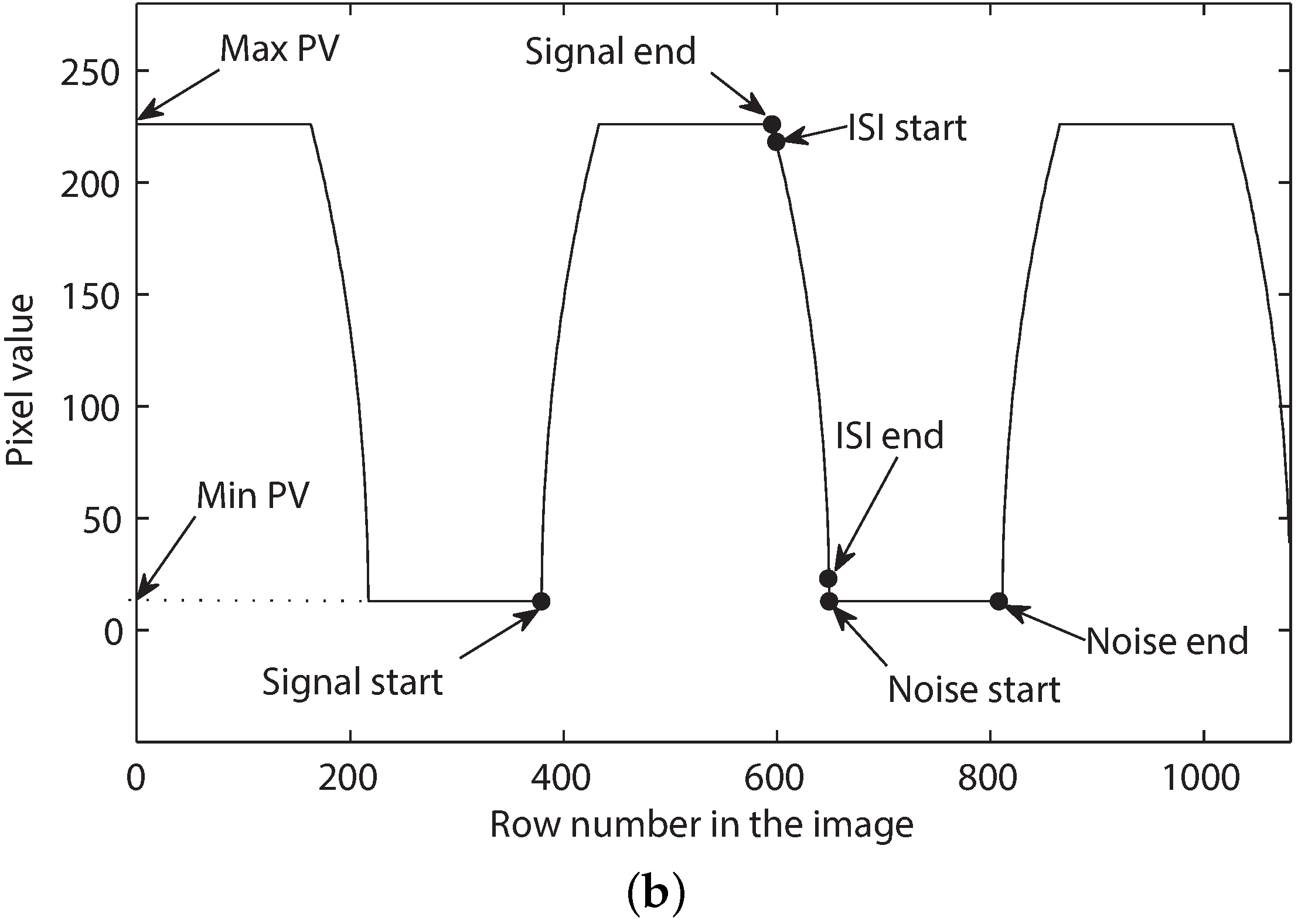

3.1. Signal Quality

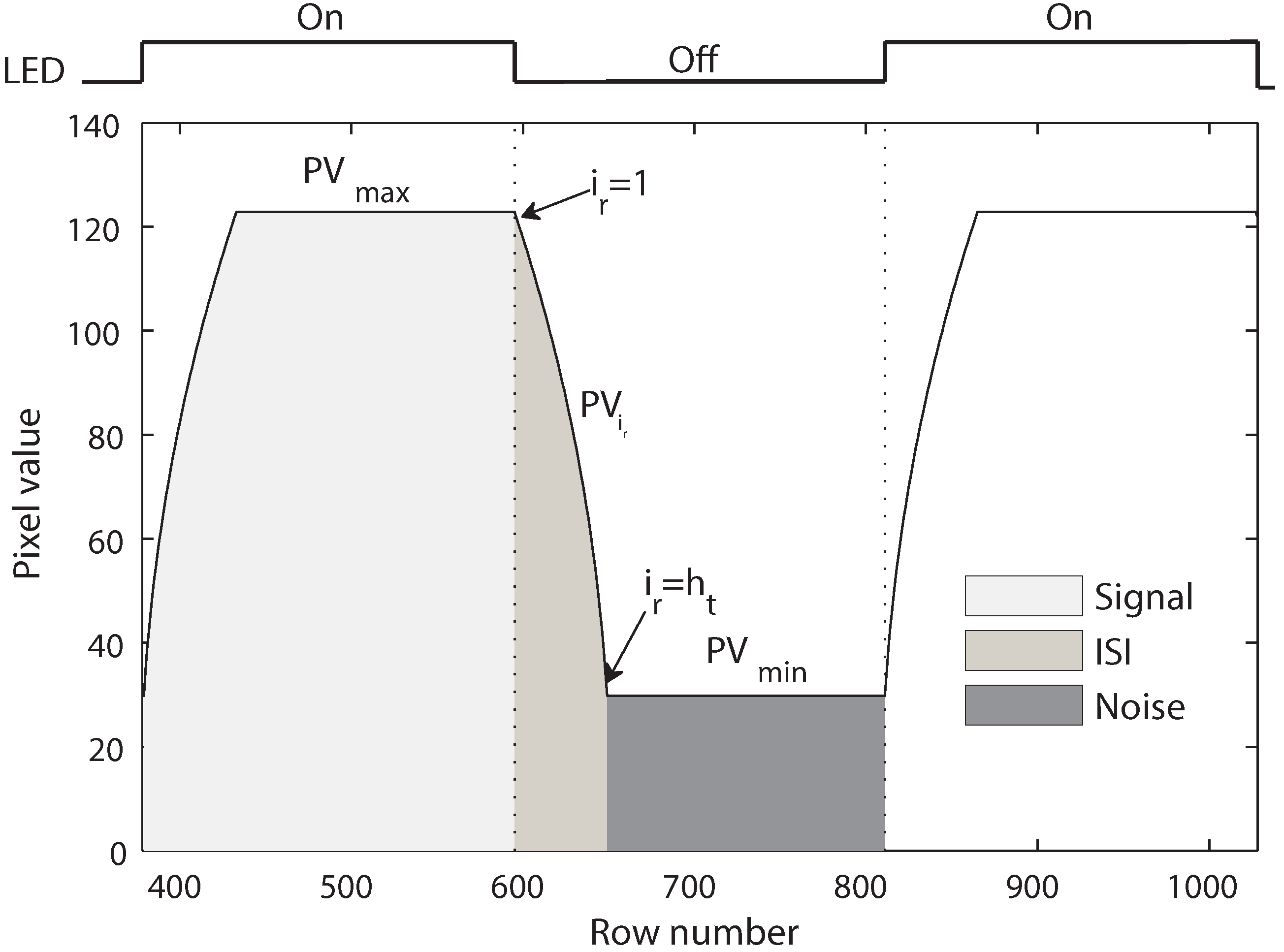

3.1.1. Intersymbol Interference

3.1.2. Ambient Light Noise

3.1.3. SINR

3.2. Data Rate

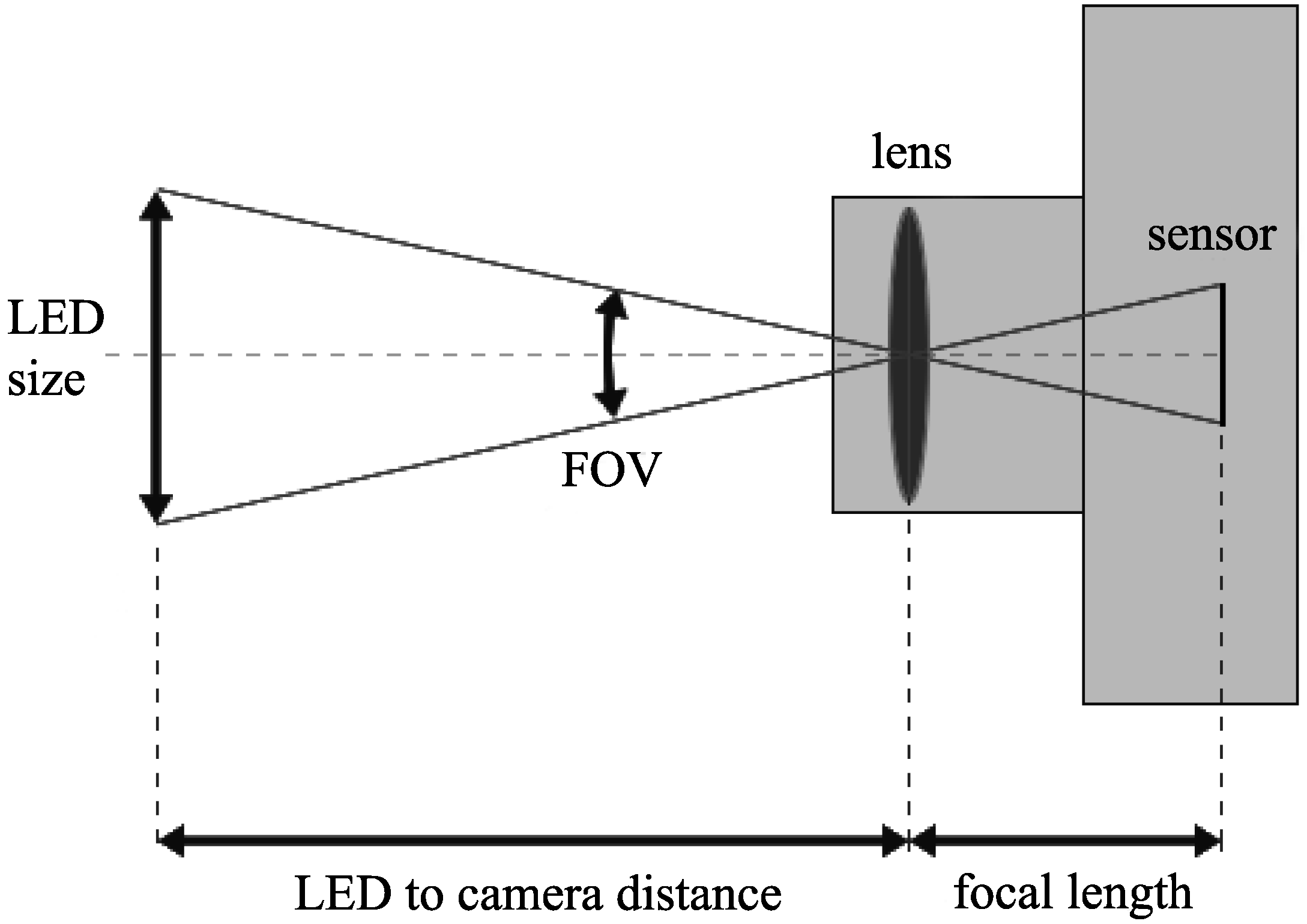

3.3. Required Distance from LED to Camera

4. Simulation

4.1. Simulation Environment

4.2. Simulation and Calculation Procedure

4.3. Simulation Results

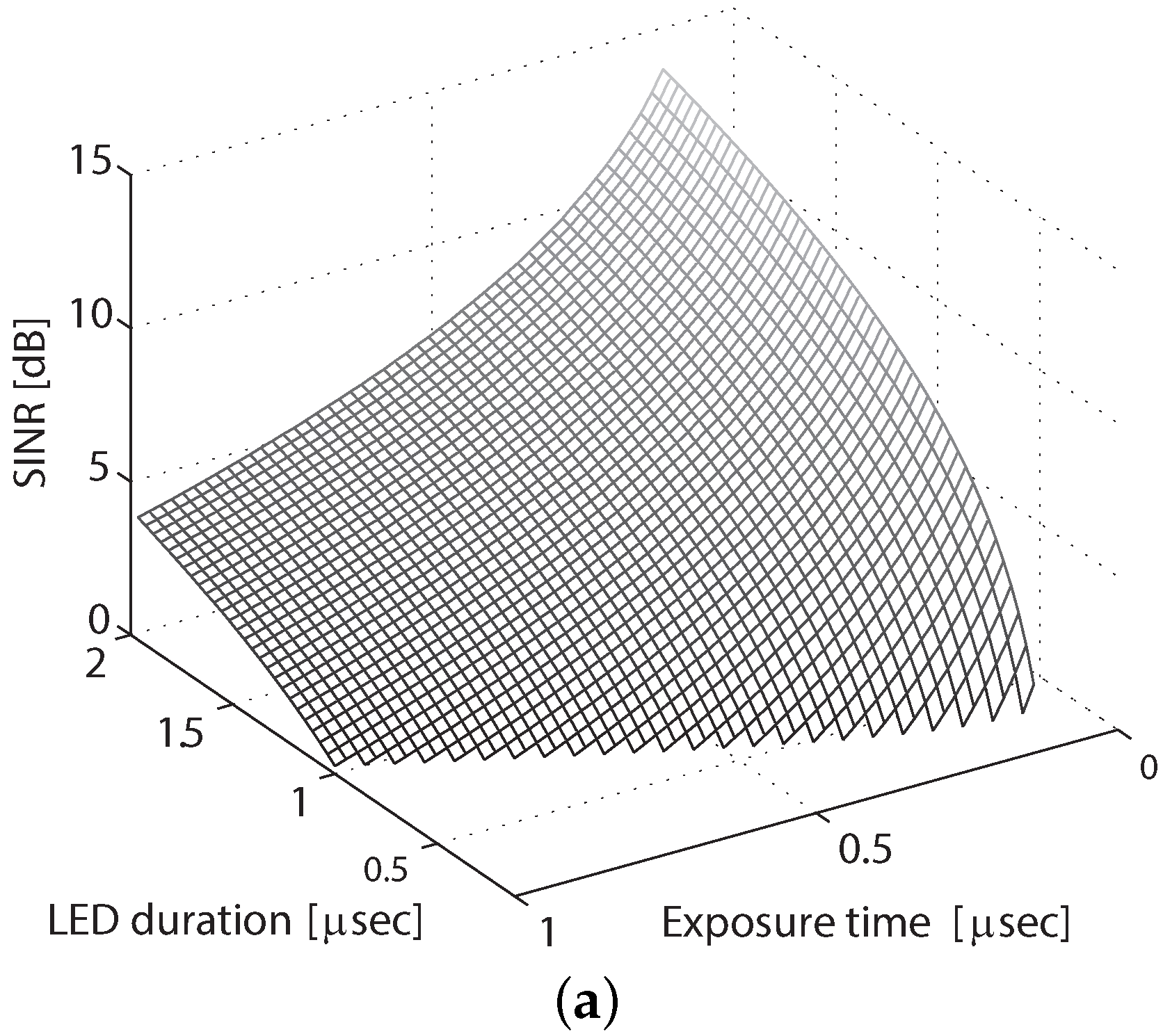

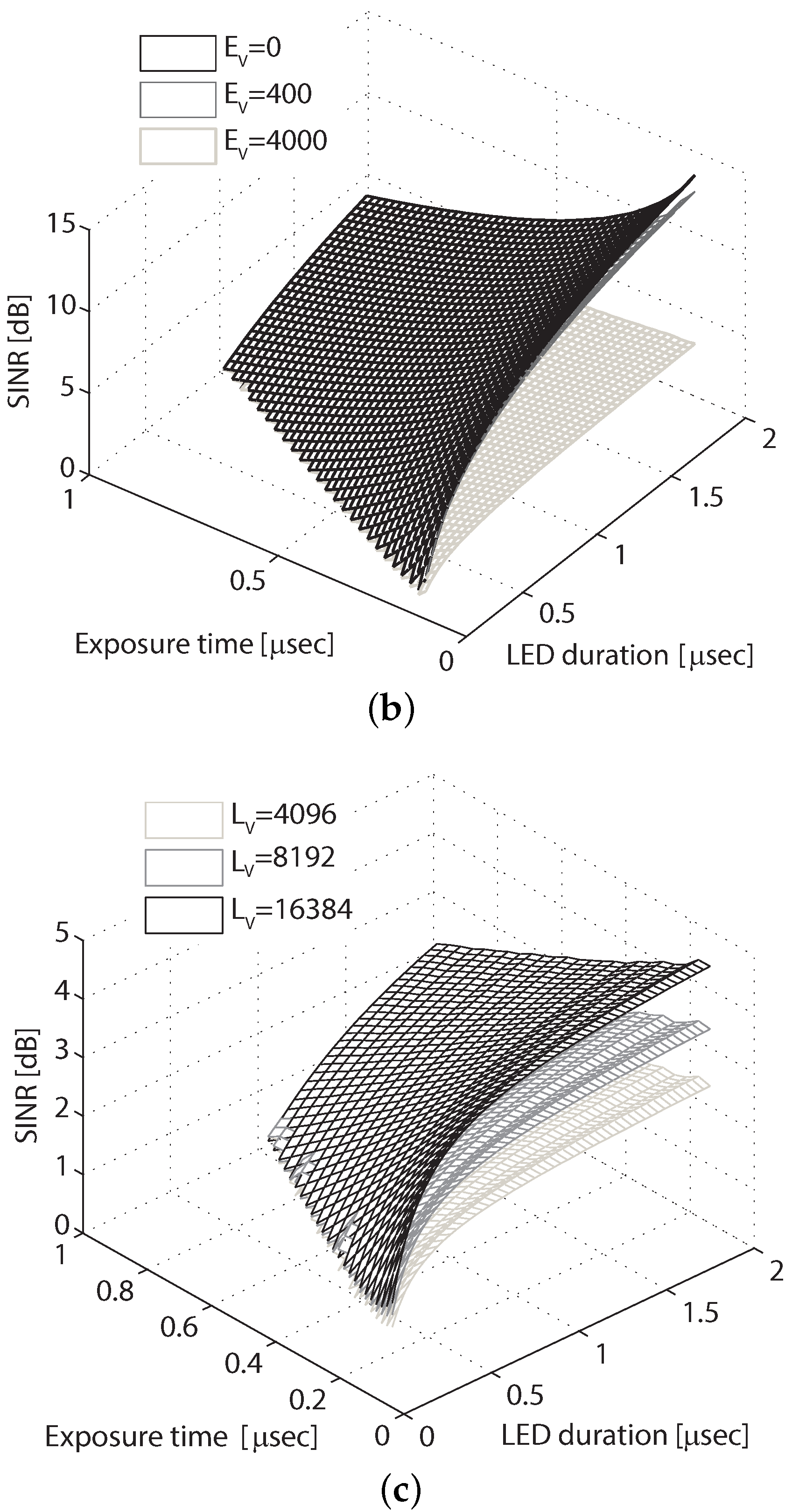

4.3.1. SINR

4.3.2. Data Rate

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix A Calculation of the Maximum Pixel Value

Appendix B Calculation of the Minimum Pixel Value

Appendix C Pixel Value of a Row in a Transition Band

Appendix D Derivative of SINR with Respect to Exposure Time

Appendix E Derivative of SINR with Respect to LED Frequency

Appendix F Derivative of SINR with Respect to Ambient Light Illuminance

Appendix G Derivative of SINR with Respect to LED Luminance

| Illuminance (lux) | Environment |
|---|---|
| | Total starlight |
| 1 | Full moon |
| 80 | Hallways in office buildings |
| 100 | Very dark overcast day |
| 300–500 | Office lighting |
| 400 | Sunrise or sunset |
| 1000 | Overcast day |
| 10,000–25,000 | Full daylight |
| 32,000–130,000 | Direct sunlight |

| Parameter | Value |
|---|---|
| Modulation | OOK |
| LED luminous intensity | 0.73 (cd) |
| LED area | 10 (cm²) |
| Indoor office illuminance | 400 (lux) |
| Outdoor illuminance | 40,000 (lux) |
| LED luminance | 4096 to 16,384 (cd/m²) |
| ISO speed | 100 |
| Lens aperture | 4 |
| γ | 2.22 |
| K constant | 12.5 |
| C constant | 98 |
| Sensor resolution | |
| Frame readout time | 1/100 (s) |
| LED frequency | 500 to 8000 (Hz) |
| Exposure time | 1/8000 to 1/1000 (s) |
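
As a rough illustration of how the parameters above relate to the indicated exposure values named in Section 2.2.6, the following minimal sketch applies the standard exposure-meter relations: N²/t = L·S/K for a reflected-light (luminance) reading and N²/t = E·S/C for an incident-light (illuminance) reading. It is a sketch under stated assumptions rather than the authors' procedure: the function names are hypothetical, the LED luminance is assumed to be in cd/m², and it is assumed that these textbook relations are the ones the paper builds on.

```python
import math

# Values taken from the simulation parameter table.
ISO_SPEED = 100
F_NUMBER = 4.0
K_CONSTANT = 12.5   # reflected-light meter calibration constant
C_CONSTANT = 98.0   # incident-light meter calibration constant


def settings_ev(f_number, exposure_time_s):
    """Exposure value implied by the camera settings: log2(N^2 / t)."""
    return math.log2(f_number ** 2 / exposure_time_s)


def indicated_ev_radiated(luminance_cd_m2, iso_speed=ISO_SPEED, k=K_CONSTANT):
    """Indicated EV for a luminous source: log2(L * S / K).

    Assumes the luminance L is expressed in cd/m^2.
    """
    return math.log2(luminance_cd_m2 * iso_speed / k)


def indicated_ev_incident(illuminance_lux, iso_speed=ISO_SPEED, c=C_CONSTANT):
    """Indicated EV for ambient light: log2(E * S / C), with E in lux."""
    return math.log2(illuminance_lux * iso_speed / c)


# Indicated EV at both ends of the LED luminance range in the table.
for luminance in (4096, 16384):
    print(f"LED {luminance} cd/m^2 -> EV {indicated_ev_radiated(luminance):.2f}")

# Indicated EV for the indoor and outdoor illuminance levels in the table.
for illuminance in (400, 40000):
    print(f"Ambient {illuminance} lux -> EV {indicated_ev_incident(illuminance):.2f}")

# EV implied by the camera settings at both ends of the exposure time range.
for exposure_time in (1 / 1000, 1 / 8000):
    print(f"t = {exposure_time:.6f} s -> EV {settings_ev(F_NUMBER, exposure_time):.2f}")
```

With ISO 100 and K = 12.5, the two ends of the LED luminance range (4096 and 16,384 cd/m²) map to indicated exposure values of exactly 15 and 17, since L·S/K equals 2^15 and 2^17 respectively. The difference between an indicated EV and the EV implied by the camera settings is the exposure difference in stops.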

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Do, T.-H.; Yoo, M. Performance Analysis of Visible Light Communication Using CMOS Sensors. Sensors 2016, 16, 309. https://doi.org/10.3390/s16030309
