1. Introduction

Air quality has historically been measured by ground stations. Later, manned aircraft and satellites were used to collect the necessary measurements. Unfortunately, airborne and satellite sensors are very costly, which prevents their daily use. Most recently, remotely controlled unmanned aerial vehicles (UAVs) equipped with various sensors have been used to obtain up-to-date information with higher spatial and temporal resolution at a reasonable equipment price. The use of UAVs for air quality monitoring is attracting growing attention from both the research community and industry. The most common uses of UAVs are air pollution and emission monitoring [1], climate change monitoring [2], emergency response [3], disaster monitoring (e.g., forest fires [4,5] or chemical factory explosions [6]), area monitoring [7], and wildlife monitoring and protection [8,9]. In this work, we focus on autonomous UAVs that can collect the required data in a coordinated manner without any human aid.

The reason to move from remotely controlled UAVs to autonomous UAVs is that human operators (pilots) seem to be a bottleneck of the system when several UAVs collaborate on a single mission [10]. Each operator or team of operators is responsible for one UAV and controls its actions. The human operators communicate among themselves and coordinate their actions in order to achieve a common goal. Such an approach is limited by the human interactions and the human control of the UAVs. Therefore, one of the main goals of research on UAVs is to improve the management of UAVs such that an operator or a group of operators can easily control larger groups of UAVs. This can be achieved by two means:

In this article, we provide a follow-up to our previous work [10,11,12,13] on advanced Human-Machine Interfaces (HMIs) using planning of alternatives, which is aimed at the first approach, and we explore solutions tackling the second approach, also with the help of planning of alternative plans. We have already demonstrated how multiagent control algorithms can be used to control multiple UAVs [14]; the method proposed in this article continues in this direction and improves the UAV planning capability by utilizing planning of alternatives.

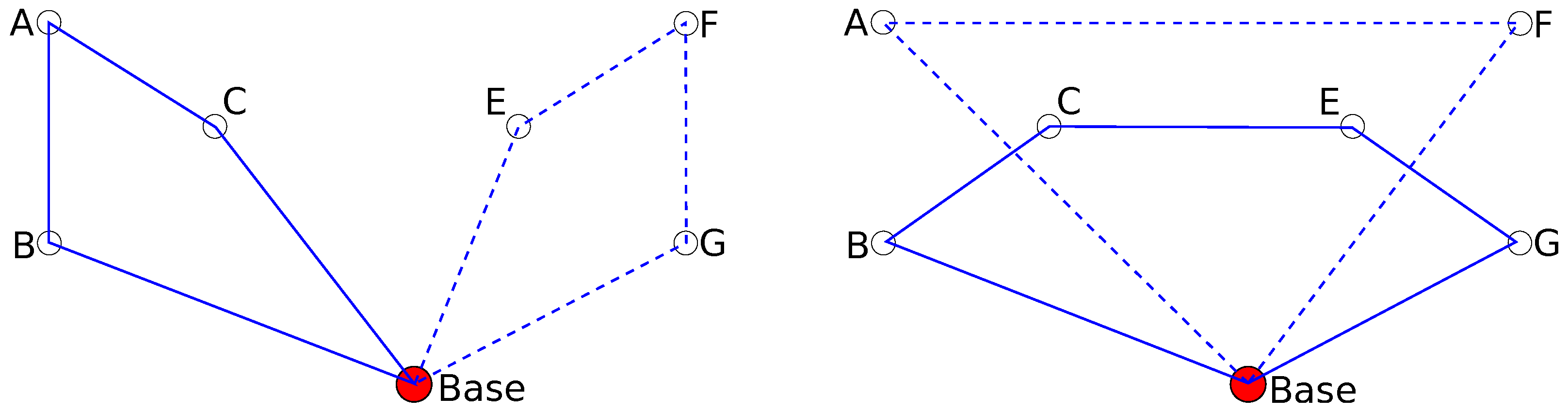

An illustrative example of planning alternatives for trajectory planning is shown in Figure 1. There are two UAVs and six waypoints, each of which needs to be visited by either UAV. Planning of alternatives can propose several possible solutions to the task. Two of them are shown in the figure.

Unlike in the case of making alternative plans for human operators, where the utility function defining the quality of the solution is unknown (or known only implicitly to the operator), in the case of fully autonomous UAVs the utility function is known, but the optimization problem is too complex to be solved optimally. Our proposed approach is to use planning of alternatives to provide a diverse set of trajectories out of which the final trajectories for all the UAVs are chosen. Since the set of diverse trajectories is processed automatically, it can be much larger than a set from which a human operator is expected to choose. We named our approach diverse planning.

Although the solution is not limited to it, our task in this work is to monitor different air pollutants across a city. Although dense monitoring is necessary to detect pollution sources, it is overkill for uniform continuous monitoring. Different pollutants are required to be monitored at different locations. For example, near main road junctions, it is necessary to monitor gases produced during combustion: carbon dioxide (CO₂), methane (CH₄), and nitrous oxide (N₂O), while near schools it is necessary to monitor ultrafine particles [15], carbon monoxide (CO), and sulfur dioxide (SO₂), which negatively affect human health [16].

A UAV or a team of UAVs can also be used for monitoring remote and inaccessible areas, as proposed, e.g., in [1,17,18,19]. In our case, we want to monitor a city (in particular, we use a simulation of the city of Prague), which requires low-altitude flights (ruling out monitoring by conventional aircraft). There is a full range of UAVs that could be used for this task (provided that the legal and regulatory issues [20,21] are solved). These UAVs differ in size, flight range, payload and power capacity, speed, etc. In this study, we do not focus on any particular UAV; the proposed method can easily be used for any type of UAV by correctly setting a few basic parameters. For our purpose we could use, for example, the Meteorological Mini-UAV (M2AV) developed at the Institute of Aerospace Systems, Technical University of Braunschweig, Germany. Its maximum take-off weight is 4.5 kg, including 1.5 kg of payload, with a range of 60 km at a cruising speed of 20 m s⁻¹.

The task presented here is a real-world-inspired application called the Multi-UAV Sensing Problem (MUSP). The goal is to gather air quality data for a large area using a group of UAVs. Different types of locations are required to be monitored by different sensors. Each UAV can be equipped with multiple sensors, but the weight of each mounted sensor reduces the amount of fuel that can be carried and thus limits the UAV flight range. As the authors of [1] mention:

This overview article implies that our algorithm is the first one considering non-zero sensor weights at such a scale. The solution of the MUSP has to specify which sensors are mounted on which UAV and also plan the flight trajectories, which have to be shorter with each additional mounted sensor. The quality of the solution is measured by the total number of performed measurements.

In MUSP, we can use diverse planning to provide a set of diverse trajectories out of which the most suitable trajectories for the UAVs are chosen. This approach allows us to balance the quality of the solution and the required computational time, which is necessary for large-scale applications.

The article is structured as follows. In Section 2, we formally define the problem of multi-UAV coordination for remote sensing together with two base-line solutions using classical and greedy planning techniques. In Section 3, we present the novel algorithm DivPlan, based on diverse planning techniques, which provides an efficient solution to the defined family of remote sensing problems. In Section 4, we provide a complexity analysis for the three algorithms. Finally, in Section 5, we experimentally compare the proposed algorithm with the two base-line approaches and also evaluate the algorithm in a simulation of a real-world problem.

2. Diverse Planning for Multi-UAV Coordination

Diverse planning techniques can be used directly for improved interaction with a human UAV operator, but also in algorithms planning for a team of UAVs and aiming at improved autonomous behavior. Before presenting DivPlan, the multi-UAV coordination planner utilizing diverse planning, we present two base-line algorithms. Since the output of the algorithms is a set of trajectories in the form of GPS coordinates, it is very simple to deploy them on any UAVs supporting flight along GPS trajectories, e.g., using a mixed-reality system as has been demonstrated in [14].

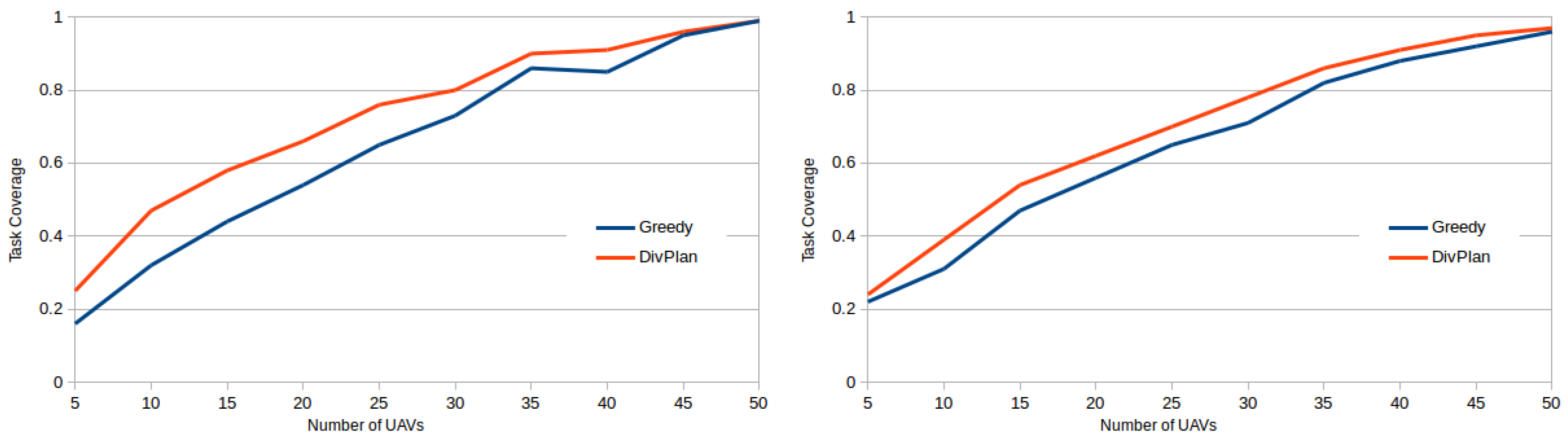

First, we will present a pseudo-optimal planner based on translation of the problem to classical optimal planning; second, we will present a greedy approach. On the one hand, the pseudo-optimal algorithm provides solutions close to the optimum, but at the price of high (generally intractable) computational complexity. On the other hand, the greedy approach is computationally easy (tractable), but its solutions are often of low quality. The motivation for DivPlan was to design a middle-ground algorithm with complexity low enough for large-scale scenarios, yet with solutions of higher quality than the naive greedy approach.

The Multi-UAV Sensing Problem (MUSP) is defined as a tuple M = ⟨S, L, U, T, c, b, p⟩, where

S is a set of sensor types the UAVs can equip in the form of particular sensors,

L is a set of target ground locations for sensing, given in the form of coordinates,

U is a set of (identifiers of) the UAVs carrying out the mission,

T is the set of sensing tasks of the UAVs, each task (y, l) requiring a sensor of type y from S at target location l from L (the optimization criterion is to fulfill the maximal number of these tasks),

c is the number of sensor slots (identical for all UAVs),

b is the maximal battery charge (identical for all UAVs), and

p represents the battery penalty for one equipped sensor (identical for all UAVs).

The semantics of fulfilling a task is as follows. A task (y, l) has to be fulfilled by a UAV located at l with an equipped sensor of type y. Although each vehicle can be equipped with a number of sensors (at most c), the more sensors are attached, the heavier the vehicle is, and therefore the smaller its flight range. The decrease of the flight range is modeled as a decrease of the maximal (and initial) battery charge caused by equipping sensors on the vehicle. The reduced initial charge is b − e·p for e equipped sensors.

A solution of a MUSP is a mapping μ that assigns to each UAV a trajectory and a set of equipped sensors of types from the set of sensor types S. A trajectory is an ordered sequence of locations from L (or an empty sequence) over which the UAV moves to fulfill the tasks. The length of the trajectory (the distance between two locations is computed as the Euclidean distance) must not exceed the reduced battery charge b − e·p (we assume WLOG that the units of battery charge are the same as the units of distance). For the number of equipped sensors e, it must hold that e ≤ c. An optimal solution μ of a MUSP is such that there exists no other solution fulfilling more tasks than μ. The closer a solution is to the optimum (to the maximal number of solved tasks), the higher is the quality of the solution.
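The definitions above can be made concrete with a minimal Python sketch. It is an illustration only: the names `Musp`, `path_length`, `is_feasible` and `fulfilled` are hypothetical, locations are assumed to be planar coordinate pairs, and a trajectory is assumed to be passed in with every leg the UAV flies (including any return leg) already listed.

```python
from dataclasses import dataclass
from math import dist

Location = tuple[float, float]
Task = tuple[str, Location]           # (sensor type y, target location l)


@dataclass(frozen=True)
class Musp:
    tasks: frozenset[Task]            # T
    uavs: tuple[str, ...]             # U
    slots: int                        # c, sensor slots per UAV
    battery: float                    # b, maximal battery charge
    penalty: float                    # p, battery penalty per equipped sensor


def path_length(trajectory):
    """Sum of Euclidean distances between consecutive locations."""
    return sum(dist(a, b) for a, b in zip(trajectory, trajectory[1:]))


def is_feasible(problem, trajectory, sensors):
    """Check the slot limit e <= c and the reduced charge b - e*p."""
    e = len(sensors)
    if e > problem.slots:
        return False
    return path_length(trajectory) <= problem.battery - e * problem.penalty


def fulfilled(problem, solution):
    """Tasks (y, l) for which some UAV visits l while carrying sensor type y.

    `solution` maps a UAV identifier to a (trajectory, sensors) pair.
    """
    done = set()
    for trajectory, sensors in solution.values():
        visited = set(trajectory)
        done |= {(y, l) for (y, l) in problem.tasks
                 if y in sensors and l in visited}
    return done
```

The quality of a solution is then simply `len(fulfilled(problem, solution))`, which is the quantity all three algorithms discussed below try to maximize.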

After we sketch why MUSPs are hard to solve in Section 2.1, we show how to solve the discretized variant of the problem optimally in Section 2.2. The second algorithm, presented in Section 2.3, is the greedy solution.

2.1. Why Is This Task Difficult?

A MUSP combines several well-known NP-complete problems; however, to the best of our knowledge, this particular combination has not been proposed and formally defined yet.

For one UAV, one sensor type and the case when it is possible to fulfill all tasks, the problem reduces to a Travelling Salesman Problem (TSP). The selection of sensors under the limit of the battery, and thus of the flying distance, is a Knapsack Problem (KP). The combination of TSP and KP is known as the Orienteering Problem (OP) [22]; however, it is defined over different prices of the goals, whereas we limit the total flight range in our problem. A MUSP additionally adds the combinatorial problem of optimization for multiple UAVs.

2.2. The Pseudo-Optimal Algorithm

The (close to) optimal solution to a MUSP is obtained by translating the problem to a classical planning problem and using the top-performing optimal planner SymBA* [23] to search for a solution. A detailed description of the translation of a MUSP into a planning problem can be found in Appendix A. The resulting plan is then translated to μ by prescribing which UAV should use which sensors, how it should move among the targets, and which targets it should sense. As classical planning does not directly allow modeling of continuous fluents, the proposed translation discretizes the distances between the locations of sensor tasks and related values such as the battery charge. Although, because of the discretization, the optimal solution to the translated problem does not necessarily correspond to an optimal solution to the original MUSP, the error is bounded by

, where

is the number of sensor tasks and

d is the distance for one discrete flight “step”, i.e., the discretization factor. Therefore, we denote the algorithm as pseudo-optimal.
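As an illustration of the discretization step, the following Python sketch converts continuous distances into whole numbers of flight steps of size d. It is a sketch under an assumption that is not stated in the text: distances are rounded up, so that a plan that fits the discretized battery budget also fits the continuous one. The function names `discretize` and `discrete_distances` are hypothetical.

```python
from math import ceil, dist


def discretize(value, step):
    """Number of discrete steps of size `step` needed to cover `value`.

    Rounding up is an assumption of this sketch; it overestimates each
    value by less than one step.
    """
    return ceil(value / step)


def discrete_distances(locations, step):
    """Discretized distance for every ordered pair of distinct locations."""
    return {
        (a, b): discretize(dist(a, b), step)
        for a in locations
        for b in locations
        if a != b
    }
```

For example, with d = 2 a leg of length 5 is modeled as 3 steps (length 6), so the per-leg overestimate stays below d; a coarser d yields a smaller planning problem at the price of a larger rounding error.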

2.3. The Greedy Algorithm

The greedy algorithm sequentially generates and assigns trajectories and equipped sensors to each UAV. The algorithm is listed as Algorithm 1. Each UAV is assigned a trajectory and sensors by the method listed as Algorithm 2. Sensor tasks covered by the created trajectory are removed from the problem before the creation of the trajectory for the next UAV.

Algorithm 1: GreedySolver, a greedy algorithm solving MUSPs.

The input of the method listed as Algorithm 2 is a list of sensor tasks and a list of equipped sensors (this parameter contains no sensor when the method is called from the greedy algorithm, but it will be needed later in the DivPlan algorithm). In fact, this method solves an Orienteering Problem (OP) together with the selection of a suitable subset of sensors. Since the Orienteering Problem is NP-hard and no efficient exact solver for it is available, the proposed solution is another greedy approach. As soon as a practical solution to this problem exists, we shall replace this method by a stand-alone solver.

Algorithm 2: Greedy Orienteering Problem solver (with greedy sensor selection) for one UAV.

The method first creates a trajectory containing only the location of the base and then sequentially adds new points to the trajectory as long as the UAV has enough battery charge to return to the base. Each new point is selected as the task of T closest to the last point of the trajectory. All tasks requiring a new sensor (not yet equipped) are penalized by the battery penalty p, and thus tasks with already equipped sensors are preferred. There is also a limit on the maximal number of sensors equipped by a single UAV. When a new waypoint is added, it is placed in the trajectory such that the extension of the trajectory length is minimized.
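Since the listings of Algorithms 1 and 2 are not reproduced in the text, the following Python sketch illustrates the greedy scheme as described in the prose. It is not the authors' listing: the function names `greedy_trajectory` and `greedy_solver` are hypothetical, tasks are assumed to be `(sensor_type, location)` pairs with planar coordinates, and, as a simplification, a new waypoint is appended at the end of the trajectory instead of being inserted at the position that minimizes the length extension.

```python
from math import dist


def greedy_trajectory(base, tasks, slots, battery, penalty, equipped=()):
    """Greedy single-UAV routine: returns (trajectory, sensors, covered)."""
    sensors = set(equipped)
    trajectory, length, covered = [base], 0.0, set()
    remaining = set(tasks)
    while True:
        last = trajectory[-1]

        def feasible(task):
            # Slot limit and enough reduced charge to fly there and back.
            y, l = task
            e = len(sensors | {y})
            out_and_back = length + dist(last, l) + dist(l, base)
            return e <= slots and out_and_back <= battery - e * penalty

        def cost(task):
            # Distance from the last point, penalized if a new sensor is needed.
            y, l = task
            return dist(last, l) + (0.0 if y in sensors else penalty)

        candidates = [t for t in sorted(remaining) if feasible(t)]
        if not candidates:
            break
        y, l = min(candidates, key=cost)
        length += dist(last, l)
        if l != last:
            trajectory.append(l)
        sensors.add(y)
        covered.add((y, l))
        remaining.discard((y, l))
    if len(trajectory) > 1:
        trajectory.append(base)       # return to the base
    return trajectory, sensors, covered


def greedy_solver(base, tasks, uavs, slots, battery, penalty):
    """Outer greedy loop: plan one UAV at a time, removing covered tasks."""
    remaining = set(tasks)
    solution = {}
    for uav in uavs:
        trajectory, sensors, covered = greedy_trajectory(
            base, remaining, slots, battery, penalty)
        solution[uav] = (trajectory, sensors)
        remaining -= covered
    return solution
```

The `equipped` parameter mirrors the list of already equipped sensors mentioned above: it is empty when the routine is called from the greedy solver and can be pre-filled by a caller that wants trajectories for a fixed sensor set.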

The greedy method is a fast algorithm with potentially lower solution quality that is intended for large MUSP instances, which cannot be solved by the pseudo-optimal algorithm.

3. Diverse Planning Based Algorithm

So far, we have presented two algorithms. On the one hand, the pseudo-optimal planner can solve MUSPs nearly optimally, but only for very small instances. On the other hand, the greedy algorithm is able to solve large problem instances, but it can produce substantially sub-optimal solutions. The main goal of DivPlan, the proposed planner based on diverse planning, is to create better solutions than the greedy algorithm in a reasonable time.

DivPlan (Algorithm 3) works in two phases. First, it uses a diverse planning technique to create a diverse set of possible trajectories for the UAVs, each together with the set of sensors the UAV should be equipped with. Then it assigns a subset of these trajectories to individual UAVs by translation to a Constraint Optimization Problem (COP) [24].

| Algorithm 3: DivPlan—A MUSP solver based on diverse planning and constraint optimization. |

input : Problem

output: Solution μ

;

;

;

// UAVs U as COP variables X,

// as the COP domains for all variables,

// constraints C forbidding selection of the same trajectory by two UAVs,

// maximizing the number of sensor tasks covered by the solution μ,

// as the initial solution, and

// returns μ assigning a value from for each , i.e., trajectories to UAVs

return μ; |
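The second phase of DivPlan can be illustrated by a small Python sketch that selects one plan per UAV from a portfolio so that no plan is used twice and the number of covered sensor tasks is maximized. This is only a stand-in under stated assumptions: it enumerates all selections exhaustively instead of calling a COP solver, the function name `assign_trajectories` is hypothetical, and each portfolio entry is assumed to be a `(plan, covered_tasks)` pair.

```python
from itertools import combinations


def assign_trajectories(uavs, portfolio):
    """Pick distinct plans for the UAVs, maximizing the covered tasks.

    portfolio: list of (plan, covered_tasks) pairs, covered_tasks being a set.
    Returns ({uav: plan}, number_of_covered_tasks).
    """
    if len(portfolio) < len(uavs):
        raise ValueError("need at least one candidate plan per UAV")
    best, best_score = None, -1
    # Choosing index combinations enforces the constraint that no
    # trajectory is selected by two UAVs.
    for chosen in combinations(range(len(portfolio)), len(uavs)):
        covered = set().union(*(portfolio[i][1] for i in chosen))
        if len(covered) > best_score:
            best, best_score = chosen, len(covered)
    return {u: portfolio[i][0] for u, i in zip(uavs, best)}, best_score
```

Exhaustive enumeration is only practical for small portfolios; an anytime solver can instead start from an initial assignment and improve it for as long as computation time is available.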

The strategy for creating the diverse trajectories is inspired by the experimentally most successful method for diverse planning [10], i.e., looking for good solutions of modified problems.

The method listed as Algorithm 4 internally creates many smaller instances of the MUSP containing different subsets of sensor tasks and collects their solutions. The subsets of sensor tasks are created by the k-means clustering method [25], which groups tasks that are close to each other and thus could be covered by a single UAV. The number of clusters varies from 1 to the number of sensor slots c, generating trajectories for various numbers and combinations of sensors. For each cluster, the algorithm then repeatedly calls the greedy method of Algorithm 2 until all tasks of the cluster are covered. This process is repeated for all subsets of sensors that can be mounted on a UAV, and all created trajectories are stored together with their required sensors.
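The clustering step can be sketched in Python as follows. This covers only the creation of task subsets, not the subsequent trajectory generation, and it rests on assumptions: the function names `kmeans` and `task_subsets` are hypothetical, tasks are `(sensor_type, location)` pairs with planar coordinates, and a plain Lloyd's iteration with deterministic initial centres is used in place of whatever k-means variant the original implementation relies on.

```python
from math import dist


def kmeans(points, k, iterations=20):
    """Plain Lloyd's algorithm with deterministic initial centres."""
    points = sorted(set(points))
    if not points:
        return []
    k = min(k, len(points))
    # Spread the initial centres evenly over the sorted points.
    centres = [points[i * len(points) // k] for i in range(k)]
    clusters = [points]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for pt in points:
            nearest = min(range(k), key=lambda i: dist(pt, centres[i]))
            clusters[nearest].append(pt)
        # Move each centre to the mean of its cluster (keep it if empty).
        centres = [
            (sum(x for x, _ in c) / len(c), sum(y for _, y in c) / len(c))
            if c else centres[i]
            for i, c in enumerate(clusters)
        ]
    return [c for c in clusters if c]


def task_subsets(tasks, slots):
    """Task subsets obtained by clustering locations for k = 1..slots."""
    locations = [l for _, l in tasks]
    subsets = []
    for k in range(1, slots + 1):
        for cluster in kmeans(locations, k):
            members = set(cluster)
            subsets.append(frozenset(t for t in tasks if t[1] in members))
    return subsets
```

Each returned subset defines one smaller problem instance; running a single-UAV greedy routine on every subset, and for every admissible sensor combination, would then yield the portfolio of diverse trajectories.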

Once a large portfolio of diverse trajectories has been created, DivPlan runs a COP solver. We use OptaPlanner (http://www.optaplanner.org/), a popular constraint satisfaction and optimization solver. OptaPlanner assigns a trajectory, together with its set of sensors, to each UAV; such an assignment solves the original MUSP. There is only one rule specifying the quality of the solution: the number of sensor tasks covered by the solution μ, which is to be maximized. The rule is also listed in Algorithm 5 in the Drools syntax (https://docs.jboss.org/drools/release/5.2.0.Final/drools-expert-docs/html/ch05.html) used by OptaPlanner. Note that OptaPlanner does not optimize the trajectory length, only the number of covered tasks. For practical purposes, a very convenient feature of OptaPlanner is that it is an anytime algorithm: it produces better solutions as it is granted more computation time.

Algorithm 4: Creates a set of diverse trajectories.

Algorithm 5: OptaPlanner rule (in the Drools syntax).

    rule "CoveredTasks"
        when
            $task : SensorTask()
            not UavPlan(hasSensor($task.sensor), trajectory.isCovered($task.point))
        then
            scoreHolder.addMediumConstraintMatch(kcontext, -1);
    end

4. Complexity Analysis

A MUSP is a combination of several NP-hard problems and thus it is NP-hard. In practice, this means that no algorithm can solve the problem optimally in time polynomial in the problem size unless P = NP. The size of a MUSP depends on several parameters: the number of UAVs |U|, the number of sensing tasks |T|, the number of different sensors |S|, and the number of sensor slots c on a UAV. The number of locations is never more than |T| + 1, with +1 for the base location. The maximal battery charge b and the penalty p only limit the number and size of the solutions. Let us take a closer look at how these parameters influence the computational complexity of the presented algorithms.

4.1. Pseudo-Optimal Algorithm

The pseudo-optimal algorithm works in three steps, translating a MUSP to a classical planning problem, solving the translated problem by a classical planner and back translating the classical plan to the solution of the MUSP instance.

The number of planning objects used in the translation step is , where d is the discretization factor. The process of grounding generates all possible parameterizations of the predicates based on the objects of the particular types. Classical planning assumes finite number of objects, therefore the grounding will be finite as well. As all the predicates are binary the asymptotic complexity of grounding of predicates will be . Similarly, grounding of operators generates all possible parameterized actions. As the maximal number of predicate parameters is six for the operator , the asymptotic complexity of grounding of operators is . Encoding of the initial state and goal conditions is , because only a subset of facts is used. This gives us polynomial asymptotic complexity for the translation process .

Computational complexity of classical planning (therefore also of the used SymBA* planner) grows in the worst case exponentially with the size of the input problem

. The problem created during the translation is bounded by

. Therefore the overall complexity of the solution is exponentially dependent on the input size as follows:

where the back translation process only linearly traverses the resulting plan and builds the MUSP solution

μ, therefore there are no additional factors.

Obviously, this approach is viable only for the smallest instances; as we will show in the experiments, only for up to a dozen monitoring tasks.

4.2. Greedy Algorithm

The greedy algorithm sequentially creates trajectories for each UAV. To create one trajectory, it repeatedly selects sensor tasks and adds the closest one to the existing trajectory. Thus, the whole computation runs in time:

which is polynomial in the size of the MUSP instance.

4.3. DivPlan Algorithm

DivPlan firstly creates a set

of diverse trajectories. The number of these trajectories can be estimated directly from Algorithm 4.

where

is number of trajectories created from cluster

. In the worst case, the

can approach the total number of sensor tasks (each trajectory covers just one location with one sensor task), but in practice this number is typically much smaller, especially for large numbers of sensor tasks. We can also limit this number by a constant

t, then:

Hence, the total number of diverse trajectories is bounded in terms of t, the number of diverse trajectories created for one cluster of sensor tasks, and the total number of sensors, which is limited in practice.

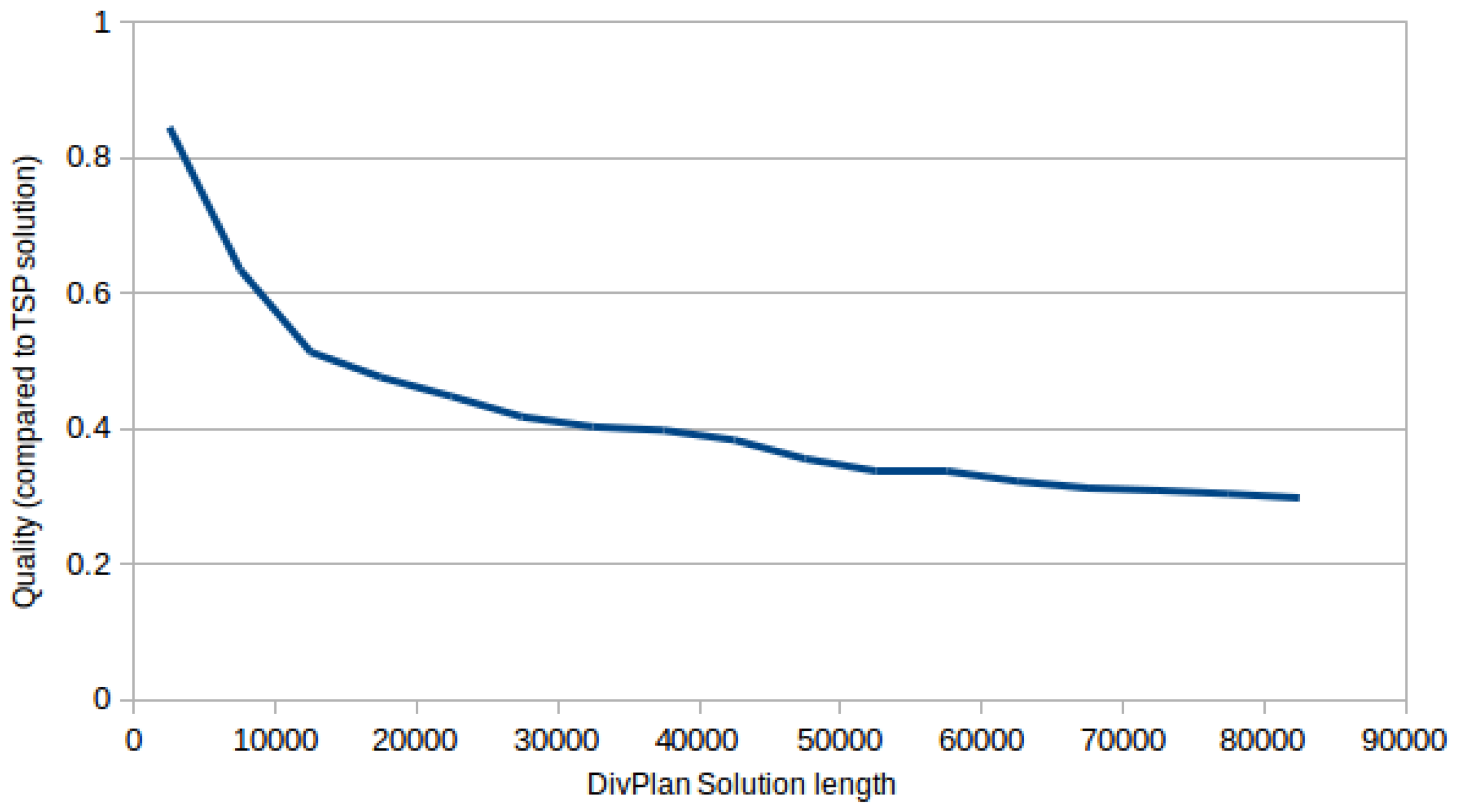

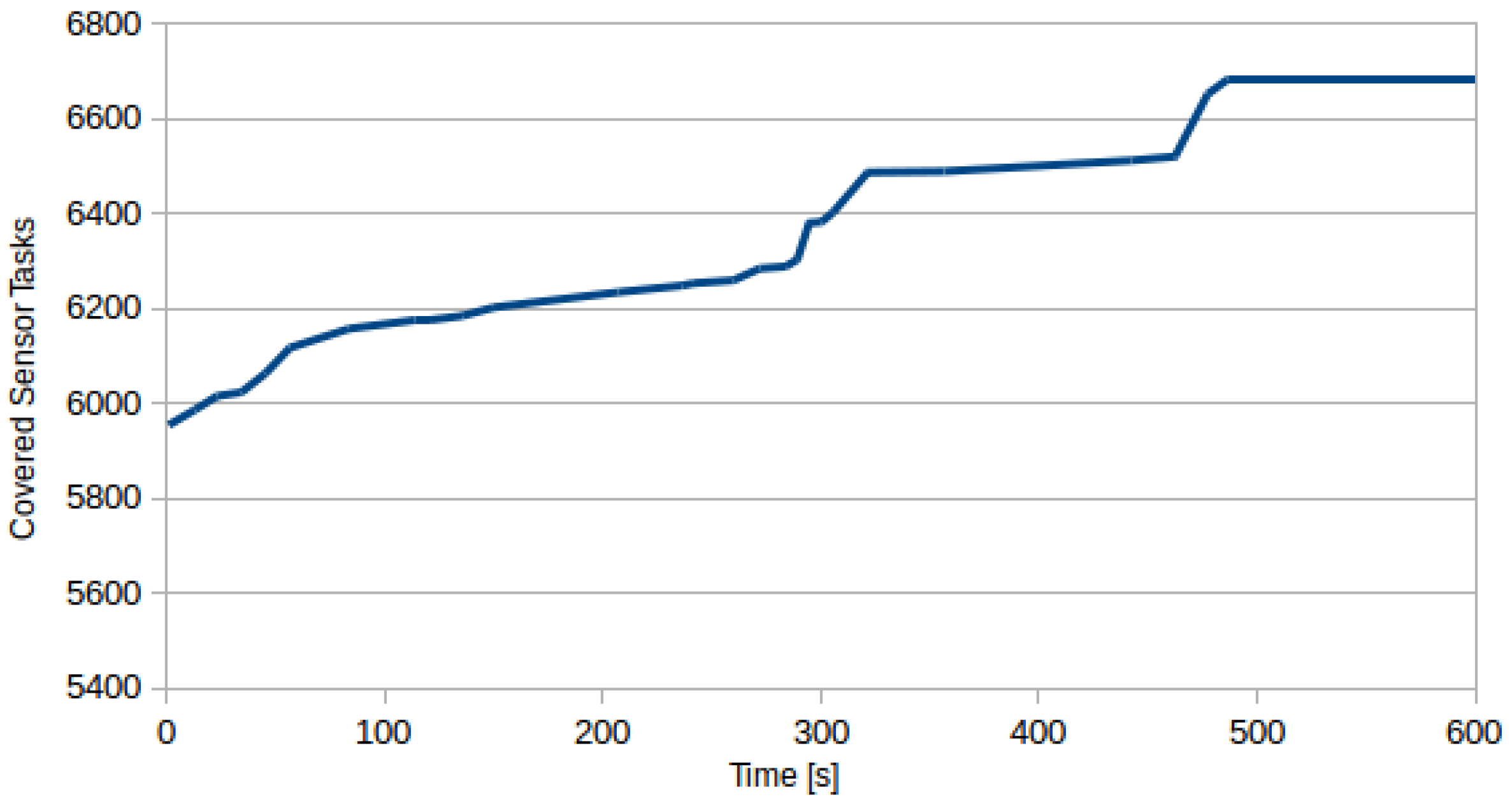

OptaPlanner is an anytime algorithm and thus it is difficult to evaluate its time complexity; moreover, there are too many variables to theoretically estimate its performance profile (for experimental evaluations, refer to the experimental section, particularly Figure 7). Nevertheless, we can evaluate the total size of the search space as

which gives us the following asymptotic bound on the time complexity of DivPlan:

The time complexity of DivPlan is thus exponentially dependent only on the number of UAVs and their sensor slots. Unlike the number of sensing tasks, these numbers are very limited in practice. If we consider them to be fixed parameters, we would get a polynomial complexity of DivPlan algorithm.

6. Conclusions and Future Work

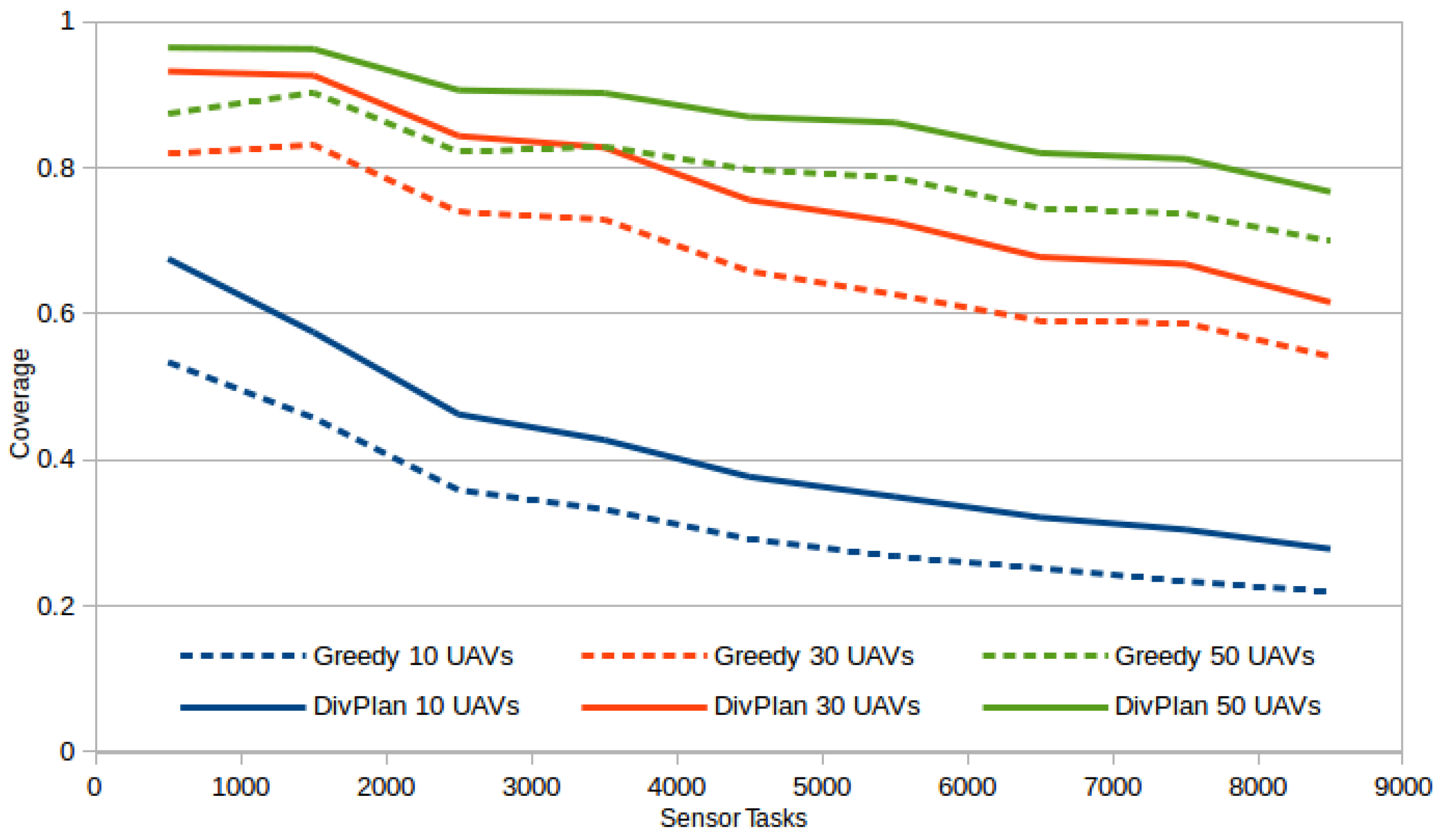

To solve the problem of autonomous remote sensing with non-zero weight of sensors, we first formally defined the problem and, for comparison, designed two base-line algorithms commonly used for solving combinatorial optimization problems in the literature. The algorithms were based on two distinct paradigms: (a) translation to classical planning with appropriate discretization; and (b) a greedy approach. The base-line algorithms framed the problem from the perspective of the solution quality and efficiency metrics, respectively.

The main contribution of our work is a novel algorithm for the remote sensing problem solved by a fleet of coordinated UAVs, designed with practicality in mind. The DivPlan algorithm targets a middle ground between the pseudo-optimal but inefficient algorithm and the highly efficient but low-quality greedy algorithm. To provide such an algorithm, we have integrated an appropriate diverse planning technique, which generates the alternative trajectories, with constraint optimization, which composes the final solution out of these diverse partial solutions. The solvers were compared both theoretically and experimentally. The results show that an approach based on diverse planning strikes a good balance between the quality of the solution and the planning time. Moreover, the greedy and diverse planning approaches were able to solve large problem instances, which demonstrates their good scalability.

Based on the experimental results in the simulated real-world-inspired environment and a conservative usage of waypoints as a robotic primitive, we conjecture that DivPlan is a good choice for practical deployment in a multi-UAV system, which we leave for future work.