A Brain-Machine Interface Based on ERD/ERS for an Upper-Limb Exoskeleton Control

Abstract

1. Background

2. Method

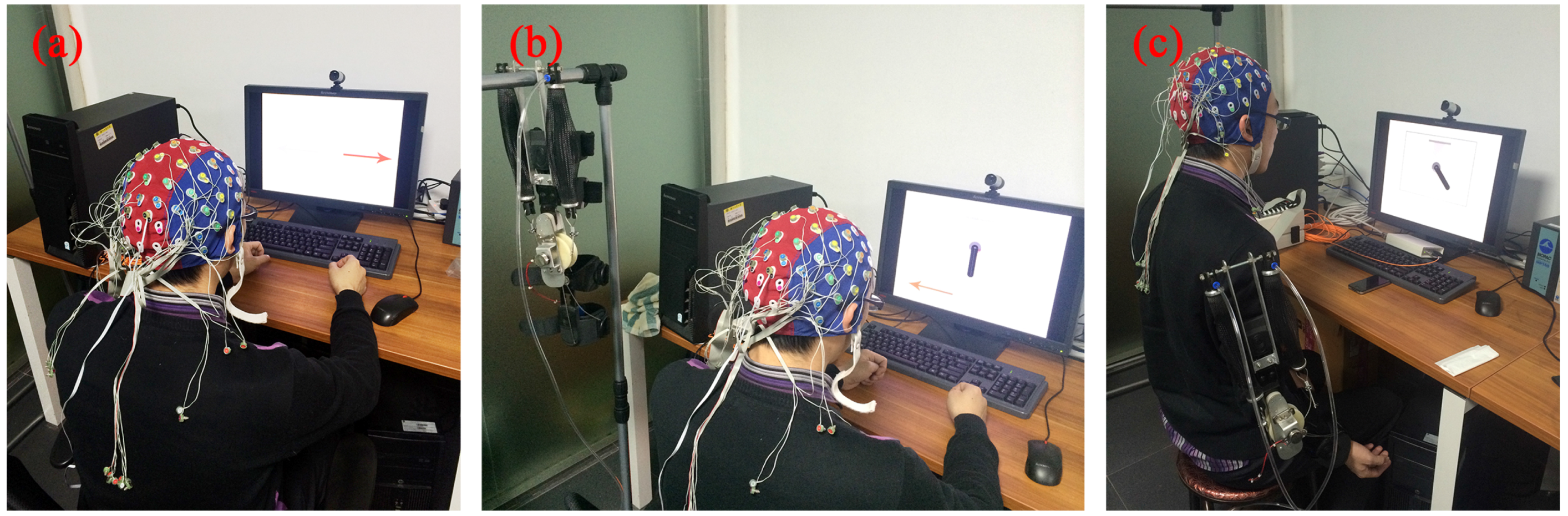

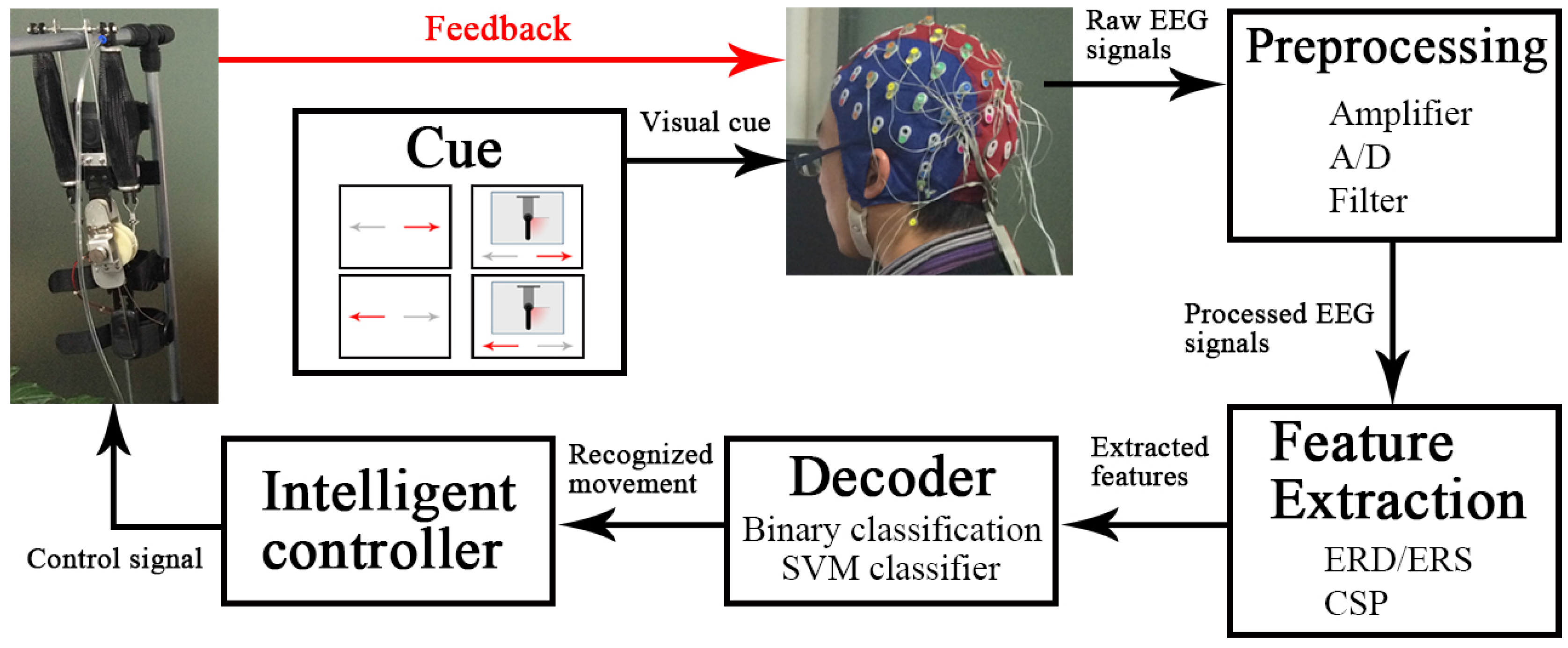

2.1. Subjects and Data Acquisition

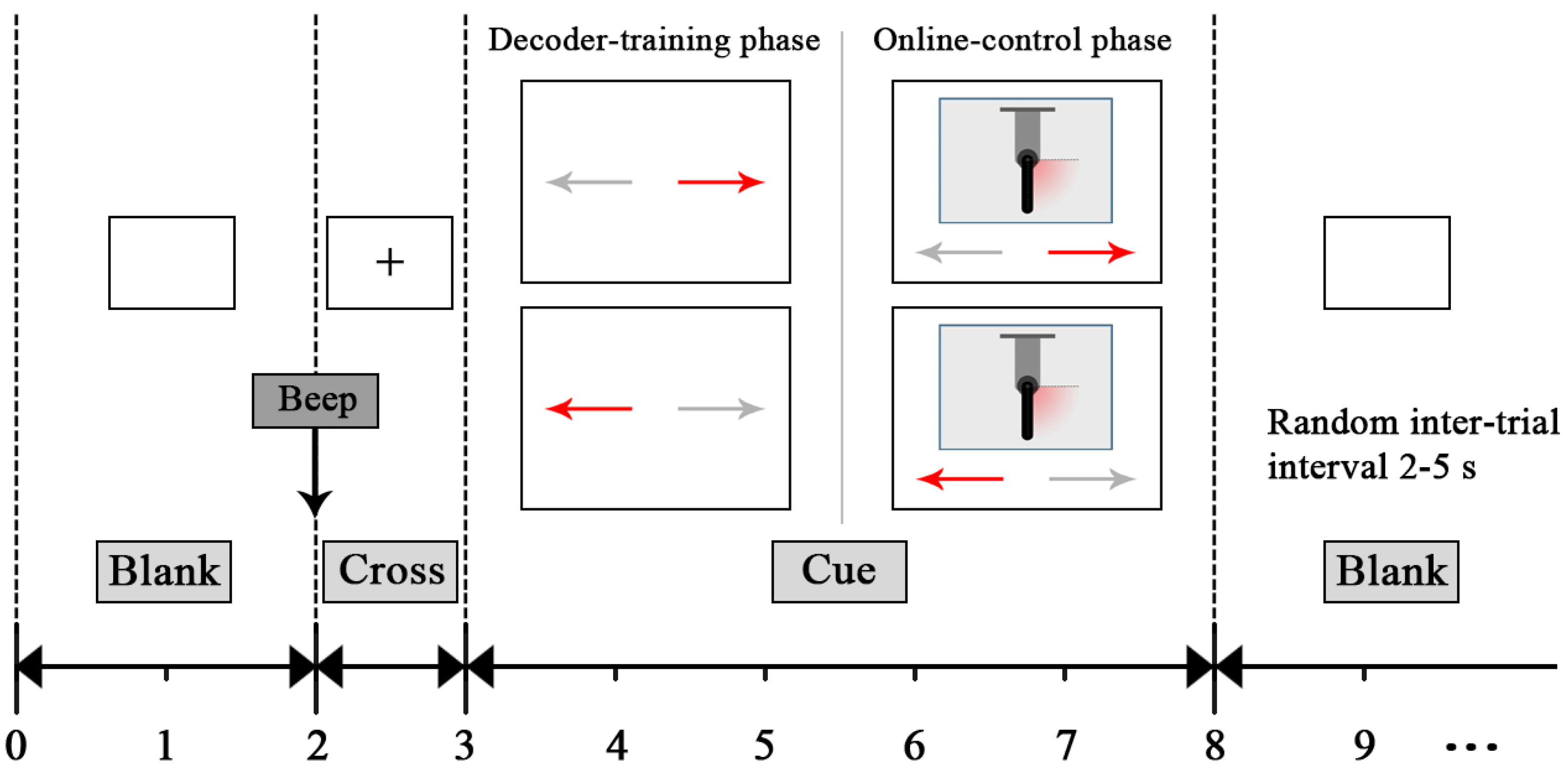

2.2. Experiment Procedure

2.2.1. Decoder-Training Phase

2.2.2. Online-Control Phase

2.3. Trial Exclusion

2.4. Feature Extraction

2.5. Classification

2.6. Data Analysis

3. Results

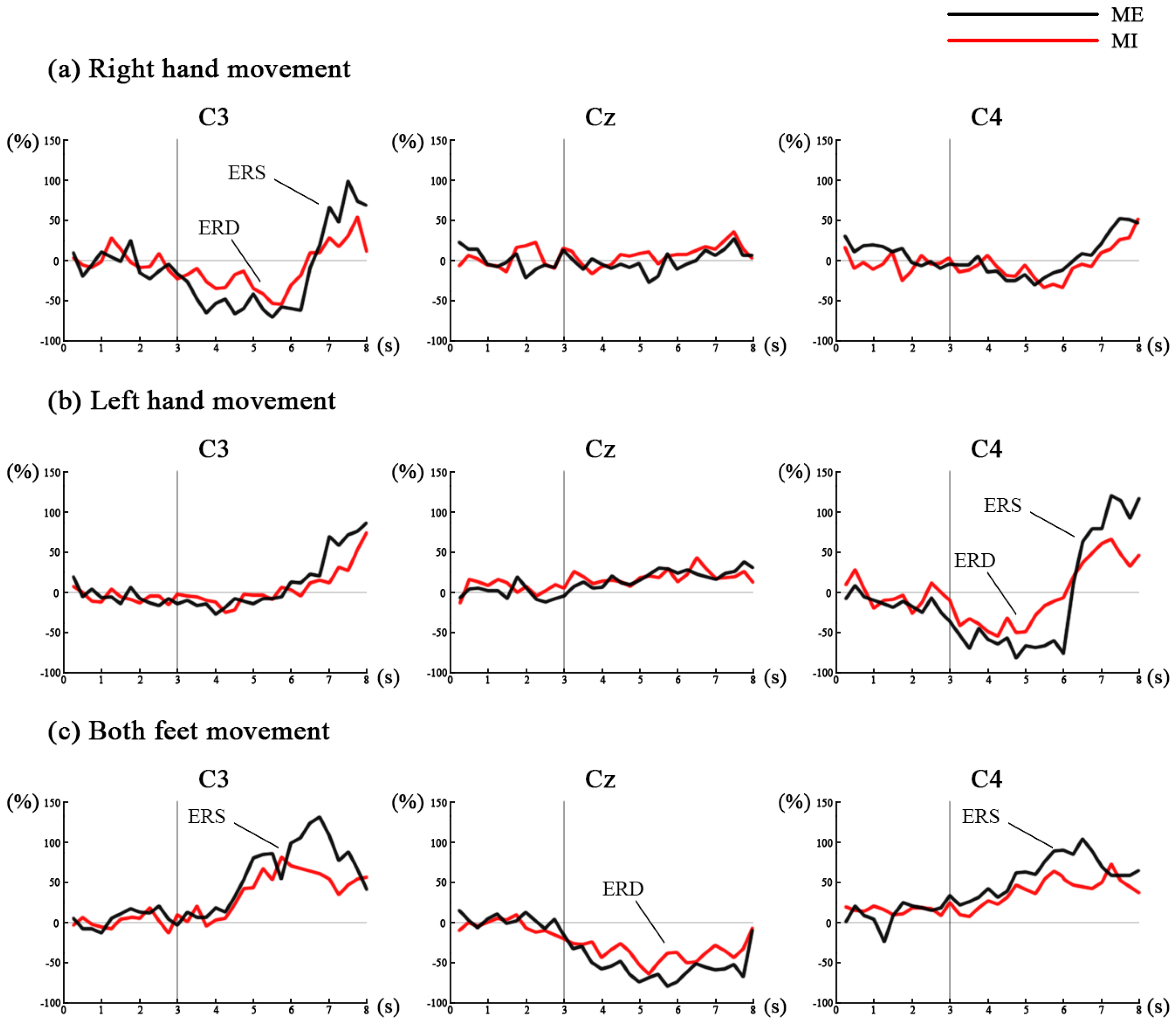

3.1. Results of the Decoder-Training Phase

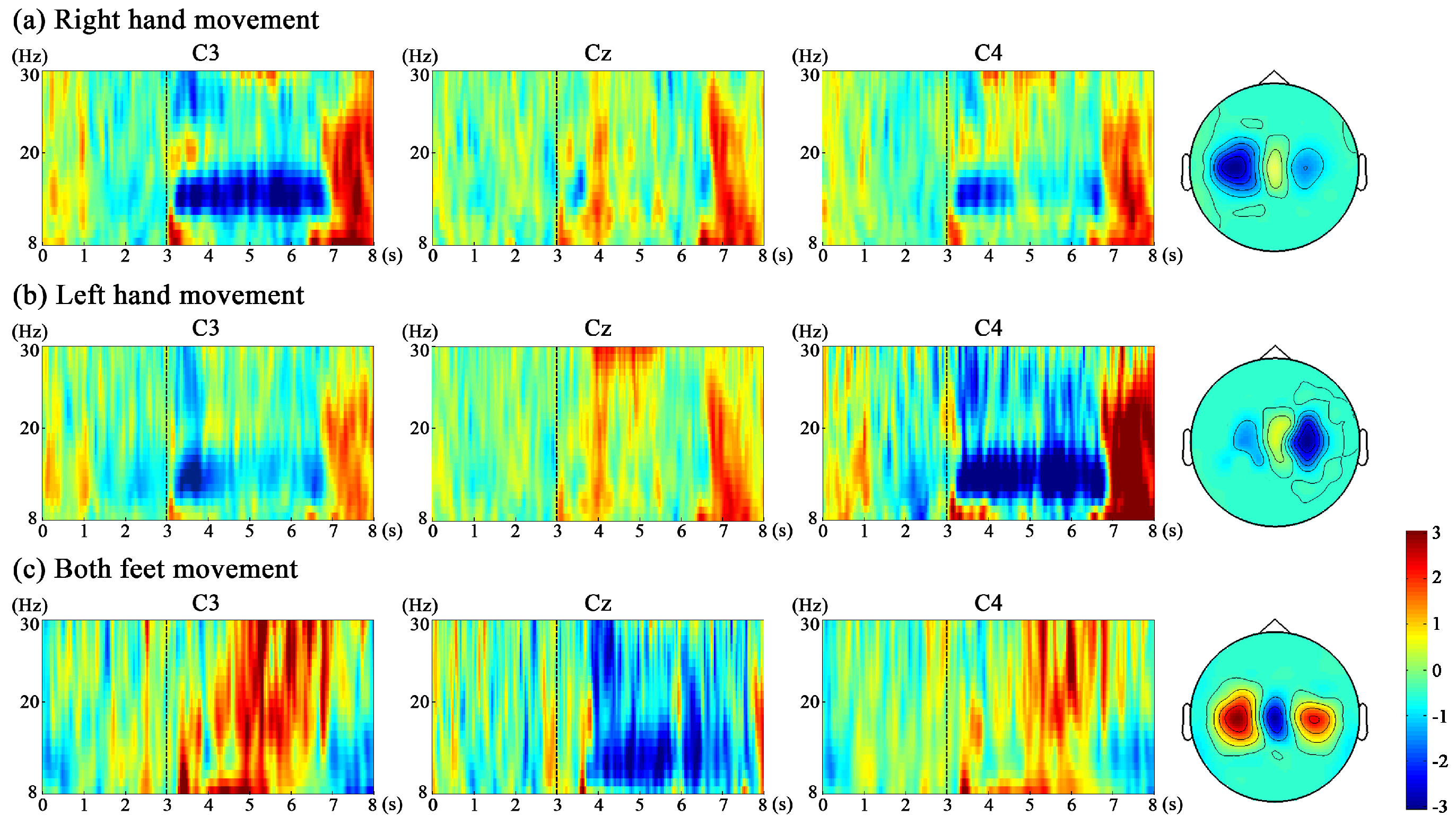

3.1.1. Neurophysiological Analysis of ERD/ERS

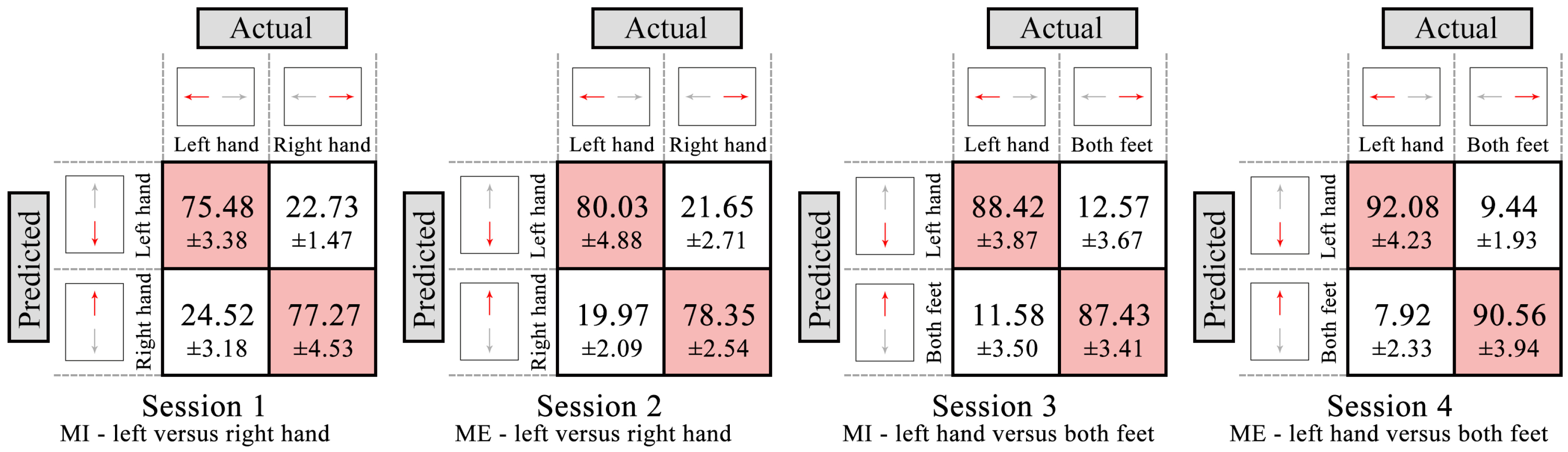

3.1.2. Classification Results

3.2. Results of Online-Control Phase

4. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BMI | brain-machine interface |
| EEG | electroencephalogram |
| ERD | event-related desynchronization |
| ERS | event-related synchronization |
| ME | motor execution |
| MI | motor imagery |
| ADL | activities of daily living |
| EMG | electromyography |
| LDA | linear discriminant analysis |
| CSP | common spatial pattern |


| Session | Task | Paradigm | Cue and Description |
|---|---|---|---|
| Session 1 | Motor imagery (MI) | left versus right hand movement | “←”: imagine left wrist extension; “→”: imagine right wrist extension. |
| Session 2 | Motor execution (ME) | left versus right hand movement | “←”: execute left wrist extension; “→”: execute right wrist extension. |
| Session 3 | Motor imagery (MI) | left hand versus both feet movement | “←”: imagine left wrist extension; “→”: imagine both feet dorsiflexion. |
| Session 4 | Motor execution (ME) | left hand versus both feet movement | “←”: execute left wrist extension; “→”: execute both feet dorsiflexion. |

| Classification Strategy | Classifier | Train-Test Ratio | Session 1 | Session 2 | Session 3 | Session 4 | Average |
|---|---|---|---|---|---|---|---|
| S1 | LDA | 50%–50% | 74.34 ± 1.07 | 76.85 ± 2.22 | 85.38 ± 2.38 | 88.43 ± 3.41 | 81.25 ± 6.73 |
| S2 | LDA | 60%–40% | 75.44 ± 2.03 | 76.32 ± 1.93 | 87.88 ± 1.44 | 90.05 ± 2.54 | 82.42 ± 7.61 |
| S3 | LDA | 80%–20% | 76.38 ± 3.40 | 79.19 ± 2.83 | 87.93 ± 1.04 | 91.32 ± 3.01 | 83.71 ± 7.07 |
| S4 | SVM | 50%–50% | 75.03 ± 1.45 | 75.07 ± 4.05 | 85.55 ± 3.81 | 87.90 ± 1.56 | 80.89 ± 6.81 |
| S5 | SVM | 60%–40% | 75.81 ± 2.30 | 77.74 ± 2.12 | 86.47 ± 1.03 | 90.23 ± 2.44 | 82.56 ± 6.90 |
| S6 | SVM | 80%–20% | 76.55 ± 1.59 | 78.26 ± 3.13 | 87.47 ± 2.54 | 91.26 ± 1.23 | 83.39 ± 7.11 |
| S7 | BPNN | 50%–50% | 71.82 ± 2.39 | 73.11 ± 1.24 | 83.07 ± 3.09 | 86.34 ± 2.84 | 78.59 ± 7.21 |
| S8 | BPNN | 60%–40% | 72.13 ± 1.49 | 73.45 ± 1.83 | 83.23 ± 3.03 | 88.04 ± 2.57 | 79.21 ± 7.69 |
| S9 | BPNN | 80%–20% | 73.24 ± 2.73 | 75.33 ± 3.52 | 84.56 ± 1.91 | 88.43 ± 1.77 | 80.39 ± 7.27 |
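The Average column in the table above appears to be the mean and sample standard deviation of the four per-session values in each row. This is an inference from the numbers, not something the table states. A minimal sketch that checks it for row S1, where the helper name `mean_sd` is hypothetical:

```python
from statistics import mean, stdev


def mean_sd(values):
    """Return the mean and the sample standard deviation (n - 1) of values."""
    return mean(values), stdev(values)


# Per-session means for strategy S1 (LDA, 50%-50%), copied from the table.
s1_sessions = [74.34, 76.85, 85.38, 88.43]

avg, sd = mean_sd(s1_sessions)
print(f"{avg:.2f} ± {sd:.2f}")  # prints "81.25 ± 6.73"
```

Under this reading, the spread in the Average column reflects differences between sessions (hand versus hand against hand versus feet, MI against ME), not variability across repeated runs within one session.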

| Metric | Session 1 (MI), Left Hand | Session 1 (MI), Right Hand | Session 2 (ME), Left Hand | Session 2 (ME), Right Hand | Session 3 (MI), Left Hand | Session 3 (MI), Both Feet | Session 4 (ME), Left Hand | Session 4 (ME), Both Feet |
|---|---|---|---|---|---|---|---|---|
| Precision | 76.86 ± 2.38 | 75.91 ± 0.92 | 78.71 ± 1.27 | 79.69 ± 2.36 | 87.55 ± 3.24 | 88.30 ± 2.03 | 90.70 ± 1.37 | 91.96 ± 2.07 |
| Recall | 75.48 ± 1.09 | 77.27 ± 1.33 | 80.03 ± 0.74 | 78.45 ± 1.04 | 88.42 ± 3.03 | 87.43 ± 1.92 | 92.08 ± 0.87 | 90.56 ± 1.90 |
| F-score | 76.16 ± 0.71 | 76.58 ± 0.31 | 79.36 ± 0.92 | 79.07 ± 0.48 | 87.99 ± 1.06 | 87.86 ± 0.77 | 91.38 ± 0.92 | 91.25 ± 0.88 |
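The F-score rows are consistent with the standard F1 definition, the harmonic mean of precision and recall. The sketch below recomputes one cell from the tabulated means. It assumes the reported F-score is F1, and it is only approximate because the table values are presumably averaged over repeated runs; the function name `f_score` is hypothetical.

```python
def f_score(precision, recall):
    """Harmonic mean of precision and recall (F1), on the scale of the inputs."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Session 1 (MI), left hand: mean precision and recall from the table, in percent.
f_left = f_score(76.86, 75.48)
print(f"{f_left:.2f}")  # prints "76.16", matching the tabulated F-score mean
```

Because the harmonic mean is pulled toward the smaller of its two inputs, a class with high precision but low recall scores lower than the arithmetic mean of the two would suggest.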

| Metric | Session 5 (MI) without Exoskeleton, Left Hand | Session 5 (MI) without Exoskeleton, Both Feet | Session 6 (ME) without Exoskeleton, Left Hand | Session 6 (ME) without Exoskeleton, Both Feet | Session 7 (MI) wearing Exoskeleton, Left Hand | Session 7 (MI) wearing Exoskeleton, Both Feet | Session 8 (ME) wearing Exoskeleton, Left Hand | Session 8 (ME) wearing Exoskeleton, Both Feet |
|---|---|---|---|---|---|---|---|---|
| Precision | 87.72 ± 1.09 | 87.94 ± 2.21 | 92.23 ± 1.17 | 91.15 ± 1.83 | 85.84 ± 1.37 | 83.96 ± 1.21 | 90.33 ± 1.16 | 84.81 ± 1.22 |
| Recall | 87.97 ± 0.93 | 87.69 ± 1.88 | 91.04 ± 1.23 | 92.33 ± 1.54 | 82.13 ± 1.26 | 86.45 ± 0.97 | 83.69 ± 1.46 | 91.04 ± 2.09 |
| F-score | 87.70 ± 0.35 | 87.81 ± 0.89 | 91.63 ± 0.43 | 91.74 ± 0.66 | 83.94 ± 0.88 | 85.19 ± 0.80 | 86.88 ± 0.45 | 87.81 ± 0.92 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Tang, Z.; Sun, S.; Zhang, S.; Chen, Y.; Li, C.; Chen, S. A Brain-Machine Interface Based on ERD/ERS for an Upper-Limb Exoskeleton Control. Sensors 2016, 16, 2050. https://doi.org/10.3390/s16122050
