Sensor for In-Motion Continuous 3D Shape Measurement Based on Dual Line-Scan Cameras

Abstract

1. Introduction

2. 3D Shape Measurement Based on Line-Scan Cameras

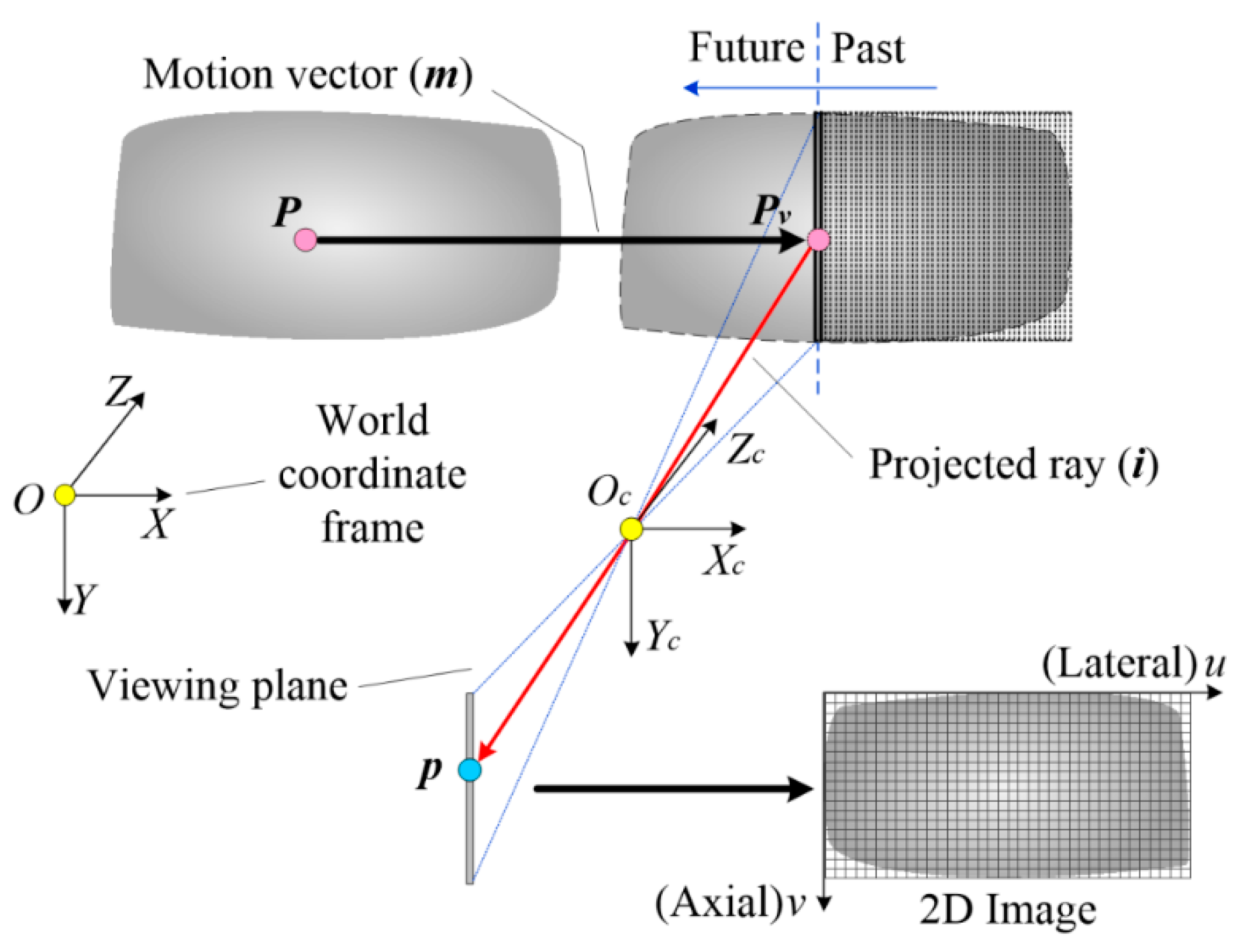

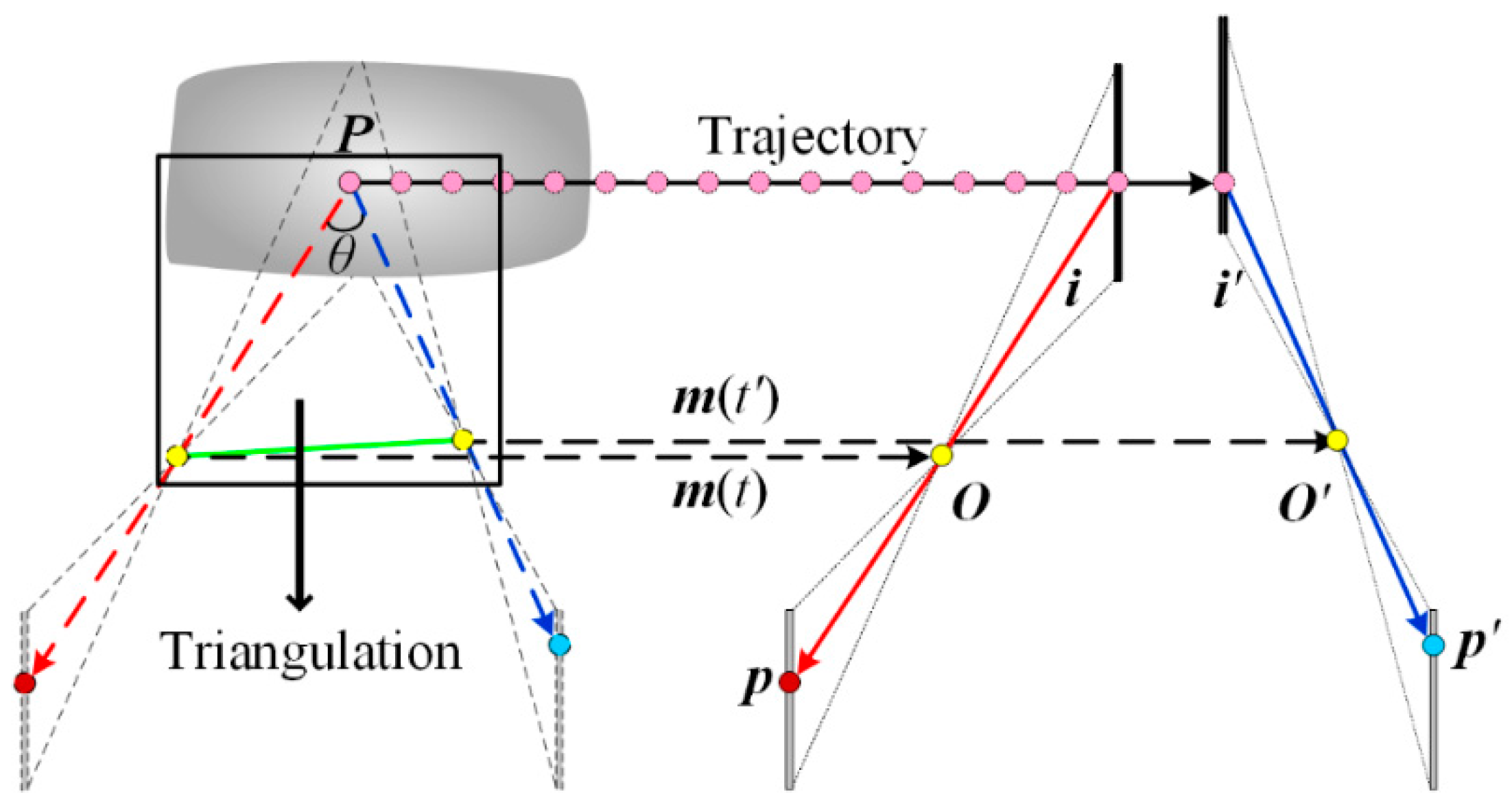

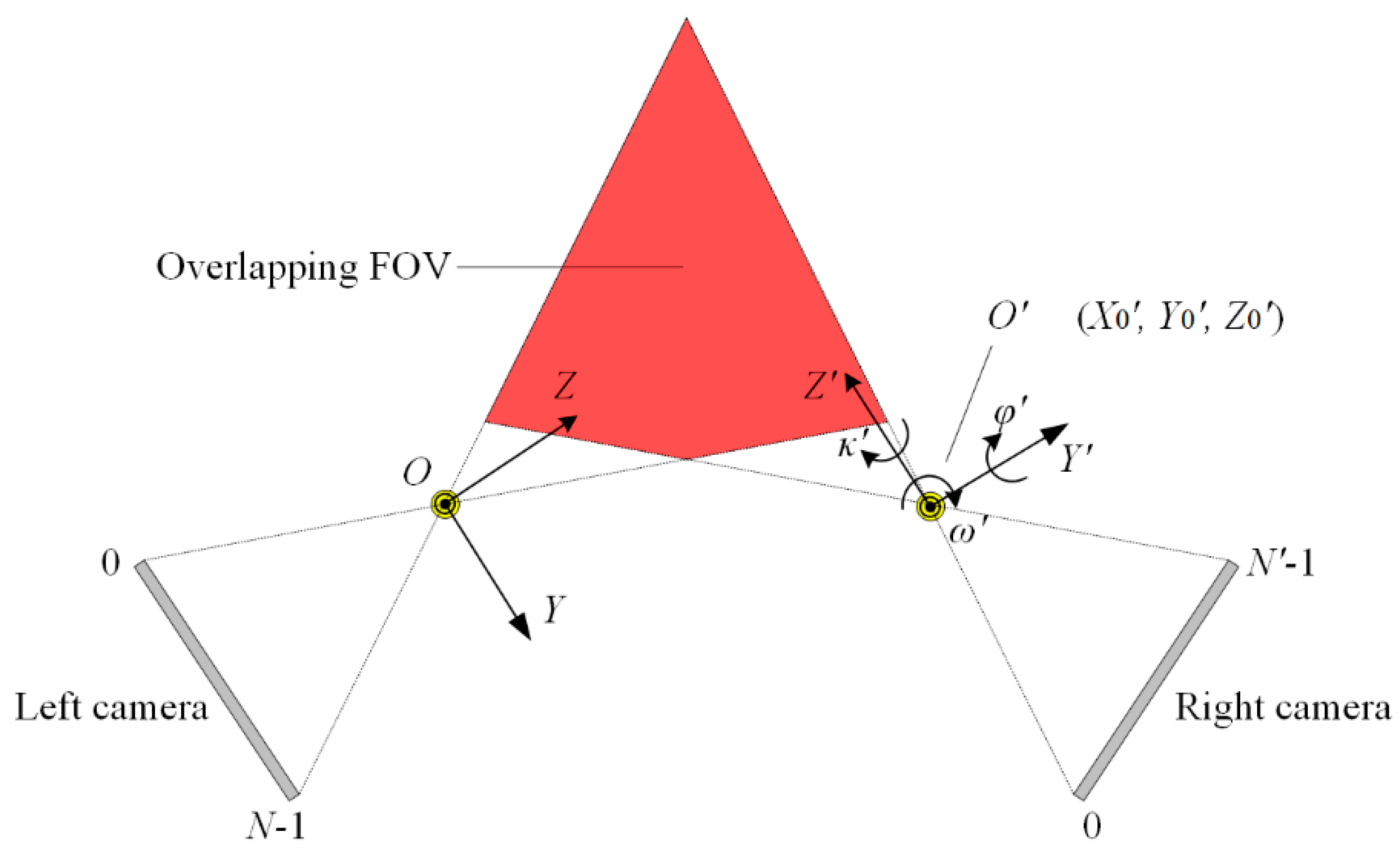

2.1. Triangulation of Line-Scan Cameras

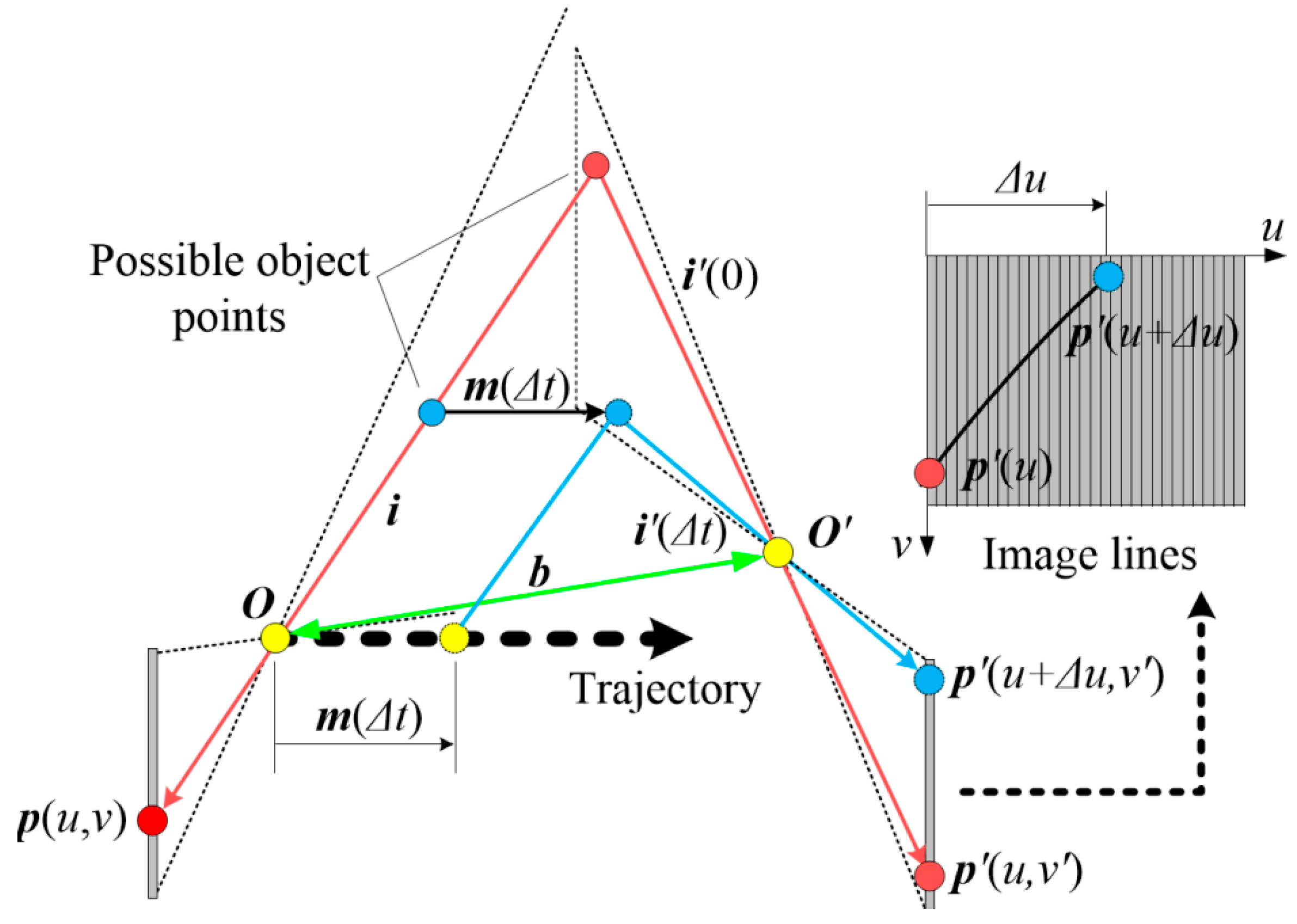

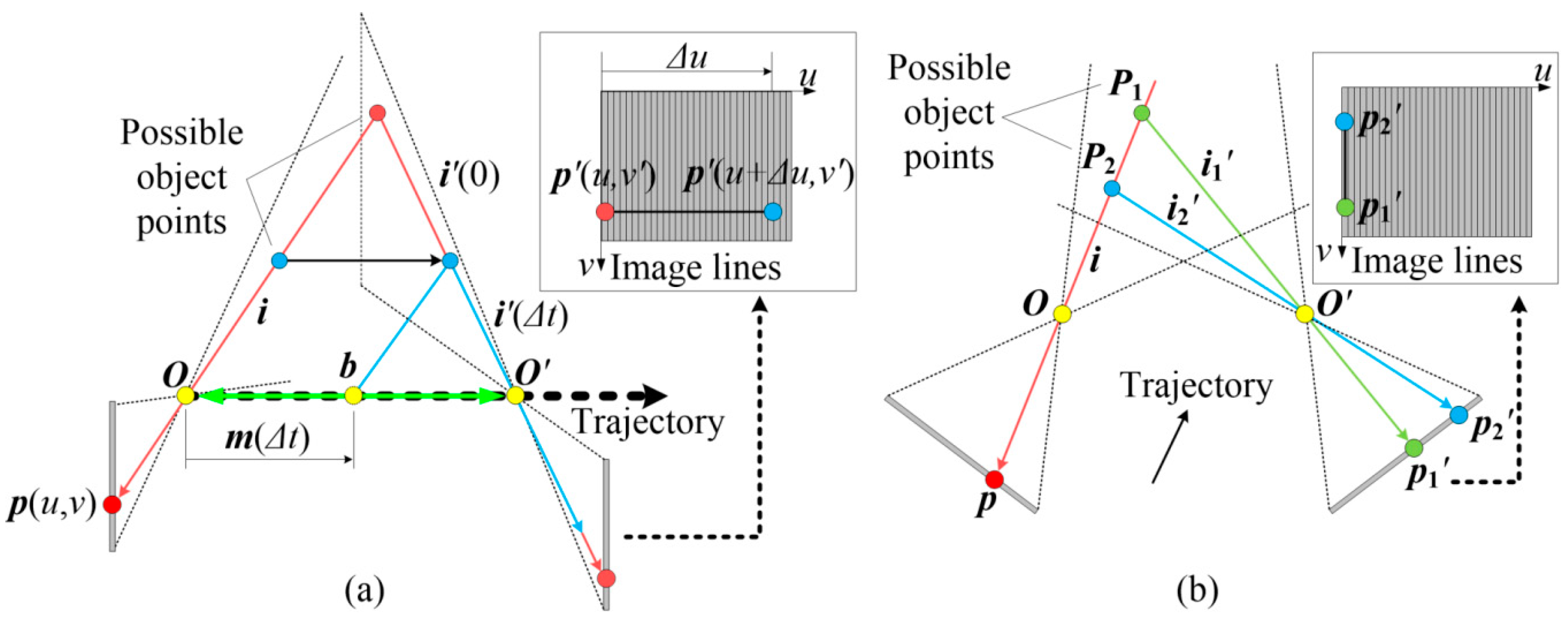

2.2. Stereo Configuration of the Sensor

3. Image Matching Strategy and Matching Error Analysis

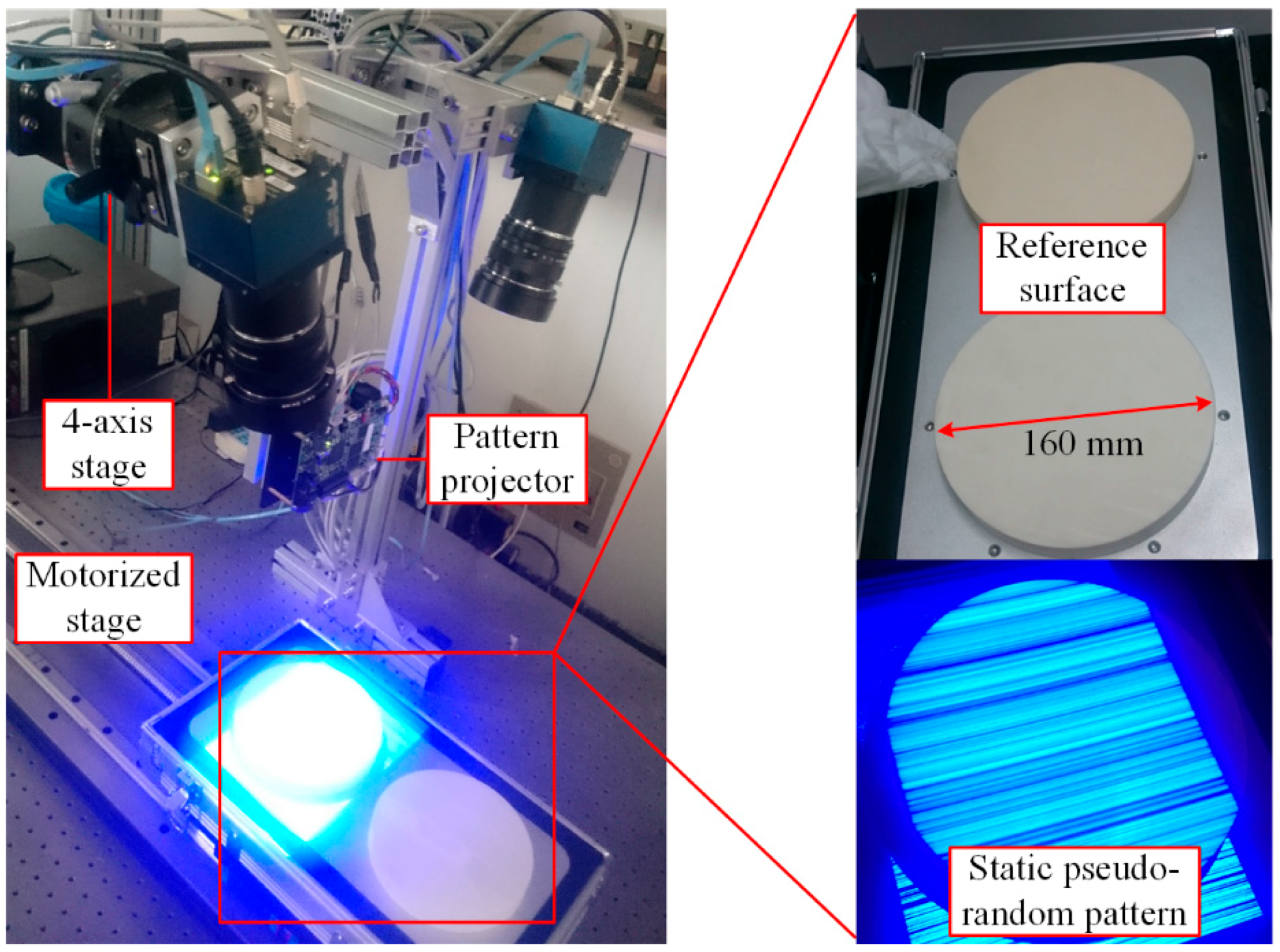

3.1. Structured Light Solution

3.2. Real-Time Correlation Method

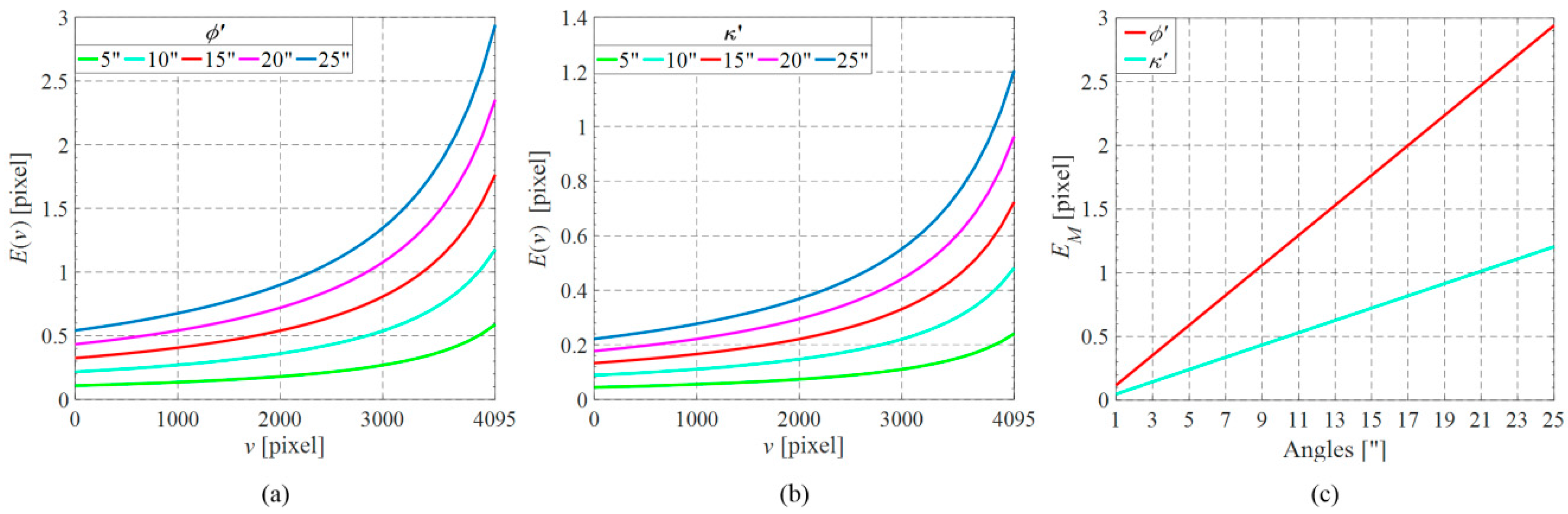

3.3. Matching Error against Non-Coplanar Viewing Planes

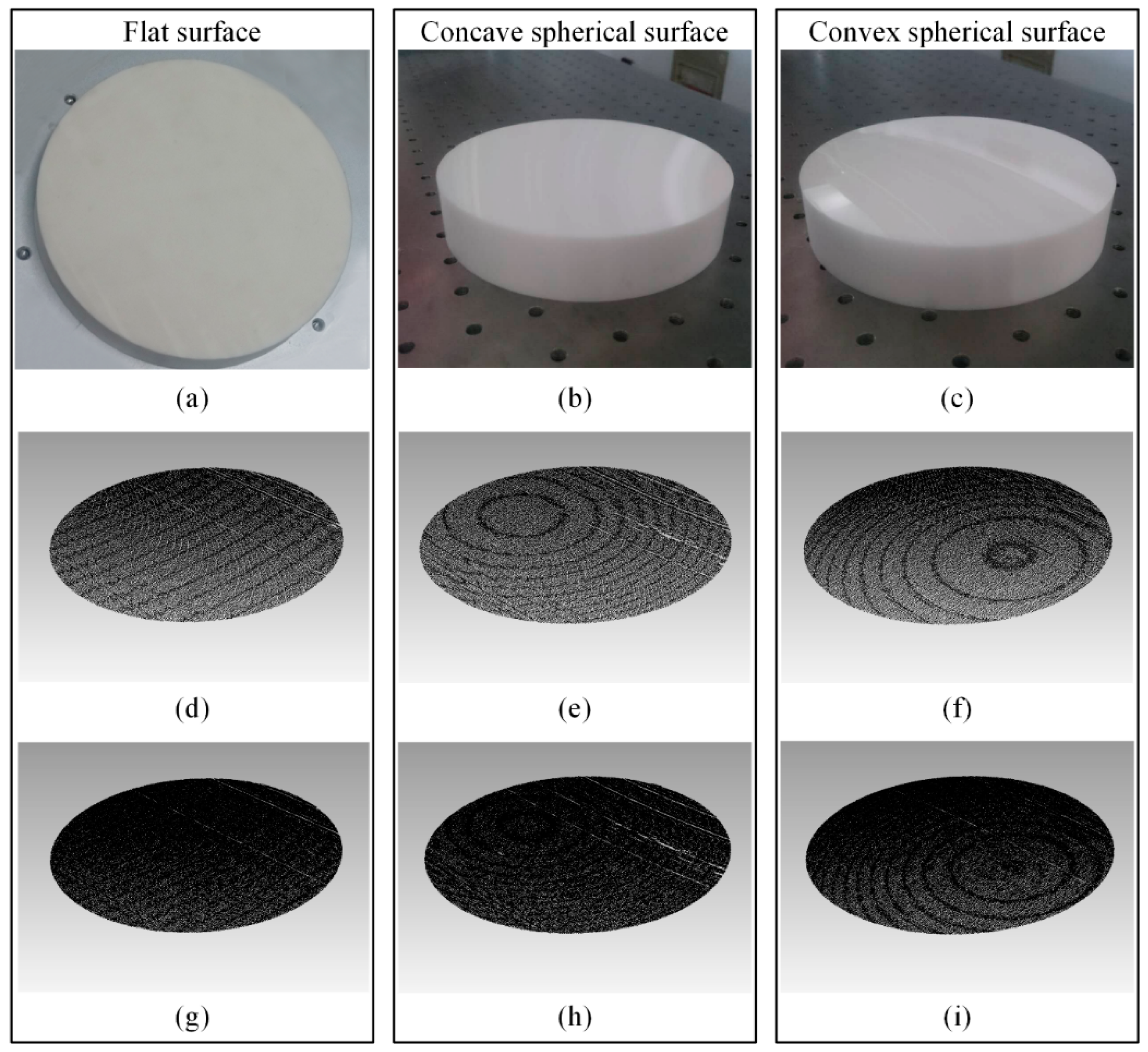

4. Experimental Results

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

| vc/N (pixel) | Fy (pixel) | vc′/N′ (pixel) | Fy′ (pixel) | ω′ (°) | Y0′ (mm) | Z0′ (mm) |
|---|---|---|---|---|---|---|
| 2048/4096 | 5000 | 2048/4096 | 5000 | 50 | 400 | 75 |

| φ′ (″) | κ′ (″) | X0′ (μm) | vx (mm/s) | vy (mm/s) | vz (mm/s) | F (Hz) |
|---|---|---|---|---|---|---|
| 1 to 25 | 1 to 25 | 0 | −60 | 0 | 0 | 500 |
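The parameter table fixes the object speed vx and the line rate F. Assuming that each trigger captures exactly one line while the object moves at constant speed, consecutive profiles are |vx|/F apart along the scan direction. The short Python sketch below only does that arithmetic and converts the arcsecond range of φ′ and κ′ to radians; the helper names `line_pitch_mm` and `arcsec_to_rad` are hypothetical and do not come from the paper.

```python
import math

# Values taken from the parameter table above.
VX_MM_S = -60.0                    # object velocity along x (mm/s)
F_HZ = 500.0                       # line acquisition rate F (Hz)
ANGLE_RANGE_ARCSEC = (1.0, 25.0)   # range used for phi' and kappa' (arcseconds)


def line_pitch_mm(v_mm_s: float, rate_hz: float) -> float:
    """Along-track distance between two consecutive line exposures (mm).

    Hypothetical helper; assumes constant speed and one line per trigger.
    """
    return abs(v_mm_s) / rate_hz


def arcsec_to_rad(arcsec: float) -> float:
    """Convert an angle in arcseconds to radians (1 arcsec = 1/3600 degree)."""
    return math.radians(arcsec / 3600.0)


pitch = line_pitch_mm(VX_MM_S, F_HZ)
angles_rad = [arcsec_to_rad(a) for a in ANGLE_RANGE_ARCSEC]
print(f"line pitch: {pitch:.3f} mm")  # line pitch: 0.120 mm
print("phi'/kappa' range (rad):", [f"{a:.3e}" for a in angles_rad])
```

With these values the pitch is 0.12 mm per line, and the upper bound of 25″ corresponds to roughly 1.21 × 10⁻⁴ rad.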

| Measured Surface | Scanning Speed (mm/s) | Screw Jitter (μm) | Max (mm) | Min (mm) | RMS (mm) |
|---|---|---|---|---|---|
| Flat surface | 60 | 23 | 0.237 | −0.581 | 0.072 |
| | 30 | 15 | 0.229 | −0.535 | 0.062 |
| Concave spherical surface | 60 | 23 | 0.469 | −0.348 | 0.073 |
| | 30 | 15 | 0.454 | −0.591 | 0.059 |
| Convex spherical surface | 60 | 23 | 0.564 | −0.761 | 0.076 |
| | 30 | 15 | 0.465 | −0.822 | 0.068 |
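The accuracy table lists Max, Min and RMS for each surface at two scanning speeds. Assuming Max and Min are the extreme signed deviations of the measured points from the reference surface (the extracted text does not define them), their difference gives a peak-to-valley (PV) spread. The Python sketch below computes PV per row and the RMS change between 60 and 30 mm/s. It performs arithmetic only and does not explain why the slower scans show lower RMS; `peak_to_valley` and `rms_by_speed` are hypothetical helper names.

```python
# Rows copied from the accuracy table above:
# (surface, scanning speed mm/s, screw jitter um, max mm, min mm, rms mm)
ROWS = [
    ("flat", 60, 23, 0.237, -0.581, 0.072),
    ("flat", 30, 15, 0.229, -0.535, 0.062),
    ("concave", 60, 23, 0.469, -0.348, 0.073),
    ("concave", 30, 15, 0.454, -0.591, 0.059),
    ("convex", 60, 23, 0.564, -0.761, 0.076),
    ("convex", 30, 15, 0.465, -0.822, 0.068),
]


def peak_to_valley(max_mm: float, min_mm: float) -> float:
    """Spread between the largest and smallest signed deviation (mm)."""
    return max_mm - min_mm


def rms_by_speed(rows):
    """Group RMS values as {surface: {speed: rms}} for side-by-side comparison."""
    table = {}
    for surface, speed, _jitter, _mx, _mn, rms in rows:
        table.setdefault(surface, {})[speed] = rms
    return table


for surface, speed, jitter, mx, mn, rms in ROWS:
    print(f"{surface:8s} {speed:3d} mm/s  jitter {jitter:2d} um  "
          f"PV {peak_to_valley(mx, mn):.3f} mm  RMS {rms:.3f} mm")

rms_table = rms_by_speed(ROWS)
for surface, by_speed in rms_table.items():
    change = by_speed[30] - by_speed[60]
    print(f"{surface}: RMS change 60 -> 30 mm/s = {change:+.3f} mm")
```

For every surface the RMS at 30 mm/s is lower than at 60 mm/s, while the PV spread does not follow a single trend.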

| Measured Surface | Scanning Speed (mm/s) | CPU Pixels | GPU Pixels | CPU Throughput (Kpixel/s) | GPU Throughput (Mpixel/s) |
|---|---|---|---|---|---|
| Flat surface | 60 | 1,706,452 | 1,702,784 | 15.513 | 19.649 |
| | 30 | 3,421,450 | 3,425,468 | 11.367 | 19.328 |
| Concave spherical surface | 60 | 1,743,740 | 1,749,575 | 12.728 | 17.691 |
| | 30 | 3,478,198 | 3,479,648 | 9.275 | 17.259 |
| Convex spherical surface | 60 | 1,784,801 | 1,781,576 | 15.520 | 19.537 |
| | 30 | 3,562,053 | 3,569,705 | 7.727 | 18.761 |
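The throughput table reports CPU rates in Kpixel/s and GPU rates in Mpixel/s, which makes the gap easy to misread. Assuming each rate equals the pixel count divided by the total processing time (the extracted text does not say how the rates were measured), dividing pixels by rate recovers an implied processing time, and the ratio of the two rates gives the GPU advantage. The Python sketch below does this; `processing_time_s` and `summarize` are hypothetical helper names.

```python
# Rows copied from the throughput table above:
# (surface, speed mm/s, CPU pixels, GPU pixels, CPU Kpixel/s, GPU Mpixel/s)
ROWS = [
    ("flat", 60, 1_706_452, 1_702_784, 15.513, 19.649),
    ("flat", 30, 3_421_450, 3_425_468, 11.367, 19.328),
    ("concave", 60, 1_743_740, 1_749_575, 12.728, 17.691),
    ("concave", 30, 3_478_198, 3_479_648, 9.275, 17.259),
    ("convex", 60, 1_784_801, 1_781_576, 15.520, 19.537),
    ("convex", 30, 3_562_053, 3_569_705, 7.727, 18.761),
]


def processing_time_s(pixels: int, rate_pixels_per_s: float) -> float:
    """Implied processing time, assuming rate = pixels / total time."""
    return pixels / rate_pixels_per_s


def summarize(rows):
    """Convert both rates to pixel/s, then derive times and the rate ratio."""
    out = []
    for surface, speed, cpu_px, gpu_px, cpu_kps, gpu_mps in rows:
        cpu_rate = cpu_kps * 1e3  # Kpixel/s -> pixel/s
        gpu_rate = gpu_mps * 1e6  # Mpixel/s -> pixel/s
        out.append({
            "surface": surface,
            "speed": speed,
            "cpu_time_s": processing_time_s(cpu_px, cpu_rate),
            "gpu_time_s": processing_time_s(gpu_px, gpu_rate),
            "throughput_ratio": gpu_rate / cpu_rate,
        })
    return out


for r in summarize(ROWS):
    print(f"{r['surface']:8s} {r['speed']:3d} mm/s  CPU {r['cpu_time_s']:7.1f} s  "
          f"GPU {r['gpu_time_s']:.3f} s  ratio {r['throughput_ratio']:.0f}x")
```

For the flat surface at 60 mm/s this gives about 110 s on the CPU against under 0.1 s on the GPU, a rate ratio of about 1,267.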

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Sun, B.; Zhu, J.; Yang, L.; Yang, S.; Guo, Y. Sensor for In-Motion Continuous 3D Shape Measurement Based on Dual Line-Scan Cameras. Sensors 2016, 16, 1949. https://doi.org/10.3390/s16111949