Human Age Estimation Method Robust to Camera Sensor and/or Face Movement

Abstract

1. Introduction

| Category | Method | Strength | Weakness |
|---|---|---|---|
| Age estimation not considering motion blur effect | AAM-based [3,4,5] or non-AAM-based [7,8,9] methods; methods based on local [10,11], global [12,13], or hybrid [14] features; age group classification [10,12]; single-level age estimation [5]; hierarchical age estimation [3,5,15]; deep CNN-based methods [16,17,18,19] | Produce good estimation results with clear, good-quality input images | Estimation accuracy degrades significantly with motion-blurred images |
| Age estimation considering motion blur effect (proposed method) | Motion blur parameters are estimated using a modified Radon transform with ρ range-based summation and a fitting method; the input facial image is pre-classified into one of several motion blur groups based on the estimated parameters; an appropriate age estimator is then applied to each group of motion-blurred images | Robust to motion blurring of the image | Requires an additional procedure to estimate the motion blur parameters of each image |

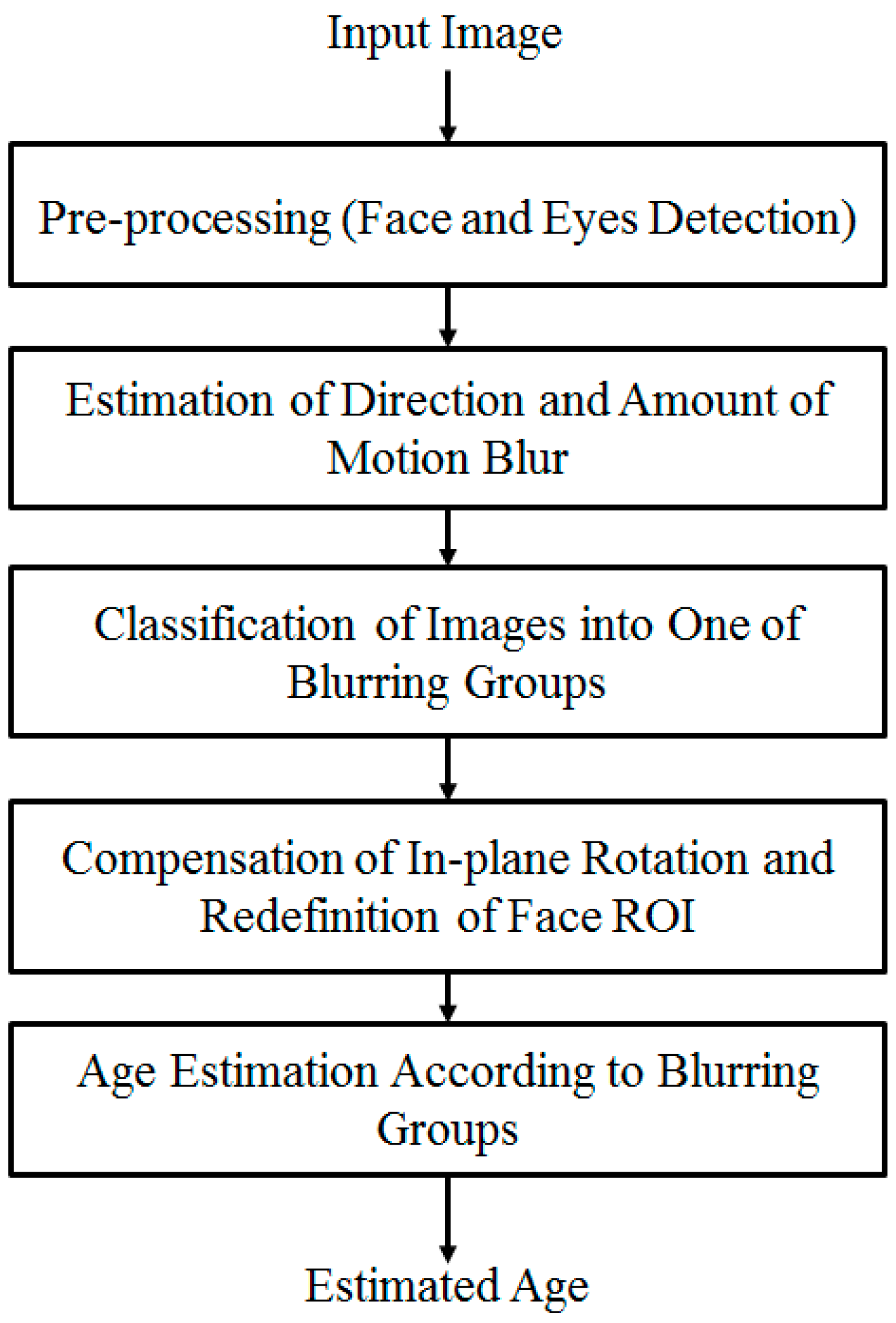

2. Proposed Method for Human Age Estimation Robust to Motion Blurring Effects

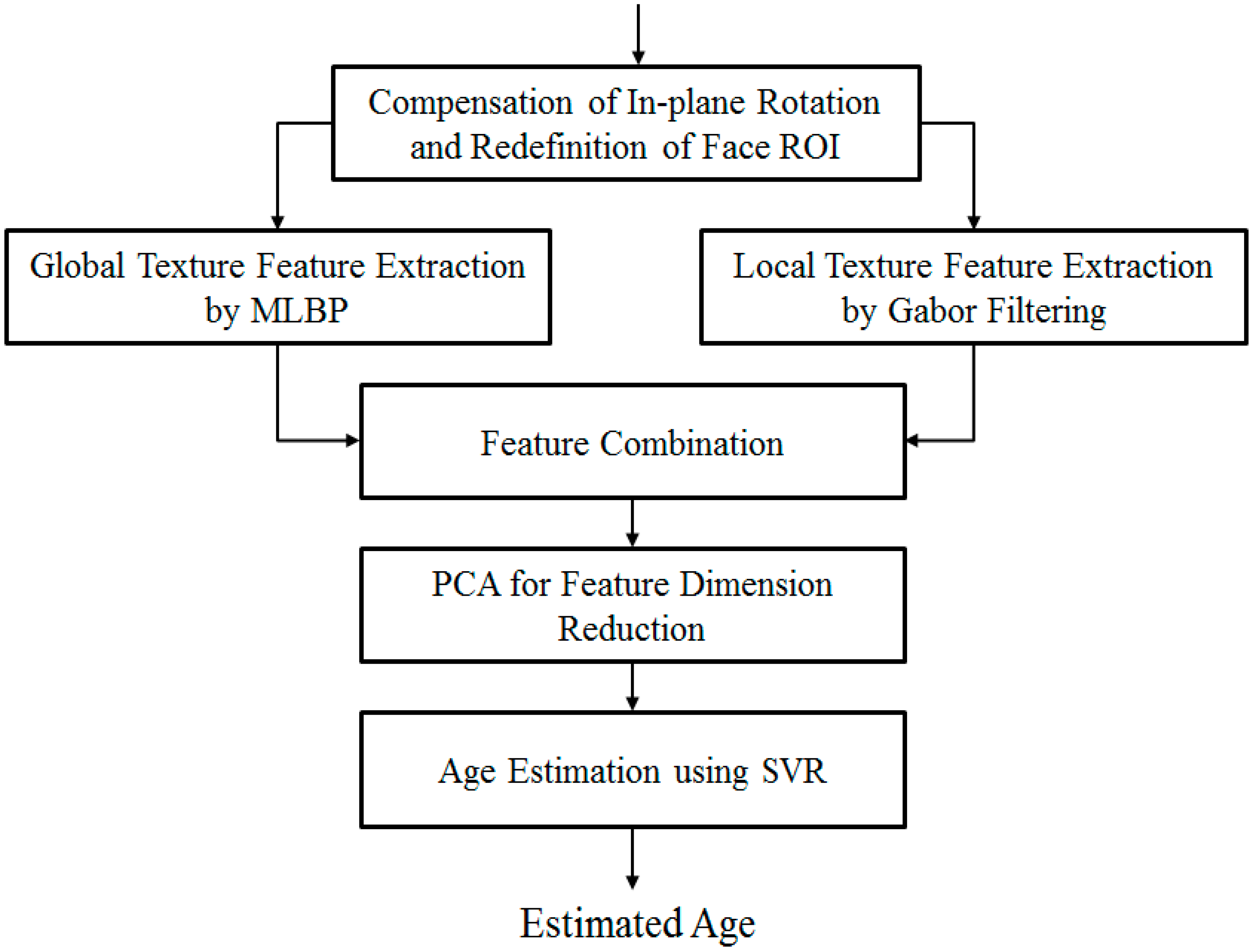

2.1. Proposed Method Overview

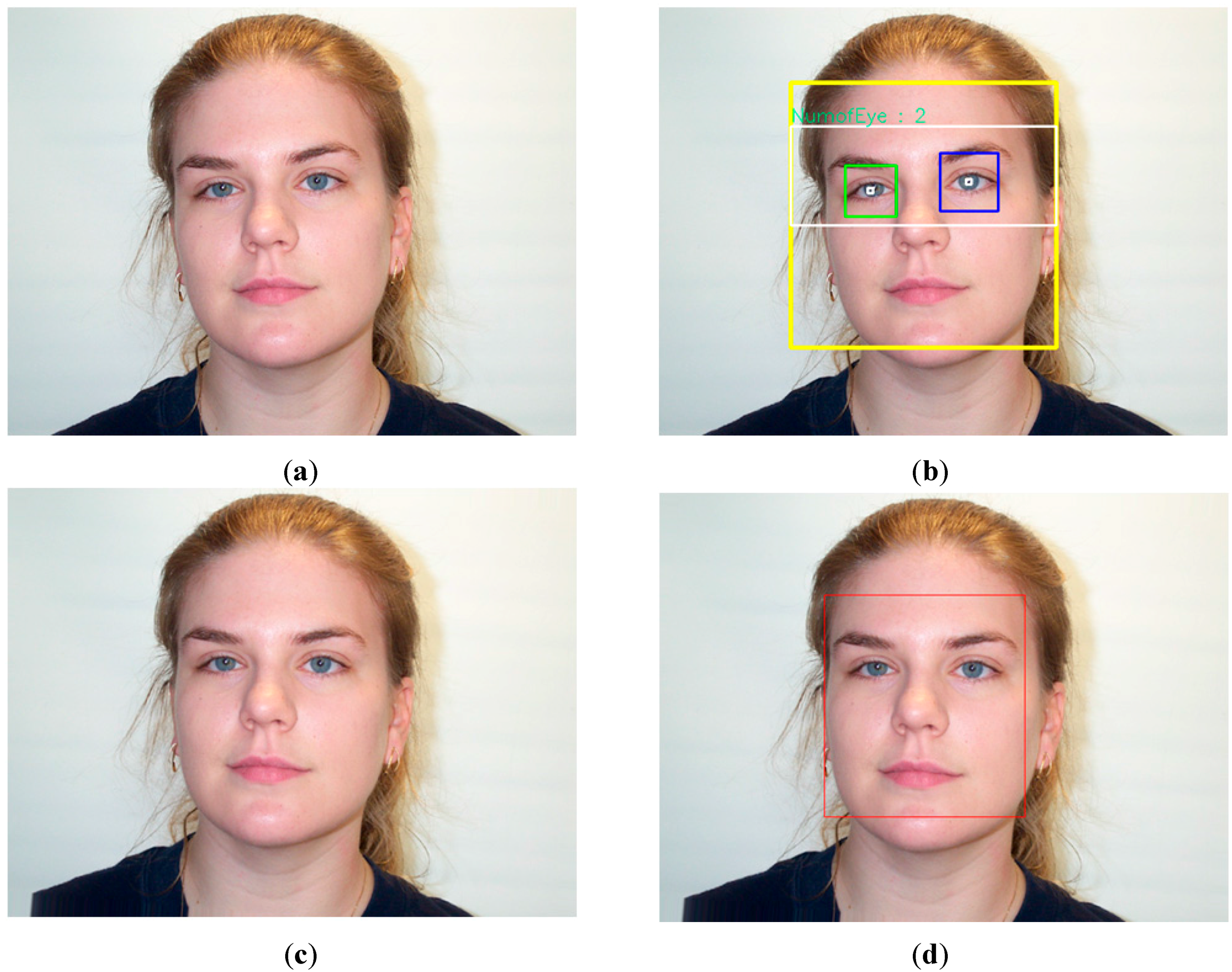

2.2. Pre-Processing Steps for Human Face Detection and in-Plane Rotation Compensation

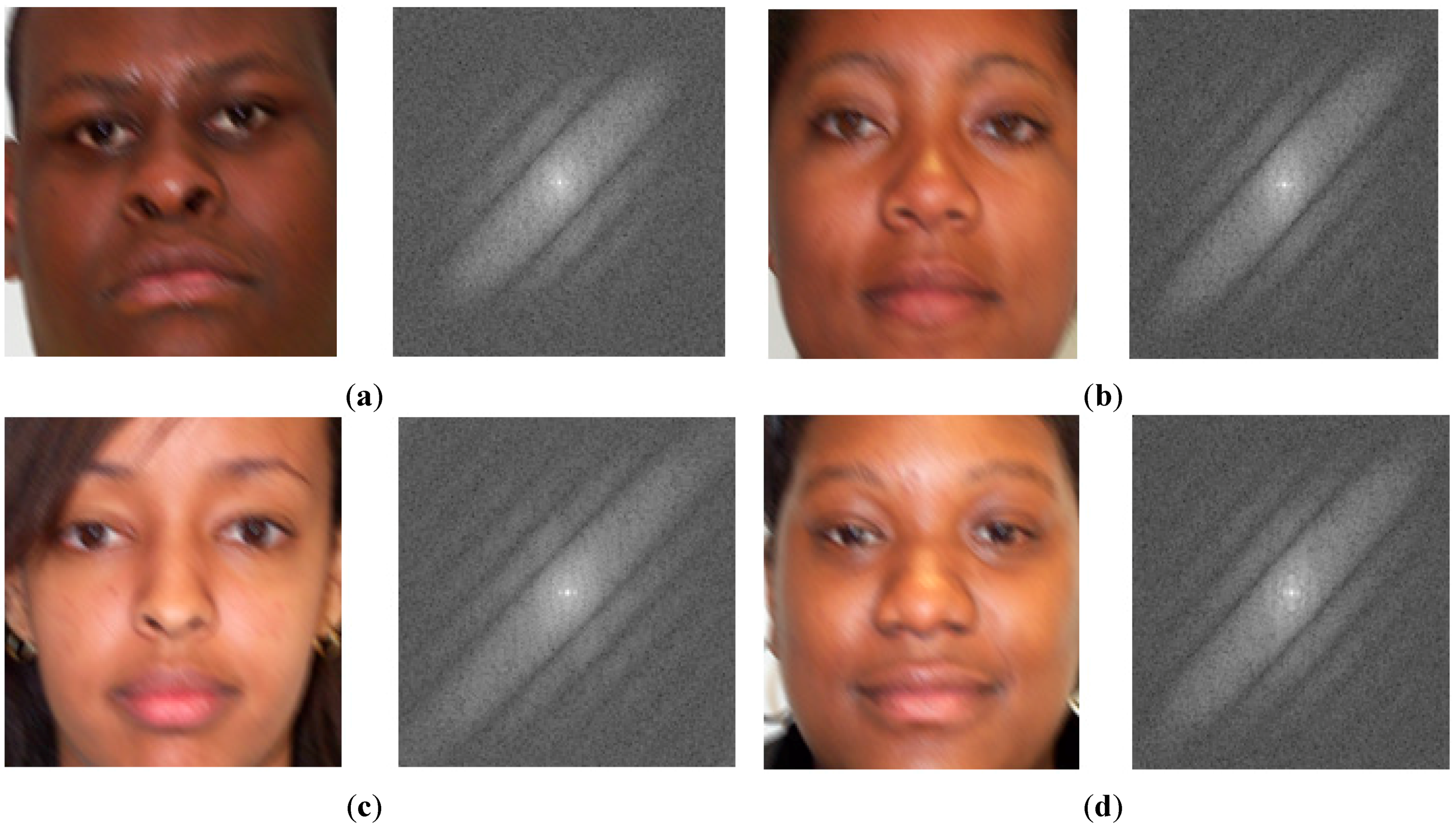

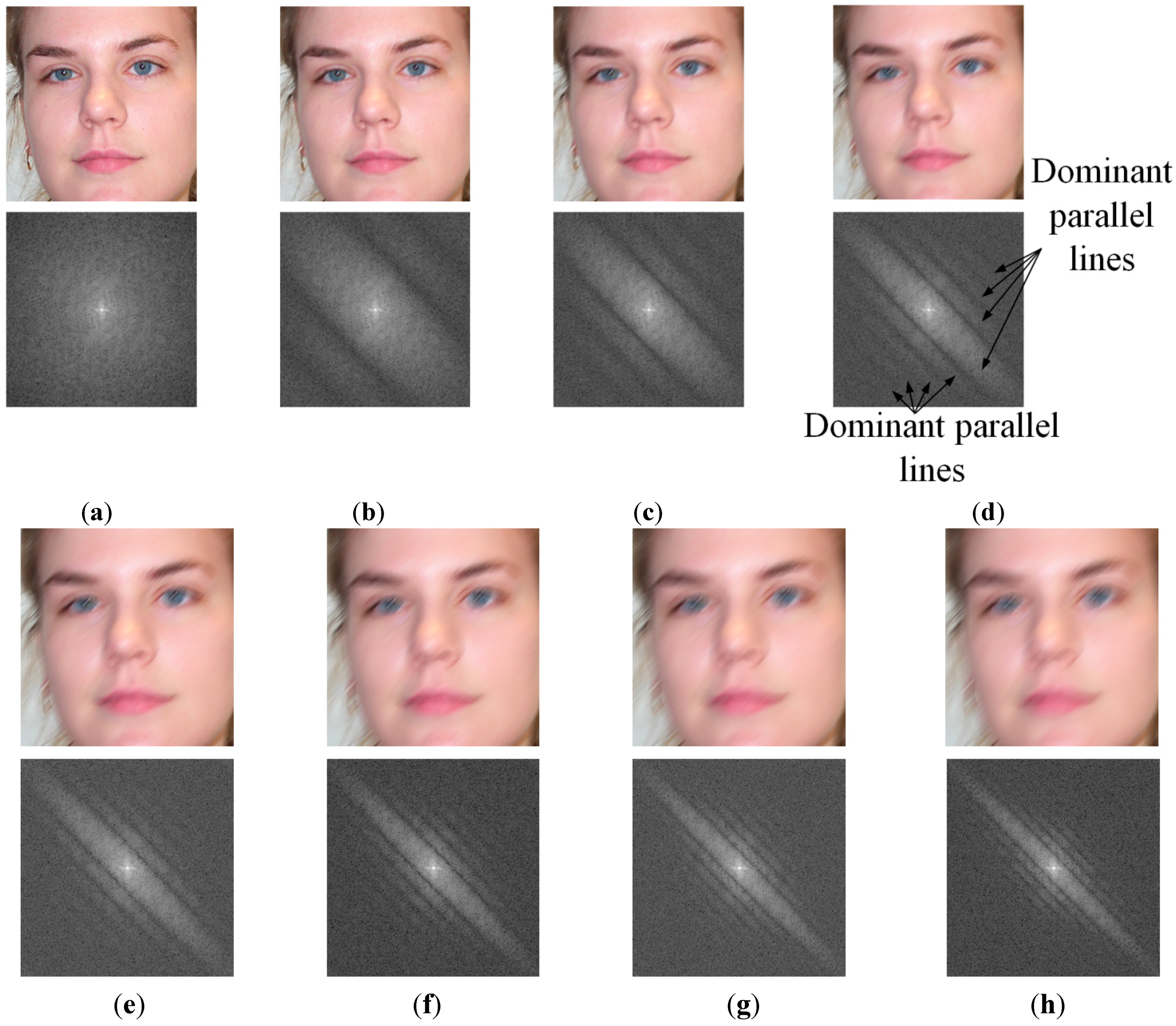

2.3. Proposed Method for Estimating Motion Blur Parameters

2.3.1. Motion Blur Modeling and Its Point-Spread Function

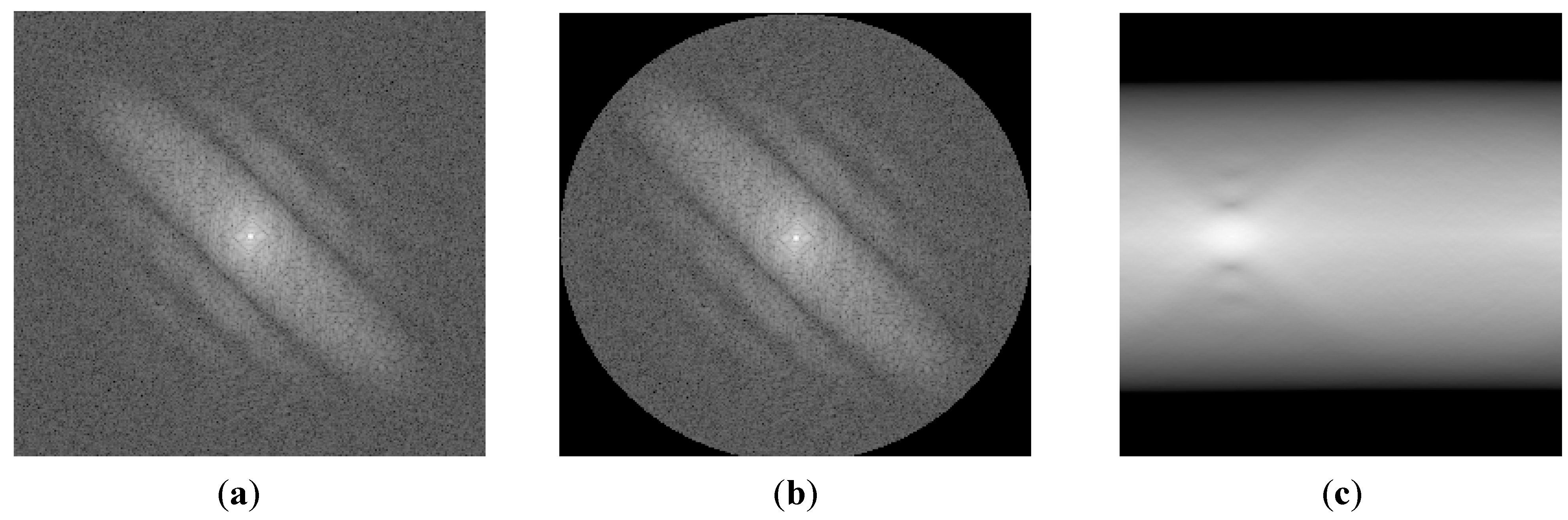
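A uniform linear-motion point-spread function (PSF) spreads a weight of 1/L evenly along a centred segment of length L at angle θ and is zero elsewhere. The Python sketch below builds such a kernel under stated assumptions: odd lengths (as in the experiments, LEN = 1, 3, ..., 15), angles measured counter-clockwise with the y axis pointing up, and a deliberately simple nearest-pixel rasterisation. The function name `motion_psf` is hypothetical and this is not the authors' implementation.

```python
import math


def motion_psf(length, theta_deg):
    """Uniform linear-motion PSF: equal weights along a centred segment.

    Assumes an odd `length` (as in the experiments). Angles are measured
    counter-clockwise with y pointing up. A pixel is kept when its centre lies
    within half a pixel of the motion line and within (length - 1) / 2 of the
    origin along it; the kernel is then normalised to sum to 1.
    """
    half = (length - 1) / 2
    size = 2 * math.ceil(half) + 1  # odd size, so the kernel has a centre pixel
    c = size // 2
    t = math.radians(theta_deg)
    cos_t, sin_t = math.cos(t), math.sin(t)
    kernel = [[0.0] * size for _ in range(size)]
    for row in range(size):
        for col in range(size):
            x, y = col - c, c - row          # row index grows downward, y grows upward
            along = x * cos_t + y * sin_t     # signed position along the motion direction
            across = -x * sin_t + y * cos_t   # signed distance from the motion line
            if abs(along) <= half + 1e-9 and abs(across) <= 0.5 + 1e-9:
                kernel[row][col] = 1.0
    total = sum(map(sum, kernel))
    return [[v / total for v in r] for r in kernel]


for row in motion_psf(5, 0):
    print(row)
```

Convolving an image with this kernel produces a synthetic linear blur. Because of the nearest-pixel rule, a diagonal kernel covers fewer pixels than its nominal length (a 5-pixel blur at 45° keeps only three diagonal pixels); anti-aliased rasterisations spread the weight over neighbouring pixels instead.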

2.3.2. Motion Direction Estimation Based on Modified Radon Transform with ρ Range-Based Summation

Algorithm 1: Motion Direction Estimation Using the Modified Radon Transform with ρ Range-Based Summation

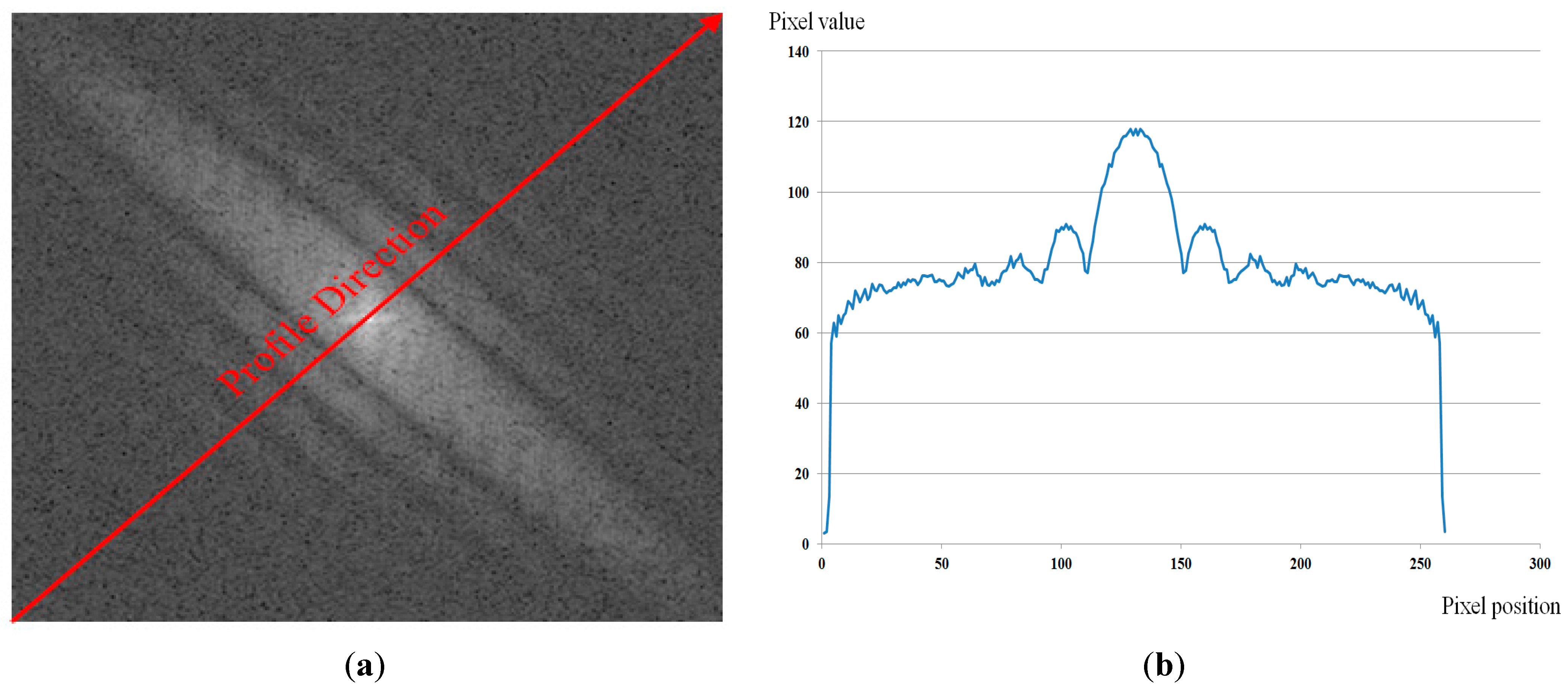
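Radon-transform approaches to blur-direction estimation rely on the fact that the log-magnitude spectrum of a linearly blurred image shows parallel dark stripes perpendicular to the motion direction, so projecting the spectrum along candidate angles and picking the sharpest projection reveals that orientation. The sketch below shows only the generic projection-and-argmax step in plain Python. It is applied to a synthetic line image rather than a real spectrum so that it runs without NumPy, it splits each pixel linearly between the two nearest ρ bins to avoid ties between neighbouring angles, and it does not reproduce the authors' ρ range-based summation or fitting step. The function names are hypothetical.

```python
import math
from collections import defaultdict


def radon_projection(img, theta_deg):
    """Project a 2-D list onto the normal direction theta (discrete Radon transform).

    Each non-zero pixel at (x, y), measured from the image centre with y pointing
    up, is accumulated at rho = x*cos(theta) + y*sin(theta), split linearly
    between the two nearest integer rho bins.
    """
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    t = math.radians(theta_deg)
    cos_t, sin_t = math.cos(t), math.sin(t)
    bins = defaultdict(float)
    for row in range(h):
        for col in range(w):
            v = img[row][col]
            if v:
                rho = (col - cx) * cos_t + (cy - row) * sin_t
                lo = math.floor(rho)
                frac = rho - lo
                bins[lo] += v * (1.0 - frac)
                bins[lo + 1] += v * frac
    return bins


def dominant_line_angle(img, step_deg=1):
    """Orientation in [0, 180) degrees of the strongest straight line in img."""
    best_theta, best_peak = 0, -1.0
    for theta in range(0, 180, step_deg):
        peak = max(radon_projection(img, theta).values(), default=0.0)
        if peak > best_peak:
            best_theta, best_peak = theta, peak
    # theta is the direction of the line's normal; the line itself runs 90 degrees away.
    return (best_theta + 90) % 180


line = [[1 if r + c == 10 else 0 for c in range(11)] for r in range(11)]
print(dominant_line_angle(line))  # 45
```

On a real spectrum the returned orientation is that of the stripes, so the motion direction would be the perpendicular angle, `(stripe_angle + 90) % 180`.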

2.3.3. Estimation of Motion Blur Amount (Motion Length)

Algorithm 2: Estimation of the Amount (Length) of Motion Blur

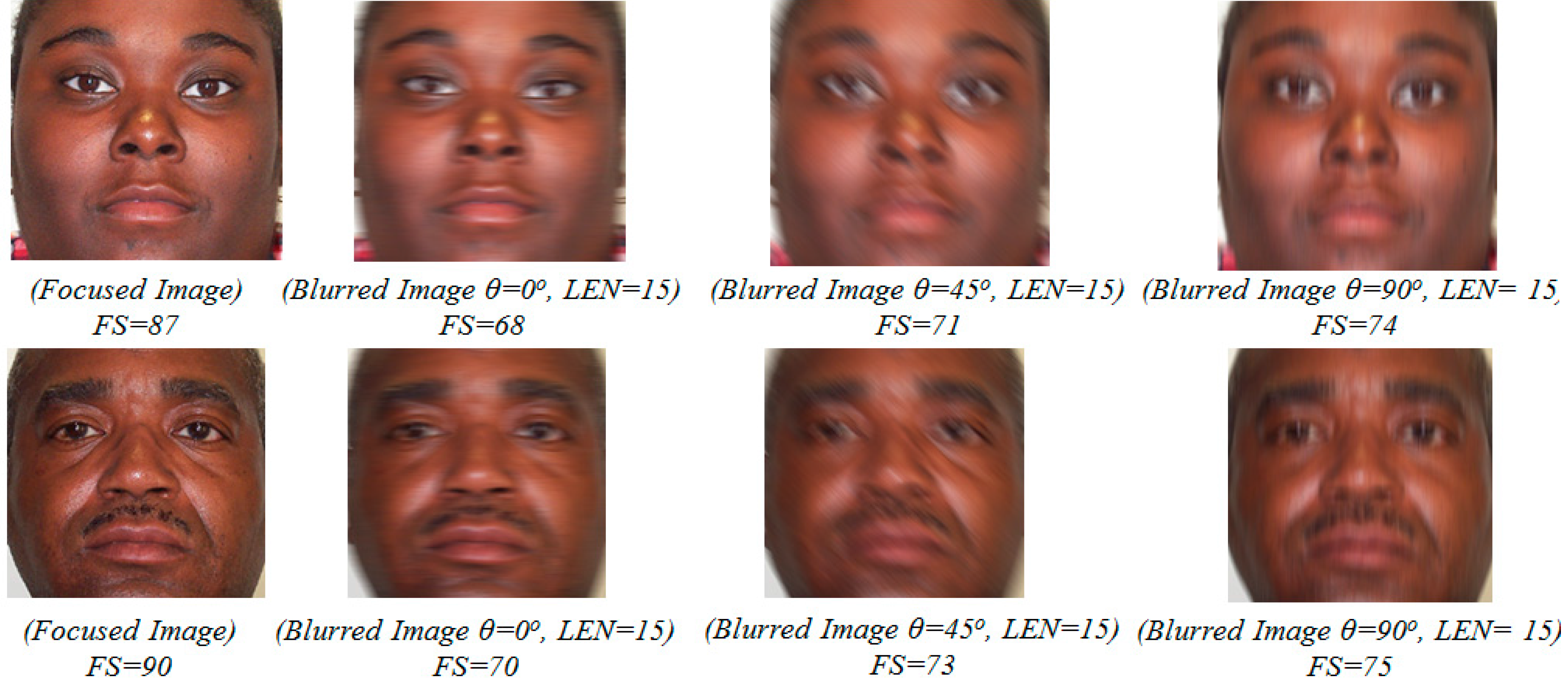

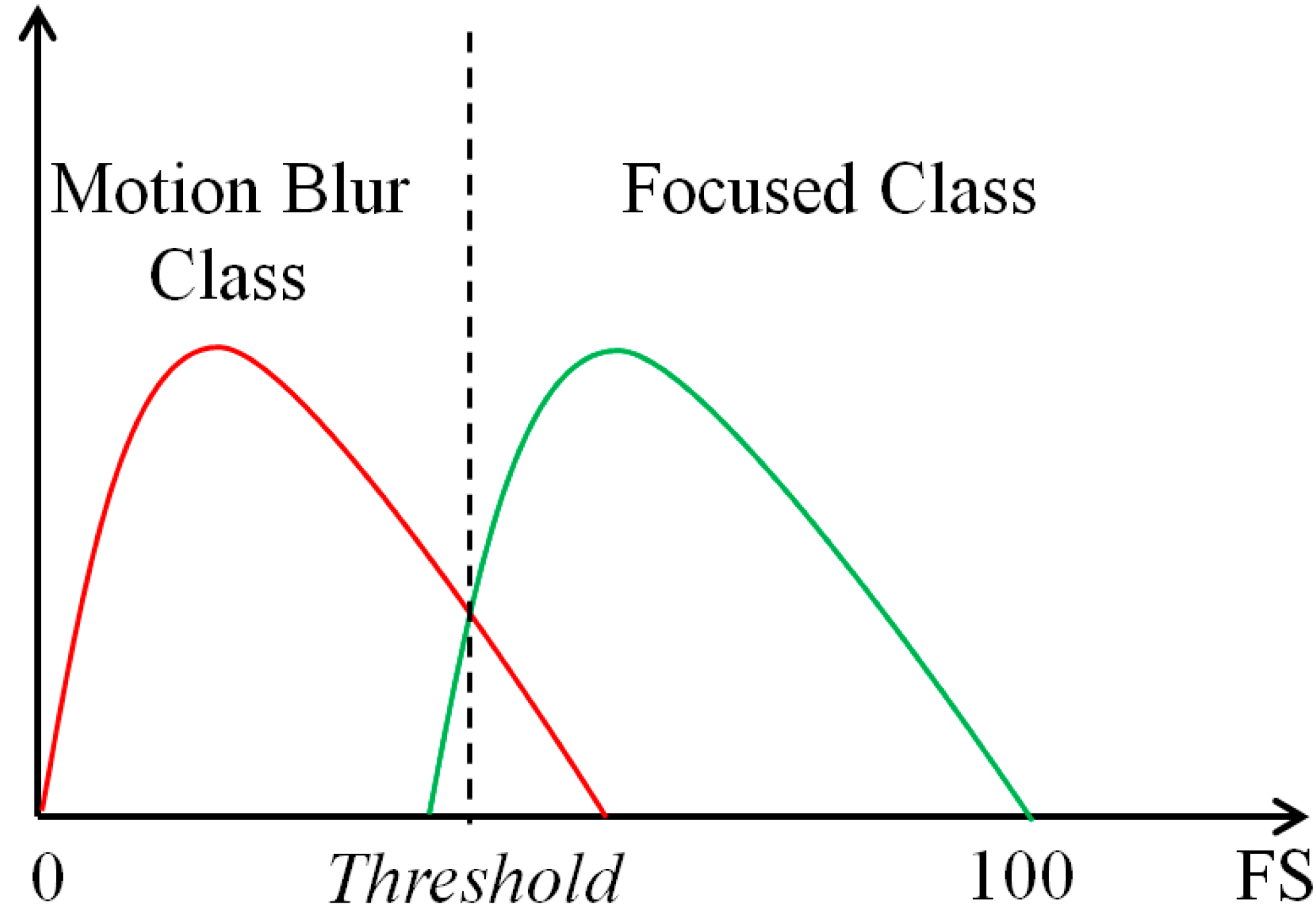
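A standard relation for estimating blur length is that the spectrum of a uniform linear PSF of length L, sampled on an N-point grid along the motion direction, has its first zero near frequency index N/L, so L ≈ N/k₀, where k₀ is the position of the first dark stripe. The Python sketch below demonstrates this relation on a 1-D box kernel using a plain DFT. It is a hypothetical illustration of that relation, not the authors' Algorithm 2; on real images, noise turns the zeros into local minima that must be located along the estimated direction.

```python
import cmath
import math


def box_psf_spectrum(length, n):
    """|DFT| (normalised to 1 at DC) of a 1-D box PSF of `length` taps on an n-point grid."""
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(cmath.exp(-2j * math.pi * k * t / n) for t in range(length))
        mags.append(abs(acc) / length)
    return mags


def length_from_first_zero(mags, n):
    """Estimate blur length as n / k0, where k0 is the first local minimum of |H|.

    Assumes length >= 2; a length-1 PSF has a flat spectrum with no minimum.
    """
    for k in range(1, len(mags) - 1):
        if mags[k] < mags[k - 1] and mags[k] <= mags[k + 1]:
            return round(n / k)
    raise ValueError("no spectral minimum found")


for L in (3, 5, 7, 15):
    print(L, length_from_first_zero(box_psf_spectrum(L, 60), 60))
```

For L = 7, 60/7 is not an integer; the sampled minimum falls at k₀ = 9, and 60/9 ≈ 6.7 rounds back to 7.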

2.3.4. Proposed Focus Score Measurement for Classification of Focused and Motion-Blurred Images
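The focus score defined for this step is not recoverable from this text. As a stand-in, the sketch below uses a different, widely used sharpness measure, the variance of the Laplacian, to illustrate the general idea: motion blur suppresses high-frequency energy, so a blurred copy of an image scores lower than the original, and a threshold on such a score can separate focused from motion-blurred inputs. The function names, the 4-neighbour Laplacian, and the synthetic checkerboard are assumptions for illustration.

```python
def laplacian_variance(img):
    """Variance of the 4-neighbour Laplacian over the image interior (a sharpness score)."""
    h, w = len(img), len(img[0])
    vals = [
        img[r - 1][c] + img[r + 1][c] + img[r][c - 1] + img[r][c + 1] - 4 * img[r][c]
        for r in range(1, h - 1)
        for c in range(1, w - 1)
    ]
    mean = sum(vals) / len(vals)
    return sum((v - mean) ** 2 for v in vals) / len(vals)


def horizontal_box_blur(img, length):
    """Simulate horizontal motion blur with an odd-length box filter, clamping at the borders."""
    h, w = len(img), len(img[0])
    half = length // 2
    return [
        [
            sum(img[r][min(max(c + d, 0), w - 1)] for d in range(-half, half + 1)) / (2 * half + 1)
            for c in range(w)
        ]
        for r in range(h)
    ]


board = [[255.0 if (r // 2 + c // 2) % 2 else 0.0 for c in range(16)] for r in range(16)]
print(laplacian_variance(board) > laplacian_variance(horizontal_box_blur(board, 5)))  # True
```

Any decision threshold on a score like this would have to be tuned on training images.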

2.4. Human Age Estimation Based on MLBP, Gabor Filtering, PCA, and SVR

2.4.1. Global Age Feature Extraction by MLBP Method

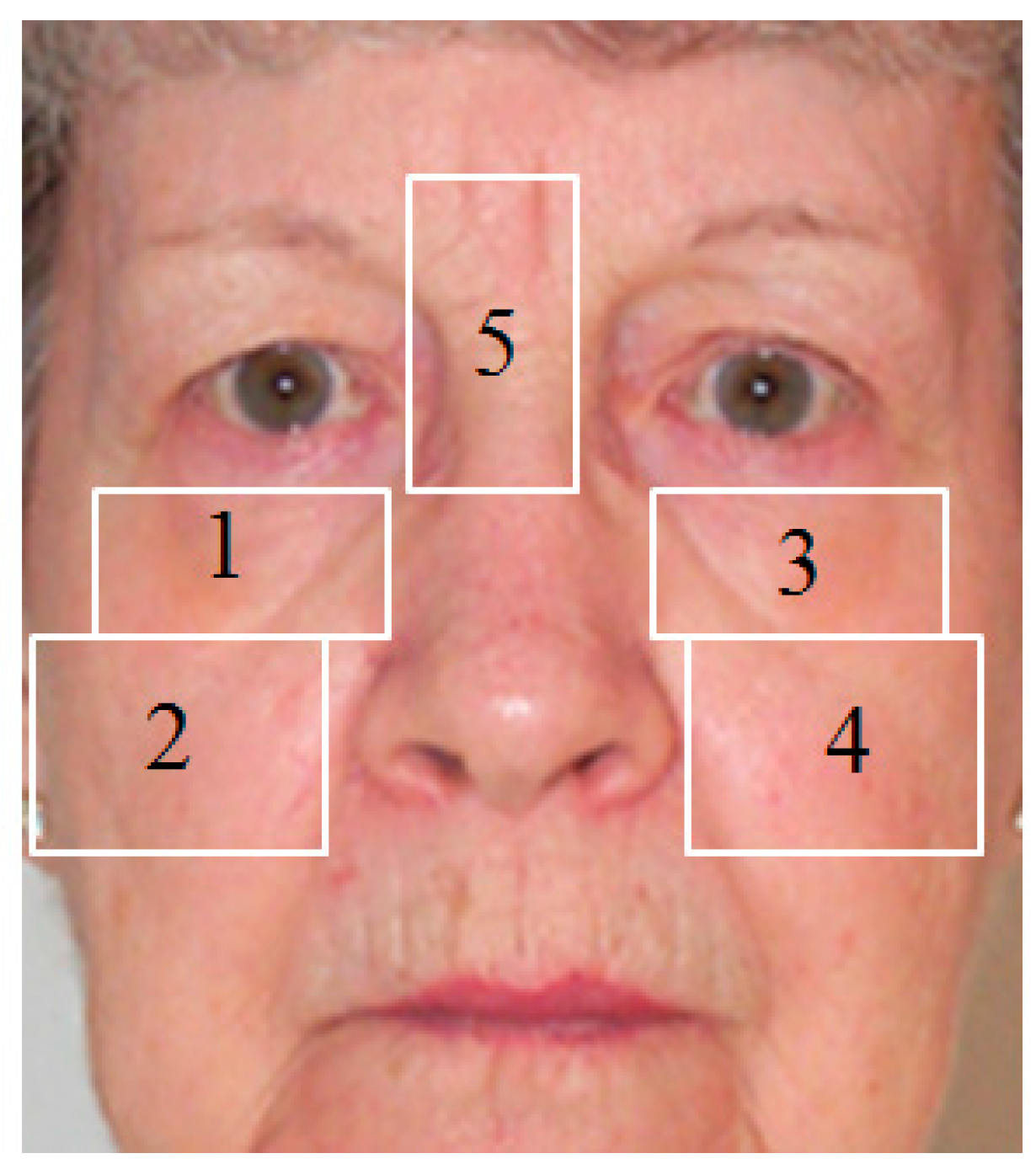
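Local binary patterns (LBP) encode each pixel by thresholding its eight neighbours against it; a multi-level variant concatenates LBP histograms computed over progressively finer grids of the face, so the descriptor captures both global and regional texture. The Python sketch below assumes the basic 8-neighbour, radius-1 operator with 256 bins per block and grid levels of 1×1, 2×2 and 4×4 blocks. The paper's exact MLBP configuration (for example, uniform patterns or its block layout) may differ, and the function names are hypothetical.

```python
NEIGHBOUR_OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(img, r, c):
    """8-bit LBP code of pixel (r, c): bit i is set when neighbour i >= the centre."""
    center = img[r][c]
    code = 0
    for bit, (dr, dc) in enumerate(NEIGHBOUR_OFFSETS):
        if img[r + dr][c + dc] >= center:
            code |= 1 << bit
    return code


def multilevel_lbp_histogram(img, levels=(1, 2, 4)):
    """Concatenate normalised 256-bin LBP histograms over g x g grids for each g in levels.

    Assumes the image interior has at least g rows and columns for the finest level,
    so that every block is non-empty.
    """
    h, w = len(img), len(img[0])
    codes = [[lbp_code(img, r, c) for c in range(1, w - 1)] for r in range(1, h - 1)]
    ch, cw = len(codes), len(codes[0])
    feature = []
    for g in levels:
        row_edges = [i * ch // g for i in range(g + 1)]
        col_edges = [j * cw // g for j in range(g + 1)]
        for i in range(g):
            for j in range(g):
                hist = [0] * 256
                for r in range(row_edges[i], row_edges[i + 1]):
                    for c in range(col_edges[j], col_edges[j + 1]):
                        hist[codes[r][c]] += 1
                count = sum(hist)
                feature.extend(v / count for v in hist)
    return feature


print(lbp_code([[5, 9, 1], [4, 6, 7], [2, 3, 8]], 1, 1))  # 26
```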

2.4.2. Local Age Feature Extraction by Gabor Filtering
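Gabor filters respond to texture at a chosen orientation and scale, which is why they are a common choice for local aging cues such as wrinkles. The following sketch builds the real part of a standard Gabor kernel, a Gaussian envelope multiplied by a cosine carrier along direction θ, and measures its response to a patch. The filter bank actually used here (number of orientations and scales, kernel size, and which face regions are filtered) is not recoverable from this text, so the parameter values below are assumptions.

```python
import math


def gabor_kernel(size, theta_deg, wavelength, sigma, gamma=0.5, psi=0.0):
    """Real part of a Gabor kernel: Gaussian envelope times a cosine carrier along theta."""
    half = size // 2
    t = math.radians(theta_deg)
    kernel = []
    for row in range(size):
        y = row - half
        line = []
        for col in range(size):
            x = col - half
            xr = x * math.cos(t) + y * math.sin(t)    # coordinate along the carrier
            yr = -x * math.sin(t) + y * math.cos(t)   # coordinate across the carrier
            envelope = math.exp(-(xr ** 2 + (gamma * yr) ** 2) / (2 * sigma ** 2))
            line.append(envelope * math.cos(2 * math.pi * xr / wavelength + psi))
        kernel.append(line)
    return kernel


def response(kernel, patch):
    """Filter response at the patch centre: sum of element-wise products."""
    return sum(k * p for krow, prow in zip(kernel, patch) for k, p in zip(krow, prow))


# Vertical stripes (intensity varies along x) excite the 0-degree filter far more than the 90-degree one.
stripes = [[math.cos(2 * math.pi * (c - 4) / 4.0) for c in range(9)] for _ in range(9)]
r0 = response(gabor_kernel(9, 0, wavelength=4.0, sigma=2.0), stripes)
r90 = response(gabor_kernel(9, 90, wavelength=4.0, sigma=2.0), stripes)
print(round(r0, 1), round(r90, 3))
```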

2.4.3. Age Estimation by SVR
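For reference, support vector regression in its standard ε-insensitive form (the formulation solved by ε-SVR in LIBSVM, which the reference list includes) fits the age y_i of each training feature vector x_i by solving the problem below. Here x_i would presumably be the PCA-reduced combination of MLBP and Gabor features, and φ, K, C and ε are the usual feature map, kernel, penalty weight and tube width; the kernel and the values of C and ε used by the authors are not recoverable from this text.

$$
\min_{\mathbf{w},\,b,\,\boldsymbol{\xi},\,\boldsymbol{\xi}^{*}}\ \frac{1}{2}\lVert \mathbf{w} \rVert^{2} + C \sum_{i=1}^{N} \left( \xi_i + \xi_i^{*} \right)
\quad \text{s.t.} \quad
\begin{cases}
y_i - \mathbf{w}^{\top}\phi(\mathbf{x}_i) - b \le \varepsilon + \xi_i, \\
\mathbf{w}^{\top}\phi(\mathbf{x}_i) + b - y_i \le \varepsilon + \xi_i^{*}, \\
\xi_i,\ \xi_i^{*} \ge 0,
\end{cases}
$$

After solving the dual, the age estimate for a new feature vector takes the kernel-expansion form

$$
\hat{y}(\mathbf{x}) = \sum_{i=1}^{N} \left( \alpha_i - \alpha_i^{*} \right) K(\mathbf{x}_i, \mathbf{x}) + b,
$$

where the dual coefficients α_i and α_i* are non-zero only for support vectors, that is, training samples lying on or outside the ε-tube.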

3. Experimental Results

3.1. Description of Database and Performance Measurement

| Database | Number of Learning Images | Number of Testing Images | Total |
|---|---|---|---|
| Database Part 1 | 291 | 289 | 580 |
| Database Part 2 | 291 | 289 | 580 |

| Number of Images | Focused (Motion Length 1, 3) | Slightly Blurred (Motion Length 5, 7, 9), 0° | Slightly Blurred (Motion Length 5, 7, 9), 45° | Slightly Blurred (Motion Length 5, 7, 9), 90° | Slightly Blurred (Motion Length 5, 7, 9), 135° | Blurred (Motion Length 11, 13, 15), 0° | Blurred (Motion Length 11, 13, 15), 45° | Blurred (Motion Length 11, 13, 15), 90° | Blurred (Motion Length 11, 13, 15), 135° | Total Number of Images |
|---|---|---|---|---|---|---|---|---|---|---|
| Learning Database | 1455 | 873 | 873 | 873 | 873 | 873 | 873 | 873 | 873 | 8439 |
| Testing Database | 1445 | 867 | 867 | 867 | 867 | 867 | 867 | 867 | 867 | 8381 |

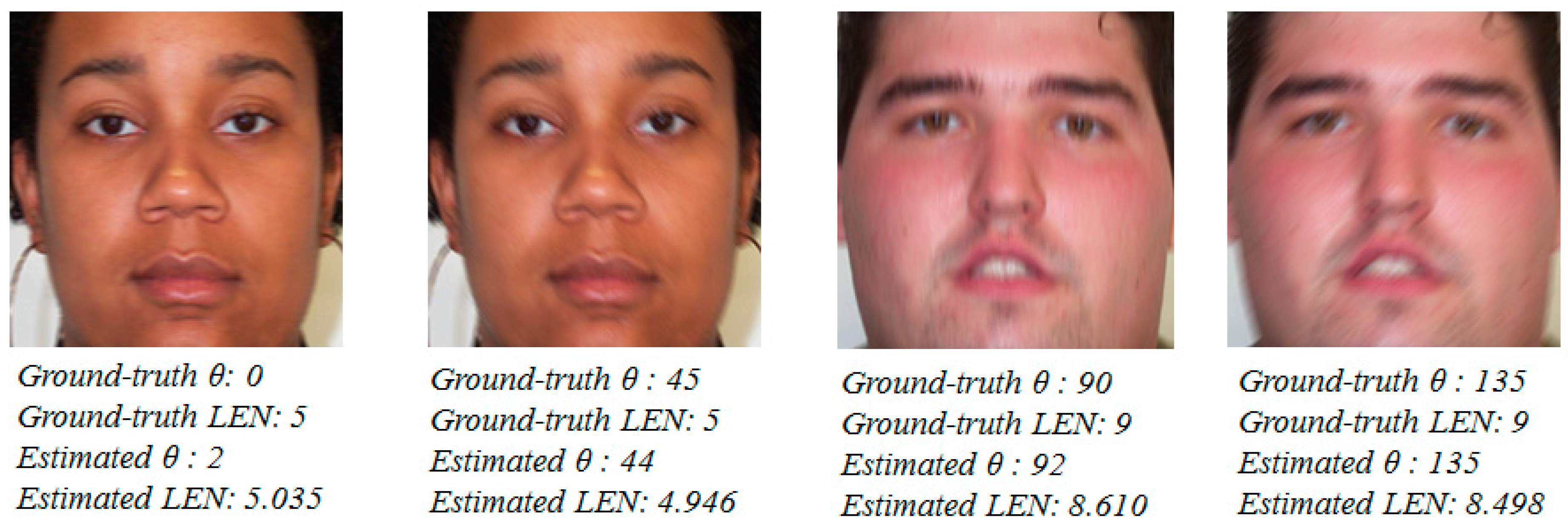
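The image counts in the table above follow from a simple generation rule, inferred here from the numbers rather than stated in the text: each original face yields one unblurred copy (LEN = 1) plus one copy per direction (0°, 45°, 90°, 135°) for every other length LEN = 3, 5, ..., 15, that is, 1 + 7 × 4 = 29 images. With 291 learning and 289 testing originals this gives 8439 and 8381 images, and the same rule reproduces the MORPH counts (for example, 303 × 29 = 8787). A Python check, with a hypothetical function name:

```python
DIRECTIONS = (0, 45, 90, 135)


def synthetic_counts(n_originals):
    """Image counts per group for n original faces, under the inferred generation rule."""
    counts = {"focused": n_originals * (1 + len(DIRECTIONS))}  # LEN = 1 once, LEN = 3 per direction
    for group, lengths in (("slightly_blurred", (5, 7, 9)), ("blurred", (11, 13, 15))):
        for d in DIRECTIONS:
            counts[(group, d)] = n_originals * len(lengths)
    counts["total"] = sum(counts.values())
    return counts


print(synthetic_counts(291)["total"], synthetic_counts(289)["total"])  # 8439 8381
```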

3.2. Performance Evaluation of the Proposed Motion Blur Parameters Estimation

| Number of Images (Testing Database 1/Testing Database 2) | Focused Class (Images without Motion Blur) | Blurred Class (Images with Motion Blur) |
|---|---|---|
| Focused Class (Images without Motion Blur) | 288 (99.654%)/286 (98.962%) | 1 (0.346%)/3 (1.038%) |
| Blurred Class (Images with Motion Blur) | 14 (0.173%)/14 (0.173%) | 8069 (99.827%)/8069 (99.827%) |

| Average Error of Direction Estimation | Average Error of Amount of Motion Blur (Motion Length) |
|---|---|
| 0.709° | 0.309 |
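When averaging direction errors, note that a linear blur is undirected: a kernel at θ and one at θ + 180° are identical, so an estimate of 179° for a true 1° blur is off by 2°, not 178°. The Python sketch below computes such an axial error; whether the authors wrapped angles this way is an assumption, and the helper names are hypothetical. The length error needs no wrapping and is a plain absolute difference.

```python
def axial_angle_error(estimated_deg, true_deg):
    """Absolute error between two blur directions, treating theta and theta + 180 as equal."""
    d = (estimated_deg - true_deg) % 180.0
    return min(d, 180.0 - d)


def mean_direction_error(pairs):
    """Average axial error over (estimated, true) direction pairs."""
    return sum(axial_angle_error(e, t) for e, t in pairs) / len(pairs)


print(axial_angle_error(179.0, 1.0))                      # 2.0
print(mean_direction_error([(179.0, 1.0), (0.5, 0.0)]))   # 1.25
```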

3.3. Performance Evaluation of the Proposed Age Estimation Method

| MAE | Original PAL Database | Focused (LEN = 1, 3) | Slightly Blurred (LEN = 5, 7, 9), 0° | Slightly Blurred (LEN = 5, 7, 9), 45° | Slightly Blurred (LEN = 5, 7, 9), 90° | Slightly Blurred (LEN = 5, 7, 9), 135° | Blurred (LEN = 11, 13, 15), 0° | Blurred (LEN = 11, 13, 15), 45° | Blurred (LEN = 11, 13, 15), 90° | Blurred (LEN = 11, 13, 15), 135° | Average MAE |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Testing Database 1 | 5.89 | 6.45 | 8.90 | 9.89 | 8.22 | 10.67 | 12.58 | 13.14 | 10.90 | 14.11 | 10.26 |
| Testing Database 2 | 6.15 | 6.40 | 8.17 | 9.07 | 7.36 | 9.79 | 11.25 | 11.87 | 9.90 | 12.95 | 9.42 |
| Average MAE of Entire Database | 6.02 | | | | | | | | | | 9.87 |
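MAE is the mean of |estimated age − true age| over the test images. For the baseline PAL rows, the Average MAE column above is reproduced by pooling all test images, i.e. weighting each column's MAE by its number of images (1445 focused and 867 per blurred column in each testing database), rather than by a plain mean of the nine columns. A short Python check, with hypothetical helper names:

```python
def mae(predicted, actual):
    """Mean absolute error between predicted and true ages."""
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


def pooled_mae(group_maes, group_sizes):
    """MAE over the union of groups, given each group's MAE and image count."""
    return sum(m * n for m, n in zip(group_maes, group_sizes)) / sum(group_sizes)


# Baseline PAL results: focused column, then the eight blurred columns.
sizes = [1445] + [867] * 8
tdb1 = [6.45, 8.90, 9.89, 8.22, 10.67, 12.58, 13.14, 10.90, 14.11]
tdb2 = [6.40, 8.17, 9.07, 7.36, 9.79, 11.25, 11.87, 9.90, 12.95]

print(round(pooled_mae(tdb1, sizes), 2))  # 10.26, matching the table
print(round(pooled_mae(tdb2, sizes), 2))  # 9.42, matching the table
print(round(sum(tdb1) / len(tdb1), 2))    # 10.54 with an unweighted mean
```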

| Classification Rate (%) (Testing Database 1/Testing Database 2) | Focused (LEN = 1, 3) | Slightly Blurred (LEN = 5, 7, 9), 0° | Slightly Blurred (LEN = 5, 7, 9), 45° | Slightly Blurred (LEN = 5, 7, 9), 90° | Slightly Blurred (LEN = 5, 7, 9), 135° | Blurred (LEN = 11, 13, 15), 0° | Blurred (LEN = 11, 13, 15), 45° | Blurred (LEN = 11, 13, 15), 90° | Blurred (LEN = 11, 13, 15), 135° |
|---|---|---|---|---|---|---|---|---|---|
| Focused (LEN = 1, 3) | 100/100 | 0/0 | 0/0 | 0/0 | 0/0 | 0/0 | 0/0 | 0/0 | 0/0 |
| Slightly Blurred (LEN = 5, 7, 9), 0° | 0/0 | 100/100 | 0/0 | 0/0 | 0/0 | 0/0 | 0/0 | 0/0 | 0/0 |
| Slightly Blurred (LEN = 5, 7, 9), 45° | 0/0 | 0/0 | 100/100 | 0/0 | 0/0 | 0/0 | 0/0 | 0/0 | 0/0 |
| Slightly Blurred (LEN = 5, 7, 9), 90° | 0/0 | 0/0 | 0/0 | 100/100 | 0/0 | 0/0 | 0/0 | 0/0 | 0/0 |
| Slightly Blurred (LEN = 5, 7, 9), 135° | 0/0 | 0/0 | 0/0 | 0/0 | 100/100 | 0/0 | 0/0 | 0/0 | 0/0 |
| Blurred (LEN = 11, 13, 15), 0° | 0/0 | 0/0 | 0/0 | 0/0 | 0/0 | 100/100 | 0/0 | 0/0 | 0/0 |
| Blurred (LEN = 11, 13, 15), 45° | 0/0 | 0/0 | 0/0 | 0/0 | 0/0 | 0/0 | 100/100 | 0/0 | 0/0 |
| Blurred (LEN = 11, 13, 15), 90° | 0/0 | 0/0 | 0/0 | 0/0 | 0/0 | 0/0 | 0/0 | 100/100 | 0/0 |
| Blurred (LEN = 11, 13, 15), 135° | 0/0 | 0/0 | 0/0 | 0/0 | 0/0 | 0/0 | 0/0 | 0/0 | 100/100 |
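The classification table above corresponds to routing each image to one of nine age estimators: focused, or one of four directions at each of two blur levels. Once a direction and length have been estimated, that routing can be sketched as follows. The length thresholds of 4 and 10 (midpoints between the trained lengths 3/5 and 9/11) and the snapping of directions to the nearest trained angle are assumptions; the pipeline also uses a focus score to detect images without motion blur, which this sketch folds into the length threshold.

```python
TRAINED_DIRECTIONS = (0, 45, 90, 135)


def axial_distance(a_deg, b_deg):
    """Distance between two undirected angles (a blur at theta equals one at theta + 180)."""
    d = (a_deg - b_deg) % 180.0
    return min(d, 180.0 - d)


def route(theta_deg, length, slight_from=4.0, blurred_from=10.0):
    """Pick one of the nine estimator groups from an estimated direction and length.

    The thresholds are hypothetical midpoints between trained lengths (3/5 and 9/11).
    """
    if length < slight_from:
        return "focused"
    group = "slightly_blurred" if length < blurred_from else "blurred"
    direction = min(TRAINED_DIRECTIONS, key=lambda d: axial_distance(theta_deg, d))
    return (group, direction)


print(route(12.0, 1.2))    # focused
print(route(44.0, 7.2))    # ('slightly_blurred', 45)
print(route(178.0, 13.0))  # ('blurred', 0)
```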

| MAE | Focused (LEN = 1, 3) | Slightly Blurred (LEN = 5, 7, 9), 0° | Slightly Blurred (LEN = 5, 7, 9), 45° | Slightly Blurred (LEN = 5, 7, 9), 90° | Slightly Blurred (LEN = 5, 7, 9), 135° | Blurred (LEN = 11, 13, 15), 0° | Blurred (LEN = 11, 13, 15), 45° | Blurred (LEN = 11, 13, 15), 90° | Blurred (LEN = 11, 13, 15), 135° | Average MAE |
|---|---|---|---|---|---|---|---|---|---|---|
| Testing Database 1 | 5.88 | 6.10 | 6.63 | 6.16 | 6.60 | 6.29 | 6.75 | 6.18 | 6.61 | 6.54 |
| Testing Database 2 | 6.14 | 6.18 | 6.47 | 6.25 | 6.68 | 6.36 | 6.62 | 6.31 | 6.87 | 6.41 |
| Average MAE of Entire Database | | | | | | | | | | 6.48 |

| Number of Images | Focused (Motion Length 1, 3) | Slightly Blurred (Motion Length 5, 7, 9), 0° | Slightly Blurred (Motion Length 5, 7, 9), 45° | Slightly Blurred (Motion Length 5, 7, 9), 90° | Slightly Blurred (Motion Length 5, 7, 9), 135° | Blurred (Motion Length 11, 13, 15), 0° | Blurred (Motion Length 11, 13, 15), 45° | Blurred (Motion Length 11, 13, 15), 90° | Blurred (Motion Length 11, 13, 15), 135° | Total Number of Images |
|---|---|---|---|---|---|---|---|---|---|---|
| Learning Database 1 | 1515 | 909 | 909 | 909 | 909 | 909 | 909 | 909 | 909 | 8787 |
| Testing Database 1 | 1485 | 891 | 891 | 891 | 891 | 891 | 891 | 891 | 891 | 8613 |
| Learning Database 2 | 1505 | 903 | 903 | 903 | 903 | 903 | 903 | 903 | 903 | 8729 |
| Testing Database 2 | 1495 | 897 | 897 | 897 | 897 | 897 | 897 | 897 | 897 | 8671 |

| Number of Images (Testing Database 1/Testing Database 2) | Focused Class (Images without Motion Blur) | Blurred Class (Images with Motion Blur) |
|---|---|---|
| Focused Class (Images without Motion Blur) | 297 (100.000%)/299 (100.000%) | 0 (0.000%)/0 (0.000%) |
| Blurred Class (Images with Motion Blur) | 13 (0.157%)/13 (0.156%) | 8273 (99.843%)/8323 (99.844%) |

| Average Error of Direction Estimation | Average Error of Amount of Motion Blur (Motion Length) |
|---|---|
| 0.837° | 0.332 |

| MAE | Original MORPH Database | Focused (LEN = 1, 3) | Slightly Blurred (LEN = 5, 7, 9), 0° | Slightly Blurred (LEN = 5, 7, 9), 45° | Slightly Blurred (LEN = 5, 7, 9), 90° | Slightly Blurred (LEN = 5, 7, 9), 135° | Blurred (LEN = 11, 13, 15), 0° | Blurred (LEN = 11, 13, 15), 45° | Blurred (LEN = 11, 13, 15), 90° | Blurred (LEN = 11, 13, 15), 135° | Average MAE |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Testing Database 1 | 5.99 | 6.36 | 8.18 | 9.65 | 7.82 | 10.12 | 10.18 | 11.29 | 9.78 | 11.81 | 9.25 |
| Testing Database 2 | 6.02 | 6.42 | 9.10 | 12.29 | 9.51 | 12.72 | 13.18 | 16.08 | 13.29 | 16.84 | 11.76 |
| Average MAE of Entire Database | 6.01 | | | | | | | | | | 10.51 |

| MAE | Focused (LEN = 1, 3) | Slightly Blurred (LEN = 5, 7, 9), 0° | Slightly Blurred (LEN = 5, 7, 9), 45° | Slightly Blurred (LEN = 5, 7, 9), 90° | Slightly Blurred (LEN = 5, 7, 9), 135° | Blurred (LEN = 11, 13, 15), 0° | Blurred (LEN = 11, 13, 15), 45° | Blurred (LEN = 11, 13, 15), 90° | Blurred (LEN = 11, 13, 15), 135° | Average MAE |
|---|---|---|---|---|---|---|---|---|---|---|
| Testing Database 1 | 5.90 | 6.00 | 5.85 | 5.86 | 6.12 | 6.23 | 6.33 | 6.34 | 6.17 | 6.08 |
| Testing Database 2 | 5.76 | 5.76 | 6.07 | 5.75 | 5.98 | 6.30 | 6.38 | 6.06 | 6.16 | 6.01 |
| Average MAE of Entire Database | | | | | | | | | | 6.05 |

| Testing Database 1 | Testing Database 2 | Average MAE |
|---|---|---|
| 7.818 | 8.081 | 7.950 |

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Fu, Y.; Guo, G.; Huang, T.S. Age synthesis and estimation via faces: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1955–1976.

- Ramanathan, N.; Chellappa, R.; Biswas, S. Computational methods for modeling facial aging: A survey. J. Vis. Lang. Comput. 2009, 20, 131–144.

- Luu, K.; Ricanek, K., Jr.; Bui, T.D.; Suen, C.Y. Age Estimation Using Active Appearance Models and Support Vector Machine Regression. In Proceedings of the IEEE 3rd International Conference on Biometrics: Theory, Applications, and Systems, Washington, DC, USA, 28–30 September 2009; pp. 1–5.

- Lanitis, A.; Taylor, C.J.; Cootes, T.F. Toward automatic simulation of aging effects on face images. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 442–455.

- Lanitis, A.; Draganova, C.; Christodoulou, C. Comparing different classifiers for automatic age estimation. IEEE Trans. Syst. Man Cybern. Part B 2004, 34, 621–628.

- Choi, S.E.; Lee, Y.J.; Lee, S.J.; Park, K.R.; Kim, J. A Comparative Study of Local Feature Extraction for Age Estimation. In Proceedings of the 11th International Conference on Control, Automation, Robotics and Vision, Singapore, 7–10 December 2010; pp. 1280–1284.

- Nguyen, D.T.; Cho, S.R.; Shin, K.Y.; Bang, J.W.; Park, K.R. Comparative study of human age estimation with or without pre-classification of gender and facial expression. Sci. World J. 2014, 2014, 1–15.

- Günay, A.; Nabiyev, V.V. Automatic Age Classification with LBP. In Proceedings of the 23rd International Symposium on Computer and Information Sciences, Istanbul, Turkey, 27–29 October 2008; pp. 1–4.

- Nguyen, D.T.; Cho, S.R.; Park, K.R. Human age estimation based on multi-level local binary pattern and regression method. Lect. Notes Electr. Eng. 2014, 309, 433–438.

- Kwon, Y.H.; Lobo, N.D.B. Age Classification from Facial Images. In Proceedings of the Computer Vision and Pattern Recognition, Seattle, WA, USA, 21–23 June 1994; pp. 762–767.

- Txia, J.D.; Huang, C.L. Age Estimation Using AAM and Local Facial Features. In Proceedings of the International Conference on Intelligent Information Hiding and Multimedia Signal Processing, Kyoto, Japan, 12–14 September 2009; pp. 885–888.

- Gao, F.; Ai, H. Face age classification on consumer images with gabor feature and fuzzy LDA method. Lect. Notes Comput. Sci. 2009, 5558, 132–141.

- Fu, Y.; Huang, T.S. Human age estimation with regression on discriminative aging manifold. IEEE Trans. Multimedia 2008, 10, 578–584.

- Choi, S.E.; Lee, Y.J.; Lee, S.J.; Park, K.R.; Kim, J. Age estimation using a hierarchical classifier based on global and local facial features. Pattern Recognit. 2011, 44, 1262–1281.

- Guo, G.; Mu, G.; Fu, Y.; Dyer, C.; Huang, T. A Study on Automatic Age Estimation Using a Large Database. In Proceedings of the IEEE International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 1986–1991.

- Levi, G.; Hassner, T. Age and Gender Classification Using Convolutional Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 34–42.

- Yan, C.; Lang, C.; Wang, T.; Du, X.; Zhang, C. Age estimation based on convolutional neural network. Lect. Notes Comput. Sci. 2014, 8879, 211–220.

- Yi, D.; Lei, Z.; Li, S.Z. Age estimation by multi-scale convolutional network. Lect. Notes Comput. Sci. 2015, 9005, 144–158.

- Wang, X.; Guo, R.; Kambhamettu, C. Deeply-Learned Feature for Age Estimation. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 5–9 January 2015; pp. 534–541.

- Viola, P.; Jones, M.J. Robust real-time face detection. Int. J. Comput. Vis. 2004, 57, 137–154.

- Wang, H.L.; Wang, J.G.; Yau, W.Y.; Chua, X.L.; Tan, Y.P. Effects of Facial Alignment for Age Estimation. In Proceedings of the 11th International Conference on Control, Automation, Robotics and Vision, Singapore, 7–10 December 2010; pp. 644–647.

- Tiwari, S.; Shukla, V.P.; Singh, A.K.; Biradar, S.R. Review of motion blur estimation techniques. J. Image Graph. 2013, 1, 176–184.

- Deshpande, A.M.; Patnaik, S. Radon Transform Based Uniform and Non-uniform Motion Blur Parameter Estimation. In Proceedings of the International Conference on Communication, Information & Computing Technology, Mumbai, India, 19–20 October 2012; pp. 1–6.

- Sun, H.; Desvignes, M.; Yan, Y.; Liu, W. Motion Blur Parameters Identification from Radon Transform Image Gradients. In Proceedings of the 35th Annual Conference of IEEE Industrial Electronics, Porto, Portugal, 3–5 November 2009; pp. 2098–2103.

- Shah, M.J.; Dalal, U.D. Hough Transform and Cepstrum Based Estimation of Spatial-invariant and Variant Motion Blur Parameters. In Proceedings of the International Conference on Advances in Electronics, Computers and Communication, Bangalore, India, 10–11 October 2014; pp. 1–6.

- Shen, Y.; Dang, J.; Lei, T.; Lou, W. Motion Blur Parameters Estimation Based on Frequency and Spatial Domain Analysis. In Proceedings of the 2nd International Conference on Consumer Electronics, Communications and Networks, Yichang, China, 21–23 April 2012; pp. 387–390.

- Fang, X.; Wu, H.; Wu, Z.; Luo, B. An improved method for robust blur estimation. Inf. Technol. J. 2011, 10, 1709–1716.

- Ahonen, T.; Hadid, A.; Pietikäinen, M. Face description with local binary patterns: application to face recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 2037–2041.

- Nguyen, D.T.; Park, Y.H.; Lee, H.C.; Shin, K.Y.; Kang, B.J.; Park, K.R. Combining touched fingerprint and finger-vein of a finger, and its usability evaluation. Adv. Sci. Lett. 2012, 5, 85–95.

- Shin, K.Y.; Park, Y.H.; Nguyen, D.T.; Park, K.R. Finger-vein image enhancement using a fuzzy-based fusion method with gabor and retinex filtering. Sensors 2014, 14, 3095–3129.

- Lee, W.O.; Kim, Y.G.; Hong, H.G.; Park, K.R. Face recognition system for set-top box-based intelligent TV. Sensors 2014, 14, 21726–21749.

- Shan, C.; Gong, S.; McOwan, P.W. Facial expression recognition based on local binary patterns: A comprehensive study. Image Vis. Comput. 2009, 27, 803–816.

- Manjunath, B.S.; Ma, W.Y. Texture features for browsing and retrieval of image data. IEEE Trans. Pattern Anal. Mach. Intell. 1996, 18, 837–842.

- Murphy, K.P. Machine Learning: A Probabilistic Perspective; The MIT Press: Cambridge, MA, USA, 2012.

- Song, F.; Guo, Z.; Mei, D. Feature Selection Using Principal Component Analysis. In Proceedings of the International Conference on System Science, Engineering Design and Manufacturing Information, Yichang, China, 12–14 November 2010; pp. 27–30.

- Karim, T.F.; Rahman, M.L.; Lipu, M.S.H.; Sultana, F. Face Recognition Using PCA-Based Method. In Proceedings of the IEEE International Conference on Advanced Management Science, Chengdu, China, 9–11 July 2010; pp. 158–162.

- LibSVM—A Library for Support Vector Machines. Available online: http://www.csie.ntu.edu.tw/~cjlin/libsvm/ (accessed on 31 August 2015).

- Minear, M.; Park, D.C. A lifespan database of adult facial stimuli. Behav. Res. Methods 2004, 36, 630–633.

- PAL database. Available online: http://agingmind.utdallas.edu/facedb (accessed on 31 August 2015).

- The MORPH Database. Available online: https://ebill.uncw.edu/C20231_ustores/web/store_main.jsp?STOREID=4 (accessed on 31 August 2015).

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).


Nguyen, D.T.; Cho, S.R.; Pham, T.D.; Park, K.R. Human Age Estimation Method Robust to Camera Sensor and/or Face Movement. Sensors 2015, 15, 21898-21930. https://doi.org/10.3390/s150921898
