Robust Indoor Human Activity Recognition Using Wireless Signals

Abstract

1. Introduction

- (1) A framework for recognizing indoor human actions is proposed, based on recognizing combinations of primitive actions. First, a set of indoor human actions is selected as primitive actions to form the training set. Then, during online recognition, a coarse detection step distinguishes in-place activities from walking and other continuous movement.

- (2) A new signal preprocessing and segmentation method is presented that exploits the properties of the channel state information (CSI) of Wi-Fi signals. An online filtering method is designed so that the CSI value curves of actions are smooth yet still contain enough pattern information, and the pattern of each primitive action can be accurately segmented from the outliers of the CSI.

- (3) Using kernel SVM-based multi-class classification together with a feature selection method, many activities composed of primitive actions can be recognized efficiently, and the recognition is insensitive to differences in location, orientation, speed, and anthropometry.
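Item (1) does not specify how the coarse detection separates in-place activities from walking. A minimal sketch, assuming that walking keeps the CSI amplitude fluctuating across most of a recording while an in-place action disturbs it only briefly, is a windowed variance test. The function name `coarse_detect`, the window length, and both thresholds are hypothetical and would need calibration in a real deployment.

```python
import statistics


def coarse_detect(amplitudes, window=50, var_threshold=4.0, busy_ratio=0.6):
    """Coarsely label a CSI amplitude stream as 'static', 'in-place', or 'walking'.

    Hypothetical rule: split the stream into non-overlapping windows (a trailing
    partial window is ignored) and call a window "busy" if its variance exceeds
    var_threshold. No busy window means a static scene; at least busy_ratio busy
    windows means sustained motion (walking); anything in between is treated as
    a short burst, i.e. an in-place action.
    """
    windows = [amplitudes[i:i + window]
               for i in range(0, len(amplitudes) - window + 1, window)]
    if not windows:
        raise ValueError("stream is shorter than one window")
    busy = sum(1 for w in windows if statistics.pvariance(w) > var_threshold)
    ratio = busy / len(windows)
    if ratio == 0:
        return "static"
    return "walking" if ratio >= busy_ratio else "in-place"
```

For example, a stream that is flat except for one disturbed 50-sample window is labeled `in-place`, while a stream that fluctuates throughout is labeled `walking`.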

2. Background

2.1. Channel State Information (CSI)
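CSI describes the channel as one complex frequency response per OFDM subcarrier, H(f_k) = |H(f_k)| e^{j∠H(f_k)}, where |H(f_k)| is the amplitude and ∠H(f_k) the phase. The sketch below assumes a hypothetical in-memory layout (a list of packets, each a list of complex values with one entry per subcarrier; the Intel 5300 CSI tool, for instance, reports 30 subcarrier groups per antenna pair) and extracts the amplitude and phase views a recognition pipeline would typically work from. The function names are illustrative.

```python
import cmath


def csi_amplitudes(packets):
    """Per-packet, per-subcarrier amplitudes |H(f_k)|.

    `packets` is a hypothetical layout: a list of packets, each a list of
    complex CSI values with one entry per subcarrier.
    """
    return [[abs(h) for h in packet] for packet in packets]


def csi_phases(packets):
    """Per-packet, per-subcarrier phases in radians, in [-pi, pi]."""
    return [[cmath.phase(h) for h in packet] for packet in packets]


def subcarrier_series(packets, k):
    """Amplitude time series of subcarrier k across consecutive packets."""
    return [abs(packet[k]) for packet in packets]
```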

2.2. The Free Space Propagation Model
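For reference, the textbook free-space (Friis) form of this model gives the received power P_r at transmitter-receiver distance d in terms of the transmitted power P_t, the antenna gains G_t and G_r, the wavelength λ, and a system loss factor L ≥ 1 (L = 1 when there is no hardware loss). The notation is the standard one and may differ from the paper's own.

```latex
P_r(d) = \frac{P_t \, G_t \, G_r \, \lambda^2}{(4\pi)^2 \, d^2 \, L}
```

Since P_r is proportional to 1/d², doubling the distance reduces the received power by a factor of 4, that is, by 10 log₁₀ 4 ≈ 6.02 dB.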

3. Methodology

3.1. Preparation

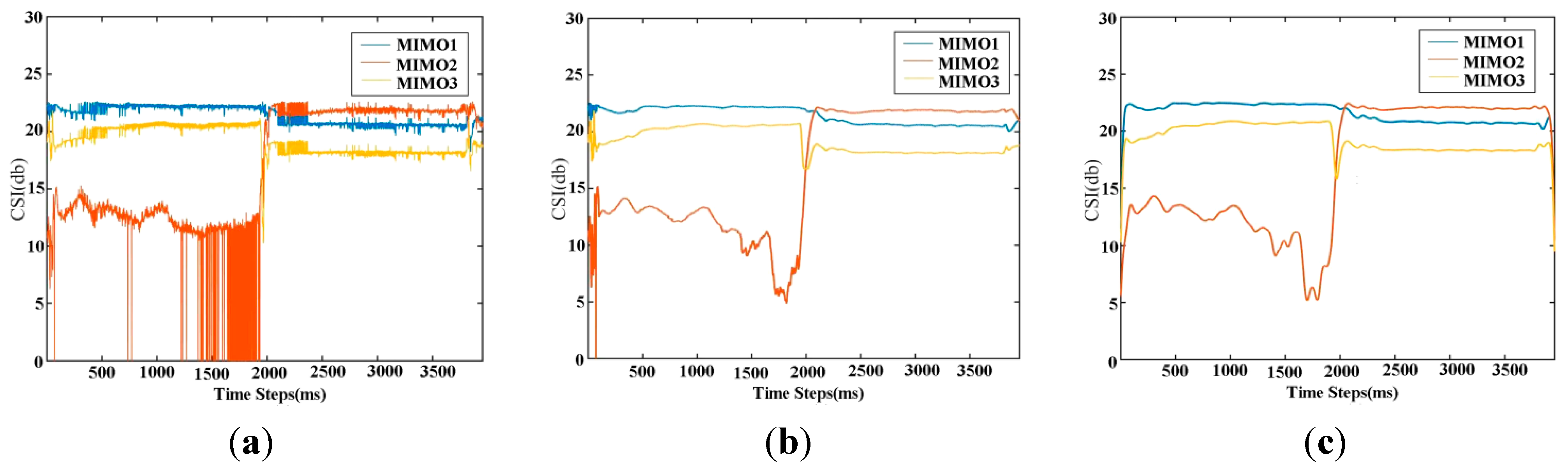

3.2. Filtering

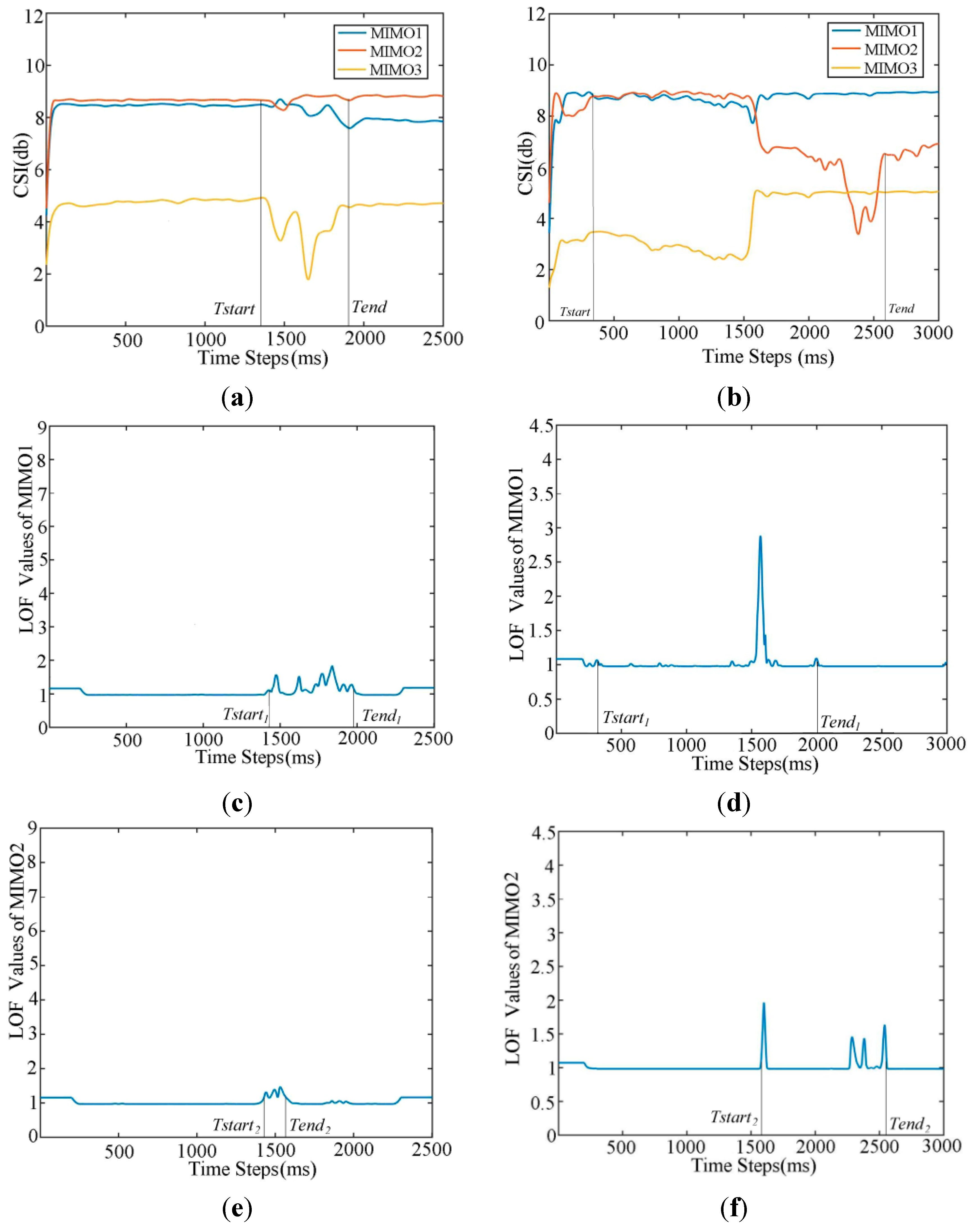

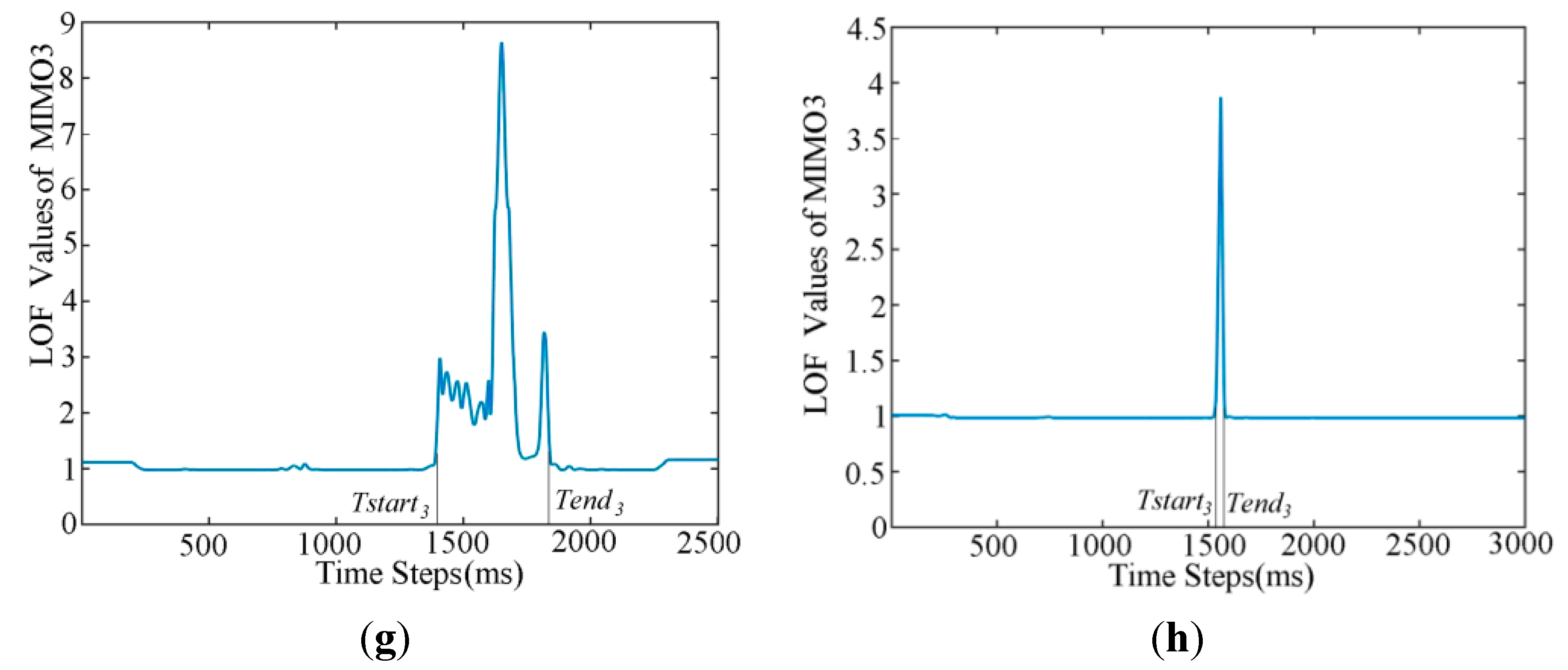
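Item (2) of the introduction describes an online filter that makes the CSI value curves smooth while keeping enough pattern information. The paper's filter is not reproduced in this section; as a stand-in, the sketch below uses a causal exponential moving average (EMA), which consumes one sample at a time and therefore works online. The class name `OnlineEMAFilter`, the helper `smooth`, and the default `alpha` are hypothetical.

```python
class OnlineEMAFilter:
    """Causal exponential moving average: y_t = alpha * x_t + (1 - alpha) * y_{t-1}.

    A stand-in smoother, not the filter from the paper.
    """

    def __init__(self, alpha=0.2):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.state = None  # no output until the first sample arrives

    def update(self, x):
        """Feed one new CSI sample and return the current smoothed value."""
        if self.state is None:
            self.state = float(x)  # initialise with the first sample
        else:
            self.state = self.alpha * x + (1.0 - self.alpha) * self.state
        return self.state


def smooth(samples, alpha=0.2):
    """Run the online filter over a finished list of samples."""
    f = OnlineEMAFilter(alpha)
    return [f.update(x) for x in samples]
```

The single parameter `alpha` exposes the trade-off that item (2) mentions: a small `alpha` yields a smoother curve but lags and flattens fast transitions, while `alpha = 1` passes the raw samples through unchanged.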

3.3. Pattern Segmentation

- (1) Offline Pattern Segmentation

- (2) Online Segmentation
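Item (2) of the introduction also states that each primitive action's pattern is segmented from the outliers of the CSI. The exact outlier test is not given here; a minimal sketch, assuming a baseline recorded while nobody moves, flags samples whose robust z-score (distance from the baseline median divided by 1.4826 × the median absolute deviation) exceeds `k`, then merges nearby flagged runs into segments. The function names and the thresholds `k`, `min_len`, and `max_gap` are hypothetical.

```python
import statistics


def outlier_mask(samples, baseline, k=3.0):
    """Flag samples whose robust z-score against a static baseline exceeds k."""
    med = statistics.median(baseline)
    mad = statistics.median([abs(b - med) for b in baseline])
    # 1.4826 * MAD estimates the standard deviation for Gaussian noise;
    # a tiny floor avoids division by zero for a perfectly flat baseline.
    scale = 1.4826 * mad if mad > 0 else 1e-12
    return [abs(x - med) / scale > k for x in samples]


def segment(mask, min_len=5, max_gap=2):
    """Group flagged samples into (start, end) index ranges, end exclusive.

    Flagged runs separated by at most max_gap unflagged samples are merged;
    segments shorter than min_len are discarded as noise.
    """
    segments = []
    start = last = None
    for i, flagged in enumerate(mask):
        if not flagged:
            continue
        if start is None:
            start = i
        elif i - last - 1 > max_gap:
            segments.append((start, last + 1))
            start = i
        last = i
    if start is not None:
        segments.append((start, last + 1))
    return [(s, e) for s, e in segments if e - s >= min_len]
```

Merging across short gaps keeps one action from being split when its amplitude briefly dips back under the threshold, and `min_len` discards isolated spikes.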

3.4. Feature Extraction
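The features used in the paper are not listed in this section. As a hypothetical illustration only, the sketch computes a few common statistical descriptors of one segmented CSI amplitude curve; the feature set, the name `extract_features`, and the `sample_rate_hz` default are assumptions, not the paper's choices.

```python
import statistics


def extract_features(segment, sample_rate_hz=100.0):
    """Hypothetical statistical features of one segmented CSI amplitude curve."""
    if len(segment) < 2:
        raise ValueError("need at least two samples")
    # First differences: how quickly the amplitude changes between samples.
    abs_diffs = [abs(b - a) for a, b in zip(segment, segment[1:])]
    return {
        "mean": statistics.mean(segment),
        "std": statistics.pstdev(segment),
        "min": min(segment),
        "max": max(segment),
        "range": max(segment) - min(segment),
        "mean_abs_diff": statistics.mean(abs_diffs),
        "duration_s": len(segment) / sample_rate_hz,
    }
```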

3.5. Classification Method

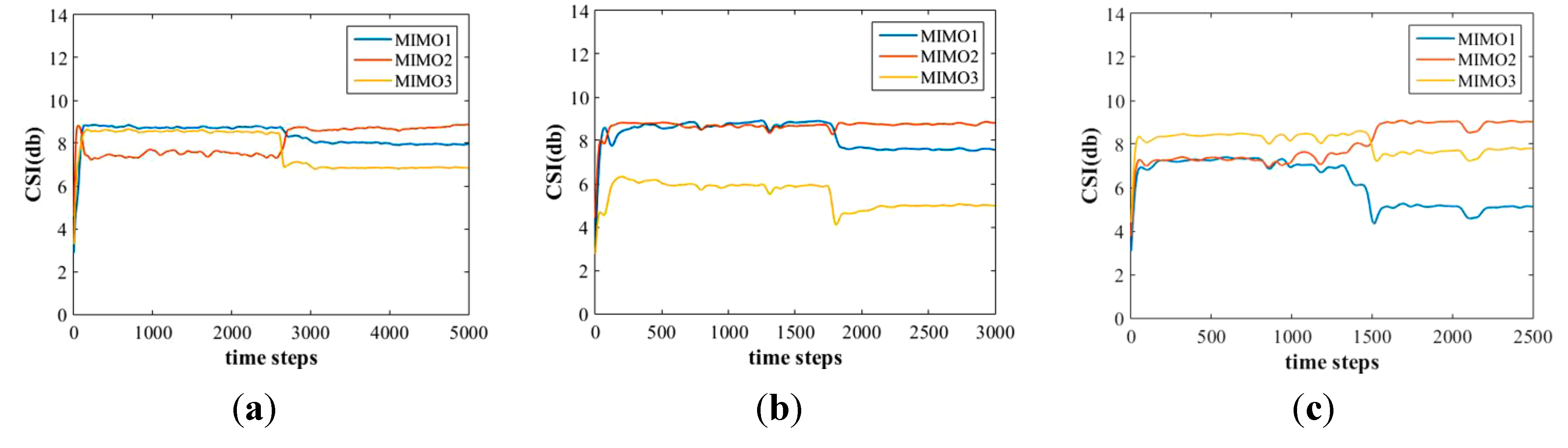
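Item (3) of the introduction names kernel-SVM-based multi-class classification. A minimal sketch of the prediction side, assuming a Gaussian (RBF) kernel and a one-vs-all scheme, scores a feature vector with each class's binary SVM and returns the highest-scoring class. Training (solving for the dual coefficients) is omitted: the per-class support vectors, dual coefficients, and bias are assumed to come from a separately trained binary SVM, and the function names and default `gamma` are hypothetical.

```python
import math


def rbf_kernel(x, z, gamma=0.5):
    """Gaussian (RBF) kernel K(x, z) = exp(-gamma * ||x - z||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))


def decision_value(x, support_vectors, dual_coefs, bias, gamma=0.5):
    """Binary kernel-SVM score f(x) = sum_i (alpha_i * y_i) * K(s_i, x) + b.

    `dual_coefs` holds the products alpha_i * y_i, one per support vector.
    """
    return sum(c * rbf_kernel(s, x, gamma)
               for s, c in zip(support_vectors, dual_coefs)) + bias


def predict_one_vs_all(x, models, gamma=0.5):
    """Pick the class whose "this class vs. the rest" SVM scores x highest.

    `models` maps each class label to (support_vectors, dual_coefs, bias).
    """
    return max(models,
               key=lambda label: decision_value(x, *models[label], gamma=gamma))
```

For instance, with one toy support vector per class at [0, 0] for AC5 and [3, 3] for AC6 (hand-picked values, not trained ones), an input near the origin is assigned AC5.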

4. Recognition and Evaluation

4.1. Training

| No. | Description | Action Key Points |
|---|---|---|
| AC1 | Squat down to pick up something. | Neither leg bends. |
| AC2 | Stand up from the squatting state (AC1). | |
| AC3 | Squat down to pick up something. | Both legs bend. |
| AC4 | Stand up from the squatting state (AC3). | |
| AC5 | Sit down on a chair. | |
| AC6 | Stand up from a chair/bed. | |
| AC7 | Lie down on the couch/bed. | From a standing state. |
| AC8 | Sit up from a lying state on the floor. | |
| AC9 | Stand up from a lying state on the couch/bed. | |
| AC10 | Fall to the floor from standing. | |
| AC11 | Fall to the floor from sitting on a chair/bed. | |
| AC12 | Stand up from the floor from a sitting state. | |
| AC13 | Stand up from the floor from a lying state. | |
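The introduction describes recognizing activities as combinations of primitive actions. One hypothetical way to do that on top of a stream of recognized primitive labels is to match consecutive label pairs against a rule table. The rules below are illustrative only: the pairings follow from the table's own descriptions (for example, AC2 is defined as standing up from the AC1 state, and AC10 and AC11 are both falls), but the rule table, event names, and `compose` function are not the paper's composition method.

```python
# Hypothetical composition rules; only the pairings are suggested by the table.
COMPOSITE_RULES = {
    ("AC1", "AC2"): "pick up an object (legs straight)",
    ("AC3", "AC4"): "pick up an object (legs bent)",
    ("AC5", "AC6"): "sit on a chair, then stand up",
    ("AC7", "AC9"): "lie on the couch/bed, then get up",
}
FALL_ACTIONS = {"AC10", "AC11"}  # a single fall primitive is reported on its own


def compose(primitives):
    """Scan a sequence of primitive labels and return detected composite events."""
    events = []
    i = 0
    while i < len(primitives):
        if primitives[i] in FALL_ACTIONS:
            events.append("fall")
            i += 1
            continue
        pair = tuple(primitives[i:i + 2])
        if pair in COMPOSITE_RULES:
            events.append(COMPOSITE_RULES[pair])
            i += 2  # both primitives are consumed by the matched rule
        else:
            i += 1  # unmatched primitive: skip it
    return events
```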

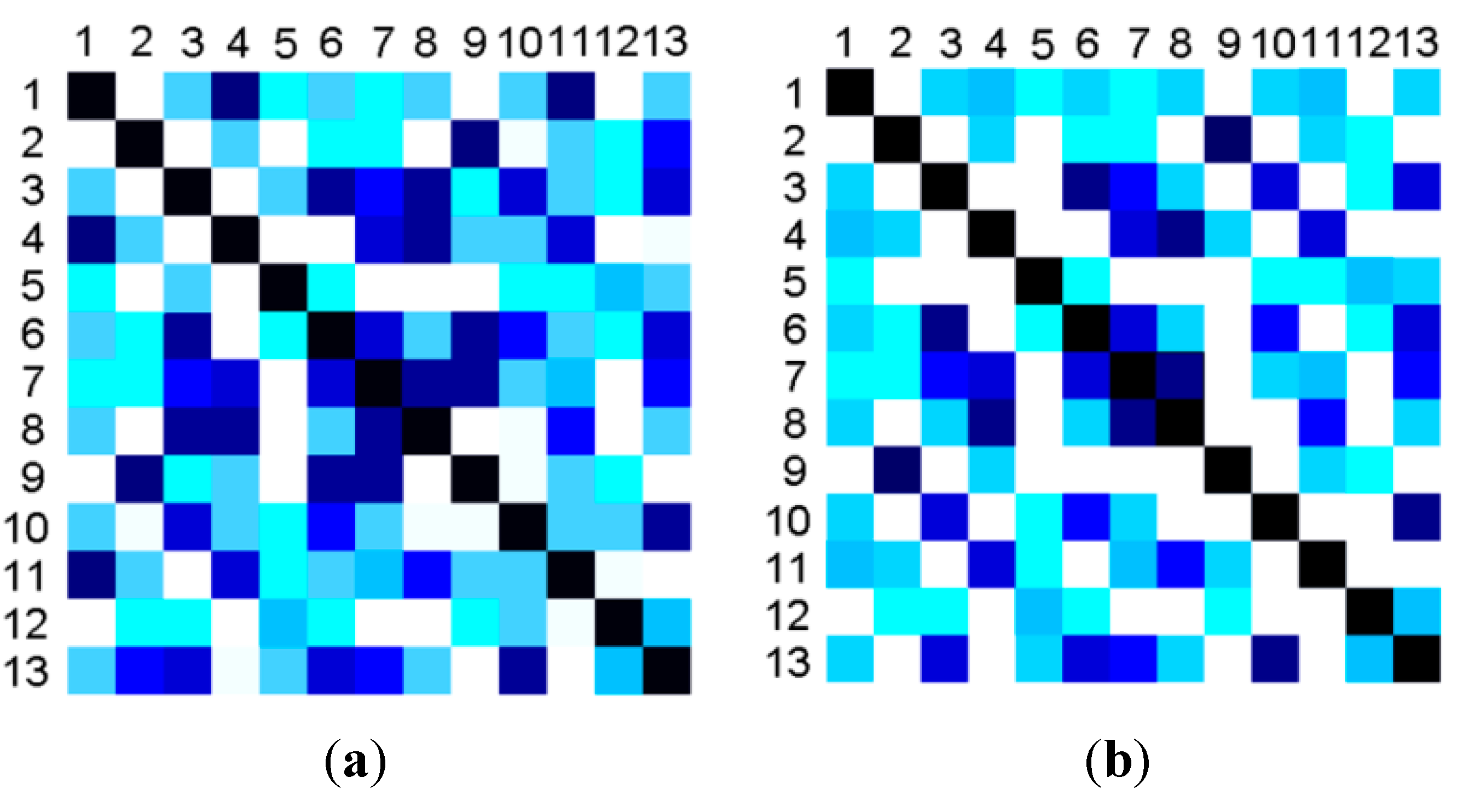

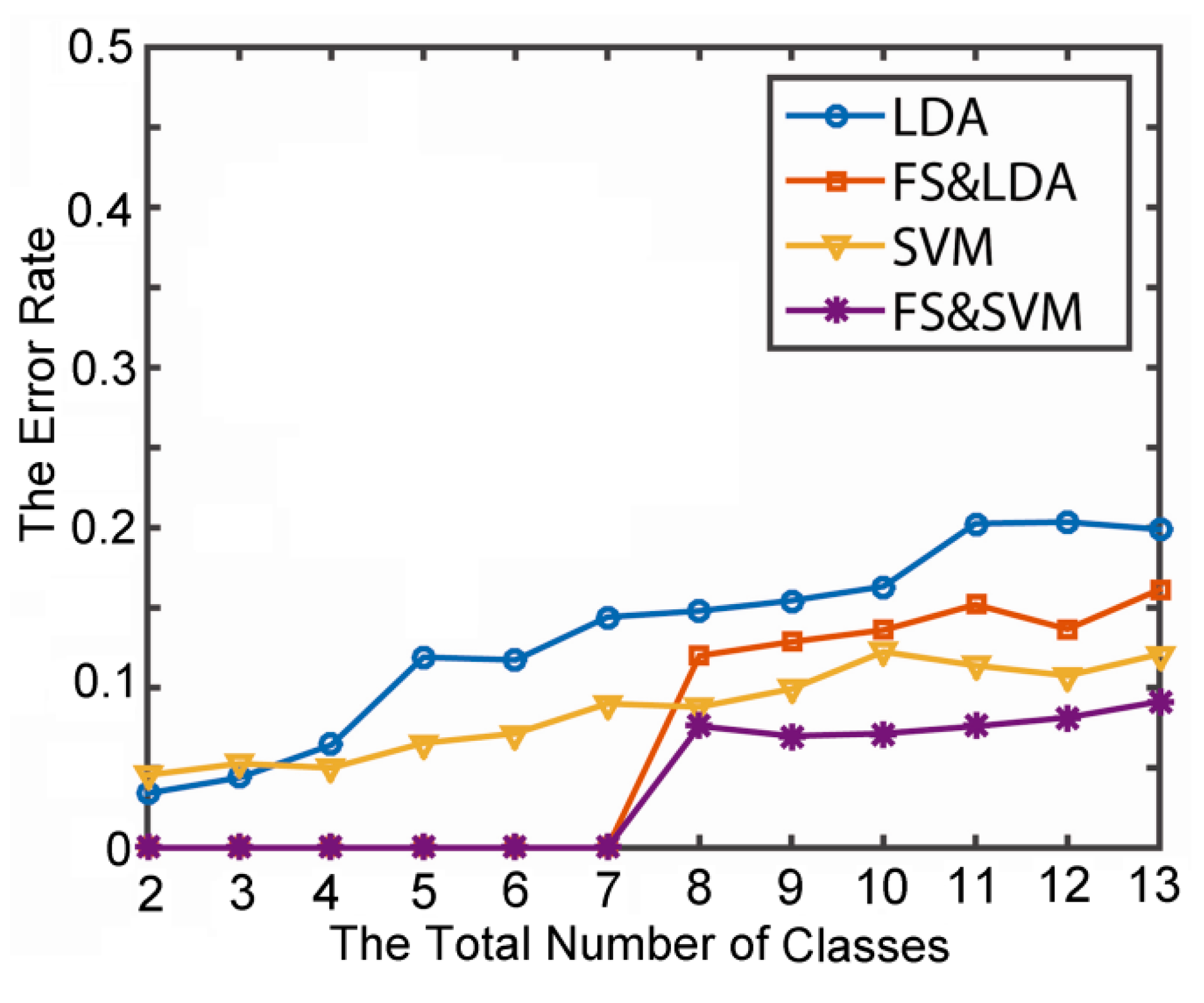

4.2. Feature Selection

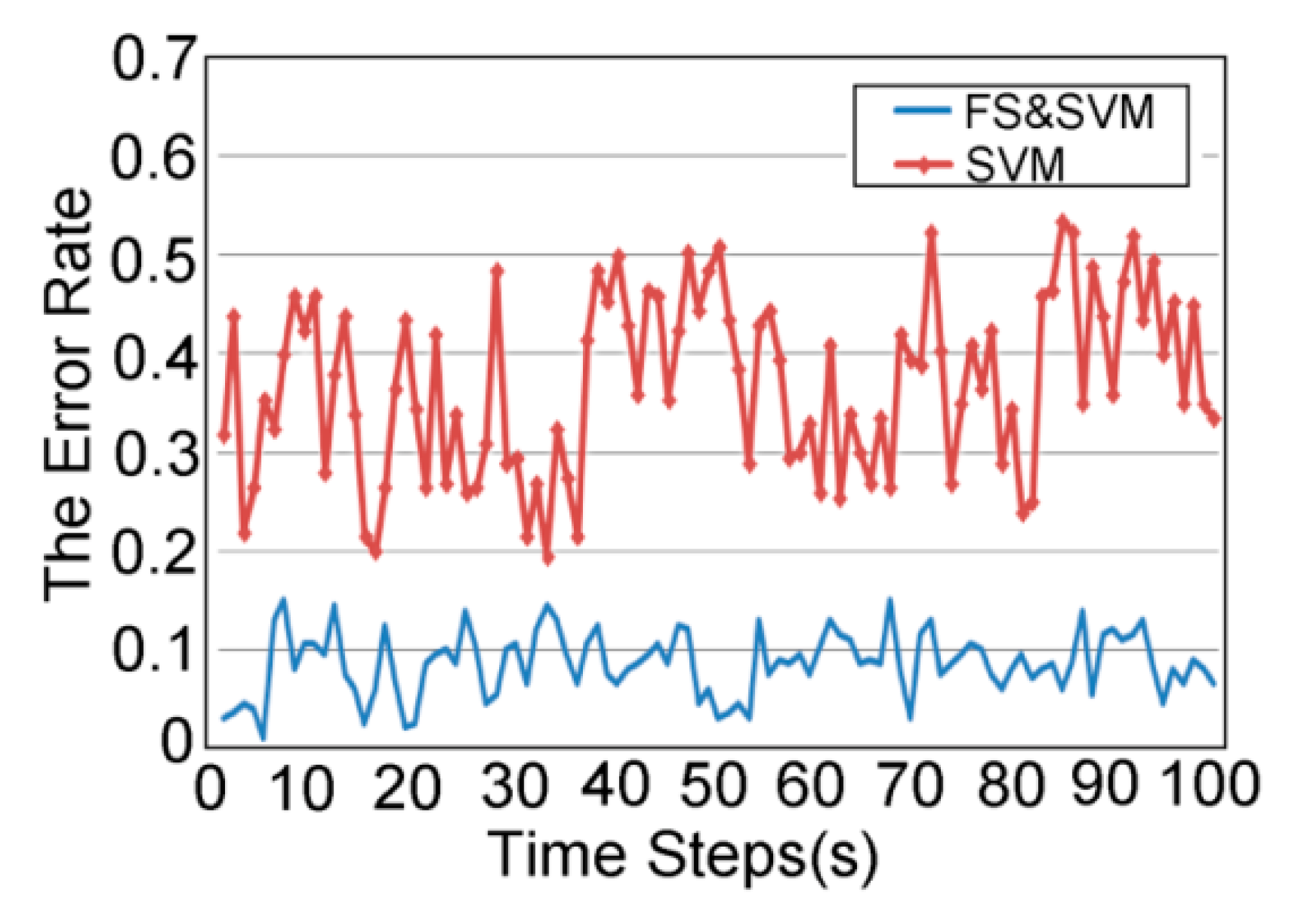
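Item (3) of the introduction pairs the classifier with a feature selection method that this section does not detail. As one plausible stand-in (not necessarily the paper's method), the sketch ranks features by Fisher score, the ratio of between-class scatter to within-class scatter, and keeps the top `k`. The function names are hypothetical.

```python
import statistics
from collections import defaultdict


def fisher_scores(X, y):
    """Fisher score per feature column: between-class / within-class scatter."""
    by_class = defaultdict(list)
    for row, label in zip(X, y):
        by_class[label].append(row)
    scores = []
    for j in range(len(X[0])):
        overall = statistics.mean([row[j] for row in X])
        between = within = 0.0
        for rows in by_class.values():
            col = [r[j] for r in rows]
            between += len(col) * (statistics.mean(col) - overall) ** 2
            within += len(col) * statistics.pvariance(col)
        if within > 0:
            scores.append(between / within)
        else:
            # Zero within-class spread: perfectly separating if classes differ.
            scores.append(float("inf") if between > 0 else 0.0)
    return scores


def select_top_k(X, y, k):
    """Indices of the k features with the highest Fisher score."""
    scores = fisher_scores(X, y)
    ranked = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
    return ranked[:k]
```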

4.3. Classification and Recognition
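Recognition results of this kind are usually summarized with a confusion matrix. The sketch below builds one from true and predicted labels and derives per-class recall and overall accuracy; the helper names are hypothetical and no results from the paper are implied.

```python
def confusion_matrix(true_labels, predicted_labels, classes):
    """Rows are true classes, columns are predicted classes, in `classes` order."""
    index = {c: i for i, c in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for t, p in zip(true_labels, predicted_labels):
        matrix[index[t]][index[p]] += 1
    return matrix


def per_class_recall(matrix, classes):
    """Fraction of each true class that was predicted correctly."""
    result = {}
    for i, c in enumerate(classes):
        total = sum(matrix[i])
        result[c] = matrix[i][i] / total if total else float("nan")
    return result


def overall_accuracy(matrix):
    """Correct predictions (the diagonal) over all predictions."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total if total else float("nan")
```

For made-up labels where one of two AC10 samples is predicted as AC11 and both AC11 samples are correct, the matrix is [[1, 1], [0, 2]], AC10 recall is 0.5, and overall accuracy is 0.75.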

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).


Wang, Y.; Jiang, X.; Cao, R.; Wang, X. Robust Indoor Human Activity Recognition Using Wireless Signals. Sensors 2015, 15, 17195-17208. https://doi.org/10.3390/s150717195
