Infrared and Visual Image Fusion through Fuzzy Measure and Alternating Operators

Abstract

1. Introduction

2. Mathematical Morphology

2.1. Basic Morphological Operators

2.2. Toggle Operator

3. Alternating Operator by Opening and Closing Based Toggle Operator

3.1. Basic Operator

3.2. Multi-Scale Extension

3.3. Alternating Operators

4. Infrared and Visual Image Fusion

4.1. Multi-Scale Fusion Feature Extraction

4.2. Fuzzy Measure Based Final Fusion Feature Calculation

4.3. Infrared and Visual Image Fusion

4.4. Parameter Analysis

5. Experimental Results

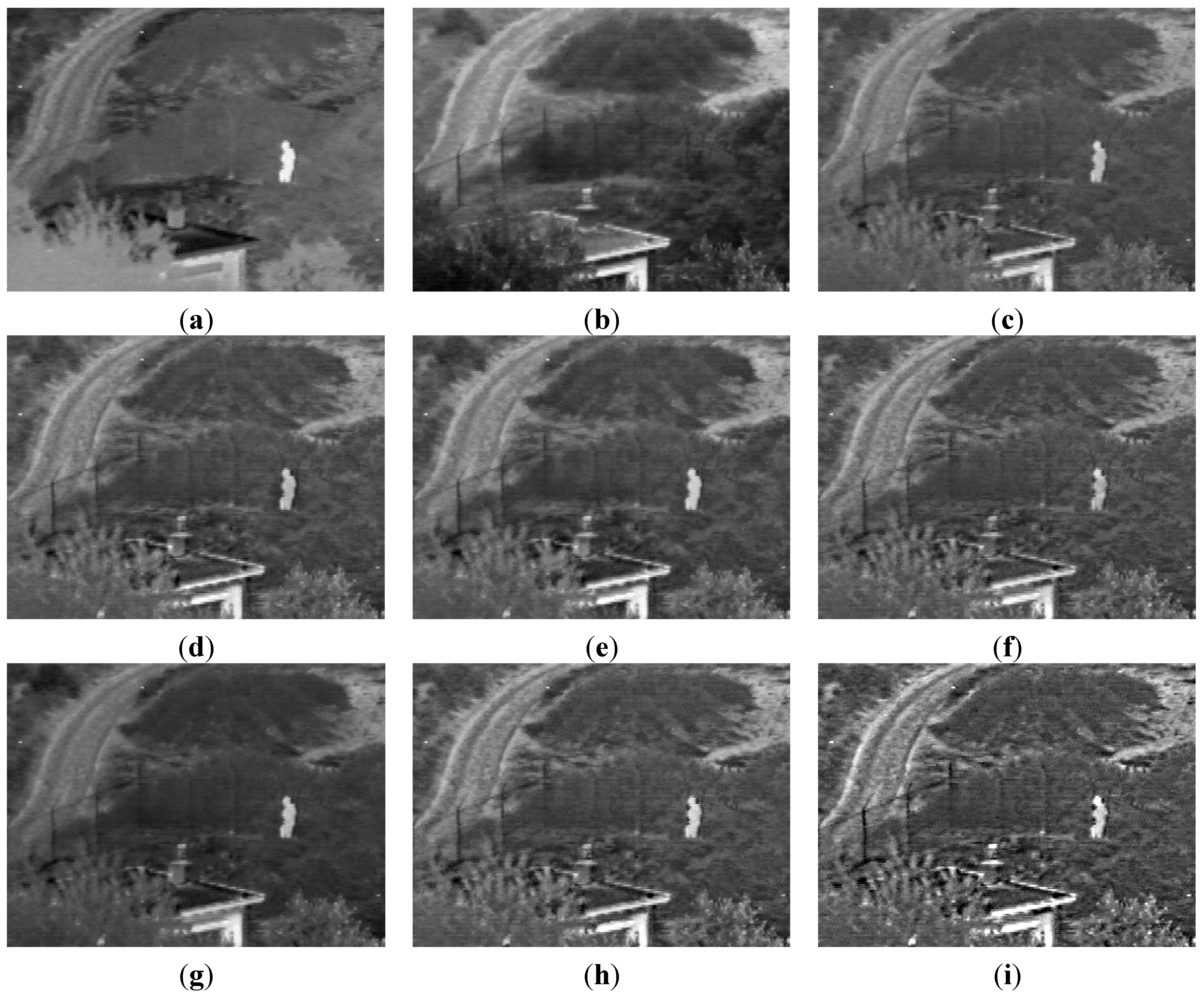

5.1. Visual Comparisons

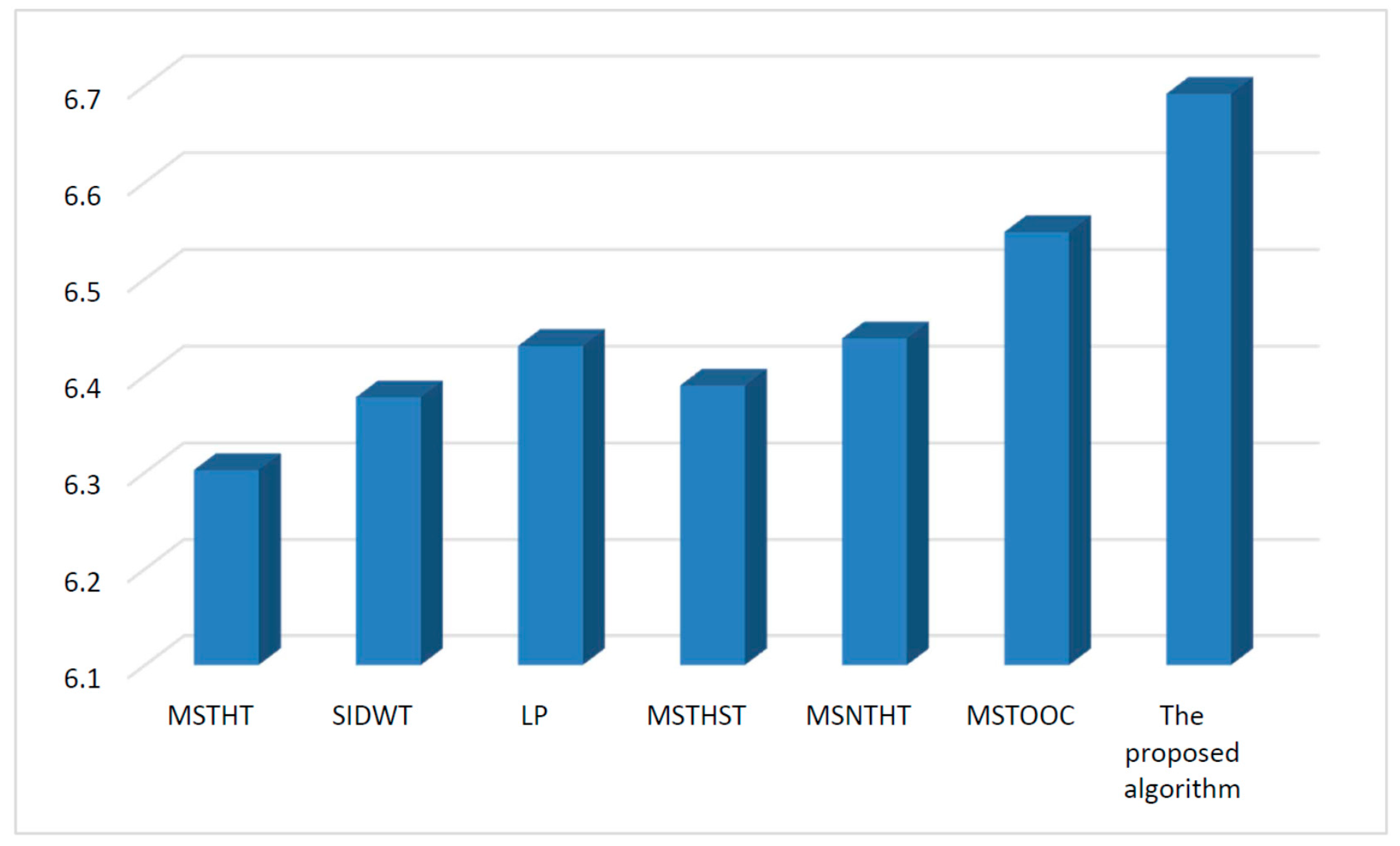

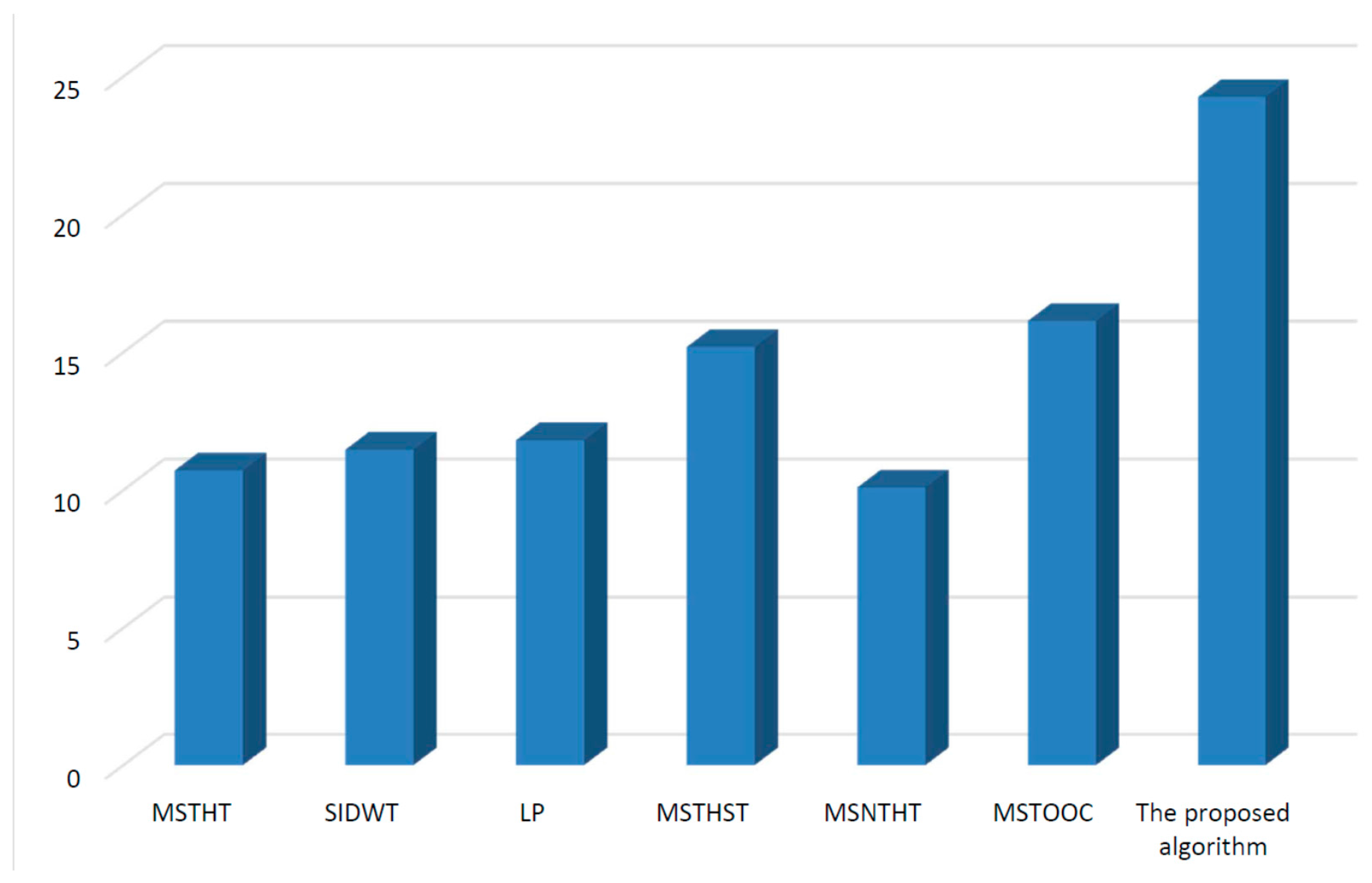

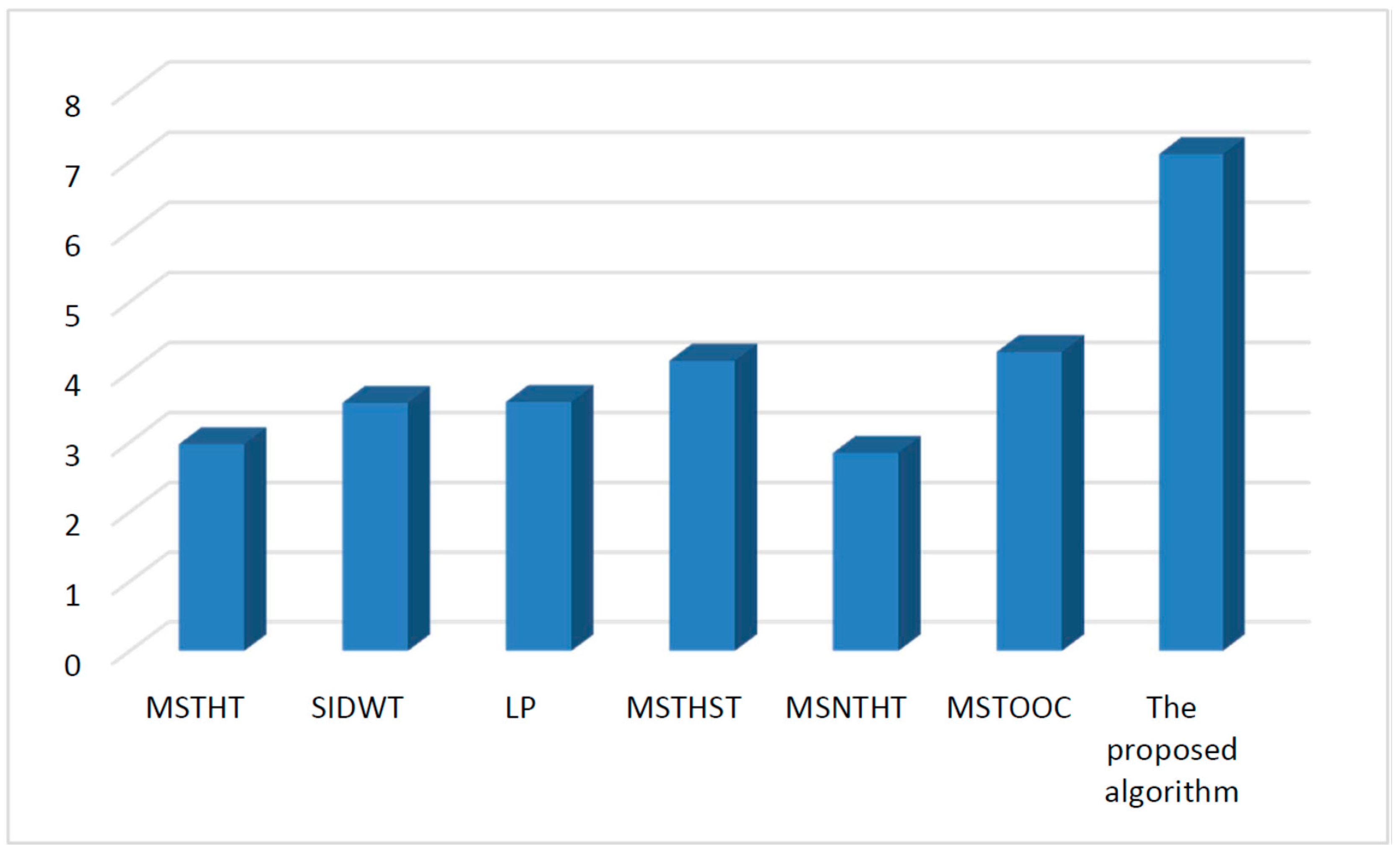

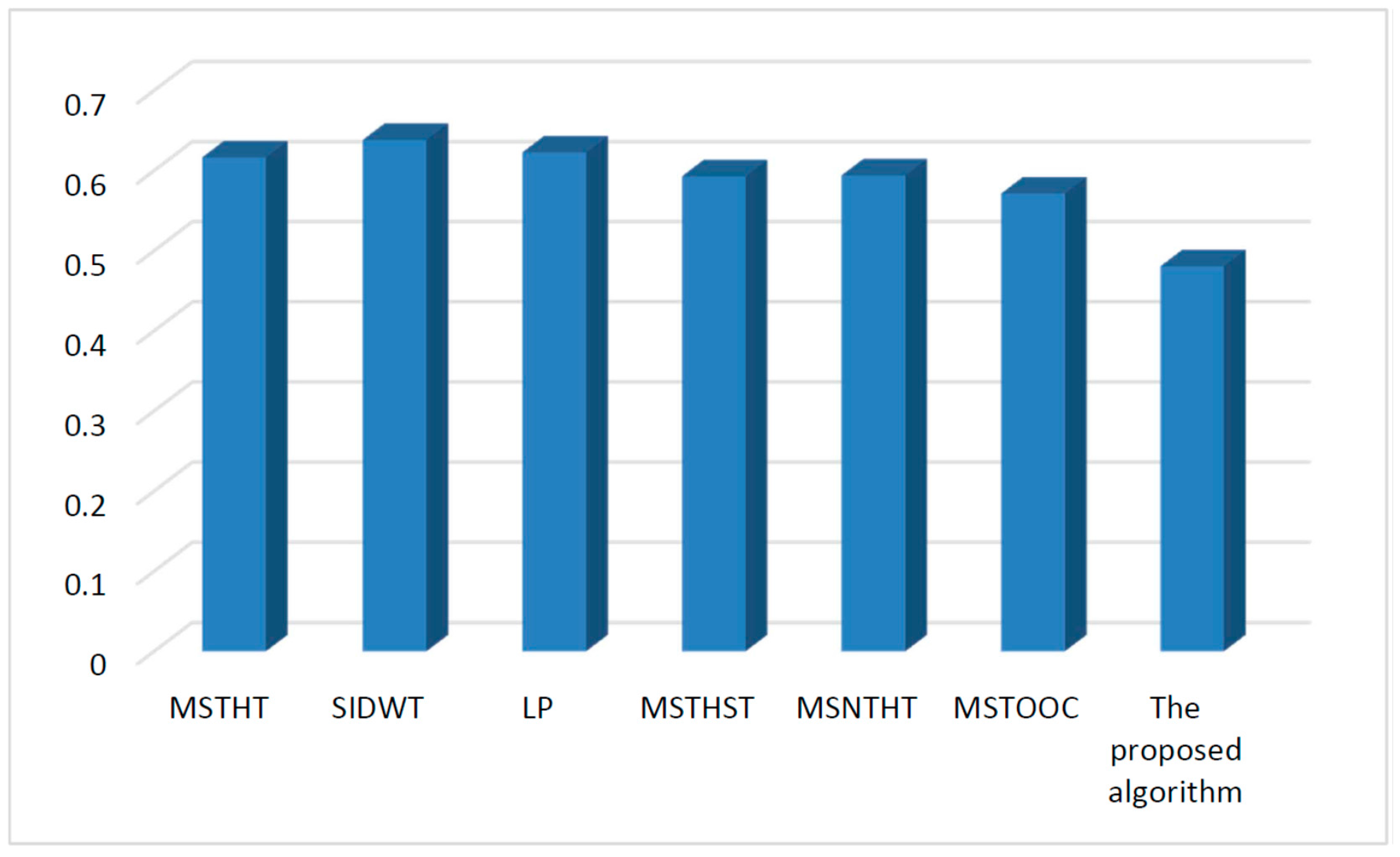

5.2. Quantitative Comparisons

| MSTHT | SIDWT | LP | MSTHST | MSNTH | MSTOOC | Proposed Algorithm |
|---|---|---|---|---|---|---|
| 0.923 | 0.733 | 0.082 | 3.874 | 25.450 | 8.814 | 1.828 |

6. Conclusions

Acknowledgments

Conflicts of Interest


© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Bai, X. Infrared and Visual Image Fusion through Fuzzy Measure and Alternating Operators. Sensors 2015, 15, 17149-17167. https://doi.org/10.3390/s150717149
