LiDAR Scan Matching Aided Inertial Navigation System in GNSS-Denied Environments

Abstract

1. Introduction

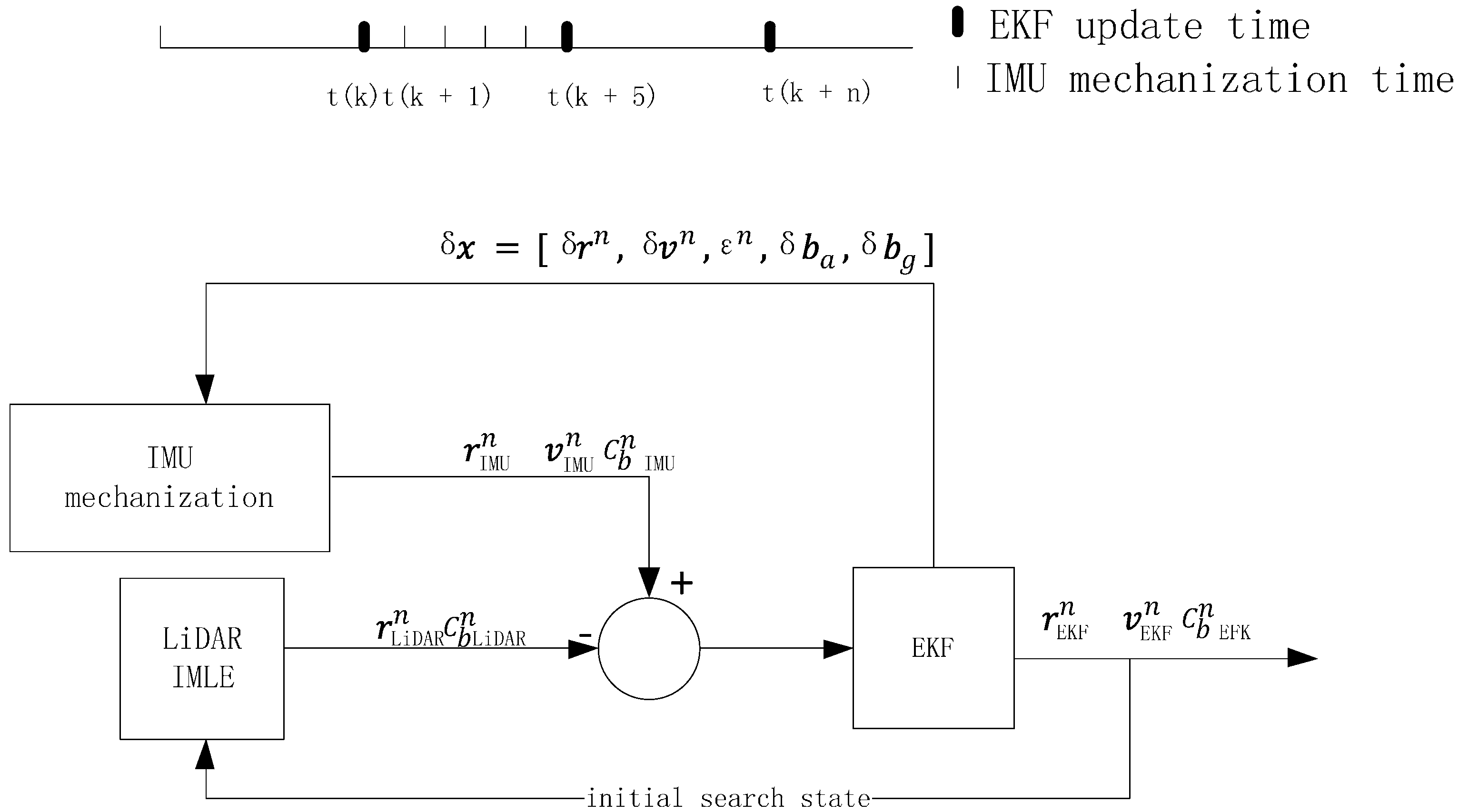

2. INS and LiDAR Fusion Modelling

2.1. INS Modelling

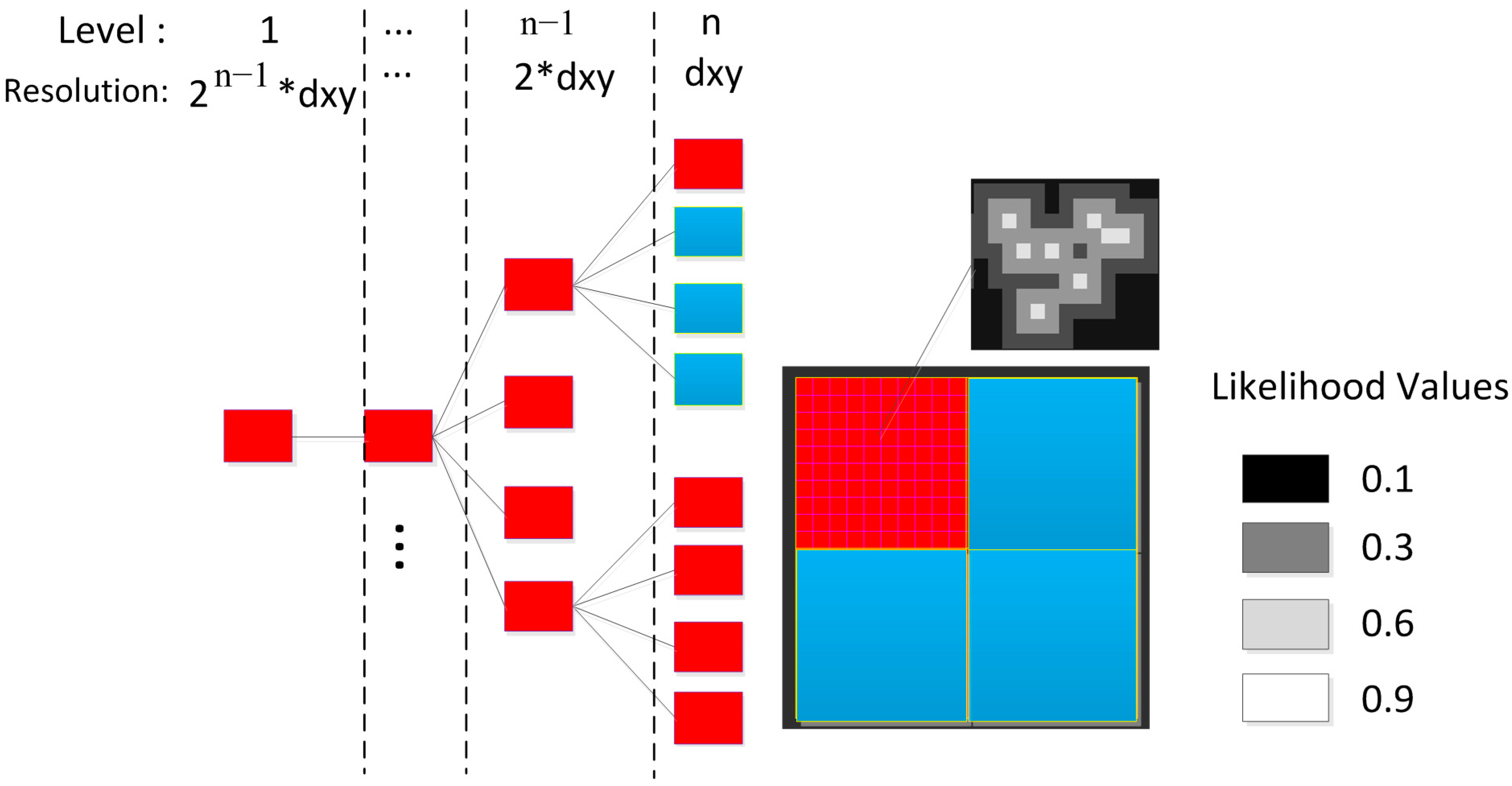

2.2. LiDAR Scan Matching

2.3. EKF Fusion Modelling

3. Results and Discussion

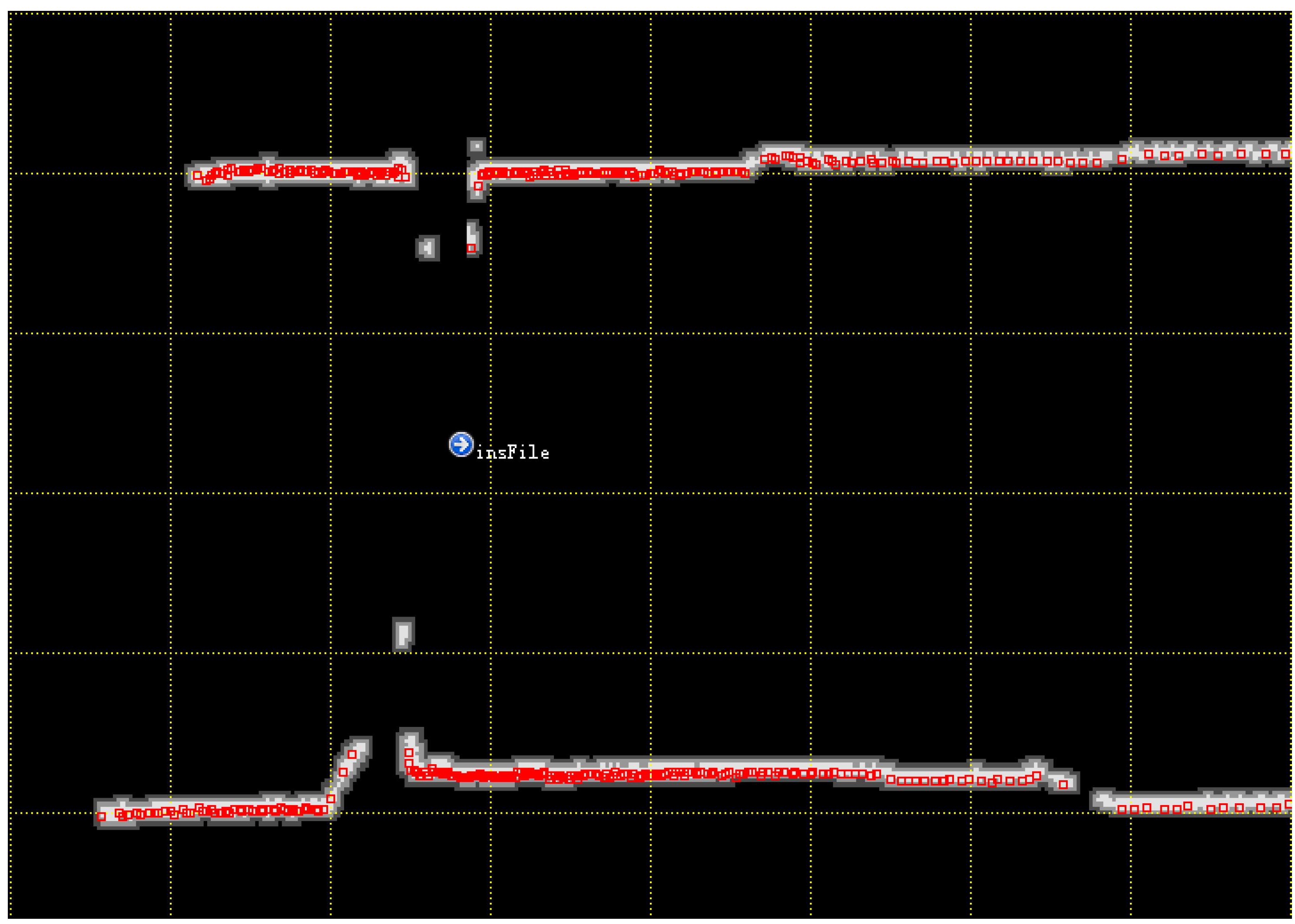

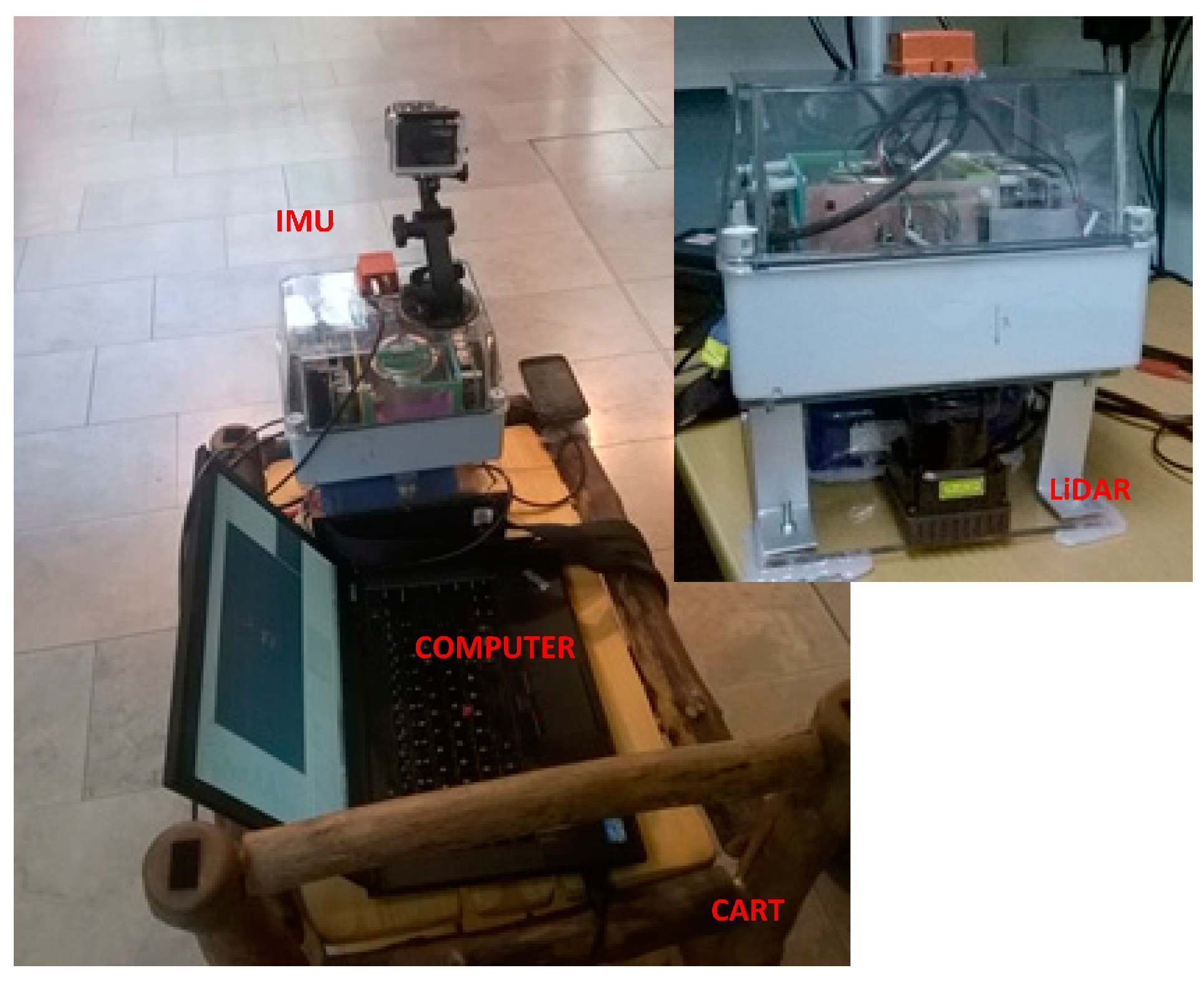

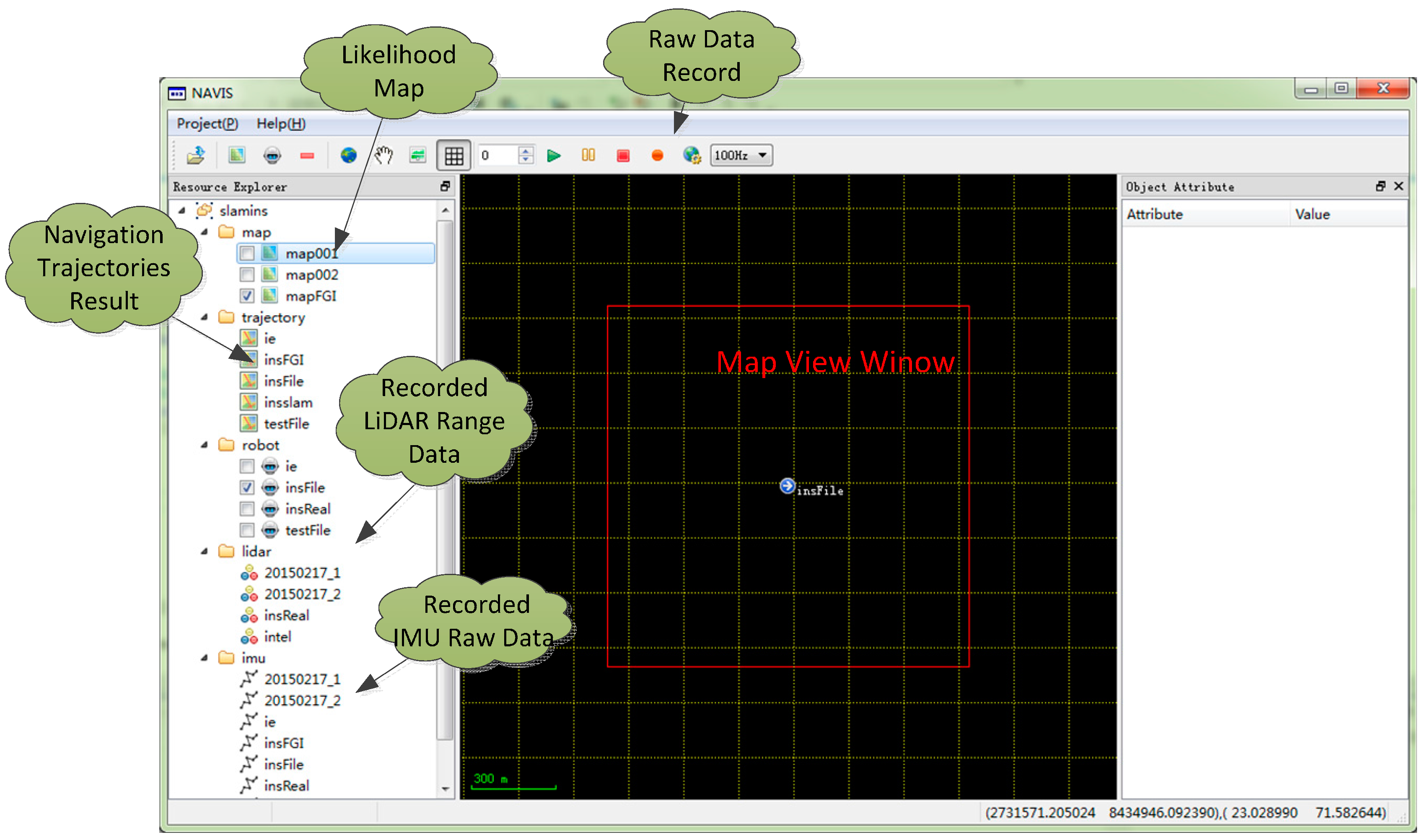

3.1. System Overview

3.2. Evaluation of Stationary Estimation

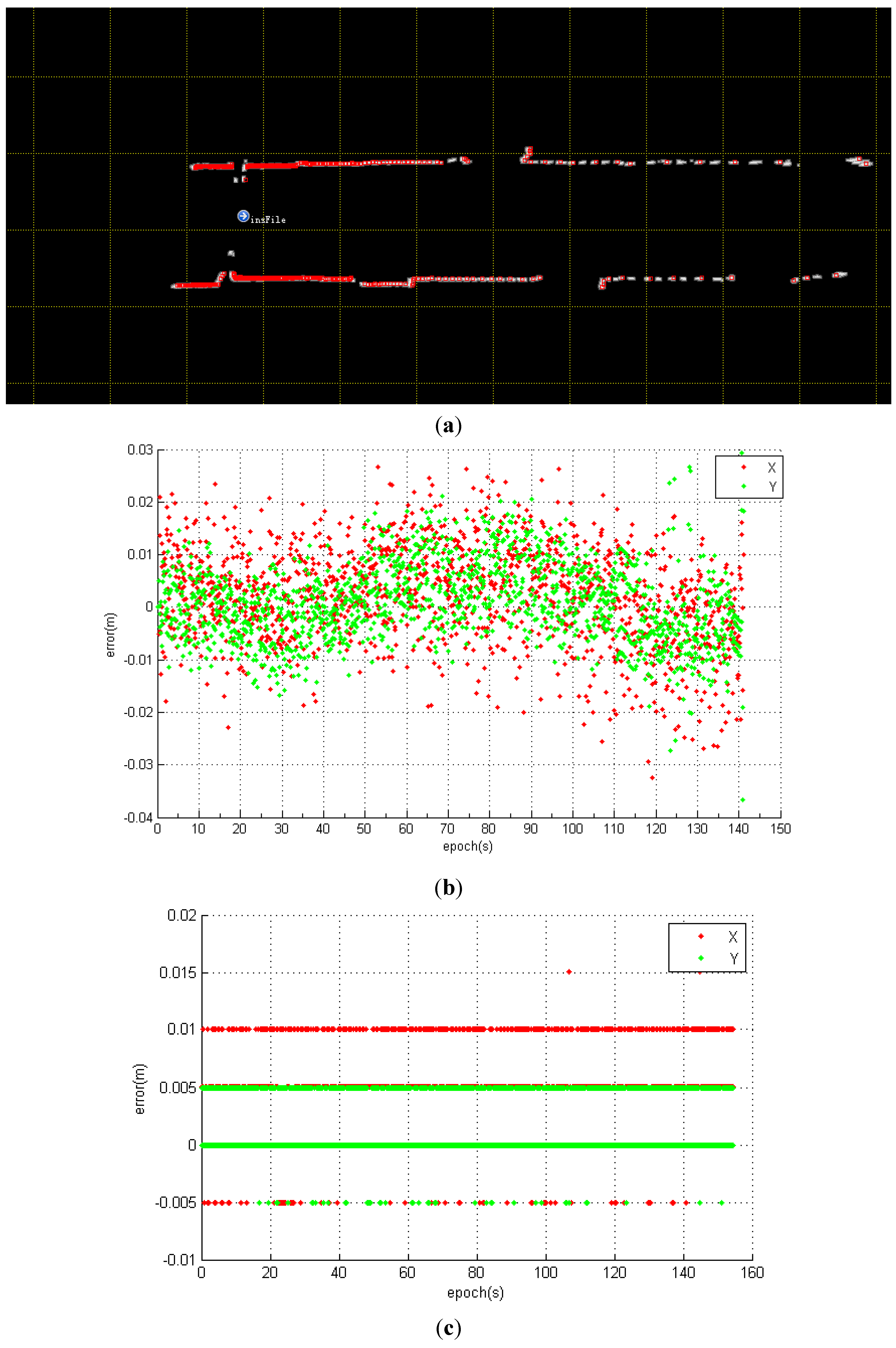

- The error distribution of the LiDAR scan-matching method is stepwise, whereas that of the IMU + LiDAR solution resembles white noise. As previously mentioned, the LiDAR scan-matching algorithm is an IMLE, a likelihood grid map-based search method that selects the optimum pose from a set of candidates. The likelihood map is divided into small cells that serve as candidate positions according to the map resolution and the angular search interval, which are set to 1 cm and 0.25° in this test. We believe this discretization is the primary reason for the stepwise error distribution. In the IMU + LiDAR combined method, however, Gaussian error dominates the EKF model, so the positioning error is distributed like white noise.

- The positioning and heading estimates of LiDAR scan matching alone are better than those of the fused IMU + LiDAR method. In the static condition, the incoming laser scans show no feature changes. The LiDAR range measurement noise is the only stochastic noise source, and under this favorable condition the IMLE easily detects that the platform is stationary. When a commercial-grade IMU is integrated with the LiDAR, however, the accumulated drift of the gyroscope and accelerometer degrades the accuracy of the final positioning result, even though the inertial solution is constrained by the LiDAR measurements in the EKF. The heading errors remain minor and can be neglected in the positioning process. It is anticipated that integrating a higher-grade IMU (tactical-grade or navigation-grade) would mitigate the positioning error.

- The positioning results along the y-axis are better than those along the x-axis, regardless of whether the IMU is integrated. Table 1 lists the numerical statistics of the stationary experiments. The RMS errors of the x-axis, y-axis, and heading estimates with the IMU + LiDAR solution are 0.009 m, 0.007 m, and 0.065°, whereas the corresponding RMS errors with the LiDAR-only solution are 0.007 m, 0.004 m, and 0.000°. The RMS error of the x-axis is higher than that of the y-axis because there are more features along the y-axis (along the corridor direction) than along the x-axis (across the corridor direction) for scan matching. As shown in Figure 7a, almost all laser scan points are horizontally distributed and only a few are vertically distributed, which makes the positioning accuracy in the Y direction higher than in the X direction. This result indicates that the distribution of environmental features directly affects the positioning results [19]. The heading error of the LiDAR-only solution is zero because the angular search step of the current IMLE is 0.25° and the maximum detection range is 30 m: a 0.25° heading change displaces a laser point on a 30 m target by about 13 cm (30 m × 0.25° × π/180 ≈ 0.131 m), and a displacement of this size never occurs during the stationary test.
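The discretization argument in the points above can be illustrated with a toy model. The sketch below is not the IMLE itself: it assumes the candidate search simply returns the grid candidate nearest to the true value, using the 1 cm map resolution and 0.25° angular step quoted in the text. The function name `snap_to_grid` and the sample offsets are hypothetical.

```python
# Toy model of a discretized candidate search (an assumption, not the IMLE).
MAP_RESOLUTION_M = 0.01   # map resolution quoted in the text
ANGLE_STEP_DEG = 0.25     # angular search interval quoted in the text


def snap_to_grid(value, step):
    """Return the grid candidate nearest to `value` for a given step size."""
    return round(value / step) * step


# Hypothetical small position offsets (m) and heading perturbations (deg).
true_offsets_m = [0.0012, 0.0038, 0.0049, 0.0051, 0.0079, 0.0124]
true_headings_deg = [0.02, -0.05, 0.08, -0.11]

position_estimates_m = [snap_to_grid(x, MAP_RESOLUTION_M) for x in true_offsets_m]
heading_estimates_deg = [snap_to_grid(h, ANGLE_STEP_DEG) for h in true_headings_deg]

# Estimates can only take values on the grid, so the error moves in steps.
distinct_positions_m = sorted({round(p, 4) for p in position_estimates_m})
position_errors_m = [est - true for est, true in zip(position_estimates_m, true_offsets_m)]

# Heading perturbations smaller than half a step all map to the zero candidate.
all_headings_zero = all(h == 0 for h in heading_estimates_deg)
```

Under this assumption the position estimates collapse onto a handful of grid values, which gives a stepwise error trace, and any heading perturbation below half the angular step (0.125°) is reported as exactly zero.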

| Solution | Quantity | RMS Error | Mean Error | Maximum Error |
|---|---|---|---|---|
| IMU + LiDAR | X (m) | 0.009 | 0.007 | 0.033 |
| IMU + LiDAR | Y (m) | 0.007 | 0.058 | 0.037 |
| IMU + LiDAR | Heading (degree) | 0.065 | 0.055 | 0.123 |
| LiDAR | X (m) | 0.007 | 0.006 | 0.015 |
| LiDAR | Y (m) | 0.004 | 0.003 | 0.005 |
| LiDAR | Heading (degree) | 0.0 | 0.0 | 0.0 |
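Table 1 reports RMS, mean, and maximum errors. As a minimal sketch of how such statistics could be computed from a series of per-epoch errors, the following assumes that the mean and maximum are taken over absolute errors; the text does not state the convention, and the function name `error_statistics` and the sample values are hypothetical.

```python
import math


def error_statistics(errors):
    """Return RMS, mean absolute, and maximum absolute error of a series."""
    if not errors:
        raise ValueError("errors must contain at least one value")
    abs_errors = [abs(e) for e in errors]
    n = len(errors)
    rms = math.sqrt(sum(e * e for e in errors) / n)
    mean_abs = sum(abs_errors) / n
    return {"rms": rms, "mean": mean_abs, "max": max(abs_errors)}


# Hypothetical position errors in metres, not taken from the experiments.
sample_errors_m = [0.004, -0.006, 0.009, -0.003, 0.012]
stats = error_statistics(sample_errors_m)
```

For any error series, the mean absolute error cannot exceed the RMS error, and the RMS error cannot exceed the maximum absolute error, which gives a quick consistency check on tabulated values.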

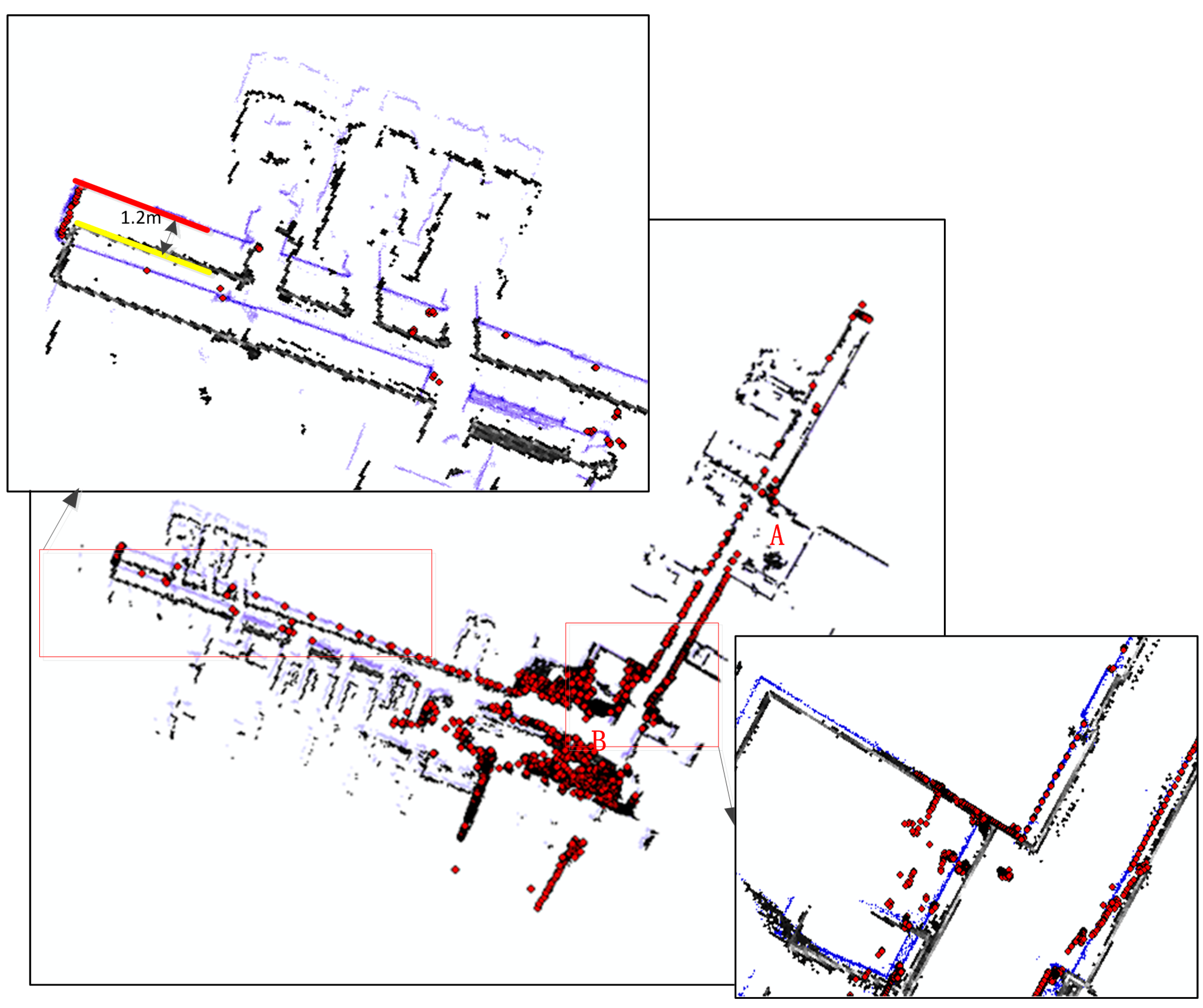

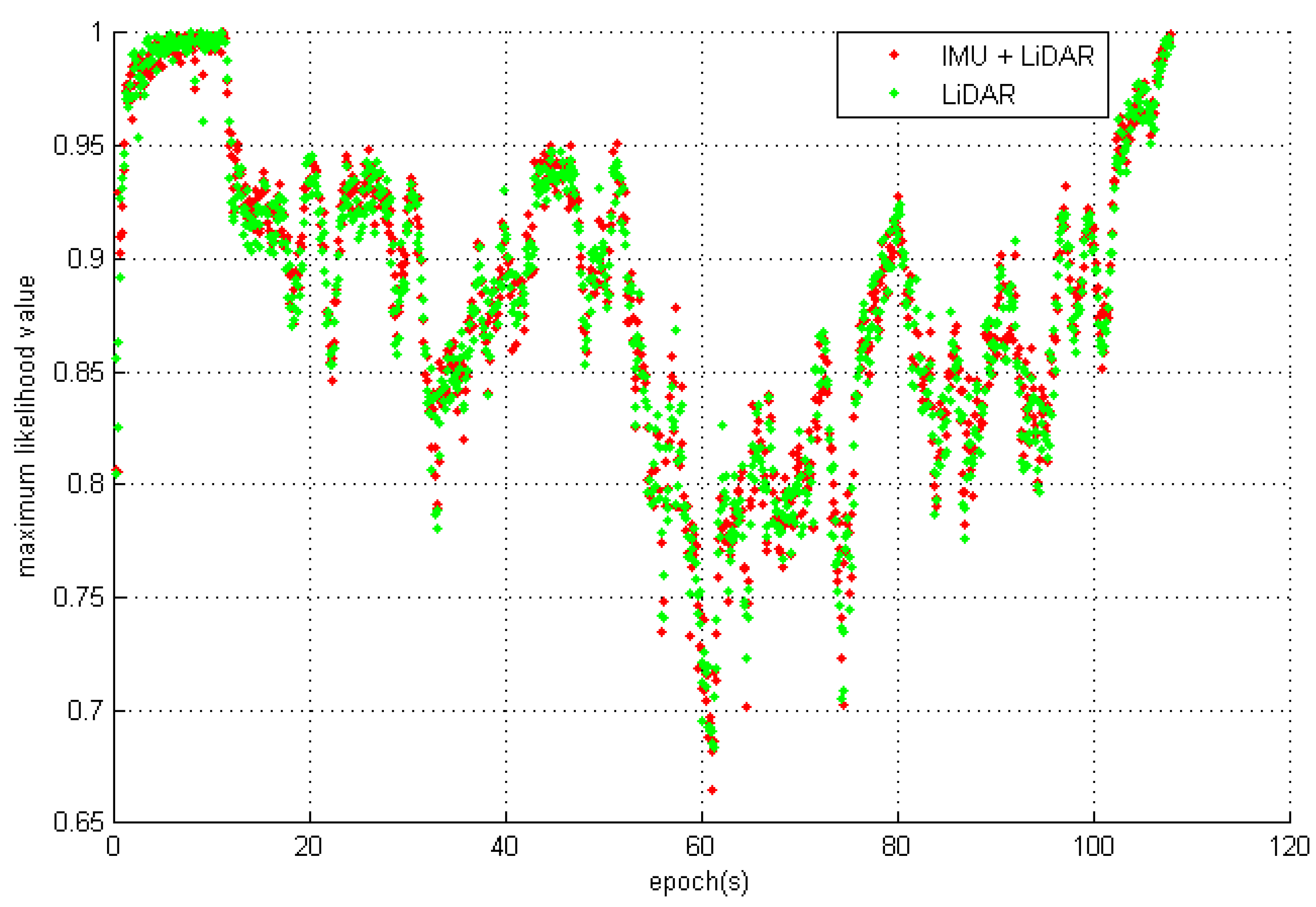

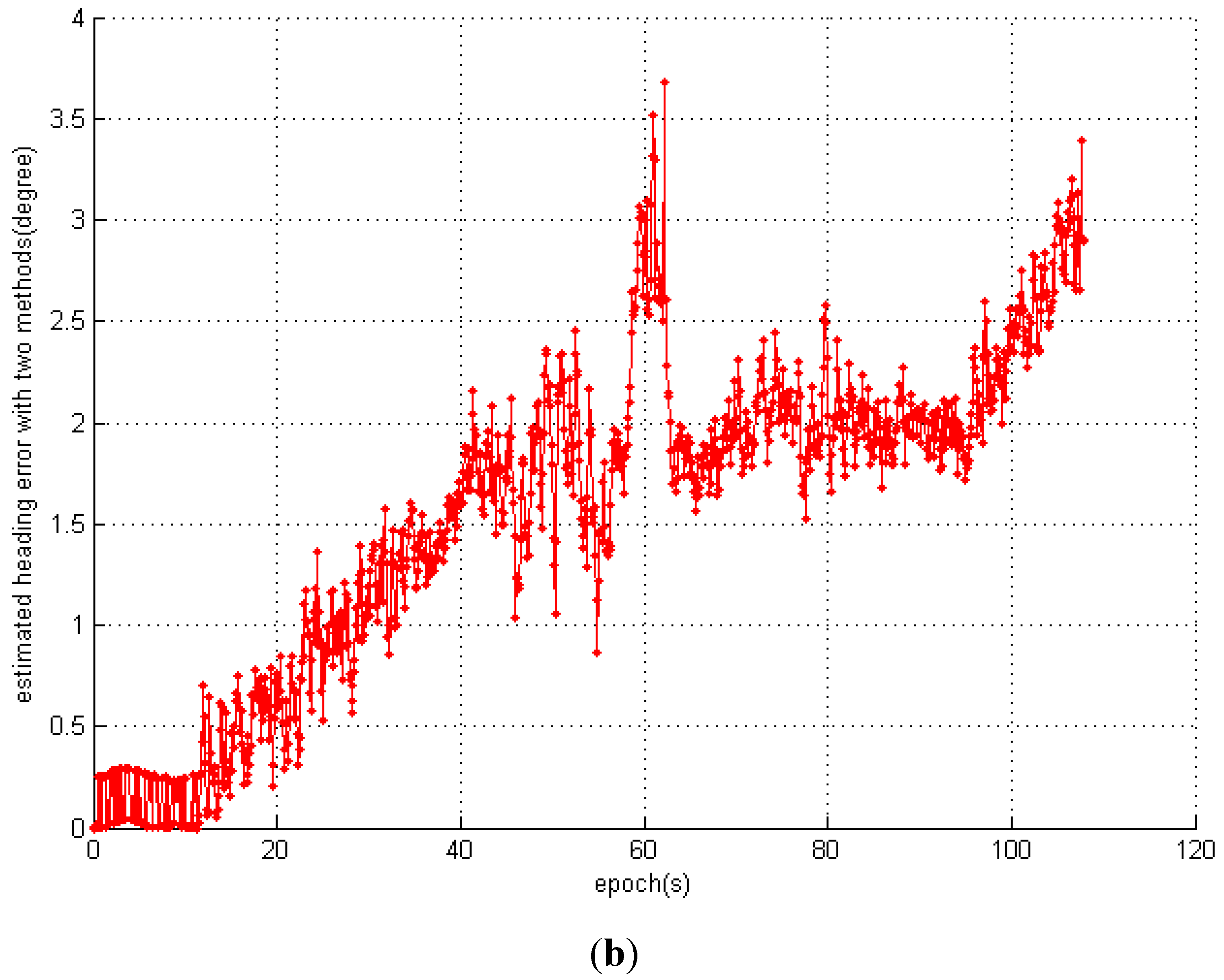

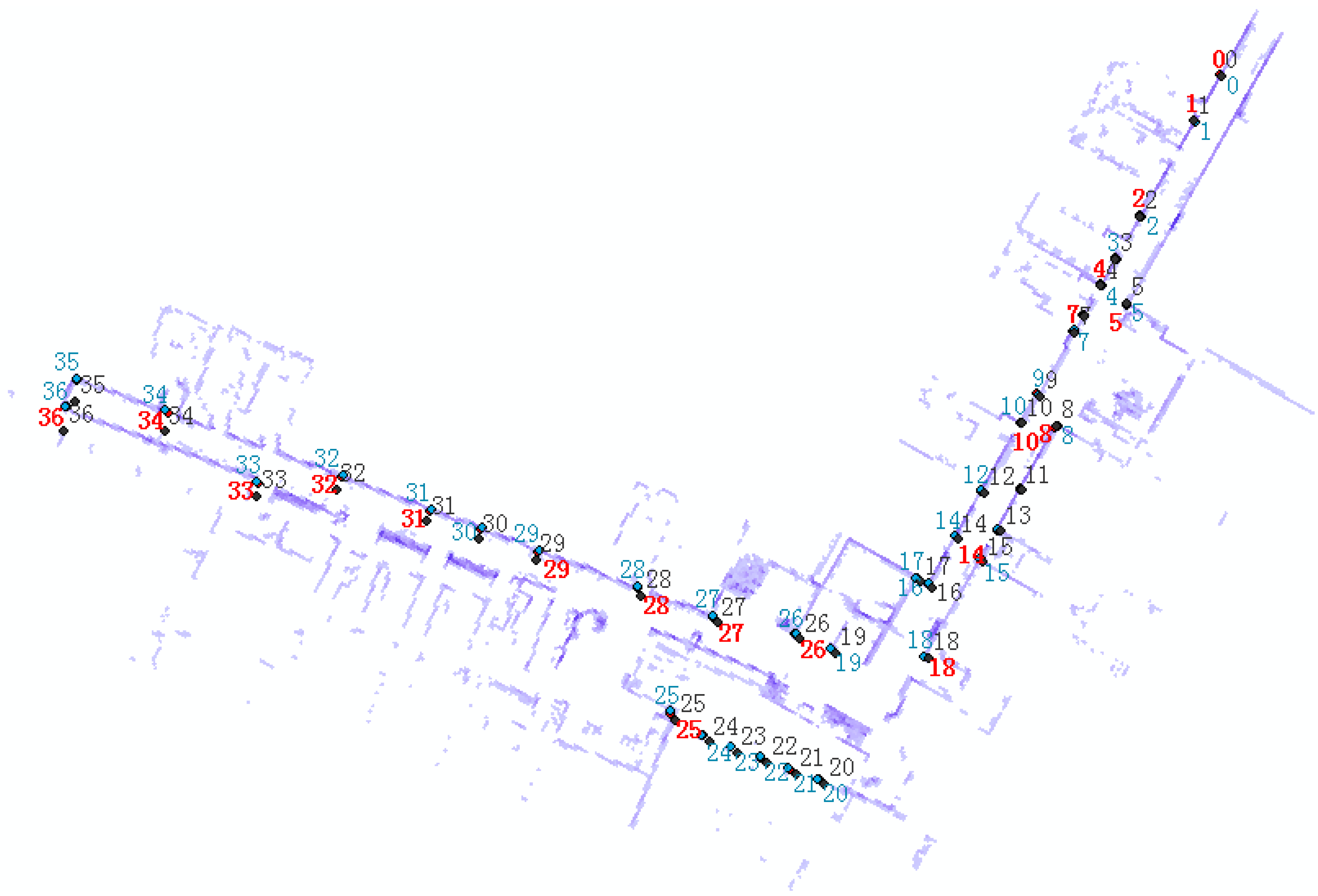

3.3. Evaluation of Dynamic Estimation

| Solution | RMS Error | Mean Error | Maximum Error |
|---|---|---|---|
| IMU + LiDAR | 0.084 | 0.075 | 0.188 |
| LiDAR | 0.433 | 0.336 | 1.195 |

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Fotopoulos, G.; Cannon, M. An overview of multi-reference station methods for cm-level positioning. GPS Solut. 2001, 4, 1–10.
2. Miller, M.M.; Soloviev, A.; Uijt de Haag, M.; Veth, M.; Raquet, J.; Klausutis, T.J.; Touma, J.E. Navigation in GPS Denied Environments: Feature-Aided Inertial Systems; Air Force Research Lab, Eglin AFB, FL, Munitions Directorate: Valparaiso, FL, USA, 2010.
3. Veth, M.M.; Raquet, J. Fusion of Low-Cost Imaging and Inertial Sensors for Navigation. J. Inst. Navig. 2007, 54, 11–20.
4. Veth, M.J. Fusion of Imaging and Inertial Sensors for Navigation. Ph.D. Dissertation, Air Force Institute of Technology (AFIT), Dayton, OH, USA, 2006.
5. Scaramuzza, D.; Fraundorfer, F. Visual odometry [tutorial]. IEEE Robot. Autom. Mag. 2011, 18, 80–92.
6. Jiang, W.; Wang, L.; Niu, X.; Zhang, Q.; Zhang, H.; Tang, M.; Hu, X. High-Precision Image Aided Inertial Navigation with Known Features: Observability Analysis and Performance Evaluation. Sensors 2014, 14, 19371–19401.
7. Nützi, G.; Weiss, S.; Scaramuzza, D.; Siegwart, R. Fusion of IMU and vision for absolute scale estimation in monocular SLAM. J. Intell. Robot. Syst. 2011, 61, 287–299.
8. Lupton, T.; Sukkarieh, S. Efficient integration of inertial observations into visual SLAM without initialization. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, St. Louis, MO, USA, 10–15 October 2009; pp. 1547–1552.
9. Kneip, L.; Martinelli, A.; Weiss, S.; Scaramuzza, D.; Siegwart, R. Closed-form solution for absolute scale velocity determination combining inertial measurements and a single feature correspondence. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011; pp. 4546–4553.
10. Kleinert, M.; Schleith, S. Inertial aided monocular SLAM for GPS-denied navigation. In Proceedings of the IEEE Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI), Salt Lake City, UT, USA, 5–7 September 2010; pp. 20–25.
11. Klein, I.; Filin, S. Lidar and INS Fusion in Periods of GPS Outages for Mobile Laser Scanning Mapping Systems. ISPRS-Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2011, 3812, 231–236.
12. Kohlbrecher, S.; Von Stryk, O.; Meyer, J.; Klingauf, U. A flexible and scalable SLAM system with full 3D motion estimation. In Proceedings of the IEEE International Symposium on Safety, Security, and Rescue Robotics, Kyoto, Japan, 1–5 November 2011; pp. 155–160.
13. Soloviev, A. Tight coupling of GPS, laser scanner, and inertial measurements for navigation in urban environments. In Proceedings of the Position, Location and Navigation Symposium, 2008 IEEE/ION, Monterey, CA, USA, 5–8 May 2008; pp. 511–525.
14. Wang, X.; Toth, C.; Grejner-Brzezinska, D.; Sun, H. Integration of terrestrial laser scanner for ground navigation in GPS-challenged environment. In Proceedings of the XXI Congress of International Society for Photogrammetry and Remote Sensing, Beijing, China, 3–11 July 2008; pp. 3–11.
15. Borges, G.A.; Aldon, M.J. Optimal Robot Pose Estimation using Geometrical Maps. IEEE Trans. Robot. Autom. 2002, 18, 87–94.
16. Pfister, S.T. Algorithms for Mobile Robot Localization and Mapping Incorporating Detailed Noise Modeling and Multi-scale Feature Extraction. Ph.D. Dissertation, California Institute of Technology, Pasadena, CA, USA, 2006.
17. Horn, J.P. Bahnführung Eines Mobilen Roboters Mittels Absoluter Lagebestimmung Durch Fusion Von Entfernungsbild-und Koppelnavigations-Daten. Ph.D. Thesis, Technical University of Munich, Munich, BY, Germany, 1997.
18. Grisetti, G.; Stachniss, C.; Burgard, W. Improved Techniques for Grid Mapping with Rao-Blackwellized Particle Filters. IEEE Trans. Robot. 2007, 23, 34–46.
19. Tang, J.; Chen, Y.; Chen, L.; Liu, J.; Hyyppä, J.; Kukko, A.; Chen, R. Fast Fingerprint Database Maintenance for Indoor Positioning Based on UGV SLAM. Sensors 2015, 15, 5311–5330.
20. Kim, H.S.; Baeg, S.H.; Yang, K.W.; Cho, K.; Park, S. An enhanced inertial navigation system based on a low-cost IMU and laser scanner. In Proceedings of the SPIE 8387, Unmanned Systems Technology XIV, 83871J, Baltimore, MD, USA, 1 May 2012.
21. Maybeck, P.S. Stochastic Models, Estimation and Control; Navtech Book & Software Store: Dayton, NY, USA, 1994.
22. Tang, J.; Chen, Y.; Jaakkola, A.; Liu, J.; Hyyppä, J.; Hyyppä, H. NAVIS—An UGV Indoor Positioning System Using Laser Scan Matching for Large-Area Real-Time Applications. Sensors 2014, 14, 11805–11824.
23. Jekeli, C. Inertial Navigation Systems with Geodetic Applications; Walter de Gruyter: Berlin, Germany, 2001.
24. Titterton, D.; Weston, J.L. Strapdown Inertial Navigation Technology, 2nd ed.; The American Institute of Aeronautics and Astronautics and the Institution of Electrical Engineers: Reston, VA, USA, 2004.
25. Shin, E.-H.; El-Sheimy, N. Accuracy Improvement of Low Cost INS/GPS for Land Applications; University of Calgary, Department of Geomatics Engineering: Calgary, AB, Canada, 2001.
26. Shin, E.H. Estimation Techniques for Low-Cost Inertial Navigation. Available online: http://www.geomatics.ucalgary.ca/links/GradTheses.html (accessed on 10 July 2015).
27. Konolige, K.; Chou, K. Markov localization using correlation. In Proceedings of the Sixteenth International Joint Conference on Artificial Intelligence, San Francisco, CA, USA, 15 August 1999; pp. 1154–1159.
28. Olson, E.B. Real-time correlative scan matching. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Kobe, Japan, 12–17 May 2009; pp. 4387–4393.
29. Simon, D. Optimal State Estimation: Kalman, H Infinity, and Nonlinear Approaches; John Wiley & Sons: Hoboken, NJ, USA, 2006.
30. Grewal, M.S.; Andrews, A.P. Kalman Filtering: Theory and Practice; Prentice-Hall, Inc.: Hoboken, NJ, USA, 1993.
31. MTi User Manual. Available online: https://www.xsens.com/wp-content/uploads/2013/12/MTi-User-Manual.pdf (accessed on 10 July 2015).

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tang, J.; Chen, Y.; Niu, X.; Wang, L.; Chen, L.; Liu, J.; Shi, C.; Hyyppä, J. LiDAR Scan Matching Aided Inertial Navigation System in GNSS-Denied Environments. Sensors 2015, 15, 16710-16728. https://doi.org/10.3390/s150716710
