1. Introduction

Nozzles are common mechanical components and are widely used in agriculture, manufacturing and the service industry. A micro-spray nozzle is an important component of an agricultural sprayer, which is used to spray water, pesticides and nutrients. Real-time flow uniformity depends on the internal quality of the micro-spray nozzle: internal defects create unstable flow and lower both the quality and the price of the nozzles. These internal defects usually arise during cutting with a CNC (computer numerical control) machine. Chang and Yu [1] proposed a triangular-pitch shell-and-tube spray evaporator featuring an interior spray technique; in their study, the dry-out phenomenon was prevented and the heat transfer performance was improved. In addition, micro-spray nozzles have to be sorted manually, because the heterogeneous surface of the micro-spray nozzle makes automatic detection very difficult.

Image processing techniques are powerful and are widely used to inspect industrial products. Chen et al. [2] designed an automatic damage detection system for engineering ceramic surfaces using image processing techniques, pattern recognition and machine vision. Paniagua et al. [3] proposed a neuro-fuzzy classification system to inspect the quality of industrial cork samples, combining Gabor filtering and neuro-fuzzy computing with three wavelet-based texture quality features. A microscopic vision system [4] has been employed to measure the surface roughness of micro-heterogeneous textures in deep holes, using the frequency-domain features of microscopic images and a back-propagation artificial neural network optimized by a genetic algorithm. Neural networks and geometric, color and texture features have often been employed in the classification of plants and crops [5,6,7]. Shen et al. [8] used the Otsu method, the HSI color system and the Sobel operator to extract disease spot regions and calculate leaf areas. Park et al. [9] employed an unsupervised segmentation algorithm based on Gaussian mixture models to segment color image regions.

However, to the best of the authors’ knowledge, there is no literature on inspecting the internal quality of micro-spray nozzles with image processing techniques. Several studies [10,11,12] have addressed the determination of drop sizes and shapes for spray nozzles. The drop size distribution of an irrigation spray nozzle was determined using image processing techniques, and the relationship among drop size distribution, operating pressure and nozzle size was discussed [10]. The droplet shape and size of a diesel spray were characterized using the wavelet transform, a Gaussian filter and sub-pixel contour extraction [11]. Gray level gradients, boundary curvature detection, the Hough transform and the convex-hull method were employed to count and size spray particles [12].

Currently, internal defects of micro-spray nozzles are inspected (sorted) manually using stereomicroscopes. The procedure is laborious and inefficient, and labor accounts for about 80% of the total cost of micro-spray nozzle products. The detection process therefore has to be automated to reduce the total cost. This paper aims to develop a machine vision system that inspects micro-spray nozzles correctly and automatically. The specific goals are: (1) to develop an algorithm to segment the defect regions; (2) to extract gray level and texture features; and (3) to classify the nozzles into grades with a classifier using these features.

In summary, we develop a machine vision system to correctly and automatically inspect and classify micro-spray nozzles. The system includes an extraction algorithm of defect regions, a feature estimation algorithm for defect regions and a classifier for the quality of micro-spray nozzles.

2. Materials and Methods

2.1. Micro-Spray Nozzle Structure and Defects

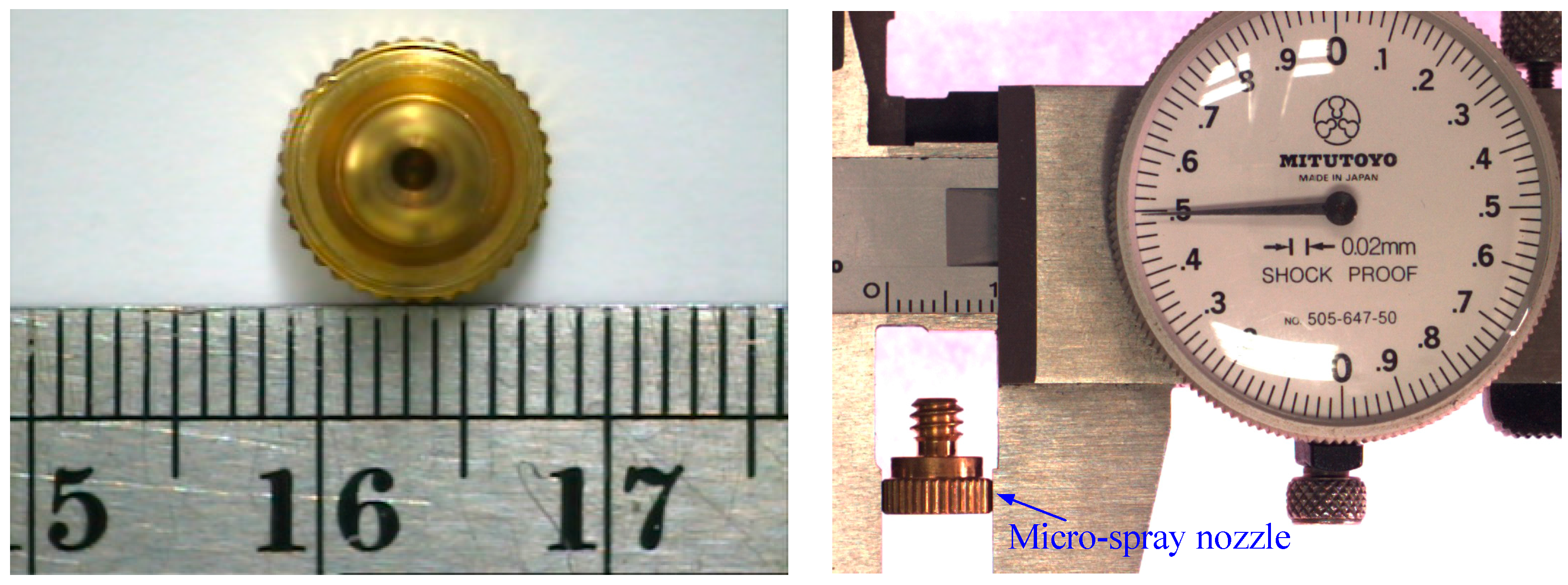

Micro-spray nozzles (shown in

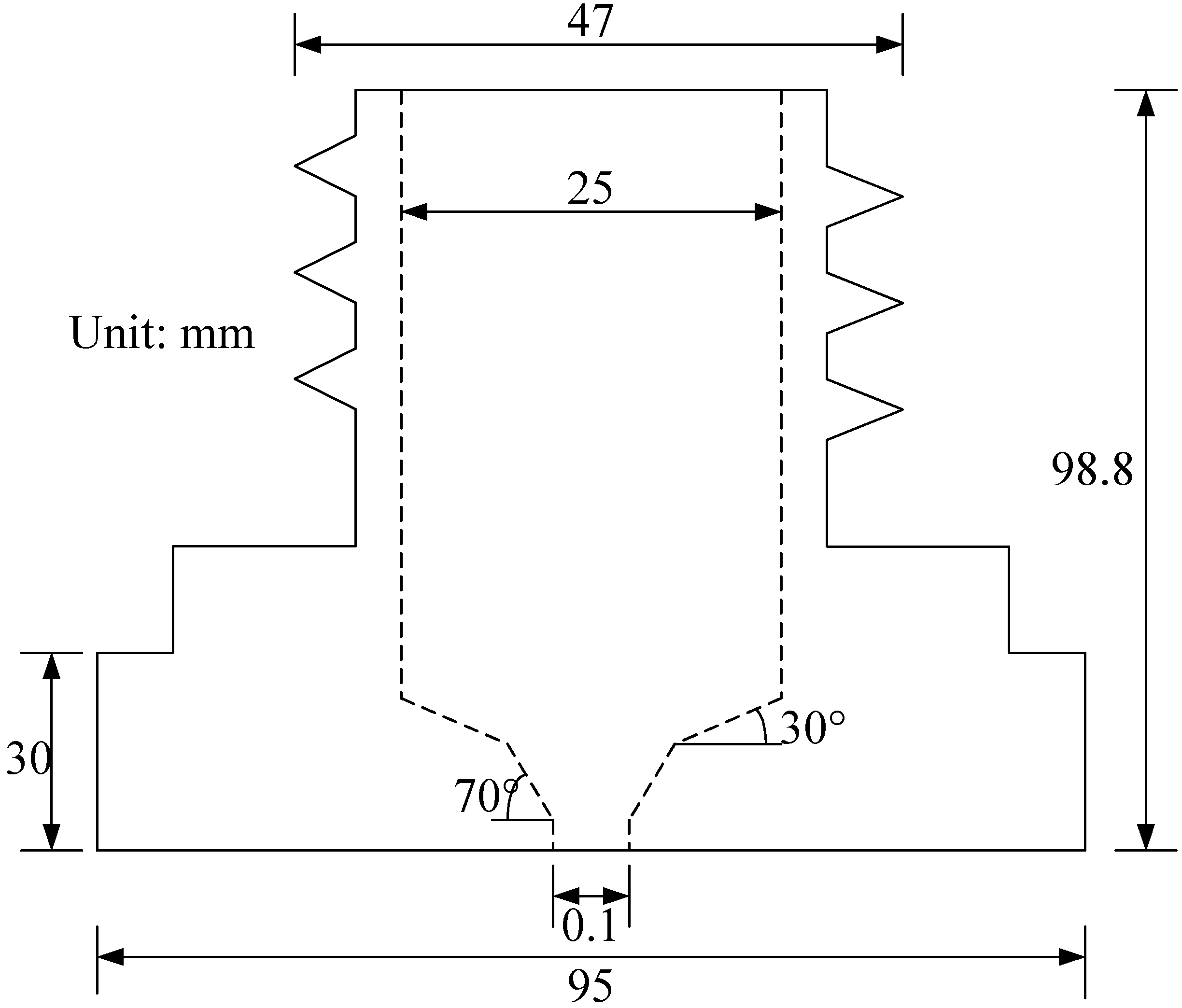

Figure 1) are provided by Natural Fog Multi-Tech Precision Industry Corporation Ltd. (Tai-Chung, Taiwan). The structure profile of the micro-spray nozzle is shown in

Figure 2. The outlet diameter of the micro-spray nozzle is 0.1 mm. There are two inclined annular-planes, A and B (as shown in

Figure 2), on the inside surface of the micro-spray nozzle.

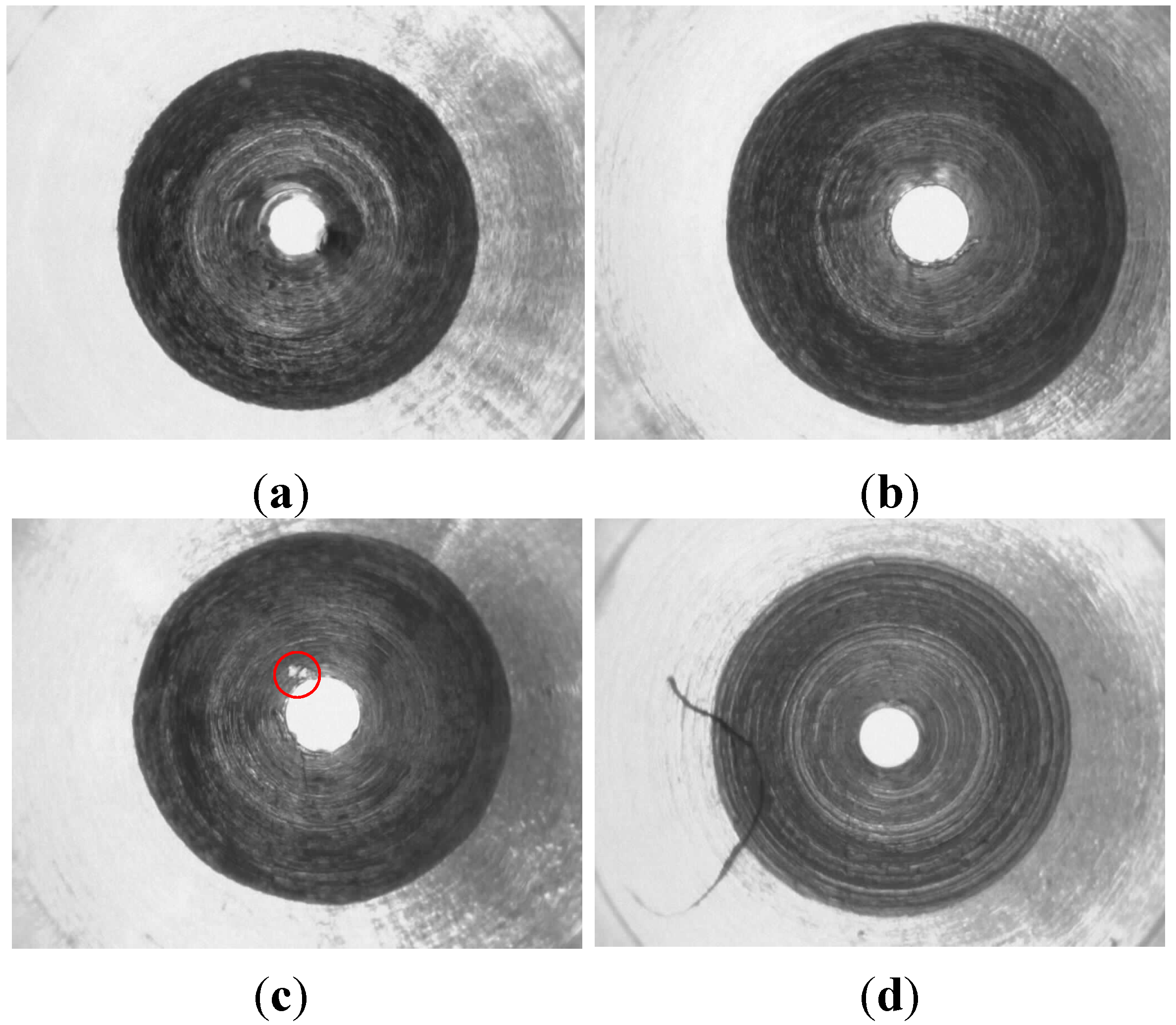

Figure 3 depicts the circular textures in the inner image of micro-spray nozzles after CNC machining. The defects are introduced by the CNC machine during manufacturing and appear on the outlet and on inclined planes A and B. Four possible defects, namely a small outlet, a deckle edge on the outlet, and pellet and strip metal filings on the inclined planes, are shown in Figure 3. The purpose of this study is to inspect these defects automatically using a machine vision system.

Figure 1.

Micro-spray nozzle.


Figure 2.

The profile of the micro-spray nozzle.


Figure 3.

Four possible defects. (a) Small outlet; (b) Deckle edge of outlet; (c) Pellet metal filings; (d) Strip metal filings.


2.2. Hardware System

The machine vision system implemented to inspect the inner images of micro-spray nozzles is illustrated in Figure 4. This system includes an IEEE 1394 CCD color camera (DFK-31BF03, Imaging Source Inc., Bremen, Germany), a stereomicroscope (Stemi 2000-C, Zeiss Inc., Oberkochen, Germany), a front-illuminating white LED with a diffuse filter, a fixed table and a personal computer. Images of 1024 × 768 pixels are grabbed by programs written in Visual C++ 6.0 and linked with the Open Source Computer Vision Library (OpenCV 1.0, Intel Corporation).

Figure 4.

Illustration of the machine vision system for micro-spray nozzle defect inspection.


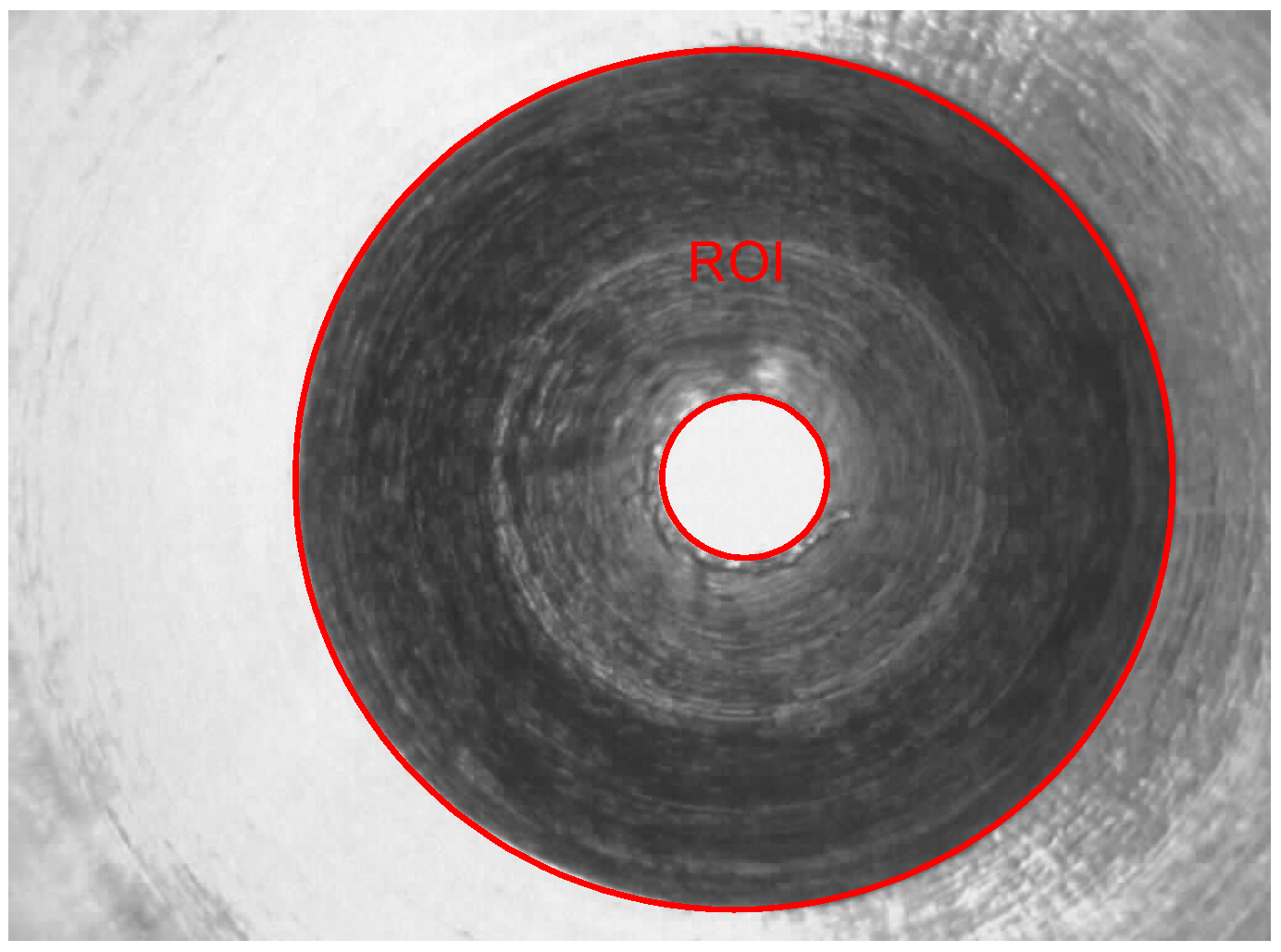

2.3. Region Segmentation and Feature Extraction

Several algorithms are proposed to detect micro-spray nozzle defects: estimation of the outlet’s geometric features and segmentation of the region of interest (ROI), a circle inspection (CI) algorithm and a classifier. Details of the proposed algorithms are described as follows.

2.3.2. Possible Defect Segmentation

Segmenting defect regions is an important step before possible defects (including the deckle edge and pellet and strip metal filings) are detected and classified. A preliminary experiment was conducted as follows. First, an inspection circle is used to sample gray levels with a scanning resolution of one pixel, as shown in Figure 8. The gray-level distributions differ from circle to circle, as illustrated in Figure 9. However, defects and non-defects are difficult to distinguish using the thresholding method [13]. Therefore, a novel method, the circle inspection (CI) algorithm, is proposed in this study for segmenting the defect regions of micro-spray nozzles.

Figure 8.

The scanning direction of the circle inspection for the ROI.


Figure 9.

The distribution of gray levels of the circle inspection.


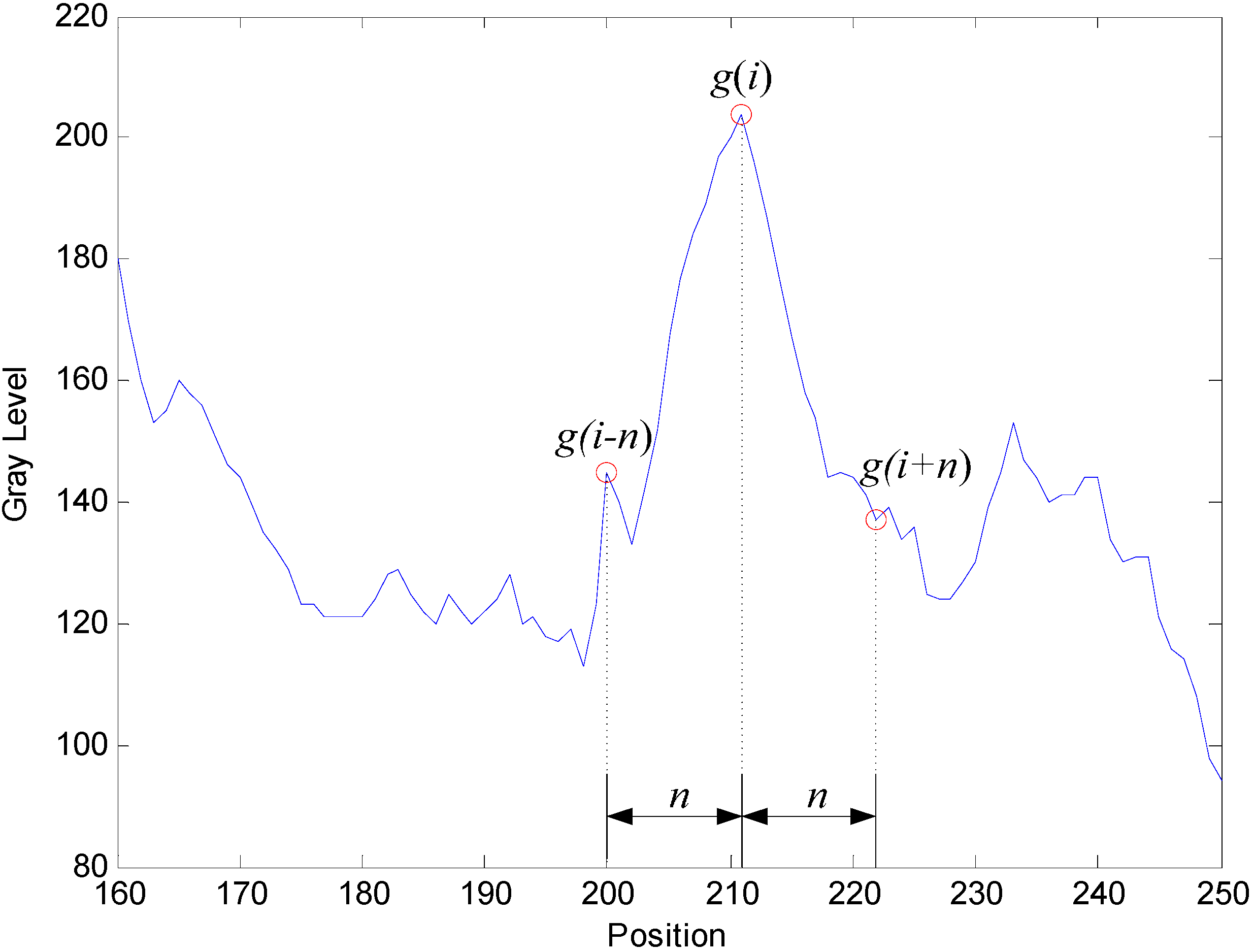

The CI algorithm extracts defect regions from the internal images of micro-spray nozzles according to differences in the gray level gradient. It proceeds as follows:

- Step 1. Estimate the gray-level distribution of the image along the circle $C_{R_j}$ with a scanning resolution of one pixel, where $(x_c, y_c)$ is the center and $R_{\min} < R_j < R_{\max}$.
- Step 2. Compute the gray level gradient of the circle, as demonstrated on Circle 1 in Figure 10, where $g(i)$ is the gray level of the circle, $i$ is the position on the circle and $n$ is the distance.
- Step 3. Segment the possible defect regions ($PDR$) by comparing the gradient with a threshold value $T$.
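The CI steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' Visual C++/OpenCV implementation: because the gradient and PDR equations are not reproduced here, the sketch assumes a gradient of the form $|g(i+n) - g(i)|$ taken around the closed circle and flags positions whose gradient exceeds $T$. Nearest-pixel sampling and the function names `sample_circle` and `circle_inspection` are hypothetical choices; the image is a plain list of rows of gray levels.

```python
import math


def sample_circle(img, xc, yc, r):
    """Gray levels along a circle of radius r, sampled roughly one pixel of arc apart."""
    m = max(8, int(round(2 * math.pi * r)))  # number of samples for ~1 px spacing
    h, w = len(img), len(img[0])
    samples = []
    for k in range(m):
        theta = 2 * math.pi * k / m
        x = int(round(xc + r * math.cos(theta)))  # nearest-pixel sampling
        y = int(round(yc + r * math.sin(theta)))
        x = min(max(x, 0), w - 1)  # clamp to the image bounds
        y = min(max(y, 0), h - 1)
        samples.append((x, y, img[y][x]))
    return samples


def circle_inspection(img, xc, yc, r_min, r_max, n=6, t=11):
    """Return pixel coordinates flagged as possible defect regions (PDR)."""
    flagged = set()
    for r in range(r_min + 1, r_max):  # R_min < R_j < R_max
        s = sample_circle(img, xc, yc, r)
        m = len(s)
        for i in range(m):
            g_i = s[i][2]
            g_in = s[(i + n) % m][2]  # the circle is closed, so wrap around
            # Assumed gradient form |g(i+n) - g(i)|, compared against T.
            if abs(g_in - g_i) > t:
                flagged.add((s[i][0], s[i][1]))
    return flagged
```

The defaults use the parameters reported in Section 3 ($n = 6$, $T = 11$). In practice the flagged pixels would still need morphological clean-up (hole-filling, opening, closing) before features are extracted.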

Figure 10.

Parameters of gray level gradient computation.


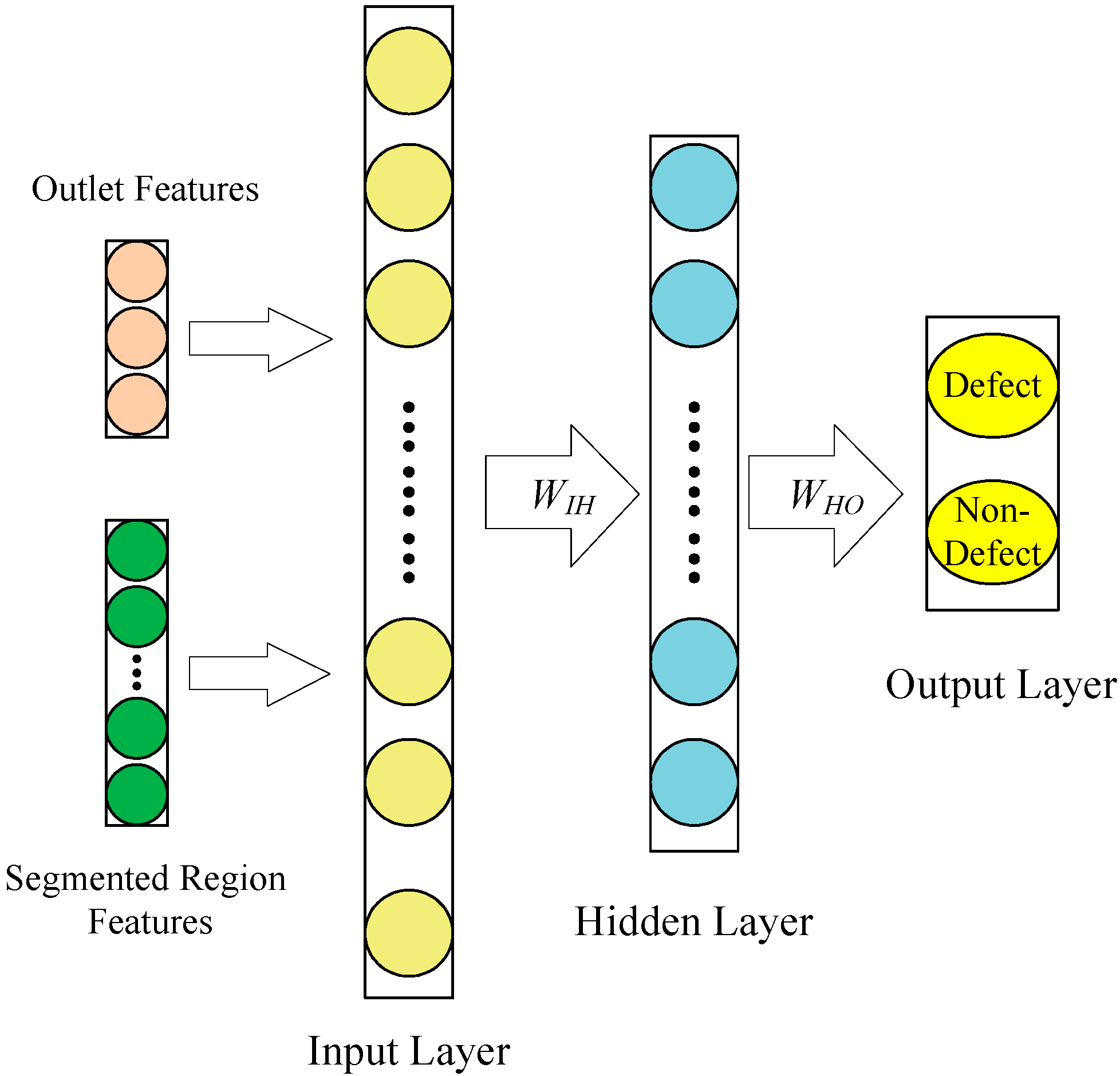

2.4. Classification: Classifier

Geometric, texture and color features have been widely employed in classification, and with a proper selection of features, the design of a classifier can be greatly simplified. This study adopts geometric features (mean diameter, diameter ratio and distance variance), color features (mean gray level and variance of gray level) and texture features (contrast, energy and entropy from gray level co-occurrence matrices (GLCMs) [15]) to classify defects and non-defects using a back propagation neural network (BPNN) [16], as shown in Figure 11. Mathematical formulations of these features are given in Table 1.
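The two color features are first-order statistics of the gray levels inside a segmented region, so a sketch can compute them directly over a binary mask. Table 1's definition of distance variance is truncated; `distance_variance` below therefore assumes, hypothetically, that it is the variance of the distances from outlet edge points to the outlet center. All function names are illustrative only.

```python
import math


def color_features(gray, mask):
    """Mean and (population) variance of gray levels where the mask is set."""
    vals = [g for grow, mrow in zip(gray, mask) for g, m in zip(grow, mrow) if m]
    mean = sum(vals) / len(vals)
    var = sum((v - mean) ** 2 for v in vals) / len(vals)
    return mean, var


def distance_variance(edge_points, xc, yc):
    """Hypothetical reading: variance of edge-point distances from the center."""
    d = [math.hypot(x - xc, y - yc) for x, y in edge_points]
    mean = sum(d) / len(d)
    return sum((v - mean) ** 2 for v in d) / len(d)
```

A perfectly circular outlet gives a distance variance of zero under this reading, and irregular or burred outlets increase it.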

Figure 11.

The structure of the back propagation neural network (BPNN) classifier.


Table 1.

Mathematical formulations of the features.


| Feature | Formulation |
| --- | --- |
| Distance variance | Variance of |
| Mean gray level | |
| Gray level variance | |
| Contrast (orientations 45°, 90°, 135° and 180°) | |
| Entropy (orientations 45°, 90°, 135° and 180°) | |
| Energy (orientations 45°, 90°, 135° and 180°) | |
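Because the formulations in Table 1 are not reproduced, the following sketch uses common Haralick-style definitions on a normalized, non-symmetric GLCM $P$: contrast $\sum_{i,j}(i-j)^2 P(i,j)$, energy $\sum_{i,j} P(i,j)^2$ (some authors take its square root) and entropy $-\sum_{i,j} P(i,j)\ln P(i,j)$. The pixel offset chosen for each angle, the quantization to 8 gray levels and the natural logarithm are assumptions, not details taken from the paper. Three features at four orientations give the 12 texture inputs.

```python
import math

# Assumed (dx, dy) pixel offsets per orientation, with y increasing downward.
OFFSETS = {45: (1, -1), 90: (0, -1), 135: (-1, -1), 180: (-1, 0)}


def glcm(img, dx, dy, levels=8, max_gray=255):
    """Normalized co-occurrence matrix of quantized gray levels for one offset."""
    h, w = len(img), len(img[0])
    q = [[v * levels // (max_gray + 1) for v in row] for row in img]  # quantize
    counts = [[0] * levels for _ in range(levels)]
    total = 0
    for y in range(h):
        for x in range(w):
            x2, y2 = x + dx, y + dy
            if 0 <= x2 < w and 0 <= y2 < h:
                counts[q[y][x]][q[y2][x2]] += 1
                total += 1
    return [[c / total for c in row] for row in counts]


def texture_features(img, levels=8):
    """[contrast, energy, entropy] for 45, 90, 135 and 180 degrees (12 values)."""
    feats = []
    for angle in (45, 90, 135, 180):
        p = glcm(img, *OFFSETS[angle], levels=levels)
        idx = [(i, j) for i in range(levels) for j in range(levels)]
        contrast = sum((i - j) ** 2 * p[i][j] for i, j in idx)
        energy = sum(p[i][j] ** 2 for i, j in idx)
        entropy = -sum(v * math.log(v) for row in p for v in row if v > 0)
        feats.extend([contrast, energy, entropy])
    return feats
```

A uniform region yields zero contrast, unit energy and zero entropy at every orientation, whereas machining marks that repeat along one direction raise the contrast only at the orientations that cross them.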

In this study, the BPNN classifier consists of three layers: an input layer, a hidden layer and an output layer (as shown in Figure 11). The input layer has 17 nodes, corresponding to 3 geometric features, 2 color features and 12 texture features, each normalized to the range [0, 1]. The output layer has two nodes, corresponding to the two categories: defect and non-defect. Initially, the number of nodes $n_H$ in the hidden layer is calculated by Equation (3) from $n_I$, the number of input nodes, and $n_o$, the number of output nodes. The objective of the learning process is to learn the relation between the feature pattern of each segmented region and its category. The BPNN is trained by adjusting the weights until the error reaches the convergence criterion of 0.1. The error signal is given by:

$$e_k(t) = d_k(t) - y_k(t)$$

where $d_k(t)$ is the desired response for neuron $k$ ($k = 1, 2$) and $y_k(t)$ is the output signal of neuron $k$ at iteration $t$.
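A minimal pure-Python sketch of a 17-11-2 BPNN with sigmoid units, trained by per-sample backpropagation on the error signal $e_k(t) = d_k(t) - y_k(t)$, is shown below. It is not the MATLAB 8.0 implementation used in the study: the learning rate, weight initialization, bias handling and the reading of the 0.1 convergence criterion as the mean over samples of $\sum_k e_k^2$ are all assumptions, and the names `BPNN` and `min_max_normalize` are hypothetical. Min-max scaling maps each feature to [0, 1] as described above.

```python
import math
import random


def min_max_normalize(rows):
    """Scale each feature column to [0, 1]; constant columns map to 0."""
    cols = list(zip(*rows))
    lo = [min(c) for c in cols]
    hi = [max(c) for c in cols]
    return [[(v - l) / (h - l) if h > l else 0.0 for v, l, h in zip(r, lo, hi)]
            for r in rows]


def sigmoid(z):
    z = max(-60.0, min(60.0, z))  # clamp to avoid overflow in math.exp
    return 1.0 / (1.0 + math.exp(-z))


class BPNN:
    """Three-layer network n_in -> n_hidden -> n_out with sigmoid units."""

    def __init__(self, n_in=17, n_hidden=11, n_out=2, seed=0):
        rng = random.Random(seed)
        # The last weight in each row multiplies a constant bias input of 1.
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(n_hidden)]
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)] for _ in range(n_out)]

    def forward(self, x):
        h = [sigmoid(sum(w * v for w, v in zip(row, x + [1.0]))) for row in self.w1]
        y = [sigmoid(sum(w * v for w, v in zip(row, h + [1.0]))) for row in self.w2]
        return h, y

    def train(self, xs, ds, lr=0.5, tol=0.1, max_epochs=100000):
        """Per-sample backpropagation; stops when mean sum_k e_k^2 < tol."""
        for epoch in range(max_epochs):
            sse = 0.0
            for x, d in zip(xs, ds):
                h, y = self.forward(x)
                e = [dk - yk for dk, yk in zip(d, y)]  # e_k(t) = d_k(t) - y_k(t)
                sse += sum(ek * ek for ek in e)
                # Local gradients (computed before any weight is changed).
                delta_out = [ek * yk * (1 - yk) for ek, yk in zip(e, y)]
                delta_hid = [hj * (1 - hj) * sum(delta_out[k] * self.w2[k][j]
                                                 for k in range(len(y)))
                             for j, hj in enumerate(h)]
                hb, xb = h + [1.0], x + [1.0]
                for k, row in enumerate(self.w2):
                    for j in range(len(row)):
                        row[j] += lr * delta_out[k] * hb[j]
                for j, row in enumerate(self.w1):
                    for i in range(len(row)):
                        row[i] += lr * delta_hid[j] * xb[i]
            if sse / len(xs) < tol:
                return epoch + 1  # number of epochs used
        return max_epochs

    def predict(self, x):
        """Index of the winning output node (0 = non-defect, 1 = defect here)."""
        _, y = self.forward(x)
        return max(range(len(y)), key=lambda k: y[k])
```

The default `max_epochs` mirrors the 100,000-iteration cap reported in Section 3; the default hidden-layer size of 11 is taken from the reported result rather than computed from Equation (3).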

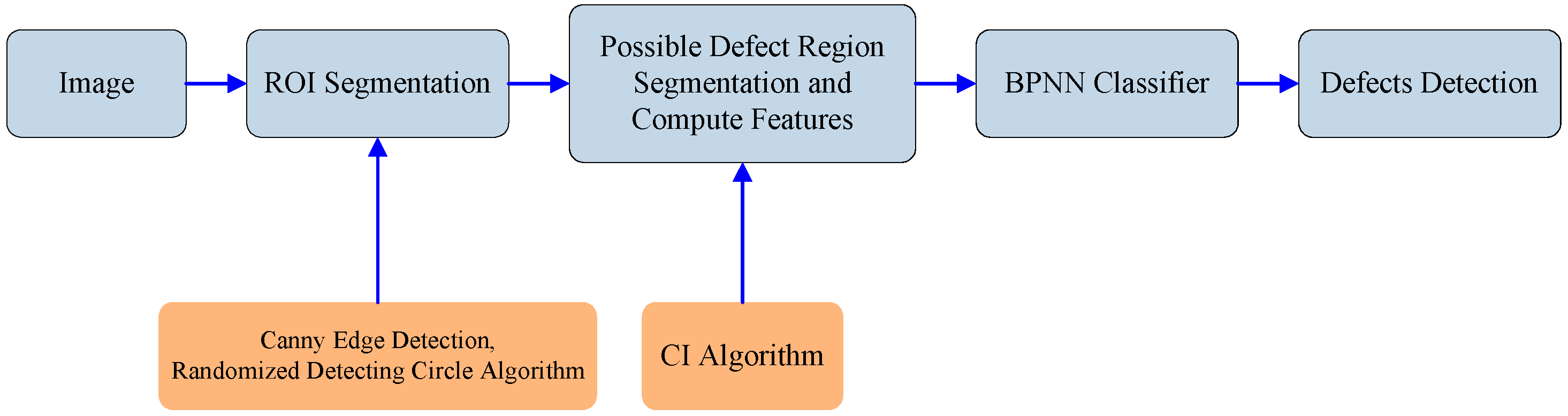

2.5. Overall Descriptions

The overall steps involved in defect region segmentation and classification are shown in Figure 12. The defect classification algorithm, which comprises image processing techniques, the CI algorithm and the BPNN classifier, is fully described in the previous two sections.

The program employed for detecting and classifying the defects is written in Microsoft Visual C++ 6.0 and OpenCV 1.0.

Figure 12.

Segmentation and classification steps.


3. Results and Discussion

In this paper, defect detection (DD) software for micro-spray nozzles is developed using image processing techniques and the CI algorithm (written in Visual C++ 6.0 with OpenCV 1.0), as outlined in the first few steps shown in Figure 12. The algorithm is summarized as follows:

- Step 1. Compute two geometric features of the outlet.
- Step 2. Segment the ROIs using Canny edge detection, a randomized algorithm for detecting circles [14], hole-filling and the AND logic operator.
- Step 3. Segment possible defect regions using the CI algorithm.
- Step 4. Estimate 15 features of the segmented regions.
- Step 5. Establish and test the BPNN classifier to classify defects and non-defects.

The functions of DD include file operations (acquiring, loading and saving images) and image analysis operations (binarization, hole-filling, noise removal by closing and opening, and Canny edge detection). The geometric features of the outlet and the texture and color features of the segmented regions are obtained, and defects can be detected by the DD software accurately and rapidly.
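Hole-filling and the AND logic operator, two of the DD image analysis operations, can be illustrated in pure Python. The sketch below fills holes by flood-filling the background from the image border (4-connectivity is assumed) and then masks a gray image with the filled binary mask. The function names are hypothetical, and the DD software itself relied on OpenCV 1.0 routines that are not shown here.

```python
from collections import deque


def fill_holes(mask):
    """Fill background regions of a binary mask that do not touch the border."""
    h, w = len(mask), len(mask[0])
    outside = [[False] * w for _ in range(h)]
    q = deque()
    # Seed the flood fill with every background pixel on the image border.
    for y in range(h):
        for x in range(w):
            if (y in (0, h - 1) or x in (0, w - 1)) and not mask[y][x]:
                outside[y][x] = True
                q.append((y, x))
    while q:
        y, x = q.popleft()
        for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
            if 0 <= ny < h and 0 <= nx < w and not mask[ny][nx] and not outside[ny][nx]:
                outside[ny][nx] = True
                q.append((ny, nx))
    # Pixels unreachable from the border are foreground or enclosed holes.
    return [[0 if outside[y][x] else 1 for x in range(w)] for y in range(h)]


def and_mask(gray, mask):
    """Logical AND: keep gray levels where the mask is set, zero elsewhere."""
    return [[g if m else 0 for g, m in zip(grow, mrow)] for grow, mrow in zip(gray, mask)]
```

Applying `and_mask` to the original image and the filled segmentation mask corresponds to the combination of Figure 13a,b that produces Figure 13c.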

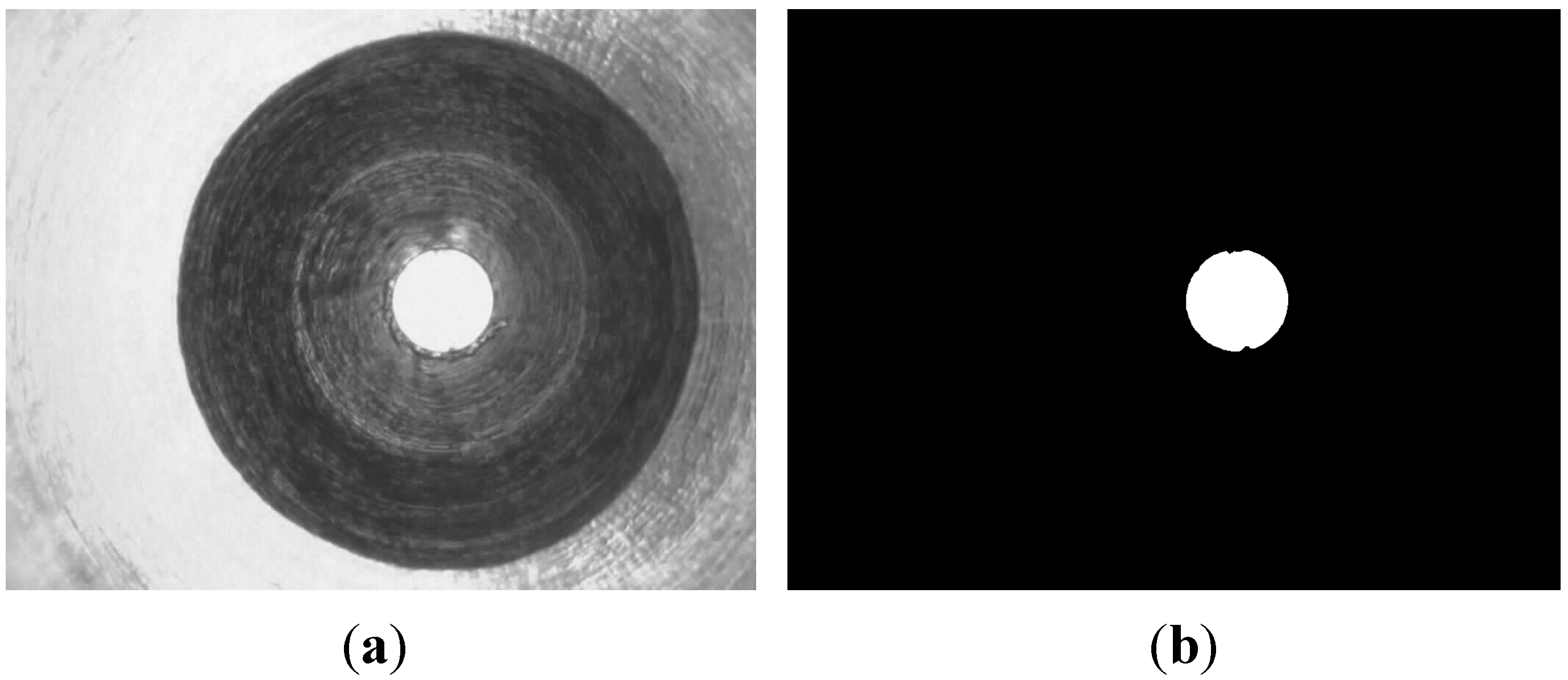

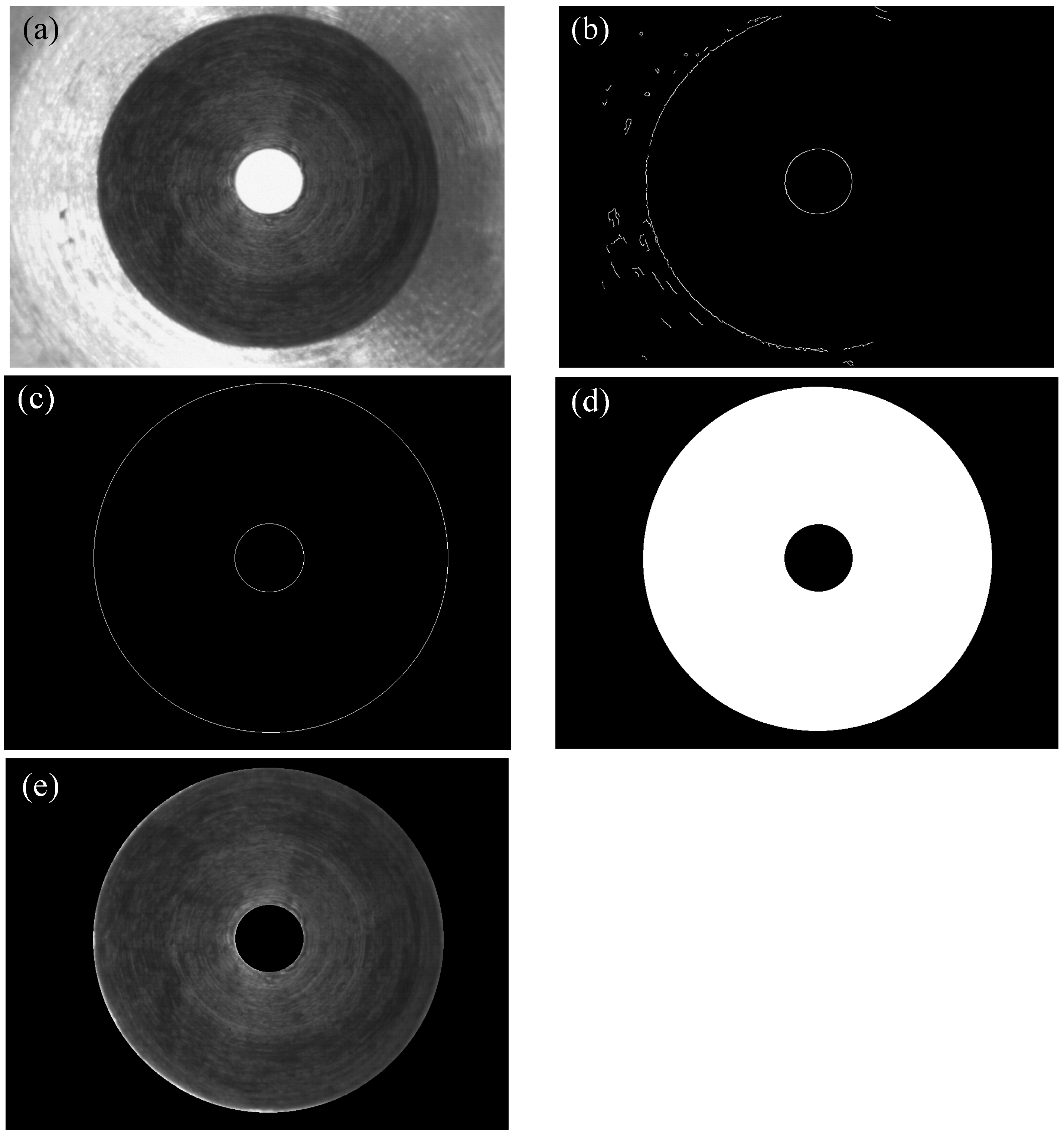

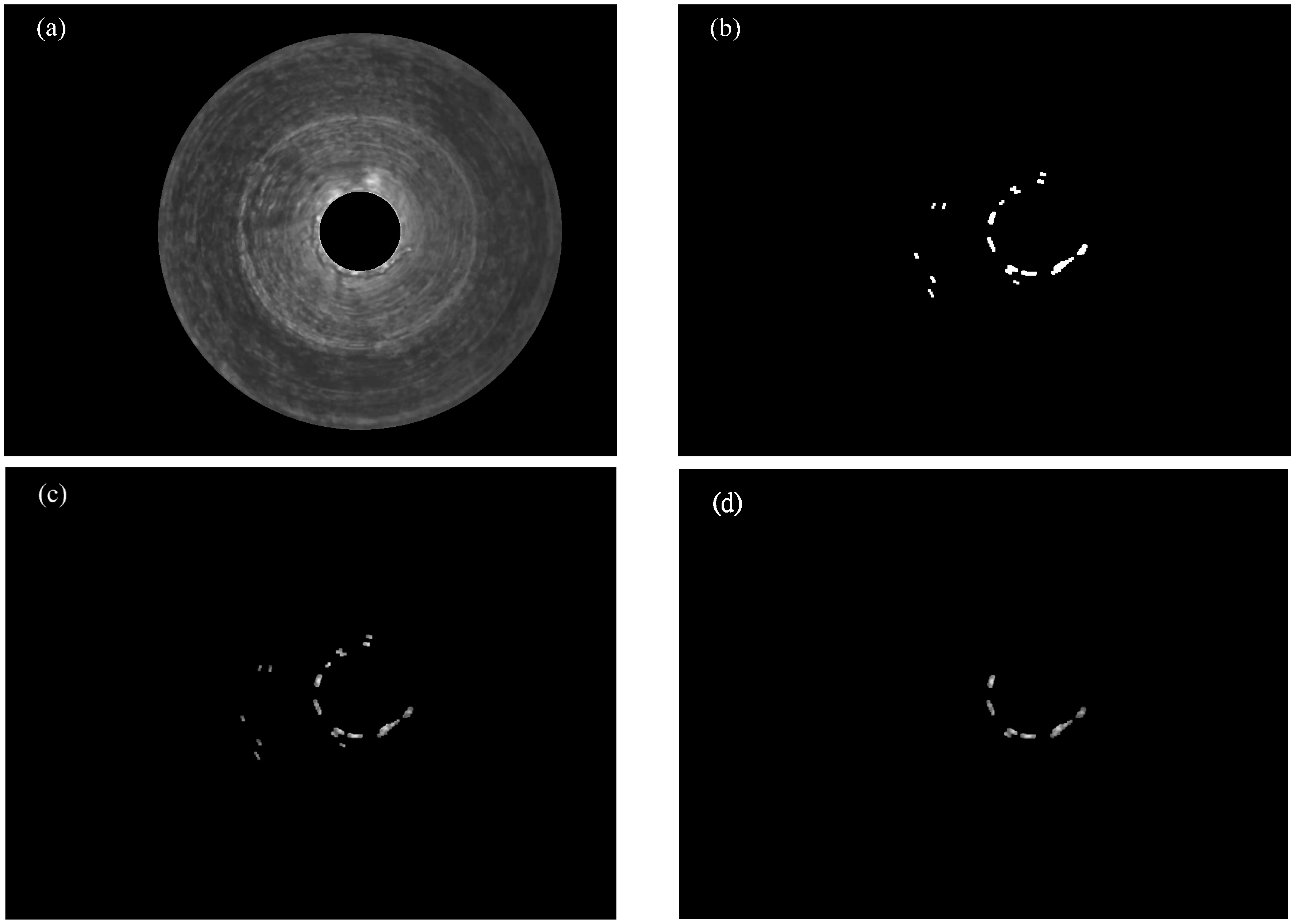

The difference between defect and non-defect regions is not obvious in the original image of a micro-spray nozzle, as shown in Figure 13a. Possible defect regions, including both defects and non-defects, are segmented using the CI algorithm (with parameters $n = 6$ and $T = 11$, selected experimentally) and image processing techniques (such as hole-filling, erosion, dilation, opening, closing and Canny edge operators), as indicated in Figure 13b. Figure 13c is obtained by applying the AND logic operator to Figure 13a,b. Finally, the defects of the micro-spray nozzles are identified by the BPNN classifier (Figure 13d).

To establish the BPNN classifier, 698 samples (565 non-defects and 133 defects) were randomly sampled. Eleven hidden nodes were obtained by Equation (3) from the 17 input features and the two output categories. The BPNN classifier was implemented using MATLAB 8.0 functions. For each configuration, the training set was randomly selected from all samples, and training continued until the BPNN converged. Over-fitting often occurs in a BPNN when the training set contains incorrect samples. Because the grades of the micro-spray nozzles in the training samples are known before training, over-fitting is unlikely to occur. To keep the influence of over-fitting trivial during training, the maximum number of iterations was set to 100,000. Further tests showed that the same inspection performance was obtained with error convergence criteria smaller than 0.1. Randomly sampled images (1486 non-defects and 118 defects) were used to test the system. The classification accuracy was 90.71%, and the accuracy for outlet shape and strip filings was 100%. However, a few deckle edges and pellet filings could not be detected by the proposed algorithms; examples of classification failure and their explanations are shown in Table 2.

Figure 13.

(a) Original image; (b) segmented binary image after the CI algorithm; (c) segmented image after the CI algorithm; (d) classification result.


In this study, the proposed system can detect and classify visible defects with a CCD camera accurately and efficiently. In the future, we hope that the detection and classification system can be applied to an auto-inspection system for micro-spray nozzles.

Table 2.

Examples of classification failure and explanations.

4. Conclusions

Real-time flow uniformity depends on the internal quality of the micro-spray nozzle, and internal defects result in unstable flow. A novel inspection system for internal defects of micro-spray nozzles is developed in this study. Image processing techniques, Canny edge detection, a randomized algorithm for detecting circles, the circle inspection algorithm and the BPNN classifier are used to establish the micro-spray nozzle detection system. Possible defects can be segmented efficiently using the CI algorithm and image processing techniques. Geometric, color and texture features of the defects are obtained to establish a BPNN classifier. Testing results show that the defects of the micro-spray nozzles can be detected efficiently using the proposed system. In a future study, we aim to refine the inspection algorithm to increase the inspection accuracy for the production line of micro-spray nozzles.

Author Contributions

All authors have made significant contributions to the paper. Huang K.Y. initiated the idea of developing the inspection system for micro-spray nozzles. Huang K.Y. and Ye Y.T. developed the inspection algorithms and the classifier together. Ye Y.T. wrote the programs and performed the experiments. Huang K.Y. contributed to the paper organization and the technical writing of the final version.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Chang, T.B.; Yu, L.Y. Optimal nozzle spray cone angle for triangular-pitch shell-and-tube interior spray evaporator. Int. J. Heat Mass Transf. 2015, 85, 463–472.

- Chen, S.; Lin, B.; Han, X.; Liang, X. Automated inspection of engineering ceramic grinding surface damage based on image recognition. Int. J. Adv. Manuf. Technol. 2013, 66, 431–443.

- Paniagua, B.; Vega-Rodríguez, M.A.; Gómez-Pulido, J.A.; Sánchez-Pérez, J.M. Automatic texture characterization using Gabor filters and neurofuzzy computing. Int. J. Adv. Manuf. Technol. 2011, 52, 15–32.

- Liu, W.; Tu, X.; Jia, Z.; Wang, W.; Ma, X.; Bi, X. An improved surface roughness measurement method for micro-heterogeneous texture in deep hole based on gray-level co-occurrence matrix and support vector machine. Int. J. Adv. Manuf. Technol. 2013, 69, 583–593.

- López-García, F.; Andreu-García, G.; Blasco, J.; Aleixos, N.; Valiente, J. Automatic detection of skin defects in citrus fruits using a multivariate image analysis approach. Comput. Electron. Agric. 2010, 71, 189–197.

- Kavdir, I. Discrimination of sunflower, weed and soil by artificial neural networks. Comput. Electron. Agric. 2004, 44, 153–160.

- Camargo, A.; Smith, J.S. An image-processing based algorithm to automatically identify plant disease visual symptoms. Biosyst. Eng. 2009, 102, 9–21.

- Shen, W.; Wu, Y.; Chen, Z.; Wei, H. Grading method of leaf spot disease based on image processing. In Proceedings of the 2008 International Conference on Computer Science and Software Engineering, Wuhan, China, 12–14 December 2008; pp. 491–494.

- Park, J.H.; Lee, G.S.; Park, S.Y. Color image segmentation using adaptive mean shift and statistical model-based methods. Comput. Math. Appl. 2009, 57, 970–980.

- Sudheer, K.P.; Panda, R.K. Digital image processing for determining drop sizes from irrigation spray nozzles. Agric. Water Manag. 2000, 45, 159–167.

- Blaisot, J.B.; Yon, J. Droplet size and morphology characterization for dense sprays by image processing: Application to the diesel spray. Exp. Fluids 2005, 39, 977–994.

- Lee, S.Y.; Kim, Y.D. Sizing of spray particles using image processing technique. KSME Int. J. 2004, 18, 879–894.

- Gonzalez, R.C.; Woods, R.E. Digital Image Processing, 3rd ed.; Prentice Hall: Upper Saddle River, NJ, USA, 2002.

- Chen, T.C.; Chung, K.L. An efficient randomized algorithm for detecting circles. CVGIP Comput. Vis. Image Underst. 2001, 83, 172–191.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, 3, 610–621.

- Fausett, L. Fundamentals of Neural Networks: Architectures, Algorithms, and Applications; Prentice-Hall: Upper Saddle River, NJ, USA, 1994.

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).